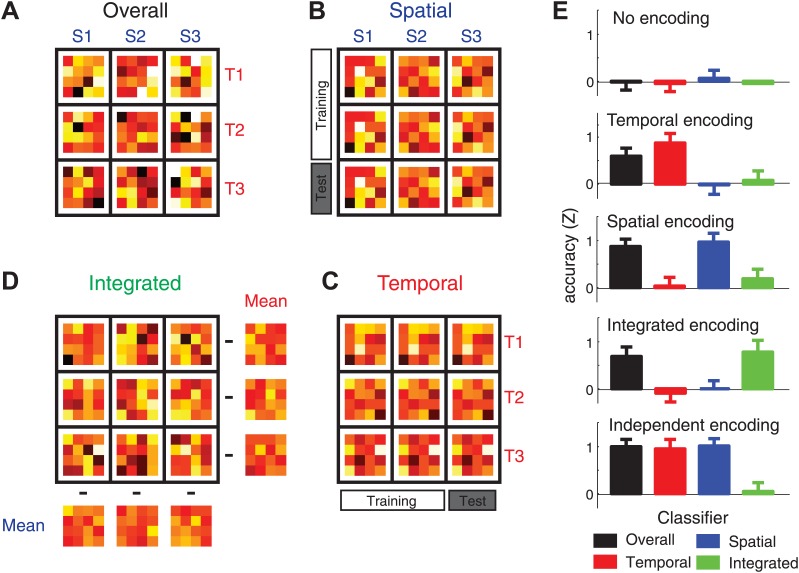

Figure 3. Four classification procedures were used to decode the voxel pattern of each searchlight (160 voxels; reduced to 16 units here for illustration).

(A) To test whether a searchlight contained any sequence information, the overall classifier discriminated among all nine sequences, treating each as a separate class. Classification was always cross-validated across imaging runs (‘Materials and methods’). (B) To test for encoding of the spatial feature, the classifier was trained on data from only two of the three temporal sequences. It was then tested on trials from a left-out imaging run in which the spatial sequences were paired with the remaining temporal sequence. (C) The temporal classifier followed the same training-test principle, but in the orthogonal direction. (D) The integrated classifier detected nonlinear encoding of the unique combinations of temporal and spatial features, that is, encoding that could not be accounted for by a linear superposition of independent spatial and temporal codes. For each run, the spatial and the temporal mean patterns were subtracted from the pattern of each combination to yield a residual pattern, which was then submitted to a nine-class classification. (E) Classification accuracy of the four classifiers on simulated patterns (z-transformed; chance level = 0). The simulations indicate that the underlying representation can be detected sensitively by contrasting the overall, temporal, spatial, and integrated classifiers. Importantly, these classification procedures can distinguish a nonlinear, integrated encoding of the two features from the superposition of independent temporal and spatial encoding.
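The four procedures in panels A to D can be illustrated with a minimal NumPy sketch. It simulates patterns for 3 spatial × 3 temporal sequences across runs as a weighted sum of an independent spatial code, an independent temporal code, and a conjunctive code. Everything here is an assumption for illustration: the function names (`simulate`, `nearest_centroid`, `overall`, `cross_classify`, `integrated`) are hypothetical, and the correlation-based nearest-centroid classifier is a stand-in rather than necessarily the classifier used in the study. Accuracies are reported as raw proportions correct (chance = 1/9 for nine classes, 1/3 for three), not z-transformed as in panel E, and this is not the simulation behind that panel.

```python
import numpy as np

N_S, N_T = 3, 3  # spatial and temporal sequences (9 combinations)


def simulate(n_runs=6, n_vox=160, w_s=1.0, w_t=1.0, w_int=0.0, noise=1.0, seed=0):
    """Hypothetical data[run, s, t, voxel] = w_s*A[s] + w_t*B[t] + w_int*C[s, t] + noise."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((N_S, n_vox))       # independent spatial code
    B = rng.standard_normal((N_T, n_vox))       # independent temporal code
    C = rng.standard_normal((N_S, N_T, n_vox))  # conjunctive (nonlinear) code
    signal = w_s * A[:, None, :] + w_t * B[None, :, :] + w_int * C
    return signal[None] + noise * rng.standard_normal((n_runs, N_S, N_T, n_vox))


def nearest_centroid(train_X, train_y, test_X):
    """Stand-in classifier: pick the class whose mean training pattern
    correlates best (Pearson) with each test pattern."""
    classes = np.unique(train_y)
    cents = np.stack([train_X[train_y == c].mean(axis=0) for c in classes])

    def z(M):
        M = M - M.mean(axis=1, keepdims=True)
        return M / np.linalg.norm(M, axis=1, keepdims=True)

    return classes[np.argmax(z(test_X) @ z(cents).T, axis=1)]


def overall(data):
    """(A) Nine-class classification, leave-one-run-out cross-validation."""
    n_runs, n_vox = data.shape[0], data.shape[-1]
    labels = np.arange(N_S * N_T)  # label = s * N_T + t
    accs = []
    for r in range(n_runs):
        train = np.delete(data, r, axis=0).reshape(-1, n_vox)
        y = np.tile(labels, n_runs - 1)
        pred = nearest_centroid(train, y, data[r].reshape(-1, n_vox))
        accs.append(np.mean(pred == labels))
    return float(np.mean(accs))


def cross_classify(data, feature):
    """(B)/(C) Train on two of the three levels of the other feature in the
    training runs; test on the left-out run paired with the remaining level."""
    X = data if feature == "spatial" else data.transpose(0, 2, 1, 3)
    n_runs, n_lab, n_gen, n_vox = X.shape
    accs = []
    for r in range(n_runs):
        rest = np.delete(X, r, axis=0)
        for g in range(n_gen):
            tr = np.delete(rest, g, axis=2)  # drop held-out level g
            y = np.broadcast_to(np.arange(n_lab)[None, :, None], tr.shape[:3]).ravel()
            pred = nearest_centroid(tr.reshape(-1, n_vox), y, X[r, :, g])
            accs.append(np.mean(pred == np.arange(n_lab)))
    return float(np.mean(accs))


def integrated(data):
    """(D) Per run, subtract the spatial mean (over temporal sequences) and the
    temporal mean (over spatial sequences), then classify residuals 9-way.
    A purely additive code leaves the same residual pattern in every cell."""
    spatial_mean = data.mean(axis=2, keepdims=True)   # (runs, S, 1, V)
    temporal_mean = data.mean(axis=1, keepdims=True)  # (runs, 1, T, V)
    return overall(data - spatial_mean - temporal_mean)


if __name__ == "__main__":
    for name, kw in [("additive", dict(w_int=0.0)),
                     ("conjunctive", dict(w_s=0.0, w_t=0.0, w_int=1.0))]:
        d = simulate(noise=0.5, **kw)
        print(name, overall(d), cross_classify(d, "spatial"),
              cross_classify(d, "temporal"), integrated(d))
```

Subtracting both marginal means without adding back the grand mean differs from a standard two-way interaction residual only by a pattern shared by all nine combinations, so it does not change which combinations can be told apart.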