Abstract

Objective

Models of healthcare organizations (HCOs) are often defined up front by a select few administrative officials and managers. However, given the size and complexity of modern healthcare systems, this practice does not scale easily. The goal of this work is to investigate the extent to which organizational relationships can be automatically learned from utilization patterns of electronic health record (EHR) systems.

Method

We designed an online survey to solicit the perspectives of employees of a large academic medical center. We surveyed employees from two administrative areas, 1) Coding & Charge Entry and 2) Medical Information Services, and two clinical areas, 3) Anesthesiology and 4) Psychiatry. To test our hypotheses, we selected two administrative units whose work-related responsibilities involve electronic records and two clinical disciplines with very different patient responsibilities but similar numbers of accessing users and accessed records. We provided each group of employees with questions regarding the chance of interaction between areas in the medical center in the form of association rules (e.g., Given that someone from Coding & Charge Entry accessed a patient’s record, what is the chance that someone from Medical Information Services accessed the same record?). We compared the respondents’ predictions with the rules learned from actual EHR utilization using linear mixed-effects regression models.

Results

The findings from our survey confirm that medical center employees can distinguish between association rules of high and non-high likelihood when their own area is involved. Moreover, they can make such distinctions for any HCO area in this survey. It was further observed that, with respect to highly likely interactions, respondents from certain areas were significantly better than other respondents at making such distinctions, and that certain areas’ associations were more distinguishable than others.

Conclusions

These results illustrate that EHR utilization patterns may be consistent with the expectations of HCO employees. Our findings show that certain areas in the HCO are easier than others for employees to assess, which suggests that automated learning strategies may yield more accurate models of healthcare organizations than those based on the perspectives of a select few individuals.

Keywords: Electronic health records, organizational modeling, data mining, survey

1. Introduction

The healthcare community has made considerable strides in the development of information technology to support clinical operations in healthcare organizations (HCOs). These advances stem from a variety of factors, including commercialization of health information technology (HIT) and policy making that promotes the uptake of such technologies (e.g., the “meaningful use” incentives offered in the United States [1–3]). While there is evidence that HIT can improve the safety [4–6] and the efficiency [7,8] of healthcare delivery, considerable obstacles remain to the adoption and realization of these benefits on a massive scale. [9,10]

In particular, as HIT, and the healthcare workforce more generally, grows in diversity, so too does its complexity. [11–13] This is a concern because, despite the aforementioned benefits, there is also evidence to suggest that HIT can contribute to (though is not necessarily the cause of) the interruption of care services [14,15], induce medical errors [16,17], and expose patient data to privacy breaches [18,19]. Moreover, such events tend to be discovered only after they have transpired en masse, leading to undesirable popular media coverage [20,21], loss of patients’ trust [22–24], and sanctions imposed by state and federal agencies [25,26].

It has been suggested that such problems can be mitigated through the integration of rules that recommend against or even prohibit certain actions (e.g., the prescription of two drugs in combination that are known to cause an adverse reaction [27,28]). At the same time, it is recognized that no rules-based system is perfect and that exceptions need to be granted. These exceptions can, in turn, be audited to determine whether the existing set of rules is in alignment with the expectations of the HCO or needs to be revised to more accurately represent healthcare operations. [29,30] For instance, it has been shown that the exposure of patient records (and thus the violation of patients’ privacy) can be lessened through access control [31–36], which allocates permission to patient information on a need-to-know basis. In this setting, exceptions are granted through a “break the glass” failsafe that allows HCO employees to escalate privileges if necessary. [37] In a study in the Central Norway Health Region, for example, it was observed over a one-month period that 54% of 99,000 patients’ records had their glass broken by 43% of 12,000 healthcare employees. [38] Patterns of escalation can subsequently be applied by HIT system administrators to evolve access control configurations. [39–42]

Data-driven approaches to HIT improvement will only be acceptable to HCO administrators if the patterns of HIT utilization reflect the expected operations of healthcare environments. This paper begins to address this issue by investigating how a specific type of HIT utilization pattern, which has been suggested for use in audit and refinement of access control models [43–46], aligns with the expectations of employees in a large academic medical center. To do so, we designed a survey to capture the degree to which employees agree with relational patterns (i.e., the likelihood that certain HCO areas in a medical center coordinate to support a patient) as inferred by actual utilization of an electronic health record (EHR) system. [47] This survey was conducted with employees from four areas in the Vanderbilt University Medical Center (VUMC). It was designed to determine if employees 1) agreed with the distinction between relationships of high and non-high likelihood and 2) were better at assessing relationships regarding their own area as opposed to others in the institution.

Our findings illustrate that employees can distinguish, with statistical significance, between relationships of high and non-high likelihood. Moreover, we find that employees were capable of performing such assessments for all of the HCO areas in the study, which implies that our results are robust against bias induced by self-perception. To the best of our knowledge, this is the first study to illustrate that humans agree with the organizational models that can be mined from EHR access logs.

2. Background

2.1. Learning Organizational Models

Organizations are often structured to support the completion of certain tasks. As a result, when a task is complex (e.g., a patient who is associated with multiple ailments, which need to be treated by different sections of a hospital), traditional organizational management strategies are often inadequate. [48–50, 76] Managers in such environments tend to exhibit low productivity as a consequence of attempting to coordinate complicated relations. [51] It has been shown that the integration of information technology into an organization’s business practices can facilitate the flattening of rigid hierarchical organizations and, thus, enable greater agility. [52,53,77,78] Thus, over the past several decades, a significant amount of research has been dedicated to the inference and modeling of organizational structures, particularly with respect to information technology. [54–56]

At the same time, it has been recognized that the relationships within a collaborative environment are often dynamic and context-dependent. Based on this observation, data-driven learning models have been proposed to represent dynamic relations and uncertain context in organizations. [57,58] In particular, much of the research to date has focused on task-based organizational modeling. [57,59] These strategies aim to infer the network of relations between people, resources, and the tasks that constrain and enable organizational behavior. However, there are concerns that the organizational relationships learned by way of inferential strategies may lack precision [60] and fail to capture the actual organizational model [61]. In the context of health, such problems could manifest if workarounds convert electronic processes to paper-based ones. [62,63] As such, it is important that the relational behaviors learned be sufficiently stable to reflect the expectations of the organizations. [57] To this effect, various data-driven approaches [58,65–67] have been proposed to mine organizational behaviors that are relatively consistent over time. These approaches capture and analyze complex relationships in data from the collaborative environment and evaluate the consistency of these relations over time.

2.2. Models of Interaction in Healthcare

More recently, with respect to the health and medical domain, studies have shown that organizational learning techniques can be applied to model the relationships between entities within public health environments [64] and large healthcare systems [47]. In turn, it has been suggested that communities of healthcare providers, based on their co-access of patients’ medical records, can be leveraged to assess whether a certain user’s behavior deviates from a statistical norm. [43–45] These strategies have further been adapted to assess how the evolution of organizational relationships over time can determine whether certain accesses to patients’ records are suspicious. [46] Nonetheless, these investigations did not consider the extent to which the organizational models and patterns extracted from these methodologies align with the expectations of the healthcare community.

2.3. Validation of Learned Models with Experts

Concerns over the trustworthiness of the results of automated learning methods are not limited to organizational modeling. Rather, this is a problem that manifests whenever knowledge is learned from data collected in the health domain. To assess whether learned information is useful, it is often reviewed by knowledgeable experts for consistency. While evaluations along these lines have yet to be performed in the arena of healthcare organizations, there is evidence to suggest that health-related knowledge can be modeled and evaluated.

Several notable studies in medical practice have evaluated the consistency between expert belief and data mining. [71–73] In these studies, diagnostic rules mined from breast cancer data were compared with expert rules. Rules that were not in an expert knowledgebase were sent to radiologists for further evaluation. If a mined rule was confirmed by at least one radiologist, then the rule was added to the knowledgebase to enhance decision making. While these studies indicated that automatically learned rules could inform clinical decisions, they did not investigate the extent to which the mined rules and the expert-defined rules related to one another.

3. METHODS

Our research is motivated by several general hypotheses, which guided the selection of the survey population, the survey design, and the survey implementation process.

3.1. Selection of the Organizational Areas for this Study

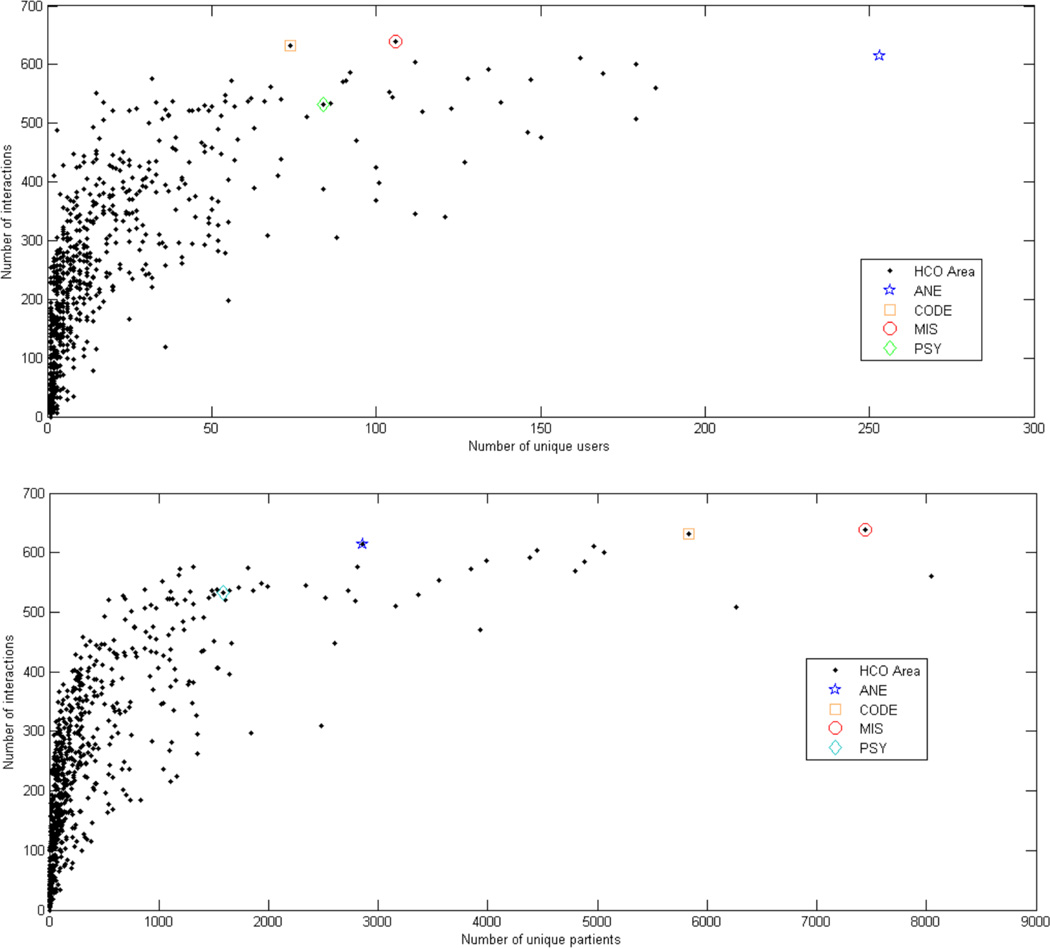

In preparation for this study, we extracted the access logs from the VUMC EHR system for several months in 2010. Accesses were made by users from 670 HCO areas. Figure 1 illustrates, for an arbitrary week, the number of unique users who accessed the system, the number of patients’ records that were viewed per HCO area, and the number of interactions between each HCO area and all of the other HCO areas. Based on this analysis, we selected two clinical areas (with substantially different disciplines) and two operational areas with direct responsibilities that involve the electronic record, which were relatively evenly matched on the number of users and patient records they accessed. We acknowledge that four selected organizational areas cannot represent all organizational areas. This is an initial study that aims to verify whether the interaction relations retrieved from the utilization of HIT are consistent with employees’ expectations for the selected organizational areas. If this is verified in this limited setting, these investigations could be extended to a broader range of HCO areas.

Figure 1.

Distribution of VUMC areas by their number of interactions with other VUMC areas, as a function of (top) the number of users and (bottom) the number of patients.

To test our hypotheses, we selected two administrative units that have work-related responsibilities with electronic records and, for the clinical areas, two disciplines with very different patient responsibilities. Specifically, for the clinical areas, we selected Anesthesiology (~250 users accessed ~2900 patient records) and Psychiatry (~160 users accessed ~1900 patient records). For the operational areas, we selected Medical Information Services (~100 users accessed ~7500 patient records) and Coding & Charge Entry (~75 users accessed ~6000 patient records). We were further motivated to select these areas because they have a large number of interactions with other areas in the HCO (as shown in Figure 1), allowing for the selection and assessment of a large number of associations. A leader from each HCO area was contacted; all agreed to participate and to identify 10 users as potential participants in the study. The leaders were asked to nominate people who represented a cross-section of organizational positions and who would be sufficiently knowledgeable to respond to the items in the survey. For simplicity, we refer to the four selected areas as ANE, PSY, MIS, and CODE.

3.2. Creation of the Survey Instrument

Our first hypothesis is that employees in a certain HCO area are capable of distinguishing between HCO interactions of high and non-high likelihood when their own HCO area is involved in the interaction. To assess this hypothesis, we model each HCO area X as a set of association rules of the form Area X ⇒ Area Yi, where Yi corresponds to any area in the HCO. This area may be the same, such that X = Yi (i.e., collaboration of users within an HCO area), or different, such that X ≠ Yi (i.e., collaboration of users across HCO areas). Each rule corresponds to the conditional probability that a user from area Yi accessed a patient’s record given that a user from area X accessed the patient’s record, as defined in an earlier study. [47]

Following the modeling of [43, 69, 70], an access to a patient’s record is defined as a binary occurrence: regardless of the number of times the user looked at the record or which section of the record was accessed, it counts no more than once. In other words, if a user accessed a patient’s record multiple times within the time period during which the conditional probabilities are computed (e.g., one week), all of these accesses are treated as one access. This is because, as was observed in prior work, the number of accesses to a particular patient’s record can be artificially inflated by system design. For instance, a user may access different components of a patient’s medical record, such as a laboratory report, then a progress note, and then the laboratory report again, and such a process may repeat depending on the specific workflow of the user.
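The binary-occurrence rule can be sketched as a set over (user, patient, window) keys. This is a minimal illustration, assuming a hypothetical log of (user, patient, timestamp) tuples and an ISO calendar week as the computation window; neither the field layout nor the window choice is taken from the VUMC logs.

```python
from datetime import datetime

# Hypothetical raw log rows: (user, patient, timestamp). The layout and the
# weekly window are illustrative assumptions, not the VUMC log schema.
raw_log = [
    ("u1", "p1", datetime(2010, 3, 1, 9, 0)),   # laboratory report
    ("u1", "p1", datetime(2010, 3, 1, 9, 5)),   # progress note
    ("u1", "p1", datetime(2010, 3, 1, 9, 7)),   # laboratory report again
    ("u2", "p1", datetime(2010, 3, 2, 14, 0)),
    ("u1", "p2", datetime(2010, 3, 3, 8, 30)),
]

def binary_accesses(log):
    """Collapse repeated views into a single (user, patient, week) occurrence.

    Any number of views of the same record by the same user within one
    ISO calendar week counts as exactly one access.
    """
    occurrences = set()
    for user, patient, ts in log:
        year, week, _ = ts.isocalendar()
        occurrences.add((user, patient, (year, week)))
    return occurrences

accesses = binary_accesses(raw_log)
print(len(raw_log), "->", len(accesses))
```

Using a set makes the deduplication implicit: the three views of p1 by u1 in the same week map to the same key, so only three accesses survive from the five log rows.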

The conditional probability we calculate is atemporal: it does not matter whether the access from area Yi transpired before or after the access from area X. We refer to this probability as the EHR-learned likelihood. As a simple example, consider a scenario with three EHR users. The first two users are affiliated with “Anesthesiology” and access patients p1 and p2, whereas the third is affiliated with “Emergency Medicine” and accesses patient p1. The conditional probability from Anesthesiology to Emergency Medicine is 0.5 (only one of the two patients accessed by Anesthesiology was also accessed by Emergency Medicine), while the conditional probability from Emergency Medicine to Anesthesiology is 1.
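The three-user example can be reproduced with a short sketch that computes each rule X ⇒ Y as the fraction of patients accessed by area X that were also accessed by area Y. The function name and data layout are hypothetical. The sketch only covers cross-area rules (X ≠ Y): a within-area rule would need a definition that requires a second, distinct user from the same area, which is not implemented here.

```python
from collections import defaultdict
from itertools import permutations

# Binary (user, patient) accesses for one time window and a user -> area map.
# Whether each Anesthesiology user saw one or both patients, the per-area
# patient sets in the text's example are the same.
accesses = {("u1", "p1"), ("u1", "p2"), ("u2", "p1"), ("u2", "p2"), ("u3", "p1")}
area_of = {"u1": "Anesthesiology", "u2": "Anesthesiology", "u3": "Emergency Medicine"}

def learn_rules(accesses, area_of):
    """Return {(X, Y): P(a user from Y accessed the record | a user from X did)}
    for every ordered pair of distinct areas X != Y."""
    patients_by_area = defaultdict(set)
    for user, patient in accesses:
        patients_by_area[area_of[user]].add(patient)
    rules = {}
    for x, y in permutations(patients_by_area, 2):
        px, py = patients_by_area[x], patients_by_area[y]
        # Atemporal: only co-access of the same record counts, not ordering.
        rules[(x, y)] = len(px & py) / len(px)
    return rules

rules = learn_rules(accesses, area_of)
print(rules[("Anesthesiology", "Emergency Medicine")])  # 0.5
print(rules[("Emergency Medicine", "Anesthesiology")])  # 1.0
```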

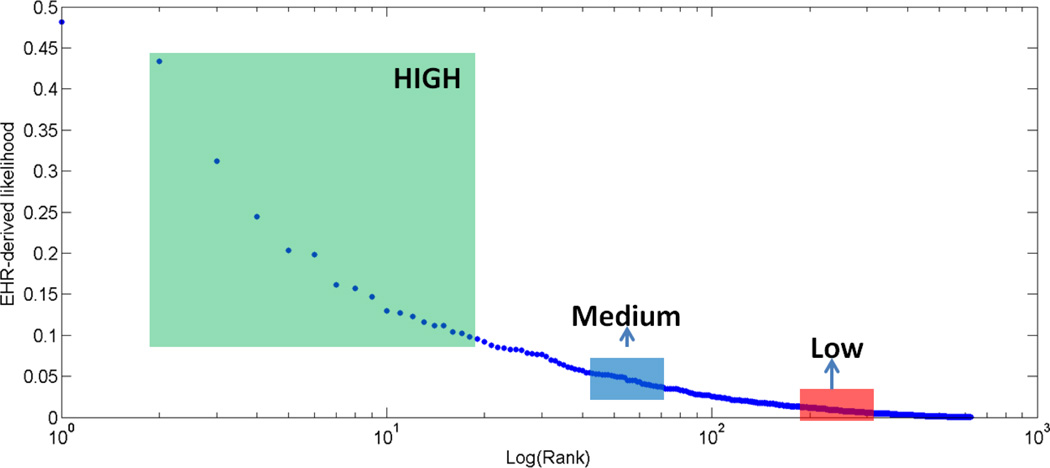

Turning to the conditional probabilities in this specific study, Table A1 in the online appendix shows that when a user from CODE accessed a patient’s record, the probability that a user from General Surgery accessed the same record was 0.1984. The corresponding rule is written as (CODE ⇒ General Surgery, 0.1984). Figure 2 depicts all 626 CODE rules with nonzero probability, ranked by their EHR-learned likelihoods on a log scale. This is far more rules than a human can evaluate without fatigue, so we sampled 30 rules: 10 of high, 10 of medium, and 10 of low likelihood. When a subset contained more than 10 items, we used a systematic sampling technique: we selected a random number (in this case, four) and eliminated every fourth item in the subset until ten rules were selected. This process was performed to select 30 rules for each of the four HCO areas.
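The elimination step can be read in more than one way. The sketch below implements one hypothetical reading: walk the ranked stratum repeatedly, dropping every k-th remaining rule, until ten rules remain. It assumes that the high, medium, and low strata have already been cut from the ranked list. How those cut points were chosen is not specified here, and `eliminate_every_kth` is an illustrative name, not the authors' code.

```python
def eliminate_every_kth(ranked, k, n_keep=10):
    """Hypothetical reading of the procedure: repeatedly walk the ranked
    stratum and drop every k-th remaining rule until n_keep rules remain.
    Survivors keep their original ranked order."""
    if not 1 <= k <= n_keep + 1:
        # Guarantees each pass over more than n_keep rules removes at least one.
        raise ValueError("k must be between 1 and n_keep + 1")
    remaining = list(ranked)
    while len(remaining) > n_keep:
        to_drop = len(remaining) - n_keep  # never drop below n_keep
        survivors = []
        for position, rule in enumerate(remaining, start=1):
            if position % k == 0 and to_drop > 0:
                to_drop -= 1  # eliminate this rule
            else:
                survivors.append(rule)
        remaining = survivors
    return remaining

# Hypothetical stratum of 25 ranked rule IDs; k = 4 as in the text.
stratum = [f"rule_{j}" for j in range(1, 26)]
sample = eliminate_every_kth(stratum, k=4)
print(sample)
```

Because elimination is deterministic once k is drawn, the randomness lies entirely in the choice of k.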

Figure 2.

Rules associated with the CODE area, ranked by their EHR-learned likelihood. Ten rules were sampled from each shaded region.

The employees who responded to the survey were presented with questions of the form: “Someone from Coding & Charge Entry accessed the record of patient John Doe. How likely is it that someone from the following HCO area accessed the same patient’s record?” They were not shown the EHR-learned likelihood. For each HCO area presented, respondents were asked to choose one of five candidate answers: “Not at all likely”, “Slightly likely”, “Moderately likely”, “Very likely” and “Completely likely”. To analyze the responses with statistical models, we converted these answers to integer values from 1 to 5 (e.g., “Not at all likely” is mapped to 1).

Our additional hypotheses concerned users’ abilities to assess HCO interactions outside of their own HCO area. Specifically, our next hypothesis was that users can distinguish between high and non-high likelihood rules regardless of the rule type. Our remaining hypotheses were based on the expectation that users would have better predictive ability on their own area’s rules than on other areas’ rules. To test these hypotheses, we also provided all users who responded to the survey with the rule sets of the other areas. In doing so, all respondents were presented with 120 rules (30 from each area’s rule set). These rules were presented in four sets of 30 rules, which were randomly ordered.

3.3. Implementation of the Survey

We executed two implementation tests of the survey before inviting potential respondents. In the first test, a member of the research team pretested the survey, which revealed several data consistency issues that were then corrected. For example, different headings were used for the same HCO area (e.g., Psychiatric Hospital and Vanderbilt Psychiatric Hospital).

For the second implementation test, we placed the survey into the REDCap management system [74] and asked members of the MIS area, who were not affiliated with the research team or the investigated HCO areas, to test the survey. There were two outcomes from this pilot. First, we observed that participants needed clearer communication regarding the rules and goals of the survey. Second, we realized that, instead of using a 30-item questionnaire (10 high, 10 medium, 10 low) associated only with the CODE organizational unit, we needed to provide all participants with the questions from all organizational units (i.e., 120 items). Once this was completed, we invited all 10 participants from each HCO area, as identified by their area’s leaders, to respond to the survey. Each potential survey respondent was emailed an introduction to the goals of the survey and a link to the online REDCap survey.

In total, 34 users (out of 40) accepted the invitation and participated in the survey: 10 from MIS, 7 from CODE, 9 from PSY, and 8 from ANE. There were 31 respondents who were administered the survey; however, of this group, 3 respondents failed to complete the survey and 5 respondents marked every question with the same answer. All 8 of these respondents were removed from further consideration. Thus, there were 26 effective respondents: 9 from MIS, 7 from CODE, 6 from PSY, and 4 from ANE. The survey contained 40 high, 40 medium, and 40 low likelihood observations, which are further subdivided into groups of 10 for a specific rule type (e.g., the conditional probabilities associated with CODE). However, upon completion of the survey, it was found that five of the questions (1 high, 3 medium, and 1 low) associated with PSY were corrupt in that they were ill-posed, so they were dropped from the final study. To assess the impact of removing these questions, we simulated the removal of the same distribution of questions from the ANE, CODE, and MIS groups and observed that the statistical significance of the findings reported below was unchanged.

3.4. Hypotheses and Statistical Models

Our research was guided by the following three hypotheses:

- Hypothesis H1 (Locally Knowledgeable of Class): Absolute and relative knowledge of rules from one’s own area:
  - H1a) (Absolute) HCO employees can distinguish between high and non-high likelihood rules in their own HCO area.
  - H1b) (Relative) HCO employees can distinguish between high and non-high likelihood rules in their own HCO area better than they can in other HCO areas.
- Hypothesis H2 (Globally Knowledgeable of Class): Absolute and relative knowledge of rules across all areas:
  - H2a) (Absolute) HCO employees can correctly distinguish between rules of high and non-high likelihood.
  - H2b) (Relative) HCO employees’ ability to distinguish between rules of high and non-high likelihood varies by HCO area.
- Hypothesis H3 (Locally Knowledgeable by Order): Members of an HCO area are better at predicting the EHR-learned likelihoods of their own high rules than those of the high rules of other organizational areas.

To more formally characterize the problem, we introduce the following notation. Let p ∈ {MIS, CODE, PSY, ANE} be the respondent type, such that each value represents the set of respondents from their respective HCO area. Let r ∈ {MIS, CODE, PSY, ANE} be the rule type, such that each value represents its respective HCO area rule set. Let c ∈ {high, med, low} represent the high, medium, and low likelihood rule classes, respectively. Additionally, let non be a rule class that combines the a priori defined med and low likelihood classes. This class is introduced because it was clear from the data that participants could not discriminate between these classes. Online Appendix B provides further intuition into this issue, where Figure A1 displays the scores for each likelihood class.

The key outcome variable $S_{i:c,p,r}$ corresponds to the average score across all class $c$ rules regarding HCO area $r$ for participant $i$ from HCO area $p$. For example, $S_{i:\mathrm{high},\mathrm{MIS},\mathrm{PSY}}$ is MIS respondent $i$’s average score among all high likelihood rules associated with PSY. Each of the 26 respondents contributes 8 observations (4 rule types × 2 rule classes), so the total number of observations is 208. To illustrate, Table 1 depicts the 8 observations of one MIS respondent. Further, $\mu_{i:c,p,r} = E(S_{i:c,p,r} \mid c, p, r)$ is the mean (i.e., expected) score for respondent $i$, and $\delta_{p,r} = \mu_{i:\mathrm{high},p,r} - \mu_{i:\mathrm{non},p,r}$ is the difference in the mean score for high versus non-high likelihood rules regarding HCO area $r$ for a respondent who is a member of HCO area $p$. Finally, let $\delta_{p,\cdot} = \mu_{i:\mathrm{high},p} - \mu_{i:\mathrm{non},p}$ be the difference in the mean (expected) score for high versus non-high likelihood rules for a respondent who is a member of HCO area $p$, across the rules from all HCO areas.
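The construction of the outcome variable can be sketched as follows: map each answer to its integer score, merge the a priori medium and low classes into “non”, and average within each (respondent, class, respondent area, rule area) cell. The raw-response tuple layout and the answers are hypothetical. The printed gap is the observed difference for one respondent, whereas $\delta_{p,r}$ above is a difference in expected scores that is estimated by the statistical model.

```python
from collections import defaultdict
from statistics import mean

# Answer-to-integer mapping described in Section 3.2.
LIKERT = {
    "Not at all likely": 1,
    "Slightly likely": 2,
    "Moderately likely": 3,
    "Very likely": 4,
    "Completely likely": 5,
}

# Hypothetical raw answers: (respondent i, respondent area p, rule area r,
# a priori rule class, answer). These are not actual survey data.
responses = [
    (1, "MIS", "PSY", "high", "Very likely"),
    (1, "MIS", "PSY", "high", "Moderately likely"),
    (1, "MIS", "PSY", "med", "Slightly likely"),
    (1, "MIS", "PSY", "low", "Not at all likely"),
    (1, "MIS", "PSY", "low", "Slightly likely"),
]

def outcome_scores(responses):
    """Return {(i, c, p, r): S_{i:c,p,r}}, merging 'med' and 'low' into 'non'."""
    buckets = defaultdict(list)
    for i, p, r, rule_class, answer in responses:
        c = "high" if rule_class == "high" else "non"
        buckets[(i, c, p, r)].append(LIKERT[answer])
    return {key: mean(scores) for key, scores in buckets.items()}

S = outcome_scores(responses)
# Observed high-minus-non gap for this respondent on PSY rules.
gap = S[(1, "high", "MIS", "PSY")] - S[(1, "non", "MIS", "PSY")]
print(S, round(gap, 4))
```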

Table 1.

An example of 8 observations for a respondent who is a member of the Medical Information Services (MIS) area.

| Respondent (ID) | Respondent Type (P) | Rule Type (R) | Rule Class (C) | Average Score of Responses |
|---|---|---|---|---|
| 1 | MIS | ANE | High | 3 |
| 1 | MIS | ANE | Non | 2 |
| 1 | MIS | CODE | High | 3.3 |
| 1 | MIS | CODE | Non | 2.1 |
| 1 | MIS | MIS | High | 3.1111 |
| 1 | MIS | MIS | Non | 2.125 |
| 1 | MIS | PSY | High | 2.9 |
| 1 | MIS | PSY | Non | 2.05 |

The null hypotheses for the first two hypotheses were thus specified as:

- H1a) $\delta_{p,r=p} = 0$, for each $p$;
- H1b) $\delta_{p,r=p} - \delta_{p,r \neq p} = 0$, for each $p$ and each $r \neq p$;
- H2a) $\delta_{p,\cdot} = 0$, for each $p$; and
- H2b) $\delta_{p_i,\cdot} - \delta_{p_j,\cdot} = 0$, for each $p_i \neq p_j$.

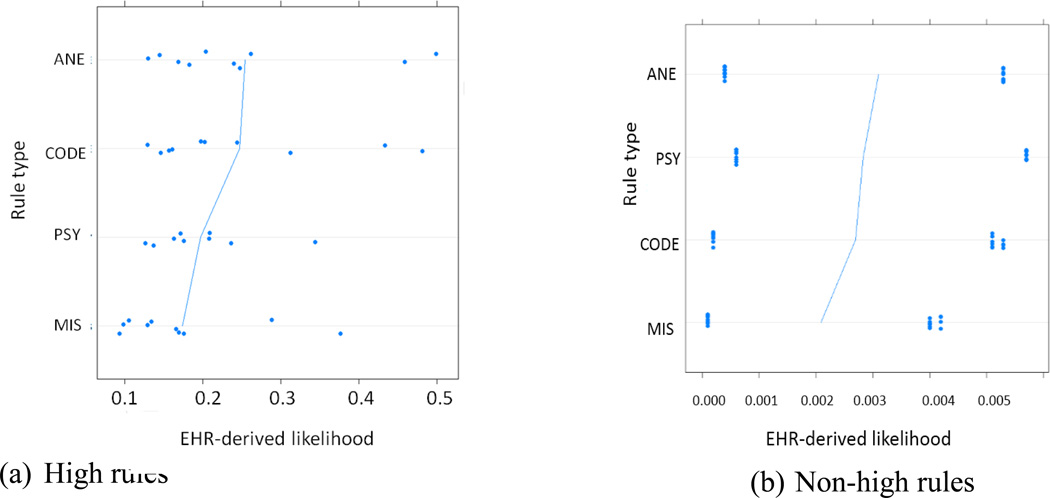

These hypotheses focus on the extent to which respondents can distinguish between high and non-high classes of rules. By contrast, the final hypothesis is narrower and focuses on high rules only. We focus on this class of rules because its EHR-learned likelihoods vary considerably, as depicted in Figure 3a, whereas, as shown in Figure 3b, there is almost no variability in the EHR-learned likelihoods of non-high rules for any rule type. Thus, to investigate this hypothesis, we calculate the distance between the respondent-derived likelihoods and the EHR-learned likelihoods for the high class rules.

Figure 3.

Distribution of EHR-learned likelihoods by rule type for the a) high and b) non-high rule classes.

To test hypothesis H3, let $T_{i:p,r}$ be the average score across all respondents of type $p$ for high likelihood rule $i$, whose HCO area is $r$. For example, $T_{i:\mathrm{MIS},\mathrm{PSY}}$ corresponds to PSY rule $i$’s average score among all MIS respondents. The observations used to assess this hypothesis are exemplified in Table 6. The first 39 observations correspond to the average scores of all MIS respondents on all 39 high rules, which are partitioned into the four HCO groups (i.e., MIS, CODE, PSY, and ANE). There are 39 high rules (as opposed to 40) because, after the completion of the survey, one question associated with a high PSY rule was found to be corrupt. Further, $\mu'_{i:p,r} = E(T_{i:p,r} \mid p, r)$ is the mean (expected) score for rule $i$, and $\delta'_{p,r} = |\mu'_{i:p,r} - e_{i:r}|$ is the absolute difference between the respondent-predicted likelihood $\mu'_{i:p,r}$ and the EHR-learned likelihood $e_{i:r}$ when the rule type is $r$. To ensure that the respondent-predicted likelihoods and the EHR-learned likelihoods are in the same range, we use the following method to normalize the respondent-predicted likelihood:

where $T = \{T_{i:p,r}\}$ and $e = \{e_{i:r}\}$. The null hypothesis for the third hypothesis was thus: H3) $\delta'_{p,r=p} - \delta'_{p,r \neq p} = 0$, for each $p$.
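The distance used for H3 can be sketched as follows. The normalization formula itself is not reproduced in this section, so the sketch assumes a min-max rescaling of all respondent-derived scores $T$ onto the range of the EHR-learned likelihoods $e$. This is a stand-in chosen for illustration, not necessarily the paper’s method. The sketch then averages $|\text{normalized } T - e|$ within each (respondent type, rule type) group, which is the kind of summary plotted in Figure 5. All data below are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def rescale(values, lo, hi):
    """ASSUMED min-max rescaling of values onto [lo, hi]; a stand-in for the
    paper's normalization, whose exact formula is not shown here."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        return [(lo + hi) / 2 for _ in values]
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]

def mean_distance_by_group(rows):
    """rows: (p, r, T_score, e_likelihood), one per high rule.
    Returns {(p, r): mean |normalized T - e|}."""
    T = [row[2] for row in rows]
    e = [row[3] for row in rows]
    T_norm = rescale(T, min(e), max(e))  # normalize jointly over all rules
    distances = defaultdict(list)
    for (p, r, _, e_i), t_i in zip(rows, T_norm):
        distances[(p, r)].append(abs(t_i - e_i))
    return {key: mean(vals) for key, vals in distances.items()}

# Hypothetical observations shaped like Table 6 (not the survey data).
rows = [
    ("MIS", "ANE", 2.0, 0.10),
    ("MIS", "ANE", 4.0, 0.30),
    ("MIS", "PSY", 3.0, 0.25),
    ("MIS", "PSY", 5.0, 0.50),
]
print(mean_distance_by_group(rows))
```

In the study itself, these per-rule distances enter the mixed model rather than being compared as raw averages.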

Table 6.

An example of observations for all 39 high rules for MIS respondents.

| Rule (ID) | Respondent Type (P) | Rule Type (R) | Respondent-derived Score | Normalized Respondent-derived Likelihood | EHR-learned Likelihood |
|---|---|---|---|---|---|
| 1 | MIS | ANE | 2.5556 | 0.25151 | 0.1698 |
| … | … | … | … | … | … |
| 10 | MIS | ANE | 2.7778 | 0.27406 | 0.13 |
| 11 | MIS | CODE | 4.3333 | 0.43187 | 0.4816 |
| … | … | … | … | … | … |
| 20 | MIS | CODE | 4.1111 | 0.40932 | 0.3125 |
| 21 | MIS | MIS | 4.8889 | 0.48823 | 0.1764 |
| … | … | … | … | … | … |
| 30 | MIS | MIS | 4.8889 | 0.48823 | 0.2086 |
| 31 | MIS | PSY | 4.8889 | 0.48823 | 0.1835 |
| … | … | … | … | … | … |
| 39 | MIS | PSY | 4.3333 | 0.43187 | 0.2042 |

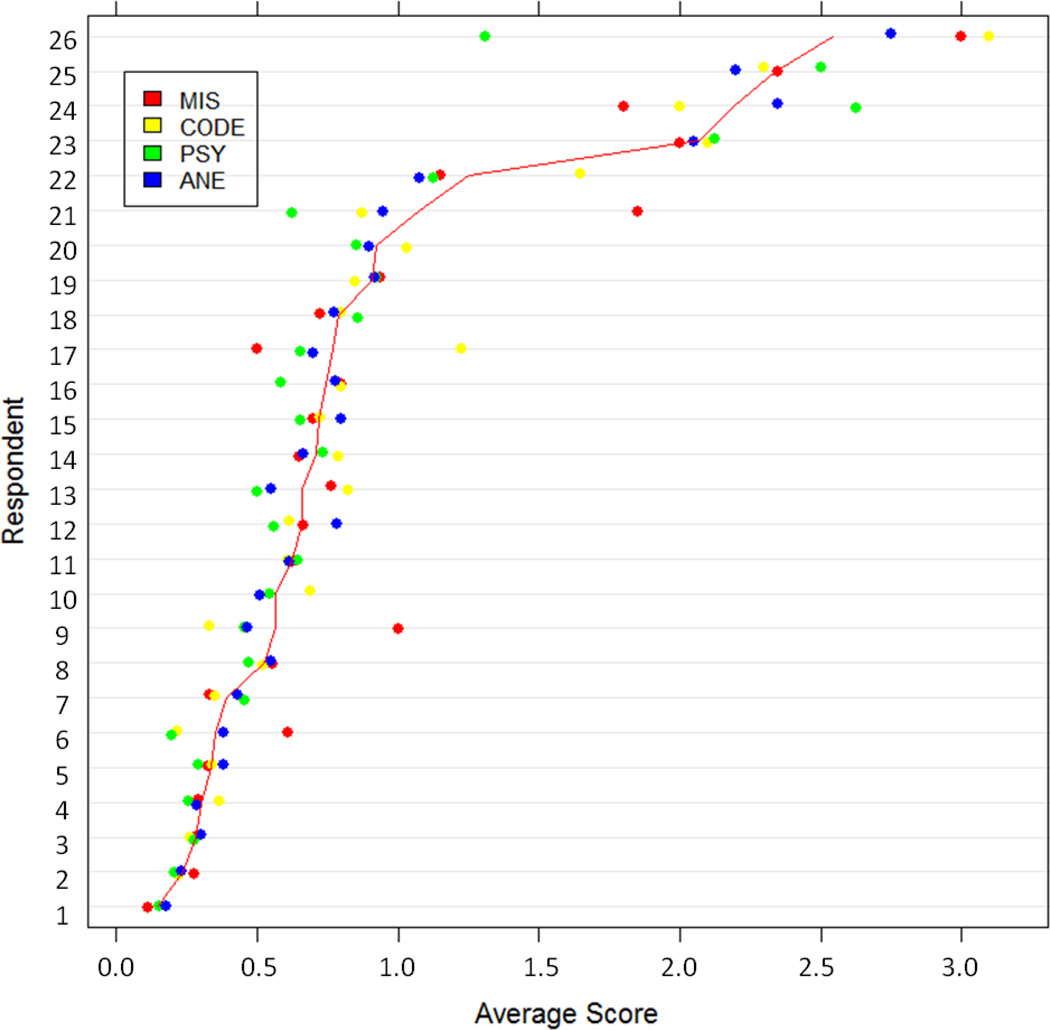

We adopted a linear mixed model with random intercepts [70] to test our hypotheses because observations made by the same respondent are likely to be correlated with one another. In particular, respondents may center their responses according to different interpretations of the answer scale. Figure 4 illustrates this effect by showing each respondent’s average likelihood score over all non-high rules. Certain respondents are inclined to assign large likelihoods (i.e., the upper right section of the plot), while others are inclined to assign small likelihoods (i.e., the lower left section of the plot).
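A random-intercept model absorbs each respondent’s personal offset on the answer scale. The sketch below fits such a model with statsmodels’ `mixedlm` on synthetic data (not the survey data) for a single respondent group, and tests a high-versus-non effect in the spirit of H2a with a likelihood ratio test between models fitted by (non-restricted) maximum likelihood (`reml=False`). The column names, effect size, and noise levels are assumptions. The paper’s models additionally encode respondent and rule types as fixed effects, which this sketch omits.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf
from scipy.stats import chi2

rng = np.random.default_rng(0)

# Synthetic data: 12 respondents, each with a personal offset on the 1-5
# scale, scoring 4 rule types x {non (0), high (1)} with a true gap of 0.6.
rows = []
for i in range(12):
    offset = rng.normal(0.0, 0.5)  # respondent-specific centering
    for rule_type in ["ANE", "CODE", "MIS", "PSY"]:
        for high in (0, 1):
            score = 2.0 + 0.6 * high + offset + rng.normal(0.0, 0.2)
            rows.append({"respondent": i, "rule_type": rule_type,
                         "high": high, "score": score})
data = pd.DataFrame(rows)

# Random intercept per respondent; ML (not REML) so that models with
# different fixed effects can be compared by a likelihood ratio test.
full = smf.mixedlm("score ~ high", data, groups=data["respondent"]).fit(reml=False)
reduced = smf.mixedlm("score ~ 1", data, groups=data["respondent"]).fit(reml=False)

lr_stat = 2.0 * (full.llf - reduced.llf)
p_value = chi2.sf(lr_stat, df=1)  # one fixed-effect parameter dropped
print(f"delta_hat={full.fe_params['high']:.3f}  LR={lr_stat:.2f}  p={p_value:.2g}")
```

Because each respondent’s offset is shared by all of that respondent’s scores, the model estimates the high-versus-non gap from within-respondent contrasts. This is why scale-centering differences like those in Figure 4 do not masquerade as rule-class effects.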

Figure 4.

Distribution of respondents’ average score for non-high rules, broken down by rule type. The line traces the mean of the average scores of the respondents.

4. Results

All results are based on the set of 26 respondents who completed the survey with answers of non-zero variance. Statistical significance was assessed using (non-restricted) maximum likelihood ratio tests at the two-sided α=0.05 significance level. All findings deemed to be significant have their corresponding confidence intervals reported in Figure A3 of the Appendix.

4.1. Local Absolute Class Knowledge

The results for Hypothesis 1a are summarized in Table 2. It can be seen that this hypothesis was confirmed for each respondent type; i.e., ANE, CODE, MIS, and PSY. Specifically, each group of respondents was able to distinguish between the high and non-high likelihood rules for their own organizational area.

Table 2.

The results for Hypothesis H1a. The findings indicate the extent to which respondents from a certain HCO area can distinguish between high and non-high likelihood rule classes for their own area.

| HCO Area | $\delta_{p,r=p}$ | p-value |

|---|---|---|

| ANE | 0.7573 | 0.007 |

| CODE | 0.4486 | 0.011 |

| MIS | 0.3262 | 0.037 |

| PSY | 0.8232 | 0.020 |

4.2. Local Relative Class Knowledge

The results for Hypothesis H1b are summarized in Table 3. This hypothesis investigated whether respondents from a specific HCO area will be better at distinguishing between classes of rules associated with their own HCO area than rules associated with other HCO areas. There is no evidence to suggest that this is the case.

Table 3.

The results for Hypothesis H1b. The findings indicate the extent to which respondents from HCO area X are better at distinguishing between high and non-high likelihood rule classes associated with area X than with area Y.

| HCO Area X | HCO Area Y | $\delta_{p,r=p} - \delta_{p,r \neq p}$ | p-value |
|---|---|---|---|
| ANE | CODE | 0.15834 | 0.4695 |
| ANE | MIS | 0.16354 | 0.5534 |
| ANE | PSY | −0.057363 | 0.7714 |
| CODE | ANE | −0.088359 | 0.6245 |
| CODE | MIS | 0.05331 | 0.7775 |
| CODE | PSY | −0.256476 | 0.439 |
| MIS | ANE | −0.099888 | 0.4511 |
| MIS | CODE | 0.03382 | 0.8425 |
| MIS | PSY | −0.195491 | 0.1724 |
| PSY | ANE | 0.10588 | 0.7471 |
| PSY | CODE | 0.28893 | 0.58 |
| PSY | MIS | 0.18671 | 0.3453 |

4.3. Global Absolute Class Knowledge

The results for Hypothesis H2a are summarized in Table 4. It can be seen that this hypothesis was also confirmed for each respondent type. This implies that respondents can distinguish between high and non-high likelihood rules regardless of the organizational area on which the rules are conditioned.

Table 4.

The results for Hypothesis H2a. The findings indicate the extent to which respondents from a certain HCO area can distinguish between high and non-high likelihood classes of rules across all HCO areas.

| HCO Area | $\delta_{p,\cdot}$ | p-value |

|---|---|---|

| ANE | 0.6911 | 1.22 × 10−8 |

| CODE | 0.5214 | 1.11 × 10−6 |

| MIS | 0.3515 | 1.91 × 10−9 |

| PSY | 0.6778 | 9.33 × 10−8 |

4.4. Global Relative Class Knowledge

The results for Hypothesis H2b are summarized in Table 5. This hypothesis was not confirmed for most pairs of respondent types, but it was confirmed in two cases. In the first case, PSY respondents were statistically significantly better than MIS respondents at distinguishing between high and non-high likelihood rules (p-value of 0.0028). In the second case, ANE respondents were statistically significantly better than MIS respondents at distinguishing between high and non-high likelihood rules (p-value of 0.00083).

Table 5.

The results for Hypothesis H2b. The findings indicate the extent to which respondents from a certain HCO area X are better than respondents from HCO area Y at distinguishing between high and non-high likelihood rule classes.

| HCO Area X | HCO Area Y | $\delta_{p_i} - \delta_{p_j}$ | p-value |
|---|---|---|---|
| ANE | MIS | 0.3396 | 0.00083 |
| CODE | MIS | 0.1699 | 0.101 |
| PSY | MIS | 0.3263 | 0.0028 |
| ANE | CODE | 0.1696 | 0.257 |
| PSY | CODE | 0.1563 | 0.280 |
| ANE | PSY | 0.0133 | 0.930 |
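As a consistency check, the effect column of Table 5 can be recomputed by subtracting the Table 4 point estimates for each pair of respondent areas. The sketch below does only that arithmetic; it suggests, but does not prove, that the H2b contrasts are differences of the H2a estimates, and it does not reproduce the p-values, which depend on the fitted models' standard errors.

```python
# delta_p. estimates for each respondent area, transcribed from Table 4.
DELTA = {"ANE": 0.6911, "CODE": 0.5214, "MIS": 0.3515, "PSY": 0.6778}

# Effect column of Table 5 (delta_{p_i} - delta_{p_j}), transcribed as printed.
TABLE5 = {
    ("ANE", "MIS"): 0.3396,
    ("CODE", "MIS"): 0.1699,
    ("PSY", "MIS"): 0.3263,
    ("ANE", "CODE"): 0.1696,
    ("PSY", "CODE"): 0.1563,
    ("ANE", "PSY"): 0.0133,
}


def recompute_gaps(delta, pairs):
    """Difference of Table 4 point estimates for each (X, Y) pair, to 4 decimals."""
    return {(x, y): round(delta[x] - delta[y], 4) for (x, y) in pairs}


if __name__ == "__main__":
    for (x, y), gap in recompute_gaps(DELTA, TABLE5).items():
        printed = TABLE5[(x, y)]
        print(f"{x} - {y}: recomputed {gap:.4f}, printed {printed:.4f}, "
              f"diff {gap - printed:+.4f}")
```

Four of the six differences match exactly; the remaining two (ANE − CODE and PSY − CODE) differ by 0.0001, which is consistent with rounding of the Table 4 estimates.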

4.5. Locally Biased by Order

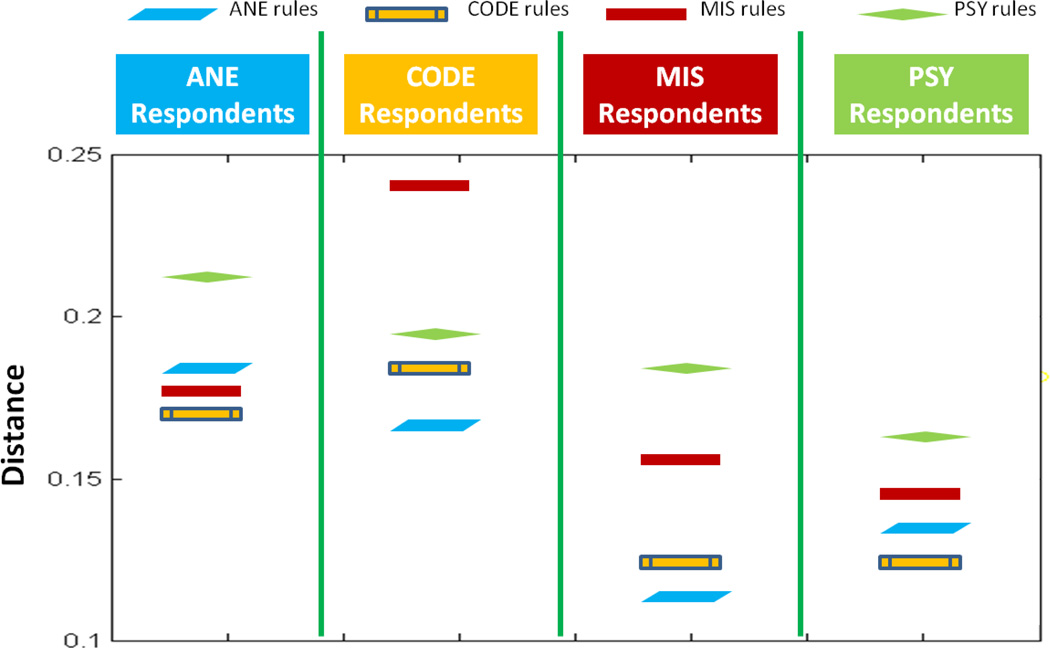

All of the p-values for Hypothesis H3 are larger than 0.2, so this hypothesis could not be confirmed. Figure 5 summarizes the results for Hypothesis H3 in terms of the distance between respondent-predicted likelihood and EHR-learned likelihood for each HCO area’s high class rules. Each column corresponds to a different respondent group, and each point corresponds to the type of rule assessed. Several respondent groups appeared to perform better on the rules of certain HCO areas (e.g., CODE respondents were better with CODE rules than with MIS and PSY rules), but none of these differences were statistically significant.

Figure 5.

The results for Hypothesis H3 in terms of the distance between respondent-predicted likelihood and EHR-learned likelihood for each HCO area’s high class rules. The smaller the distance value, the stronger the concordance between the respondents’ expectation and the actual EHR utilization.

At the same time, there are several notable observations. First, in Figure 5, the greatest concordance between respondent-predicted likelihood and EHR-learned likelihood (i.e., the smallest distance) is almost always attained for CODE or ANE rules. Second, respondents from PSY appear to be better than respondents from other areas at predicting the EHR-learned likelihood of high class rules.
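This section does not spell out how the distance plotted in Figure 5 is computed. Assuming, for illustration only, that it is the mean absolute difference between respondent-predicted and EHR-learned likelihood over a group's high class rules, the following sketch shows how the closest rule area for one respondent group would be read off. All numbers are hypothetical.

```python
from statistics import mean

# Hypothetical data (NOT study results) for one respondent group:
# rule area -> [(respondent-predicted likelihood, EHR-learned likelihood), ...]
# for that area's high class rules.
high_rules = {
    "ANE": [(0.80, 0.72), (0.65, 0.70)],
    "CODE": [(0.90, 0.86), (0.70, 0.78)],
    "MIS": [(0.40, 0.75), (0.55, 0.80)],
    "PSY": [(0.30, 0.66)],
}


def mean_abs_distance(pairs):
    """Assumed distance: mean |predicted - learned|; smaller = more concordant."""
    return mean(abs(predicted - learned) for predicted, learned in pairs)


def closest_area(rules_by_area):
    """Rule area whose high class rules this group predicted most closely."""
    return min(rules_by_area, key=lambda area: mean_abs_distance(rules_by_area[area]))


if __name__ == "__main__":
    for area, pairs in high_rules.items():
        print(area, round(mean_abs_distance(pairs), 3))
    print("closest:", closest_area(high_rules))
```

Repeating this per respondent group yields one column of points per group, matching the layout described for Figure 5; whether the study used absolute or some other distance is not stated here.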

5. Discussion

This study demonstrated that organizational relationships between areas in an HCO, as inferred from the utilization of an EHR system, are consistent with the expectations of the employees associated with these areas. Specifically, it showed that the employees of four areas in a large academic medical center (two administrative and two clinical) could distinguish between high and non-high likelihood relationships involving their own area. These findings are notable because they suggest that applying automated learning strategies to EHR utilization patterns may be useful for modeling the workings of a large healthcare system. Such models may support assessments of organizational efficiency and workflow, and may provide insight into how to refine existing organizational structures into more effective ones. The findings also suggest that EHR auditing models, such as those leveraged to redefine access control systems [42], may be trustworthy and reflect actual organizational behavior.
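To make "inferred from the utilization of an EHR system" concrete, one common way to turn audit logs into the kind of association rule respondents were asked about (e.g., given that someone from CODE accessed a patient's record, the chance that someone from MIS accesses the same record) is the rule's confidence. The sketch below computes it from a hypothetical log; it is an illustration under that assumption, not necessarily the exact procedure used in this study, and the thresholds that separate high from non-high rules are not modeled.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical audit-log rows (NOT VUMC data): (accessing user's area, patient id).
access_log = [
    ("CODE", "p1"), ("MIS", "p1"),
    ("CODE", "p2"),
    ("CODE", "p3"), ("MIS", "p3"), ("ANE", "p3"),
    ("ANE", "p4"), ("PSY", "p4"),
]


def rule_confidence(log, x, y) -> Optional[float]:
    """Estimate P(someone from y accesses a record | someone from x accessed it).

    Computed as |records accessed by both x and y| / |records accessed by x|,
    i.e. the confidence of the association rule x -> y. Returns None when x
    never accessed any record, since the rule is then undefined.
    """
    areas_by_patient = defaultdict(set)
    for area, patient in log:
        areas_by_patient[patient].add(area)
    with_x = [areas for areas in areas_by_patient.values() if x in areas]
    if not with_x:
        return None
    return sum(y in areas for areas in with_x) / len(with_x)


print(rule_confidence(access_log, "CODE", "MIS"))  # 2 of the 3 CODE records
```

Working at the patient level (a set of areas per record) means repeated accesses by the same area count once, and the rule is directional: CODE → MIS and MIS → CODE generally differ.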

It was further found that employees could distinguish between high and non-high likelihood rules regardless of which area the rules corresponded to. This finding is notable because it implies that knowledge of an HCO’s general organizational structure may not be limited to the employees of each respective area. As a result, if larger, more diverse studies of the structure of a large academic medical center are performed, it may be possible to review the correctness of the inferred structure with a small number of employees.

There are, however, several limitations to this study. First, the organizational relationships reviewed through our survey were based on our electronic health record system (StarPanel) alone. The EHR audit logs in this study correspond to the central component of the information technology infrastructure at the VUMC and do not incorporate the behavior associated with scheduling systems, the patient portal, or other technologies that facilitate workflow in the medical center. Second, this study investigated only four organizational areas, far fewer than the over 600 areas at the VUMC. Moreover, we recognize that these areas are biased towards either a larger number of users or a larger number of patients accessed in comparison to other VUMC areas. Nonetheless, as an initial study, this work verifies that HIT utilization behavior is consistent with HCO employees’ expectations for the four selected organizational areas; in future work, we will extend the analysis to all organizational areas. Third, we believe that a larger study might confirm findings that trended toward significance but lacked sufficient statistical evidence (e.g., that the rules of certain areas were more readily distinguishable than those of other areas). Finally, we did not control for certain respondent covariates, such as demographics (e.g., gender or age), experience (e.g., years working at the medical center), or level of position within the organization. With a sample of only 26 respondents, including such covariates would overparameterize our models. These issues may explain some of the surprising observations from our experiments, such as the finding that members of PSY were the most adept at assessing the rules of other areas.

6. Conclusions

In this study, we surveyed the employees of four areas within a large academic medical center to assess the correctness of organizational relationships inferred from the utilization patterns of an EHR system. Our investigation indicated, with statistical significance, that inferred organizational relations are often in line with the expectations of employees. Our findings further suggested that the interactions of certain areas of the organization (notably Coding & Charge Entry and Anesthesiology) were easier for employees to assess than others. Though the size of this study was relatively small (115 organizational relationships assessed by 26 employees), our findings suggest that models of HCOs, as well as strategies built on top of such models (e.g., security audits), may be reliable and scalable.

Supplementary Material

Summary Points.

What was already known on this topic?

- Electronic health record (EHR) systems can improve the safety and efficiency of healthcare delivery

- As EHR systems grow in complexity, it is increasingly difficult to design rule-based workflows and controls

- Audits of system utilization can allow for enhancement and refinement of initial configurations of EHR systems

What this study added to our knowledge

- Medical center employees generally agree with organizational models based on EHR utilization

- Certain organizational areas in a medical center are more challenging than others for employees to assess, suggesting that data-mined models may allow for greater nuance in organizational models in comparison to those specified by administrators

Highlights.

- The first study to show that healthcare employees agree with organizational models learned from EHR utilization patterns

- This study is based on a survey of healthcare employees from four organizational areas in a large medical center

- The findings show that certain areas in the medical center are easier than others for employees to assess

Acknowledgements

This project was supported in part by grants from the National Center for Advancing Translational Sciences (UL1TR000445), the National Library of Medicine (R01LM010207), and the National Science Foundation (CNS-0964063, CCF-0424422). The authors would like to thank Dr. Stephan Heckers (Department of Psychiatry), Karen Toles (Coding & Charge Entry), Mary Reeves (Medical Information Services), and Dr. Warren Sandberg (Department of Anesthesiology) for their assistance in recruiting the respondents for the survey reported on in this manuscript. The authors would also like to thank Brad Buske for assisting in the preparation of the access logs used in this study.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Authors’ contributions

All authors listed are justifiably credited with authorship. In detail: YC performed the statistical method design, survey design, evaluation and interpretation of the experiments, and writing of the manuscript. NL performed the survey design, method design, interpretation of the experiments, and writing of the manuscript. SN performed extraction and formatting of the data, and assisted in survey implementation and writing of the manuscript. JS performed the statistical model design, interpretation of the experiments, and writing of the manuscript. BM performed the statistical model design, survey design, interpretation of the experiments, and writing of the manuscript. All authors read and approved the final manuscript.

Conflict of Interest Statement

There are no conflicts of interest to declare.

References

1. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363:501–504. doi: 10.1056/NEJMp1006114.

2. Jha AK. Meaningful use of electronic health records: the road ahead. JAMA. 2010;304:1709–1710. doi: 10.1001/jama.2010.1497.

3. Wright A, Henkin S, Feblowitz J, McCoy A, Bates D, Sittig D. Early results of the meaningful use program for electronic health records. N Engl J Med. 2013;368:779–780. doi: 10.1056/NEJMc1213481.

4. Shamliyan TA, Duval S, Du J, Kane RL. Just what the doctor ordered. Review of the evidence of the impact of computerized physician order entry system on medication errors. Health Serv Res. 2008;43:32–53. doi: 10.1111/j.1475-6773.2007.00751.x.

5. van Rosse F, Maat B, Rademaker CM, van Vught AJ, Egberts AC, Bollen CW. The effect of computerized physician order entry on medication prescription errors and clinical outcome in pediatric and intensive care: a systematic review. Pediatrics. 2009;123:1184–1190. doi: 10.1542/peds.2008-1494.

6. Zlabek J, Wickus J, Mathiason M. Early cost and safety benefits of an inpatient electronic health record. J Am Med Inform Assoc. 2011;18:169–172. doi: 10.1136/jamia.2010.007229.

7. Chen C, Garrido T, Chock D, Okawa G, Liang L. The Kaiser Permanente electronic health record: transforming and streamlining modalities of care. Health Aff. 2009;28:323–333. doi: 10.1377/hlthaff.28.2.323.

8. Lo H, Newmark L, Yoon C, Volk L, Carlson V, et al. Electronic health records in specialty care: a time-motion study. J Am Med Inform Assoc. 2007;14:609–615. doi: 10.1197/jamia.M2318.

9. Cresswell K, Bates D, Sheikh A. Ten key considerations for the successful implementation and adoption of large-scale health information technology. J Am Med Inform Assoc. 2013;20:e9–e13. doi: 10.1136/amiajnl-2013-001684.

10. Stead WW, Lin HS, editors. Computational technology for effective health care: immediate steps and strategic directions. Washington, DC: National Academies Press; 2009. National Research Council Committee on Engaging the Computer Science Research Community in Health Care Informatics.

11. Bosch M, Faber M, Cruijsberg J, Voerman G, Leatherman S, Grol R, Hulscher M, Wensing M. Effectiveness of patient care teams and the role of clinical expertise and coordination. Med Care Res Rev. 2009;66(suppl):5S–35S. doi: 10.1177/1077558709343295.

12. Hassol A, Walker JM, Kidder D, Rokita K, Young D, Pierdon S, Deitz D, Kuck S, Ortiz E. Patient experiences and attitudes about access to a patient electronic health care record and linked web messaging. J Am Med Inform Assoc. 2004;11:505–513. doi: 10.1197/jamia.M1593.

13. Kannampallil T, Schauer G, Cohen T, Patel V. Considering complexity in healthcare systems. J Biomed Inform. 2011;44:943–947. doi: 10.1016/j.jbi.2011.06.006.

14. Ash J, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–112. doi: 10.1197/jamia.M1471.

15. Goldschmidt PG. HIT and MIS: Implications of health information technology and medical information systems. Communications of the ACM. 2005;48:69–74.

16. Campbell E, Sittig D, Ash J, Guappone K, Dykstra R. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13:547–556. doi: 10.1197/jamia.M2042.

17. Han Y, Carcillo J, Venkataraman S, Clark R, Watson R, Nguyen T, Bayer H, Orr R. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2005;116:1506–1512. doi: 10.1542/peds.2005-1287.

18. King J, Smith B, Williams L. Modifying without a trace: general audit guidelines are inadequate for open-source electronic health record audit mechanisms. Proc ACM International Health Informatics Symposium. 2012:305–314.

19. Lambert B, Schweber N. Hospital workers punished for peeking at Clooney file. New York Times. 2007 Oct 10.

20. Freudenheim M. Breaches lead to push to protect medical data. New York Times. 2011 May 30.

21. Sack K. Patient data landed online after a series of missteps. New York Times. 2011 Oct 5.

22. Francis L. The physician-patient relationship and a National Health Information Network. J Law Med Ethics. 2010;38:36–49. doi: 10.1111/j.1748-720X.2010.00464.x.

23. Kim D, Schleiter K, Crigger BJ, McMahon JW, Benjamin RM, Douglas SP. Council on Ethical, Judicial Affairs American Medical Association. A physician’s role following a breach of electronic health information. J Clin Ethics. 2010;21:30–35.

24. Rothstein MA. The Hippocratic bargain and health information technology. J Law Med Ethics. 2010;38:7–13. doi: 10.1111/j.1748-720X.2010.00460.x.

25. Associated Press. ISU to pay $400K after medical records breach. Associated Press. 2003 May 22.

26. Ornstein C. Kaiser hospital fined $250,000 for privacy breach in octuplet case. Los Angeles Times. 2009 May 15.

27. Feldstein AC, Smith DH, Perrin N, Yang X, Simon SR, Krall M, Sittig DF, Ditmer D, Platt R, Soumerai SB. Reducing warfarin medication interactions: an interrupted time series evaluation. Arch Intern Med. 2006;166:1009–1015. doi: 10.1001/archinte.166.9.1009.

28. Koplan KE, Brush AD, Packer MS, Zhang MS, Zhang F, Senese MD, Simon SR. “Stealth” alerts to improve warfarin monitoring when initiating medications. J Gen Intern Med. 2012;27:1666–1673. doi: 10.1007/s11606-012-2137-y.

29. Isaac T, Weissman JS, Davis RB, Massagli M, Cyrulik A, Sands DZ, Weingart SN. Overrides of medication alerts in ambulatory care. Arch Intern Med. 2009;169:305–311. doi: 10.1001/archinternmed.2008.551.

30. Yeh ML, Chang YJ, Wang PY, Li YC, Hsu CY. Physicians’ responses to computerized drug-drug interaction alerts for outpatients. Comput Methods Programs Biomed. 2013;111:17–25. doi: 10.1016/j.cmpb.2013.02.006.

31. Anderson R. Clinical system security: interim guidelines. BMJ. 1996;312:109–111. doi: 10.1136/bmj.312.7023.109.

32. Barrows RC, Clayton PD. Privacy, confidentiality, and electronic medical records. J Am Med Inform Assoc. 1996;3:139–148. doi: 10.1136/jamia.1996.96236282.

33. Ferreira A, Antunes L, Chadwick D, Correia R. Grounding information security in healthcare. Int J Med Inform. 2010;79:268–283. doi: 10.1016/j.ijmedinf.2010.01.009.

34. Mandl K, Szolovits P, Kohane I. Public standards and patients’ control: how to keep electronic medical records accessible but private. BMJ. 2001;322:283. doi: 10.1136/bmj.322.7281.283.

35. Blobel B. Authorisation and access control for electronic health record systems. Int J Med Inform. 2004;31:251–257. doi: 10.1016/j.ijmedinf.2003.11.018.

36. Sujansky W, Faus S, Stone E, Brennan P. A method to implement fine-grained access control for personal health records through standard relational database queries. J Biomed Inform. 2010;43:S46–S50. doi: 10.1016/j.jbi.2010.08.001.

37. Ferreira A, Cruz-Correia R, Antunes L, et al. How to break access control in a controlled manner. Proc IEEE Symp on Computer-Based Medical Systems. 2006:847–854.

38. Røstad L, Edsberg O. A study of access control requirements for healthcare systems based on audit trails from access logs. Proc Annual Computer Security Applications Conference. 2006:175–186.

39. Gunter C, Liebovitz D, Malin B. Experience-based access management: a life-cycle framework for identity and access management systems. IEEE Secur Priv. 2011;9:48–55. doi: 10.1109/MSP.2011.72.

40. Bhatti R, Grandison T. Towards improved privacy policy coverage in healthcare using policy refinement. Proc VLDB Workshop on Secure Data Management. 2007:158–173.

41. Zhang W, Gunter C, Liebovitz D, Tian J, Malin B. Role prediction using electronic medical record system audits. AMIA Annu Symp Proc. 2011:858–867.

42. Zhang W, Chen Y, Gunter C, Liebovitz D, Malin B. Evolving role definitions through permission invocation patterns. Proc ACM Symp on Access Control Models and Technologies. 2013:59–70.

43. Chen Y, Nyemba S, Malin B. Detecting anomalous insiders in collaborative information systems. IEEE Transactions on Dependable and Secure Computing. 2012;9(3):332–344. doi: 10.1109/TDSC.2012.11.

44. Chen Y, Malin B. Detection of anomalous insiders in collaborative environments via relational analysis of access logs. Proc ACM Conference on Data and Application Security and Privacy. 2011:63–74. doi: 10.1145/1943513.1943524.

45. Chen Y, Nyemba S, Zhang W, Malin B. Specializing network analysis to detect anomalous insider actions. Secur Inform. 2012;1:5. doi: 10.1186/2190-8532-1-5.

46. Chen Y, Nyemba S, Malin B. Auditing medical record accesses via healthcare interaction networks. Proc AMIA Symp. 2012:93–102.

47. Malin B, Nyemba S, Paulett J. Learning relational policies from electronic health record access logs. J Biomed Inform. 2011;44:333–342. doi: 10.1016/j.jbi.2011.01.007.

48. Ouksel A, Vyhmeister R. Performance of organizational design models and their impact on organization learning. Journal of Computational and Mathematical Organization Theory. 2000;6:395–410.

49. Simon HA. A behavioral model of rational choice. Quarterly Journal of Economics. 1955;69:99–118.

50. Tushman M, Nadler D. Information processing as an integrating concept in organizational design. Academy of Management Review. 1978;3:613–624.

51. Davenport TH, Prusak L. Information Ecology. Oxford University Press; 1997.

52. Johannsen R, Swigart R. Upsizing the individual in the downsized organization. Basic Books; 1995.

53. Tomasko RM. Rethinking the corporation: the architecture of change. AmaCom; 1993.

54. Aral S, Weill P. IT assets, organizational capabilities, and firm performance: how resource allocations and organizational differences explain performance variation. Organization Science. 2007;18:763–780.

55. Poon S, Chan J, Poon J, Land L. Patterned interactions in complex systems: the role of information technology for re-shaping organizational structures. Proceedings of the European Conference on Information Systems. 2003:35.

56. Sahaym A, Steensma HK, Schilling M. The influence of information technology on the use of loosely coupled organizational forms: an industry-level analysis. Organization Science. 2007;18:865–880.

57. Carley KM. Smart agents and organizations of the future. In: Lievrouw L, Livingstone S, editors. The Handbook of New Media. Sage Press; 2002. pp. 206–220.

58. Nemati HR, Barko C. Organizational Data Mining (ODM). Idea Group Inc.; 2004.

59. Carley KM, Newell A. The nature of the social agent. Journal of Mathematical Sociology. 1994;19:221–222.

60. Huber GP. Organizational learning: the contributing processes and the literatures. Organization Science. 1991;2:88–115.

61. Burton RM, Obel B. Strategic organizational design: developing theory for application. Kluwer Academic Publishers; 1998.

62. Flanagan ME, Saleem JJ, Millitello LG, Russ AL, Doebbeling BN. Paper- and computer-based workarounds to electronic health record use at three benchmark institutions. J Am Med Inform Assoc. 2013;20:e59–e66. doi: 10.1136/amiajnl-2012-000982.

63. Saleem JJ, Russ AL, Justice CF, Hagg H, Ebright PR, Woodbridge PA, Doebbeling BN. Exploring the persistence of paper with the electronic health record. Int J Med Inform. 2009;78:618–628. doi: 10.1016/j.ijmedinf.2009.04.001.

64. Merrill J, Bakken S, Rockoff M, Gebbie K, Carley K. Description of a method to support public health information management: organizational network analysis. J Biomed Inform. 2007;40:422–428. doi: 10.1016/j.jbi.2006.09.004.

65. Lindblom CE. The science of “muddling through”. Public Administration Review. 1959;19:78–88.

66. Steinbruner JD. The cybernetic theory of decision processes. Princeton University Press; 1974.

67. Lin Z, Carley KM. Designing stress resistant organizations: computational theorizing and crisis applications. Springer Press; 2003.

68. Chen Y, Malin B. Detection of anomalous insiders in collaborative environments via relational analysis of access logs. Proc ACM Conference on Data and Application Security and Privacy. 2011:63–74. doi: 10.1145/1943513.1943524.

69. Chen Y, Nyemba S, Zhang W, Malin B. Specializing network analysis to detect anomalous insider actions. Secur Inform. 2012;1:5. doi: 10.1186/2190-8532-1-5.

70. Chen Y, Nyemba S, Malin B. Auditing medical record accesses via healthcare interaction networks. Proc AMIA Symp. 2012:93–102.

71. Kovalerchuk B, Vityaev E, Ruiz JF. Consistent and complete data and “expert” mining in medicine. Medical Data Mining and Knowledge Discovery. 2001:238–280.

72. Kovalerchuk B, Triantaphyllou E, Ruiz J, Clayton J. Fuzzy logic in computer-aided breast cancer diagnosis. Artif Intell Med. 1997;11:75–85. doi: 10.1016/s0933-3657(97)00021-3.

73. Kovalerchuk B, Vityaev E, Ruiz J. Consistent knowledge discovery in medical diagnosis. IEEE Eng Med Biol Mag. 1999;19:26–37. doi: 10.1109/51.853479.

74. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

75. Bates DM, Maechler M. lme4: Linear mixed-effects models using S4 classes. R package version 0.999375-32. 2009.

76. Ragnhild H, Lena S, Margarethe L. Nurses’ information management across complex health care organizations. International Journal of Medical Informatics. 2005;74(11–12):960–972. doi: 10.1016/j.ijmedinf.2005.07.010.

77. Berg M. Implementing information systems in health care organizations: myths and challenges. International Journal of Medical Informatics. 2001;64(2):143–156. doi: 10.1016/s1386-5056(01)00200-3.

78. Berg M. Patient care information systems and healthcare work: a sociotechnical approach. International Journal of Medical Informatics. 1999;55:87–101. doi: 10.1016/s1386-5056(99)00011-8.
