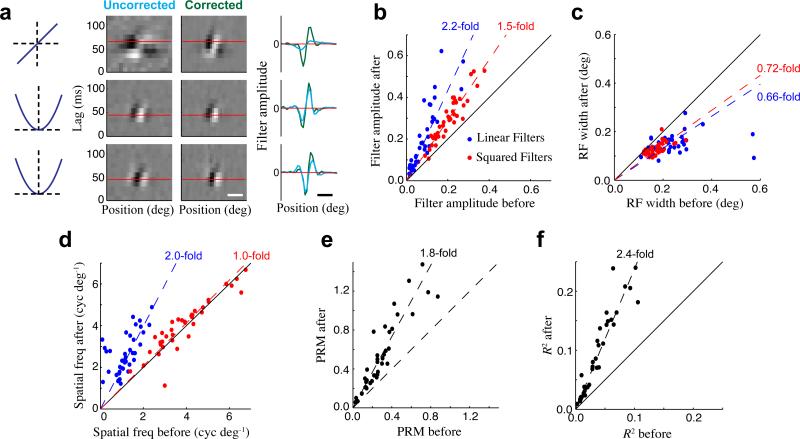

Figure 3. Improvement in model fits after correcting for eye movements.

(a) The quadratic model of an example single unit before (left) and after (right) correcting for eye movements. Spatiotemporal filters are shown for the linear term (top) and two quadratic terms (bottom), with the grayscale normalized independently for each filter. The spatial tuning of each filter (at the optimal time lag, marked by the horizontal red lines) is compared before (cyan) and after (green) eye-position correction, showing a large change in the linear filter and smaller changes in the quadratic filters. Horizontal scale bar: 0.2 deg. (b) Amplitudes of the linear (blue) and squared (red) stimulus filters across all single units (N = 38) were significantly higher after correcting for eye position. (c) Correcting for eye position decreased the apparent widths of the linear and squared stimulus filters. (d) The preferred spatial frequency of the linear stimulus filters increased substantially with eye-position correction but changed little for the squared filters. (e) Neurons’ spatial phase sensitivity, measured by their phase-reversal modulation (PRM; see Methods), increased substantially after correcting for eye movements. (f) Model performance (likelihood-based R²) more than doubled after correcting for eye movements. Note that all of the above changes with eye correction were estimated using a leave-one-out cross-validation procedure (see Methods).
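Panel (f) reports a likelihood-based R² without defining it here. The exact definition is given in the Methods. The sketch below *assumes* the common deviance-based form for Poisson spiking models: R² = (LL_model − LL_null) / (LL_saturated − LL_null). The null model predicts a constant rate, and the saturated model predicts each observed count exactly. The function names are hypothetical. For simplicity the null rate is taken as the mean of the held-out counts, which may differ from the paper's cross-validated procedure.

```python
import numpy as np

def poisson_loglik(counts, rate):
    """Poisson log-likelihood, omitting the log(counts!) term.

    That term is identical for every model evaluated on the same counts,
    so it cancels in the R^2 ratio below.
    """
    counts = np.asarray(counts, dtype=float)
    rate = np.clip(np.asarray(rate, dtype=float), 1e-12, None)  # avoid log(0)
    return float(np.sum(counts * np.log(rate) - rate))

def likelihood_r2(counts, pred_rate):
    """Assumed deviance-based pseudo-R^2 for a Poisson model (hypothetical helper)."""
    counts = np.asarray(counts, dtype=float)
    null_rate = np.full_like(counts, counts.mean())  # constant-rate null model
    ll_model = poisson_loglik(counts, pred_rate)
    ll_null = poisson_loglik(counts, null_rate)
    ll_sat = poisson_loglik(counts, counts)          # saturated model: rate == counts
    return (ll_model - ll_null) / (ll_sat - ll_null)

# Example with made-up held-out counts and model predictions
counts = np.array([0, 1, 2, 3, 4])
pred = np.array([0.5, 1.0, 2.0, 3.0, 3.5])
print(likelihood_r2(counts, pred))
```

Under this definition R² equals 0 for a model no better than a constant rate and 1 for a model that predicts every count exactly. A "2-fold increase" in panel (f) therefore means this normalized likelihood gain roughly doubled.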