Abstract

Objective monitoring of food intake and ingestive behavior in a free-living environment remains an open problem that has significant implications in study and treatment of obesity and eating disorders. In this paper, a novel wearable sensor system (automatic ingestion monitor, AIM) is presented for objective monitoring of ingestive behavior in free living. The proposed device integrates three sensor modalities that wirelessly interface to a smartphone: a jaw motion sensor, a hand gesture sensor, and an accelerometer. A novel sensor fusion and pattern recognition method was developed for subject-independent food intake recognition. The device and the methodology were validated with data collected from 12 subjects wearing AIM during the course of 24 h in which both the daily activities and the food intake of the subjects were not restricted in any way. Results showed that the system was able to detect food intake with an average accuracy of 89.8%, which suggests that AIM can potentially be used as an instrument to monitor ingestive behavior in free-living individuals.

Index Terms: Automatic ingestion monitor (AIM), chewing, eating disorders, food intake (FI) detection, obesity, pattern recognition, wearable sensors

I. Introduction

Effective interventions are required to reduce the incidence of obesity and eating disorders and their life-threatening complications. Obesity is a major health problem that affects not only the adult population but also adolescents and children. A reduction in life expectancy of individuals with severe obesity is plausible [1]. In the United States, the prevalence of obesity reached 35.5% among adults and 16.9% among adolescents in 2009–2010 [2]. Eating disorders, on the other hand, are serious mental disorders that disturb the eating habits or weight-control behavior of individuals [3]. Anorexia nervosa, bulimia nervosa, and binge eating are the most common eating disorders, with lifetime prevalence ranging from 0.6% to 4.5% in the United States [4]. Both obesity and eating disorders are medical conditions that are highly resistant to treatment and can have severe physical and physiological health consequences [5]. Thus, the implementation of accurate methods for monitoring of ingestive behavior (MIB) is critical to provide a suitable assessment of intake, particularly in individuals who would most benefit from professional help.

Current methodologies used to understand and analyze food intake (FI) patterns associated with obesity and eating disorders largely rely on laboratory studies and on self-report rather than on objective observations [6], [7]. The doubly labeled water method [8] is the most precise way to measure energy intake over a period of several days; however, it cannot identify individual eating episodes. Other methods for MIB, such as food frequency questionnaires and diet diaries, are inaccurate because subjects tend to underreport and miscalculate food consumption [9]. Thus, new approaches for objective and accurate assessment of free-living FI patterns in humans are necessary for monitoring of eating behavior [10].

Recent advances in the area of FI monitoring focused on the development of systems that attempt to address research and clinical needs by replacing manual self-reporting methods [11]–[19]. Several authors explored the use of chewing sounds captured through an in-ear microphone to detect and characterize FI activity [14], [16]. Specialized algorithms were developed to process the acoustic signal achieving acceptable results for single meal experiments in laboratory settings, where the number of food types consumed was restricted. The recognition of gestures using wearable sensors was also proposed for MIB [12], [18]. Recently, a watch-like device incorporating a miniature gyroscope was developed for measuring intake by an automatic tracking of wrist motions during hand-to-mouth (HtM) gestures or “bites” [18]. This device showed high sensitivity for “bite” counting but may carry the limitations of self-reported FI as subjects need to turn it ON and OFF at every meal to avoid spontaneous hand gestures registering as “bites.” A novel wearable sensor platform was recently presented [19]. It consisted of a microphone and a camera for detecting and characterizing FI with high recognition rate. Although these technologies presented satisfactory performances in the laboratory, the accuracy of the methodologies for detecting unrestricted FI in free-living environments remains to be tested.

Our research group is working toward the development of a noninvasive wearable device for automatic and objective MIB under free-living conditions. Our approach proposes the use of HtM gestures, chews and swallows as indicators of FI (objectively detecting timing and duration of each instance of FI, number of bites and chews in each eating episode, characterizing eating frequency and mass of ingestion) [11], [13]. Monitoring of swallowing activities by acoustical means was integrated with machine learning algorithms in [20] and [21] to create classification models, which detected periods of FI with more than 85% accuracy. The drawback of this methodology is the need for individual calibration due to an apparent uniqueness of swallowing sounds for each individual. Monitoring of chewing activities by sensing characteristic jaw motions was integrated with pattern recognition algorithms in [17] and [22] to create group models that achieved >80% accuracy for FI detection in laboratory settings. The implementation of such group models eliminated the need for individual calibration.

In this paper, we present the design and validation of a novel wearable sensor system (automatic ingestion monitor, AIM) for objective detection of FI in free living. To the best of our knowledge, AIM is the first wearable sensor system that is capable of objective 24-h MIB without relying on any input or self-report from the subject, only compliance with wearing of the device. AIM wirelessly integrates three different sensors with a smartphone: a jaw motion sensor to monitor chewing, a hand gesture sensor to monitor HtM gestures, and an accelerometer to monitor body motion. A novel approach to sensor information fusion and pattern recognition based on artificial neural networks (ANNs) is used for robust and accurate detection of FI in free-living conditions, which present a substantially more challenging environment than the laboratory. The device and methodology were validated with data collected from 12 subjects who wore AIM in free living during 24 h without any restrictions in their FI and daily activities (except showering).

II. AIM

A. Device Description

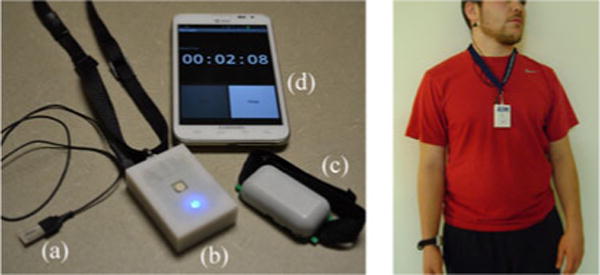

The wearable sensor system of AIM consists of four key parts: a jaw motion sensor, a wireless module, an RF transmitter of the HtM sensor, and an Android smartphone. Fig. 1 (right) shows a picture of a subject wearing AIM.

Fig. 1.

Left: wearable sensor system: (a) jaw motion sensor, (b) wireless module, (c) RF transmitter, and (d) smartphone. Right: subject wearing AIM.

The jaw motion sensor [see Fig. 1 (left-a)] was attached by medical adhesive below the earlobe and used to capture characteristic motion of the jaw during FI [17]. The sensor was the LDT0-028K piezoelectric film element which was interfaced to the microcontroller through a buffering, level shifting, and differential amplifying op-amp circuit.

The wireless module [see Fig. 1 (left-b)] was worn on a lanyard around the neck. It contained a custom-built electronic circuit powered by a Li-Polymer 3.7 V 680 mA h battery (Tenergy). This circuit incorporated: 1) MSP430F2417 processor with an 8-channel 12-bit ADC used to sample analog sensor signals; 2) RN-42 Bluetooth module with serial port profile; 3) M25P64 64 Mbit serial flash memory for data buffering in situations where wireless Bluetooth connection is temporarily unavailable; 4) preamplifier for jaw motion sensor (sampled at 1 kHz); 5) RF receiver for HtM gesture sensor (sampled at 10 Hz) operating in radio frequency identification frequency band of 125 kHz; 6) ADXL335 low-power three-axis accelerometer for capturing body acceleration (sampled at 100 Hz); and 7) a self-report push button (sampled at 10 Hz) that was used in this study for pattern recognition algorithm development and validation and use of which will not be required in the future. The sensor signals were delivered in near real time via Bluetooth to an Android smartphone that acted as a data logger.

The RF transmitter module was worn on the inner side of the dominant arm at the wrist [see Fig. 1 (left-c)]. It interacted with the RF receiver in the wireless module to implement a proximity sensor for detection of characteristic HtM gestures during FI [23]. The response of the hand gesture sensor covered a range of 0–20 cm, saturating at its maximum amplitude from 0 to 10 cm and reaching its minimal value at 20 cm.

B. Data Collection and Signal Preprocessing

A total of 12 subjects (six male and six female) were recruited to participate in this study. The average age was 26.7 y (SD ± 3.7) and the average body mass index was 24.39 kg/m² (SD ± 3.81). Subjects did not present any medical condition that affected normal FI. This study was approved by the Internal Review Board at The University of Alabama and subjects read and signed an informed consent document.

Subjects were asked to wear AIM in free living for a period of 24 h, usually starting in the morning before breakfast and finishing in the morning of the following day. The experiments were initiated in the laboratory, where a member of the research team helped subjects to put on the AIM device. The lanyard was then adjusted to the proper length, making sure that subjects were comfortable while the AIM was in a good position for capturing hand gestures. An Android smartphone that included a data logger application was provided to subjects and they were asked to keep the phone with them at all times to ensure proper data transmission. Subjects were then dismissed from the laboratory and asked to continue with their regular activities of daily living (except showering/swimming and other activities including water immersion) without restrictions. During the experiments, subjects ate ad libitum, meaning that they were able to eat any kind of food at any time of the day according to their own preferences and without any restrictions on the content and size of each eating episode. Subjects were asked to come back to the laboratory after 24 h of data collection.

The push button included in AIM was used as the primary method for self-reporting FI. Subjects marked each eating episode by pressing and holding the button with their nondominant hand (not equipped with the proximity sensor) during the chewing process. As a secondary method of self-report, subjects completed a food journal indicating what type of foods they ate during the experiment as well as the start and end time of each eating period. The push button signal was used as the gold standard for identifying, timing, and marking FI events in the sensor signals. The food journal was used to corroborate button data as well as to identify and remove accidental presses of the button. The annotated sensor data were used for developing the FI detection algorithm.

Selecting 24 h as the recording period for this study was based on two main reasons. First, it was long enough to cover a full cycle of daily meals and activities, including potential night eating [24]. Second, it was short enough to avoid introducing a significant error related to self-reporting intake over several days [25]. Also, the goal was to demonstrate recognition of FI in realistic conditions of daily living without the high costs related to a longer observational study.

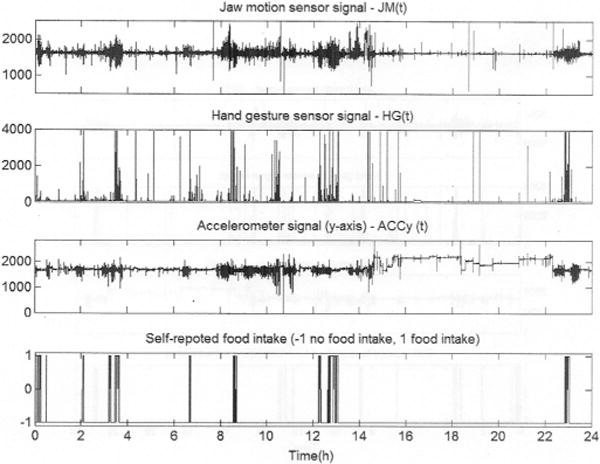

Fig. 2 shows the raw signals collected for one subject during a 24-h period: the jaw motion signal (top graph), the hand gesture signal (second graph), the accelerometer signal for the y-axis (third graph), and the self-reported FI (bottom graph). The self-report indicates the consumption of four major meals: breakfast (at the beginning of the experiment), lunch (at ~3.5 h after beginning), dinner (at ~13 h) and another breakfast before the end of the experiment (at ~23 h). Snacking was also reported to occur at approximately 2, 6.5, and 12.5 h after the beginning of the experiment. The hand gesture signal shows an increment in the hand gesture activity during FI intervals due to the presence of a high number of HtM gestures in the process of bringing the food to the mouth. Also, it is possible to visually identify the interval where the subject was sleeping (i.e., from 16 to 22 h) by looking at the three sensor signals together. The jaw motion, hand gesture, and body acceleration signals present a very low standard deviation during sleeping compared to the rest of the activities due to the subject resting quietly with minimal activity.

Fig. 2.

Example of the signals collected by AIM in a 24-h experiment.

AIM captured and stored the jaw motion signal JM(t), the accelerometer signals for each axis ACCx(t), ACCy(t), and ACCz(t), the hand gesture signal HG(t), and the push button signal PB(t). All sensor signals were time-synchronized and had exactly the same time duration. JM(t) and ACC(t) were high-pass filtered to remove the dc component (0.1 Hz cutoff frequency). These signals were then normalized to compensate for variations in the signal amplitude between subjects. HG(t) was normalized to provide a value of “1” at saturation. HtM gestures with durations outside the 0.25–7.5 s range were removed: most hand gestures related to FI were shorter than 7.5 s, and gestures shorter than 0.25 s are highly unlikely and most probably reflect artifacts in the signal.
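The duration rule for HtM gestures can be illustrated with a minimal Python sketch. It assumes that a gesture is a run of consecutive HG(t) samples above a detection threshold and that HG(t) is sampled at 10 Hz, as stated for the RF receiver. The function names and the threshold value of 0.5 are hypothetical and are not taken from the study.

```python
def find_gestures(hg, threshold):
    """Return (start, end) sample index pairs where hg stays above threshold.

    The end index is exclusive. The threshold is a hypothetical value chosen
    above the noise floor of the normalized signal.
    """
    gestures = []
    start = None
    for i, value in enumerate(hg):
        if value > threshold and start is None:
            start = i                      # gesture begins
        elif value <= threshold and start is not None:
            gestures.append((start, i))    # gesture ends
            start = None
    if start is not None:                  # gesture still open at end of signal
        gestures.append((start, len(hg)))
    return gestures


def filter_gestures(gestures, fs_hz=10.0, min_s=0.25, max_s=7.5):
    """Keep only gestures whose duration lies within [min_s, max_s] seconds."""
    kept = []
    for start, end in gestures:
        duration_s = (end - start) / fs_hz
        if min_s <= duration_s <= max_s:
            kept.append((start, end))
    return kept


# Synthetic normalized signal: a 0.1 s spike, a 2 s gesture, and a 10 s plateau.
hg = [0] * 5 + [1] * 1 + [0] * 5 + [1] * 20 + [0] * 5 + [1] * 100 + [0] * 5
all_gestures = find_gestures(hg, threshold=0.5)
valid_gestures = filter_gestures(all_gestures)
```

In this synthetic example only the 2 s gesture survives; the spike is treated as an artifact and the long plateau as a non-eating posture.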

III. Sensor Fusion and Pattern Recognition Methodology

Monitoring of FI under free-living conditions over an extended period of time generates more complex datasets than monitoring in the laboratory. The intra- and intersubject variability of the sensor signals increases due to the presence of artifacts in the signals caused by activities from real-life situations that are not possible to reproduce in a laboratory. The intrasubject variability arose from the different activities performed by a subject during the 24-h period (e.g., walking, talking, eating, and sleeping) and the intersubject variability arose from subjects having different eating patterns and lifestyles. This makes it difficult to apply models created with laboratory data to free-living data and maintain an acceptable performance. For example, a group (population-based) model created in our previous study with a database encompassing chewing information from 20 subjects achieved an accuracy of 81% under laboratory settings [17]. When the same model was applied to the free-living data collected in the present study, the accuracy decreased to 62%. This is a clear indication that laboratory experiments may not provide data that are representative of FI in the community and that food detection models should be created using innovative methodologies based on free-living data. For that reason, a novel sensor fusion and pattern recognition approach was implemented in this paper. This approach was divided into three steps, which are explained in detail in this section: 1) the Sensor Fusion step for reducing the size of the original dataset and eliminating the outliers; 2) the Feature Extraction step for extracting features from the reduced dataset; and 3) the Classification step for training a classifier to detect FI episodes.

A. Sensor Fusion

The data collected after 24 h contained only about 3% of data related to FI, which generated a highly unbalanced dataset. The sensor fusion approach combined information from the sensor signals to identify and remove periods of “no food intake (NFI)” within the signals for balancing the dataset and removal of the signal artifacts and outliers. It involved two major steps. First, the product between the absolute values of JM(t) and HG(t) was computed as

$$SF_1(t) = |JM(t)| \cdot |HG(t)| \tag{1}$$

SF1(t) was divided into nonoverlapping epochs ei of 30 s duration with i = 1, 2,…, MS total number of epochs for each subject S. The size selected for the epoch was found to present the best tradeoff between the frequency of physiological events such as bites, chewing and swallowing and time resolution of FI monitoring [11], [13]. The mean absolute value (MAV) of the signal SF1(t) within ei was computed as

$$\mathrm{MAV}_{e_i} = \frac{1}{N} \sum_{k=1}^{N} |x_k| \tag{2}$$

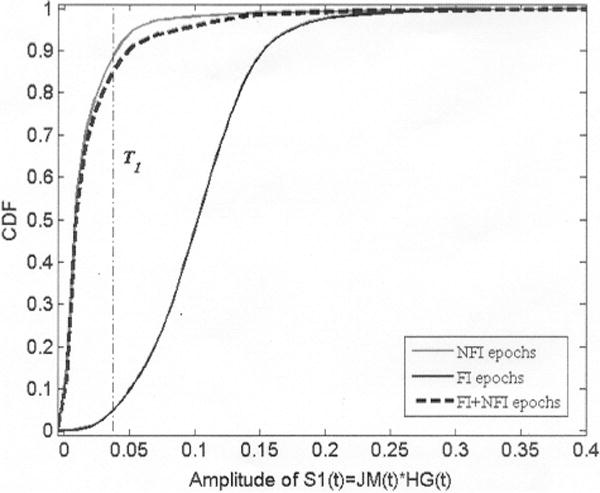

where xk is the kth sample in an epoch ei of SF1(t) containing a total of N samples. The self-report signal, PB(t), was also divided into 30 s epochs and used to assign a class label ci ∈ {“food intake” (FI), “no food intake” (NFI)} to each ei. An epoch was labeled as FI if at least 10 s of self-report within the ith epoch was marked as FI; otherwise, it was labeled as NFI. The 10 s was chosen based on an estimation of the shortest duration of the physiological sequence that generates intake: bite, chews, and swallows [26]. SF1(t) epochs would have higher MAV during FI due to the presence of HtM gestures (associated with bites and use of napkins) and jaw motion activity (chewing) during eating. For that reason, a threshold level T1 was set to remove epochs in SF1(t) belonging to activities that did not present a combination of jaw motion and hand gestures (e.g., sleeping, sitting quietly, working on a computer, or watching TV). Fig. 3 illustrates the cumulative distribution function (CDF) of the MAV for FI and NFI epochs in SF1(t) for one subject. The CDF represents the probability that an epoch will have a MAV less than or equal to a given value on the x-axis. The CDF for NFI epochs grows faster than the CDF for FI epochs (see Fig. 3), meaning that there is a high probability of finding an NFI epoch with low MAV but a low probability of finding an FI epoch with the same MAV, and vice versa. Consequently, a common threshold value, T1, was determined for all subjects and the indexes of the epochs having a MAV below T1 were stored in a vector IdxSF1 for further processing.
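The first sensor fusion step can be sketched in Python as follows. The sketch assumes that JM(t), HG(t), and PB(t) have already been brought to a common sampling rate, which the text does not describe, and the function names are hypothetical. It computes the product signal, splits it into nonoverlapping 30 s epochs, evaluates the MAV of each epoch, and labels an epoch as FI when at least 10 s of the push button signal is active.

```python
def fuse_jaw_and_hand(jm, hg):
    """Sample-wise product of absolute values, as described for SF1(t)."""
    return [abs(j) * abs(h) for j, h in zip(jm, hg)]


def split_epochs(signal, fs_hz, epoch_s=30):
    """Split a signal into nonoverlapping epochs; a trailing partial epoch is dropped."""
    n = int(fs_hz * epoch_s)
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]


def mean_absolute_value(epoch):
    """MAV of one epoch: average of the absolute sample values."""
    return sum(abs(x) for x in epoch) / len(epoch)


def label_epoch(pb_epoch, fs_hz, min_fi_s=10):
    """Label an epoch FI if the button was pressed for at least min_fi_s seconds."""
    pressed_s = sum(1 for v in pb_epoch if v) / fs_hz
    return "FI" if pressed_s >= min_fi_s else "NFI"


# Toy example at 1 Hz (an assumed rate for brevity): two 30 s epochs.
fs = 1.0
jm = [1, -1] * 30
hg = [0.5] * 30 + [0.0] * 30
pb = [1] * 10 + [0] * 20 + [1] * 9 + [0] * 21

sf1 = fuse_jaw_and_hand(jm, hg)
mavs = [mean_absolute_value(e) for e in split_epochs(sf1, fs)]
labels = [label_epoch(e, fs) for e in split_epochs(pb, fs)]
```

In the toy data the second epoch has no hand gesture activity, so its MAV is zero, and its 9 s of button press falls just short of the 10 s rule.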

Fig. 3.

CDF of the MAV for FI and NFI epochs in SF1 (t). Dashed line represents the CDF for all epochs combined.

In the second step, the mean of the acceleration signals was computed as follows:

$$SF_2(t) = \frac{1}{3}\left[ACC_x(t) + ACC_y(t) + ACC_z(t)\right] \tag{3}$$

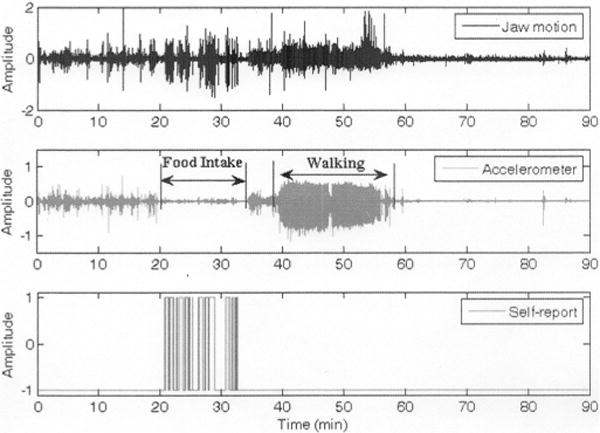

SF2(t) was divided into MS nonoverlapping epochs of 30 s duration and a class label ci was assigned to each epoch ei as in the first step. The MAV of SF2(t) within each epoch was calculated as in (2). Since most of the individuals consumed foods in a sedentary position, it was reasonable to anticipate that SF2(t) epochs would have higher MAV during activities involving body acceleration (e.g., walking or running) than during FI. An example of this rationale is presented in Fig. 4, which shows a clear difference in the amplitude of SF2(t) (middle) during eating and during walking. This difference is less clear in the jaw motion signal (top). Thus, a common threshold value T2 was set for all subjects and the indexes of the epochs in SF2(t) with a MAV above T2 were stored in a vector IdxSF2 for further processing.

Fig. 4.

FI and walking intervals captured by the jaw motion and accelerometer sensors. Reduced body acceleration is seen during FI.

The two steps of the Sensor Fusion algorithm were performed independently, so the epoch indexes in IdxSF1 and IdxSF2 were grouped into a new vector IdxSF = {IdxSF1 ∪ IdxSF2} ∈ ℜ^DS with DS < MS, where MS is the total number of epochs for each subject S. Finally, the signals JM(t), HG(t), ACCx(t), ACCy(t), ACCz(t), and PB(t) for each subject were divided into MS nonoverlapping epochs of 30 s duration, which were synchronized in time with the SF1(t) and SF2(t) epochs. The epoch indexes stored in IdxSF were then used to label the corresponding sensor signal epochs as NFI and remove them from the dataset used in the pattern recognition task. As a result, a total of DS epochs were removed from the initial MS epochs for each subject S. This procedure yielded a more balanced dataset with approximately 36% of epochs labeled as FI.
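The pruning logic described above amounts to a union of two index sets. A minimal Python sketch follows; the function name and the threshold values used in the example are hypothetical, since the values of T1 and T2 are not reported in the text.

```python
def prune_epochs(mav_sf1, mav_sf2, t1, t2):
    """Return (kept, removed) epoch indexes for one subject.

    An epoch is removed as NFI if its SF1 MAV is below t1 (no combined
    jaw and hand activity) or its SF2 MAV is above t2 (strong body motion).
    """
    idx_sf1 = {i for i, m in enumerate(mav_sf1) if m < t1}
    idx_sf2 = {i for i, m in enumerate(mav_sf2) if m > t2}
    removed = idx_sf1 | idx_sf2            # IdxSF = IdxSF1 ∪ IdxSF2
    kept = [i for i in range(len(mav_sf1)) if i not in removed]
    return kept, sorted(removed)


# Five toy epochs with made-up MAV values and thresholds.
mav_sf1 = [0.01, 0.5, 0.6, 0.02, 0.7]
mav_sf2 = [0.1, 0.1, 0.9, 0.8, 0.2]
kept, removed = prune_epochs(mav_sf1, mav_sf2, t1=0.05, t2=0.5)
```

Epoch 3 is flagged by both rules but is counted once, which is why the size of the union, DS, can be smaller than the sum of the sizes of the two index vectors.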

B. Feature Extraction

Time- and frequency-domain features were extracted from the remaining epochs of the sensor signals and combined to create a feature vector fi ∈ ℜ^68 that represented a 30 s interval. Each vector fi was formed by combining features from the sensor signals as fi = {fJM, fHG, fACC}, where fJM ∈ ℜ^38, fHG ∈ ℜ^9, and fACC ∈ ℜ^21 represented the subsets of features extracted from JM(t), HG(t), and the accelerometer signals, respectively [27].

The subset fJM included time- and frequency-domain features extracted from each epoch of the jaw motion signal (see Table I). Frequency-domain features were computed from different ranges of the frequency spectrum of JM(t) within each epoch. Previous studies determined that features in the 1.25–2.5 Hz range contained information about chewing, which was successfully used to discriminate between FI and NFI epochs [17]. Also, the frequency spectrum in the 100–300 Hz range provided valuable information for identifying talking intervals, because the fundamental frequencies of adult voices were found in that range [22]. Finally, features in the 2.5–10 Hz frequency range were also included in fJM as they may contain important information related to other activities (e.g., walking) that could help to discriminate between intake and no intake.
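As an illustration of the band-limited spectral features, the following Python sketch computes the energy of a signal within a frequency band using a naive discrete Fourier transform. It is a sketch under stated assumptions rather than the authors' implementation: the function name is hypothetical, energy is taken as the sum of squared DFT magnitudes over the bins in the band, and the example uses a 50 Hz sampling rate to stay small, whereas the jaw motion signal was sampled at 1 kHz.

```python
import cmath
import math


def band_energy(x, fs_hz, lo_hz, hi_hz):
    """Sum of squared DFT magnitudes for bins with lo_hz <= f < hi_hz.

    Naive O(N^2) DFT over the one-sided spectrum; adequate for a short demo only.
    """
    n = len(x)
    energy = 0.0
    for k in range(n // 2 + 1):
        f = k * fs_hz / n                  # center frequency of bin k
        if lo_hz <= f < hi_hz:
            xk = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            energy += abs(xk) ** 2
    return energy


# Synthetic "chewing-like" signal: a 2 Hz sine, 4 s long, sampled at 50 Hz.
fs = 50.0
x = [math.sin(2 * math.pi * 2.0 * t / fs) for t in range(200)]

chew_ene = band_energy(x, fs, 1.25, 2.5)   # chewing range from the text
walk_ene = band_energy(x, fs, 2.5, 10.0)   # walking range from the text
```

For this pure 2 Hz tone nearly all of the energy falls in the chewing band, so a ratio such as chew_ene / walk_ene would be very large; real jaw motion epochs would spread energy across bands.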

TABLE I.

Features Extracted From the Jaw Motion Signal

| # | Description | # | Description |
|---|---|---|---|
| 1 | Mean Absolute Value (MAV) | 20 | Energy spectrum in chewing range² (chew_ene) |
| 2 | Root Mean Square (RMS) | 21 | Entropy of spectrum chewing range (chew_entr) |
| 3 | Maximum value (Max) | 22 | Ratio: chew_ene / spectr_ene |
| 4 | Median value (Med) | 23 | Energy spectrum in walking range³ (walk_ene) |
| 5 | Ratio: MAV / RMS | 24 | Entropy of spectrum walking range (walk_entr) |
| 6 | Ratio: Max / RMS | 25 | Ratio: walk_ene / spectr_ene |
| 7 | Ratio: MAV / Max | 26 | Energy spectrum in talking range⁴ (talk_ene) |
| 8 | Ratio: Med / RMS | 27 | Entropy of spectrum talking range (talk_entr) |
| 9 | Signal entropy (Entr) | 28 | Ratio: talk_ene / spectr_ene |
| 10 | Number of zero crossings (ZC) | 29 | Ratio: chew_ene / walk_ene |
| 11 | Mean time between ZC | 30 | Ratio: chew_entr / walk_entr |
| 12 | Number of peaks (NP) | 31 | Ratio: chew_ene / talk_ene |
| 13 | Average range | 32 | Ratio: chew_entr / talk_entr |
| 14 | Mean time between peaks | 33 | Ratio: walk_ene / talk_ene |
| 15 | Ratio: NP / ZC | 34 | Ratio: walk_entr / talk_entr |
| 16 | Ratio: ZC / NP | 35 | Fractal dimension |
| 17 | Wavelength [23] | 36 | Peak frequency in chewing range (maxf_chew) |
| 18 | Number of slope sign changes | 37 | Peak frequency in walking range (maxf_walk) |
| 19 | Energy of the entire frequency spectrum¹ (spectr_ene) | 38 | Peak frequency in talking range (maxf_talk) |

¹ Frequency range: 0.1–500 Hz; ² Chewing range: 1.25–2.5 Hz; ³ Walking range: 2.5–10 Hz; ⁴ Talking range: 100–300 Hz.
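Several of the time-domain entries in Table I can be computed with a few lines of Python. The sketch below is illustrative only: the function name is hypothetical, and the "Wavelength" feature is interpreted here as the cumulative waveform length (the sum of absolute differences between consecutive samples), which is an assumption about the definition used in [23].

```python
import math


def time_features(x):
    """Compute a handful of Table I style time-domain features for one epoch."""
    n = len(x)
    mav = sum(abs(v) for v in x) / n
    rms = math.sqrt(sum(v * v for v in x) / n)
    # Zero crossings: consecutive samples with opposite signs.
    zc = sum(1 for a, b in zip(x, x[1:]) if a * b < 0)
    # Slope sign changes: the first difference changes sign.
    ssc = sum(1 for a, b, c in zip(x, x[1:], x[2:]) if (b - a) * (c - b) < 0)
    # Waveform length (assumed meaning of "Wavelength").
    wl = sum(abs(b - a) for a, b in zip(x, x[1:]))
    return {
        "MAV": mav,
        "RMS": rms,
        "MAV/RMS": mav / rms if rms else 0.0,
        "Max": max(x),
        "ZC": zc,
        "SSC": ssc,
        "WL": wl,
    }


features = time_features([1, -1, 1, -1])
```

The guard on the MAV / RMS ratio avoids a division by zero for an all-zero epoch, which can occur in free-living data when a sensor is idle.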

The subset fHG included time-domain features extracted from the HtM gestures observed within each epoch (see Table II). HtM gestures were detected when the amplitude of HG(t) exceeded a predefined threshold value above the electronic noise.

TABLE II.

Features Extracted From the Hand Gesture Signal

| # | Description | # | Description |
|---|---|---|---|
| 1 | Num. of HtM gestures within epoch (num_HtM) | 6 | Wavelength (WL) |
| 2 | Duration of HtM (D_HtM) | 7 | Ratio: WL / D_HtM |
| 3 | MAV of HtM (MAV_HtM) | 8 | Ratio: D_HtM / num_HtM |
| 4 | Standard deviation of HtM | 9 | Ratio: MAV_HtM / D_HtM |
| 5 | Maximum value (Max_HtM) | | |

The subset fACC contained time-domain features computed from the accelerometer signals from each axis (see Table III). Features included the MAV, SD, and median value of the signal as well as the number of zero crossings, the mean time between crossings, and the entropy of the signal within the epoch. The means of the MAV, SD, and entropy across the three axes were also computed, giving a total of 21 features.

TABLE III.

Features Extracted From the Accelerometer Signals

| # | Description | # | Description |
|---|---|---|---|
| 1 | MAV of ACCx (MAVx) | 12 | Entropy of ACCy (Entry) |
| 2 | SD of ACCx (SDx) | 13 | MAV of ACCz (MAVz) |
| 3 | Median of ACCx | 14 | SD of ACCz (SDz) |
| 4 | Num. of zero crossings (ZC) for ACCx | 15 | Median of ACCz |
| 5 | Mean time between ZC for ACCx | 16 | Num. of ZC for ACCz |
| 6 | Entropy of ACCx (Entrx) | 17 | Mean time between ZC for ACCz |
| 7 | MAV of ACCy (MAVy) | 18 | Entropy of ACCz (Entrz) |
| 8 | SD of ACCy (SDy) | 19 | Mean of {MAVx, MAVy, MAVz} |
| 9 | Median of ACCy | 20 | Mean of {SDx, SDy, SDz} |
| 10 | Num. of ZC for ACCy | 21 | Mean of {Entrx, Entry, Entrz} |
| 11 | Mean time between ZC for ACCy | | |

Finally, each feature vector fi was associated with a class label ti ∈ {1,−1}, where ti = 1 and ti = −1 represented FI and NFI, respectively. The same rule used in the Sensor Fusion step was used here to assign class labels to each fi vector. A dataset containing the pairs {fi,ti} was presented to a classification algorithm for training, validation, and testing.

C. Classification

ANN is a supervised learning technique that has shown excellent results for many pattern recognition and classification problems [28]. ANN is robust and flexible, can analyze complex patterns, and can handle noisy data. In this study, a population-based classification model based on ANN was trained to discriminate between FI and NFI epochs. Implementation of a population-based model (group model) was preferred over subject-dependent models (individual models) to achieve a robust model that included intra- and intersubject variability and required no individual calibration.

A three-layered (input layer, hidden layer, and output layer) feedforward neural network with the back-propagation training algorithm was the network topology implemented. The input layer consisted of 68 predictors (one for each feature) whereas the hidden layer contained a total of ten neurons. The output layer consisted of one output neuron, which indicated the final class label ti assigned to the input vector fi in the test set. The hyperbolic tangent sigmoid was the transfer function used for the hidden and output layers. Training, validation, and testing of the model was done using the neural network toolbox available in MATLAB R2011b (The Mathworks, Inc).
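The forward pass of a network with this topology can be sketched in plain Python. This is not the MATLAB toolbox model used in the study: the weights below are random placeholders rather than trained values, the function names are hypothetical, and the sign-based decision rule is an assumption about how the tanh output was mapped to the class labels.

```python
import math
import random

N_FEATURES = 68   # size of the feature vector f_i in the text
N_HIDDEN = 10     # number of hidden neurons reported in the text


def init_weights(seed=0):
    """Random placeholder weights; a real model would learn them by back-propagation."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-0.1, 0.1) for _ in range(N_FEATURES)]
                for _ in range(N_HIDDEN)]
    b_hidden = [0.0] * N_HIDDEN
    w_out = [rng.uniform(-0.1, 0.1) for _ in range(N_HIDDEN)]
    b_out = 0.0
    return w_hidden, b_hidden, w_out, b_out


def forward(f, w_hidden, b_hidden, w_out, b_out):
    """One forward pass: tanh hidden layer followed by a single tanh output neuron."""
    hidden = [math.tanh(sum(w * x for w, x in zip(row, f)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return math.tanh(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


def predict_label(y):
    """Assumed decision rule: +1 (FI) for non-negative output, -1 (NFI) otherwise."""
    return 1 if y >= 0 else -1


params = init_weights(seed=1)
y = forward([0.5] * N_FEATURES, *params)
label = predict_label(y)
```

With 68 inputs, ten hidden neurons, and one output, the model has 68 × 10 + 10 + 10 + 1 = 701 parameters, which is consistent with the later remark that the classifier is computationally lightweight.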

A leave-one-out cross-validation procedure was used to evaluate the performance of the ANN model. This allowed training and validating the model with data from 11 subjects (80% of the data for training and 20% for validation) and testing with data from an independent subject. This procedure allowed each subject to be the test subject once. The final result was obtained by averaging the results across all subjects. Per-epoch classification accuracy was the metric used to evaluate the performance of the classification model [17]. For each subject, the accuracy value was computed using all of the subject’s available epochs (including epochs labeled as “NFI” in the Sensor Fusion stage). This was done to illustrate the performance of the entire Sensor Fusion and Pattern Recognition methodology. Accuracy was computed as the average of precision (P) and recall (R) to account for the high number of true negatives that are typical in monitoring of FI over long periods of time. These metrics measured the ability of the model to recognize FI epochs while rejecting NFI epochs. Finally, a weighted average accuracy was computed to determine whether the size of each subject’s dataset affected the final results. This average was weighted by the proportion of a subject’s epochs in the total number of epochs.
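The evaluation protocol can be summarized with a short Python sketch. The function names are hypothetical and the label vectors in the example are made up; the sketch only shows how the folds are formed, how accuracy is derived from precision and recall, and how the weighted average is taken over subjects.

```python
def leave_one_subject_out(subject_ids):
    """Yield (train_ids, test_id) pairs so that each subject is tested exactly once."""
    for test_id in subject_ids:
        train_ids = [s for s in subject_ids if s != test_id]
        yield train_ids, test_id


def precision_recall_accuracy(true, pred):
    """Labels are +1 (FI) and -1 (NFI); accuracy is the mean of precision and recall."""
    tp = sum(1 for t, p in zip(true, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(true, pred) if t == -1 and p == 1)
    fn = sum(1 for t, p in zip(true, pred) if t == 1 and p == -1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, (precision + recall) / 2


def weighted_average(accuracies, n_epochs):
    """Average of per-subject accuracies weighted by each subject's share of epochs."""
    total = sum(n_epochs)
    return sum(a * n for a, n in zip(accuracies, n_epochs)) / total


folds = list(leave_one_subject_out(list(range(12))))

# Made-up labels for one test subject: 3 FI epochs and 5 NFI epochs.
true = [1, 1, 1, -1, -1, -1, -1, -1]
pred = [1, 1, -1, 1, -1, -1, -1, -1]
p, r, acc = precision_recall_accuracy(true, pred)

w_acc = weighted_average([0.9, 0.8], [100, 300])
```

Because true negatives do not appear in either precision or recall, the large number of correctly rejected NFI epochs does not inflate this accuracy measure, which is the motivation given in the text.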

IV. Results

Information collected from the food diaries indicated that the foods consumed were mostly solids and liquids (a total of 40 different foods). There was only one event associated with semiliquid intake (yogurt). Tea/coffee (consumed 12 times), rice (nine times), toast (six), oranges (six), chips (five), banana (five), granola bar (five), cereal (four), chicken (two), and waffles (two) were the foods of choice for participants.

The dataset used to create the FI detection model contained approximately 10 h of data labeled as FI. Results of the leave-one-out cross-validation procedure for the different sets of features are shown in Table IV. The values in the table represent the mean and standard deviation of the FI detection accuracy as well as of the precision and recall results. The best performance was achieved by combining features from the jaw motion and the accelerometer signals (89.8%). Using these features, one-half of the subjects presented accuracies above 90%, with 75.82% and 97.7% being the lowest and highest accuracy values obtained, respectively. Inclusion of hand gesture features did not improve the results. Weighted average accuracy values showed no major differences when compared to the original average accuracy.

TABLE IV.

Cross-Validation Results for Different Feature Sets¹

| Feature set | Precision mean (%) | Precision SD (%) | Recall mean (%) | Recall SD (%) | Accuracy mean (%) | Accuracy SD (%) | Weighted accuracy (%) |
|---|---|---|---|---|---|---|---|
| fJM | 88.1 | 8.5 | 84.8 | 14.4 | 86.4 | 8.8 | 86.1 |
| fHG | 38.0 | 35.4 | 7.1 | 11.1 | 22.6 | 21.4 | 22.3 |
| fACC | 74.1 | 12.6 | 67.3 | 20.5 | 70.7 | 10.7 | 70.8 |
| fJM + fHG | 87.7 | 9.4 | 86.3 | 9.0 | 87.0 | 6.6 | 86.7 |
| fJM + fACC | 89.8 | 8.8 | 89.9 | 9.0 | 89.8 | 6.7 | 89.7 |
| fHG + fACC | 73.4 | 12.0 | 70.7 | 18.3 | 72.0 | 10.0 | 72.3 |
| fJM + fHG + fACC | 89.1 | 8.5 | 90.4 | 4.9 | 89.7 | 5.5 | 89.5 |

¹ fJM: jaw motion features; fHG: hand gesture features; fACC: accelerometer features.

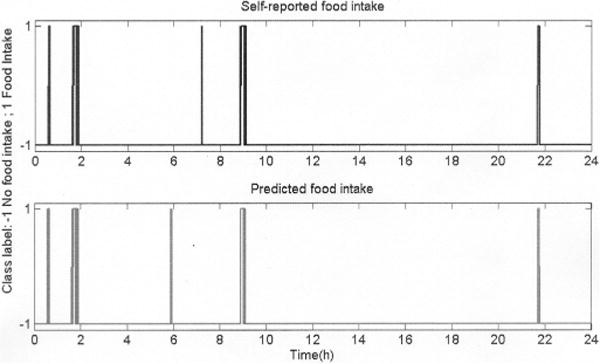

Fig. 5 shows the FI detection results for one subject. The model correctly detected all major meals (lunch, dinner, and breakfast at 2, 9, and 22 h, respectively) and one snacking episode (granola bar at 1 h) but incorrectly predicted FI at 6 h. Also, the model failed to predict a snacking event (slice of bread) at 7 h. The difference in the physical properties of the snacks may explain why only one of the two snacking events was detected. Overall, the model correctly detected all of the 30 major meals consumed by the participants while incorrectly predicting the occurrence of one major meal. Regarding snacking, 18 out of 19 episodes were correctly detected while 27 episodes were incorrectly predicted. Although this number may appear to be high, it only represented a total of 15 min of ingestion (2.5% of the total FI time).

Fig. 5.

FI prediction results for an average performance of the group model. It was able to predict (bottom graph) all major meals (2, 9, and 22 h) but failed to predict a snacking event at 7 h with a false prediction at 6 h.

V. Discussion and Conclusion

The development of new strategies for objective and accurate MIB of free-living individuals is imperative to overcome the current limitations of self-reported FI. This paper introduced the AIM as a wearable device for objective monitoring of FI under free-living conditions. Compared to the state of the art, this paper presents three new contributions: 1) the design and implementation of a novel wearable multisensor device that has the ability to monitor 24 h of ingestive behavior without relying on self-report or any other actions from subjects; 2) the implementation of a robust methodology for reliably detecting FI episodes in the presence of real-life artifacts in the sensor signals; and 3) the validation of the device and methodology in an objective study where 12 subjects wore AIM in free living during 24 h without any restrictions on their eating behavior and activities.

The proposed wearable device presented several benefits that make it suitable for FI monitoring without a conscious effort from the subjects. First, AIM integrated three different sensor modalities for monitoring of jaw motion, HtM gestures, and body motion. Second, AIM was designed as a pendant worn on a lanyard around the neck, which was intended to satisfy the need for a socially acceptable device. Although further quantitative evaluation of the wearing convenience of the necklace and sensors is needed, preliminary studies showed that the jaw motion sensor presented high levels of wearing comfort and did not significantly affect the way subjects eat their meals [29]. Third, the electric circuit embedded in the wireless module contained mostly low-power components, permitting 24 or more hours of data collection without the need for recharging the battery. Finally, AIM presented a reliable data transmission system using Bluetooth technology. Minimal data loss was observed during the experiments: only about 1.6% of the total data was lost, most probably due to subjects walking away from the phone. All of these properties of AIM would theoretically relieve subjects of the burden of self-reporting their intake and provide objective measures of food consumption. Although AIM in its present form is a tool for studying ingestive behavior over a period of several days, in the future it can be miniaturized into a less intrusive device suitable for long-term studies and personalized everyday monitoring.

Accurate detection of FI in free living is highly desirable to obtain reliable information about the dietary intake of individuals. Wearable systems integrating sensor signals with complex pattern recognition algorithms have been implemented as a potential alternative to self-reported intake [12], [14], [16]–[19]. Such systems achieved recognition rates ranging from 80% to 90%. However, those results cannot be compared directly with the AIM results because they were obtained under different scenarios. The methodologies used in those wearable systems were developed based on data collected in the laboratory, which may result in high accuracies but poor generalization that would make them unreliable for free-living data. In contrast, AIM incorporated a novel methodology based on free-living data that correctly detected around 90% of FI. The advantage is that AIM can accurately detect ingestion events in a challenging scenario, where real-world variability that is usually missing in laboratory settings directly affects eating behavior, e.g., food selection, timing of meals (i.e., determined by external schedules, work, etc.), FI environments, and FI behavior, which may vary during the course of a day [30], [31].

The proposed methodology consisted of three steps: sensor fusion, feature extraction, and classification. The sensor fusion step was implemented to balance the dataset and to remove potential outliers in the signals. Sensor fusion used information from the sensor signals to identify and remove more than 85% of NFI epochs (i.e., resting, walking, and sleeping) for each subject while retaining most of the FI epochs (<5% of FI epochs were removed per subject). In the feature extraction step, a set of time- and frequency-domain features was computed for every 30 s epoch of the sensor signals and fed to the classifier. Table IV demonstrates that features from the jaw motion signals were the most important for FI detection. The classification step used a robust group model based on an ANN. Although various advanced classification techniques and feature selection were previously evaluated by this research team [17], [22], [27], a computationally lightweight ANN algorithm was used because it can be easily implemented in the processor of a wearable system. This ANN model was able to identify when the major meals were consumed and when most of the snacking periods occurred.
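The feature extraction step can be illustrated with a minimal sketch. It is not the feature set used in this study: the two features shown (RMS amplitude and the dominant non-DC frequency from a naive DFT), the function names, and the sampling rate passed by the caller are all assumptions chosen only to show how one time-domain and one frequency-domain feature could be computed for each 30 s epoch.

```python
import cmath
import math


def split_epochs(signal, fs, epoch_s=30):
    """Split a 1-D signal into non-overlapping epochs of epoch_s seconds.

    fs is the sampling rate in Hz (an assumed input, not a value from the paper).
    A trailing partial epoch is dropped.
    """
    n = int(fs * epoch_s)
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]


def rms(epoch):
    """Illustrative time-domain feature: root-mean-square amplitude."""
    return math.sqrt(sum(x * x for x in epoch) / len(epoch))


def dominant_frequency(epoch, fs):
    """Illustrative frequency-domain feature: frequency (Hz) of the
    largest non-DC bin of a naive discrete Fourier transform."""
    n = len(epoch)
    mean = sum(epoch) / n
    centered = [x - mean for x in epoch]
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(c * cmath.exp(-2j * math.pi * k * t / n)
                    for t, c in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * fs / n


def extract_features(signal, fs, epoch_s=30):
    """Return one (rms, dominant_frequency) tuple per complete epoch."""
    return [(rms(e), dominant_frequency(e, fs))
            for e in split_epochs(signal, fs, epoch_s)]
```

For example, `extract_features(signal, fs=10)` applied to 60 s of a synthetic sine wave yields two feature tuples, one per epoch. In a real pipeline the naive DFT would normally be replaced by an FFT routine, and the resulting feature vectors would be passed to the classifier.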

In the proposed methodology, it was assumed that FI is mutually exclusive with vigorous physical activity (>6 metabolic equivalents (MET) [32], for example, running). Indeed, although the study did not restrict or specify the way in which food was to be consumed and involved individuals originating from five different countries with different lifestyles and ingestive behaviors, all of the participants demonstrated a tendency to eat while remaining sedentary. It is possible that some individuals may consume foods during physical activity of moderate intensity (3–6 MET), and the methodology will need to be validated in such scenarios. Finally, the methodology requires no individual calibration, meaning that it can potentially be used to detect FI in a wide population. Another observation is that the optimization of the threshold values in the sensor fusion algorithm was based on the population as a whole. However, due to the diversity of the subject population, the threshold values should be close to optimal and should not bias the recognition results.
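The mutual-exclusivity assumption can be written as a simple gate. This is only a sketch under stated assumptions: the per-epoch MET estimates are a hypothetical input (the sensor fusion in this study operates on thresholds applied to the sensor signals, not on MET values), the function names are invented for illustration, and the "light" label for values below 3 MET follows the conventional intensity categories rather than anything specified here.

```python
def intensity(met):
    """Map a MET value to an intensity category.

    >6 MET is vigorous and 3-6 MET is moderate, as in the text;
    labeling everything below 3 MET as "light" is an assumption.
    """
    if met > 6:
        return "vigorous"
    if met >= 3:
        return "moderate"
    return "light"


def candidate_epochs(mets):
    """Return indices of epochs that remain candidates for FI,
    i.e., epochs not spent in vigorous activity.

    mets: hypothetical per-epoch MET estimates.
    """
    return [i for i, m in enumerate(mets) if intensity(m) != "vigorous"]
```

Under this gate, moderate-intensity epochs are still passed on to the classifier, which is the scenario the paragraph above identifies as needing further validation.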

The main limitation of this study is related to the use of self-report as the gold standard for developing the FI detection methodology. Subjects may provide inaccurate information about dietary intake, thus leading to unreliable results. However, self-report error is mostly related to the amount of food consumed, and the misreporting bias becomes significant over longer periods of time [25]. To minimize the reporting error, subjects were asked to report their intake in two different ways: by pushing a button during the chewing process and by writing down in a food journal the times at which foods were consumed. Push button data were analyzed in 30 s epochs and used to label sensor signals. This windowing procedure affected the accuracy of the timing reference. However, for the rule selected to label the epochs (see Section III-a), the worst-case scenario would have an epoch labeled as FI when it contains 10 s of data associated with an ingestion event while the remaining 20 s are not related to FI. Even with this error, the timing of ingestion events using the windowed push button signal is significantly more accurate than timing events using conventional food journals, which are typically filled in after the fact and contain only approximate times.
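The epoch labeling described above can be sketched as follows. The exact rule is defined in Section III-a and is not reproduced here, so the sketch assumes a minimum-duration rule inferred from the stated worst case: an epoch is labeled FI when at least 10 s of its 30 s are marked by the push button. The function name, the 0/1 encoding of the button signal, and the sampling rate are assumptions for illustration.

```python
def label_epochs(button, fs, epoch_s=30, min_fi_s=10):
    """Label each non-overlapping epoch as 1 (FI) or 0 (NFI).

    button   : sequence of 0/1 samples, 1 while the push button is pressed
    fs       : sampling rate in Hz (assumed input)
    min_fi_s : ASSUMED threshold in seconds; an epoch is FI when at least
               this much of it is marked as ingestion
    A trailing partial epoch is dropped.
    """
    n = int(fs * epoch_s)
    needed = min_fi_s * fs
    labels = []
    for start in range(0, len(button) - n + 1, n):
        pressed = sum(button[start:start + n])
        labels.append(1 if pressed >= needed else 0)
    return labels
```

With a button signal sampled at 1 Hz, an epoch containing exactly 10 pressed samples is labeled FI, which corresponds to the worst case discussed above, while an epoch with 9 pressed samples is labeled NFI.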

The use of the push button and the monitoring system itself may change the eating behavior of individuals. However, there are two points to consider. First, even if behavior was changed by using a push button in this study, AIM was able to accurately detect FI episodes. Also, the push button is only required at the stage of algorithm development and will not affect behavior in future use. Second, AIM is likely to affect behavior less than other methods because it does not rely on self-report. Testing this hypothesis is left to future work, as it requires a much larger study and methods to assess behavior change under observation by different methods.

Another limitation of the methodology may be related to the capability of detecting liquid intake through the monitoring of jaw motion. The reported accuracy value corresponded only to solid FI. Our previous studies suggested the presence of the characteristic jaw motion during a continuous intake (gulping) of liquids that is similar to the chewing present in the intake of solid foods [17]. Further studies are needed to determine the feasibility of the proposed methodology to detect liquid intake in free living. In addition, effects of sensor positioning on the jaw and their impact on the accuracy of solid and liquid intake detection need to be studied.

Finally, the collected diary data were not sufficient to analyze the impact of certain activities on the accuracy of FI recognition. Our goal was to minimize the reporting burden on the participants; thus, information about daily activities was restricted to what they reported in the diary. An observational study would be needed to obtain greater detail about subjects’ activities during the day and to better understand the behavior of AIM under daily life conditions.

The results presented in this study indicated a satisfactory level of robustness of the system for recognizing FI events in a free-living population, although testing on a larger and more diverse population will be required to demonstrate performance of the device for individuals with uncharacteristic eating behaviors such as binge eating, bulimia nervosa, anorexia nervosa, compulsive eating disorders, etc. Finally, future studies of the system will evaluate social acceptability and subject compliance with wearing the device.

Although the prediction was done offline, in the future AIM is intended to perform real-time recognition and characterization of FI (i.e., how much food is consumed) as well as to provide timely feedback to individuals about their intake behavior. Estimation of the mass ingested could be achieved through the counts of chews [13], and the type and caloric density of food could potentially be determined by adding a camera triggered by the detection algorithm [19]. Ingested mass could also be predicted by acoustic recognition of chewing cycles and food types. A prior study showed that the bite weight of a limited number of food types may be predicted using chewing sound features [15]. However, the main challenge facing this sound-based approach is the generalization of the bite weight prediction models to the broad variety of foods encountered in everyday life.

AIM, as a wearable device for monitoring FI, is intended to serve as a behavioral modification tool for correcting known ingestive behaviors leading to weight gain (snacking, night eating, and weekend overeating) and to help advance the study of free-living food consumption in obesity and in eating disorders.

Acknowledgments

This work was supported by the National Institute of Diabetes and Digestive and Kidney Diseases under Grant R21DK085462.

Footnotes

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Authors’ photographs and biographies not available at the time of publication.

Contributor Information

Juan M. Fontana, Email: juanmfontana@gmail.com, Department of Electrical and Computer Engineering, University of Alabama, Tuscaloosa, AL 35487 USA, and also with the Facultad de Ingenieria, Universidad Nacional de Rio Cuarto, Rio Cuarto 5800, Cordoba, Argentina.

Muhammad Farooq, Email: farooqespn@gmail.com, Department of Electrical and Computer Engineering, University of Alabama, Tuscaloosa, AL 35487 USA.

Edward Sazonov, Email: esazonov@eng.ua.edu, Department of Electrical and Computer Engineering, University of Alabama, Tuscaloosa, AL 35487 USA.

References

- 1. Olshansky SJ, et al. A potential decline in life expectancy in the United States in the 21st century. New Eng J Med. 2005 Mar;352(11):1138–1145. doi: 10.1056/NEJMsr043743.

- 2. Flegal KM, Carroll MD, Kit BK, Ogden CL. Prevalence of obesity and trends in the distribution of body mass index among US adults, 1999–2010. J Amer Med Assoc. 2012 Feb;307(5):491–497. doi: 10.1001/jama.2012.39.

- 3. Fairburn CG, Harrison PJ. Eating disorders. Lancet. 2003 Feb;361(9355):407–416. doi: 10.1016/S0140-6736(03)12378-1.

- 4. Hudson JI, Hiripi E, Pope HG, Jr, Kessler RC. The prevalence and correlates of eating disorders in the National Comorbidity Survey Replication. Biol Psychiatry. 2007 Feb;61(3):348–358. doi: 10.1016/j.biopsych.2006.03.040.

- 5. Sánchez-Carracedo D, Neumark-Sztainer D, López-Guimerà G. Integrated prevention of obesity and eating disorders: Barriers, developments and opportunities. Public Health Nutrition. 2012;15(12):2295–2309. doi: 10.1017/S1368980012000705.

- 6. Thompson FE, Subar AF. Dietary assessment methodology. In: Nutrition in the Prevention and Treatment of Disease. 2nd ed. San Diego, CA, USA: Academic Press; 2008.

- 7. Amft O. Ambient, on-body, and implantable monitoring technologies to assess dietary behavior. In: Preedy VR, Watson RR, Martin CR, editors. Handbook of Behavior, Food and Nutrition. New York, NY, USA: Springer; 2011. pp. 3507–3526.

- 8. Schoeller DA, Webb P. Five-day comparison of the doubly labeled water method with respiratory gas exchange. Amer J Clin Nutrition. 1984;40(1):153–158. doi: 10.1093/ajcn/40.1.153.

- 9. Black AE, Cole TJ. Biased over- or under-reporting is characteristic of individuals whether over time or by different assessment methods. J Amer Dietetic Assoc. 2001 Jan;101(1):70–80. doi: 10.1016/S0002-8223(01)00018-9.

- 10. Thompson FE, Subar AF, Loria CM, Reedy JL, Baranowski T. Need for technological innovation in dietary assessment. J Amer Dietetic Assoc. 2010 Jan;110(1):48–51. doi: 10.1016/j.jada.2009.10.008.

- 11. Sazonov E, Schuckers S, Lopez-Meyer P, Makeyev O, Sazonova N, Melanson EL, Neuman M. Non-invasive monitoring of chewing and swallowing for objective quantification of ingestive behavior. Physiol Meas. 2008 May;29(5):525–541. doi: 10.1088/0967-3334/29/5/001.

- 12. Junker H, Amft O, Lukowicz P, Tröster G. Gesture spotting with body-worn inertial sensors to detect user activities. Pattern Recognit. 2008 Jun;41(6):2010–2024.

- 13. Sazonov E, Schuckers SAC, Lopez-Meyer P, Makeyev O, Melanson EL, Neuman MR, Hill JO. Toward objective monitoring of ingestive behavior in free-living population. Obesity. 2009;17(10):1971–1975. doi: 10.1038/oby.2009.153.

- 14. Amft O, Tröster G. On-body sensing solutions for automatic dietary monitoring. IEEE Pervasive Comput. 2009 Apr-Jun;8(2):62–70.

- 15. Amft O, Kusserow M, Tröster G. Bite weight prediction from acoustic recognition of chewing. IEEE Trans Biomed Eng. 2009 Jun;56(6):1663–1672. doi: 10.1109/TBME.2009.2015873.

- 16. Passler S, Fischer WJ. Food intake activity detection using a wearable microphone system. Proc 7th Int Conf Intell Environ. 2011:298–301.

- 17. Sazonov E, Fontana JM. A sensor system for automatic detection of food intake through non-invasive monitoring of chewing. IEEE Sens J. 2012 May;12(5):1340–1348. doi: 10.1109/JSEN.2011.2172411.

- 18. Dong Y, Hoover A, Scisco J, Muth E. A new method for measuring meal intake in humans via automated wrist motion tracking. Appl Psychophysiol Biofeedback. 2012;37(3):205–215. doi: 10.1007/s10484-012-9194-1.

- 19. Liu J, Johns E, Atallah L, Pettitt C, Lo B, Frost G, Yang GZ. An intelligent food-intake monitoring system using wearable sensors. Proc 9th Int Conf Wearable Implantable Body Sens Netw. 2012:154–160.

- 20. Lopez-Meyer P, Makeyev O, Schuckers S, Melanson E, Neuman M, Sazonov E. Detection of food intake from swallowing sequences by supervised and unsupervised methods. Ann Biomed Eng. 2010;38(8):2766–2774. doi: 10.1007/s10439-010-0019-1.

- 21. Sazonov E, Makeyev O, Lopez-Meyer P, Schuckers S, Melanson E, Neuman M. Automatic detection of swallowing events by acoustical means for applications of monitoring of ingestive behavior. IEEE Trans Biomed Eng. 2010 Mar;57(3):626–633. doi: 10.1109/TBME.2009.2033037.

- 22. Fontana JM, Sazonov ES. A robust classification scheme for detection of food intake through non-invasive monitoring of chewing. Proc Annu Int Conf IEEE Eng Med Biol Soc. 2012:4891–4894. doi: 10.1109/EMBC.2012.6347090.

- 23. Lopez-Meyer P, Patil Y, Tiffany S, Sazonov E. Detection of hand-to-mouth gestures using a RF operated proximity sensor for monitoring cigarette smoking. Int J Smart Sens Intell Syst. 2013;9:41–49. doi: 10.2174/1874120701307010041.

- 24. Striegel-Moore RH, Franko DL, Thompson D, Affenito S, May A, Kraemer HC. Exploring the typology of night eating syndrome. Int J Eating Disorders. 2008;41(5):411–418. doi: 10.1002/eat.20514.

- 25. Suchanek P, Poledne R, Hubacek JA. Dietary intake reports fidelity—Fact or fiction? Neuroendocrinol Lett. 2011;32(Suppl. 2):29–31.

- 26. Stellar E, Shrager EE. Chews and swallows and the microstructure of eating. Amer J Clin Nutrition. 1985 Nov;42(Suppl. 5):973–982. doi: 10.1093/ajcn/42.5.973.

- 27. Fontana JM, Farooq M, Sazonov E. Estimation of feature importance for food intake detection based on random forests. Proc Annu Int Conf IEEE Eng Med Biol Soc. 2013:6756–6759. doi: 10.1109/EMBC.2013.6611107.

- 28. Cohen M, Hudson D. Neural Networks and Artificial Intelligence for Biomedical Engineering. 1st ed. New York, NY, USA: Wiley; 1999.

- 29. Fontana JM, Sazonov ES. Evaluation of chewing and swallowing sensors for monitoring ingestive behavior. Sens Lett. 2013;11(3):560–565. doi: 10.1166/sl.2013.2925.

- 30. de Castro JM. The control of food intake of free-living humans: Putting the pieces back together. Physiol Behav. 2010 Jul;100(5):446–453. doi: 10.1016/j.physbeh.2010.04.028.

- 31. de Castro JM. Eating behavior: Lessons from the real world of humans. Nutrition. 2000 Oct;16(10):800–813. doi: 10.1016/s0899-9007(00)00414-7.

- 32. Lee IM, Hsieh CC, Paffenbarger RS, Jr. Exercise intensity and longevity in men: The Harvard alumni health study. J Amer Med Assoc. 1995;273(15):1179–1184.