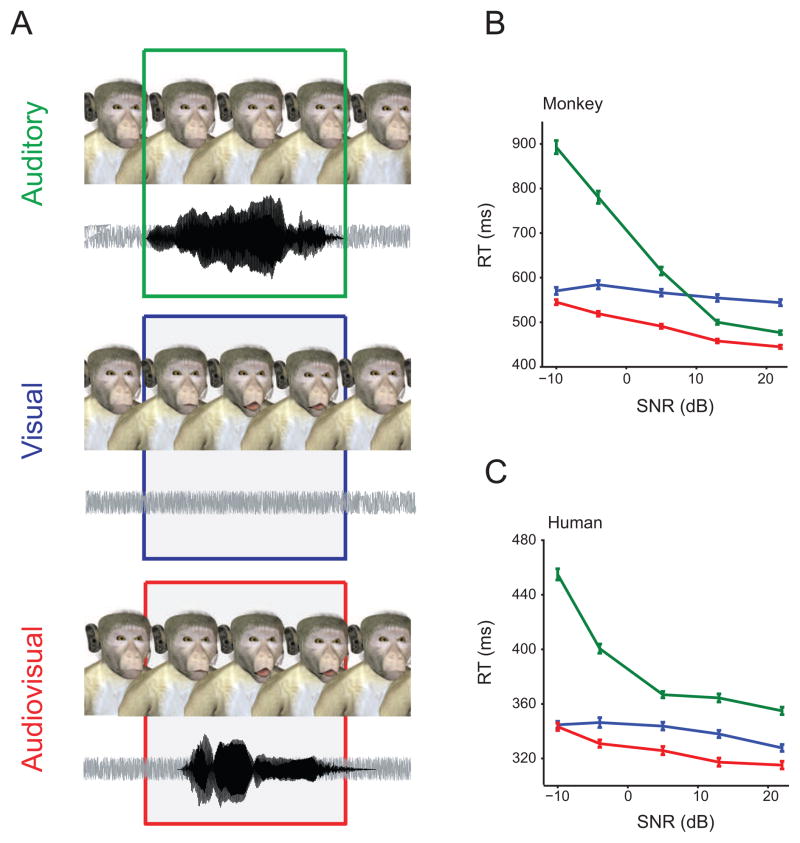

Figure 1.

Auditory, visual, and audiovisual vocalization detection. A. Monkeys were trained to detect auditory (green box), visual (blue box), or audiovisual (red box) vocalizations embedded in noise as quickly and as accurately as possible. An avatar and background noise were presented continuously. In the auditory condition, a coo call was presented. In the visual condition, the mouth of the avatar moved without any corresponding vocalization. In the audiovisual condition, a coo call was presented with a corresponding mouth movement. Each stimulus was presented at four different signal-to-noise ratios (SNRs). B. Mean reaction times (RTs) as a function of SNR for the unisensory and multisensory conditions for one monkey. The color code is the same as in A. X-axes denote SNR in dB. Y-axes depict RT in milliseconds. C. An analogous experiment with a human avatar and speech was conducted in humans. The graph shows the mean reaction times as a function of SNR for the unisensory and multisensory conditions for one human. Conventions as in B.