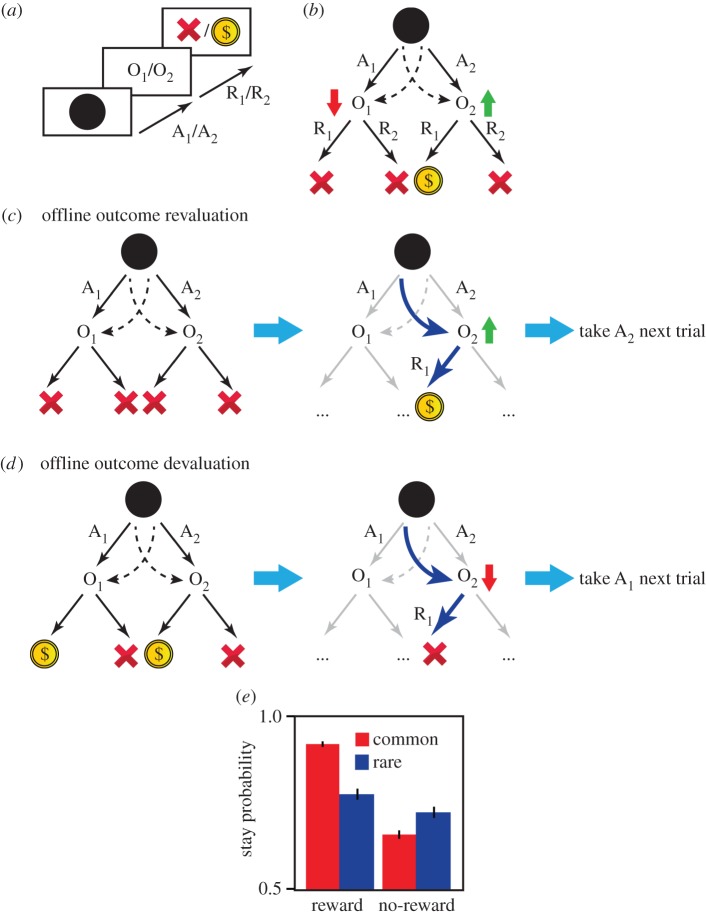

Figure 1.

The two-stage task. (a) At stage 1, subjects choose between A1 and A2, and the outcome can be O1 or O2. They then make a second choice (R1 versus R2), which can have a rewarding or a neutral result ($ versus X). (b) A1 and A2 commonly lead to O1 and O2, respectively. On approximately 30% of trials, however, these relationships switch, so that A1 leads to O2 and A2 leads to O1 (dashed arrows). The values of O1 and O2 depend on the probability of earning a reward after the stage 2 actions, R1 or R2. In this illustration, actions in O1 are not rewarded, so O1 has a low value, whereas O2 has a high value because an action in O2 is rewarded. Each stage 2 action is rewarded with either a high (0.7) or a low (0.2) probability, independently of the other actions. On each trial, there is a small chance (1 : 7) that these reward probabilities are randomly reset to high or low, which causes frequent devaluation and revaluation of the outcomes (O1 and O2) across the session. (c) Both choices in O2 have a low value (left), and on the next trial the reward probability of one of these actions becomes high (right). On a rare trial, the subject executes A1 and receives O2 instead of O1, and the action taken in O2 is then rewarded (blue arrows indicate executed actions), which causes offline revaluation of O2. Thus A2 should be taken on subsequent trials to reach O2. (d) O2 has a high value (left), and on the next trial the action that was previously rewarded in O2 is not rewarded (right). On a rare trial, the subject chooses A1 and receives O2 instead of O1, after which the action taken in O2 is not rewarded. This causes offline devaluation of O2, and on subsequent trials A1 should be chosen to avoid O2. 
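To make the generative structure of the task in (a,b) concrete, here is a minimal Python sketch of the environment. It is not the authors' implementation. The class `TwoStageTask` and its methods are hypothetical. The 0.7/0.3 common/rare split approximates the "approximately 30%" of rare transitions. The reset rule assumes that "(1 : 7)" means each reward probability is independently redrawn as high or low with probability 1/7 on every trial. The initial reward probabilities are also assumed to be drawn at random.

```python
import random

# Parameters from the caption; RESET_PROB assumes "(1 : 7)" means a 1/7 chance per trial.
COMMON_PROB = 0.7        # ~70% common transitions, ~30% rare
HIGH, LOW = 0.7, 0.2     # the two possible reward probabilities of a stage 2 action
RESET_PROB = 1 / 7       # assumption: per-action, per-trial reset probability


class TwoStageTask:
    """Hypothetical sketch of the two-stage task environment (not the original code)."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        # Reward probability for each (stage 2 state, stage 2 action) pair, drawn at random.
        self.reward_prob = {
            (o, r): self.rng.choice([HIGH, LOW])
            for o in ("O1", "O2")
            for r in ("R1", "R2")
        }

    def stage1(self, action):
        """Take stage 1 action 'A1' or 'A2'; return (outcome state, is_common)."""
        common = {"A1": "O1", "A2": "O2"}[action]
        rare = "O2" if common == "O1" else "O1"
        if self.rng.random() < COMMON_PROB:
            return common, True
        return rare, False

    def stage2(self, state, action):
        """Take stage 2 action 'R1' or 'R2' in `state`; return 1 ($) or 0 (X)."""
        reward = int(self.rng.random() < self.reward_prob[(state, action)])
        self._maybe_reset()
        return reward

    def _maybe_reset(self):
        # Assumption: each reward probability is independently reset to HIGH or LOW
        # with probability RESET_PROB, producing devaluation/revaluation of O1 and O2.
        for key in self.reward_prob:
            if self.rng.random() < RESET_PROB:
                self.reward_prob[key] = self.rng.choice([HIGH, LOW])
```

One trial is then `state, is_common = task.stage1("A1")` followed by `reward = task.stage2(state, "R2")`. Seeding the generator keeps simulated sessions reproducible.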
(e) The probability of selecting the same stage 1 action on the next trial as a function of whether the previous trial was rewarded and whether it was a common or rare trial (mixed-effect logistic regression analysis with all coefficients treated as random effects across subjects; ‘reward’ × ‘transition type’ interaction: coefficient estimate = 0.41; s.e. = 0.11; p < 5 × 10⁻⁴). Based on (c,d), a different stage 1 action should be taken after a rewarded rare trial, whereas the same stage 1 action should be taken after an unrewarded rare trial. This pattern is reversed if the previous trial was common: the same stage 1 action should be taken if the previous trial was rewarded, and a different stage 1 action should be taken if it was unrewarded. If stage 1 actions are guided by the values of their outcomes, this stay/switch pattern predicts an interaction between reward and transition type, which is consistent with the behavioural results (see [14]). Error bars, 1 s.e.m.
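The stay/switch logic in (e) can be illustrated with a short Python sketch. It computes raw stay proportions for each (reward, transition) cell and a simple difference-of-differences contrast. This contrast is only a descriptive stand-in for the mixed-effect logistic regression reported above, not a reimplementation of it. The function names and the `(stage1_action, is_common, rewarded)` trial format are hypothetical.

```python
from collections import defaultdict


def model_based_stay(rewarded: bool, common: bool) -> bool:
    """Idealized prediction from panels (c,d): repeat the stage 1 action
    after rewarded-common or unrewarded-rare trials, switch otherwise."""
    return rewarded == common


def stay_probabilities(trials):
    """trials: ordered sequence of (stage1_action, is_common, rewarded) tuples.

    Returns {(rewarded, common): proportion of next trials that repeat the
    same stage 1 action}, using only cells that actually occur."""
    counts = defaultdict(lambda: [0, 0])  # key -> [stays, total]
    for prev, nxt in zip(trials, trials[1:]):
        key = (bool(prev[2]), bool(prev[1]))
        counts[key][0] += prev[0] == nxt[0]
        counts[key][1] += 1
    return {k: stays / total for k, (stays, total) in counts.items()}


def interaction_contrast(p):
    """Reward effect after common trials minus reward effect after rare trials.

    Positive values match the model-based pattern; requires all four cells in `p`."""
    common_effect = p[(True, True)] - p[(False, True)]
    rare_effect = p[(True, False)] - p[(False, False)]
    return common_effect - rare_effect
```

A purely model-based sequence such as `[("A1", True, 1), ("A1", False, 1), ("A2", True, 0), ("A1", False, 0), ("A1", True, 1)]` stays only in the rewarded-common and unrewarded-rare cells, so its contrast takes the maximum value of 2. A purely reward-driven (model-free) learner would instead show similar reward effects after common and rare trials and a contrast near 0.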