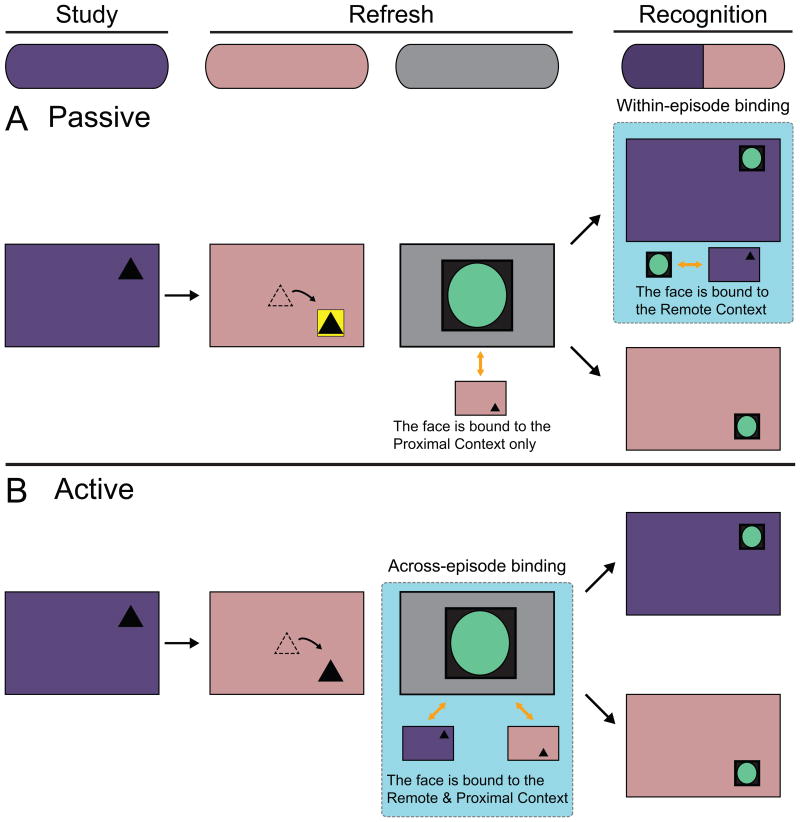

Figure 3. Hypothesized ERP effects related to across- and within-episode binding.

We predicted that faces would be bound to the Remote Context during face encoding in the Active condition (across-episode binding) and during face recognition in the Passive condition (within-episode binding). We expected faces always to be bound to the Proximal Context during face encoding, because the Proximal Context was active in memory owing to its temporal proximity. Orange bidirectional arrows between faces and contexts depict hypothesized binding. Teal backgrounds denote binding between the faces and the Remote Context. Purple boxes represent the Remote Context scene, red boxes represent the Proximal Context scene, and black triangles represent the objects and their locations. (A) In the Passive condition, we did not expect binding to occur during face encoding, because the Remote Context was not active in memory during Refresh. Instead, we predicted that faces would be bound to the Remote Context during Recognition, when the faces and contexts were physically presented together (within-episode binding). We expected that Remote Context-specific ERPs during Recognition would reflect this binding when faces were successfully recognized. (B) In the Active condition, we predicted that binding between the faces and the Remote Context would occur during face encoding, because the Remote Context was reactivated and therefore active in memory during Refresh (across-episode binding). We hypothesized that subsequent-memory ERPs during encoding would reflect this binding between the faces and the Remote Context.