Abstract

We study the problem of estimating the leading eigenvectors of a high-dimensional population covariance matrix based on independent Gaussian observations. We establish a lower bound on the minimax risk of estimators under the l2 loss, in the joint limit as dimension and sample size increase to infinity, under various models of sparsity for the population eigenvectors. The lower bound on the risk points to the existence of different regimes of sparsity of the eigenvectors. We also propose a new method for estimating the eigenvectors by a two-stage coordinate selection scheme.

Keywords: Minimax risk, high-dimensional data, principal component analysis, sparsity, spiked covariance model

1. Introduction

Principal components analysis (PCA) is widely used to reduce the dimensionality of multivariate data. A traditional setting involves repeated observations from a multivariate normal distribution. Two key theoretical questions are: (i) what is the relation between the sample and population eigenvectors, and (ii) how well can population eigenvectors be estimated under various sparsity assumptions? When the dimension N of the observations is fixed and the sample size n → ∞, the asymptotic properties of the sample eigenvalues and eigenvectors are well known [Anderson (1963), Muirhead (1982)]. This asymptotic analysis works because the sample covariance approximates the population covariance well when the sample size is large. However, it is increasingly common to encounter statistical problems where the dimensionality N is comparable to, or larger than, the sample size n. In such cases, the sample covariance matrix, in general, is not a reliable estimate of its population counterpart.

Better estimators of large covariance matrices, under various models of sparsity, have been studied recently. These include development of banding and thresholding schemes [Bickel and Levina (2008a, 2008b), Cai and Liu (2011), El Karoui (2008), Rothman, Levina and Zhu (2009)], and analysis of their rate of convergence in the spectral norm. More recently, Cai, Zhang and Zhou (2010) and Cai and Zhou (2012) established the minimax rate of convergence for estimation of the covariance matrix under the matrix l1 norm and the spectral norm, and its dependence on the assumed sparsity level.

In this paper we consider a related but different problem, namely, the estimation of the leading eigenvectors of the covariance matrix. We formulate this eigenvector estimation problem under the well-studied “spiked population model,” which assumes that the ordered set of eigenvalues ℒ(Σ) of the population covariance matrix Σ satisfies

$$\mathcal{L}(\Sigma) = (\lambda_1 + \sigma^2, \ldots, \lambda_M + \sigma^2, \sigma^2, \ldots, \sigma^2) \tag{1.1}$$

for some M ≥ 1, where σ² > 0 and λ1 > λ2 > ⋯ > λM > 0. This is a standard model in several scientific fields, including, for example, array signal processing [see, e.g., van Trees (2002)], where the observations are modeled as the sum of an M-dimensional random signal and an independent, isotropic noise. It also arises as a latent variable model for multivariate data, for example, in factor analysis [Jolliffe (2002), Tipping and Bishop (1999)]. The assumption that the leading M eigenvalues are distinct is made to simplify the analysis, as it ensures that the corresponding eigenvectors are identifiable up to a sign change. The assumption that all remaining eigenvalues are equal is not crucial, as our analysis can be generalized to the case when these are only bounded by σ². Asymptotic properties of the eigenvalues and eigenvectors of the sample covariance matrix under this model, in the setting when N/n → c ∈ (0, ∞) as n → ∞, have been studied by Lu (2002), Baik and Silverstein (2006), Nadler (2008), Onatski (2006) and Paul (2007), among others. A key conclusion is that when N/n → c > 0, the eigenvectors of standard PCA are inconsistent estimators of the population eigenvectors.

Eigenvector and covariance matrix estimation are related in the following way. When the population covariance is a low rank perturbation of the identity, as in this paper, sparsity of the eigenvectors corresponding to the nonunit eigenvalues implies sparsity of the whole covariance. Consistency of an estimator of the whole covariance matrix in spectral norm implies convergence of its leading eigenvalues to their population counterparts. If the gaps between the distinct eigenvalues remain bounded away from zero, it also implies convergence of the corresponding eigen-subspaces [El Karoui (2008)]. In such cases, upper bounds for sparse covariance estimation in the spectral norm, as in Bickel and Levina (2008a) and Cai and Zhou (2012), also yield upper bounds on the rate of convergence of the corresponding eigenvectors under the l2 loss. These works, however, did not study the following fundamental problem, considered in this paper: How well can the leading eigenvectors be estimated, that is, what are the minimax rates for eigenvector estimation? Indeed, it turns out that the optimal rates for covariance matrix estimation and for leading eigenvector estimation are different. Moreover, schemes based on thresholding the entries of the sample covariance matrix do not achieve the minimax rate for eigenvector estimation. The latter result is beyond the scope of this paper and will be reported in a subsequent publication by the current authors.

Several works considered various models of sparsity for the leading eigenvectors and developed improved sparse estimators. For example, Witten, Tibshirani and Hastie (2009) and Zou, Hastie and Tibshirani (2006), among others, imposed l1-type sparsity constraints directly on the eigenvector estimates and proposed optimization procedures for obtaining them. Shen and Huang (2008) suggested a regularized low rank approach to sparse PCA. The consistency of the resulting leading eigenvectors was recently proven in Shen, Shen and Marron (2011), in a model in which the sample size n is fixed while N → ∞. d’Aspremont et al. (2007) suggested a semi-definite programming (SDP) problem as a relaxation of the l0-penalty for sparse population eigenvectors. Assuming a single spike, Amini and Wainwright (2009) studied the asymptotic properties of the resulting leading eigenvector of the covariance estimator in the joint limit as both sample size and dimension tend to infinity. Specifically, they considered a leading eigenvector with exactly k ≪ N nonzero entries, all of the form ±1/√k. For this hardest subproblem in the k-sparse l0-ball, Amini and Wainwright (2009) derived information theoretic lower bounds for such eigenvector estimation.

In this paper, in contrast, following Johnstone and Lu (2009) (JL), we study estimation of the leading eigenvectors of Σ assuming that these are approximately sparse, with a bounded lq norm. Under this model, JL developed an estimation procedure based on coordinate selection by thresholding the diagonal of the sample covariance matrix, followed by the spectral decomposition of the submatrix corresponding to the selected coordinates. JL further proved consistency of this estimator assuming that the dimension grows at most polynomially with the sample size, but did not study its convergence rate. Since this estimation procedure is considerably simpler to implement and computationally much faster than the l1 penalization procedures cited above, it is of interest to understand its theoretical properties. More recently, Ma (2011) developed iterative thresholding sparse PCA (ITSPCA), which is based on repeated filtering, thresholding and orthogonalization steps that result in sparse estimators of the subspaces spanned by the leading eigenvectors. He also proved consistency and derived rates of convergence under appropriate loss functions and sparsity assumptions. In a later work, Cai, Ma and Wu (2012) considered a two-stage estimation scheme for the leading population eigenvector, in which the first stage is similar to the JL diagonal thresholding (DT) scheme applied to a stochastically perturbed version of the data. The estimates of the leading eigenvectors from this step are then used to project another stochastically perturbed version of the data to obtain the final estimates of the eigenvectors through solving an orthogonal regression problem. They showed that this two-stage scheme achieves the optimal rate for estimation of eigen-subspaces under suitable sparsity conditions.

In this paper, which is partly based on the Ph.D. thesis of Paul [Paul (2005)], we study the estimation of the leading eigenvectors of Σ, all assumed to belong to appropriate lq spaces. Our analysis thus extends the JL setting and complements the work of Amini and Wainwright (2009) in the l0-sparsity setting. For simplicity, we assume Gaussian observations in our analysis.

The main contributions of this paper are as follows. First, we establish lower bounds on the rate of convergence of the minimax risk for any eigenvector estimator under the l2 loss. This analysis points to three different regimes of sparsity, which we denote dense, thin and sparse, each having its own rate of convergence. We show that in the “dense” setting (as defined in Section 3), the standard PCA estimator attains the optimal rate of convergence, whereas in sparser settings it is not even consistent. Next, we show that while the JL diagonal thresholding (DT) scheme is consistent under these sparsity assumptions, it is not rate optimal in general. This motivates us to propose a new refined thresholding method (Augmented Sparse PCA, or ASPCA) that is based on a two-stage coordinate selection scheme. In the sparse setting, both our ASPCA procedure and the method of Ma (2011) achieve the lower bound on the minimax risk obtained here, and are thus rate-optimal procedures, so long as DT is consistent. For proofs see Ma (2011) and Paul and Johnstone (2007). There is a somewhat special, intermediate, “thin” region where a gap exists between the current lower bound and the upper bound on the risk. It is an open question whether the lower bound can be improved in this scenario, or a better estimator can be derived. Table 1 provides a comparison of the lower bounds and rates of convergence of various estimators.

Table 1.

Comparison of lower bounds on eigenvector estimation and worst case rates of various procedures

| Estimator | Dense: lower bound $O(N/n)$ | Thin: lower bound $O(n^{-(1-q/2)})$ | Sparse: lower bound $O((\log N/n)^{1-q/2})$ |
|---|---|---|---|
| PCA | Rate optimal* | Inconsistent | Inconsistent |
| DT | Inconsistent | Inconsistent | Not rate optimal |
| ASPCA | Inconsistent | Inconsistent | Rate optimal† |

Note: * when N/n → 0; † so long as DT is consistent.

The theoretical results also show that, under comparable scenarios, the optimal rate for eigenvector estimation, $O((\log N/n)^{1-q/2})$ under squared-error loss, is faster than the rate $O((\log N/n)^{1-q})$ under squared operator norm loss obtained for sparse covariance estimation by Bickel and Levina (2008a) and shown to be optimal by Cai and Zhou (2012); indeed, $1 - q/2 > 1 - q$ while $\log N/n \to 0$.

Finally, we emphasize that to obtain good finite-sample performance for both our two-stage scheme and other thresholding methods, the exact thresholds need to be carefully tuned. This issue and the detailed theoretical analysis of the ASPCA estimator are beyond the scope of this paper, and will be presented in a future publication. After this paper was completed, we learned of Vu and Lei (2012), which cites Paul and Johnstone (2007) and contains results overlapping with some of the work of Paul and Johnstone (2007) and this paper.

The rest of the paper is organized as follows. In Section 2, we describe the model for the eigenvectors and analyze the risk of the standard PCA estimator. In Section 3, we present the lower bounds on the minimax risk of any eigenvector estimator. In Section 4, we derive a lower bound on the risk of the diagonal thresholding estimator proposed by Johnstone and Lu (2009). In Section 5, we propose a new estimator named ASPCA (augmented sparse PCA) that is a refinement of the diagonal thresholding estimator. In Section 6, we discuss the question of attainment of the risk bounds. Proofs of the results are given in Appendix A.

Throughout, $\mathbb{S}^{N-1}$ denotes the unit sphere in $\mathbb{R}^N$ centered at the origin, and ⌊x⌋ denotes the largest integer less than or equal to x ∈ ℝ.

2. Problem setup

We suppose a triangular array model, in which, for each n, the random vectors $X_i$, $i = 1, \ldots, n$, each have dimension N = N(n) and are independent and identically distributed on a common probability space. Throughout we assume that the $X_i$'s are i.i.d. as $N_N(0, \Sigma)$, where the population matrix Σ, also depending on N, is a finite rank perturbation of (a multiple of) the identity. In other words,

$$\Sigma = \sum_{\nu=1}^{M} \lambda_\nu \theta_\nu \theta_\nu^T + \sigma^2 I, \tag{2.1}$$

where λ1 > λ2 > ⋯ > λM > 0, and the vectors θ1, … ,θM ∈ $\mathbb{R}^N$ are orthonormal, which implies (1.1). θν is the eigenvector of Σ corresponding to the νth largest eigenvalue, namely, λν + σ². The term “finite rank” means that M remains fixed even as n → ∞. The asymptotic setting involves letting both n and N grow to infinity simultaneously. For simplicity, we assume that the λν’s are fixed while the parameter space for the θν’s varies with N.

The observations can be described in terms of the model

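$$X_i = \sum_{\nu=1}^{M} \sqrt{\lambda_\nu}\, \upsilon_{\nu i}\, \theta_\nu + \sigma Z_i, \qquad Z_i = (Z_{i1}, \ldots, Z_{iN})^T, \qquad i = 1, \ldots, n. \tag{2.2}$$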

Here, for each i, the $\upsilon_{\nu i}$ and $Z_{ik}$ are i.i.d. N(0, 1). Since the eigenvectors of Σ are invariant to a scale change in the original observations, it is henceforth assumed that σ = 1. Hence, λ1, …, λM in the asymptotic results should be replaced by λ1/σ², … , λM/σ² when (2.1) holds with an arbitrary σ > 0. Since the main focus of this paper is estimation of eigenvectors, without loss of generality we consider the uncentered sample covariance matrix $S := n^{-1} X X^T$, where $X = [X_1 : \cdots : X_n]$.
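As an illustration of model (2.2), the following Python sketch, which assumes NumPy is available, draws the data matrix X and forms the uncentered sample covariance S. The helper names `simulate_spiked` and `sample_cov` are hypothetical and are not part of the paper.

```python
import numpy as np

def simulate_spiked(n, lambdas, thetas, sigma=1.0, rng=None):
    """Draw X = [X_1 : ... : X_n] from model (2.2).

    thetas: N x M array with orthonormal columns theta_1, ..., theta_M.
    lambdas: length-M array of spike strengths lambda_1 > ... > lambda_M > 0.
    """
    rng = np.random.default_rng(rng)
    N, M = thetas.shape
    v = rng.standard_normal((M, n))   # latent factors upsilon_{nu i}
    Z = rng.standard_normal((N, n))   # isotropic noise Z_{ik}
    return thetas @ (np.sqrt(lambdas)[:, None] * v) + sigma * Z

def sample_cov(X):
    """Uncentered sample covariance S = n^{-1} X X^T (the mean is known to be zero)."""
    return X @ X.T / X.shape[1]
```

With a single spike θ1 = e1, λ1 = 4, N = 5 and a large n, S should be close to Σ = 4e1e1ᵀ + I, as expected in the classical fixed-N regime.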

The following condition, termed Basic assumption, will be used throughout the asymptotic analysis, and will be referred to as (BA).

(BA) (2.2) holds with σ = 1; N = N(n) → ∞ as n → ∞; λ1 > ⋯ > λM > 0 are fixed (do not vary with N); M is unknown but fixed.

2.1. Eigenvector estimation with squared error loss

Given data $X_1, \ldots, X_n$, the goal is to estimate M and the eigenvectors θ1, … ,θM. For simplicity, to derive the lower bounds, we first assume that M is known. In Section 5.2 we derive an estimator of M, which can be shown to be consistent under the assumed sparsity conditions. To assess the performance of any estimator, we adopt a minimax risk approach. The first task is to specify a loss function L(θ̂ν, θν) between the estimated and true eigenvector.

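Eigenvectors are determined only up to sign, so we introduce a notation for the acute (angle) difference between unit vectors,

$$a \ominus b := a - \operatorname{sign}(\langle a, b \rangle)\, b,$$

where a and b are N × 1 vectors with unit l2 norm. We consider the following loss function, also invariant to sign changes:

$$L(a, b) := \|a \ominus b\|_2^2 = 2\bigl(1 - |\langle a, b \rangle|\bigr). \tag{2.3}$$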

An estimator θ̂ν is called consistent with respect to L, if L(θ̂ν,θν) → 0 in probability as n → ∞.
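The loss (2.3) can be computed directly. This is a minimal NumPy sketch; the function names are ours, and treating sign(0) as +1 is an assumption, since the case ⟨a, b⟩ = 0 is not specified above.

```python
import numpy as np

def acute_diff(a, b):
    """a ⊖ b = a - sign(<a, b>) b; sign(0) is taken to be +1 (an assumption)."""
    s = 1.0 if np.dot(a, b) >= 0 else -1.0
    return a - s * b

def eigvec_loss(a, b):
    """Sign-invariant loss L(a, b) = ||a ⊖ b||_2^2 for unit vectors a, b."""
    d = acute_diff(a, b)
    return float(d @ d)

# For unit vectors ||a ⊖ b||^2 = 2 - 2|<a, b>|, so L depends only on the acute angle.
a = np.array([1.0, 0.0])
b = np.array([-1.0, 1.0]) / np.sqrt(2.0)
print(round(eigvec_loss(a, b), 4))  # prints 0.5858, i.e. 2 - sqrt(2)
```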

2.2. Rate of convergence for ordinary PCA

We first consider the asymptotic risk of the leading eigenvectors of the sample covariance matrix (henceforth referred to as the standard PCA estimators) when the ratio N/n → 0 as n → ∞. For future use, we define

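$$h(\lambda) := \frac{\lambda^2}{1 + \lambda} \tag{2.4}$$

and

$$g(\lambda, \tau) := \frac{(\lambda - \tau)^2}{(1 + \lambda)(1 + \tau)}. \tag{2.5}$$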

In Johnstone and Lu (2009) (Theorem 1) it was shown that under a single spike model, as N/n → 0, the standard PCA estimator of the leading eigenvector is consistent. The following result, proven in the Appendix, is a refinement of that, as it also provides the leading error term.

Theorem 2.1. Let θ̂ν,PCA be the eigenvector corresponding to the νth largest eigenvalue of S. Assume that (BA) holds and N, n → ∞ such that N/n → 0. Then, for each ν = 1, … , M,

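$$\mathbb{E}\, L(\hat\theta_{\nu,\mathrm{PCA}}, \theta_\nu) = \left[\frac{N - M}{n\, h(\lambda_\nu)} + \frac{1}{n} \sum_{\mu \ne \nu} \frac{1}{g(\lambda_\mu, \lambda_\nu)}\right](1 + o(1)). \tag{2.6}$$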

Remark 2.1. Observe that Theorem 2.1 does not assume any special structure such as sparsity for the eigenvectors. The first term on the right-hand side of (2.6) is a nonparametric component which arises from the interaction of the noise terms with the different coordinates. The second term is “parametric” and results from the interaction with the remaining M − 1 eigenvectors corresponding to different eigenvalues. The second term shows that the closer the successive eigenvalues, the larger the estimation error. The upshot of (2.6) is that standard PCA yields a consistent estimator of the leading eigenvectors of the population covariance matrix when the dimension-to-sample-size ratio (N/n) is asymptotically negligible.
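The leading term of (2.6) is straightforward to evaluate. The sketch below takes h(λ) = λ²/(1 + λ) and g(λ, τ) = (λ − τ)²/((1 + λ)(1 + τ)) as the forms of (2.4) and (2.5); the function names are hypothetical, and ν is 0-based in the code.

```python
def h(lam):
    """h(lambda) = lambda^2 / (1 + lambda), assumed form of (2.4)."""
    return lam ** 2 / (1.0 + lam)

def g(lam, tau):
    """g(lambda, tau) = (lambda - tau)^2 / ((1 + lambda)(1 + tau)), assumed form of (2.5)."""
    return (lam - tau) ** 2 / ((1.0 + lam) * (1.0 + tau))

def pca_risk_leading_term(nu, lambdas, N, n):
    """Bracketed term of (2.6) for the eigenvector with 0-based index nu."""
    M = len(lambdas)
    lam = lambdas[nu]
    nonparametric = (N - M) / (n * h(lam))        # noise interacting with N - M coordinates
    parametric = sum(1.0 / g(lambdas[mu], lam)    # interaction with the other M - 1 spikes
                     for mu in range(M) if mu != nu) / n
    return nonparametric + parametric

# Two spikes lambda = (3, 1), N = 12, n = 100: approximately 0.0644 (= 10/225 + 2/100)
print(pca_risk_leading_term(0, [3.0, 1.0], 12, 100))
```

Moving λ2 toward λ1 shrinks g and so inflates the parametric term, which is the effect described in Remark 2.1.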

2.3. lq constraint on eigenvectors

When N/n → c ∈ (0, ∞], standard PCA provides inconsistent estimators for the population eigenvectors, as shown by various authors [Johnstone and Lu (2009), Lu (2002), Nadler (2008), Onatski (2006), Paul (2007)]. In this subsection we consider the following model for approximate sparsity of the eigenvectors. For each ν = 1, … , M, assume that θν belongs to an lq ball with radius C, for some q ∈ (0, 2), thus θν ∈ Θq (C), where

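$$\Theta_q(C) := \Bigl\{\theta \in \mathbb{S}^{N-1} : \|\theta\|_q^q = \sum_{k=1}^{N} |\theta_k|^q \le C^q\Bigr\}. \tag{2.7}$$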

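Note that our condition of sparsity is slightly different from that of Johnstone and Lu (2009). Since 0 < q < 2, we have $\|\theta\|_q \ge \|\theta\|_2 = 1$ for every θ ∈ $\mathbb{S}^{N-1}$, so for Θq(C) to be nonempty one needs C ≥ 1. Further, by Hölder's inequality, $\sum_k |\theta_k|^q \le N^{1-q/2}$ for every unit vector θ, with equality when all entries have magnitude $N^{-1/2}$. Hence, if $C^q \ge N^{1-q/2}$, then the space Θq(C) is all of $\mathbb{S}^{N-1}$: in this case even the least sparse vector $N^{-1/2}(1, 1, \ldots, 1)$ is in the parameter space.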
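A small NumPy check of definition (2.7) and of the level $C^q = N^{1-q/2}$ at which Θq(C) becomes the whole sphere; the helper names and the numerical tolerance are illustrative choices.

```python
import numpy as np

def lq_mass(theta, q):
    """sum_k |theta_k|^q, the quantity bounded by C^q in (2.7)."""
    return float(np.sum(np.abs(theta) ** q))

def in_lq_ball(theta, q, C, tol=1e-12):
    """Membership in Theta_q(C): unit l2 norm and sum_k |theta_k|^q <= C^q."""
    unit = abs(np.linalg.norm(theta) - 1.0) <= tol
    return unit and lq_mass(theta, q) <= C ** q + tol

N, q = 100, 1.0
flat = np.full(N, N ** -0.5)               # least sparse unit vector
print(lq_mass(flat, q), N ** (1 - q / 2))  # both equal 10 up to rounding
```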

The parameter space for θ ≔ [θ1: … :θM] is denoted by

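$$\Theta_q^M(C_1, \ldots, C_M) := \bigl\{\theta = [\theta_1 : \cdots : \theta_M] : \theta_\nu \in \Theta_q(C_\nu),\ \langle \theta_\nu, \theta_{\nu'} \rangle = 0 \text{ for } \nu \ne \nu'\bigr\}, \tag{2.8}$$

where Θq(C) is defined through (2.7), and Cν ≥ 1 for all ν = 1, … , M. Thus $\Theta_q^M(C_1, \ldots, C_M)$ consists of sparse orthonormal M-frames, with sparsity measured in lq. Note that in the analysis that follows we allow the Cν’s to increase with N.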

Remark 2.2. While our focus is on eigenvector sparsity, condition (2.8) also implies sparsity of the covariance matrix itself. In particular, for q ∈ (0, 1), a spiked covariance matrix satisfying (2.8) also belongs to the class of sparse covariance matrices analyzed by Bickel and Levina (2008a), Cai and Liu (2011) and Cai and Zhou (2012). Indeed, Cai and Zhou (2012) obtained the minimax rate of convergence for covariance matrix estimators under the spectral norm when the rows of the population matrix satisfy a weak-lq constraint. However, as we will show below, the minimax rate for estimation of the leading eigenvectors is faster than that for covariance estimation.

3. Lower bounds on the minimax risk

We now derive lower bounds on the minimax risk of estimating θν under the loss function (2.3). To aid in describing and interpreting the lower bounds, we define the following two auxiliary parameters. The first is an effective noise level per coordinate

$$\tau_\nu := \frac{1}{\sqrt{n\, h(\lambda_\nu)}} \tag{3.1}$$

and the second is an effective dimension

| (3.2) |

where aq ≔ (2/9)1−q/2, c1 ≔ log(9/8) and and finally .

The phrase effective noise level per coordinate is motivated by the risk bound in Theorem 2.1: dividing both sides of (2.6) by N, the expected “per coordinate” risk (or variance) of the PCA estimator is asymptotically $\tau_\nu^2 = 1/(n h(\lambda_\nu))$. Next, following Nadler (2009), let us provide a different interpretation of τν. Consider a sparse θν and an oracle that, regardless of the observed data, selects the set Jτ of all coordinates of θν that are larger than τ in absolute value, and then performs PCA on the sample covariance restricted to these coordinates. Since θν ∈ Θq(Cν), the maximal squared bias is at most

$$\sum_{k : |\theta_{\nu k}| < \tau} \theta_{\nu k}^2 \le C_\nu^q\, \tau^{2-q},$$

which follows from the correspondence $x_k = |\theta_{\nu k}|^q$, under which the constraints read $0 \le x_k < \tau^q$ and $\sum_k x_k \le C_\nu^q$, and the convexity of the function $x \mapsto x^{2/q}$. On the other hand, by Theorem 2.1, the maximal variance term of this oracle estimator is of the order $k_\tau/(n h(\lambda_\nu))$, where $k_\tau$ is the maximal number of coordinates of θν exceeding τ. Again, θν ∈ Θq(Cν) implies that $k_\tau \le C_\nu^q \tau^{-q}$. Thus, to balance the bias and variance terms, we need $C_\nu^q \tau^{2-q} \asymp C_\nu^q \tau^{-q}/(n h(\lambda_\nu))$, that is, $\tau \asymp (n h(\lambda_\nu))^{-1/2} = \tau_\nu$. This heuristic analysis shows that τν can be viewed as an oracle threshold for the coordinate selection scheme; that is, the best possible estimator of θν based on individual coordinate selection can expect to recover only those coordinates that are above the threshold τν.
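The bias-variance balance in this heuristic reduces to simple arithmetic. The plain-Python sketch below (function names hypothetical) evaluates the two bounds, $C_\nu^q\tau^{2-q}$ and $C_\nu^q\tau^{-q}/(n h(\lambda_\nu))$, and checks that they meet at $\tau = (n h(\lambda_\nu))^{-1/2}$, where both equal $C_\nu^q (n h(\lambda_\nu))^{-(1-q/2)}$.

```python
def worst_case_bias2(tau, C, q):
    """Bound C^q tau^(2-q) on the squared mass of coordinates below tau, theta in Theta_q(C)."""
    return C ** q * tau ** (2 - q)

def worst_case_variance(tau, C, q, n, h_lam):
    """k_tau / (n h(lambda)) with the bound k_tau <= C^q tau^(-q)."""
    return C ** q * tau ** (-q) / (n * h_lam)

def balancing_threshold(n, h_lam):
    """The tau at which the two bounds coincide: tau = (n h(lambda))^(-1/2)."""
    return (n * h_lam) ** -0.5

n, h_lam, C, q = 1000, 2.0, 3.0, 0.5
tau = balancing_threshold(n, h_lam)
# Both terms equal C^q (n h)^(-(1 - q/2)), the thin-regime order in Table 1.
print(worst_case_bias2(tau, C, q), worst_case_variance(tau, C, q, n, h_lam))
```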

To understand why mν is an effective dimension, consider the least sparse vector θν ∈ Θq(Cν). This vector should have as many nonzero coordinates of equal size as possible. If $C_\nu^q \ge N^{1-q/2}$, then the vector with coordinates $\pm N^{-1/2}$ does the job. Otherwise, we set the first coordinate of the vector to be $\sqrt{1 - r}$ for some r ∈ (0, 1) and choose all the other nonzero coordinates to be of magnitude τν. Clearly, we must have $m \tau_\nu^2 = r$, where m + 1 is the maximal number of nonzero coordinates, while the lq constraint implies that $(1 - r)^{q/2} + m \tau_\nu^q \le C_\nu^q$. The last inequality shows that the maximal m is just a constant multiple of mν. This construction also constitutes the key idea in the proof of Theorems 3.1 and 3.2. Finally, we set

$$N' := N - M. \tag{3.3}$$
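The least sparse construction described before (3.3) can be carried out numerically. In this NumPy sketch (function name hypothetical), r is set to mτ² so that the vector has unit norm, and m is found by a linear scan over the lq constraint; it illustrates the construction only and is not the proof device itself.

```python
import math
import numpy as np

def least_sparse_vector(N, q, C, tau):
    """One coordinate sqrt(1 - r) and m coordinates of magnitude tau, with r = m tau^2 and
    m the largest value keeping (1 - r)^{q/2} + m tau^q <= C^q (found by a linear scan)."""
    if C ** q >= N ** (1 - q / 2):
        return np.full(N, N ** -0.5)           # the flat vector already fits in the ball
    best = 0
    for m in range(N):                         # m + 1 <= N nonzero coordinates
        r = m * tau ** 2
        if r >= 1:                             # the first coordinate must stay nonzero
            break
        if (1 - r) ** (q / 2) + m * tau ** q <= C ** q:
            best = m
    theta = np.zeros(N)
    theta[0] = math.sqrt(1 - best * tau ** 2)
    theta[1:best + 1] = tau
    return theta
```

For N = 10 000, q = 1, C = 2 and τ = 0.05, the scan gives m = 20 = (C^q − 1)/τ^q, so the vector has 21 nonzero coordinates.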

Theorem 3.1. Assume that (BA) holds, 0 < q < 2 and n, N → ∞. Then, there exists a constant B1 > 0 such that for n sufficiently large,

| (3.4) |

where δn is given by

We may think of mn, ν ≔ min{N′, mν} as the effective dimension of the least favorable configuration.

In the thin setting, for some c′ > 0), and the lower bound is of the order

| (3.5) |

In the dense setting, on the other hand, mn, ν = N − M, and

| (3.6) |

If N/n → c for some c > 0, then δn ≍ 1, and so any estimator of the eigenvector θν is inconsistent. If N/n → 0, then equation (3.6) and Theorem 2.1 imply that the standard PCA estimator θ̂ν,PCA attains the optimal rate of convergence.

A sharper lower bound is possible if for some α ∈ (0, 1). We call this a sparse setting, noting that it is a special case of the thin setting. In this case the dimension N is much larger than the quantity measuring the effective dimension. Hence, we define a modified effective noise level per coordinate

and a modified effective dimension

Theorem 3.2. Assume that (BA) holds, 0 < q < 2 and n, N → ∞ in such a way that for some α ∈ (0, 1). Then there exists a constant B1 such that for n sufficiently large, the minimax bound (3.4) holds with

| (3.7) |

so long as this quantity is ≤ 1.

Note that in the sparse setting δn is larger by a factor of $(\log N)^{1-q/2}$ compared to the thin setting [equation (3.5)].

It should be noted that, for a fixed signal strength λν, it is somewhat of a “rarity” for the corresponding eigenvector to be thin but not sparse, as the following argument shows. Consider first the case N = o(n). If , then we are in the dense setting, since . On the other hand, if N = o(n) and for some α ∈ (0, 1), then θν is sparse, according to the discussion preceding Theorem 3.2. So, if N = o(n), for the eigenvector θν to be thin but not sparse, we need where sN is a term which may be constant or may converge to zero at a rate slower than any polynomial in N. Next, consider the case n = o(N). For a meaningful lower bound, we require , which means that for some constant cq,ν > 0. Thus, as long as $n = O(N^{1-\alpha})$ for some α ∈ (0, 1), θν cannot be thin but not sparse. Finally, suppose that N ≍ n, and let for some β ≥ 0. If β < 1 − q/2, then we are in the sparse case. On the other hand, if β > 1 − q/2, then there is no sparsity at all, since when the entire $\mathbb{S}^{N-1}$ belongs to the relevant lq ball for θν. Hence, only if β = 1 − q/2 exactly is it possible for θν to be thin but not sparse. This analysis emphasizes the point that, at least for a fixed signal strength, thin but not sparse is a somewhat special situation.

4. Risk of the diagonal thresholding estimator

In this section, we analyze the convergence rate of the diagonal thresholding (DT) approach to sparse PCA proposed by Johnstone and Lu (2009) (JL). In this section and in Section 5, we assume for simplicity that N ≥ n. Let the sample variance of the kth coordinate, the kth diagonal entry of S, be denoted by Skk. Then DT consists of the following steps:

Define I = I (γn) to be the set of indices k ∈ {1, … , N} such that Skk > 1 + γn for some threshold γn > 0.

Let SII be the submatrix of S corresponding to the coordinates I. Perform an eigen-analysis of SII and denote its eigenvectors by fi, i = 1, … , min{n, |I|}.

For ν = 1, … , M, estimate θν by the N × 1 vector f̃ν, obtained from fν by augmenting zeros to all the coordinates in Ic ≔ {1, … , N} \ I.
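The three DT steps translate directly into a few lines of NumPy. This is a sketch under σ = 1; the threshold γn is passed in by the caller, and the function name is hypothetical.

```python
import numpy as np

def diagonal_thresholding(S, gamma_n, M):
    """Steps 1-3 of DT with sigma = 1.

    S: N x N sample covariance; gamma_n: threshold on S_kk - 1; M: number of spikes.
    Returns (theta_hat, I): an N x M array of zero-padded eigenvector estimates
    and the selected index set I.
    """
    N = S.shape[0]
    I = np.flatnonzero(np.diag(S) > 1.0 + gamma_n)    # step 1: coordinate selection
    theta_hat = np.zeros((N, M))
    if I.size == 0:
        return theta_hat, I
    evals, evecs = np.linalg.eigh(S[np.ix_(I, I)])    # step 2: eigen-analysis of S_II
    order = np.argsort(evals)[::-1]                    # eigh returns ascending eigenvalues
    k = min(M, I.size)
    theta_hat[I, :k] = evecs[:, order[:k]]             # step 3: zeros on the complement of I
    return theta_hat, I
```

In experiments one might take γn = γ√(log N / n) with a constant such as γ = 3; this value is an arbitrary choice for illustration, not a recommendation from the paper.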

Assuming that θν ∈ Θq(Cν) and a threshold $\gamma_n = \gamma\sqrt{\log N / n}$ for some γ > 0, JL showed that DT yields a consistent estimator of θν, but did not further analyze its risk. Indeed, as we prove below, the DT estimator is not rate optimal. This might be anticipated from the lower bounds on the minimax risk (Theorems 3.1 and 3.2), which indicate that to attain the optimal risk, a coordinate selection scheme must select all coordinates of θν of size at least $c\sqrt{\log N / n}$ for some c > 0. With a threshold of the form γn above, however, only coordinates of size $(\log N/n)^{1/4}$ are selected, since $\mathbb{E} S_{kk} - 1 = \lambda_1 \theta_{1k}^2$ when M = 1. Even for the case of a single signal, M = 1, this leads to a much larger lower bound.

Theorem 4.1. Suppose that (BA) holds with M = 1. Let C > 1 (may depend on n), 0 < q < 2 and n, N → ∞ be such that . Then the diagonal thresholding estimator θ̂1, DT satisfies

| (4.1) |

for a constant Kq > 0, where $\bar C^q = C^q - 1$.

A comparison of (4.1) with the lower bound (3.5) shows a large gap between the two rates, $n^{-(1-q/2)/2}$ versus $n^{-(1-q/2)}$. This gap arises because DT uses only the diagonal of the sample covariance matrix S, ignoring the information in its off-diagonal entries. In the next section we propose a refinement of the DT scheme, denoted ASPCA, that constructs an improved eigenvector estimate using all entries of S.

In the sparse setting, the ITSPCA estimator of Ma (2011) attains the same asymptotic rate as the lower bound of Theorem 3.2, provided DT yields consistent estimates of the eigenvectors. The latter condition can be shown to hold if, for example, for all ν = 1, … , M. Thus, in the sparse setting, with this additional restriction, the lower bound on the minimax rate is sharp, and consequently, the DT estimator is not rate optimal.

5. A two-stage coordinate selection scheme

As discussed above, the DT scheme can reliably detect only those eigenvector coordinates k for which $|\theta_{\nu,k}| \ge c(\log N/n)^{1/4}$ (for some c > 0), whereas to reach the lower bound one needs to detect those coordinates for which $|\theta_{\nu,k}| \ge c(\log N/n)^{1/2}$.

To motivate an improved coordinate selection scheme, consider the single component (i.e., M = 1) case, and form a partition of the N coordinates into two sets A and B, where the former contains all those k such that |θ1k| is “large” (selected by DT), and the latter contains the remaining smaller coordinates. Partition the matrix ∑ as

Observe that . Let θ̃1 be a “preliminary” estimator of θ1 such that limn → ∞ ℙ(〈θ̃1,A, θ1,A〉 ≥ δ0) = 1 for some δ0 > 0 (e.g., θ̃1 could be the DT estimator). Then we have the relationship

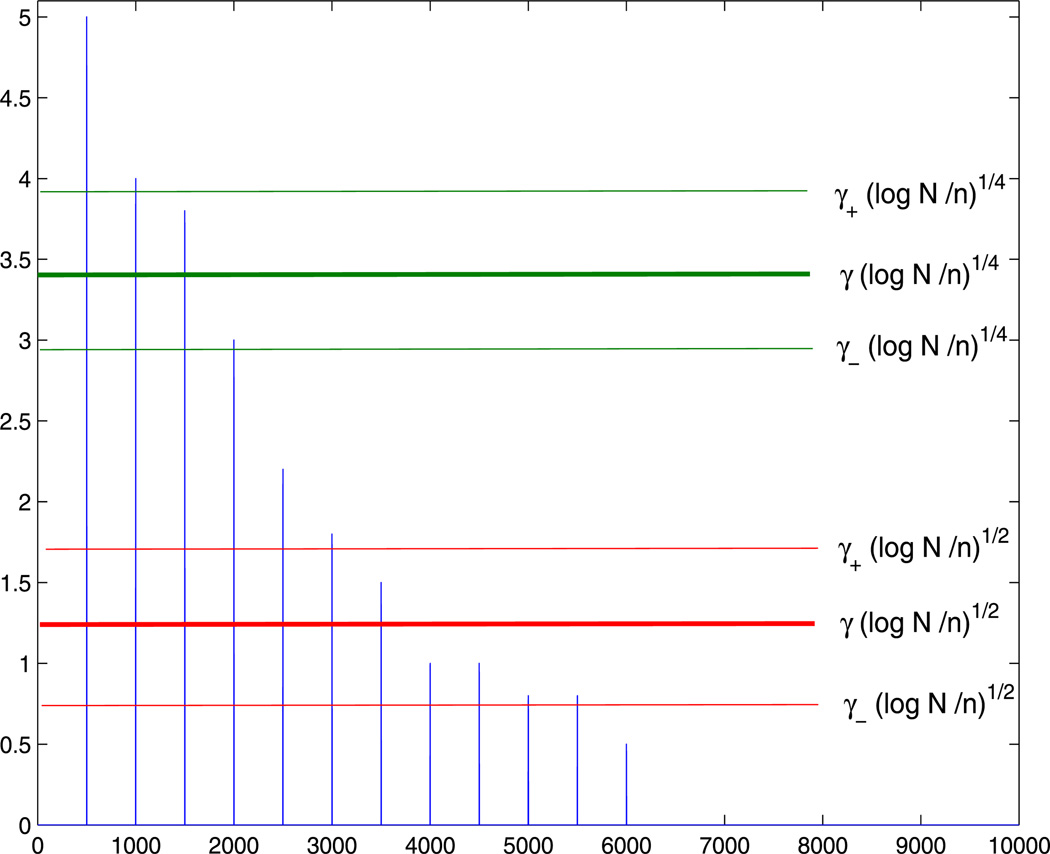

for some c(δ0) bounded below by δ0/2, say. Thus one possible strategy is to additionally select all those coordinates of ∑BAθ̃1,A that are larger (in absolute value) than some constant multiple of . Neither ∑BA nor λ1 is known, but we can use SBA as a surrogate for the former and the largest eigenvalue of SAA to obtain an estimate for the latter. A technical challenge is to show that, with probability tending to 1, such a scheme indeed recovers all coordinates k with , while discarding all coordinates k with for some constants γ+ > γ− > 0. Figure 1 provides a pictorial description of the DT and ASPCA coordinate selection schemes.
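The identity $\Sigma_{BA}\tilde\theta_{1,A} = \lambda_1\langle\theta_{1,A},\tilde\theta_{1,A}\rangle\theta_{1,B}$ and the resulting selection rule can be checked numerically. In this NumPy sketch (function names hypothetical), passing Σ verifies the identity, while passing S gives the data-driven version with S_BA as a surrogate for Σ_BA; the threshold is left to the caller.

```python
import numpy as np

def cross_block_scores(S, A, B, theta_tilde_A):
    """S_BA @ theta_tilde_A; with S = Sigma this equals lambda_1 <theta_A, theta_tilde_A> theta_B."""
    return S[np.ix_(B, A)] @ theta_tilde_A

def select_from_B(S, A, B, theta_tilde_A, threshold):
    """Coordinates k in B whose score |(S_BA theta_tilde_A)_k| exceeds the threshold."""
    scores = cross_block_scores(S, A, B, theta_tilde_A)
    return np.asarray(B)[np.abs(scores) > threshold]
```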

Fig. 1.

Schematic diagram of the DT and ASPCA thresholding schemes under the single component setting. The x-axis represents the indices of different coordinates of the first eigenvector, and the vertical lines depict the absolute values of the coordinates. The threshold for the DT scheme is $\gamma(\log N/n)^{1/4}$, while the threshold for the ASPCA scheme is $\gamma(\log N/n)^{1/2}$. For some generic constants γ+ > γ > γ− > 0, with high probability, the schemes select all coordinates above the upper limits (indicated by the multiplier γ+) and discard all coordinates below the lower limits (indicated by the multiplier γ−).

5.1. ASPCA scheme

Based on the ideas described above, we now present the ASPCA algorithm. It consists of two stages of coordinate selection, followed by a final stage of eigen-analysis of the submatrix of S corresponding to the selected coordinates. The algorithm is described below.

For any γ > 0 define

$$I(\gamma) := \{k \in \{1, \ldots, N\} : S_{kk} > 1 + \gamma\}. \tag{5.1}$$

Let γi > 0 for i = 1, 2 and κ > 0 be constants to be specified later.

Stage 1.

1° Let I = I(γ1,n), where $\gamma_{1,n} = \gamma_1\sqrt{\log N / n}$.

2° Denote the eigenvalues and eigenvectors of S_II by ℓ̂1 > ⋯ > ℓ̂m1 and f1, … , fm1, respectively, where m1 = min{n, |I|}.

3° Estimate M by M̂ defined in Section 5.2.

Stage 2.

4° Let and $Q = S_{I^c I} E$.

5° Let for some γ2,n > 0. Define K = I ∪ J.

Stage 3.

6° For ν = 1, …, M̂, denote by θ̂ν the νth eigenvector of S_KK, augmented with zeros in the coordinates K^c.
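A single-spike (M = 1) sketch of the three stages in NumPy follows. The exact definitions of E and J in steps 4° and 5° are not assumed here: as a hypothetical stand-in, the code uses E = f1 and keeps the coordinates k ∈ Iᶜ with $|Q_k| > \gamma_2\sqrt{\hat\ell_1 \log N / n}$, where the factor $\sqrt{\hat\ell_1}$ roughly matches the noise scale of Q. It also assumes $\gamma_{1,n} = \gamma_1\sqrt{\log N/n}$. The defaults γ1 = 3 and γ2 = 2 are illustrative only and differ from the tuning in Remark 5.1.

```python
import numpy as np

def aspca_single_spike(S, n, gamma1=3.0, gamma2=2.0):
    """Hypothetical M = 1 sketch of the two-stage selection followed by eigen-analysis.

    Returns (theta_hat, K): the zero-padded estimate and the final index set K.
    """
    N = S.shape[0]
    scale = np.sqrt(np.log(N) / n)
    I = np.flatnonzero(np.diag(S) > 1.0 + gamma1 * scale)    # stage 1: DT selection
    if I.size == 0:
        return np.zeros(N), I
    evals, evecs = np.linalg.eigh(S[np.ix_(I, I)])
    l1, f1 = evals[-1], evecs[:, -1]                         # leading eigenpair (ascending order)
    Ic = np.setdiff1d(np.arange(N), I)
    Q = S[np.ix_(Ic, I)] @ f1                                # stage 2: off-diagonal scores
    J = Ic[np.abs(Q) > gamma2 * np.sqrt(l1) * scale]         # assumed threshold rule
    K = np.union1d(I, J)
    _, vecs_K = np.linalg.eigh(S[np.ix_(K, K)])              # stage 3: PCA on S_KK
    theta_hat = np.zeros(N)
    theta_hat[K] = vecs_K[:, -1]
    return theta_hat, K
```

On simulated data with a few large coordinates and many small ones, stage 1 alone will typically miss the small coordinates, while stage 2 can recover them through the off-diagonal block $S_{I^c I}$.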

Remark 5.1. The ASPCA scheme is specified up to the choice of parameters γ1 and γ2,n that determine its rate of convergence. It can be shown that choosing γ1 = 4 and

| (5.2) |

with for some ε > 0, results in an asymptotically optimal rate. Again, we note that for finite N, n, the actual performance in terms of the risk of the resulting eigenvector estimate may have a strong dependence on the threshold. In practice, a delicate choice of thresholds can be highly beneficial. This issue, as well as the analysis of the risk of the ASPCA estimator, is beyond the scope of this paper and will be studied in a separate publication.

5.2. Estimation of M

Estimation of the dimension of the signal subspace is a classical problem. If the signal eigenvalues are strong enough (i.e., $\lambda_\nu > c\sqrt{N/n}$ for all ν = 1, … , M, for some c > 1 independent of N, n), then nonparametric methods that do not assume eigenvector sparsity can asymptotically estimate the correct M; see, for example, Kritchman and Nadler (2008). When the eigenvectors are sparse, we can detect much weaker signals, as we describe below.

We estimate M by thresholding the eigenvalues of the submatrix SĪĪ where for some γ̄ ∈ (0, γ1). Let m̄ = min{n, |Ī|} and ℓ̄1 > ⋯ > ℓ̄m̄ be the nonzero eigenvalues of SĪĪ. Let αn > 0 be a threshold of the form

for some user-defined constant c0 > 0. Then, define M̂ by

| (5.3) |

The idea is that, for large enough n, I(γ1,n) ⊂ Ī with high probability, and thus |Ī| acts as an upper bound on |I(γ1,n)|. Using this and the behavior of the extreme eigenvalues of a Wishart matrix, it can be shown that, with a suitable choice of c0 and γ̄, M̂ is a consistent estimator of M.
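A NumPy sketch of the estimator of M follows. Because the precise forms of Ī, αn and (5.3) are left unspecified in this sketch, it makes two labeled assumptions: Ī = I(γ̄√(log N / n)), and the cut-off is the Marchenko–Pastur upper edge $(1 + \sqrt{|\bar I|/n})^2$ of a white Wishart matrix plus $c_0\sqrt{\log N / n}$. The function name and default constants are hypothetical.

```python
import numpy as np

def estimate_num_spikes(S, n, gamma_bar=1.5, c0=3.0):
    """Count eigenvalues of S restricted to I_bar that clear an assumed noise cut-off."""
    N = S.shape[0]
    scale = np.sqrt(np.log(N) / n)
    I_bar = np.flatnonzero(np.diag(S) > 1.0 + gamma_bar * scale)   # assumed form of I_bar
    if I_bar.size == 0:
        return 0
    ell = np.linalg.eigvalsh(S[np.ix_(I_bar, I_bar)])[::-1]        # descending order
    m_bar = min(n, I_bar.size)                                      # nonzero eigenvalues
    edge = (1.0 + np.sqrt(I_bar.size / n)) ** 2                     # Marchenko-Pastur edge
    return int(np.sum(ell[:m_bar] > edge + c0 * scale))             # hypothetical cut-off
```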

6. Summary and discussion

In this paper we have derived lower bounds on the minimax risk of eigenvector estimation under three different sparsity regimes, denoted dense, thin and sparse. In the dense setting, Theorems 2.1 and 3.1 show that when N/n → 0, the standard PCA estimator attains the optimal rate of convergence.

In the sparse setting, Theorem 3.1 of Ma (2011) shows that the maximal risk of the ITSPCA estimator proposed by him attains the same asymptotic rate as the corresponding lower bound of Theorem 3.2. This implies that in the sparse setting, the lower bound on the minimax rate is indeed sharp. In a separate paper, we prove that in the sparse regime, the ASPCA algorithm also attains the minimax rate. All these sparse setting results currently require the additional condition of consistency of DT; without this condition, the rate optimality question remains open.

Finally, our analysis leaves some open questions in the intermediate thin regime. According to Theorem 3.1, the lower bound in this regime is smaller by a factor of $(\log N)^{1-q/2}$ than in the sparse setting. Whether there exists an estimator (in particular, one with low computational complexity) that attains the current lower bound, or whether this lower bound can be improved, is an open question for future research. However, as we indicated at the end of Section 3, an eigenvector that is thin but not sparse is a somewhat rare occurrence in terms of mathematical possibilities.

Acknowledgement

The authors thank the Editor and an anonymous referee for their thoughtful suggestions. The final draft was completed with the hospitality of the Institute of Mathematical Sciences at the National University of Singapore.

APPENDIX A: PROOFS

A.1. Asymptotic risk of the standard PCA estimator

To prove Theorem 2.1, on the risk of the PCA estimator, we use the following lemmas. Throughout, ‖B‖ = sup{|xᵀBx| : ‖x‖2 = 1} denotes the spectral norm of a symmetric matrix B.

Deviation of extreme Wishart eigenvalues and quadratic forms

In our analysis, we will need a probabilistic bound for deviations of $\|n^{-1}ZZ^T - I\|$. This is given in the following lemma, proven in Appendix B.

Lemma A.1. Let Z be an N × n matrix with i.i.d. N (0, 1) entries. Suppose N < n and set and γn = N/n. Then for any c > 0, there exists nc ≥ 1 such that for all n ≥ nc,

| (A.1) |

Lemma A.2 [Johnstone (2001)]. Let $\chi^2_n$ denote a chi-square random variable with n degrees of freedom. Then

| (A.2) |

| (A.3) |

| (A.4) |
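Because the displays (A.2)–(A.4) were not recovered, the following sketch uses forms that we assume rather than transcribe: $P(\chi^2_n \le n(1-\varepsilon)) \le e^{-n\varepsilon^2/4}$ for $0 \le \varepsilon < 1$, and $P(\chi^2_n \ge n(1+\varepsilon)) \le e^{-3n\varepsilon^2/16}$ for $0 \le \varepsilon < 1/2$; both follow from the Chernoff bound. It compares Monte Carlo tail frequencies with these bounds. The function name `chi2_tail_check` and the chosen $(n, \varepsilon)$ pairs are illustrative only.

```python
import numpy as np


def chi2_tail_check(n, eps, n_sim=200_000, seed=0):
    """Monte Carlo tail frequencies of chi^2_n versus assumed exponential bounds.

    Assumed (Chernoff-type) bounds:
      P(chi2_n <= n(1 - eps)) <= exp(-n eps^2 / 4),     0 <= eps < 1
      P(chi2_n >= n(1 + eps)) <= exp(-3 n eps^2 / 16),  0 <= eps < 1/2
    """
    rng = np.random.default_rng(seed)
    x = rng.chisquare(df=n, size=n_sim)
    lower_freq = np.mean(x <= n * (1 - eps))
    upper_freq = np.mean(x >= n * (1 + eps))
    lower_bound = np.exp(-n * eps**2 / 4)
    upper_bound = np.exp(-3 * n * eps**2 / 16)
    return lower_freq, lower_bound, upper_freq, upper_bound


results = {(n, eps): chi2_tail_check(n, eps) for n, eps in [(20, 0.4), (100, 0.2), (400, 0.1)]}
for (n, eps), (lf, lb, uf, ub) in results.items():
    print(f"n={n:4d} eps={eps:.2f}  lower {lf:.4f} <= {lb:.4f}   upper {uf:.4f} <= {ub:.4f}")
```

The bounds are far from tight at these sample sizes; their role in the proofs is only to give exponentially small failure probabilities.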

Lemma A.3 [Johnstone and Lu (2009)]. Let y1i, y2i, i = 1, … , n, be two sequences of mutually independent, i.i.d. N(0, 1) random variables. Then for large n and any b s.t. 0 < b ≪ ,

| (A.5) |

Perturbation of eigen-structure

The following lemma, modified in Appendix B from Paul (2005), is convenient for risk analysis of estimators of eigenvectors. Several variants of this lemma appear in the literature, most of them based on the approach of Kato (1980). To state it, let the eigenvalues of a symmetric matrix A be denoted by λ1(A) ≥ ⋯ ≥ λm(A), with the convention that λ0(A) = ∞ and λm+1(A) = −∞. Let Ps denote the projection matrix onto the possibly multidimensional eigenspace corresponding to λs(A) and define

$H_r(A) = \sum_{s \neq r} (\lambda_s(A) - \lambda_r(A))^{-1} P_s$, each distinct eigenvalue being counted once.

Note that Hr (A) may be viewed as the resolvent of A “evaluated at λr (A).”

Lemma A.4. Let A and B be symmetric m × m matrices. Suppose that λr (A) is a unique eigenvalue of A with

Let pr denote the unit eigenvector associated with λr(A). Then

| (A.6) |

where, if (A),

| (A.7) |

and we may take K = 30.
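The leading term of the expansion can be checked on a small example. The sketch below assumes simple eigenvalues (so each $P_s$ is rank one), builds $H_r(A) = \sum_{s \neq r} (\lambda_s(A) - \lambda_r(A))^{-1} P_s$, and compares the exact eigenvector $p_r(A + B)$ with the first-order approximation $p_r - H_r(A)Bp_r$. Reading (A.6) as this Kato-type first-order expansion plus a remainder is our interpretation, since the display was not recovered; the helper names `eigh_desc` and `reduced_resolvent` are hypothetical. The error should shrink roughly like $\|B\|^2$, as expected for a second-order remainder.

```python
import numpy as np


def eigh_desc(A):
    """Eigen-decomposition of a symmetric matrix with eigenvalues in decreasing order."""
    evals, evecs = np.linalg.eigh(A)           # eigh returns ascending order
    order = np.argsort(evals)[::-1]
    return evals[order], evecs[:, order]


def reduced_resolvent(A, r):
    """H_r(A) = sum_{s != r} (lambda_s - lambda_r)^{-1} P_s, assuming simple eigenvalues.

    r is a 0-based index into the decreasingly ordered eigenvalues.
    Returns H_r(A) and the unit eigenvector p_r(A).
    """
    evals, evecs = eigh_desc(A)
    H = np.zeros_like(A, dtype=float)
    for s in range(len(evals)):
        if s != r:
            p_s = evecs[:, [s]]                # column vector, so p_s @ p_s.T = P_s
            H += (p_s @ p_s.T) / (evals[s] - evals[r])
    return H, evecs[:, r]


rng = np.random.default_rng(1)
A = np.diag([5.0, 3.0, 2.0, 1.0, 0.5, 0.0])
G = rng.standard_normal((6, 6))
G = (G + G.T) / 2                              # symmetric perturbation direction
r = 0
H, p = reduced_resolvent(A, r)

errors = []
for t in [1e-1, 1e-2, 1e-3]:
    B = t * G
    exact = eigh_desc(A + B)[1][:, r]
    exact = exact * np.sign(exact @ p)         # eigenvectors are defined only up to sign
    first_order = p - H @ B @ p
    errors.append(np.linalg.norm(exact - first_order))
    print(f"||B|| = {np.linalg.norm(B, 2):.1e}  error of first-order term: {errors[-1]:.2e}")
```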

Proof of Theorem 2.1. First we outline the approach. For notational simplicity, throughout this subsection, we write θ̂ν to mean θ̂ν,PCA. Recall that the loss function is $L(\hat\theta_\nu, \theta_\nu) = \|\hat\theta_\nu \ominus \theta_\nu\|^2$. Invoking Lemma A.4 with A = Σ and B = S − Σ, we get

| (A.8) |

where and

| (A.9) |

and . Note that Hνθν = 0 and that HνΣθν = 0.

Let . We have from (A.7) that

and we will show that as n → ∞, εnν → 0 with probability approaching 1 and that

| (A.10) |

Theorem 2.1 then follows from an (exact, nonasymptotic) evaluation,

| (A.11) |

We begin with the evaluation of (A.11). First we derive a convenient representation of HνSθν. In matrix form, model (2.2) becomes

| (A.12) |

where , for ν = 1, … , M. Also, define

| (A.13) |

and

| (A.14) |

Then we have

Using (A.13),

Using (A.9), $H_\nu\theta_\mu = (\lambda_\mu - \lambda_\nu)^{-1}\theta_\mu$ for μ ≠ ν, and we arrive at the desired representation

| (A.15) |

By orthogonality,

| (A.16) |

Now we compute the expectation. One verifies that zν ~ N(0, In) independently of each other and of each υν ~ N(0, In), so that wν ~ N (0, (1 + λν) In) independently. Hence, for μ ≠ ν,

| (A.17) |

From (A.13),

Now, it can be easily verified that if $W := ZZ^T \sim W_N(n, I)$, then for arbitrary symmetric N × N matrices Q, R, we have

| (A.18) |
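The display (A.18) was not recovered. One standard moment identity of this kind, which we state as an assumption rather than as a transcription of (A.18), is $E[WQW] = n(n+1)Q + n\,\mathrm{tr}(Q)\,I$ for $W \sim W_N(n, I)$ and symmetric Q; it follows from Isserlis' theorem for Gaussian fourth moments. Taking traces against R gives $E\,\mathrm{tr}(WQWR) = n(n+1)\,\mathrm{tr}(QR) + n\,\mathrm{tr}(Q)\,\mathrm{tr}(R)$, which reduces to $n\,\mathrm{tr}(Q)\,\mathrm{tr}(R)$ when QR = 0. The sketch below checks both by Monte Carlo; function names and simulation sizes are arbitrary choices.

```python
import numpy as np


def sample_wishart(N, n, n_sim, rng):
    """n_sim independent draws of W = Z Z^T with Z an N x n matrix of i.i.d. N(0, 1) entries."""
    Z = rng.standard_normal((n_sim, N, n))
    return Z @ Z.transpose(0, 2, 1)


def check_second_moment(N=4, n=10, n_sim=20_000, seed=2):
    """Relative error between a Monte Carlo estimate of E[W Q W] and n(n+1)Q + n tr(Q) I."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((N, N))
    Q = (G + G.T) / 2                          # arbitrary symmetric Q
    W = sample_wishart(N, n, n_sim, rng)
    mc = (W @ Q @ W).mean(axis=0)
    exact = n * (n + 1) * Q + n * np.trace(Q) * np.eye(N)
    return np.linalg.norm(mc - exact) / np.linalg.norm(exact)


def check_trace_with_orthogonal_projections(N=4, n=10, n_sim=20_000, seed=3):
    """E tr(W Q W R) versus n tr(Q) tr(R) for complementary projections (so QR = 0)."""
    rng = np.random.default_rng(seed)
    R = np.zeros((N, N))
    R[0, 0] = 1.0                              # projection onto e_1
    Q = np.eye(N) - R                          # projection onto its orthogonal complement
    W = sample_wishart(N, n, n_sim, rng)
    mc = np.trace(W @ Q @ W @ R, axis1=1, axis2=2).mean()
    return mc, n * np.trace(Q) * np.trace(R)


rel_err = check_second_moment()
mc_trace, exact_trace = check_trace_with_orthogonal_projections()
print(f"relative error in E[WQW]: {rel_err:.3f}")
print(f"E tr(WQWR): Monte Carlo {mc_trace:.2f}, formula {exact_trace:.1f}")
```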

Taking Q = P⊥ and and noting that QR = 0, by (A.18) we have

| (A.19) |

Combining (A.17) with (A.19) in computing the expectation of (A.16), we obtain the expression (A.11) for 𝔼‖HνSθν‖2.

A.2. Bound for ‖S − Σ‖

We begin with the decomposition of the sample covariance matrix S. Introduce the abbreviation $\xi_\mu = n^{-1}Z\upsilon_\mu$. Then

| (A.20) |

and from (2.1), with $V_{\mu\mu'} = |\langle \upsilon_\mu, \upsilon_{\mu'}\rangle_n - \delta_{\mu\mu'}|$ and δμμ′ denoting the Kronecker delta,

| (A.21) |

We establish a bound for ‖S − Σ‖ that holds with probability converging to one. Introduce the notation

Fix c > 0 and assume that γn ≤ 1. Initially, we assume that 2cηn ≤ 1/2, which is equivalent to $N \ge 16c^2\log n$.

We introduce some events of high probability under which (A.21) may be bounded. Thus, let D1 be the intersection of the events

| (A.22) |

and let D2 be the event

| (A.23) |

To bound the probability of $D_1^c$, in the case of the first line of (A.22), use (A.3) and (A.4) with ε = 2cη̄n. For the second, use (A.5) with $b = c^2\log n$. For the third, observe that $Z\upsilon_\mu/\|\upsilon_\mu\| \sim N_N(0, I)$, and again use (A.4), this time with ε = 2cηn < 1/2. For $D_2^c$, we appeal to Lemma A.1. As a result,

| (A.24) |

To bound (A.21) on the event D1 ∩ D2, we use bounds (A.22) and (A.23), and also write

| (A.25) |

say, and also noting that η̄n ≤ ηn, we obtain

| (A.26) |

Now combine the bound 2cηn < 1/2 with Hn ≤ 3/2 and to conclude that on D1 ∩ D2,

and so

since N/n → 0.

Let us now turn to the case $N \le 16c^2\log n$. We can replace the last event in (A.22) by the event

and the second bound holds for sufficiently large n, using the bound for any a > 0. In (A.25), we replace the term $(1 + 2c\eta_n)^{1/2}$ by $(2c^2\log n + 2\log N)^{1/2}$, which may be bounded by . As soon as $N \ge 4c^2$, we also have and so . This leads to a bound for the analog of Hn in (A.26) and so to

When $N \le 16c^2\log n$, we have and so

To summarize, choose , say, so that $D_n = D_1 \cap D_2$ has probability at least $1 - O(n^{-2})$, and on Dn we have εnν → 0. This completes the proof of (A.10).

Theorem 2.1 now follows from noticing that L(θ̂ν, θν) ≤ 2 and so

and an additional computation using (A.16) which shows that

A.3. Lower bound on the minimax risk

In this subsection, we prove Theorems 3.1 and 3.2. The key idea in the proofs is to utilize the geometry of the parameter space in order to construct appropriate finite-dimensional subproblems for which bounds are easier to obtain. We first give an overview of the general machinery used in the proof.

Risk bounding strategy

A key tool for deriving lower bounds on the minimax risk is Fano’s lemma. In this subsection, we use superscripts on vectors θ as indices, not exponents. First, we fix ν ∈ {1, … , M} and then construct a large finite subset ℱ of (C1, … , CM), such that for some δ > 0, to be chosen

This property will be referred to as “4δ-distinguishability in θν.” Given any estimator θ̂ of θ based on data Xn = (X1, … , Xn), define a new estimator ϕ(Xn) = θ*, whose M components are given by , where θ̂μ is the μth column of θ̂. Then, by Chebyshev’s inequality and the 4δ-distinguishability in θν, it follows that

| (A.27) |

The task is then to find an appropriate lower bound for the quantity on the right-hand side of (A.27). For this, we use the following version of Fano’s lemma, due to Birgé (2001), modifying a result of Yang and Barron (1999), pages 1570 and 1571.

Lemma A.5. Let {Pθ : θ ∈ Θ} be a family of probability distributions on a common measurable space, where Θ is an arbitrary parameter set. Let $p_{\max}$ be the minimax risk over Θ with the loss function $L'(\theta, \theta') = \mathbf{1}\{\theta \neq \theta'\}$,

where T denotes an arbitrary estimator of θ with values in Θ. Then for any finite subset ℱ of Θ, with elements θ1, … ,θJ where J = |ℱ |,

| (A.28) |

where Pi = ℙθi, and Q is an arbitrary probability distribution, and K(Pi,Q) is the Kullback–Leibler divergence of Q from Pi.
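Bounds of this type are used by computing the average divergence to a reference distribution Q, comparing it with log J, and converting the resulting testing error into an estimation risk through a Markov-type step such as (A.27). The sketch below implements the classical textbook form of Fano's inequality, $p_{\max} \ge 1 - [J^{-1}\sum_i K(P_i, Q) + \log 2]/\log J$, which holds for any Q because the mutual information under a uniform prior is at most the average divergence to Q. This is not Birgé's refinement (A.28), whose display was not recovered and whose constants may differ; the function name is hypothetical.

```python
import math


def fano_lower_bound(kl_to_reference):
    """Classical Fano lower bound on max_i P_i(T != theta_i) over J hypotheses.

    kl_to_reference: the divergences K(P_i, Q), i = 1..J, for one fixed reference Q.
    Returns 1 - (mean_i K(P_i, Q) + log 2) / log J, truncated at 0.
    """
    J = len(kl_to_reference)
    if J < 2:
        raise ValueError("need at least two hypotheses")
    mean_kl = sum(kl_to_reference) / J
    return max(0.0, 1.0 - (mean_kl + math.log(2.0)) / math.log(J))


# Illustration: J = 1000 hypotheses, each at divergence 2.0 from Q.
p_err = fano_lower_bound([2.0] * 1000)
print(f"error probability >= {p_err:.3f}")
# With 4*delta-distinguishable hypotheses, a Markov-type step then gives risk >= delta * p_err.
```

The bound is useful only when log J grows faster than the average divergence, which is why the constructions below aim for exponentially many hypotheses at bounded divergence.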

The following lemma, proven in Appendix B, gives the Kullback–Leibler discrepancy corresponding to two different values of the parameter.

Lemma A.6. Let be two parameters (i.e., for each j, are orthonormal). Let Σj denote the matrix given by (2.1) with θ = θj (and σ = 1). Let Pj denote the joint probability distribution of n i.i.d. observations from N(0, Σj) and let η(λ) = λ/(1 + λ). Then the Kullback–Leibler discrepancy of P2 with respect to P1 is given by

| (A.29) |

.

Geometry of the hypothesis set and sphere packing

Next, we describe the construction of a large set of hypotheses ℱ satisfying the 4δ-distinguishability condition. Our construction is based on the well-studied sphere-packing problem, namely, how many unit vectors can be packed onto $\mathbb{S}^{m-1}$ with a given minimal pairwise distance.

Here we follow the construction due to Zong (1999) (page 77). Let m be a large positive integer, and m0 = ⌊2m/9⌋. Define as the maximal set of points of the form z = (z1, … , zm) in $\mathbb{S}^{m-1}$ such that the following is true:

and

For any m ≥ 1, the maximal number of points lying on $\mathbb{S}^{m-1}$ such that any two points are at distance at least 1 is called the kissing number of an m-sphere. Zong (1999) used the construction described above to derive a lower bound on the kissing number, by showing that for m large.
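To make the packing construction concrete, the sketch below greedily collects points of $\mathbb{S}^{m-1}$ with exactly $m_0 = \lfloor 2m/9 \rfloor$ nonzero coordinates, each equal to $\pm 1/\sqrt{m_0}$. This is our reading of Zong's construction, consistent with the statement in the proof of Theorem 3.1 that exactly m0 coordinates are nonzero. A candidate is kept only if it lies at distance at least 1 from every point already kept; for unit vectors $\|z - z'\|^2 = 2 - 2\langle z, z'\rangle$, so this is the condition $\langle z, z'\rangle \le 1/2$. A random greedy pass need not reach the maximal set of the text, so its size only illustrates the construction; the function name and parameters are hypothetical.

```python
import numpy as np


def greedy_sparse_packing(m, n_candidates=3000, seed=0):
    """Greedy random packing of sparse sign vectors on the unit sphere S^{m-1}.

    Each candidate has exactly m0 = floor(2m/9) nonzero entries, each +-1/sqrt(m0).
    A candidate is kept only if <z, z'> <= 1/2 (i.e. ||z - z'|| >= 1) for all kept z'.
    """
    m0 = (2 * m) // 9
    if m0 < 1:
        raise ValueError("m must be at least 5 so that m0 >= 1")
    rng = np.random.default_rng(seed)
    kept = np.empty((n_candidates, m))
    count = 0
    for _ in range(n_candidates):
        z = np.zeros(m)
        support = rng.choice(m, size=m0, replace=False)
        z[support] = rng.choice([-1.0, 1.0], size=m0) / np.sqrt(m0)
        if count == 0 or np.max(kept[:count] @ z) <= 0.5 + 1e-12:
            kept[count] = z
            count += 1
    return kept[:count], m0


points, m0 = greedy_sparse_packing(m=18)
print(f"m = 18, m0 = {m0}: kept {len(points)} points")
```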

Next, for m < N − M we use the sets to construct our hypothesis set ℱ of the same size, . To this end, let denote the standard basis of ℝN. Our initial set θ0 is composed of the first M standard basis vectors, θ0 = [e1: … :eM]. Then, for fixed ν, and values of m, r yet to be determined, each of the other hypotheses θj ∈ ℱ has the same vectors as θ0 for k ≠ ν. The difference is that the νth vector is instead given by

| (A.30) |

where , is an enumeration of the elements of . Thus perturbs eν in subsets of the fixed set of coordinates {M + 1, … , M + m}, according to the sphere-packing construction for $\mathbb{S}^{m-1}$.

The construction ensures that are orthonormal for each j. In particular, , vanishes unless μ = μ′, and so (A.29) simplifies to

| (A.31) |

for j = 1, … , |ℱ |. Finally, , and so by construction, for any θj, θk ∈ ℱ with j ≠ k, we have

| (A.32) |

In other words, the set ℱ is $r^2$-distinguishable in θν. Consequently, combining (A.27), (A.28) and (A.31) (taking Q = Pθ0 in Lemma A.5), we have

| (A.33) |

with

| (A.34) |
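The displays (A.30) and (A.31) were not recovered. The sketch below assumes the form suggested by the surrounding text, $\theta_\nu^j = (1 - r^2)^{1/2} e_\nu + r\sum_l z_l^j e_{M+l}$, and checks three consequences numerically: the columns of each θ^j are orthonormal, distinct hypotheses satisfy $\|\theta_\nu^j - \theta_\nu^k\|^2 \ge r^2$, and the divergence between $P_{\theta^j}$ and $P_{\theta^0}$, computed from the general Gaussian formula, is the same for every j and equals $n r^2 \lambda_\nu^2 / [2(1 + \lambda_\nu)]$ under our assumed form. For brevity the packing points are a tiny hand-picked set of unit vectors at pairwise distance at least 1, not the ⌊2m/9⌋ construction; all names and parameter values are hypothetical.

```python
import numpy as np


def gaussian_kl(S1, S2, n=1):
    """K(P1, P2) for n i.i.d. draws, P1 = N(0, S1), P2 = N(0, S2)."""
    N = S1.shape[0]
    M = np.linalg.solve(S2, S1)                # S2^{-1} S1
    _, logdet = np.linalg.slogdet(M)
    return 0.5 * n * (np.trace(M) - N - logdet)


def spiked_cov(theta, lams):
    """Sigma = I + sum_mu lambda_mu theta_mu theta_mu^T (noise level sigma = 1)."""
    return np.eye(theta.shape[0]) + theta @ np.diag(lams) @ theta.T


def hypothesis(z, N, M, nu, r):
    """Assumed form of (A.30): replace column nu of [e_1 : ... : e_M] by
    sqrt(1 - r^2) e_nu + r * sum_l z_l e_{M+l}  (0-based indices here)."""
    theta = np.eye(N)[:, :M].copy()
    col = np.zeros(N)
    col[nu] = np.sqrt(1 - r**2)
    col[M:M + len(z)] = r * z
    theta[:, nu] = col
    return theta


N, M, nu, r, n = 12, 2, 0, 0.3, 50
lams = np.array([4.0, 2.0])
s = 1 / np.sqrt(2)
# Hand-picked unit vectors in R^4 with pairwise distance sqrt(2) >= 1 (illustration only).
Z = np.array([[s, s, 0, 0], [0, 0, s, s], [s, -s, 0, 0], [0, 0, s, -s]])
thetas = [hypothesis(z, N, M, nu, r) for z in Z]
theta0 = np.eye(N)[:, :M]

kls = [gaussian_kl(spiked_cov(t, lams), spiked_cov(theta0, lams), n) for t in thetas]
predicted = n * r**2 * lams[nu]**2 / (2 * (1 + lams[nu]))
print("KL to theta^0:", np.round(kls, 6), " predicted:", round(predicted, 6))
```

The divergence depends on the hypothesis only through r, which is what lets a single choice of r control every term in the Fano bound at once.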

Proof of Theorem 3.1. It remains to specify m and r ∈ (0, 1). Let be the sphere-packing set defined above, and let ℱ be the corresponding set of hypotheses, defined via (A.30).

Let $c_1 = \log(9/8)$; then $\log|\mathcal{F}| \ge b_m c_1 m$, where $b_m \to 1$ as m → ∞. Now choose r = r(m) so that a(r, ℱ) ≤ 3/4 asymptotically in the bound (A.33). To accomplish this, set

| (A.35) |

Indeed, inserting this into (A.34) we find that

Therefore, so long as m ≥ m*, an absolute constant, we have a(r, ℱ) ≤ 3/4 and hence .

We also need to ensure that . Since exactly m0 coordinates are nonzero out of {M + 1, … , M + m},

, where $a_q = (2/9)^{1-q/2}$. A sufficient condition for is that

| (A.36) |

Our choice (A.35) fixes $r^2/m$, and so, recalling that , the previous display becomes

To ensure simultaneously that (i) $r^2 < 1$, (ii) m does not exceed N − M, the number of available coordinates, and (iii) , we set

Recalling (3.1), (3.2) and (3.3), we have

To complete the proof of Theorem 3.1, set $B_1 = [(m^* + 1)/m^*]\,c_1/16$ and observe that

Proof of Theorem 3.2. The construction of the set of hypotheses in the proof of Theorem 3.1 considered a fixed set of potential nonzero coordinates, namely {M + 1, … ,M + m}. However, in the sparse setting, when the effective dimension is significantly smaller than the nominal dimension N, it is possible to construct a much larger collection of hypotheses by allowing the set of nonzero coordinates to span all remaining coordinates {M + 1, … , N}.

In the proof of Theorem 3.2 we shall use the following lemma, proven in Appendix B. Call A ⊂ {1, … , N} an m-set if |A| = m.

Lemma A.7. Let k be fixed, and let 𝒜k be a maximal collection of m-sets such that the intersection of any two members has cardinality at most k − 1. Then, necessarily,

| (A.37) |

Let k = [m0/2] + 1 and m0 = [βm] with 0 < β < 1. Suppose that m, N → ∞ with m = o(N). Then

| (A.38) |

where ε(x) = −x log x − (1 − x) log(1 − x) is the Shannon entropy function.

Let π be an m-set contained in {M + 1, … , N}, and construct a family ℱπ by modifying (A.30) to use the set π rather than the fixed set {M +1, … , M + m} as in Theorem 3.1,

We will choose m below to ensure that . Let 𝒫 be a collection of sets π such that, for any two sets π and π′ in 𝒫, the set π ∩ π′ has cardinality at most m0/2. This ensures that the sets ℱπ are disjoint for π ≠ π′, since each is nonzero in exactly m0 + 1 coordinates. This construction also ensures that

Define ℱ ≔ ∪π∈𝒫 ℱπ. Then

| (A.39) |

By Lemma A.7, there is a collection 𝒫 such that |𝒫| is at least exp([Nε(m/9N)− 2mε(1/9)](1 + o(1))). Since ε(x) ≥ − x log x, it follows from (A.39) that

since $m = O(N^{1-\alpha})$.

Proceeding as for Theorem 3.1, we have $\log|\mathcal{F}| \ge b_m(\alpha/9)\,m\log N$, where $b_m \to 1$. Let us set (with m still to be specified)

| (A.40) |

Again, so long as m ≥ m*, we have a(r, ℱ) ≤ 3/4 and . We also need to ensure that , which as before is implied by (A.36). Substituting (A.40) puts this into the form

To ensure simultaneously that (i) $r^2 < 1$, (ii) m does not exceed N − M, the number of available coordinates, and (iii) , we set

The assumption that for some α ∈ (0, 1) is equivalent to the assertion $\bar m_\nu = O(N^{1-\alpha})$, and so, for n sufficiently large, $\bar m_\nu \le N - M$ and hence $m' = \bar m_\nu$ so long as . Theorem 3.2 now follows from our bound on .

A.4. Lower bound on the risk of the DT estimator

To prove Theorem 4.1, assume w.l.o.g. that $\langle\hat\theta_{1,DT}, \theta_1\rangle > 0$, and decompose the loss as

| (A.41) |

where I = I (γn) is the set of coordinates selected by the DT scheme and θ1, I denotes the subvector of θ1 corresponding to this set. Note that, in (A.41), the first term on the right can be viewed as a bias term while the second term can be seen as a variance term.

We choose a particular vector θ1 = θ* ∈ Θq(C) so that

| (A.42) |

This, together with (A.41), proves Theorem 4.1, since the worst-case risk is clearly at least as large as (A.42). Accordingly, set $r_n = \bar C^{q/2} n^{-(1-q/2)/4}$, where $\bar C^q = C^q - 1$. Since $C^q n^{q/4} = o(n^{1/2})$, we have $r_n = o(1)$, and so for sufficiently large n we can take $r_n < 1$ and define

where $m_n = \lfloor (1/2)\bar C^q n^{q/4} \rfloor$. Then by construction θ* ∈ Θq(C), since

where the last inequality is due to q ∈ (0, 2) and $\bar C^q = C^q - 1$.

For notational convenience, let . Recall that DT selects all coordinates k for which $S_{kk} > 1 + \alpha_n$. Since , coordinate k is not selected with probability

| (A.43) |

, where . Notice that $p_k = p_2$ for $k = 2, \ldots, m_n + 1$ and that $\theta_{*,k} = 0$ for $k > m_n + 1$. Hence,

Now, use bound (A.3) to show that in (A.43) and hence that p2 → 1. Indeed , and so

for sufficiently large n. Hence, , and the proof is complete.
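The mechanism behind Theorem 4.1 can be seen in a small simulation. The sketch below draws n observations from $N(0, I + \lambda\theta\theta^T)$ with a vector of the shape used above (one large coordinate plus m small coordinates of size $r/\sqrt{m}$), applies diagonal thresholding (keep the coordinates with $S_{kk} > 1 + \alpha_n$, then take the leading eigenvector of the selected block of S), and reports the loss together with the bias term $\|\theta_{I^c}\|^2$ of (A.41). The threshold $\alpha_n = \sqrt{2\log N / n}$, the known zero mean, the sample sizes and the function name `dt_estimate` are our own illustrative choices, not the paper's. Because the estimate vanishes off the selected set, the loss can never fall below the bias term.

```python
import numpy as np


def dt_estimate(X, alpha):
    """Diagonal thresholding: keep coordinates with S_kk > 1 + alpha, then return the
    leading eigenvector of the selected block of S (zero elsewhere) and the selected set."""
    n, N = X.shape
    S = X.T @ X / n                            # sample covariance, mean assumed known (zero)
    selected = np.flatnonzero(np.diag(S) > 1 + alpha)
    theta_hat = np.zeros(N)
    if selected.size > 0:
        evals, evecs = np.linalg.eigh(S[np.ix_(selected, selected)])
        theta_hat[selected] = evecs[:, -1]     # eigh sorts ascending, so the last is leading
    return theta_hat, selected


rng = np.random.default_rng(4)
N, n, lam, m, r = 500, 200, 2.0, 20, 0.5
theta = np.zeros(N)
theta[0] = np.sqrt(1 - r**2)
theta[1:m + 1] = r / np.sqrt(m)                # many small coordinates, each r / sqrt(m)
# X_i = sqrt(lam) * u_i * theta + noise, so Cov(X_i) = I + lam * theta theta^T
X = np.sqrt(lam) * rng.standard_normal((n, 1)) * theta + rng.standard_normal((n, N))

alpha = np.sqrt(2 * np.log(N) / n)             # illustrative threshold only
theta_hat, selected = dt_estimate(X, alpha)
if theta_hat @ theta < 0:
    theta_hat = -theta_hat                     # align signs so that <theta_hat, theta> >= 0
loss = np.sum((theta_hat - theta) ** 2)
unselected = np.setdiff1d(np.arange(N), selected)
bias = np.sum(theta[unselected] ** 2)
print(f"selected {selected.size} coords, loss {loss:.3f}, bias term {bias:.3f}")
```

In this configuration each small coordinate raises $E\,S_{kk}$ only to $1 + \lambda r^2/m$, well below the threshold, so nearly all of the $r^2$ mass is missed.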

APPENDIX B: PROOFS OF RELEVANT LEMMAS

B.1. Proof of Lemma A.1

We use the following result on extreme eigenvalues of Wishart matrices from Davidson and Szarek (2001).

Lemma B.1. Let Z be a p × q matrix of i.i.d. N (0, 1) entries with p ≤ q. Let smax (Z) and smin(Z) denote the largest and the smallest singular value of Z, respectively. Then

| (B.1) |

| (B.2) |

Observe first that

Let s± denote the maximum and minimum singular values of $n^{-1/2}Z$. Define for t > 0. Then since , and letting $\Delta_n(t) := 2\gamma(t) + \gamma(t)^2$, we have

We apply Lemma B.1 with p = N and q = n, and get

We observe that, with γn = N/n ≤ 1,

| (B.3) |

Now choose t so that the tail probability is at most $2e^{-nt^2/2} = 2n^{-c^2}$. The result is now proved, since if , then $t(4 + t) \le c\,t_n$.
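The deviation bound can be illustrated numerically. Since (B.1) and (B.2) were not recovered, we use the standard form of the Davidson–Szarek bounds: for the singular values of $n^{-1/2}Z$, $s_+ \le 1 + \sqrt{\gamma_n} + t$ and $s_- \ge 1 - \sqrt{\gamma_n} - t$, each with probability at least $1 - e^{-nt^2/2}$. On both events (and when $\sqrt{\gamma_n} + t \le 1$), $\|n^{-1}ZZ^T - I\| \le \Delta_n(t)$ with $\gamma(t) = \sqrt{\gamma_n} + t$, which is our reading of the definition missing from the text. The sketch compares simulated deviations with $\Delta_n(t)$ and with the limit $2\sqrt{\gamma} + \gamma$; sizes and seed are arbitrary.

```python
import numpy as np


def wishart_deviations(N, n, n_rep=200, seed=0):
    """Simulated values of ||n^{-1} Z Z^T - I|| for N x n matrices Z with i.i.d. N(0, 1) entries."""
    rng = np.random.default_rng(seed)
    devs = np.empty(n_rep)
    for i in range(n_rep):
        Z = rng.standard_normal((N, n))
        evals = np.linalg.eigvalsh(Z @ Z.T / n)
        devs[i] = np.max(np.abs(evals - 1.0))  # spectral norm of a symmetric matrix
    return devs


N, n = 50, 500
gamma = N / n
t = np.sqrt(2 * np.log(10) / n)                # makes 2 * exp(-n t^2 / 2) = 0.2
g = np.sqrt(gamma) + t
delta = 2 * g + g**2                           # Delta_n(t) = 2 gamma(t) + gamma(t)^2
devs = wishart_deviations(N, n)
limit = 2 * np.sqrt(gamma) + gamma
exceed = np.mean(devs > delta)
print(f"mean deviation {devs.mean():.3f}  (limit {limit:.3f}),  Delta_n(t) = {delta:.3f}")
print(f"fraction exceeding Delta_n(t): {exceed:.3f}  (bound {2 * np.exp(-n * t**2 / 2):.2f})")
```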

B.2. Proof of Lemma A.4

Paul (2005) introduced the quantities

| (B.4) |

,

| (B.5) |

and showed that the residual term Rr can be bounded by

| (B.6) |

where the second bound holds only if 6r .

We now show that if Δ̄r ≤ 1/4, then we can simplify bound (B.6) to obtain (A.7). To see this, note that $|\lambda_r(A + B) - \lambda_r(A)| \le \|B\|$ and that $\|H_r(A)\| \le [\min_{j \neq r}|\lambda_j(A) - \lambda_r(A)|]^{-1}$, so that

Now, defining δ ≔ 2 Δ̄r (1 + 2Δ̄r) and β ≔ ‖Hr (A)Bpr (A)‖, we have , and the bound (B.6) may be expressed as

For x > 0, we have min{5x/2, 1 + 1/x} ≤ 5/2. Further, if Δ̄r < 1/4, then δ = 2Δ̄r(1 + 2Δ̄r) < 3Δ̄r < 3/4, and so we conclude that

B.3. Proof of Lemma A.6

Recall that, if distributions F1 and F2 have density functions f1 and f2, respectively, such that the support of f1 is contained in the support of f2, then the Kullback–Leibler discrepancy of F2 with respect to F1, to be denoted by K(F1, F2), is given by

| (B.7) |

For n i.i.d. observations Xi, i = 1, … , n, the Kullback–Leibler discrepancy is just n times the Kullback–Leibler discrepancy for a single observation. Therefore, without loss of generality we take n = 1. Since

| (B.8) |

the log-likelihood function for a single observation is given by

| (B.9) |

From (B.9), we have

which equals the RHS of (A.29), since the columns of θj are orthonormal for each j = 1, 2.
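Since (A.29) itself was not recovered, we record the closed form that the computation above produces under our own algebra. With $\Sigma_j = I + \sum_\mu \lambda_\mu \theta_\mu^j \theta_\mu^{jT}$ one has $\Sigma_2^{-1} = I - \sum_\mu \eta(\lambda_\mu)\theta_\mu^2\theta_\mu^{2T}$; the log-determinant term vanishes because Σ1 and Σ2 have the same eigenvalues; and $\lambda - \eta(\lambda) = \lambda\eta(\lambda)$. Together these give $K(P_1, P_2) = \frac{n}{2}[\sum_\mu \lambda_\mu\eta(\lambda_\mu) - \sum_{\mu,\mu'} \eta(\lambda_\mu)\lambda_{\mu'}\langle\theta_\mu^2, \theta_{\mu'}^1\rangle^2]$. The sketch checks this against the general Gaussian divergence for random orthonormal frames; the helper names are hypothetical.

```python
import numpy as np


def gaussian_kl(S1, S2, n=1):
    """K(P1, P2) for n i.i.d. draws, P1 = N(0, S1), P2 = N(0, S2)."""
    N = S1.shape[0]
    M = np.linalg.solve(S2, S1)                # S2^{-1} S1
    _, logdet = np.linalg.slogdet(M)
    return 0.5 * n * (np.trace(M) - N - logdet)


def closed_form_kl(theta1, theta2, lams, n=1):
    """(n/2) [sum_mu lam_mu eta_mu - sum_{mu,mu'} eta_mu lam_mu' <theta2_mu, theta1_mu'>^2]."""
    eta = lams / (1 + lams)
    overlaps = (theta2.T @ theta1) ** 2        # entry (mu, mu') = <theta2_mu, theta1_mu'>^2
    return 0.5 * n * (np.sum(lams * eta) - eta @ overlaps @ lams)


def random_frame(N, M, rng):
    """N x M matrix with orthonormal columns (reduced QR of a Gaussian matrix)."""
    q, _ = np.linalg.qr(rng.standard_normal((N, M)))
    return q


rng = np.random.default_rng(5)
N, M, n = 8, 3, 25
lams = np.array([5.0, 2.0, 0.5])
t1, t2 = random_frame(N, M, rng), random_frame(N, M, rng)
S1 = np.eye(N) + t1 @ np.diag(lams) @ t1.T
S2 = np.eye(N) + t2 @ np.diag(lams) @ t2.T
direct = gaussian_kl(S1, S2, n)
formula = closed_form_kl(t1, t2, lams, n)
print(f"direct {direct:.6f}   closed form {formula:.6f}")
```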

B.4. Proof of Lemma A.7

Let 𝒫m be the collection of all m-sets of {1, … , N}; clearly $|\mathcal{P}_m| = \binom{N}{m}$. For any m-set A, let ℐ(A) denote the collection of “inadmissible” m-sets A′ for which |A ∩ A′| ≥ k. Clearly

If 𝒜k is maximal, then 𝒫m = ∪A∈𝒜k ℐ(A), and so (A.37) follows from the inequality

and rearrangement of factorials.
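The counting argument can be made concrete for small N. Every m-set meeting A in at least k elements contains some k-subset of A, so $|\mathcal{I}(A)| \le \binom{m}{k}\binom{N-k}{m-k}$; maximality then forces $\binom{N}{m} \le |\mathcal{A}_k|\binom{m}{k}\binom{N-k}{m-k}$. This is our reconstruction of the inequality behind (A.37), whose display was not recovered. The sketch builds a maximal collection greedily (a single greedy pass over all m-sets always ends at a maximal collection, since rejected sets stay blocked by members that are never removed) and compares its size with that lower bound; the function names are hypothetical.

```python
from itertools import combinations
from math import comb


def greedy_maximal_collection(N, m, k):
    """Greedily build a maximal family of m-subsets of {0, ..., N-1} whose pairwise
    intersections have size at most k - 1."""
    family = []
    for cand in combinations(range(N), m):
        c = set(cand)
        if all(len(c & a) <= k - 1 for a in family):
            family.append(c)
    return family


def counting_lower_bound(N, m, k):
    """C(N, m) / [C(m, k) C(N - k, m - k)], valid for any maximal family."""
    return comb(N, m) / (comb(m, k) * comb(N - k, m - k))


N, m, k = 12, 4, 2
family = greedy_maximal_collection(N, m, k)
print(f"greedy maximal family: {len(family)} sets; counting bound: {counting_lower_bound(N, m, k):.2f}")
```

For such small N the bound is weak; its value in the proof comes from the regime m = o(N), where it grows exponentially, as quantified by (A.38).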

Turning to the second part, we recall that Stirling’s formula shows that if k and N → ∞,

where $\zeta \in (1 - (6k)^{-1}, 1 + (12N)^{-1})$. The coefficient multiplying the exponent in is

under our assumptions, and this yields (A.38).

Footnotes

Supported by NSF Grants DMS-09-06812 and NIH R01 EB001988.

Supported by a grant from the Israeli Science Foundation (ISF).

Supported by the NSF Grants DMR 1035468 and DMS-11-06690.

Contributor Information

Aharon Birnbaum, School of Computer Science and Engineering, Hebrew University of Jerusalem, The Edmond J. Safra Campus, Jerusalem 91904, Israel. aharob01@cs.huji.ac.il.

Iain M. Johnstone, Department of Statistics, Stanford University, Stanford, California 94305, USA. imj@stanford.edu.

Boaz Nadler, Department of Computer Science and Applied Mathematics, Weizmann Institute of Science, P.O. Box 26, Rehovot 76100, Israel. boaz.nadler@weizmann.ac.il.

Debashis Paul, Department of Statistics, University of California, Davis, California 95616, USA. debashis@wald.ucdavis.edu.

REFERENCES

- Amini AA, Wainwright MJ. High-dimensional analysis of semidefinite relaxations for sparse principal components. Ann. Statist. 2009;37:2877–2921. MR2541450.

- Anderson TW. Asymptotic theory for principal component analysis. Ann. Math. Statist. 1963;34:122–148. MR0145620.

- Baik J, Silverstein JW. Eigenvalues of large sample covariance matrices of spiked population models. J. Multivariate Anal. 2006;97:1382–1408. MR2279680.

- Bickel PJ, Levina E. Covariance regularization by thresholding. Ann. Statist. 2008a;36:2577–2604. MR2485008.

- Bickel PJ, Levina E. Regularized estimation of large covariance matrices. Ann. Statist. 2008b;36:199–227. MR2387969.

- Birgé L. A new look at an old result: Fano’s lemma. Technical report. Univ. Paris; 2001. p. 6.

- Cai T, Liu W. Adaptive thresholding for sparse covariance matrix estimation. J. Amer. Statist. Assoc. 2011;106:672–684. MR2847949.

- Cai TT, Ma Z, Wu Y. Sparse PCA: Optimal rates and adaptive estimation. Technical report; 2012. Available at arXiv:1211.1309.

- Cai TT, Zhang C-H, Zhou HH. Optimal rates of convergence for covariance matrix estimation. Ann. Statist. 2010;38:2118–2144. MR2676885.

- Cai TT, Zhou HH. Minimax estimation of large covariance matrices under l1 norm. Statist. Sinica. 2012;22:1319–1378.

- D’Aspremont A, El Ghaoui L, Jordan MI, Lanckriet GRG. A direct formulation for sparse PCA using semidefinite programming. SIAM Rev. 2007;49:434–448 (electronic). MR2353806.

- Davidson KR, Szarek SJ. Local operator theory, random matrices and Banach spaces. In: Johnson WB, Lindenstrauss J, editors. Handbook of the Geometry of Banach Spaces, Vol. I. Amsterdam: North-Holland; 2001. pp. 317–366. MR1863696.

- El Karoui N. Operator norm consistent estimation of large-dimensional sparse covariance matrices. Ann. Statist. 2008;36:2717–2756. MR2485011.

- Johnstone IM. Chi-square oracle inequalities. In: de Gunst M, Klaassen C, van der Vaart A, editors. State of the Art in Probability and Statistics (Leiden, 1999). Institute of Mathematical Statistics Lecture Notes–Monograph Series, Vol. 36. Beachwood, OH: IMS; 2001. pp. 399–418. MR1836572.

- Johnstone IM, Lu AY. On consistency and sparsity for principal components analysis in high dimensions. J. Amer. Statist. Assoc. 2009;104:682–693. doi: 10.1198/jasa.2009.0121. MR2751448.

- Jolliffe IT. Principal Component Analysis. Berlin: Springer; 2002.

- Kato T. Perturbation Theory for Linear Operators. New York: Springer; 1980.

- Kritchman S, Nadler B. Determining the number of components in a factor model from limited noisy data. Chemometrics and Intelligent Laboratory Systems. 2008;94:19–32.

- Lu AY. Sparse principal components analysis for functional data. Ph.D. thesis. Stanford, CA: Stanford Univ.; 2002.

- Ma Z. Sparse principal component analysis and iterative thresholding. Technical report, Dept. Statistics, The Wharton School, Univ. Pennsylvania, Philadelphia, PA; 2011.

- Muirhead RJ. Aspects of Multivariate Statistical Theory. New York: Wiley; 1982. MR0652932.

- Nadler B. Finite sample approximation results for principal component analysis: A matrix perturbation approach. Ann. Statist. 2008;36:2791–2817. MR2485013.

- Nadler B. Discussion of “On consistency and sparsity for principal component analysis in high dimensions”. J. Amer. Statist. Assoc. 2009;104:694–697. doi: 10.1198/jasa.2009.0121. MR2751449.

- Onatski A. Determining the number of factors from empirical distribution of eigenvalues. Technical report. Columbia Univ.; 2006.

- Paul D. Nonparametric estimation of principal components. Ph.D. thesis. Stanford, CA: Stanford Univ.; 2005.

- Paul D. Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statist. Sinica. 2007;17:1617–1642. MR2399865.

- Paul D, Johnstone IM. Augmented sparse principal component analysis for high dimensional data. Technical report. Univ. California, Davis; 2007. Available at arXiv:1202.1242.

- Rothman AJ, Levina E, Zhu J. Generalized thresholding of large covariance matrices. J. Amer. Statist. Assoc. 2009;104:177–186. MR2504372.

- Shen H, Huang JZ. Sparse principal component analysis via regularized low rank matrix approximation. J. Multivariate Anal. 2008;99:1015–1034. MR2419336.

- Shen D, Shen H, Marron JS. Consistency of sparse PCA in high dimension, low sample size contexts. Technical report; 2011. Available at http://arxiv.org/pdf/1104.4289v1.pdf.

- Tipping ME, Bishop CM. Probabilistic principal component analysis. J. R. Stat. Soc. Ser. B Stat. Methodol. 1999;61:611–622. MR1707864.

- Van Trees HL. Optimum Array Processing. New York: Wiley; 2002.

- Vu VQ, Lei J. Minimax rates of estimation for sparse PCA in high dimensions. Technical report; 2012. Available at http://arxiv.org/pdf/1202.0786.pdf.

- Witten DM, Tibshirani R, Hastie T. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics. 2009;10:515–534. doi: 10.1093/biostatistics/kxp008.

- Yang Y, Barron A. Information-theoretic determination of minimax rates of convergence. Ann. Statist. 1999;27:1564–1599. MR1742500.

- Zong C. Sphere Packings. New York: Springer; 1999. MR1707318.

- Zou H, Hastie T, Tibshirani R. Sparse principal component analysis. J. Comput. Graph. Statist. 2006;15:265–286. MR2252527.