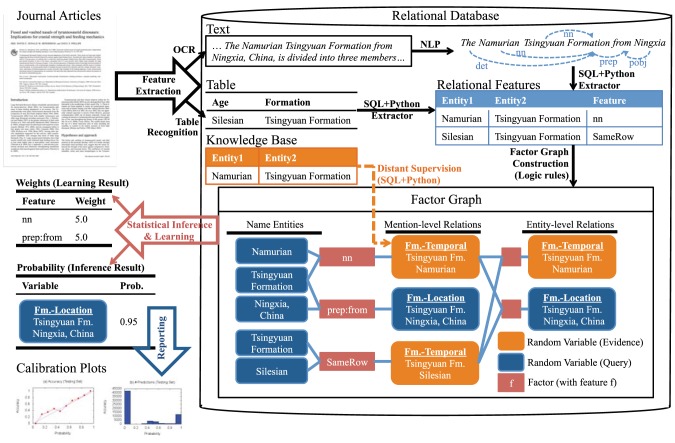

Figure 1. Schematic representation of the PaleoDeepDive workflow.

PDF documents (upper left) are processed by Optical Character Recognition (OCR), Natural Language Processing (NLP), table recognition, and other third-party software that parses and identifies document elements. Entities (in this example, geological formations and geographic locations) are identified and related to one another by features (e.g., parts of speech, positions in a table), which are recognized and extracted by SQL queries and scripts (e.g., written in Python). Factor graphs are then constructed over the entities and the candidate relationships among them. DeepDive estimates the weight of each feature from how it is expressed in the data. The final step is reporting, which produces an explicit probability estimate for each relationship, along with calibration reports that can be used to evaluate and improve the system iteratively.
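The last three steps of the workflow (feature weights, probability estimates, calibration) can be illustrated with a deliberately simplified sketch. It is not DeepDive's implementation: it assumes each candidate relationship is scored independently, so that the factor graph reduces to a logistic model over that candidate's features, and it fits the weights by plain stochastic gradient ascent rather than by DeepDive's own learning and inference procedures. The feature names (`prep_in`, `same_table_row`, `different_page`) and the training examples are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def probability(features, weights):
    """Probability that one candidate relationship is true, given its features.

    Assumption: candidates are independent, so the score is just the sum of
    the weights of the features observed for this candidate.
    """
    return sigmoid(sum(weights.get(f, 0.0) for f in features))


def learn_weights(examples, epochs=200, lr=0.5):
    """Estimate one weight per feature from labeled candidates.

    examples: list of (set of feature names, label), with label 0 or 1.
    """
    weights = {}
    for _ in range(epochs):
        for features, label in examples:
            error = label - probability(features, weights)
            for f in features:
                weights[f] = weights.get(f, 0.0) + lr * error
    return weights


def calibration(predictions, n_bins=5):
    """Group (probability, label) pairs into equal-width probability bins.

    Returns {bin index: (count, fraction of candidates that are true)}.
    In a well-calibrated system the fraction tracks the bin's probability.
    """
    bins = {}
    for p, label in predictions:
        b = min(int(p * n_bins), n_bins - 1)
        count, true_count = bins.get(b, (0, 0))
        bins[b] = (count + 1, true_count + label)
    return {b: (n, t / n) for b, (n, t) in bins.items()}


# Hypothetical labeled candidates for a (formation, location) relationship.
examples = [
    ({"same_sentence", "prep_in"}, 1),
    ({"same_sentence", "prep_in"}, 1),
    ({"same_table_row"}, 1),
    ({"different_page"}, 0),
    ({"same_sentence", "different_page"}, 0),
]

weights = learn_weights(examples)
report = calibration([(probability(f, weights), y) for f, y in examples])
```

Here `weights` plays the role of the learned feature weights, `probability` gives the per-relationship estimate that would be reported, and `report` is a crude stand-in for a calibration report. A candidate with no known features receives a probability of 0.5, which reflects that the model has no evidence either way.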