Abstract

Individuals spend a majority of their time in their home or workplace, and for many these places are sanctuaries. As society and technology advance, there is a growing interest in improving the intelligence of the environments in which we live and work. By filling home environments with sensors and collecting data during daily routines, researchers can gain insights on human daily behavior and the impact of behavior on the residents and their environments. In this article we provide an overview of the data mining opportunities and challenges that smart environments provide for researchers and offer some suggestions for future work in this area.

1. Introduction

Modeling and analyzing the behavior of humans is a valuable tool for computational systems. The modeling of human behavior provides insights on human habits and their influence on health, sustainability, and well being. Many automated systems that focus on the needs of a human require information about human behavior. Researchers are recognizing that human-centric technologies can assist with valuable functions such as home automation, health monitoring, energy efficiency, and behavioral interventions. The need for the development of such technologies is underscored by the aging of the population, the cost of health care, and the rising concern over resource usage and sustainability.

Until recently, gathering data about human behavior meant conducting surveys or placing observers in the sphere of other humans to record observations about human behavioral habits. Since the miniaturization of microprocessors, however, computing power has been embedded in familiar objects such as home appliances and mobile devices; it is gradually pervading almost every level of society. Advances in pervasive computing have resulted in the development of unobtrusive, wireless and inexpensive sensors for gathering information in everyday environments. When this information is analyzed using data mining techniques, this power can not only be integrated with our lives but it can provide context-aware, automated support in our everyday environments. One physical embodiment of such a system is a smart home. In a smart home, computer software plays the role of an intelligent agent that perceives the state of the physical environment and residents using information from sensors, reasons about this state using artificial intelligence techniques, and then selects actions that can be taken to achieve specified goals [1].

During perception, sensors that are embedded into the home generate readings while residents perform their daily routines. The sensor readings are collected by a computer network and stored in a database that the intelligent agent uses to generate more useful knowledge, such as patterns, predictions, and trends. Action execution moves in the opposite direction – the agent selects an action and stores this selection in the database. The action is transmitted through the network to the physical components that execute the command. The action changes the state of the environment and triggers a new perception/action cycle.
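The perception/action cycle described above can be illustrated with a minimal sketch. The class name, the sensor and device identifiers, and the single lighting rule are all hypothetical; a real agent would replace the rule with learned patterns and predictions, and would send the command over the network rather than updating its own state directly.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SmartHomeAgent:
    """Toy agent: readings and actions are both logged to a shared database."""

    database: List[dict] = field(default_factory=list)
    state: Dict[str, str] = field(default_factory=dict)

    def perceive(self, sensor_id: str, reading: str) -> None:
        # Perception: store the sensor reading and update the known state.
        self.database.append({"kind": "reading", "sensor": sensor_id, "value": reading})
        self.state[sensor_id] = reading

    def select_action(self) -> Optional[str]:
        # Reasoning: a hypothetical hand-written rule standing in for mined knowledge.
        if self.state.get("hall_motion") == "ON" and self.state.get("hall_light") == "OFF":
            return "hall_light:ON"
        return None

    def act(self, action: str) -> None:
        # Action execution: record the selection, then apply it to the environment.
        self.database.append({"kind": "action", "command": action})
        device, value = action.split(":")
        self.state[device] = value  # the changed state feeds the next cycle


agent = SmartHomeAgent()
agent.perceive("hall_light", "OFF")
agent.perceive("hall_motion", "ON")
action = agent.select_action()
if action is not None:
    agent.act(action)
```

After one cycle the hall light is on, so the same rule no longer fires until the environment changes again.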

Because of their role in understanding human behavior and providing context-aware services, research in smart environments has grown dramatically in the last decade. A number of physical smart environment testbeds have been implemented [2]–[10] and many of the resulting datasets are available for researchers to mine. The wealth of data that is generated by sensors in home environments is rich, complex, and full of insights on human behavior. In this article we highlight advances that have been made in data mining smart home data and offer ideas for continued research.

2. Smart Home Data

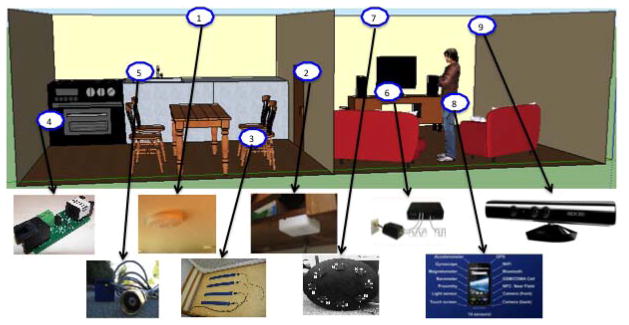

One reason why the vision of ambient intelligence is powerful is that it is becoming very accessible. Sensors are available off-the-shelf to localize movement in the home, provide readings for ambient light and temperature levels, and monitor interaction with doors, phones, water, appliances, and other items in the home (see Figure 1). There are two broad categories of smart home sensors based on where the sensors are placed, namely environmental and wearable sensors. Environmental sensors are embedded in the environment and can detect changes (and types of changes) to the environment as a result of human interactions. Examples of these sensors include video cameras, passive infrared (PIR) motion detectors, temperature sensors, and magnetic reed switches. On the other hand, wearable sensors are located on the smart home residents themselves and monitor changes in measurement values resulting from human motion and location. Accelerometers, gyroscopes, magnetometers, and Wi-Fi signal strength sensors are examples of wearable sensors. These categories of sensors measure specific nuances of human interactions in the smart home and thus are used for different applications. Together, the sensors provide an awareness of the resident context (location, preferences, activities), the physical context (lighting, temperature, house design), and the time context (hour of day, day of week, season, year).

Figure 1.

Example smart home sensors including 1) a passive infrared motion detector [4], 2) a magnetic reed switch door sensor [4], 3) a pressure sensor to detect if a chair or bed is occupied [18], 4) a temperature sensor to detect if the stove is being used [4], 5) a water usage sensor [19], 6) an electricity consumption sensor to detect appliance usage [20], 7) a microphone array [21], 8) a smart phone [22], and 9) a video camera [23].

Video camera based approaches (commonly referred to as vision based approaches) extract information regarding the interactions and changes taking place in the home by employing a cascade of techniques such as motion segmentation, human detection, action recognition and activity extraction [11]–[14]. These techniques analyze the captured visual data and generate human-interpretable information about activities and interactions in the home. While video cameras and microphone arrays provide a rich source of data, they are not always widely accepted as a means of monitoring human activities [15].

One type of sensor that is more readily accepted and is commonly found in smart homes is an infrared motion sensor. Wireless motion sensors such as those shown in Figure 1 contain a PIR sensor that measures nearby heat-based movement, a small chip that generates a message to send when the change is sufficiently large, and a radio to transmit the message to a central computer.

Other wireless sensors generate messages when different types of events are detected. Multiple motion sensors are embedded in the home environment and the sequence of motion sensor events resulting from heat-based (human) movement is used to extract information about interactions in the smart home, such as mobility and resident activities [16]. In addition, magnetic door closure sensors indicate when the magnetic circuit is completed or broken indicating door close or open status and shake sensors are often attached to objects and indicate when vibration is sensed, possibly signifying interaction with the object. Another popular home-based sensor relies on Radio Frequency Identification (RFID) technology [17]. RFID tags are affixed to objects of interest and register their presence within the range of an RFID reader. These tags can be used to detect some types of manipulation with the objects.

In addition to sensors that are embedded in the environment, data can be collected in the home by other means. A number of researchers and companies are developing technologies to recognize when devices are being used through their energy consumption [20], and similar means can be used to detect the use of water and gas [19]. Data collected from these sensors can be mined and used to develop applications that promote the design of energy-efficient appliances and sustainable resident behaviors.

Wearable sensors placed on the home residents can provide more fine-grained data through accelerometers that measure body movement [24]–[28]. Applications such as ambulation monitoring and tracking body energy expenditure process the data obtained from the wearable sensors. These sensors are positioned directly or indirectly on the resident’s body and track movements and physiological states of the resident. If residents carry smart phones with them in the home, a vast amount of data can be collected via the phone’s microphone, video, accelerometer, gyro, and magnetometer [29]–[33]. This data can be correlated to the different contexts associated with the resident such as location and activity.

The proliferation of sensors in the home results in large amounts of raw data that must be analyzed to extract relevant information. Data mining plays a pivotal role in the process of seeking bits and pieces of information that provide useful observations on resident behavior and the state of the home. Here we focus on a subset of data mining research on smart environment data that includes activity recognition and discovery, multiple resident tracking, correlation of behavior with other parameters of interest, and mining behaviors across a population.

3. Behavioral Complexity

In order to understand human behavior in a home environment, researchers need to be able to describe observations using a common vocabulary. In most cases, the vocabulary consists of commonly-understood activities that individuals perform in their home. However, there exists quite a disparity between the types of behaviors that are discovered, modeled, recognized and analyzed using data mining techniques.

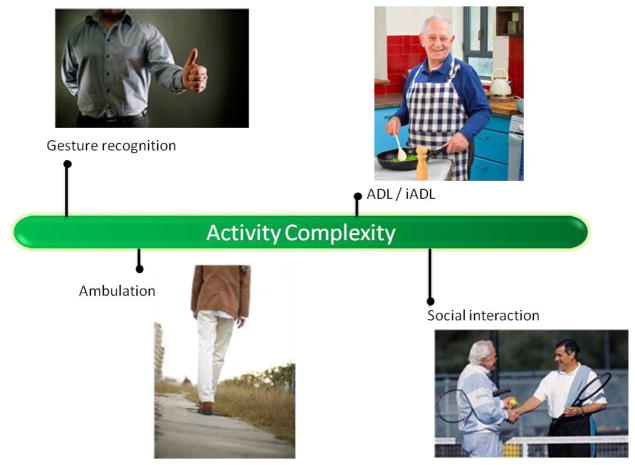

Figure 2 characterizes the continuum of activity complexities that have been explored in the literature, from the perspective of the difficulty of sensing, detecting, and performing the activities [33]. At the lowest level is the recognition of basic body poses or gestures. This has most widely been explored in the computer vision literature [34][35][36], but success has also been achieved with wearable sensors [26], and the resulting technologies have been used to control devices found in smart homes [37].

Figure 2.

Continuum of activity classes for recognition.

The next level of activity complexity is ambulation. In this case sensors (most commonly wearable sensors such as accelerometers) are used to recognize single-movement or cyclic-movement ambulation activities such as walking, running, sitting, standing, climbing, lying down, or falling [27][38]. More recently, researchers have been exploring the use of smart phones equipped with accelerometers and gyroscopes to track ambulatory movements and gesture patterns as well [29][30]. Most of these approaches have been able to model such simple activities and recognize them in real time, primarily by characterizing the constituent movements [39] using a sliding window protocol. Since the movement information related to ambulatory activities is typically well represented within a window of the data from accelerometers with a high sampling rate, a sliding window based approach is appropriate for real-time processing of these behavior classes.
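As a minimal sketch of the sliding window idea, the following function cuts a stream of samples into fixed-length, possibly overlapping windows. The function name, the window size, the step, and the sample values are illustrative assumptions and are not taken from the cited work.

```python
from typing import Iterator, List, Sequence


def sliding_windows(samples: Sequence[float], size: int, step: int) -> Iterator[List[float]]:
    """Yield fixed-length windows; consecutive windows start `step` samples apart."""
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    for start in range(0, len(samples) - size + 1, step):
        yield list(samples[start:start + size])


# Hypothetical single-axis accelerometer readings (arbitrary units).
readings = [0.1, 0.3, 0.2, 0.9, 1.1, 1.0, 0.2, 0.1]
windows = list(sliding_windows(readings, size=4, step=2))
```

With a step smaller than the window size the windows overlap, which is a common choice when a movement may straddle a window boundary. Trailing samples that do not fill a complete window are dropped in this sketch.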

As we move along the continuum, the classes of activities become more complex. For example, researchers are very interested in using smart home technologies to monitor the ability of residents to perform important Activities of Daily Living (ADLs) and instrumental ADLs (iADLs) because of their role in health management and living independently [40]–[44]. These activities include common daily tasks such as cooking, grooming, and taking medicine. Unlike the previous activity classes, ADL activities are complex, containing sequences and cycles of subtasks. These activities are an expression of the interaction between different objects and humans through complex movements. A denser sensing infrastructure is required to detect and recognize these activities. In fact, some researchers explore representing such activities using formal grammars [33], [45], [46]. The individual symbols in these grammars actually represent entire activities from a lower complexity class such as gestures and ambulation; ADL activities can be described as a combination of such activity classes.

Finally, we reach one of the most complex activity classes, that of social interaction. In smart homes, monitoring social activities and interactions has received less attention but is widely recognized as being fundamental to human health. Social signals are recognized as determinants of human behavior [47], indicators of work productivity [48], and essential for cognitive and physical health [49], [50]. Recognizing interactions between individuals and group activities again relies on the ability to recognize the less complex classes of activities on the continuum. In addition, new types of subtasks are introduced in multi-person settings that introduce additional data mining challenges [51].

4. Activity Recognition

Activity recognition is a challenging and well researched problem. The goal of activity recognition is to map a sequence of sensor observations to a label indicating the activity class to which the sensor sequence belongs. Many data mining and machine learning techniques have been designed and evaluated for their ability to recognize activities in settings that range from scripted activities in laboratories to real-time detection of activities in everyday homes with multiple residents.

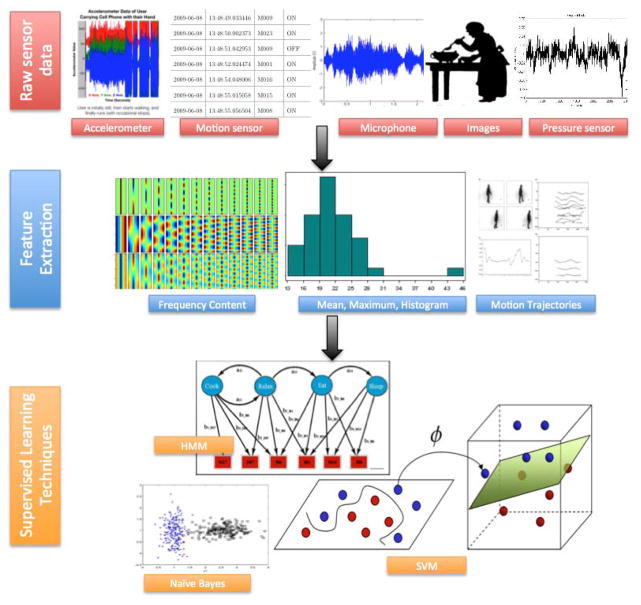

The typical framework for activity recognition algorithms is illustrated in Figure 3. The activity recognition problem is typically posed as a supervised learning problem with the goal of learning the mapping that associates features extracted from the sensor data to the underlying human activities that resulted in the sensor data in the first place.

Figure 3.

Commonly used framework for activity recognition.

Wearable sensor-based activity recognition approaches extract various statistical and spectral features such as mean, variance, maximum, spectral entropy, and dominant frequency component to characterize the sensor data [27], [30], [38], [39]. Typically fixed length window sizes (each containing a few seconds of data) are employed to sample the wearable sensors such as accelerometers. The data windows are then transformed into features. In applications such as tracking human ambulation, this is a robust approach as the information required to describe an activity is captured within a single window. The features are then used in conjunction with the activity labels for training supervised learning techniques.
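The feature extraction step can be sketched as follows for a single window of one accelerometer axis. This is an illustrative implementation under stated assumptions: it uses a naive discrete Fourier transform from the standard library rather than an optimized FFT, it removes the mean before computing the spectrum, and the function names and the synthetic 2 Hz test signal are hypothetical.

```python
import cmath
import math
from statistics import fmean, pvariance
from typing import Dict, List, Sequence


def power_spectrum(window: Sequence[float]) -> List[float]:
    """Power of each non-DC frequency bin up to Nyquist (naive DFT, O(n^2))."""
    n = len(window)
    mean = fmean(window)
    centered = [x - mean for x in window]
    powers = []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        powers.append(abs(coeff) ** 2)
    return powers


def window_features(window: Sequence[float], sample_rate_hz: float) -> Dict[str, float]:
    """Statistical and spectral features for one fixed-length window."""
    powers = power_spectrum(window)
    total = sum(powers)
    if total > 0:
        probs = [p / total for p in powers]
        # Spectral entropy: Shannon entropy of the normalized power spectrum.
        entropy = -sum(p * math.log2(p) for p in probs if p > 0)
        dominant_bin = max(range(len(powers)), key=powers.__getitem__) + 1
    else:
        entropy, dominant_bin = 0.0, 0
    return {
        "mean": fmean(window),
        "variance": pvariance(window),
        "maximum": max(window),
        "spectral_entropy": entropy,
        "dominant_frequency_hz": dominant_bin * sample_rate_hz / len(window),
    }


# Synthetic example: one second of a 2 Hz sinusoid sampled at 16 Hz.
window = [math.sin(2 * math.pi * 2 * t / 16) for t in range(16)]
features = window_features(window, sample_rate_hz=16.0)
```

For this periodic test signal the dominant frequency is 2 Hz and the spectral entropy is close to zero, because nearly all power falls in one bin. Irregular movement spreads power across bins and raises the entropy.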

Environmental sensor based activity recognition follows a similar track of transforming the raw sensor data into a richer representation of features. When dealing with PIR sensors, example features include the count of the sensor firings within the duration of an activity, contextual features such as location (for example, Bedroom, Dining room and Couch) of the PIR sensors, the sequence of sensor firings, motion trajectories estimated from videos as well as object interaction information extracted from images and RFID data [16], [52]. These features are then used to build activity models using supervised learning approaches.

The supervised learning techniques that have demonstrated success for the task of learning activity models can be broadly categorized into template matching (or transductive) techniques, generative approaches, and discriminative approaches. Researchers have explored these approaches for both wearable and environmental sensor based activity recognition. Template matching techniques employ a kNN classifier to label sensor sequences based on the distance between a test window and the training windows, computed using Euclidean distance in the case of a fixed window size [53] or dynamic time warping in the case of a varying window size [34]. Template matching techniques have focused on a static set of spatial-temporal features [34] as well as descriptions of resident trajectories [54].
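To make the template matching idea concrete, the sketch below labels a query sequence with the activity of its nearest training template under dynamic time warping, which allows sequences of different lengths to be compared. It is a simplified illustration: it uses k = 1, a one-dimensional signal, and absolute difference as the local cost, and the templates and query are made-up values rather than data from the cited studies.

```python
from typing import List, Sequence, Tuple


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic time warping distance with absolute difference as the local cost."""
    inf = float("inf")
    cost = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed alignments.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[len(a)][len(b)]


def nearest_template(query: Sequence[float],
                     templates: List[Tuple[str, Sequence[float]]]) -> str:
    """1-nearest-neighbour classification over labelled templates."""
    return min(templates, key=lambda item: dtw_distance(query, item[1]))[0]


# Hypothetical labelled templates and a longer, noisy query.
templates = [
    ("walking", [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]),
    ("sitting", [0.0, 0.0, 0.0, 0.0]),
]
query = [0.0, 0.9, 0.9, 0.1, 1.0, 0.0, 0.0, 1.1]
label = nearest_template(query, templates)
```

When all windows have the same length, `dtw_distance` could be swapped for a plain Euclidean distance, which is cheaper to compute.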

The simplest generative approach is the naïve Bayes classifier, which has been utilized with promising results for activity recognition in smart homes [11][27][55][52]. Naïve Bayes classifiers model all sensor readings as arising from a common causal source: the activity, as given by a discrete label. Using a bag-of-words approach, the dependence of the sensor observations on activity labels is modeled as a probabilistic function that can be used to identify the most likely activity given the set of observed sensor features. Despite the fact that these classifiers assume conditional independence of the features given the activity class, they are robust when large amounts of sample data are provided. Nevertheless, naïve Bayes classifiers do not explicitly model any temporal information, which is usually considered important in activity recognition.
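A bag-of-words naïve Bayes classifier over sensor events can be sketched in a few lines. The version below is a multinomial model with add-one (Laplace) smoothing, which is one common variant and not necessarily the one used in the cited studies; the class name, sensor event names, and training examples are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Sequence, Set, Tuple


class NaiveBayesActivityClassifier:
    """Multinomial naive Bayes over unordered sensor events."""

    def __init__(self, smoothing: float = 1.0) -> None:
        self.smoothing = smoothing
        self.class_counts: Counter = Counter()
        self.event_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocabulary: Set[str] = set()

    def fit(self, examples: Sequence[Tuple[Sequence[str], str]]) -> None:
        for events, activity in examples:
            self.class_counts[activity] += 1
            self.event_counts[activity].update(events)
            self.vocabulary.update(events)

    def log_posterior(self, events: Sequence[str], activity: str) -> float:
        """Unnormalized log P(activity | events), assuming independent events."""
        total_examples = sum(self.class_counts.values())
        score = math.log(self.class_counts[activity] / total_examples)
        total_events = sum(self.event_counts[activity].values())
        denom = total_events + self.smoothing * len(self.vocabulary)
        for event in events:
            score += math.log((self.event_counts[activity][event] + self.smoothing) / denom)
        return score

    def predict(self, events: Sequence[str]) -> str:
        return max(self.class_counts, key=lambda a: self.log_posterior(events, a))


# Hypothetical labelled sensor-event sequences.
training = [
    (["kitchen_motion", "stove_on", "cabinet_door", "kitchen_motion"], "cooking"),
    (["kitchen_motion", "fridge_door", "stove_on"], "cooking"),
    (["bedroom_motion", "bed_pressure"], "sleeping"),
    (["bedroom_motion", "bed_pressure", "bed_pressure"], "sleeping"),
]
model = NaiveBayesActivityClassifier()
model.fit(training)
prediction = model.predict(["stove_on", "kitchen_motion"])
```

Because the events are treated as an unordered bag, reordering the input does not change the prediction, which illustrates the lack of temporal modeling noted above.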

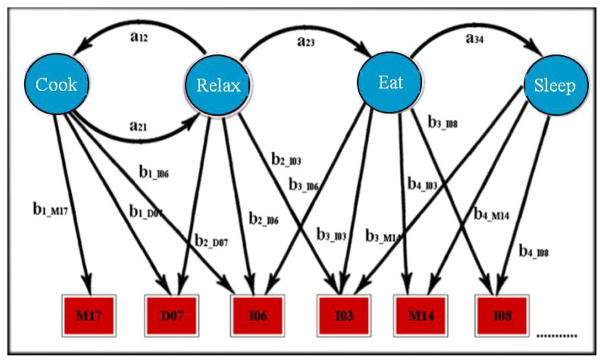

The hidden Markov model (HMM) is arguably the most popular generative approach that models temporal relationships. An HMM is a probabilistic model consisting of a set of hidden states coupled in a stochastic Markov chain, such that the distribution over states at one point in time depends only on the state values for a finite number of preceding points in time. Given a sequence of observed sensor values, computational methods can be used to compute the hidden state sequence that most likely corresponds to the observations. When used in activity recognition, hidden states represent the activities, as is shown in Figure 4. To increase the accuracy of activity recognition using HMMs, the model can be trained incorporating prior knowledge of the activity classes.

Figure 4.

HMM for an activity recognition task with four hidden states (activities) and a set of observable nodes that correspond to observed sensor events.
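One standard method for computing the most likely hidden state sequence is the Viterbi algorithm. The sketch below assumes a first-order HMM with two hidden activities instead of the four shown in Figure 4, and all state names, sensor names, and probabilities are invented for illustration. It multiplies raw probabilities, which is adequate for short sequences; log probabilities would be preferable for long sensor streams.

```python
from typing import Dict, List, Sequence


def viterbi(observations: Sequence[str],
            states: Sequence[str],
            start_p: Dict[str, float],
            trans_p: Dict[str, Dict[str, float]],
            emit_p: Dict[str, Dict[str, float]]) -> List[str]:
    """Return the most likely hidden state (activity) sequence."""
    if not observations:
        return []
    # best[t][s]: probability of the best path that ends in state s at time t.
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, prob = max(
                ((p, best[t - 1][p] * trans_p[p][s]) for p in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = prob * emit_p[s][observations[t]]
            back[t][s] = prev
    # Trace back from the most probable final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path


# Hypothetical two-activity model driven by motion sensor locations.
states = ["cook", "sleep"]
start_p = {"cook": 0.5, "sleep": 0.5}
trans_p = {"cook": {"cook": 0.8, "sleep": 0.2}, "sleep": {"cook": 0.2, "sleep": 0.8}}
emit_p = {"cook": {"kitchen": 0.9, "bedroom": 0.1}, "sleep": {"kitchen": 0.1, "bedroom": 0.9}}
decoded = viterbi(["kitchen", "kitchen", "bedroom", "bedroom"], states, start_p, trans_p, emit_p)
```

Because transitions between activities are less likely than staying in the same activity, an isolated stray sensor event tends to be absorbed into the surrounding activity rather than producing a spurious switch.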

HMMs can also be coupled to recognize multiple simultaneous activities [56]. Other structures of dynamic Bayesian networks (DBNs) have been tested for resident tracking [57] and to model activities together with other parameters of interest such as emotional state [58]. In one case, a three-level HMM represented as a DBN was used to infer activities from GPS sensor readings [59]. HMMs have also played the role of a post-processor [60] to smooth out activity recognition results generated by an AdaBoost classifier.

In contrast to generative methods, discriminative approaches explicitly model the boundary between different activity classes. Decision trees such as C4.5 model activities based on properties of the activity sensor-based features [55]. Meta classifiers based on boosting and bagging have also been tested for activity recognition [61][62]. Support vector machines have demonstrated success at real-time recognition of activities in complex everyday settings [16], and discriminative probabilistic graphical models such as conditional random fields have been effective in home settings [52][63].

An important aspect of activity recognition is the setting in which the data has been collected and the activity models are tested. Most activity recognition approaches experiment with data that is collected in scripted laboratory settings [8], [39], [64]. This is the most constrained setting, where the subjects perform the activities under the supervision of the researchers. The markers for the beginning and ending of the activity are very clear. Activity recognition algorithms focus on learning these clean pre-segmented activity sequences. The next step taken by researchers is to recognize activities in unscripted settings, an example of which is a smart home while residents perform their daily routine. This provides a natural setting for the residents to perform the activities. However, the activity algorithms use an offline annotation process to segment the required data for building the activity models [63], [65]. Recently, there has been an increasing focus on activity recognition algorithms that work directly on sensor streams, offering a practical solution for real-world settings. This represents the most complicated yet realistic setting for training and testing activity recognition algorithms.

One of the challenges for data mining researchers working with smart home data is the lack of uniformity with which activities are recognized and labeled even among human observers and annotators [66]. Another challenge is the similarity between activities: if an observer is given a limited time window of information, cooking and cleaning activities could appear identical. Knowledge-driven activity recognition techniques attempt to overcome these challenges by making use of external sources of information to model, recognize, and analyze activity data. Examples of external information sources are activity ontologies and domain knowledge [67] and semantic activity information that is mined from publicly available sources [8][68][69]. Activity recognition across generalized settings has also been investigated by a number of researchers [70]. Examples of generalized settings include recognition across different sensor layouts, different sensors, and different residents.

5. Behavioral Pattern Discovery

While recognizing predefined activities often relies on supervised learning techniques, unsupervised learning is valuable for its ability to discover recurring sequences of unlabeled sensor activities that may comprise activities of interest in smart homes. Methods for behavior pattern discovery in smart homes build on a rich history of unsupervised learning research, including methods for mining frequent sequences [71][72], mining frequent patterns using regular expressions [3], constraint-based mining [73], mining frequent temporal relationships [74], and frequent-periodic pattern mining [75].
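As a deliberately simplified illustration of frequent sequence mining, the sketch below counts contiguous runs of sensor events and keeps those that occur at least a minimum number of times. It is not one of the cited algorithms: it ignores gaps, timing, and candidate pruning, and the function name, event names, and thresholds are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def frequent_sequences(events: Sequence[str],
                       min_length: int,
                       max_length: int,
                       min_support: int) -> Dict[Tuple[str, ...], int]:
    """Count contiguous event subsequences and keep those meeting min_support."""
    counts: Counter = Counter()
    for length in range(min_length, max_length + 1):
        for start in range(len(events) - length + 1):
            counts[tuple(events[start:start + length])] += 1
    return {pattern: n for pattern, n in counts.items() if n >= min_support}


# Hypothetical unlabeled stream of sensor locations.
stream = ["bed", "bathroom", "kitchen", "tv",
          "bed", "bathroom", "kitchen", "door",
          "bed", "bathroom", "kitchen"]
patterns = frequent_sequences(stream, min_length=2, max_length=3, min_support=3)
```

Here the recurring run of bed, bathroom, and kitchen events is discovered without any labels; a human or a later model could then interpret it as a candidate morning routine.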

More recent work extends these early approaches to look for more complex patterns. Ruotsalainen et al. [77] design the Gais genetic algorithm to detect interleaved patterns in an unsupervised learning fashion. Other approaches have been proposed to mine discontinuous patterns [73] in different types of sequence datasets and to allow variations in occurrences of the patterns [78]. Discovered behavioral patterns are valuable for interpreting sensor data, and models can be constructed from the discovered patterns to recognize instances of the patterns when they occur in the future.

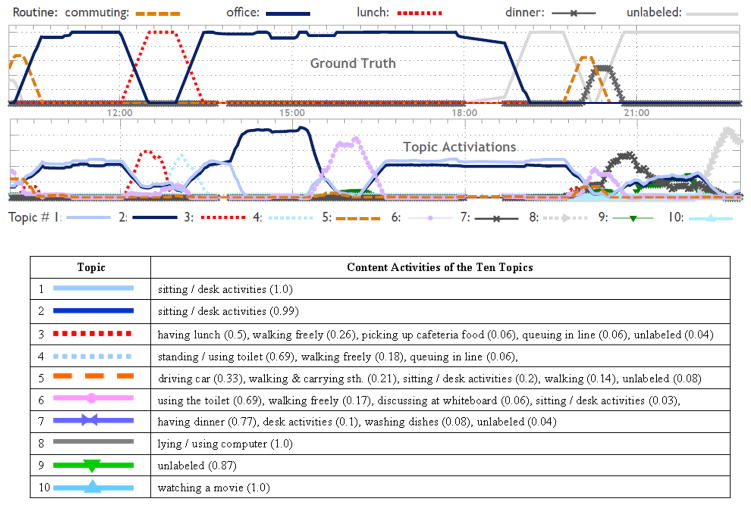

In contrast to approaches that mine patterns in an unsupervised fashion from sensor data, topic model-based daily routine discovery [76][79] builds a hierarchical activity model in a combination of supervised and unsupervised methods. The less complex activities (see Figure 2) are recognized using a supervised learning algorithm. Next, the model’s higher level discovers combinations of lower-level activities that comprise more complex activity patterns. A mixture of activities is modeled as a multinomial probability distribution where the importance of each feature is also modeled as a probability distribution. Figure 5 (top) shows an intuitive description of activities noted by a human subject and Figure 5 (bottom) shows the activities that were automatically discovered using the topic model.

Figure 5.

Ground truth and discovered activities using topic models [76].

Behavior pattern discovery and activity recognition are not always pursued as separate directions within data mining research. Pattern discovery methods identify patterns of interest that can later be tracked to monitor a smart home resident’s daily routine and look for trends and abrupt changes [78]. In addition, unsupervised discovery can help analyze data that does not fall into predefined patterns. In fact, some researchers found that activity recognition accuracy is actually boosted when unsupervised learning methods are used to find patterns in data not falling into a predefined activity class [80]. The discovered patterns can themselves be modeled and recognized, allowing for characterization and tracking of a much larger portion of a resident’s daily routine.

In addition to characterizing and recognizing common normal activities that account for the majority of the sensor events that are generated, smart home residents are also very interested in abnormal events. These abnormal events may indicate a crisis or an abrupt change in a regimen that is associated with health difficulties. Abnormal activity detection, or anomaly detection, is also important in security monitoring where suspicious activities need to be flagged and handled. Anomaly detection is most accurate when it is based on behaviors that are frequent and predictable. There are common statistical methods to automatically detect and analyze anomalies including the box plot, the x chart, and the CUSUM chart [81]. Anomalies can be captured at different population scales. For example, while most of the population may perform activity A, one person carries out activity B, which pinpoints a condition needing further investigation [82]. Anomalies may also be discovered at different temporal scales, including single events, days, or weeks [83].
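Two of the statistical methods named above, the box plot rule and the CUSUM chart, can be sketched as follows. The parameter names (`target`, `slack`, `threshold`), the 1.5 × IQR fence, and the example values are illustrative assumptions; in practice the target and thresholds would be estimated from a resident's own baseline data.

```python
from statistics import quantiles
from typing import List, Sequence


def boxplot_outliers(values: Sequence[float], whisker: float = 1.5) -> List[float]:
    """Return values outside the box plot fences Q1 - w*IQR and Q3 + w*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr
    return [v for v in values if v < low or v > high]


def cusum_alarms(values: Sequence[float], target: float,
                 slack: float, threshold: float) -> List[int]:
    """Two-sided CUSUM: indices where accumulated drift from target exceeds threshold."""
    alarms = []
    upper = lower = 0.0
    for index, value in enumerate(values):
        upper = max(0.0, upper + (value - target - slack))
        lower = max(0.0, lower + (target - value - slack))
        if upper > threshold or lower > threshold:
            alarms.append(index)
            upper = lower = 0.0  # restart accumulation after an alarm
    return alarms


# Hypothetical nightly sleep durations (hours) with one unusually short night.
sleep_hours = [7.0, 7.5, 6.5, 7.2, 6.8, 7.1, 7.3, 2.0]
short_nights = boxplot_outliers(sleep_hours)

# Hypothetical nightly bathroom visit counts with a gradual upward shift.
visits = [2, 2, 3, 2, 2, 4, 5, 5, 4]
alarm_days = cusum_alarms(visits, target=2.0, slack=0.5, threshold=5.0)
```

The box plot rule flags individual extreme values, whereas CUSUM accumulates small deviations and is therefore better suited to detecting a sustained change in a routine.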

Little attention has been devoted to anomaly detection in smart homes. This is partly because the notion of an anomaly is somewhat ill-defined. Many possible interpretations of anomalies have been offered and use cases have even been generated for ambient intelligent environments [84]. Some algorithmic approaches have been suggested that build on the notion of expected temporal relationships between events and activities [85]. Others tag events as anomalies if they occur rarely and are not anticipated for the current context [86].

6. Monitoring and Promoting Behavioral Influence

As we have discussed, data mining techniques can be used to recognize predefined classes of behaviors as well as identify interesting or anomalous patterns from smart home sensor data. Once resident behavior is characterized in this way, it can be further analyzed to achieve several goals. First, behavior can be correlated with parameters of interest to the resident or to researchers. Second, the mined patterns can be used to proactively automate control of the home. Third, home-based interventions and modifications can be identified through data mining techniques that will promote desired resident behaviors.

Researchers who have analyzed smart home data realize even more profoundly that people do not live in a vacuum. They are influenced by their environment and other residents in the environment, and they have a profound impact on the other residents as well as their physical environment. The ability to measure the correlation between smart home behavior and other parameters of interest is both a unique opportunity and a unique challenge for data mining researchers. Such correlations have been identified between smart home-based behaviors and well being, energy usage, and air quality.

The possibilities of using smart environments for health monitoring and assistance are perceived as “extraordinary” [87] and are timely given the aging of the population [88][89]. A smart home is an ideal environment for performing automated health monitoring and assessment, because the resident can be remotely observed performing their normal routines in their everyday settings. As an example, Pavel et al. [90] observed using smart home data that changes in home-based mobility patterns are related to changes in cognitive health. Lee and Dey [91] also designed an embedded sensing system and presented information to older adults to determine if this information was useful to them in gaining increased awareness of their functional abilities. Hodges et al. [92] found a positive correlation between sensor data gathered during a home-based coffee-making task and the first principal component of standard neuropsychological scores for the participants. In a large study with hundreds of participants, Dawadi et al. employed data mining techniques to automatically group participants into cognitive health categories based on sensor features collected while they performed a set of complex activities in a smart home testbed [93].

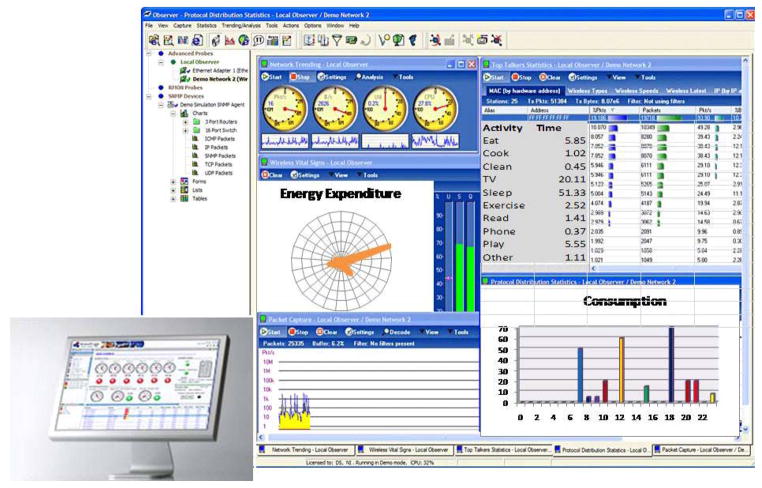

The ability of a smart home to provide insights on well being is also evidenced by research on healthy habits. As an example, Wang et al. analyzed smart home data to estimate exercise levels and energy expenditure for residents [94]. Helal et al. not only estimate overall energy expenditure but also monitor eating habits in the home to provide feedback on healthy behaviors for diabetes patients [95] (see Figure 6).

Figure 6.

Feedback on data mined healthy behaviors detected in a smart home [95].

In addition, data mining techniques can be used to investigate the relationship between behavioral patterns and sustainability. Households and buildings are responsible for over 40% of energy usage in most countries [96], yet many residents still receive little or no detailed feedback about their personal energy usage. On the other hand, previous studies have shown that home residents actually reduce energy expenditure by 5%–15% as a simple response to acquiring and viewing related usage data [97]. By designing data mining techniques to process smart home data as well as whole-home energy usage data, researchers have been able to provide detailed feedback to residents [98], to find correlations between behavior and energy usage, and to predict energy usage based on activities detected in smart homes [99]. Similarly, data mining techniques have been used to provide residents with detailed feedback on air quality [100], and correlations have been identified between behavior and indoor air quality [101].

It is important to note that the combination of smart home and data mining techniques is not only useful for monitoring behavior and behavior-based correlation, but can also be used as the basis for providing proactive assistance from the home itself. As an example, Komatsuzaki et al. designed a technique to find items in a room using smart home sensors, intelligent reasoning, and robotics [102]. While data mining techniques can identify automation strategies that relieve residents of the burden of interacting with devices [10], they can also be directed to improving usage of the home. As examples of this idea, Scott et al. use occupancy prediction techniques to automate the control of a home’s heating system [103], and Mishra et al. use smart home data to automate device usage and prevent spikes in the power grid [104].

7. Challenges for Researchers who Mine the Home

The dream of building smart homes is hampered by some very formidable challenges. A primary concern is the need to consider the implications of maintaining privacy and security while mining smart home data. Many individuals are reluctant to introduce sensing technologies into their home, wary of leaving “digital bread crumbs”. Studies highlight evidence that such wariness is well founded. Residents are willing to allow a home to collect a tremendous amount of personal information, including behavioral routines, personal preferences, diet, medication, and health status, so that the home can provide context-aware services. However, this same information may be stored in the cloud and either hacked or intentionally sent to individuals and companies that can utilize the information for advertising or for more nefarious purposes [105]. Personal information can be collected in ways that may not be easily anticipated. For example, occupancy detection and behavior inference can be accomplished by monitoring smart meters [106].

While organizations such as the FTC have been discussing ways to ensure security before it becomes a widespread issue [107], researchers also need to be proactive in investigating related issues. Specifically, researchers need to define and provide guarantees for levels of privacy and for the safety of the technologies. As ambient intelligent systems become more ubiquitous, more information will be collected about individuals and their lives. The impact of such monitoring needs to be better understood [108][109], and the collected information needs to be kept secure from those who would use it for malicious purposes. Even when information is shared only with caring friends and family members, studies indicate that the result can impact an individual’s sense of privacy and independence [110].

Many privacy concerns focus on the perception of intrusive monitoring [111]. At the same time, however, many heavily-deployed Internet gadgets and current ambient intelligent systems are nearly devoid of security against adversaries, and many others employ only crude methods for securing the system from internal or external attacks. When smart home technologies were in their infancy, the possibility of hacking such connected environments seemed implausible. As the technology matures, so does the very real ability of hackers to infiltrate the home and collect usable information about its residents [112][113]. As a result, every aspect of data collection, inference, communication, and storage software design needs to have privacy and security at the forefront. Privacy-preserving sensed environments need to be designed [114][115] and researchers need to study the relationship between privacy assurance and quality of information gathering [116].

The definition of privacy will continue to evolve as ambient intelligent systems mature [117]. This is highlighted by the fact that even if personal information is not directly obtained by an unwanted party, much of the information can be inferred even from aggregated data. For this reason, a number of approaches are being developed to ensure that important information cannot be gleaned from mined patterns [118][119].
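One family of such approaches is microaggregation, in which individual values are replaced by the mean of a small group of similar values before data are released. The sketch below shows a simple fixed-size, single-attribute variant under stated assumptions; it is not the minimum spanning tree partitioning algorithm of [118], and the function name, the group size, and the sample wake-up times are hypothetical.

```python
def microaggregate(values, k):
    """Replace each value with the mean of a group of at least k similar values.

    Values are sorted and cut into consecutive groups of size k; any leftover
    values are merged into the last group so that no group has fewer than k.
    """
    if k < 1 or len(values) < k:
        raise ValueError("need k >= 1 and at least k values")
    # Indices of the values in ascending order, so results keep the input order.
    order = sorted(range(len(values)), key=lambda i: values[i])
    n_groups = len(values) // k
    result = [0.0] * len(values)
    for g in range(n_groups):
        start = g * k
        end = (g + 1) * k if g < n_groups - 1 else len(values)
        members = order[start:end]
        group_mean = sum(values[i] for i in members) / len(members)
        for i in members:
            result[i] = group_mean
    return result


# Hypothetical wake-up times for seven residents, in minutes after midnight.
wake_minutes = [390, 420, 405, 480, 510, 495, 360]
masked = microaggregate(wake_minutes, k=3)
print(masked)
```

Each released value is then shared by at least k residents, while the overall mean of the attribute is preserved. Whether this is sufficient protection depends on what else an adversary knows, so the sketch should be read as an illustration of the idea rather than as a privacy guarantee.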

As well as introducing some major challenges for data mining researchers, smart home sensor data and technologies offer some unprecedented opportunities. Because smart homes are becoming more prevalent, sensing and analysis no longer need to be performed on an individual basis. Data mining researchers can consider modeling and understanding an entire population. This has been considered in initial studies focusing on movement patterns [120] but can be extended to analyze more behaviors such as eating, sleeping, and socializing.

The benefits of smart home technologies and patterns mined from smart homes are typically confined to the interior of the building in which an individual resides. As a result, another critical challenge for data mining researchers is to fuse data and models based on smart home data with those based on external sources such as smart phones. Given the progress that we have witnessed in smart homes and related data mining techniques, researchers and practitioners can now look toward these next steps and anticipate the continued growth of the field.

Contributor Information

Diane J. Cook, Email: cook@eecs.wsu.edu.

Narayanan Krishnan, Email: ckn@eecs.wsu.edu.

References

- 1. Cook DJ, Das SK. Smart Environments: Technologies, Protocols, and Applications. Wiley; 2005.

- 2. Abowd G, Mynatt ED. Designing for the human experience in smart environments. Smart Environments: Technologies, Protocols and Applications. 2005:153–174.

- 3. Barger T, Brown D, Alwan M. Health status monitoring through analysis of behavioral patterns. IEEE Trans Syst Man, Cybern Part A. 2005;35(1):22–27.

- 4. Cook DJ, Crandall A, Thomas B, Krishnan N. CASAS: A smart home in a box. IEEE Comput. 2012 doi: 10.1109/MC.2012.328.

- 5. Hagras H, Doctor F, Lopez A, Callaghan V. An incremental adaptive life long learning approach for type-2 fuzzy embedded agents in ambient intelligent environments. IEEE Trans Fuzzy Syst. 2007

- 6. Helal A, Mann W, El-Zabadani H, King J, Kaddoura Y, Jansen E. The Gator Tech Smart House: A programmable pervasive space. IEEE Comput. 2005;38(3):50–60.

- 7. Mozer M. The Neural Network House: An Environment that Adapts to its Inhabitants. Proceedings of the AAAI Spring Symposium on Intelligent Environments. 1998:110–114.

- 8. Philipose M, Fishkin KP, Perkowitz M, Patterson DJ, Hahnel D, Fox D, Kautz H. Inferring activities from interactions with objects. IEEE Pervasive Comput. 2004;3(4):50–57.

- 9. Rahal Y, Pigot H, Mabilleau P. Location estimation in a smart home: System implementation and evaluation using experimental data. Int J Telemed Appl. 2008:4. doi: 10.1155/2008/142803.

- 10. Youngblood GM, Cook DJ. Data mining for hierarchical model creation. IEEE Trans Syst Man, Cybern Part C. 2007;37(4):1–12.

- 11. Brdiczka O, Crowley JL, Reignier P. Learning situation models in a smart home. IEEE Trans Syst Man, Cybern Part B. 2009;39(1) doi: 10.1109/TSMCB.2008.923526.

- 12. Hollosi D, Schroder J, Goetze S, Appell J. Voice activity detection driven acoustic event classification for monitoring in smart homes. International Symposium on Applied Sciences in Biomedical and Communication Technologies. 2010:1–5.

- 13. Hongeng S, Nevatia R, Bremond F. Video-based event recognition: Activity representation and probabilistic recognition methods. Comput Vis Image Underst. 2004;96(2):129–162.

- 14. Suk M, Ramadass A, Jim Y, Prabhakaran B. Video human motion recognition using a knowledge-based hybrid method based on a hidden Markov model. ACM Trans Intell Syst Technol. 2012;3(3)

- 15. Hensel BK, Demiris G, Courtney KL. Defining Obtrusiveness in Home Telehealth Technologies: A Conceptual Framework. J Am Med Informatics Assoc. 2006;13(4):428–431. doi: 10.1197/jamia.M2026.

- 16. Krishnan N, Cook DJ. Activity recognition on streaming sensor data. Pervasive Mob Comput. 2012 doi: 10.1016/j.pmcj.2012.07.003.

- 17. Buettner M, Prasad R, Philipose M, Wetherall D. Recognizing daily activities with RFID-based sensors. International Conference on Ubiquitous Computing. 2009:51–60.

- 18. Su BY, Ho KC, Skubic M, Rosales L. Pulse rate estimation using hydraulic bed sensor. International Conference of the IEEE Engineering in Medicine and Biology Society. 2012 doi: 10.1109/EMBC.2012.6346493.

- 19. Larson E, Froehlich J, Campbell T, Haggerty C, Fogarty J, Patel SN. Disaggregated water sensing from a single, pressure-based sensor. Pervasive Mob Comput. 2012

- 20. Froehlich J, Larson E, Gupta S, Cohn G, Reynolds M, Patel SN. Disaggregated end-use energy sensing for the smart grid. IEEE Pervasive Comput. 2011;10(1):28–39.

- 21. Li Y, Ho KC, Popescu M. A microphone array system for automatic fall detection. IEEE Trans Biomed Eng. 2012;59:1291–1301. doi: 10.1109/TBME.2012.2186449.

- 22. Dernbach S, Das B, Krishnan N, Thomas B, Cook D. Simple and complex activity recognition through smart phones. International Conference on Intelligent Environments. 2012

- 23. Banerjee T, Keller JM, Skubic M. Resident identification using Kinect depth image data and fuzzy clustering techniques. International Conference of the IEEE Engineering in Medicine and Biology Society. 2012 doi: 10.1109/EMBC.2012.6347141.

- 24. Cohn G, Gupta S, Lee T, Morris D, Smith JR, Reynolds MS, Tan DS, Patel SN. An ultra-low-power human body motion sensor using static electric field sensing. ACM International Joint Conference on Pervasive and Ubiquitous Computing. 2012:99–102.

- 25. Jafari R, Sastry S, Bajcsy R. Distributed recognition of human actions using wearable motion sensor networks. J Ambient Intell Smart Environ. 2009

- 26. Junker H, Amft O, Lukowicz P, Troster G. Gesture spotting with body-worn inertial sensors to detect user activities. Pattern Recognit. 2008;41:2010–2024.

- 27. Maurer U, Smailagic A, Siewiorek D, Deisher M. Activity recognition and monitoring using multiple sensors on different body positions. Proceedings of the International Workshop on Wearable and Implantable Body Sensor Networks. 2006:113–116.

- 28. Yatani K, Truong K. BodyScope: A wearable acoustic sensor for activity recognition. ACM International Conference on Ubiquitous Computing. 2012

- 29. Gyorbiro N, Fabian A, Homanyi G. An activity recognition system for mobile phones. Mob Networks Appl. 2008;14:82–91.

- 30. Kwapisz J, Weiss G, Moore S. Activity recognition using cell phone accelerometers. International Workshop on Knowledge Discovery from Sensor Data. 2010:10–18.

- 31. Li F, Zhao C, Zhao F, Ding G, Gong J, Liu C, Jian S, Hsu F-H. A reliable and accurate indoor localization method using phone inertial sensors. ACM International Conference on Ubiquitous Computing. 2012

- 32. Lu H, Rabbi M, Chittaranjan GT, Frauendorfer D, Schmid Mast M, Campbell AT, Gatica-Perez D, Choudhury T. StressSense: Detecting stress in unconstrained acoustic environments using smartphones. ACM International Conference on Ubiquitous Computing. 2012

- 33. Sahaf Y, Krishnan N, Cook DJ. Defining the complexity of an activity. AAAI Workshop on Activity Context Representation: Techniques and Languages. 2011

- 34. Alon J, Athitsos V, Yuan Q, Sclaroff S. A unified framework for gesture recognition and spatiotemporal gesture segmentation. IEEE Trans Pattern Anal Mach Intell. 2008;31:1685–1699. doi: 10.1109/TPAMI.2008.203.

- 35. Cheng SY, Trivedi MM. Articulated human body pose inference from voxel data using a kinematically constrained Gaussian mixture model. Workshop on Evaluation of Articulated Human Motion and Pose Estimation. 2007

- 36. Starner T, Pentland A. Real-time American sign language recognition from video using hidden Markov models. IEEE Trans Pattern Anal Mach Intell. 1998;20(12):1371–1375.

- 37. Do J-H, Jung SH, Jang H, Yang S-E, Jung J-W, Bien Z. Gesture-Based Interface for Home Appliance Control in Smart Home. Smart Homes and Beyond - Proceedings of the 4th International Conference On Smart homes and health Telematics (ICOST2006) 2006;19:23–30.

- 38. Krishnan N, Panchanathan S. Analysis of low resolution accelerometer data for human activity recognition. International Conference on Acoustic Speech and Signal Processing. 2008

- 39. Krishnan NC, Colbry D, Juillard C, Panchanathan S. Real time human activity recognition using tri-axial accelerometers. Sensors Signals and Information Processing Workshop. 2008

- 40. Diehl M, Marsiske M, Horgas A, Rosenberg A, Saczynski J, Willis S. The revised observed tasks of daily living: A performance-based assessment of everyday problem solving in older adults. J Appl Gerontol. 2005;24:211–230. doi: 10.1177/0733464804273772.

- 41. Farias S, Mungas D, Reed B, Harvey D, Cahn-Weiner D, DeCarli C. MCI is associated with deficits in everyday functioning. Alzheimer Dis Assoc Disord. 2006;20:217–223. doi: 10.1097/01.wad.0000213849.51495.d9.

- 42. Perneczky R, Sorg C, Hartmann J, Tosic N, Grimmer T, Heitele S, Kurz A. Impairment of activities of daily living requiring memory or complex reasoning as part of the MCI syndrome. Int J Geriatr Psychiatry. 2006;21:158–162. doi: 10.1002/gps.1444.

- 43. Schmitter-Edgecombe M, Woo E, Greeley D. Characterizing multiple memory deficits and their relation to everyday functioning in individuals with mild cognitive impairment. Neuropsychology. 2009;23:168–177. doi: 10.1037/a0014186.

- 44. Rialle V, Ollivet C, Guigui C, Herve C. What do family caregivers of Alzheimer’s disease patients desire in smart home technologies? Methods Inf Med. 2008;47:63–69.

- 45. Ryoo MS, Aggarwal JK. Recognition of composite human activities through context-free grammar based representation. IEEE Conference on Computer Vision and Pattern Recognition. 2006

- 46. Teixeira T, Jung D, Dublon G, Savvides A. Recognizing activities from context and arm pose using finite state machines. ACM/IEEE International Conference on Distributed Smart Cameras. 2009

- 47. Ambady N, Rosenthal R. Thin slices of expressive behavior as predictors of interpersonal consequences: A meta-analysis. Psychol Bull. 1992;2:256–275.

- 48. Baker W. Achieving Success Through Social Capital. Wiley; 2000.

- 49. Fratiglioni L, Wang H, Ericsson K, Maytan M, Winblad B. Influence of social network on occurrence of dementia: A community-based longitudinal study. Lancet. 2000;335:1315–1319. doi: 10.1016/S0140-6736(00)02113-9.

- 50. York E, White L. Social disconnectedness, perceived isolation, and health among older adults. J Health Soc Behav. 2009;50(1):31–48. doi: 10.1177/002214650905000103.

- 51. Natarajan P, Nevatia R. Coupled hidden semi Markov models for activity recognition. Workshop on Motion and Video Computing. 2007

- 52. van Kasteren T, Krose B. Bayesian activity recognition in residence for elders. Proceedings of the IET International Conference on Intelligent Environments. 2007:209–212.

- 53. Foerster F, Smeja M, Fahrenberg J. Detection of posture and motion by accelerometry: A validation study in ambulatory monitoring. Comput Human Behav. 1999;15:571–583.

- 54. Gritai A, Sheikh Y, Rao C, Shah M. Matching trajectories of anatomical landmarks under viewpoint, anthropometric and temporal transforms. Int J Comput Vis. 2009;84(3):325–343.

- 55. Bao L, Intille S. Activity recognition from user annotated acceleration data. Pervasive. 2004:1–17.

- 56. Brand M, Oliver N, Pentland A. Coupled hidden Markov models for complex action recognition. International Conference on Computer Vision and Pattern Recognition. 1997:994–999.

- 57. Wilson D, Atkeson C. Simultaneous tracking and activity recognition (STAR) using many anonymous, binary sensors. Pervasive. 2005:62–79.

- 58. Kan P, Huq R, Hoey J, Mihailidis A. The development of an adaptive upper-limb stroke rehabilitation robotic system. J Neuroeng Rehabil. 2011;8(33) doi: 10.1186/1743-0003-8-33.

- 59. Liao L, Patterson DJ, Fox D, Kautz H. Learning and inferring transportation routines. Artif Intell. 2007;171(5–6):311–331.

- 60. Lester J, Choudhury T, Kern N, Borriello G, Hannaford B. A hybrid discriminative/generative approach for modeling human activities. International Joint Conference on Artificial Intelligence. 2005:766–772.

- 61. Ravi N, Dandekar N, Mysore P, Littman ML. Activity recognition from accelerometer data. Innovative Applications of Artificial Intelligence. 2005:1541–1546.

- 62. Wang S, Pentney W, Popescu AM, Choudhury T, Philipose M. Common sense based joint training of human activity recognizers. International Joint Conference on Artificial Intelligence. 2007:2237–2242.

- 63. Cook D. Learning setting-generalized activity models for smart spaces. IEEE Intell Syst. doi: 10.1109/MIS.2010.112.

- 64. Wang L, Gu T, Tao X, Lu J. A hierarchical approach to real-time activity recognition in body sensor networks. Pervasive Mob Comput. 2012;8(1):115–130.

- 65. Logan B, Healey J, Philipose M, Tapia EM, Intille S. A Long-Term Evaluation of Sensing Modalities for Activity Recognition. Proceedings of the 9th International Conference on Ubiquitous Computing. 2007

- 66. Hu DH, Zheng VW, Yang Q. Cross-domain activity recognition via transfer learning. Pervasive Mob Comput. 2011;7(3):344–358.

- 67. Chen L, Nugent CD, Wang H. A knowledge-driven approach to activity recognition in smart homes. IEEE Trans Knowl Data Eng. 2012;24(6):961–974.

- 68. Wyatt D, Philipose M, Choudhury T. Unsupervised activity recognition using automatically mined common sense. National Conference on Artificial Intelligence. 2005:21–27.

- 69. Munguia Tapia E, Choudhury T, Philipose M. Building reliable activity models using hierarchical shrinkage and mined ontology. Pervasive. 2006:17–32.

- 70. Hu DH, Yang Q. Transfer learning for activity recognition via sensor mapping. International Joint Conference on Artificial Intelligence. 2011:1962–1967.

- 71. Agrawal R, Srikant R. Mining sequential patterns. Proceedings of the International Conference on Data Engineering. 1995:3–14.

- 72. Gu T, Chen S, Tao X, Lu J. An unsupervised approach to activity recognition and segmentation based on object-use fingerprints. Data Knowl Eng. 2010;69(6):533–544.

- 73. Pei J, Han J, Wang W. Constraint-based sequential pattern mining: The pattern-growth methods. J Intell Inf Syst. 2007;28(2):133–160.

- 74. Aztiria A, Augusto J, Cook D. Discovering frequent user-environment interactions in intelligent environments. Pers Ubiquitous Comput. 2012;16(1):91–103.

- 75. Heierman EO, Cook DJ. Improving home automation by discovering regularly occurring device usage patterns. IEEE International Conference on Data Mining. 2003:537–540.

- 76. Huynh T, Fritz M, Schiele B. Discovery of activity patterns using topic models. International Conference on Ubiquitous Computing. 2008:10–19.

- 77. Ruotsalainen A, Ala-Kleemola T. Gais: A method for detecting discontinuous sequential patterns from imperfect data. Proceedings of the International Conference on Data Mining. 2007:530–534.

- 78. Rashidi P, Cook D, Holder L, Schmitter-Edgecombe M. Discovering activities to recognize and track in a smart environment. IEEE Trans Knowl Data Eng. 2011;23(4):527–539. doi: 10.1109/TKDE.2010.148.

- 79. Niebles JC, Wang H, Fei-Fei L. Unsupervised learning of human action categories using spatial-temporal words. Int J Comput Vis. 2008;79(3):299–318.

- 80. Cook DJ, Krishnan N, Rashidi P. Activity discovery and activity recognition: A new partnership. IEEE Trans Syst Man, Cybern Part B. 2013;43(3):820–828. doi: 10.1109/TSMCB.2012.2216873.

- 81. Tukey J. Exploratory Data Analysis. Addison-Wesley; 1977.

- 82. Dawadi P, Cook DJ, Parsey C, Schmitter-Edgecombe M, Schneider M. An approach to cognitive assessment in smart homes. KDD Workshop on Medicine and Healthcare. 2011

- 83. Song C, Koren T, Wang P, Barabasi AL. Modeling the scaling properties of human mobility. Nat Phys. 2010;7:713–718.

- 84. Lyons P, Tran A, Marsland S, Dietrich J, Guesgen H. Use cases for abnormal behavior detection in smart homes. International Conference on Smart Homes and Health Telematics. 2010:144–151.

- 85. Jakkula V, Cook DJ. Anomaly detection using temporal data mining in a smart home environment. Methods Inf Med. 2008;47(1):70–75. doi: 10.3414/me9103.

- 86. Yin J, Yang Q, Pan JJ. Sensor-based abnormal human-activity detection. IEEE Trans Knowl Data Eng. 2008;20(8):1082–1090.

- 87. Department of Health. Speech by the Rt Hon Patricia Hewitt MP, Secretary of State for Health. Long-term Conditions Alliance Annual Conference; 2007.

- 88. United Nations. World population ageing: 1950–2050. 2012 [Online]. Available: www.un.org/esa/population/publications/worldageing19502050/

- 89. Vincent G, Velkoff V. The next four decades - the older population in the United States: 2010 to 2050. 2010.

- 90. Pavel M, Adami A, Morris M, Lundell J, Hayes TL, Jimison H, Kaye JA. Mobility assessment using event-related responses. Transdisciplinary Conference on Distributed Diagnosis and Home Healthcare. 2006:71–74.

- 91. Lee ML, Dey AK. Embedded assessment of aging adults: A concept validation with stake holders. International Conference on Pervasive Computing Technologies for Healthcare. 2010:22–25.

- 92. Hodges M, Kirsch N, Newman M, Pollack M. Automatic assessment of cognitive impairment through electronic observation of object usage. International Conference on Pervasive Computing. 2010:192–209.

- 93. Dawadi P, Cook DJ, Schmitter-Edgecombe M. Automated cognitive health assessment using smart home monitoring of complex tasks. IEEE Trans Syst Man, Cybern Part B. 2012 doi: 10.1109/TSMC.2013.2252338.

- 94. Wang S, Skubic M, Zhu Y, Galambos C. Using passive sensing to estimate relative energy expenditure for eldercare monitoring. PerCom Workshop on Smart Environments to Enhance Health Care. 2011 doi: 10.1109/PERCOMW.2011.5766968.

- 95. Helal A, Cook DJ, Schmalz M. Smart home-based health platform for behavioral monitoring and alteration of diabetes patients. J Diabetes Sci Technol. 2009;3(1):1–8. doi: 10.1177/193229680900300115.

- 96. WBCSD. Energy efficiency in buildings. 2012 [Online]. Available: www.wbcsd.org.

- 97. Seryak J, Kissock K. Occupancy and behavioral effects on residential energy use. Sol Conf. 2003:717–722.

- 98. Costanza E, Ramchurn S, Jennings N. Understanding domestic energy consumption through interactive visualisation: A field study. International Conference on Ubiquitous Computing. 2012

- 99. Chen C, Cook DJ, Crandall A. The user side of sustainability: Modeling behavior and energy usage in the home. Pervasive Mob Comput. 2012

- 100. Jiang Y, Li K, Tian L, Piedrahita R, Yun X, Mansata O, Lv Q, Dick RP, Hannigan M, Shang L. MAQS: A personalized mobile sensing system for indoor air quality monitoring. International Conference on Ubiquitous Computing. 2011

- 101. Deleawe S, Kusznir J, Lamb B, Cook DJ. Predicting air quality in smart environments. J Ambient Intell Smart Environ. 2010;2(2):145–154. doi: 10.3233/AIS-2010-0061.

- 102. Komatsuzaki M, Tsukada K, Siio I, Verronen P, Luimula M, Pieska S. IteMinder: Finding items in a room using passive RFID and an autonomous robot. International Conference on Ubiquitous Computing. 2011

- 103. Scott J, Brush AJB, Krumm J, Meyers B, Hazas M, Hodges S, Villar N. PreHeat: Controlling home heating using occupancy prediction. International Conference on Ubiquitous Computing. 2011

- 104. Barker SK, Mishra A, Irwin D, Shenoy P, Albrecht JR. SmartCap: Flattening peak electricity demand in smart homes. IEEE International Conference on Pervasive Computing and Communications. 2012

- 105. Larson S. Privacy at home: 5 things the connected home can learn from social media. readwrite. 2013 [Online]. Available: http://readwrite.com/2013/11/15/five-things-the-connected-home-can-learn-from-social-media#feed=/series/home&awesm=~osmE1DqUR9mSt4.

- 106. Chen D, Irwin D, Shenoy P, Albrecht J. Combined heat and privacy: Preventing occupancy detection from smart meters. IEEE International Conference on Pervasive Computing and Communications. 2014

- 107. Orsini L. Connected home invasion: You’ve seen the madness, here are the methods. readwrite. 2013

- 108. Choe EK, Consolvo S, Jung J, Harrison B, Kientz JA. Living in a glass house: A survey of private moments in the home. International Conference on Ubiquitous Computing. 2011

- 109. Waltz E. How I quantified myself. IEEE Spectr. 2012 Sep:43–47.

- 110. Vines J, Lindsay S, Pritchard GW, Lie M, Greathead D, Olivier P, Brittain K. Making family care work: Dependence, privacy and remote home monitoring. ACM International Joint Conference on Pervasive and Ubiquitous Computing. 2013

- 111. Demiris G, Oliver DP, Dickey G, Skubic M, Rantz M. Findings from a participatory evaluation of a smart home application for older adults. Technol Heal Care. 2008;16:111–118.

- 112. Hill K. When ‘smart homes’ get hacked: I haunted a complete stranger’s house via the Internet. Forbes. 2013

- 113. Lee A. Hacking the connected home: When your house watches you. readwrite. [Online]. Available: http://readwrite.com/2013/11/13/hacking-the-connected-home-when-your-house-watches-you#feed=/tag/connected-home&awesm=~osmDA6o9bkgx84.

- 114. Wightman P, Zulbaran M, Rodriguez M, Labrador MA. MaPIR: Mapping-based private information retrieval for location privacy in LBS. IEEE Local Computer Networks Conference. 2013

- 115. Vergara I, Mendez D, Labrador MA. On the interactions between privacy-preserving, incentive, and inference mechanisms in participatory sensing systems. International Conference on Network and System Security. 2013

- 116. Vergara-Laurens I, Mendez D, Labrador MA. Privacy, quality of information, and energy consumption in participatory sensing systems. IEEE International Conference on Pervasive Computing and Communications. 2014

- 117. Hayes G, Poole E, Iachello G, Patel S, Grimes A, Abowd G, Truong K. Physical, social, and experiential knowledge of privacy and security in a pervasive computing environment. IEEE Pervasive Comput. 2007;6(4):56–63.

- 118. Laszlo M. Minimum spanning tree partitioning algorithm for microaggregation. IEEE Trans Knowl Data Eng. 2005;17(7):902–911.

- 119. Wang J. A survey on privacy preserving data mining. International Workshop on Database Technology and Applications. 2009:111–114.

- 120. Kjaergaard MB, Wirz M, Roggen D, Troster G. Mobile sensing of pedestrian flocks in indoor environments using WiFi signals. IEEE International Conference on Pervasive Computing and Communication. 2012