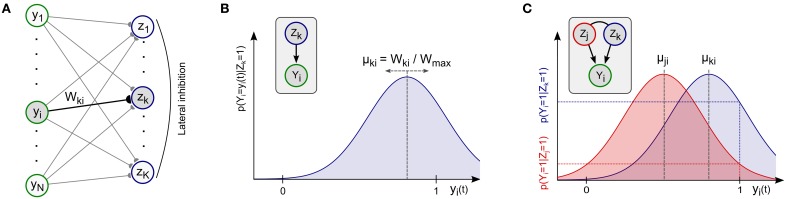

Figure 2.

Spiking network for probabilistic inference and online learning. (A) Winner-take-all network architecture with lateral inhibition and synaptic weights Wki. (B) Network neurons zk implicitly maintain a Gaussian likelihood function for each input Yi in their afferent synaptic weights Wki. The mean μki of the distribution is encoded by the fraction Wki/Wmax = mki/M of active switches in the compound memristive synapse, i.e., stronger synaptic weights Wki correspond to higher mean values μki. Inset: Local implicit graphical model. (C) Illustration of Bayesian inference for two competing network neurons zk, zj and one active input Yi(t) = 1. Different means μki, μji encoded in the weights give rise to different values of the likelihood function and shape the posterior distribution according to Bayes' rule.
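
The inference step illustrated in panel (C) can be sketched numerically. The following is a minimal illustration, not the paper's implementation: it assumes a shared likelihood width `sigma` and a uniform prior over neurons, neither of which is specified in the caption, and the function name `posterior` and all numeric values are hypothetical. Each neuron's mean is taken as μki = Wki/Wmax, and the posterior follows from Bayes' rule by normalizing prior times likelihood.

```python
import math


def posterior(y_i, weights, w_max=1.0, sigma=0.3, prior=None):
    """Posterior over network neurons z_k given one input value y_i.

    Assumptions (not from the source): shared likelihood width `sigma`,
    uniform prior unless one is passed in.
    """
    n = len(weights)
    if prior is None:
        prior = [1.0 / n] * n

    # Mean of each neuron's Gaussian likelihood: mu_ki = W_ki / W_max.
    means = [w / w_max for w in weights]

    # Log prior plus log Gaussian likelihood. The normalization constant of
    # the Gaussian is identical for all neurons (shared sigma) and is dropped.
    log_scores = [
        math.log(p) - (y_i - mu) ** 2 / (2.0 * sigma ** 2)
        for p, mu in zip(prior, means)
    ]

    # Bayes' rule: normalize over neurons (max subtracted for stability).
    top = max(log_scores)
    unnorm = [math.exp(s - top) for s in log_scores]
    total = sum(unnorm)
    return [u / total for u in unnorm]


# Two competing neurons z_k, z_j and one active input Y_i(t) = 1.
post = posterior(1.0, weights=[0.8, 0.2])
print(post)
```

With these illustrative values, the neuron whose weight encodes a mean closer to the observed input receives most of the posterior mass, which is the qualitative effect shown in the panel.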