Abstract

Recent progress in retinal image acquisition techniques, including optical coherence tomography (OCT) and scanning laser ophthalmoscopy (SLO), combined with improved performance of adaptive optics (AO) instrumentation, has resulted in improvement in the quality of in vivo images of cellular structures in the human retina. Here, we present a short review of progress on developing AO-OCT instruments. Despite significant progress in imaging speed and resolution, eye movements present during acquisition of a retinal image with OCT introduce motion artifacts into the image, complicating analysis and registration. This effect is especially pronounced in high-resolution datasets acquired with AO-OCT instruments. Several retinal tracking systems have been introduced to correct retinal motion during data acquisition. We present a method for correcting motion artifacts in AO-OCT volume data after acquisition using simultaneously captured adaptive optics-scanning laser ophthalmoscope (AO-SLO) images. We extract transverse eye motion data from the AO-SLO images, assign a motion adjustment vector to each AO-OCT A-scan, and re-sample from the scattered data back onto a regular grid. The corrected volume data improve the accuracy of quantitative analyses of microscopic structures.

Index Terms: Aberration compensation, adaptive optics, imaging system, motion artifact correction, ophthalmology, optical coherence tomography, scanning laser ophthalmoscopy

I. Introduction

Over the last two decades, all three retinal imaging modalities that are used in ophthalmic clinics [i.e., fundus camera, scanning laser ophthalmoscope (SLO) and optical coherence tomography (OCT)] have been combined successfully with adaptive optics (AO), making possible imaging of different aspects of retinal morphology and function. The fundus camera was originally proposed over 130 years ago and from its early days has been applied successfully to clinical retinal imaging [1]. Thanks to advances in optical design and digital photography it still remains the most commonly used ophthalmic instrument [2]. The SLO was first described in 1980 and later improved by application of a laser source and confocal pinhole in the detection channel [3], [4]. The SLO acquires images by raster scanning an imaging beam on the retina and measuring the backscattered light intensity. It offers improved contrast in retinal images compared to a conventional fundus camera.

OCT was originally described in 1991, and implemented using the principle of low coherence interferometry. This instrument scans a beam of light across a sample, and at each point in the scanning pattern measures light scattering intensity profiles as a function of depth (so-called A-scans) [5]. The first OCT device obtained cross-sectional images (B-scans) of the human retina and cornea by scanning the imaging beam across the sample and generating ultrasound-like tomograms [6]. These first systems used a time-domain (Td) detection scheme in which the depth scattering profile of the sample was extracted by moving and monitoring a reference mirror position and detecting corresponding interference of low coherence light [7]–[10]. At that time, Td-OCT offered rather limited acquisition speeds, sensitivity and resolution that limited its application to 2-D in vivo retinal imaging. The introduction of Fourier domain (Fd) OCT allowed an increase of the collection rate by 100-fold, without reducing system sensitivity [11]. An Fd-OCT detection scheme also allowed high axial resolution imaging without reduction in OCT system sensitivity [12]–[18]. These properties of Fd-OCT enabled, for the first time, relatively short acquisition time (few seconds or less), high axial resolution in vivo volumetric imaging and made OCT a potentially viable medical imaging technique [14], [19], [20].

Adaptive optics was first introduced for retinal imaging in 1997 in combination with a fundus camera [21]. A few years later, in 2002 the first AO-SLO was presented [22] and followed shortly by the combination of AO with OCT. The first implementations of AO-OCT were based on Time domain OCT. This included a flood illuminated version based on an areal CCD [23] and a more “classical” version based on tomographic scanning (xz) [24]. These early instruments demonstrated the potential of the combined technologies, but fundamental technical limitations, primarily in speed, precluded their scientific and clinical use. Nevertheless, they represented first steps toward more practical designs that became possible with new OCT methods. The one notable time-domain method combined with AO that continues to be developed is a high-speed transverse scanning Td-OCT [25], [26].

The first reports of AO Fourier domain OCT (AO-Fd-OCT) occurred shortly after major developments in Fd-OCT exploring its advantages over Td-OCT [27]–[29]. This led to a rapid transition of AO-OCT systems from Td-OCT to Fd-OCT [30]–[32]. Fast raster scanning of Fd-OCT offers considerable flexibility in the scan pattern, including that for volume imaging. These reports were followed by a large number of developments that targeted improvements in AO-OCT system design and performance, and included an expanded list of laboratories pursuing AO-OCT [34]–[43]. Today, Fd-OCT is employed in almost all AO-OCT systems, with spectral-domain OCT (Sd-OCT) the principal design configuration and swept source OCT (SS-OCT) gaining increased interest due to higher imaging speeds and flatter sensitivity roll-off with depth [44].

To date, AO-based instruments for in vivo human retinal imaging other than AO-OCT have been most successful in imaging the photoreceptor mosaic, including recent reports of foveal cone [45], [46] and rod photoreceptors [47]–[49]. Additionally, several groups reported imaging of macroscopic inner retinal morphology including capillary beds and nerve fiber layer (NFL) bundles [50]–[52]. However, it is important to note that reliable visualization of cellular structures in the inner retina has not yet been achieved, mostly due to the low sensitivity and poor axial sectioning of AO-SLO, and to speckle noise and motion artifacts (caused by relatively long volume acquisition times) in AO-OCT. Nevertheless, AO-OCT has theoretically the greatest potential for successful imaging of cellular features in the inner retina due to its high sensitivity and dynamic range advantage [53]. The aforementioned limitations can be potentially overcome in future generations of instruments.

Despite these limitations, in vivo retinal imaging with AO holds potential for more scientific and clinical applications including direct probing of retinal function both in humans and animal models of human disease. Generally in clinical imaging the use of AO is necessary if required retinal lateral resolution must be better than 10 μm (demanding use of an eye pupil aperture larger than 2 mm). With large pupil apertures, diffraction theory predicts an improvement in resolution due to increased numerical aperture (NA), but the presence of high-order ocular aberrations results in reduced resolution. AO correction of ocular aberrations results in resolution restored to the diffraction limit.

The main difference between AO-SLO and AO-OCT lies in the image acquisition scheme: direct measurements of reflected/back scattered light intensity in SLO versus detection of reflected/back scattered light amplitude as a function of depth in OCT. Therefore, AO-SLO can be used to detect both scattered and fluorescent photons from the sample. This makes it potentially very attractive for functional retinal imaging. In contrast, the standard OCT, due to its coherent detection nature, can only detect elastic, back-scattered photons. Thus, it is more challenging to apply OCT to functional imaging. This difference has many implications, and explains why SLO can be considered complementary to OCT (by detecting signals that OCT cannot).

Involuntary eye movement is one of the main problems inherent in the use of ophthalmic instruments. In flood illuminated instruments, like the fundus camera, eye movements are manifested as image blurring. In instruments that capture images by scanning the acquisition beam (e.g., SLO and OCT), eye movements generate motion artifacts in acquired images. Good fixation of the subject is particularly important for obtaining high-quality OCT volumetric datasets of the retina. This is due to OCT’s slow transverse scanning speed relative to SLO. Eye movements introduce motion artifacts in OCT volumes that prevent accurate measurement and complicate registration. This effect is magnified in high-resolution datasets acquired by AO-OCT instruments [54]. Recent advances in high-speed Fd-OCT acquisition [55] allow for reduction of volume acquisition time and therefore reduce eye motion artifacts; however, this speed is still too low to eliminate the effect of eye motion. Moreover, increased imaging speed is correlated with reduction of system sensitivity that may become critical when imaging older patients with subtle structural abnormalities, resulting in insufficient image quality. Several retinal tracking systems, including some built into commercial OCT instruments, as well as software-based motion correction in post-processing, have been introduced to correct for retinal motion. This work, however, remains limited to clinical-grade OCT systems (10–20 μm lateral resolution) [56]–[61]. Correcting motion artifacts in AO-OCT volumes still remains a challenge.

We previously described a system that captures an AO-SLO image with each AO-OCT B-scan [62]. Using this system, we produce a series of AO-SLO images and AO-OCT B-scans, where each B-scan is registered temporally and spatially with its corresponding AO-SLO image. We extract retinal motion information from the AO-SLO images by calculating transverse position adjustment (translation) vectors which are applied to the corresponding B-scan positions, and we perform data interpolation by using a cubic spline to determine a position adjustment vector for each A-scan in the B-scan. A description of this correction method is provided in this paper.

II. Materials and Methods

In this section we present a brief overview of the historical development of AO-OCT instruments followed by basic characterization of AO-OCT system components and application. A novel method for correcting motion artifacts in AO-OCT datasets is presented as well.

A. Adaptive Optics—Optical Coherence Tomography

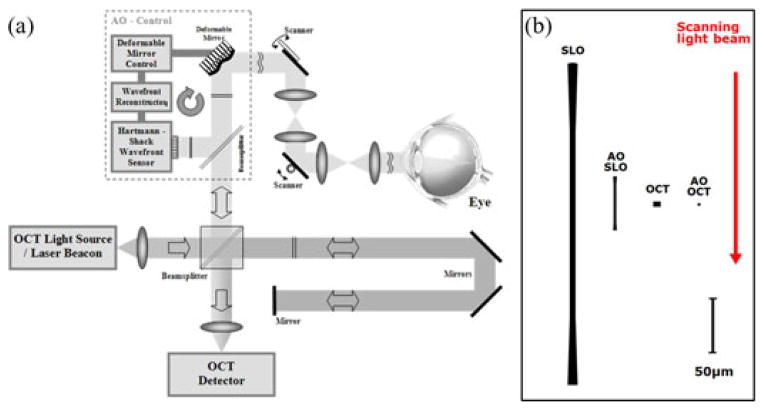

Development of AO-OCT systems has closely followed advances of the OCT technology, progressing rapidly along several different design configurations and taking advantage of different key OCT detection technologies. Over the last ten years, all major OCT design configurations have been combined with AO. Regardless of design configuration, AO-OCT performance has been commonly assessed using standard AO and OCT metrics (axial and lateral resolution), but ultimately judged by the quality of the retinal image and retinal microstructure that are revealed. Fig. 1 illustrates an AO-OCT system and a comparison of theoretical point-spread functions achieved by different retinal imaging systems.

Fig. 1.

Adaptive Optics OCT Basics. (a) Schematic of AO-OCT system key components. (b) Cross sections of theoretical diffraction-limited point-spread functions (PSFs) compared between two retinal imaging modalities for 2 and 7 mm pupil sizes [53]. Red arrow indicates imaging beam direction with respect to the PSF cross sections. The OCT system is drawn with 5 μm axial resolution and the AO-OCT system with 2.2 μm axial resolution.

The differences between axial resolution of the SLO and OCT based imaging systems, as depicted by the right panel in Fig. 1, are due to application of different detection schemes. Namely, OCT axial resolution Δz depends only on the coherence properties of the light source and not on the imaging optics numerical aperture (NA), while SLO resolution does depend on NA. OCT axial resolution can be estimated using the central wavelength (λ0) and bandwidth (Δλ) of the light source [63]:

$$\Delta z = \frac{2\ln 2}{\pi n}\,\frac{\lambda_0^2}{\Delta\lambda} \tag{1}$$

This equation allows one to take into account the refractive index (n) in the imaged media, and assumes a Gaussian-shaped spectrum of the light source and matched dispersion between reference and sample arms of the OCT system [64]. Most of the ophthalmic OCT systems offer axial resolution in the range of 3 μm to 10 μm. For example, an OCT light source centered at 860 nm and 50 nm FWHM spectral bandwidth should offer ~4.8 μm axial resolution in water. Alternatively, a light source centered at 860 nm and 112 nm FWHM spectral bandwidth should offer ~2.2 μm axial resolution in water. It is important to note, however, that the measured axial resolution is usually 10–50% worse than the theoretical value due to the spectral losses in the optical components of the OCT systems including fibers, imaging optics and absorption in the imaging sample.
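As a quick numerical check of the two examples above, the sketch below evaluates the standard Gaussian-spectrum estimate Δz = (2 ln 2 / (π n)) · λ0² / Δλ. The function name and the value n = 1.33 for water are assumptions made for illustration; with that index the results come out near 4.9 μm and 2.2 μm, in line with the approximate figures quoted in the text.

```python
import math

def oct_axial_resolution_um(center_nm, bandwidth_nm, n=1.33):
    """Theoretical OCT axial resolution in micrometers.

    Assumes a Gaussian source spectrum and matched dispersion;
    n = 1.33 (water) is an illustrative default, not a measured value.
    """
    dz_nm = (2.0 * math.log(2.0) / (math.pi * n)) * center_nm ** 2 / bandwidth_nm
    return dz_nm / 1000.0

for bandwidth in (50.0, 112.0):
    dz = oct_axial_resolution_um(860.0, bandwidth)
    print(f"860 nm center, {bandwidth:.0f} nm FWHM: {dz:.2f} um in water")
```

Because measured resolution is typically somewhat worse than this estimate, the function is best read as a lower bound rather than a prediction.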

Axial resolution of the SLO system depends on the focusing geometry of the beam used for imaging and can be described by the following equation:

| (2) |

Note that the same equation can be used to describe OCT depth of focus, defined as the axial distance between points where the beam is √2 times larger (axial resolution is worse). Axial resolution of an SLO system (and depth range of an OCT system) can vary between 600 μm and 50 μm for 850 nm center wavelength and pupil diameters of 2 mm and 7 mm, respectively.

In contrast to axial resolution, transverse resolution (Δx) in OCT and SLO is limited by diffraction and therefore depends on the light source wavelength and NA of the imaging optics. In clinical imaging, the NA is set by the portion of the eye’s pupil used for imaging. Therefore, the OCT and SLO PSFs in Fig. 1 have identical lateral extent. If we assume no aberrations, which is the best possible scenario, and non-confocal detection, the transverse resolution can be estimated from the following equation [65]:

$$\Delta x = 1.22\,\lambda\,\frac{f}{D} \tag{3}$$

where D is the imaging pupil diameter and f is the focal length of the imaging system (~17 mm for human eye). Assuming a center wavelength of 850 nm and pupil diameters of 2 mm and 7 mm, for non AO and AO imaging respectively, theoretical diffraction-limited transverse resolution varies between 8 μm and 2.5 μm. Correction of ocular aberrations with AO is necessary to obtain diffraction-limited performance for imaging through pupils larger than 3 mm diameter.
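The pupil-size dependence can be checked numerically. The sketch below assumes the Rayleigh-criterion form Δx = 1.22 λ f / D with f = 17 mm; the function name is hypothetical. With these assumptions the 7 mm pupil gives about 2.5 μm, and the 2 mm pupil gives about 8.8 μm, slightly above the rounded 8 μm quoted in the text.

```python
def transverse_resolution_um(wavelength_um, pupil_mm, focal_mm=17.0):
    """Diffraction-limited transverse resolution, dx = 1.22 * lambda * f / D.

    Assumes an aberration-free eye and non-confocal detection.
    The ratio f / D is unitless, so the result carries the wavelength's unit.
    """
    return 1.22 * wavelength_um * focal_mm / pupil_mm

for pupil in (2.0, 7.0):
    dx = transverse_resolution_um(0.85, pupil)
    print(f"{pupil:.0f} mm pupil: {dx:.1f} um")
```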

Most of the AO systems used in ophthalmology employ the Shack-Hartmann wavefront sensor (SH-WFS) for measuring wavefront aberrations that need to be corrected. During nearly 15 years of AO system development, several different wavefront correctors, mostly deformable mirrors, have been proposed and successfully implemented for retinal imaging. A comprehensive review of the ophthalmic application of AO can be found in a book edited by Porter et al. [66] and in a chapter in the Handbook of Optics by Miller and Roorda [67].

The main components of an AO control sub-system include a SH-WFS and a wavefront corrector (deformable mirror, DM). Most modern AO-OCT systems operate in a closed-loop AO correction mode, where residual wavefront error remaining after applying AO correction (the shape of the DM that counterbalances eye aberrations) is continuously monitored and updated, as the aberrations often vary over time [53].

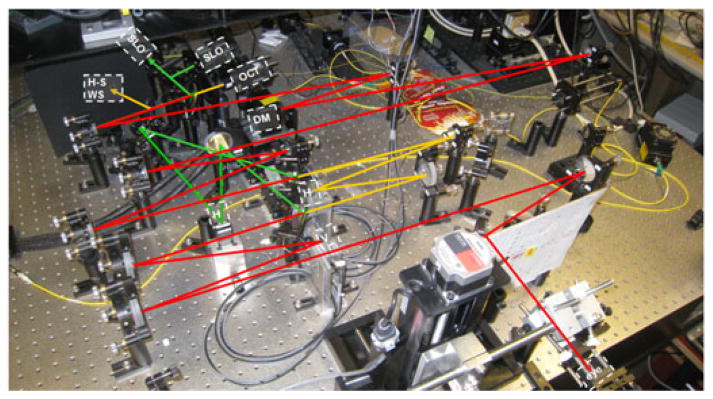

As an example, Fig. 2 shows an AO-OCT system built at UC Davis that was used to acquire all the data presented in this manuscript. This instrument also includes an AO-SLO acquisition channel that was used to simultaneously record a movie of the retina at the depth of photoreceptors. The AO-SLO movie was later processed to measure and extract retina motion that occurred during AO-OCT acquisition.

Fig. 2.

The AO-OCT/AO-SLO instrument sample arm at UC Davis. Red rays—shared AO-OCT/AO-SLO beam path; yellow rays—AO-OCT path only; green rays—AO-SLO path only. AO sub-system uses OCT light for wavefront sensing. SH WFS—wavefront sensor; H—horizontal scanner (OCT or SLO); V—vertical scanner; DM—deformable mirror.

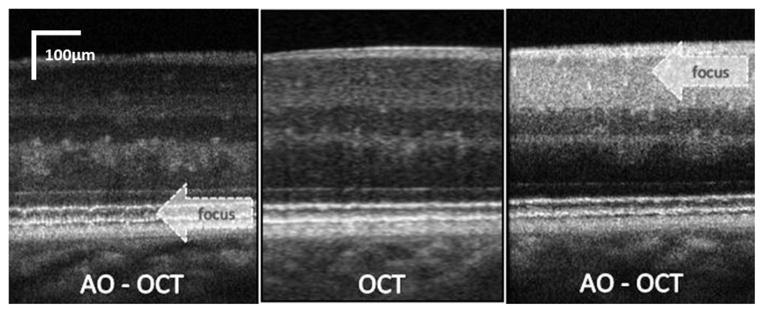

As an example of the AO-OCT system performance, two images (B-scans) that have been acquired with the system presented in Fig. 2 are compared to a clinical OCT B-scan acquired from the same subject at similar retinal eccentricity (see Fig. 3). It is evident that the increased NA (larger pupil diameter) reduces the average speckle size and that AO improves the resolution and intensity of the retinal layers in focus by correcting monochromatic eye aberrations. Two AO-OCT B-scans are shown with AO focus set at two different depth reference planes [at outer retina (left) and inner retina layers (right)].

Fig. 3.

Cross-sectional images of the retina (0.5 mm scanning range) obtained with low lateral resolution OCT (center) and high lateral resolution AO-OCT. (left) AO-OCT with focus set at the photoreceptor layer; (right) AO-OCT with focus set at the ganglion cell layer [68].

One of the drawbacks of the increased lateral resolution in retinal AO-OCT is the limited depth range over which the retinal structures remain in focus. This can be seen clearly in Fig. 3 where only structures in focus, marked by the arrow, show high contrast, whereas out-of-focus areas appear similar to those in the low lateral resolution OCT scan. Therefore in clinical imaging one must choose where to set the AO focus before imaging, or else acquire several volumes with different focus settings. Potential solutions to this limitation include application of depth enhancing illumination beams or computational approaches, which are now the subject of intensive investigation [69], [70].

The main applications of AO-OCT systems include clinical experimental imaging of healthy and diseased retinal structures. Reports on applying AO-OCT to study retinal and optic nerve head diseases have emerged in recent years [71]–[78]. New emerging directions for AO-OCT systems include the study of retinal function [79] and development of dedicated instruments for testing animal models of human disease [80].

B. Motion Artifact Correction in AO-OCT Datasets

Fixation eye motion comprises micro saccadic jerks of the eye, a slow drift of the eye and high frequency micro tremors. Saccades are typically around 1/4 degree (~72 μm) in amplitude and may occur often. The drift component is a random walk with an approximately 1/f amplitude spectrum, so that the longer the interval between samples, the farther the eye will have moved. For an imaging system that samples the eye position at 60 Hz, the eye will typically move less than one arc minute due to drift, with occasional larger excursions due to micro saccades [81]. These shifts in gaze direction show up in SLO and OCT images as transverse motion of the retina. Blood circulation, blinks, and bulk motion of the subject’s head can result in shifts parallel to the scanning beam that are observed as axial B-scan movements on OCT images.

Mitigation of motion artifacts is a necessary step in the production of useful SLO and OCT images and remains an active area of research. One method is to co-register the B-scans to maximize the cross-correlation between each adjacent pair [82]. Other approaches involve real-time hardware-based correction of eye motion in the axial direction [83] or in transverse directions [84] using an additional light beam to track distance to the cornea or features on the retina. Finally, several systems have been developed to track eye motion using SLO image sequences.

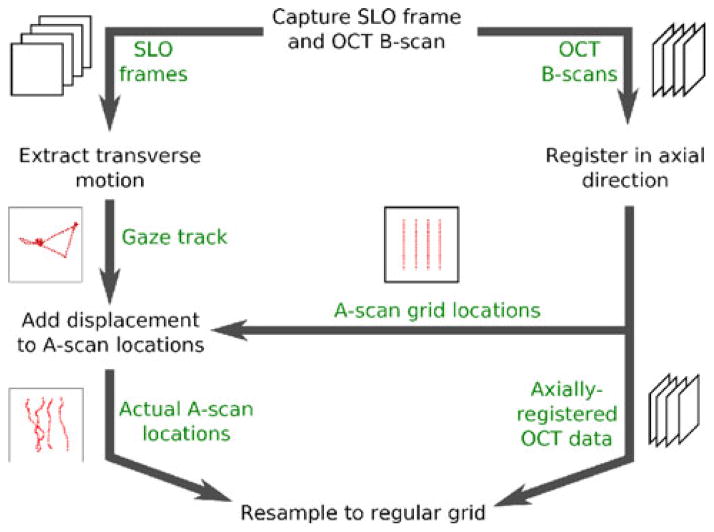

The system described in [83] tracks the surface of the cornea with an additional beam of light to correct axial motion. This system also collects both OCT and SLO frames, registers entire SLO frames to compute transverse eye motion, and uses the resulting detected eye motion to correct the OCT volume. In previous work by Stevenson et al. [85], eye motion was extracted from an SLO image sequence by building up a composite reference image from co-registered individual SLO frames, then registering equal-sized strips from the SLO stream to estimate the retina’s displacement while the strip was captured. This scheme was extended [86] to derive a mathematical model from observed eye motion and correct the SLO image sequence using the model. The method described in [87] involves capturing a single SLO frame after completing an OCT scan, then deforming the OCT image to match its en face projection to the SLO frame. The method described in [88] comes the closest to the present method, tracking eye motion with SLO, deforming the OCT sampling pattern to compensate and re-sampling from the OCT data to a regular grid. However, the present system differs in three respects: it uses a more flexible gaze-tracking method implemented in software rather than hardware, it allows users to adjust the time resolution used to track the target, and it provides a means to adjust key reconstruction parameters as a step toward fully adaptive reconstruction. Fig. 4 shows an overview of our correction algorithm.

Fig. 4.

Overview of the motion correction algorithm.

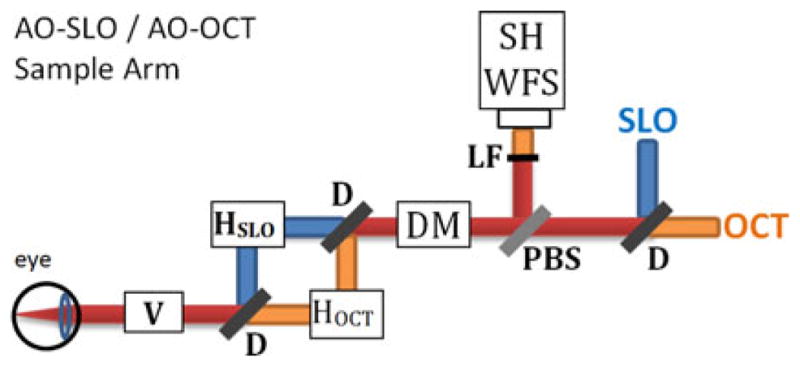

1) AO-OCT Data Acquisition

The key feature of our system is simultaneous acquisition of AO-OCT and AO-SLO datasets. Fig. 5 shows a block diagram of the sample arm of the AO-OCT/AO-SLO instrument. For OCT we use 836 nm light with a bandwidth of 112 nm; for SLO we use 683.4 nm light with a bandwidth of 8.2 nm. The SLO and OCT beams share the same path for most of the instrument’s sample arm. We use dichroic mirrors (D on the block diagram) to separate SLO light from OCT light for the horizontal scanning mirrors HSLO and HOCT and recombine the beams to share the vertical-scan mirror V.

Fig. 5.

Illustration of AO-OCT/AO-SLO instrument sample arm, showing scanning mirror arrangement and some adaptive optics details. Yellow rays—AO-OCT path; green rays—AO-SLO path; red rays—common path for both systems. HSLO—SLO horizontal scanner; HOCT—OCT horizontal scanner; V—vertical scanner; D—dichroic mirror; DM—deformable mirror for adaptive optics; PBS—pellicle beam splitter; LF—low-pass optical filter; SH WFS—Shack-Hartmann wavefront sensor.

The vertical mirror (V) provides the slow scan for capturing SLO frames: with each pass of the mirror, the instrument captures one SLO frame. Mirror V also provides the fast scan for OCT; with each pass of the mirror, the system captures one OCT B-scan (in our system, oriented vertically). The B-scan acquisition is repeated for different positions of the OCT horizontal scanner, not seen by the SLO beam, allowing acquisition of volumetric AO-OCT datasets. Both AO-OCT volume and AO-SLO image cover the same lateral area of the retina.

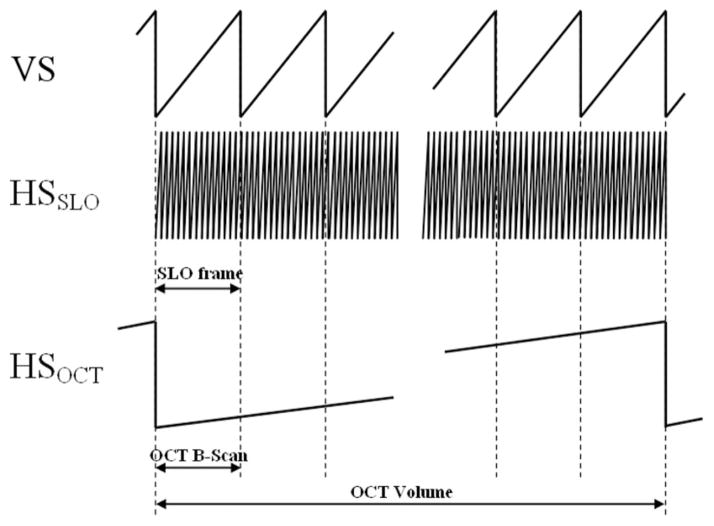

Fig. 6 illustrates the scan timing for this design. Each B-scan is registered in time and space with one SLO frame so any motion evident in the SLO image must also affect the corresponding B-scan.

Fig. 6.

Timing diagrams of the vertical scanner (VS) and the horizontal OCT and SLO scanners (HSOCT and HSSLO) for volumetric data acquisition.

Mirror HSLO, the AO-SLO subsystem resonant scanner, scans horizontally at about 13.9 kHz and mirror V scans vertically at about 27 Hz, imaging a 512 × 512 point grid at 27 frames per second and producing a movie from which we track retinal movement. Both the AO-OCT volume and the AO-SLO image cover the same area of the retina.
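The quoted frame rate follows from the line rate and the number of lines per frame. The sketch below is a simple consistency check and assumes one image line per cycle of the resonant scanner with no flyback overhead; both are illustrative assumptions rather than documented instrument settings, and the function name is hypothetical.

```python
def slo_frame_rate_hz(line_rate_hz, lines_per_frame, lines_per_cycle=1):
    """Frames per second for a raster built from a resonant fast scanner.

    lines_per_cycle is 1 for unidirectional acquisition (assumed here)
    and would be 2 if both sweep directions were used.
    """
    return line_rate_hz * lines_per_cycle / lines_per_frame

rate = slo_frame_rate_hz(13_900, 512)
print(f"{rate:.1f} frames per second")  # close to the ~27 Hz vertical scan rate
```

Since each pass of the vertical mirror also yields one B-scan, the same figure gives the B-scan rate of the AO-OCT channel under these assumptions.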

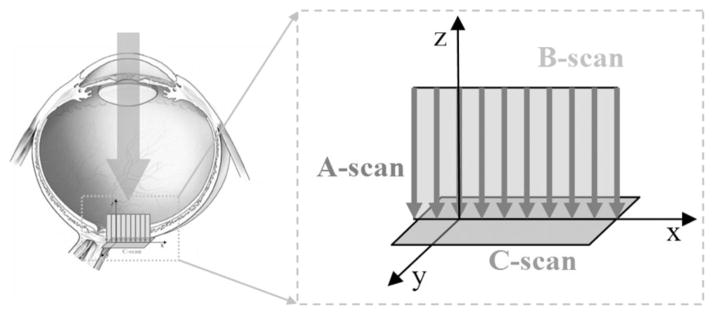

At each point in the AO-OCT raster scan an A-scan is reconstructed over a range of about 1 mm in depth. All the A-scans from one pass of the vertical scanning mirror combine together in a B-scan to portray a cross-sectional slice of the retina, and all the B-scans in an AO-OCT dataset are registered to form a 3D representation of the target (see Fig. 7 for orientation of the scanning planes).

Fig. 7.

Schematic of main acquisition planes of AO-OCT (B-scan) and AO-SLO (C-scan) in our instrument [53].

In our correction algorithm we consider the amount of time required to capture an A-scan to be the fundamental time step, so that the A-scan at time $t$ has grid position $g_t = (x_t, y_t)$, where $x_t$ is the index of the B-scan containing the current A-scan, and $y_t$ is the index of the current A-scan within B-scan $x_t$. A key feature of the system is that the AO-SLO and AO-OCT subsystems share the vertical scanning mirror. Every pass by the vertical mirror captures both an AO-SLO frame and an AO-OCT B-scan. Thus, any change or movement in the retina during image capture is reflected in both the AO-OCT B-scan and the AO-SLO frame (C-scan), and a feature or signal detected at any point in an AO-SLO frame or AO-OCT B-scan can be precisely related to a point in the other modality.
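The time-step indexing can be made concrete with a small helper. This is a minimal sketch that assumes zero-based indices, a fixed number of A-scans per B-scan, and no dead time between B-scans; the function name and these conventions are illustrative and not taken from the instrument software.

```python
def grid_position(t, a_scans_per_b_scan):
    """Map A-scan time step t to its nominal raster position (x_t, y_t).

    x_t is the index of the B-scan containing the A-scan,
    y_t is the index of the A-scan within that B-scan.
    """
    x_t, y_t = divmod(t, a_scans_per_b_scan)
    return x_t, y_t

# With 512 A-scans per B-scan, time step 513 is the second A-scan of the second B-scan.
print(grid_position(513, 512))
```

Because one AO-SLO frame is captured per B-scan, the same `x_t` also identifies the AO-SLO frame recorded during that A-scan.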

Due to laser safety considerations we limit the amount of light entering the eye (~500 μW total power). See [62] for more details. This fact, combined with AO-SLO detector noise, sometimes produces low-contrast, noisy images. To improve the signal-to-noise ratio we process our AO-SLO data after collection (Gaussian blurring and contrast enhancement).
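A minimal sketch of this kind of preprocessing is given below, assuming NumPy is available. The separable Gaussian blur and the percentile-based contrast stretch are one plausible reading of "Gaussian blurring and contrast enhancement"; the kernel width, the percentile limits, and all function names are assumptions, not the settings used for the data in this paper.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()

def blur_axis(image, kernel, axis):
    """Convolve every line along one axis (zero padding at the borders)."""
    return np.apply_along_axis(
        lambda line: np.convolve(line, kernel, mode="same"), axis, image)

def denoise_and_stretch(frame, sigma=1.0, low_pct=1.0, high_pct=99.0):
    """Blur a frame, then rescale intensities to the range [0, 1]."""
    frame = np.asarray(frame, dtype=float)
    kernel = gaussian_kernel(sigma)
    # A 2-D Gaussian is separable: blur rows first, then columns.
    blurred = blur_axis(blur_axis(frame, kernel, 1), kernel, 0)
    lo, hi = np.percentile(blurred, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros_like(blurred)
    return np.clip((blurred - lo) / (hi - lo), 0.0, 1.0)
```

The zero padding darkens a border of a few pixels, which is usually acceptable when frames are much larger than the kernel.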

2) Detection of Eye Motion Artifacts

We use the AO-SLO image series to track eye movement transverse to the scanning beam. Our method [85], [89] is briefly summarized here. First, we construct a reference image. We select several frames distributed throughout the AO-SLO image series, avoiding frames where a significantly lower overall brightness might indicate an eye blink. We register the reference frames to maximize the cross-correlation between each selected frame and the registered average of the other frames, and then average the registered frames. After constructing the reference image, we divide the AO-SLO images into equally sized strips. Each strip extends the width of the AO-SLO image along the fast-scan dimension and a small width along the slow-scan dimension, thus representing a contiguous interval of scanning time. We register each strip to the composite reference image. In the limit, the strip may comprise just a single scan line, allowing each A-scan to be individually corrected. The displacement of a strip from its expected location on the reference image is the average displacement of the target while the strip was being captured, and the sequence of all displacement vectors shows the movement of the target during the entire scan. When the target is an eye, the sequence of all displacement vectors constitutes the gaze track of the subject.
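The strip-to-reference registration step can be sketched as follows, assuming NumPy. The sketch uses an unnormalized circular cross-correlation computed with the FFT and returns integer-pixel shifts; the published method [85], [89] may differ in normalization, sub-pixel refinement, and boundary handling, and the function name and arguments are hypothetical.

```python
import numpy as np

def register_strip(reference, frame, row0, height):
    """Estimate where a strip of `frame` lies on `reference`.

    Returns (dy, dx): the offset of the strip content on the reference
    relative to the strip's nominal rows [row0, row0 + height).
    """
    ref = np.asarray(reference, dtype=float)
    ref = ref - ref.mean()
    strip = np.asarray(frame, dtype=float)[row0:row0 + height]
    # Embed the zero-mean strip at its nominal position in an empty frame.
    padded = np.zeros_like(ref)
    padded[row0:row0 + height] = strip - strip.mean()
    # Circular cross-correlation; the peak marks the best-matching shift.
    xcorr = np.fft.ifft2(np.fft.fft2(ref) * np.conj(np.fft.fft2(padded))).real
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    # Indices above half the image size correspond to negative shifts.
    dy, dx = (p - s if p > s // 2 else p for p, s in zip(peak, xcorr.shape))
    return int(dy), int(dx)
```

Applying the function to consecutive strips of every frame yields one displacement per strip, and the ordered sequence of those displacements is the gaze track described above.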

3) Correction of Eye Motion Artifacts

To correct the artifacts produced in OCT datasets by involuntary eye motion, we push each sample to its A-scan’s correct position, register adjoining B-scans to correct for axial motion, then interpolate a value for each voxel in a regular grid overlaying the sample space. Interpolation methods must strike a balance between accuracy and computational cost. Techniques such as triangular and tetrahedral subdivision with polynomial interpolation are local methods whose cost varies with the degree of the interpolation scheme. Inverse distance weighting methods, known as Shepard’s method [90], [91], and radial basis functions, among which is Hardy’s multiquadrics method [92], were originally proposed as global methods but local variants have been devised as well. Natural neighbor methods such as Sibson’s interpolant [93] may give the highest-quality results but are computationally costly [94]–[96]. We selected a local variant of Shepard’s method or inverse distance weighting to allow for an adjustable neighborhood radius and an adjustable weighting function at a computational cost lower than that of natural neighbor methods.

Our first step is to apply displacement vectors calculated from the AO-SLO gaze track to the locations where we captured AO-OCT A-scans. We use cubic spline interpolation to ensure that each time step $t$ has an associated displacement $\Delta d_t = (dx_t, dy_t)$, and we rescale the displacement vectors from AO-SLO pixels to AO-OCT voxels. We determine the scaling factors by imaging a stationary test target containing a regular grid at the beginning of each session. This step results in each A-scan having a raster-scan location $g_t$, an estimated displacement $\Delta d_t$, and a measured brightness profile that is the actual volume image. We store the corrected AO-OCT sampling sites (the A-scan raster-scan position plus the displacement, $c_t = g_t + \Delta d_t = (x_t + dx_t, y_t + dy_t)$) in a k-d tree [97].
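The interpolation and rescaling step might look like the sketch below, which assumes NumPy and SciPy. It treats each strip displacement as a sample at the A-scan time index of the strip center, and it assumes the displacement components are already ordered to match the (B-scan index, A-scan index) axes. The function names, the argument layout, and the per-axis `scale_xy` factors are illustrative assumptions.

```python
import numpy as np
from scipy.interpolate import CubicSpline

def per_a_scan_displacements(strip_times, strip_disp_px, n_a_scans, scale_xy):
    """Interpolate strip displacements to one displacement per A-scan.

    strip_times   : strictly increasing A-scan time indices of strip centers
    strip_disp_px : (n_strips, 2) displacements in AO-SLO pixels
    scale_xy      : AO-OCT voxels per AO-SLO pixel, from the calibration target
    """
    spline = CubicSpline(strip_times, strip_disp_px, axis=0)
    t = np.arange(n_a_scans)
    return spline(t) * np.asarray(scale_xy, dtype=float)

def corrected_positions(a_scans_per_b_scan, disp_voxels):
    """Corrected sampling sites c_t = g_t + delta d_t for every time step."""
    t = np.arange(len(disp_voxels))
    grid = np.column_stack(np.divmod(t, a_scans_per_b_scan))  # rows are (x_t, y_t)
    return grid + disp_voxels
```

The resulting array of corrected sites is what would then be inserted into the spatial index used for neighbor queries during resampling.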

Axial target motion, parallel to the scanning beam, must be corrected as well as transverse motion. The AO-SLO image sequence provides us with no information about axial motion, so we rely on prior knowledge of retinal structure to correct axial motion using AO-OCT data only. Specifically we translate the B-scans in the axial direction to maximize the cross-correlation between each adjacent B-scan pair [98]. This technique relies on the fact that the human retina is composed of parallel layers generally orthogonal to the scanning beam, which do not change much within the B-scan or even between B-scans.
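A minimal sketch of the axial registration is shown below, assuming NumPy and B-scans stored as arrays with depth along the first axis. It searches integer shifts over a limited range and scores each with a normalized cross-correlation over the overlapping rows; the search range, the scoring details, and the function names are assumptions rather than the exact procedure of [98].

```python
import numpy as np

def axial_shift(prev_b, cur_b, max_shift=20):
    """Integer shift s such that prev_b[r] best matches cur_b[r - s]."""
    depth = prev_b.shape[0]
    best_shift, best_score = 0, -np.inf
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, s), min(depth, depth + s)  # overlapping rows of prev_b
        a = prev_b[lo:hi]
        b = cur_b[lo - s:hi - s]
        a0, b0 = a - a.mean(), b - b.mean()
        denom = np.sqrt((a0 ** 2).sum() * (b0 ** 2).sum())
        score = (a0 * b0).sum() / denom if denom > 0 else -np.inf
        if score > best_score:
            best_shift, best_score = s, score
    return best_shift

def axial_offsets(b_scans, max_shift=20):
    """Cumulative axial offset of each B-scan relative to the first one."""
    offsets = [0]
    for prev_b, cur_b in zip(b_scans[:-1], b_scans[1:]):
        offsets.append(offsets[-1] + axial_shift(prev_b, cur_b, max_shift))
    return offsets
```

Chaining pairwise shifts keeps each comparison between similar neighbors, but small errors accumulate along the volume, so a long sequence may need a drift check against a global reference.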

By correcting the AO-OCT A-scans for axial motion and placing them at the sampling locations stored in the k-d tree, we have corrected target motion artifacts present in the image. Next, we resample from the scattered A-scans to a regular 3D grid using a local inverse distance weighted interpolation scheme in order to enable convenient visualization with existing tools. For each A-scan $a$ in the new volume image, we find the neighboring sample A-scans within transverse distance $R$ of $a$, so that for each neighbor $n$, $\lVert n - a \rVert \le R$. We compute a weight $w$ for each neighbor $n$ based on the distance from $n$ to $a$:

| (4) |

For each voxel $a_i$ within A-scan $a$, where $1 \le i \le \mathrm{height}(a)$, we interpolate a value from its neighbors. Here $n_{j,i}$ is the $i$th value in $a$'s $j$th neighbor:

$$a_i = \frac{\sum_j w_j\, n_{j,i}}{\sum_j w_j} \tag{5}$$

The image resulting from the resampling operation is a regular grid, and is amenable to current methods of volume rendering and other analyses.

The neighborhood radius $R$ is an important parameter in this scheme. We set $R$ according to the feature size we want to reconstruct. The algorithm interpolates a value for the voxel $a_i$ based on A-scans within a distance of $R$ from $a$. The larger the neighborhood radius, the more A-scans will support the new value and the lower the spatial frequency cut-off. Since we want to ensure support for interpolation over a wide range of sampling densities, we use $R = 1.5$ times the nominal transverse distance (pixel spacing) between A-scans (~1–2 μm depending on sampling density). This choice of $R$ results in a forgiving interpolation regime appropriate to large variation in sampling density, tending to fill in a void rather than expose it.
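The resampling step can be sketched as a local inverse distance weighted interpolation over a k-d tree, assuming NumPy and SciPy. The weight used here, an inverse power of distance with a small `eps` guard against division by zero, is an assumed stand-in and not necessarily the weighting function of Eq. (4); the function name, the array layout, and the default parameters are likewise illustrative.

```python
import numpy as np
from scipy.spatial import cKDTree

def resample_idw(sites, a_scans, grid_shape, radius=1.5, power=2.0, eps=1e-6):
    """Resample scattered A-scans onto a regular transverse grid.

    sites      : (N, 2) corrected transverse positions in grid units
    a_scans    : (N, depth) intensity profiles, already axially aligned
    grid_shape : (nx, ny) size of the output grid
    """
    tree = cKDTree(sites)
    nx, ny = grid_shape
    volume = np.zeros((nx, ny, a_scans.shape[1]))
    for x in range(nx):
        for y in range(ny):
            idx = tree.query_ball_point([x, y], r=radius)
            if not idx:
                continue  # no sample within the radius: leave the void at zero
            d = np.linalg.norm(sites[idx] - np.array([x, y]), axis=1)
            w = 1.0 / (d ** power + eps)  # assumed weight function
            # Normalized weighted average applied to every depth sample at once.
            volume[x, y] = w @ a_scans[idx] / w.sum()
    return volume
```

With `radius=1.5` in units of the nominal A-scan spacing, most output positions draw on several neighbors, which matches the forgiving behavior described above; a smaller radius preserves finer detail but leaves more voids where sampling is sparse.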

III. Results

A. Validating Test Data

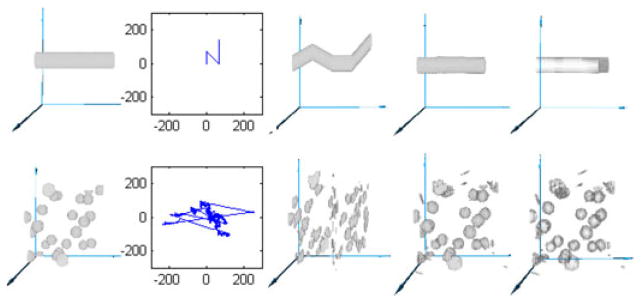

We generated test cases to validate our method, as shown in Fig. 8. The upper row demonstrates the basic operation of our system. The leftmost column shows the ground truth, the volume sampled with no motion. The second column shows the motion track we used to resample from the leftmost column to the middle column. In the upper row, because the original image was a straight bar, each segment of the middle image corresponds to a segment in the motion trace, with the third, horizontal segment corresponding to the stationary period at (70, 0).

Fig. 8.

Two synthetic datasets. (Top row) bar shifted by piecewise linear translation, (bottom row) 25 spheres translated by recorded gaze track. Columns, left to right: original dataset, movement track, volume with motion artifacts, corrected dataset, absolute value of error (original minus corrected).

The fourth column shows the result of applying our motion correction to the image in the middle column, and the rightmost column shows the difference between the ground truth image and the resampled motion-corrected image.

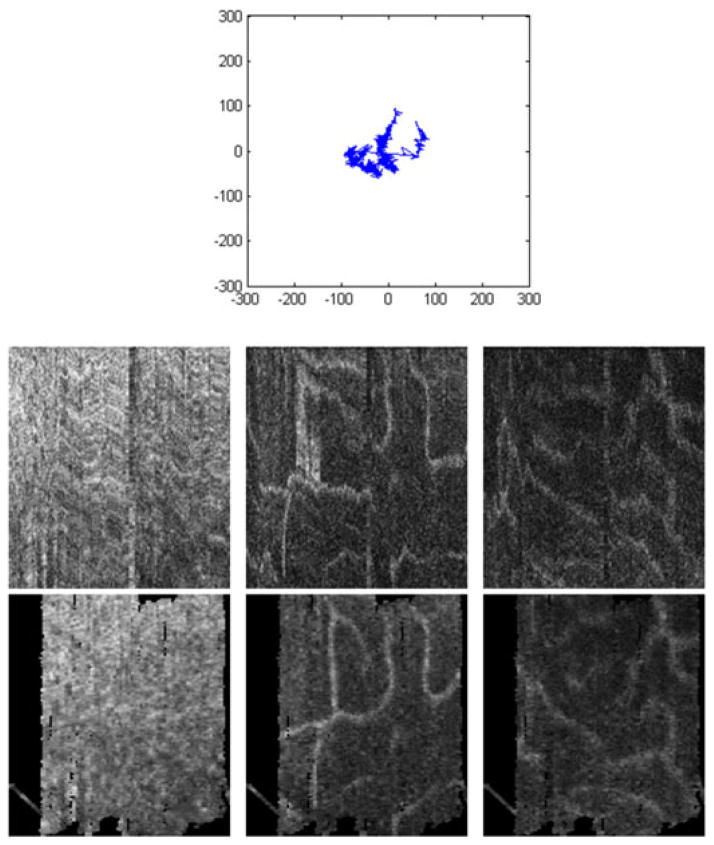

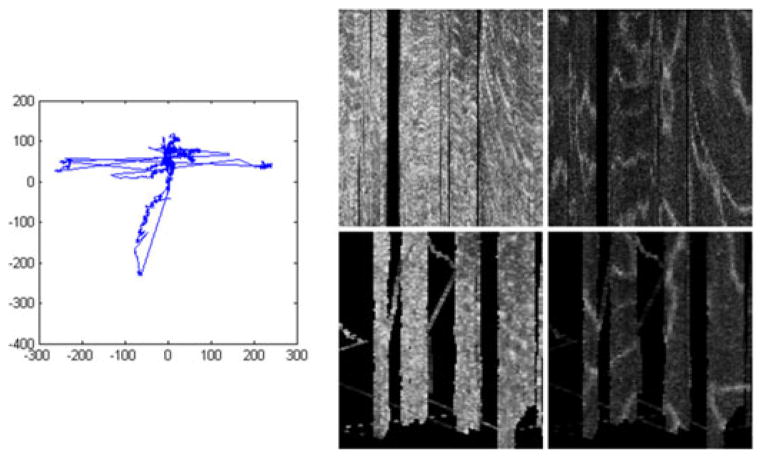

The second synthetic example, in the lower row of Fig. 8, shows correction of more complicated motion. We use a synthetic dataset consisting of 25 spherical blobs each with a diameter of 8 pixels, shown in the leftmost column, sampled as they move along a previously recorded human gaze track shown in the second column. It is interesting to note some characteristics of human involuntary eye motion (drift and tremor) shown in this gaze track as well as Figs. 9 and 10: there are several large lateral jumps, the gaze stays fairly close around the center (fixated on target) for most of the image, and there is substantial high-frequency jitter as well as low-frequency drift.

Fig. 9.

(Top) Gaze track reconstructed from AO-SLO during single AO-OCT volume acquisition. (Middle) Uncorrected AO-OCT scans of photoreceptor (left), outer plexiform (center), and inner plexiform layers (right). (Bottom) Motion-corrected AO-OCT C-scans of the same layers. Units on the gaze track are in pixels of AO-SLO image (1 pixel ~ 1.1 μm). Transverse scanning range of C-scans is about 560 μm (2 deg).

Fig. 10.

(Left) Gaze track reconstructed from AO-SLO during single AO-OCT volume acquisition, (middle) photoreceptor layer extracted from one motion-corrected AO-OCT volume, (right) outer plexiform layer extracted from one motion-corrected AO-OCT volume. Units on the gaze track and transverse scanning range of C-scans are the same as those used for Fig. 9.

The middle column shows how disruptive this motion is even to a relatively simple synthetic test set. The corrected image and difference from the ground truth, shown in the fourth and fifth columns, show that the original blobs are approximated well.

The rightmost column shows that errors in the corrected images are confined to interpolation at the surface of the solids. For sparse images with no internal structure, this is as expected, and builds confidence that the motion correction procedure will work for dense images such as OCT volumes.
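The logic of the bar test can be sketched in a simplified 2D form. The sketch below is only an illustration under strong assumptions: motion is purely horizontal, constant within each row (B-scan), and rounded to whole pixels, so no interpolation is needed. All function names are hypothetical.

```python
import numpy as np

def make_bar(shape=(64, 64), col_start=28, col_stop=36):
    """Ground truth: a straight vertical bar."""
    img = np.zeros(shape)
    img[:, col_start:col_stop] = 1.0
    return img

def piecewise_linear_track(n_rows, knot_rows, knot_shifts):
    """Per-row horizontal shift in whole pixels (assumed motion model)."""
    track = np.interp(np.arange(n_rows), knot_rows, knot_shifts)
    return np.rint(track).astype(int)

def acquire_with_motion(truth, shifts):
    """Each row samples the scene at columns displaced by that row's shift."""
    n_rows, n_cols = truth.shape
    cols = np.arange(n_cols)
    out = np.zeros_like(truth)
    for r in range(n_rows):
        src = cols + shifts[r]
        ok = (src >= 0) & (src < n_cols)
        out[r, ok] = truth[r, src[ok]]
    return out

def correct_motion(distorted, shifts):
    """Move every sample back to where it was actually acquired."""
    n_rows, n_cols = distorted.shape
    cols = np.arange(n_cols)
    out = np.full(distorted.shape, np.nan)  # NaN marks unsampled positions
    for r in range(n_rows):
        dst = cols + shifts[r]
        ok = (dst >= 0) & (dst < n_cols)
        out[r, dst[ok]] = distorted[r, cols[ok]]
    return out

truth = make_bar()
shifts = piecewise_linear_track(64, [0, 20, 40, 63], [0, 10, 10, -5])
distorted = acquire_with_motion(truth, shifts)
corrected = correct_motion(distorted, shifts)
valid = ~np.isnan(corrected)
abs_error = np.abs(corrected[valid] - truth[valid])
```

Because the shifts here are whole pixels, the corrected image matches the ground truth wherever samples exist. With sub-pixel motion, the resampling step has to interpolate, and the residual error would be expected to concentrate at object boundaries, as in the rightmost column of Fig. 8.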

B. Correcting AO-OCT Datasets

The data in Figs. 9 and 10 were collected from a healthy 33-year-old male volunteer. Fig. 9 (middle and bottom) shows before-correction and after-correction images (virtual C-scans) from several layers within the retina taken from a single volume image, and Fig. 9 (top) displays the gaze track that was detected and removed. Fig. 9 (bottom left) shows the photoreceptor layer, where the AO-OCT instrument was focused during this imaging session. Fig. 9 (bottom center and right) shows two layers of vasculature in the inner retina. All three layers show great improvement as artifacts such as kinked or disconnected vessels and smeared photoreceptors are repaired.

Fig. 10 shows the detected eye motion (left) and the before- and after-correction images (middle and right, respectively) from two layers in another AO-OCT volume.

This volume was affected more by motion than the one shown in Fig. 9, which results in large voids visible in the corrected, lower images where no A-scans were acquired. In fact, we had to discard a few of the B-scans from the uncorrected volume because of an inopportune blink (large gap, on the left) and a stronger-than-usual eye twitch (one B-scan removed about one third of the way in from the right). However, some clear features are visible in the corrected volume, where not much was visible before, and motion correction makes the under-sampled areas explicit rather than assumed.
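One way to make under-sampled areas explicit is to count how many corrected A-scan positions fall within the neighborhood radius of each grid node. The following is a minimal sketch, not the authors' code. The function name `coverage_map` is hypothetical, and the dense distance array is only practical for small examples.

```python
import numpy as np

def coverage_map(points, grid_shape, radius=1.5):
    """Count samples within `radius` of each grid node (hypothetical helper).

    points: (N, 2) corrected (row, col) A-scan positions, in pixels.
    Returns an integer array; zeros mark voids where no A-scan was acquired.
    """
    points = np.asarray(points, dtype=float)
    rr, cc = np.mgrid[0:grid_shape[0], 0:grid_shape[1]]
    # Distances from every grid node to every sample: shape (rows, cols, N).
    d = np.hypot(rr[..., None] - points[:, 0], cc[..., None] - points[:, 1])
    return (d <= radius).sum(axis=-1)

pts = np.array([[0.0, 0.0], [0.2, 0.1], [0.0, 5.0]])
cov = coverage_map(pts, (1, 6), radius=1.5)
voids = cov == 0  # True where the grid has no supporting A-scan
```

Displaying such a map next to the corrected C-scan would distinguish genuinely dark tissue from positions that were never sampled.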

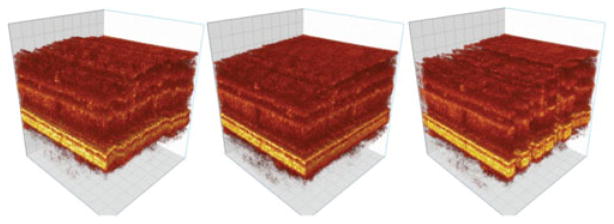

Generation of motion-artifact-free AO-OCT volumes will allow better quantification of the morphological details observed in these images. At present, these images are mainly used as qualitative representations of tissue morphology. Corrected AO-OCT volumes would therefore allow follow-up of changes in any retinal layer during treatment, or monitoring of disease progression, at resolution levels not previously possible. Fig. 11 shows an example visualization of the AO-OCT volumes before and after motion artifact correction.

Fig. 11.

AO-OCT volume before correction (left), with z correction (middle) and with x − y − z correction (right).

Table I presents the runtimes and error metrics for datasets shown in this paper. The synthetic datasets used generated or prerecorded target motion data, so they have no times recorded for data extraction. We have no ground truth for the real-world datasets shown in Figs. 9 and 10, so they are not quantified.

TABLE I.

Runtime and Error Metric for Datasets Presented Here

One additional benefit of creating motion-artifact-free AO-OCT volumes is the possibility of comparing multiple volumes acquired during a single data acquisition session. This should allow studies of fast changes in the optical properties of the measured retinal tissue, opening a window to study retinal function in 3D. Similar studies are currently performed in a 2D en face plane by AO-SLO systems. Additionally, averaging multiple volumes might allow visualization of cellular structures that are not visible in a single volume due to insufficient signal intensity or the presence of coherence noise (speckle pattern). For example, successful visualization of retinal ganglion cells, which send signals from the retina to other parts of the brain (such as the lateral geniculate nucleus) and cannot be visualized with any noninvasive modality, would improve diagnosis and monitoring of many eye diseases (e.g., glaucoma).
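Averaging several corrected volumes could be sketched as follows. This assumes, hypothetically, that the volumes have already been registered to a common grid and that voids are stored as NaN. Neither assumption is stated in the text, and the function name is illustrative.

```python
import numpy as np

def average_volumes(volumes):
    """Voxel-wise mean over volumes, ignoring NaN voids (hypothetical helper).

    volumes: sequence of equally shaped arrays on a common, registered grid.
    A voxel that is a void in every volume stays NaN in the result.
    """
    stack = np.stack(volumes)
    count = np.sum(~np.isnan(stack), axis=0)  # valid samples per voxel
    total = np.nansum(stack, axis=0)          # sum treating NaN as zero
    return np.where(count > 0, total / np.maximum(count, 1), np.nan)

a = np.array([[1.0, np.nan, np.nan]])
b = np.array([[3.0, 5.0, np.nan]])
mean_ab = average_volumes([a, b])
```

Counting valid samples per voxel lets a region sampled in only one volume still contribute, while fully unsampled regions remain marked.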

IV. Conclusion

AO-OCT is a relatively novel retinal imaging technology that continues to develop. Its two key sub-systems (AO and OCT) are subject to active research by many laboratories, and any improvement in hardware and data processing methods will benefit AO-OCT instrumentation. Additionally, the first clinical and psychophysical applications of AO-OCT reveal its great potential for high-resolution diagnosis and monitoring of cellular-level changes in the living retina.

It is known that transverse chromatic aberrations shift the relative lateral position of the OCT and SLO beams on the retina. However, as our method relies on motion tracking (change in position rather than absolute position), we do not expect this to have any effect on extracting retinal motion data.

Motion correction of in vivo AO-OCT volumes of the human retina has potentially significant benefits for vision science and physiology. The framework presented in this paper makes possible the use of datasets that are otherwise of marginal use because of motion artifacts, supports the registration of volumes to 2D images and stitching with other volumes, and permits accurate shape analysis of structures in the volume. Retinal AO-OCT stitching and registration have already been used for larger volume datasets and scales [39], but our method should improve dataset stitching for magnified AO-OCT datasets that was previously not possible due to large eye movements. Our system also allows detection and visualization of under-sampled regions in the volume, which prior to motion correction were simply not apparent, hidden in the regular grid spacing of uncorrected volumes. Thus the motion corrected AO-OCT volumes reveal the actual sampling pattern as affected by motion artifacts and clearly show problems with slow acquisition speed of AO-OCT volumes. As a result, the corrected volumes may actually look distorted.

Motion correction presents considerable challenges. Our system is limited by the quality of the gaze track we extract from the AO-SLO image stream. If the AO-SLO images do not exhibit a distinct texture, the algorithm will not be able to construct a good composite reference image and will have difficulties with registering strips from the AO-SLO stream to the reference image. We plan to devise a way to quickly measure the quality of an AO-SLO image sequence for extracting eye motion. The conversion factors used to change eye motion detected in AO-SLO pixels to AO-OCT pixels must be accurate to ensure that A-scans are placed correctly at their actual sampling locations. Our current method of calculating the conversion factors requires the system operator to measure images of a calibration grid. We calculate SLO-to-OCT conversion factors at the beginning of each imaging session, so manual calculation does not pose an undue burden. Nevertheless, we would like to be able to automatically verify the imaging process as much as possible. As a result of these limitations, it is currently not possible to reliably visualize the photoreceptor mosaic on the reconstructed motion-corrected AO-OCT photoreceptor layer projections. Additionally, one of the consequences of the motion correction method presented in this manuscript is a reduction of speckle contrast. This is due to interpolation of the corrected voxels to a rectangular grid to generate a 3-D dataset. As some voxels are acquired repeatedly, the resulting interpolation reduces speckle contrast.
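The unit conversion discussed above can be illustrated with a short sketch. The text states only that the factors come from images of a calibration grid. The specific calculation below (ratio of the same grid period measured in pixels in each modality) and the function names are assumptions for illustration.

```python
import numpy as np

def conversion_factors(grid_period_slo_px, grid_period_oct_px):
    """OCT pixels per SLO pixel along (x, y) (assumed calculation).

    Both arguments give the period of the same calibration grid,
    measured in pixels in the AO-SLO and AO-OCT images respectively.
    """
    slo = np.asarray(grid_period_slo_px, dtype=float)
    oct_px = np.asarray(grid_period_oct_px, dtype=float)
    return oct_px / slo

def slo_track_to_oct(track_slo_px, factors):
    """Convert an (N, 2) gaze track from AO-SLO pixels to AO-OCT pixels."""
    return np.asarray(track_slo_px, dtype=float) * factors

factors = conversion_factors((40.0, 40.0), (20.0, 10.0))
track_oct = slo_track_to_oct([[4.0, 8.0], [-2.0, 0.0]], factors)
```

Any error in these factors scales every displacement, so a small calibration error grows with the size of the eye movement being corrected.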

We plan to refine some details of the reconstruction process, including exploration of adaptive neighborhood size, by dynamically adjusting R, and neighborhood shape, by interpolating over an ellipsoidal rather than spherical neighborhood. Although Shepard’s method of distance weighted interpolation is useful and versatile we also plan to explore other methods of scattered data reconstruction.
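As a rough illustration of what an ellipsoidal neighborhood could look like, the sketch below scales each axis by its own radius before applying inverse-distance weights. This is a hypothetical formulation rather than a design the authors have committed to, and the exponent `power=2` is an assumption.

```python
import numpy as np

def ellipsoidal_weights(offsets, radii, power=2.0):
    """Normalized inverse-distance weights inside an ellipsoid (hypothetical).

    offsets: (N, D) sample positions relative to the voxel being filled.
    radii:   (D,) semi-axes of the neighborhood along each dimension.
    Samples outside the ellipsoid receive zero weight.
    """
    offsets = np.asarray(offsets, dtype=float)
    radii = np.asarray(radii, dtype=float)
    # Scaled distance: 1.0 lies exactly on the ellipsoid surface.
    d = np.sqrt(np.sum((offsets / radii) ** 2, axis=1))
    w = np.where(d <= 1.0, 1.0 / np.maximum(d, 1e-12) ** power, 0.0)
    total = w.sum()
    return w / total if total > 0 else w

w = ellipsoidal_weights([[1, 0, 0], [0, 1, 0], [3, 0, 0]], radii=(2, 1, 1))
```

Setting all radii equal recovers the spherical neighborhood of radius R, so the spherical case is a special case of this form.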

Our method extends the utility of retinal AO-OCT datasets. We expect that our method will reduce the number of scans that need to be captured, ultimately reducing imaging time and increasing patient comfort. By correcting structural distortion, our method makes image combination possible, opening the way for large OCT mosaics and accurate time-series. Thus, this motion artifact correction method promises to contribute to retinal imaging research and eventually to disease monitoring and treatment.

Acknowledgments

The authors gratefully acknowledge the contributions of Scot Olivier and Steve Jones of the Lawrence Livermore National Laboratory, and the VSRI UC Davis lab members Suman Pilli, Ravi Jonnal and Susan Garcia. This research was supported by the National Eye Institute (EY 014743) and Research to Prevent Blindness (RPB). It was also performed, in part, under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52–07NA27344. LLNL-JRNL-639865

Biographies

Robert J. Zawadzki was born in Torun, Poland, in 1975. He received the B.S. and M.S. degrees in experimental physics and medical physics from the Nicolaus Copernicus University Torun, Poland, in 1998 and 2000, and the Ph.D. degree in natural sciences from Technical University of Vienna, Vienna, Austria in 2003. In 2004, he joined the UC Davis Vision Science and Advanced Retinal Imaging (VSRI) laboratory, where he is now an Associate Researcher. He is the author of more than 50 peer-reviewed articles, and more than 40 conference proceedings.

Arlie G. Capps received the B.S. degree in computer science from Brigham Young University, Provo, UT, USA, in 2004. He is currently working toward the Ph.D. degree in computer science at the University of California, Davis, CA, USA. He is a Lawrence Scholar at Lawrence Livermore National Laboratory. His research interests include scientific and medical volume visualization, multimodal data fusion, and error quantification and correction.

Dae Yu Kim was born in Yecheon, Korea, in 1976. He received the B.S. and M.S. degrees in electrical engineering from Inha University, Korea, and University at Buffalo, State University of New York (SUNY Buffalo), Buffalo, NY, USA, respectively, and the Ph.D. degree in biomedical engineering from the University of California, Davis, CA, USA, where he was a Med-into-Grad scholar from the Howard Hughes Medical Institute. From 2012 to 2013, he was a Postdoctoral Scholar at the Biological Imaging Center, Beckman Institute, California Institute of Technology. He is currently a Postdoctoral Scholar at the Molecular and Computational Biology Section, University of Southern California. His research interests include biomedical optics and imaging to improve diagnosis, treatment, and prevention of human diseases.

Athanasios Panorgias received the B.S. degree in physics from the University of Crete, Crete, Greece, in 2005, and the M.S. degree in optics and vision from the Institute of Vision and Optics, University of Crete in 2007, and the Ph.D. degree in neuroscience from The University of Manchester, Manchester, U.K., in 2010. He has been a Postdoctoral Fellow since 2011 at the University of California, Davis, CA, USA, in the laboratory of Vision Science and Advanced Retinal Imaging. His research interests include, among others, retinal electrophysiology and visual psychophysics. He is currently working on correlating retinal function, as measured with multifocal electroretinograms, with retinal structure, as imaged with adaptive optics–optical coherence tomography.

Scott B. Stevenson was born in Huntsville, AL, USA, in 1959. He received the B.A. degree in psychology from Rice University in 1981 and the Ph.D. degree in experimental psychology from Brown University in 1987. From 1987 to 1991, he was an NRSA fellow at the University of California at Berkeley, and then an Assistant Researcher at UCB until 1995. In 1995, he joined the faculty of the University of Houston College of Optometry, where he is now an Associate Professor of Optometry and Vision Sciences. Dr. Stevenson is a member of the Association of Researchers in Vision and Ophthalmology (ARVO) and the Vision Sciences Society (VSS). He is a past recipient of a National Research Service Award from the NIH and the Cora and J. Davis Armistead Teaching Award from the University of Houston.

Bernd Hamann studied mathematics and computer science at the Technical University of Braunschweig, Germany, and Arizona State University, Tempe, USA. He is currently a Professor of computer science at the University of California, Davis. His research interests include data visualization, computer graphics, geometric design, and data processing.

John S. Werner was born in Nebraska. He studied experimental psychology at the University of Kansas, and received the Ph.D. degree in psychology from Brown University. He conducted postdoctoral research on physiological optics at the Institute for Perception—TNO, Soesterberg, The Netherlands, before joining the faculty at the University of Colorado, Boulder. He is currently a Distinguished Professor in the Department of Ophthalmology and Vision Science and Department of Neurobiology, Physiology and Behavior, University of California, Davis. He is the author of more than 250 peer-reviewed papers and a number of books. He is the Coeditor of the forthcoming volume of The New Visual Neurosciences (MIT Press).

Contributor Information

Robert J. Zawadzki, Email: rjzawadzki@ucdavis.edu, Vision Science and Advanced Retinal Imaging Laboratory (VSRI), Department of Ophthalmology and Vision Science, and Department of Cell Biology and Human Anatomy, University of California Davis, Sacramento, CA 95817 USA

Arlie G. Capps, Email: agcapps@ucdavis.edu, Vision Science and Advanced Retinal Imaging Laboratory (VSRI), Department of Ophthalmology and Vision Science, with the Institute for Data Analysis and Visualization (IDAV), Department of Computer Science, University of California, Davis, Davis, CA 95616 USA, and also with Physical and Life Sciences, Lawrence Livermore National Laboratory, CA 94551 USA

Dae Yu Kim, Email: dyukim@ucdavis.edu, Vision Science and Advanced Retinal Imaging Laboratory (VSRI), Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, CA 95817 USA.

Athanasios Panorgias, Email: apanorgias@ucdavis.edu, Vision Science and Advanced Retinal Imaging Laboratory (VSRI), Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, CA 95817 USA.

Scott B. Stevenson, Email: SBStevenson@UH.edu, College of Optometry, University of Houston, Houston, TX 77204 USA

Bernd Hamann, Email: hamann@cs.ucdavis.edu, Institute for Data Analysis and Visualization (IDAV), Department of Computer Science, University of California, Davis, CA 95616–8562 USA.

John S. Werner, Email: jswerner@ucdavis.edu, Vision Science and Advanced Retinal Imaging Laboratory (VSRI), Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, CA 95817 USA

References

- 1.Howe L. Photographs of the interior of the eye. Trans Amer Ophthalmol Soc. 1887;4:568–571.

- 2.Yannuzzi LA, Ober MD, Slakter JS, Spaide RF, Fisher YL, Flower RW, Rosen R. Ophthalmic fundus imaging: Today and beyond. Amer J Ophthalmol. 2004 Mar;137(3):511–524. doi: 10.1016/j.ajo.2003.12.035.

- 3.Webb RH, Hughes GW, Pomerantzeff O. Flying spot TV ophthalmoscope. Appl Opt. 1980;19(17):2991–2997. doi: 10.1364/AO.19.002991.

- 4.Webb RH, Hughes GW, Delori FC. Confocal scanning laser ophthalmoscope. Appl Opt. 1987;26(8):1492–1499. doi: 10.1364/AO.26.001492.

- 5.Fercher AF, Mengedoht K, Werner W. Eye-length measurement by interferometry with partially coherent light. Opt Lett, [Online] 1988 Mar;13(3):186–188. doi: 10.1364/ol.13.000186. Available: http://www.opticsinfobase.org/ol/abstract.cfm?URI=ol-13-3-186.

- 6.Huang D, Swanson EA, Lin CP, Schuman JS, Stinson WG, Chang W, Hee MR, Flotte T, Gregory K, Puliafito CA, Fujimoto JG. Optical coherence tomography. Sci, [Online] 1991 Nov;254(5035):1178–1181. doi: 10.1126/science.1957169. Available: http://www.sciencemag.org/content/254/5035/1178.short.

- 7.Fercher AF, Hitzenberger CK, Drexler W, Kamp G, Sattmann H. In vivo optical coherence tomography. Amer J Ophthalmol. 1993 Jul;116(1):113–114. doi: 10.1016/s0002-9394(14)71762-3.

- 8.Swanson EA, Izatt JA, Hee MR, Huang D, Lin CP, Schuman JS, Puliafito CA, Fujimoto JG. In vivo retinal imaging by optical coherence tomography. Opt Lett, [Online] 1993 Nov;18(21):1864–1866. doi: 10.1364/ol.18.001864. Available: http://www.opticsinfobase.org/ol/abstract.cfm?id=12015.

- 9.Hee MR, Izatt JA, Swanson EA, Huang D, Schuman JS, Lin CP, Puliafito CA, Fujimoto JG. Optical coherence tomography of the human retina. Arch Ophthalmol, [Online] 1995 Mar;113(3):325–332. doi: 10.1001/archopht.1995.01100030081025. Available: http://archopht.jamanetwork.com/article.aspx?articleid=641054.

- 10.Fercher AF. Optical coherence tomography. J Biomed Opt, [Online] 1996 Apr;1(2):157–173. doi: 10.1117/12.231361. Available: http://biomedicaloptics.spiedigitallibrary.org/article.aspx?articleid=1101056.

- 11.Wojtkowski M. High-speed optical coherence tomography: Basics and applications. Appl Opt. 2010;49:30–61. doi: 10.1364/AO.49.000D30.

- 12.Wojtkowski M, Bajraszewski T, Targowski P, Kowalczyk A. Real-time in vivo imaging by high-speed spectral optical coherence tomography. Opt Lett. 2003;28(19):1745–1747. doi: 10.1364/ol.28.001745.

- 13.Wojtkowski M, Bajraszewski T, Gorczynska I, Targowski P, Kowalczyk A, Wasilewski W, Radzewicz C. Ophthalmic imaging by spectral optical coherence tomography. Amer J Ophthalmol. 2004 Sep;138(3):412–419. doi: 10.1016/j.ajo.2004.04.049.

- 14.Nassif NA, Cense B, Park BH, Pierce MC, Yun SH, Bouma BE, Tearney GJ, Chen TC, de Boer JF. In vivo high-resolution video-rate spectral-domain optical coherence tomography of the human retina and optic nerve. Opt Exp. 2004 Feb;12(3):367–376. doi: 10.1364/opex.12.000367.

- 15.Yun SH, Tearney GJ, Bouma BE, Park BH, de Boer JF. High-speed spectral-domain optical coherence tomography at 1.3 μm wavelength. Opt Exp. 2003 Dec;11(26):3598–3604. doi: 10.1364/oe.11.003598.

- 16.Wojtkowski M, Srinivasan V, Fujimoto JG, Ko T, Schuman JS, Kowalczyk A, Duker JS. Three-dimensional retinal imaging with high-speed ultrahigh-resolution optical coherence tomography. Ophthalmol. 2005 Oct;112(10):1734–1746. doi: 10.1016/j.ophtha.2005.05.023.

- 17.Alam S, Zawadzki RJ, Choi S, Gerth C, Park SS, Morse L, Werner JS. Clinical application of rapid serial Fourier-domain optical coherence tomography for macular imaging. Ophthalmol. 2006 Aug;113(8):1425–1431. doi: 10.1016/j.ophtha.2006.03.020.

- 18.Potsaid B, Gorczynska I, Srinivasan VJ, Chen Y, Jiang J, Cable A, Fujimoto JG. Ultrahigh speed spectral/Fourier domain OCT ophthalmic imaging at 70 000 to 312 500 axial scans per second. Opt Exp. 2008 Sep;16(19):15149–15169. doi: 10.1364/oe.16.015149.

- 19.Wojtkowski M, Srinivasan VJ, Ko TH, Fujimoto JG, Kowalczyk A, Duker JS. Ultrahigh-resolution high-speed Fourier domain optical coherence tomography and methods for dispersion compensation. Opt Exp. 2004 May;12(11):2404–2422. doi: 10.1364/opex.12.002404.

- 20.Leitgeb RA, Drexler W, Unterhuber A, Hermann B, Bajraszewski T, Le T, Stingl A, Fercher AF. Ultrahigh resolution Fourier domain optical coherence tomography. Opt Exp. 2004 May;12(10):2156–2165. doi: 10.1364/opex.12.002156.

- 21.Liang JZ, Williams DR, Miller DT. Supernormal vision and high-resolution retinal imaging through adaptive optics. J Opt Soc Amer A. 1997 Nov;14(11):2884–2892. doi: 10.1364/josaa.14.002884.

- 22.Roorda A, Romero-Borja F, Donnelly WJ, Queener H, Hebert TJ, Campbell MCW. Adaptive optics scanning laser ophthalmoscopy. Opt Exp. 2002;10(9):405–412. doi: 10.1364/oe.10.000405.

- 23.Miller DT, Qu J, Jonnal RS, Thorn K. Coherence gating and AO in the eye. Proc. SPIE 7th Coherence Domain Opt. Methods Opt. Coherence Tomography Biomed; Jul. 2003; pp. 65–72.

- 24.Hermann B, Fernandez EJ, Unterhuber A, Sattmann H, Fercher AF, Drexler W, Prieto PM, Artal P. Adaptive-optics ultrahigh-resolution optical coherence tomography. Opt Lett. 2004 Sep;29(18):2142–2144. doi: 10.1364/ol.29.002142.

- 25.Pircher M, Zawadzki RJ, Evans JW, Werner JS, Hitzenberger CK. Simultaneous imaging of human cone mosaic with adaptive optics enhanced scanning laser ophthalmoscopy and high-speed transversal scanning optical coherence tomography. Opt Lett. 2008 Jan;33(1):22–24. doi: 10.1364/ol.33.000022.

- 26.Pircher M, Götzinger E, Sattmann H, Leitgeb RA, Hitzenberger CK. In vivo investigation of human cone photoreceptors with SLO/OCT in combination with 3D motion correction on a cellular level. Opt Exp. 2010;18(13):13935–13944. doi: 10.1364/OE.18.013935.

- 27.Leitgeb R, Hitzenberger CK, Fercher AF. Performance of fourier domain versus time domain optical coherence tomography. Opt Exp. 2003;11(8):889–894. doi: 10.1364/oe.11.000889.

- 28.de Boer JF, Cense B, Park BH, Pierce MC, Tearney GJ, Bouma BE. Improved signal-to-noise ratio in spectral-domain compared with time-domain optical coherence tomography. Opt Lett. 2003 Nov;28(21):2067–2069. doi: 10.1364/ol.28.002067.

- 29.Choma MA, Sarunic MV, Yang C, Izatt JA. Sensitivity advantage of swept source and Fourier domain optical coherence tomography. Opt Exp. 2003 Sep;11(18):2183–2189. doi: 10.1364/oe.11.002183.

- 30.Zhang Y, Rha J, Jonnal R, Miller D. Adaptive optics parallel spectral domain optical coherence tomography for imaging the living retina. Opt Exp. 2005 Jun;13(12):4792–4811. doi: 10.1364/opex.13.004792.

- 31.Zawadzki RJ, Jones SM, Olivier SS, Zhao M, Bower BA, Izatt JA, Choi S, Laut S, Werner JS. Adaptive-optics optical coherence tomography for high-resolution and high-speed 3-D retinal in vivo imaging. Opt Exp. 2005 Oct;13(21):8532–8546. doi: 10.1364/opex.13.008532.

- 32.Fernández EJ, Považay B, Hermann B, Unterhuber A, Sattmann H, Prieto PM, Leitgeb R, Ahnelt P, Artal P, Drexler W. Three-dimensional adaptive optics ultrahigh-resolution optical coherence tomography using a liquid crystal spatial light modulator. Vis Res. 2005 Oct;45(28):3432–3444. doi: 10.1016/j.visres.2005.08.028.

- 33.Zhang Y, Cense B, Rha J, Jonnal RS, Gao W, Zawadzki RJ, Werner JS, Jones S, Olivier S, Miller DT. High-speed volumetric imaging of cone photoreceptors with adaptive optics spectral-domain optical coherence tomography. Opt Exp. 2006 May;14(10):4380–4394. doi: 10.1364/OE.14.004380.

- 34.Merino D, Dainty C, Bradu A, Podoleanu AG. Adaptive optics enhanced simultaneous en-face optical coherence tomography and scanning laser ophthalmoscopy. Opt Exp. 2006 Apr;14(8):3345–3353. doi: 10.1364/oe.14.003345.

- 35.Zawadzki RJ, Choi SS, Jones SM, Oliver SS, Werner JS. Adaptive optics-optical coherence tomography: optimizing visualization of microscopic retinal structures in three dimensions. J Opt Soc Amer A. 2007 May;24(5):1373–1383. doi: 10.1364/josaa.24.001373.

- 36.Zawadzki RJ, Cense B, Zhang Y, Choi SS, Miller DT, Werner JS. Ultrahigh-resolution optical coherence tomography with monochromatic and chromatic aberration correction. Opt Exp. 2008 May;16(11):8126–8143. doi: 10.1364/oe.16.008126.

- 37.Fernandez EJ, Hermann B, Povazay B, Unterhuber A, Sattmann H, Hofer B, Ahnelt PK, Drexler W. Ultrahigh resolution optical coherence tomography and pancorrection for cellular imaging of the living human retina. Opt Exp. 2008 Jul;16(15):11083–11094. doi: 10.1364/oe.16.011083.

- 38.Torti C, Povazay B, Hofer B, Unterhuber A, Carroll J, Ahnelt PK, Drexler W. Adaptive optics optical coherence tomography at 120000 depth scans/s for non-invasive cellular phenotyping of the living human retina. Opt Exp. 2009 Oct;17(22):19382–19400. doi: 10.1364/OE.17.019382.

- 39.Zawadzki RJ, Choi SS, Fuller AR, Evans JW, Hamann B, Werner JS. Cellular resolution volumetric in vivo retinal imaging with adaptive optics-optical coherence tomography. Opt Exp. 2009 May;17(5):4084–4094. doi: 10.1364/oe.17.004084.

- 40.Cense B, Koperda E, Brown JM, Kocaoglu OP, Gao W, Jonnal RS, Miller DT. Volumetric retinal imaging with ultrahigh-resolution spectral-domain optical coherence tomography and adaptive optics using two broadband light sources. Opt Exp. 2009 Mar;17(5):4095–4111. doi: 10.1364/oe.17.004095.

- 41.Cense B, Gao W, Brown JM, Jones SM, Jonnal RS, Mujat M, Park BH, de Boer JF, Miller DT. Retinal imaging with polarization-sensitive optical coherence tomography and adaptive optics. Opt Exp. 2009 Nov;17(24):21634–21651. doi: 10.1364/OE.17.021634. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Kurokawa K, Tamada D, Makita S, Yasuno Y. Adaptive optics retinal scanner for one-micrometer light source. Opt Exp. 2010 Jan;18(2):1406–1418. doi: 10.1364/OE.18.001406.

- 43.Kocaoglu OP, Cense B, Jonnal RS, Wang Q, Lee S, Gao W, Miller DT. Imaging retinal nerve fiber bundles using optical coherence tomography with adaptive optics. Vis Res. 2011 Aug;51(16):1835–1844. doi: 10.1016/j.visres.2011.06.013.

- 44.Mujat M, Ferguson RD, Patel AH, Iftimia N, Lue N, Hammer DX. High resolution multimodal clinical ophthalmic imaging system. Opt Exp. 2010 May;18(11):11607–11621. doi: 10.1364/OE.18.011607.

- 45.Li KY, Tiruveedhula P, Roorda A. Intersubject variability of foveal cone photoreceptor density in relation to eye length. Invest Ophthalmol Vis Sci. 2010 Dec;51(12):6858–6867. doi: 10.1167/iovs.10-5499.

- 46.Dubra A, Sulai Y. Reflective afocal broadband adaptive optics scanning ophthalmoscope. Biomed Opt Exp. 2011 May;2(6):1757–1768. doi: 10.1364/BOE.2.001757.

- 47.Doble N, Choi SS, Codona JL, Christou J, Enoch JM, Williams DR. In vivo imaging of the human rod photoreceptor mosaic. Opt Lett. 2011 Jan;36(1):31–33. doi: 10.1364/OL.36.000031.

- 48.Dubra A, Sulai Y, Norris JL, Cooper RF, Dubis AM, Williams DR, Carroll J. Noninvasive imaging of the human rod photoreceptor mosaic using a confocal adaptive optics scanning ophthalmoscope. Biomed Opt Exp. 2011 Jul;2(7):1864–1876. doi: 10.1364/BOE.2.001864.

- 49.Merino D, Duncan JL, Tiruveedhula P, Roorda A. Observation of cone and rod photoreceptors in normal subjects and patients using a new generation adaptive optics scanning laser ophthalmoscope. Biomed Opt Exp. 2011 Aug;2(8):2189–2201. doi: 10.1364/BOE.2.002189.

- 50.Takayama K, Ooto S, Hangai M, Ueda-Arakawa N, Yoshida S, Akagi T, Ikeda HO, Nonaka A, Hanebuchi M, Inoue T, Yoshimura N. High-resolution imaging of retinal nerve fiber bundles in glaucoma using adaptive optics scanning laser ophthalmoscopy. Amer J Ophthalmol. 2013;155(5):870–881. doi: 10.1016/j.ajo.2012.11.016.

- 51.Chui TYP, Gast TJ, Burns SA. Imaging of vascular wall fine structure in human retina using adaptive optics scanning laser ophthalmoscopy. Invest Ophthalmol Vis Sci. 2013 Sep; doi: 10.1167/iovs.13-13027. [Online]. Epub Ahead of Print, IOVS-13–13027, Available: http://www.iovs.org/content/early/2013/09/25/iovs.13-13027.full.pdf+html.

- 52.Pinhas A, Dubow M, Shah N, Chui TY, Scoles D, Sulai YN, Weitz R, Walsh JB, Carroll J, Dubra A, Rosen RB. In vivo imaging of human retinal microvasculature using adaptive optics scanning light ophthalmoscope fluorescein angiography. Biomed Opt Exp. 2013 Aug;4(8):1305–1317. doi: 10.1364/BOE.4.001305.

- 53.Pircher M, Zawadzki RJ. Combining adaptive optics with optical coherence tomography: Unveiling the cellular structure of the human retina in vivo. Expert Rev Ophthalmol. 2007 Dec;2(6):1019–1035.

- 54.Zawadzki RJ, Jones SM, Kim DY, Poyneer L, Capps AG, Hamann B, Olivier SS, Werner JS. In vivo imaging of inner retinal cellular morphology with adaptive optics-optical coherence tomography: Challenges and possible solutions. Proc. SPIE 22nd Ophthal. Technol; Mar. 2012; pp. 8209G-1–8209G-5.

- 55.Klein T, Wieser W, Eigenwillig CM, Biedermann BR, Huber R. Megahertz OCT for ultrawide-field retinal imaging with a 1050 nm Fourier domain mode-locked laser. Opt Exp. 2011 Feb;19(4):3044–3062. doi: 10.1364/OE.19.003044.

- 56.Hammer DX, Ferguson RD, Magill JC, White MA, Elsner AE, Webb RH. Compact scanning laser ophthalmoscope with high-speed retinal tracker. Appl Opt. 2003 Aug;42(22):4621–4632. doi: 10.1364/ao.42.004621.

- 57.Stevenson SB, Roorda A. Correcting for miniature eye movements in high resolution scanning laser ophthalmoscopy. Proc. SPIE 15th Ophthal. Technol; May 2005; pp. 145–151.

- 58.Ricco S, Chen M, Ishikawa H, Wollstein G, Schuman J. Correcting motion artifacts in retinal spectral domain optical coherence tomography via image registration. Proc Med Image Comput Comput-Assisted Intervent. 2009;5761:100–107. doi: 10.1007/978-3-642-04268-3_13.

- 59.Kraus MF, Potsaid B, Mayer MA, Bock R, Baumann B, Liu JJ, Hornegger J, Fujimoto JG. Motion correction in optical coherence tomography volumes on a per A-scan basis using orthogonal scan patterns. Biomed Opt Exp. 2012 Jun;3(6):1182–1199. doi: 10.1364/BOE.3.001182.

- 60.Braaf B, Vienola KV, Sheehy CK, Yang Q, Vermeer KA, Tiruveedhula P, Arathorn DW, Roorda A, de Boer JF. Real-time eye motion correction in phase-resolved OCT angiography with tracking SLO. Biomed Opt Exp. 2012 Dec;4(1):51–65. doi: 10.1364/BOE.4.000051.

- 61.Braaf B, Vienola KV, Sheehy CK, Yang Q, Vermeer KA, Tiruveedhula P, Arathorn DW, Roorda A, de Boer JF. Real-time eye motion correction in phase-resolved OCT angiography with tracking SLO. Biomed Opt Exp. 2012 Dec;4(1):51–65. doi: 10.1364/BOE.4.000051.

- 62.Zawadzki RJ, Jones SM, Pilli S, Balderas-Mata S, Kim DY, Olivier SS, Werner JS. Integrated adaptive optics optical coherence tomography and adaptive optics scanning laser ophthalmoscope system for simultaneous cellular resolution in vivo retinal imaging. Biomed Opt Exp. 2011 May;2(6):1674–1686. doi: 10.1364/BOE.2.001674.

- 63.Fercher AF, Hitzenberger CK. Optical coherence tomography. In: Wolf E, editor. Progress in Optics. ch 4. Vienna, Austria: Elsevier; 2002. pp. 215–302.

- 64.Hitzenberger CK, Baumgartner A, Drexler W, Fercher AF. Dispersion effects in partial coherence interferometry: Implications for intraocular ranging. J Biomed Opt. 1999 Jan;4(1):144–151. doi: 10.1117/1.429900.

- 65.Zhang Y, Roorda A. Evaluating the lateral resolution of the adaptive optics scanning laser ophthalmoscope. J Biomed Opt. 2006 Jan-Feb;11(1):014002–014002-5. doi: 10.1117/1.2166434.

- 66.Porter J, Queener H, Lin J, Thorn K, Awwal A. Adaptive optics for vision science: principles, practices, design and applications. Hoboken, NJ, USA: Wiley; 2006.

- 67.Miller DT, Roorda A. Adaptive optics in retinal microscopy and vision. In: Bass M, editor. Handbook of Optics. New York, NY, USA: McGraw-Hill; 2009.

- 68.Wojtkowski M, Kaluzny B, Zawadzki RJ. New directions in ophthalmic optical coherence tomography. Optom Vis Sci. 2012 May;89(5):524–542. doi: 10.1097/OPX.0b013e31824eecb2.

- 69.Sasaki K, Kurokawa K, Makita S, Yasuno Y. Extended depth of focus adaptive optics spectral domain optical coherence tomography. Biomed Opt Exp. 2012 Oct;3(10):2353–2370. doi: 10.1364/BOE.3.002353.

- 70.Adie SG, Graf BW, Ahmad A, Carney PS, Boppart SA. Computational adaptive optics for broadband optical interferometric tomography of biological tissue. Proc Nat Academy Sci USA. 2012 May;109(19):7175–7180. doi: 10.1073/pnas.1121193109.

- 71.Choi SS, Zawadzki RJ, Keltner JL, Werner JS. Changes in cellular structures revealed by ultra-high resolution retinal imaging in optic neuropathies. Invest Ophthalmol Vis Sci. 2008 May;49(5):2103–2119. doi: 10.1167/iovs.07-0980.

- 72.Hammer DX, Iftimia NV, Ferguson RD, Bigelow CE, Ustun TE, Barnaby AM, Fulton AB. Foveal fine structure in retinopathy of prematurity: an adaptive optics Fourier domain optical coherence tomography study. Invest Ophthalmol Vis Sci. 2008 May;49(5):2061–2070. doi: 10.1167/iovs.07-1228.

- 73.Považay B, Hofer B, Torti C, Hermann B, Tumlinson AR, Esmaeelpour M, Egan CA, Bird AC, Drexler W. Impact of enhanced resolution, speed and penetration on three-dimensional retinal optical coherence tomography. Opt Exp. 2009 Mar;17(5):4134–4150. doi: 10.1364/oe.17.004134.

- 74.Torti C, Považay B, Hofer B, Unterhuber A, Carroll J, Ahnelt PK, Drexler W. Adaptive optics optical coherence tomography at 120,000 depth scans/s for non-invasive cellular phenotyping of the living human retina. Opt Exp. 2009 Oct;17(22):19382–19400. doi: 10.1364/OE.17.019382.

- 75.Choi SS, Zawadzki RJ, Lim MC, Brandt JD, Keltner JL, Doble N, Werner JS. Evidence of outer retinal changes in glaucoma patients as revealed by ultrahigh-resolution in vivo retinal imaging. Brit J Ophthalmol. 2011 Jan;95(1):131–141. doi: 10.1136/bjo.2010.183756.

- 76.Werner JS, Keltner JL, Zawadzki RJ, Choi SS. Outer retinal abnormalities associated with inner retinal pathology in nonglaucomatous and glaucomatous optic neuropathies. Eye. 2011 Mar;25(3):279–289. doi: 10.1038/eye.2010.218.

- 77.Kocaoglu OP, Cense B, Jonnal RS, Wang Q, Lee S, Gao W, Miller DT. Imaging retinal nerve fiber bundles using optical coherence tomography with adaptive optics. Vis Res. 2011 Aug;51(16):1835–1844. doi: 10.1016/j.visres.2011.06.013.

- 78.Panorgias A, Zawadzki RJ, Capps AG, Hunter AA, Morse LS, Werner JS. Multimodal assessment of microscopic morphology and retinal function in patients with geographic atrophy. Invest Ophthalmol Vis Sci. 2013 Jun;54(6):4372–4384. doi: 10.1167/iovs.12-11525.

- 79.Jonnal RS, Kocaoglu OP, Wang Q, Lee S, Miller DT. Phase-sensitive imaging of the outer retina using optical coherence tomography and adaptive optics. Biomed Opt Exp. 2012 Jan;3(1):104–124. doi: 10.1364/BOE.3.000104.

- 80.Jian Y, Zawadzki RJ, Sarunic MV. Adaptive optics optical coherence tomography for in vivo mouse retinal imaging. J Biomed Opt. 2013 May;18(5):056007–056007. doi: 10.1117/1.JBO.18.5.056007.

- 81.Riggs LA, Armington JC, Ratliff F. Motions of the retinal image during fixation. J Opt Soc Amer. 1954 Apr;44(4):315–321. doi: 10.1364/josa.44.000315.

- 82.Zawadzki RJ, Fuller AR, Choi SS, Wiley DF, Hamann B, Werner JS. Correction of motion artifacts and scanning beam distortions in 3D ophthalmic optical coherence tomography imaging. Proc. SPIE 27th Ophthal. Technol; Feb. 2007; pp. 642607-1–642607-11.

- 83.Pircher M, Götzinger E, Sattmann H, Leitgeb RA, Hitzenberger CK. In vivo investigation of human cone photoreceptors with SLO/OCT in combination with 3-D motion correction on a cellular level. Opt Exp. 2010 Jun;18(13):13935–13944. doi: 10.1364/OE.18.013935.

- 84.Ferguson RD, Hammer DX, Paunescu LA, Beaton S, Schuman JS. Tracking optical coherence tomography. Opt Lett. 2004 Sep;29(18):2139–2141. doi: 10.1364/ol.29.002139.

- 85.Stevenson SB, Roorda A, Kumar G. Eye tracking with the adaptive optics scanning laser ophthalmoscope. Proc Symp Eye-Tracking Res Appl. 2010:195–198.

- 86.Dubra A, Harvey Z. Registration of 2-D images from fast scanning ophthalmic instruments. Biomed Image. 2010;6204:60–71.

- 87.Ricco S, Chen M, Ishikawa H, Wollstein G, Schuman J. Correcting motion artifacts in retinal spectral domain optical coherence tomography via image registration. Proc Med Image Comput Comput-Assisted Intervention. 2009;5761:100–107. doi: 10.1007/978-3-642-04268-3_13.

- 88.Capps AG, Zawadzki RJ, Yang Q, Arathorn DW, Vogel CR, Hamann B, Werner JS. Correction of eye-motion artifacts in AO-OCT data sets. Proc. SPIE 21st Ophthal. Technol; Feb. 2011; pp. 78850D-1–78850D-7.

- 89.Stevenson SB, Roorda A. Correcting for miniature eye movements in high resolution scanning laser ophthalmoscopy. Proc SPIE 15th Ophthal Technol. 2005;5688:145–151.

- 90.Shepard D. A two-dimensional interpolation function for irregularly-spaced data. Proc 23rd ACM Nat Conf. 1968:517–524.

- 91.Barnhill RE. Representation and approximation of surfaces. In: Rice JR, editor. Mathematical Software III. New York, NY, USA: Academic; 1977. p. 112.

- 92.Kansa E. Multiquadrics: a scattered data approximation scheme with applications to computational fluid-dynamics. I: surface approximations and partial derivative estimates. Comput Math Appl. 1990;19(8/9):127–145.

- 93.Sibson R. A vector identity for the Dirichlet tessellation. Math Proc Camb Phil Soc. 1980 Jan;87(1):151–155.

- 94.Franke R. Scattered data interpolation: Tests of some methods. Math Comput. 1982 Jan;38(157):181–200.

- 95.Nielson G. Scattered data modeling. IEEE Comput Graphics Appl. 1993 Jan;13(1):60–70.

- 96.Amidror I. Scattered data interpolation methods for electronic imaging systems: A survey. J Electron Imag. 2002 Apr;11(2):157–176.

- 97.de Berg M, Cheong O, van Kreveld M, Overmars M. Computational Geometry: Algorithms and Applications. Berlin, Germany: Springer; 2008.

- 98.Thévenaz P, Ruttimann U, Unser M. A pyramid approach to sub-pixel registration based on intensity. IEEE Trans Image Process. 1998 Jan;7(1):27–41. doi: 10.1109/83.650848.