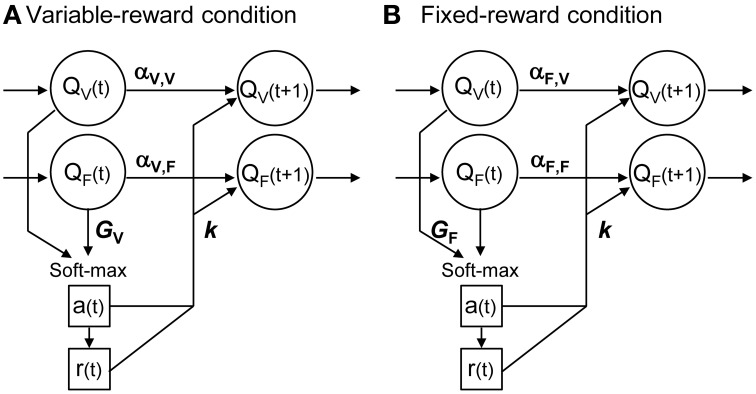

Figure 2.

Interference reinforcement learning model. Our reinforcement learning models assumed that rats estimated the expected rewards (values) of left and right choices in both the variable- and fixed-reward conditions, i.e., QV and QF, so each model had four action values in total. All action values were updated in both variable-reward-condition (A) and fixed-reward-condition (B) trials. α was the learning rate for the selected option or the forgetting rate for the unselected option; α depended on both the trial condition and the value condition. k was the reinforcer strength of the outcome. Choice probability was predicted with a soft-max equation based on all four action values. The soft-max equation had a free parameter, G, which adjusted the contribution of the action values from the non-trial condition to the choice prediction.
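
The caption names the ingredients of the model but does not give its equations, so the following Python sketch is only one plausible reading and not the authors' implementation. It assumes an exponential-average update toward k for the selected side, a decay toward zero for the unselected side, and a two-choice soft-max in which G scales the left-right value difference of the non-trial condition. The function names, the dictionary layout, and these functional forms are all illustrative assumptions.

```python
import math

CONDITIONS = ("V", "F")  # variable- and fixed-reward conditions
SIDES = ("L", "R")       # left and right choices


def update_values(q, trial_cond, choice, k, alpha_learn, alpha_forget):
    """Return updated copies of all four action values after one trial.

    q            : dict keyed by (value_cond, side), e.g. ("V", "L")
    trial_cond   : condition of the current trial, "V" or "F"
    choice       : selected side, "L" or "R"
    k            : reinforcer strength of this trial's outcome
    alpha_learn  : dict keyed by (trial_cond, value_cond), selected side
    alpha_forget : dict keyed by (trial_cond, value_cond), unselected side

    Assumed update form (not stated in the caption):
      selected   -> (1 - alpha) * Q + alpha * k
      unselected -> (1 - alpha) * Q
    """
    new_q = {}
    for value_cond in CONDITIONS:
        for side in SIDES:
            old = q[(value_cond, side)]
            if side == choice:
                a = alpha_learn[(trial_cond, value_cond)]
                new_q[(value_cond, side)] = (1 - a) * old + a * k
            else:
                a = alpha_forget[(trial_cond, value_cond)]
                new_q[(value_cond, side)] = (1 - a) * old
    return new_q


def prob_left(q, trial_cond, G):
    """Soft-max probability of choosing left (assumed two-choice form).

    G weights the value difference of the non-trial condition;
    G = 0 means only the trial condition's values drive the choice.
    """
    other = "F" if trial_cond == "V" else "V"
    diff = (q[(trial_cond, "L")] - q[(trial_cond, "R")]) \
        + G * (q[(other, "L")] - q[(other, "R")])
    return 1.0 / (1.0 + math.exp(-diff))


# Example: one rewarded left choice in a variable-reward trial.
q0 = {(c, s): 0.0 for c in CONDITIONS for s in SIDES}
learn = {(t, v): 0.5 for t in CONDITIONS for v in CONDITIONS}
forget = {(t, v): 0.1 for t in CONDITIONS for v in CONDITIONS}
q1 = update_values(q0, "V", "L", k=1.0, alpha_learn=learn, alpha_forget=forget)
p_next = prob_left(q1, "F", G=0.5)
```

Keying the rates by (trial condition, value condition) mirrors the caption's statement that α depended on both, and lets the values of the condition that is not being tested update at a different rate from those of the tested one.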