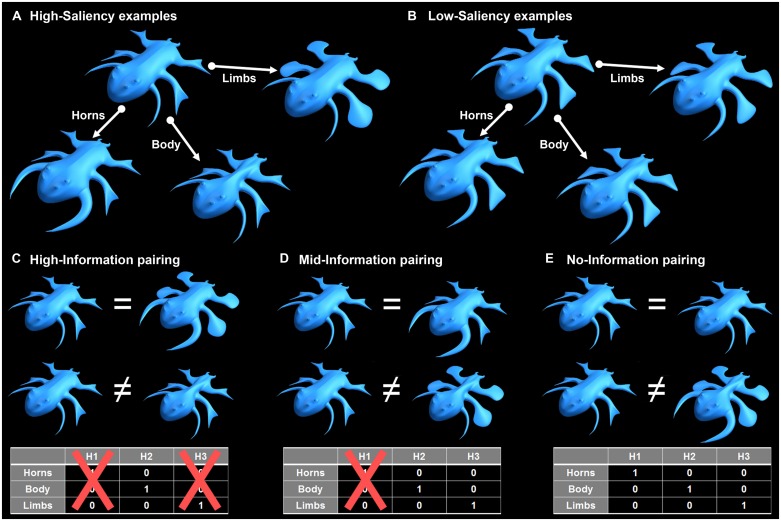

FIGURE 4.

Creature-pairing compositions. (A) Example of high-saliency feature dimensions. (B) Example of low-saliency feature dimensions. (C) Examples of same-category (upper) and different-category (lower) high-information pairs (using the high-saliency stimuli). The proportion of feature dimensions that remained candidates for relevance after a given trial determined the quantity of information that trial provided. Here, the only feasible ‘hypothesis’ the participant could consider was that the creatures’ body width is relevant for categorization ($-\log_2(1/3) \approx 1.585$ bits). (D) Examples of same-category (upper) and different-category (lower) mid-information pairs. Based on these examples, either the creatures’ body width or their limbs could be relevant for categorization ($-\log_2(2/3) \approx 0.585$ bits). (E) Examples of same-category (upper) and different-category (lower) pairs with no information (control task). Based on these examples, the creatures’ body width, their limbs, or their horns could be relevant for categorization ($-\log_2(3/3) = 0$ bits). In learning blocks, the category relation between the paired stimuli could be deduced from the feedback that followed a categorization decision. The tables at the bottom describe the possible hypothesis space. When all feature dimensions are salient and participants are informed that only one feature dimension is task-relevant, VCL requires the participant to decide which of the 3 hypotheses (H1–H3) is correct. When features are not salient, and the participant is more likely to be unaware of any between-stimuli differences, VCL requires the participant to reject the null hypothesis stating that “all creatures are the same”.
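The information values in panels C to E follow from counting how many of the three single-feature hypotheses survive a labelled pair. The sketch below is a minimal illustration and is not the authors' analysis code. It makes several assumptions:

- Each feature takes discrete values.
- The three hypotheses are equally likely a priori.
- A hypothesis "feature f is relevant" predicts a same-category pair exactly when the two creatures match on f.

The feature names and values are hypothetical placeholders. So are the functions `consistent_hypotheses` and `information_bits`.

```python
import math

# Hypothetical names for the three creature feature dimensions.
FEATURES = ("body_width", "limbs", "horns")


def consistent_hypotheses(a, b, same_category):
    """Return the single-feature hypotheses consistent with one labelled pair.

    Hypothesis "feature f is relevant" predicts that two creatures belong to
    the same category exactly when they match on f.
    """
    return [f for f in FEATURES if (a[f] == b[f]) == same_category]


def information_bits(n_consistent, n_total=len(FEATURES)):
    """Bits gained when n_consistent of n_total equally likely hypotheses
    survive, i.e. -log2(n_consistent / n_total). It is written as
    log2(n_total / n_consistent) so the no-information case gives 0.0, not -0.0."""
    return math.log2(n_total / n_consistent)


base = {"body_width": "wide", "limbs": "long", "horns": "yes"}
# Same-category partners (hypothetical values) mirroring panels C, D and E.
pairs = {
    "high (C)": {"body_width": "wide", "limbs": "short", "horns": "no"},
    "mid (D)": {"body_width": "wide", "limbs": "long", "horns": "no"},
    "none (E)": {"body_width": "wide", "limbs": "long", "horns": "yes"},
}
for label, other in pairs.items():
    remaining = consistent_hypotheses(base, other, same_category=True)
    print(label, remaining, round(information_bits(len(remaining)), 3))
# prints:
# high (C) ['body_width'] 1.585
# mid (D) ['body_width', 'limbs'] 0.585
# none (E) ['body_width', 'limbs', 'horns'] 0.0
```

The same function handles different-category pairs by passing `same_category=False`. In that case a hypothesis survives only if the two creatures differ on its feature. The sketch does not cover the low-saliency case, where the participant must first reject the null hypothesis that all creatures are the same.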