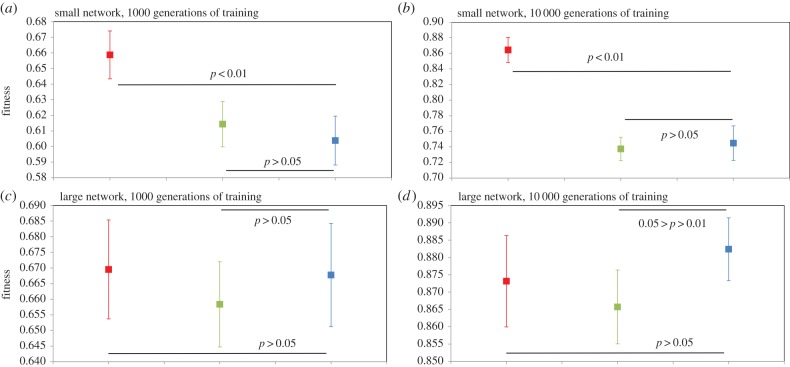

Figure 4.

Performance of ‘small’ and ‘large’ modular (PMN) and non-modular (FCNMN and SNMN) neural networks with the same number of nodes, trained to identify subsets of random inputs. Networks are as in figures 1 and 2, but the output encoding is modified: networks must now respond with an output > 0.9 (rather than > 0.5 as in figures 1 and 2) to ‘accept’ an input, and any output < 0.9 constitutes a ‘rejection’ of that input. Mean network fitness (n = 20) with 95% CIs is analysed at generations 1000 and 10 000 of training. Statistics for small networks: (a) generation 1000: FCNMN (red) versus PMN (blue), $t_{38} = -5.23$; SNMN (green) versus PMN (blue), $t_{38} = -1.03$; (b) generation 10 000: FCNMN versus PMN, $t_{38} = -9.15$; SNMN versus PMN, $t_{38} = 0.579$. Statistics for large networks: (c) generation 1000: FCNMN (red) versus PMN (blue), $t_{38} = -0.162$; SNMN (green) versus PMN (blue), $t_{38} = 0.915$; (d) generation 10 000: FCNMN versus PMN, $t_{38} = 1.21$; SNMN versus PMN, $t_{38} = 2.49$. Note that the vertical axes of the plots are not standardized and show different ranges. (Online version in colour.)
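The caption combines two mechanisms: a thresholded accept/reject output encoding and pairwise comparisons reported as t statistics with 38 degrees of freedom. The sketch below illustrates both under stated assumptions. The caption does not name the test. Because 38 = 20 + 20 − 2, the figures are consistent with Student's pooled-variance two-sample t-test, so that test is assumed here. The helper names `classify_output` and `pooled_t` are hypothetical and are not taken from the paper. The caption also leaves an output of exactly 0.9 unspecified. The sketch treats it as a rejection, which is another assumption.

```python
import math
from statistics import mean, variance

# Figure 4 encoding: an output must exceed 0.9 to count as 'accept'
# (figures 1 and 2 used 0.5). Treating exactly 0.9 as 'reject' is an assumption.
ACCEPT_THRESHOLD = 0.9


def classify_output(output: float, threshold: float = ACCEPT_THRESHOLD) -> bool:
    """Hypothetical helper: True means 'accept', False means 'reject'."""
    return output > threshold


def pooled_t(a, b):
    """Student's two-sample t statistic with pooled variance (assumed test).

    Returns (t, df) where df = n1 + n2 - 2; with n = 20 runs per
    network type this gives df = 38, matching the caption's t_38.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled estimate of the common variance (sample variances use n - 1).
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, df


if __name__ == "__main__":
    # Illustrative, made-up fitness values (NOT data from the paper).
    group_x = [0.5] * 10 + [0.6] * 10
    group_y = [0.6] * 10 + [0.7] * 10
    t, df = pooled_t(group_x, group_y)
    print(f"t_{df} = {t:.3f}")
    print(classify_output(0.95), classify_output(0.7), classify_output(0.7, 0.5))
```

The sign of t depends only on which group is subtracted from which. The caption does not state the ordering used for "FCNMN versus PMN", so negative values should not be read as indicating a direction without checking the main text.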