Abstract

We present a vision of clinical science, based on a conceptual framework of intervention development endorsed by the Delaware Project. This framework is grounded in an updated stage model that incorporates basic science questions of mechanisms into every stage of clinical science research. The vision presented is intended to unify various aspects of clinical science toward the common goal of developing maximally potent and implementable interventions, while unveiling new avenues of science in which basic and applied goals are of equally high importance. Training in this integrated, translational model may help students learn how to conduct research in every domain of clinical science and at each stage of intervention development. This vision aims to propel the field to fulfill the public health goal of producing implementable and effective treatment and prevention interventions.

Keywords: intervention development, implementation, stage model, translation

This article presents a vision for clinical science that aims to facilitate the implementation of scientifically supported interventions and to enhance our understanding of why interventions work, which interventions and implementation strategies work best, and for whom they work better.1 This vision also aims to enhance students’ training with a conceptualization that unifies the field in pursuit of these goals. Psychological clinical science encompasses broad and diverse perspectives (McFall, 2007), but there is a fundamental commonality that binds psychological clinical science together: the relevance of its various scientific endeavors to producing and improving treatment and prevention interventions. As such, all domains of clinical science are integral to the intervention development process, and the vision we propose in this article represents a comprehensive conceptual framework for the intervention development process and for training the next generation of clinical scientists.

The framework described in the current article delineates specific stages of intervention development research and is inspired by and consistent with calls to action to identify mechanisms of change as a way of improving interventions (e.g., Borkovec & Castonguay, 1998; Hayes, Long, Levin, & Follette, in press; Kazdin, 2001; Kazdin & Nock, 2003; Rounsaville, Carroll, & Onken, 2001a). This framework inextricably links basic and applied clinical science (Stokes, 1997) and sharpens the distinction between implementation science that is focused on service delivery system research as opposed to intervention generation, testing, and refinement research, while recognizing that both share the goal of getting interventions into the service delivery system. Furthermore, the model presented in this article defines the intervention development process as incomplete until an intervention is optimally efficacious and implementable with fidelity by practitioners in the community. We contend that a reinvigorated stage model unites diverse and fragmented fields of clinical psychological science and can serve as an engine for the production of highly potent and implementable treatment and prevention interventions. We further contend that this framework can do this in such a way as to enrich every aspect of clinical science and the training of clinical scientists.

Where Has Clinical Science Succeeded?

The major accomplishments of psychological clinical science are well documented. Perhaps most remarkable is the development of efficacious behavioral treatments in the past half century. For many of the most severe behavioral health problems, there are efficacious treatments where there once were none. Mid-20th-century reviews of the child treatment literature by Levitt (1957, 1963) and the adult treatment literature by Eysenck (1952) were critical regarding the efficacy of psychotherapy. Levitt (1963) confirmed his 1957 conclusion that “available evaluation studies do not furnish a reasonable basis for the hypothesis that psychotherapy facilitates recovery from emotional illness in children” (p. 49), and Eysenck (1952) stated that the adult studies “fail to support the hypothesis that psychotherapy facilitates recovery from neurotic disorder” (p. 662). As recently as four decades ago, even after the articulation of behavioral therapies for anxiety disorders, Agras, Chapin, and Oliveau (1972) reported that untreated adults suffering from phobia improve at the same rate as treated ones, and Kringlen (1970) reported that most typical obsessional patients “have a miserable life” (p. 418). Today, anxiety disorders are considered among the most treatable disorders, with behavioral interventions serving as the gold standard, outperforming psychopharmacology (Arch & Craske, 2009; McHugh, Smits, & Otto, 2009; McLean & Foa, 2011; Roshanaei-Moghaddam et al., 2011). Efficacious behavioral interventions developed by clinical scientists include treatments for cocaine addiction, autism, schizophrenia, conduct disorder, and many others (e.g., Baker, McFall, & Shoham, 2008; Chambless & Ollendick, 2001; DeRubeis & Crits-Christoph, 1998; Kendall, 1998).

These advances in developing behavioral treatments are especially impressive given that each of these treatments attempts to address seemingly discrete, albeit often overlapping diagnostic categories (Hyman, 2010). In spite of obstacles created by a diagnostic system in which categories based on clinical consensus exhibit considerable heterogeneity (British Psychological Society, 2011; Frances, 2009a, 2009b), our understanding of the basic processes of psychopathology that should be addressed in targeted treatments has substantially increased (T. A. Brown & Barlow, 2009; Cuthbert & Insel, 2013; Insel, 2012). With this better understanding of mechanisms of disorders, the promise for a new generation of more efficient and implementable interventions is improved.

We now have solid basic behavioral science and neuroscience, good psychopathology research, innovative intervention development, numerous clinical trials producing efficacious treatment and prevention interventions, an extensive set of effectiveness trials aimed at confirming the value of these interventions, and a robust research effort in implementation science. Many clinical psychology training programs include the teaching of empirically supported treatments or at least engage in debating their value (Baker et al., 2008; Follette & Beitz, 2003). All these notable accomplishments raise a question: What is broken that needs fixing?

What Are the Problems and Who Should Fix Them?

Although efficacious behavioral treatments for many mental disorders exist, patients who seek treatment in community settings rarely receive them (Institute of Medicine, 2006). Several factors converge to create this widely acknowledged science-to-service gap, or what Weisz et al. (Weisz, Jensen-Doss, & Hawley, 2006; Weisz, Ng, & Bearman, 2014) call the implementation cliff. For one, we can trace the implementation cliff back to the effect size drop evident in many effectiveness trials (Curtis, Ronan, & Borduin, 2004; Henggeler, 2004; Miller, 2005; Weisz, Weiss, & Donenberg, 1992). There is a clear disconnect between efficacy research that values internal validity and effectiveness research that prioritizes external validity at the expense of internal validity. Despite this gap, many investigators still move directly from traditional efficacy studies (with research therapists) to effectiveness studies, without first conducting an efficacy (i.e., controlled) study with community therapists to ensure that the intervention being studied is implementable with fidelity when administered by community practitioners. This strategy is particularly puzzling in light of the fact that so many efficacious behavioral interventions do not make their way down the pipeline through implementation (Carroll & Rounsaville, 2003, 2007; Craske, Roy-Byrne, et al., 2009).

A major factor that can explain this drop in effect size is treatment fidelity (also known as treatment integrity), which refers to the implementation of an intervention in a manner consistent with principles outlined in an established manual (Henggeler, 2011; Perepletchikova, Treat, & Kazdin, 2007). To wit, only a small fraction of clinicians who routinely provide interventions such as cognitive behavioral therapy (CBT) are able to do so with adequate fidelity (Beidas & Kendall, 2010; Olmstead, Abraham, Martino, & Roman, 2012). For instance, in one study CBT concepts were mentioned in fewer than 5% of sessions based on direct observation (Santa Ana, Martino, Ball, Nich, & Carroll, 2008). This may reflect the insufficiency of commonly used training and dissemination methods such as workshops and lectures, which by themselves effect little substantive change in clinician behavior (Miller, Yahne, Moyers, Martinez, & Pirritano, 2004; Sholomskas et al., 2005). Furthermore, even documented acquisition of fidelity skills under close supervision does not guarantee continued, postsupervision fidelity maintenance. Direct supervision, via review of clinicians’ levels of fidelity and skill in delivering evidence-based practice, is rarely provided in community-based settings and is also not reimbursed or otherwise incentivized (Olmstead et al., 2012; Schoenwald, Mehta, Frazier, & Shernoff, 2013).

Moreover, community providers’ motivation and comfort level with empirically supported treatments are lower than those of research therapists (Stewart, Chambless, & Baron, 2012). For example, in a study of clinical psychologists’ use of exposure therapy for posttraumatic stress disorder, only 17% of therapists reported using the evidence-based treatment, and 72% reported a lack of comfort with exposure therapy (Becker, Zayfert, & Anderson, 2004). Research therapists tend to be committed to the therapy they are administering and to the research process, and they are directly incentivized to implement treatments with skill and fidelity. There is no similar incentive system for community therapists.

These and other barriers to implementation contribute to a mounting sentiment that business as usual must change (Institute of Medicine, 2006). Authors such as B. S. Brown and Flynn (2002) have exclaimed that clinical science can and should do much more to implement efficacious treatment and prevention interventions (see also Glasgow, Lichtenstein, & Marcus, 2003; Hoagwood, Olin, & Cleek, 2013). Some even suggested a 10-year moratorium on efficacy trials (Kessler & Glasgow, 2011).

On the other side of the translation-implementation continuum (Shoham et al., 2014), we are missing a tighter link between basic and applied clinical science (Onken & Blaine, 1997; Onken & Bootzin, 1998). Despite substantial advances in the understanding of neurobiological, behavioral, and psychological mechanisms of disorders, these understandings are not sufficiently linked to mechanisms of action in intervention development research (Kazdin, 2007; Murphy, Cooper, Hollon, & Fairburn, 2009). In the absence of better understanding of how interventions work, efforts to adapt interventions (e.g., dose reduction), a common practice in community settings, may render the interventions devoid of their original efficacy.

A meta-level problem is that these problems fall between scientific cracks. Perhaps a reason why there has been such difficulty implementing empirically supported interventions is that no subgroup of clinical scientists has a defined role for ensuring implementability of interventions: Is it the responsibility of basic behavioral scientists to ensure that interventions get implemented? Surely that is not their job! Their mission is to understand basic normal and dysfunctional behavioral processes, not to directly develop interventions or ensure their implementability.

What about the researchers who generate, refine, and test interventions in efficacy trials? Would they say that it is their mission to develop and test the best interventions possible, but it is not their job to strive toward the implementability of those interventions? Would they argue that ensuring implementability is the responsibility of someone else, such as researchers who conduct effectiveness trials? If effectiveness is not sufficiently strong, could it not be that the problem lies within the way the intervention was delivered, or within the design of the effectiveness trial, not with the efficacious intervention?

Conversely, effectiveness researchers claim responsibility for real-world testing of interventions that have initial scientific support. If these empirically supported interventions are not viable for use in the real world, is not this the fault of the intervention developers? Should not the intervention developers produce interventions that can be sustained effectively in the real world?

Finally, consider the implementation scientists (e.g., Proctor et al., 2009). One can assume that implementation researchers must be responsible for implementation! These scientists are doing all they can to determine how to get interventions adopted and have identified a multitude of program- and system-level constraints and barriers to implementation (e.g., Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Hoagwood et al., 2013; Lehman, Simpson, Knight, & Flynn, 2011). When system-level barriers are addressed by community practitioners who adapt empirically supported interventions for the populations they serve, intervention developers assert that these adapted interventions are no longer the same interventions that were shown to have efficacy. As it turns out, nobody takes charge, and the cycle continues.

Possible Solutions

Changing the System

Suggestions to solve the science-practice gap by changing the service delivery system have encountered formidable barriers. The infrastructure of existing delivery systems may be too weak to provide the complex, albeit high-quality, empirically supported therapies practiced in efficacy studies (McLellan & Meyers, 2004). For example, implementation of such treatments may require fundamental changes in the training and ongoing supervision of community-based clinicians (Carroll & Rounsaville, 2007), smaller numbers of patients assigned to each clinician, and increased time allotted per patient. Another particularly unfortunate barrier is that empirically supported therapies are not always preferentially reimbursed, whereas some interventions that have been shown to be ineffective or worse (e.g., repeated inpatient detoxification without aftercare) continue to be reimbursed (Humphreys & McLellan, 2012). Any one of these systemic barriers could be difficult to change, and a synthesis of the literature suggests that successful implementation necessitates a sustained, multilevel approach (Damschroder et al., 2009; Fairburn & Wilson, 2013; Fixsen et al., 2005) in which multiple barriers are addressed simultaneously. In the meantime, we turn the spotlight to an alternative and complementary solution.

Changing the Interventions: Adapting Square Pegs to Fit Into Round Holes

Multiple unsuccessful attempts to change service delivery systems bring up the possibility that what needs to change is the intervention. Perhaps instead of forcing the square pegs of our evidence-based interventions into the round holes of the delivery system (Onken, 2011) we should consider making our interventions somewhat more round. If efficacy findings are to be replicated in effectiveness studies, perhaps it is time for clinical scientists to accept the responsibility of routinely and systematically creating and adapting interventions to the intervention delivery context as an integral part of the intervention development process. Knowing how to adapt an intervention so that the intervention retains its effects while at the same time fitting in the real world requires knowledge about mechanisms and conditions in relevant settings. This solution may require the participation of practitioners in a research team that is ready to ask hard questions regarding why the intervention works and how to preserve its effective ingredients while adapting the intervention to fit broader and more varied contexts (Chorpita & Viesselman, 2005; Lilienfeld et al., 2013).

Unfortunately, clinical scientists are not usually the conveyers of such modifications, nor do they always have the tools necessary to retain effective intervention ingredients while guiding adaptation efforts. Often, community practitioners modify the intervention, including those participating in effectiveness studies of science-based interventions (Stewart, Stirman, & Chambless, 2012). Such alterations typically involve delivering the intervention in far fewer sessions, in group versus individual format, and other shortcuts that could diminish potency. Changes to interventions are made with good intentions, often due to necessity (e.g., insurers’ demands), and they are frequently based on clinical intuition, clinical experience, or clinician or patient preferences, but not on science (Lilienfeld et al., 2013). Adapting the intervention in response to practical constraints is an inherently risky endeavor: The intervention may or may not retain the elements that make it work. Whether done by clinicians attempting to meet real-world demands or by scientists lacking evidence of the intervention’s mechanism of action, practical alteration of evidence-based interventions could very well diminish or eliminate the potency of the (no-longer-science-based) interventions. On the other hand, when clinical scientists uncover essential mechanisms of action, they may be able to package the intervention in a way that is highly implementable. For example, with an understanding of mechanisms, Otto et al. (2012) were able to create an ultra-brief treatment for panic disorder. Another example is the computerized attention modification program that directly targets cognitive biases operating in the most prevalent mood disorders (Amir & Taylor, 2012).

Redefining When Intervention Development Is Incomplete

Intervention development is incomplete until the intervention is maximally potent and implementable for the population for which it was developed. Intervention developers need to address issues of fit within a service delivery system, simplicity, fidelity and supervision, therapist training, and everything else that relates to implementability before the intervention development is considered complete. For example, intervention development is incomplete if community providers are expected to deliver the intervention, but there are no materials available that ensure that they administer the intervention with fidelity, or know the level of fidelity required to deliver the intervention effectively. Therefore, materials to ensure fidelity of intervention delivery (e.g., training and supervision materials) are an essential part of any intervention for which they are required and can be developed even after an intervention has proven efficacious in a research setting.

Efficacy Testing in Real-World Settings

As noted, complex interventions administered with a high degree of fidelity in research settings with research providers seem destined to weaken when implemented in community settings with community providers. There is a need for research between traditional efficacy and effectiveness research where an intervention is tested for efficacy in a community setting, with community providers, such as in the model described by Weisz, Ugueto, Cheron, and Herren (2013). For such testing to occur, the intervention will likely need to be further developed or “adapted” for community providers. The conceptual framework presented here includes a stage (Stage III) of research where a therapy is tested, with high internal validity, in a community setting with community practitioners, and where fidelity assurance is included as part of the treatment before the therapy is tested in high external validity effectiveness trials.

Identifying Mechanisms of Change

It is entirely possible to develop highly potent, implementable interventions without understanding mechanisms of change. But is it likely to be a highly successful or tenable strategy? Decades of research with little attention to change mechanisms have produced very few interventions that are efficacious, effective, and implementable. We argue that an understanding of change mechanisms is often critical for developing the most effective interventions that can be scaled up and trimmed down. First, understanding mechanisms can guide the enhancement and simplification of interventions (Kazdin & Nock, 2003). If we understand how and why an intervention is working, we are better positioned to emphasize what is potent. Simplification of interventions could help with training, cost, and transportability. Understanding mechanisms could help to clarify scientifically based principles of interventions, and imparting these principles may help practitioners deliver interventions with a higher level of fidelity than requiring a strict adherence to intervention manuals. Knowing principles could also help clarify when it is important to intervene in one particular way, or to intervene in any number of ways, as long as the intervention is faithful to the mechanism of action. As Kazdin (2008) pointed out, “There are many reasons to study mechanisms, but one in particular will help clinical work and patient care” (p. 152).

There have been numerous calls for research on mechanisms of change. Indeed, one recent announcement, “Use-Oriented Basic Research: Change Mechanisms of Behavioral Social Interventions” (http://grants.nih.gov/grants/guide/pa-files/PA-12-119.html), called for research to study putative mechanisms of action of behavioral interventions, with the ultimate goal of simplifying and creating more implementable interventions. The idea of use-oriented basic research was inspired by Louis Pasteur, who saw little differentiation between the ultimate goals of basic and applied science and who viewed all science as essential to achieve practical goals (Stokes, 1997). A similar perspective with regard to psychotherapy research was described by Borkovec and Miranda (1999). Understanding mechanism of action and the principles behind an intervention may involve basic science expertise, but can have pragmatic effects, such as helping to (a) strengthen the effects of interventions; (b) pare down interventions to what is essential, which can make the intervention more implementable and cut costs; and (c) simplify interventions for easier transportability, such as computerizing parts of the intervention (e.g., Bickel, Marsch, Buchhalter, & Badger, 2008; Carroll et al., 2008; Carroll & Rounsaville, 2010; Craske, Rose, et al., 2009).

Despite these frequent calls for studying change mechanisms in intervention development research, many clinical scientists are not yet taking this approach. What is new about the emphasis on mechanism propounded here? We suggest that a major reason for the relative lack of attention to mechanisms in prior research may be an unfortunate consequence of largely disconnected areas of clinical science. Questions regarding mechanisms of disorders and mechanisms of change are conceptually similar to questions asked within basic behavioral science. Hence, basic science questions and relevant research paradigms should not be limited to research that occurs prior to intervention development; they are integral to the entire behavioral intervention development process. We suggest that the development of potent and implementable behavioral interventions could be revolutionized if the field were unified around fundamental mechanisms of behavior change and if all types of clinical scientists were trained to address mechanism of action of behavioral interventions at every stage of the intervention development process.

Could a new vision for clinical science help bridge the science-practice gap? To that end we propose a conceptual framework for intervention development that (a) defines intervention development as incomplete if the intervention is not implementable and (b) propels all clinical science to examine mechanisms of behavior change. This framework inherently integrates all components of clinical science—from basic to applied.

NIH Stage Model

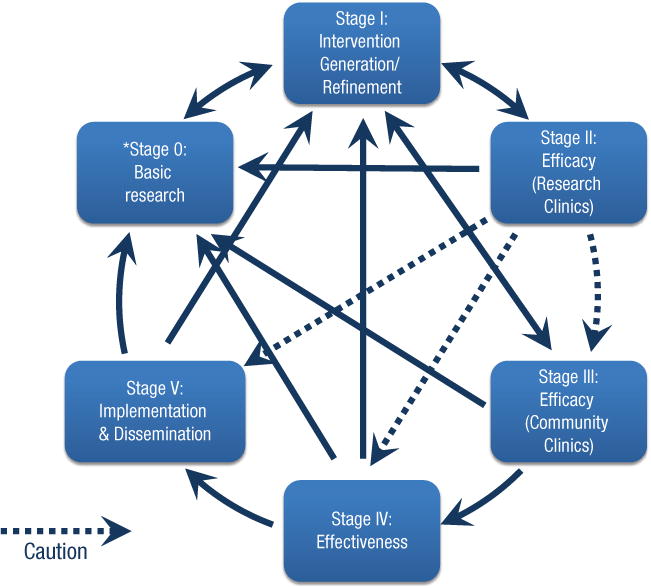

Various conceptualizations of research on intervention development share the notion of phases or stages of intervention development.2 Many stress the importance of translational research. Most intervention development models generally agree that efficacy and effectiveness research vary along a continuum, from maximizing internal validity to maximizing generalizability. However, not all models explicitly highlight the same stages or designate the same “numbering” system for the stages of research. Models also differ in terms of the relevance of basic research to intervention development and, if relevant, where to place it. In addition, they differ in terms of how much focus is given to implementability, and how to achieve implementable interventions. The conceptual framework of intervention development described in this article is an update of the model described by Onken, Blaine, and Battjes (1997) and Rounsaville, Carroll, and Onken (2001a, 2001b). A visual representation of this model can be viewed in Figure 1.

Fig. 1. NIH stage model: common and cautionary pathways.

Note: Dotted arrow indicates the importance of using caution when considering this pathway.

The updated model, a “stage model,” asserts that the work is not complete until an intervention reaches its highest level of potency and is implementable with the maximum number of people in the population for which it was developed. One can begin at any stage, and go back and forth as necessary to achieve this goal; the model is not prescriptive, nor is it linear. That is, there is no requirement that research be done in any particular order, other than one that is logical and scientifically justifiable. This model is an iterative, recursive model of behavioral intervention development. The focus of this model is on adapting interventions (square pegs) not only until they are simply maximally potent, but also until they can most easily fit into the service delivery system (round hole). The model includes a basic science component, as something that can be done separately (e.g., prior to intervention development, or after intervention development to clarify mechanism), but also something that can be done within the context of every other stage of intervention development, to ascertain information about mechanism during the intervention development process. The efficacy/effectiveness gap is addressed, with a stage that involves efficacy trials, with an emphasis on internal validity, but conducted in community settings with community practitioners (Stage III).

Why is it important to have stages of research and a conceptual framework if it is not prescriptive? There are many reasons, but one that stands out is that defining stages of behavioral intervention development highlights the value and frequent necessity of a particular type of research endeavor. For example, defining treatment generation and refinement helped to legitimize this necessary part of behavioral treatment research. Moreover, defining a stage in between efficacy and effectiveness, where interventions can be tested with high internal validity, with community therapists in community settings, draws attention to the importance of this stage of intervention development, and also provides more clarity when the words efficacy and effectiveness are used. Finally, defining the stages creates a common language through which behavioral intervention development can be discussed across clinical, academic, and research settings. This common language facilitates research grant proposal writing and review, ultimately leading to a more coherent, efficient, and progressive science. So even though the stages of behavioral intervention development do not always, or even usually, occur in a prescribed order, and even though it is not always, or even usually, necessary to progress through every stage, defining the stages of intervention development research facilitates communication and helps clarify research activities and necessary next steps.

Stage 0

Basic science, considered to be Stage 0, plays a major role in intervention development. Stage 0 can precede and provide the basis for the generation of a new intervention, or the modification of an existing one. Basic science could include any basic behavioral, cognitive, affective, or social science or neuroscience that is being conducted with the ultimate goal of informing behavioral intervention development.

Basic science can also be incorporated into all other stages of intervention development. Specifically, mechanism of action, mediator, and moderator research is an integral part of Stage I, Stage II, Stage III, and Stage IV research. Asking questions about mechanisms of change means asking basic behavioral, cognitive, social science, or biological questions about how an intervention produces its effects, and for whom the intervention works best. As discussed earlier, we believe that understanding the basic principles of behavior change for an intervention will fuel every stage of intervention development and will facilitate the creation of ever more potent and implementable interventions.

Stage I

Stage I encompasses all activities related to the creation of a new intervention, or the modification, adaptation, or refinement of an existing intervention (Stage IA), as well as feasibility and pilot testing (Stage IB). Stage I research can be conducted to develop, modify, refine, adapt, or pilot test (a) behavioral treatment interventions, (b) behavioral prevention interventions, (c) medication adherence interventions, and (d) components of behavioral interventions. In addition, Stage I research includes the development, modification, refinement, adaptation, and pilot testing of therapist/provider training, supervision, and fidelity-enhancing interventions (considered integral to all interventions), and interventions to ensure maintenance of the fidelity of intervention delivery (also considered integral to all).

Stage I research can be conducted in research settings with research therapists/providers, as well as in “real-world” or community settings with community therapists/providers. Usually a goal of a Stage I project is to provide necessary materials and information to proceed to a Stage II or Stage III project. An equally important goal is to obtain scientific knowledge of the processes that lead to behavior change (i.e., behavioral, cognitive, social, or biological mechanism of behavior change). That is, Stage I goals encompass obtaining basic science information about how the intervention might be exerting its effects (Rounsaville et al., 2001a).

Stage I is an iterative process that involves multiple activities. Stage IA includes (a) identifying promising basic or clinical scientific findings relevant to the development or refinement of an intervention; (b) generating/formulating theories relevant to intervention development; (c) operationally defining and standardizing new or modified principle-driven interventions; and (d) as necessary, further refining, modifying, or adapting an intervention to boost effects or for ease of implementation in real-world settings. Initial or pilot testing of the intervention is considered to be Stage IB. Stage I involves testing the theory on which the intervention is based to understand the mechanisms and principles of behavior change.

It cannot be stressed enough that therapist/provider training and fidelity assessment and enhancement methods are an integral part of behavioral intervention development. Intervention development is incomplete if there are no materials and methods for administering that intervention. Research on the development, modification, and pilot testing of training and fidelity intervention/procedures for community providers is considered to be Stage I, whether conducted prior to taking the intervention to an efficacy study or after an intervention has proven efficacious.

Stage II

Stage II research consists of testing promising behavioral interventions in research settings, with research therapists/providers. Stage II does not specify a particular research design. Testing of interventions may be done in randomized clinical trials, but may also be conducted using other methodologies (e.g., adaptive designs, multiple-baseline single-case designs, A-B-A designs). Stage II also involves basic science (Stage 0), in that Stage II involves examining mechanisms of behavior change. Indeed, a Stage II study can provide an ideal opportunity to experimentally manipulate and test mechanisms. Stage II studies may include examination of intervention components, dose response, and theory-derived moderators.

After conducting a Stage I study, proceeding to Stage II (or Stage III in the case of an intervention developed in a community setting) presumes that promising pilot data on feasibility and outcomes exist. If sufficiently strong evidence of promise does not exist, but there is a good rationale for additional modification of the intervention, such modification can be done in a Stage I study. Information obtained from Stage II studies may be used to inform future Stage I studies. For example, if it is shown that an intervention works for some people, but not for others, a Stage II study may lay the groundwork for a Stage I proposal aimed at generating an intervention (or modifying the intervention) for people who were unresponsive to the initial intervention.

Stage III

Why should there be an expectation that an intervention showing efficacy will also show effectiveness? Complex treatments administered with a high degree of fidelity in research settings with research therapists seem destined to weaken when implemented in community settings with community providers. It is not reasonable to expect that a positive efficacy study will automatically or even usually lead to a positive effectiveness study (Flay, 1986). Before proceeding to effectiveness testing, it may be practical and logical to test that intervention for efficacy in a community setting, and a need has been identified for research between traditional efficacy and effectiveness research (Carroll & Rounsaville, 2007; Weisz et al., 2013). Of course, prior to this testing the intervention will likely need to be further developed or adapted (i.e., in Stage I) for community providers. This new vision of intervention development includes an additional stage, Stage III, of research where an intervention is tested in a well-controlled, internally valid study in a community setting with community therapists/providers, and where community-friendly fidelity monitoring and enhancement procedures are included as part of the intervention before testing in an externally valid effectiveness trial (see Henggeler, 2011).

Stage III research is unique to the current revision of the model. Stage III is similar to Stage II research, except that instead of research providers and settings, it consists of testing in a community context while maintaining the high level of control necessary to establish internal validity (Carroll & Rounsaville, 2003). As is the case in all of the stages, examination of the mechanism of action of interventions is considered integral. Like Stage II, Stage III does not specify a particular research design. Testing of interventions may be done in randomized clinical trials, but may also be conducted using other methodologies (e.g., adaptive designs, multiple-baseline single-case designs, A-B-A designs). Stage III studies may include examination of intervention components, dose response, and theory-derived moderators.

Proceeding to Stage III requires Stage I research to be promising. In addition, Stage III research demands that the intervention generated in the Stage I research is an intervention believed to be implementable or “community-friendly.” This could mean that the intervention was pilot tested in a community setting with community therapists/providers, and should mean that fidelity and therapist training measures have been handled and/or incorporated into the intervention.

Proceeding directly from Stage II to Stage III is not a typical path within the stage model. Generally, when Stage II (rather than Stage III) is proposed, it is because the intervention is not ready to be tested in the community: If the intervention were ready to be tested in the community, logic dictates that Stage III would have been proposed. If positive results have been obtained from a Stage II study, usually additional community-friendly Stage I work is needed to make the intervention ready for a Stage III trial. An exception might be a highly computerized intervention that requires little attention to provider training or fidelity. As is the case for Stage II, information obtained from Stage III studies may be used to inform future Stage I studies. For example, if it is shown in Stage III that an intervention works for some people, but not for others, a Stage III study may lay the groundwork for a Stage I proposal aimed at developing an intervention (or modifying the intervention) for people who were unresponsive to the initial intervention.

Stage IV

Stage IV is effectiveness research. Stage IV research examines behavioral interventions in community settings, with community therapists/providers, while maximizing external validity. It is generally considered necessary to show that an intervention is effective before proceeding to implementation and dissemination in the real world. As is the case in all of the stages, examination of the moderators and the mechanism of action of interventions and/or training procedures is considered to be an integral part of Stage IV. It may be more complex to incorporate Stage 0 (basic science) into Stage IV than into Stages I to III, but there may be methods for doing so (Collins, Dziak, & Li, 2009; Doss & Atkins, 2006).

Skipping Stage III and proceeding from Stage II to Stage IV is not a typical path within the stage model. Moving to an effectiveness study from Stage II, without validated measures to train community providers and ensure fidelity, increases the likelihood of a failed effectiveness trial. A successful Stage III trial shows that it is possible to deliver an intervention faithfully in the community, and that the intervention retains efficacy. Stage III is meant to maximize the chances of a successful Stage IV study.

Stage V

Stage V is implementation and dissemination research. Implementation concerns methods of adopting scientifically supported interventions and incorporating them into community settings. Dissemination refers to the distribution of information and materials about these interventions to relevant groups. Generally speaking, dissemination and implementation (D&I) research focuses at least as much on the service delivery system as on the intervention itself, if not more. Although relevant to intervention development, not all D&I research looks at the intervention per se, except as something to be adopted by the system. This does not imply, however, that interventions at this stage are exempt from the desiderata regarding mechanism that are discussed in previous sections: It is important to understand how implementation strategies work.

What About Training of Clinical Scientists?

The thrust of our arguments for a new vision and long-term change culminates in training. Realistically, the large majority of clinical researchers, sympathetic as they may be to this sort of integrative vision, were not trained in this kind of outlook and are far too ensconced in the intense pressure of contemporary clinical research to change rapidly. Moreover, clinical science training now produces excellent basic scientists, intervention generators and testers, effectiveness researchers, and implementation scientists. What we need to do is produce excellent scientists of all these sorts, but also train them to see where they fit as the science moves into practice, and how they can contribute to it. They need to be trained to understand the entire process of intervention development (Stages 0 through V), not just one particular aspect of it. In a sense, they need multi-subdisciplinary training. Basic scientists need to continue to be trained to do great basic science, but they also need to know how to conduct such science within applied intervention development studies. Efficacy researchers and researchers who generate and refine interventions need to understand the importance of determining mechanisms of behavior change, and also need to continue their efforts to strive to make an intervention more implementable, beyond the point of establishing efficacy. Effectiveness researchers need to know how to conduct great effectiveness studies, but they need to know from efficacy researchers when interventions are ready (not until after Stage III—usually), and they need to know what kind of methodologies can get them the most information about mechanisms and mediators. Researchers from the most basic realm of clinical science to the most applied realm of intervention development not only can help each other, but also can help themselves by opening up new avenues of research pursuits, and asking and answering new research questions—all while fostering the public health.

Such training is substantive and not trivial. Therefore, hard work will be needed to determine what sort of curricula can be developed that provide students with this necessary background without detracting unnecessarily from their core research training. Just as many clinical science programs have considered alternative accreditation systems to avert onerous requirements for class work and clinical hours that do not fit the needs of their students, clinical science programs need to avoid simply substituting another set of overly time-consuming requirements. However, the need for students to acquire a more integrative vision of the entire field, to see themselves as part of it, and to understand how to interact with scientists in other parts of the clinical science system is critical for clinical science to flourish in the long term, and to achieve its rich potential for exerting a positive impact on public health.

Clinical scientists need to be trained to understand that it is everyone’s job to produce potent, well-understood, efficacious, effective, and implementable interventions. They all have a role to play. The stage model is intended to underscore the interconnectedness of the multiple domains of clinical science, to bring an awareness of the importance of mechanisms, to define essential aspects of intervention development, to promote the use of a common language, and to underscore the importance of fully developing potent interventions all the way through to implementation. Training students to use the stage model as a heuristic can help to foster all of these goals.

Concluding Remarks

A new vision for clinical science is, in a sense, equivalent to a new vision for intervention development, in that the ultimate goal of both is interventions that promote the physical and mental health of individuals. Although the ultimate goal may be applied, basic science is an integral part of that goal because basic and all types of applied clinical scientists are necessary to fully achieve it. For the vision to be realized, clinical science students need to be taught how to integrate the subdisciplines of basic behavioral science, efficacy and effectiveness research, and implementation science.

In a world in which it is becoming increasingly the norm to conduct multidisciplinary translational team science, and where quite frequently students are already being trained in more than one discipline, is it reasonable to ask them to achieve fluency in the subdisciplines of clinical science? Basic science divorced from applied science can yield fascinating findings, but if basic science can inform and be informed by applied clinical science, the field can expand and can help solve public health problems, and be noticed for doing so. Efficacy and effectiveness research divorced from the science of how and why interventions work can yield interventions that work, and in some cases that can be good enough. On the other hand, without an understanding of mechanisms, endless questions can arise when clinical trials fail (e.g., questions about appropriateness of design, delivery, fidelity, population, providers, etc.) and even when they succeed (Does the intervention need to be given exactly as is? Will the intervention work if partially administered or if delivered in another form? Does the intervention require adaptation for another population or another setting? etc.). Questions will still arise if the field is integrated, but the science will be better positioned to coherently progress. Given the likely benefits, it seems unreasonable not to ask them to achieve fluency in the subdisciplines of clinical science.

What steps can be taken to integrate these subdisciplines? Perhaps students need not be trained as experts in more than one subdiscipline; perhaps it is enough that they be trained to contribute in a meaningful way to other subdisciplines, and to understand how other subdisciplines can contribute to their own. For example, scientists who conduct basic research on psychopathology need not be trained to conduct clinical trials, but need to know enough about clinical trials to know how to design studies that can inform these trials, and substudies that can be included with those clinical trials, such as substudies on mechanisms of change. This benefits the psychopathology researcher by opening up new avenues to test theories about behavior and behavior change, and it benefits clinical trials researchers by giving them insight into the reasons why their interventions work, or do not work. Conversely, clinical trials researchers might not need to be trained as experts in basic behavioral science, but they can be trained to understand how to appreciate and include the right basic behavioral science and scientists to help them develop more potent and more implementable interventions.

What else needs to be done? The vision presented here demands that clinical scientists understand and train their students to understand that intervention development is far from complete when an intervention shows efficacy in Stage II. They need to expect that successful research setting–based efficacy trials are not likely to directly result in successful effectiveness trials, and should understand the frequent necessity of further developing an intervention (e.g., in Stages I and III) toward implementability. If an intervention is not implementable in the community, there is more work to be done—even if that work ends up showing that there is no conceivable way currently to change the intervention into a more implementable form.

Finally, students need to be trained to understand the importance of using a common language. A common language fosters a progressive discipline, creating the groundwork to solve public health problems. With a common language, the (oftentimes) missing link of Stage III defined, and a mind-set that includes an appreciation of basic science and implementability within intervention development, we expect to replace the merry-go-round of efficacy and effectiveness trials that go nowhere with a map that leads toward implementation of effective interventions.

We hope that this new vision—anchored in a stage model of intervention development—not only will bring the field together in the pursuit of a common goal, but also will foster the successful realization of that goal. Emphasizing that interventions are not fully developed until they are implementable and underscoring the importance of research on change mechanisms have been two pillars of this vision. Training the next generation of clinical scientists to embrace this new vision could mean a new generation of powerful and implementable interventions for the public health.

Acknowledgments

Funding

K. M. Carroll was supported by NIDA awards P50-DA09241, R01 DA015969, and U10 DA015831 (Carroll, PI). The other authors are federal employees who did not receive federal or foundation grants to support this work.

Footnotes

Author Contributions

The order of authorship reflects the authors’ relative contribution.

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

1. As defined by the Academy of Psychological Clinical Science, clinical science is “a psychological science directed at the promotion of adaptive functioning; at the assessment, understanding, amelioration, and prevention of human problems in behavior, affect, cognition or health; and at the application of knowledge in ways consistent with scientific evidence” (http://acadpsychclinicalscience.org/mission/).

2. We are using stages for a model of psychosocial intervention development to differentiate it from biomedical treatments, where phases are defined for medication development by the FDA (see http://www.fda.gov/drugs/resourcesforyou/consumers/ucm143534.htm).

References

- Agras WS, Chapin HN, Oliveau DC. The natural history of phobia. Course and prognosis. Archives of General Psychiatry. 1972;26:315–317. doi: 10.1001/archpsyc.1972.01750220025004.

- Amir N, Taylor CT. Combining computerized home-based treatments for generalized anxiety disorder: An attention modification program and cognitive behavioral therapy. Behavior Therapy. 2012;43:546–559. doi: 10.1016/j.beth.2010.12.008.

- Arch JJ, Craske MG. First-line treatment: A critical appraisal of cognitive behavioral therapy developments and alternatives. Psychiatric Clinics of North America. 2009;32:525–547. doi: 10.1016/j.psc.2009.05.001.

- Baker TB, McFall RM, Shoham V. Current status and future prospects of clinical psychology: Toward a scientifically principled approach to mental and behavioral health care. Psychological Science in the Public Interest. 2008;9:67–103. doi: 10.1111/j.1539-6053.2009.01036.x.

- Becker CB, Zayfert C, Anderson E. A survey of psychologists’ attitudes towards and utilization of exposure therapy for PTSD. Behaviour Research and Therapy. 2004;42:277–292. doi: 10.1016/S0005-7967(03)00138-4.

- Beidas RS, Kendall PC. Training therapists in evidence based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice. 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- Bickel WK, Marsch LA, Buchhalter AR, Badger GJ. Computerized behavior therapy for opioid-dependent outpatients: A randomized controlled trial. Experimental and Clinical Psychopharmacology. 2008;16:132–143. doi: 10.1037/1064-1297.16.2.132.

- Borkovec TD, Castonguay LG. What is the scientific meaning of empirically supported therapy? Journal of Consulting and Clinical Psychology. 1998;66:136–142. doi: 10.1037//0022-006x.66.1.136.

- Borkovec TD, Miranda J. Between-group psychotherapy outcome research and basic science. Journal of Clinical Psychology. 1999;55:147–158. doi: 10.1002/(sici)1097-4679(199902)55:2<147::aid-jclp2>3.0.co;2-v.

- British Psychological Society. Response to the American Psychiatric Association DSM-V development. 2011. Retrieved from http://apps.bps.org.uk/_publicationfiles/consultation-responses/DSM-5%202011%20-%20BPS%20response.pdf.

- Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. Journal of Substance Abuse Treatment. 2002;22:245–257. doi: 10.1016/s0740-5472(02)00228-3.

- Brown TA, Barlow DH. A proposal for a dimensional classification system based on the shared features of the DSM-IV anxiety and mood disorders: Implications for assessment and treatment. Psychological Assessment. 2009;21:256–271. doi: 10.1037/a0016608.

- Carroll KM, Ball SA, Martino S, Nich C, Babuscio TA, Nuro KF, Rounsaville BJ. Computer-assisted delivery of cognitive-behavioral therapy for addiction: A randomized trial of CBT4CBT. American Journal of Psychiatry. 2008;165:881–888. doi: 10.1176/appi.ajp.2008.07111835.

- Carroll KM, Rounsaville BJ. Bridging the gap: A hybrid model to link efficacy and effectiveness research in substance abuse treatment. Psychiatric Services. 2003;54:333–339. doi: 10.1176/appi.ps.54.3.333.

- Carroll KM, Rounsaville BJ. A vision of the next generation of behavioral therapies research in the addictions. Addiction. 2007;102:850–869. doi: 10.1111/j.1360-0443.2007.01798.x.

- Carroll KM, Rounsaville BJ. Computer-assisted therapy in psychiatry: Be brave, it’s a new world. Current Psychiatry Reports. 2010;12:426–432. doi: 10.1007/s11920-010-0146-2.

- Chambless DL, Ollendick TH. Empirically supported psychological interventions: Controversies and evidence. Annual Review of Psychology. 2001;52:685–716. doi: 10.1146/annurev.psych.52.1.685.

- Chorpita BF, Viesselman JO. Staying in the clinical ballpark while running the evidence bases. Journal of the American Academy of Child and Adolescent Psychiatry. 2005;44:1193–1197. doi: 10.1097/01.chi.0000177058.26408.d3.

- Collins LM, Dziak JJ, Li R. Design of experiments with multiple independent variables: A resource management perspective on complete and reduced factorial designs. Psychological Methods. 2009;14:202–224. doi: 10.1037/a0015826.

- Craske MG, Rose RD, Lang A, Welch SS, Campbell-Sills L, Sullivan G, Roy-Byrne PP. Computer-assisted delivery of cognitive behavioral therapy for anxiety disorders in primary-care settings. Depression and Anxiety. 2009;26:235–242. doi: 10.1002/da.20542.

- Craske MG, Roy-Byrne PP, Stein MB, Sullivan G, Sherbourne C, Bystritsky A. Treatment for anxiety disorders: Efficacy to effectiveness to implementation. Behaviour Research and Therapy. 2009;47:931–937. doi: 10.1016/j.brat.2009.07.012.

- Curtis NM, Ronan KR, Borduin CM. Multisystemic treatment: A meta-analysis of outcome studies. Journal of Family Psychology. 2004;18:411–419. doi: 10.1037/0893-3200.18.3.411.

- Cuthbert BN, Insel TR. Toward the future of psychiatric diagnosis: The seven pillars of RDoC. BMC Medicine. 2013;11:126. doi: 10.1186/1741-7015-11-126.

- Damschroder LJ, Aron DC, Keith RE, Kirsch SR, Alexander JA, Lowery JC. Fostering implementation of health service research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- DeRubeis RJ, Crits-Christoph P. Empirically supported individual and group psychological treatments for adult mental disorders. Journal of Consulting and Clinical Psychology. 1998;66:37–52. doi: 10.1037//0022-006x.66.1.37.

- Doss BD, Atkins DC. Investigating treatment mediators when simple random assignment to a control group is not possible. Clinical Psychology: Science and Practice. 2006;13:321–336.

- Eysenck HJ. The effects of psychotherapy: An evaluation. Journal of Consulting Psychology. 1952;16:659–663. doi: 10.1037/h0063633.

- Fairburn CG, Wilson GT. The dissemination and implementation of psychological treatments: Problems and solutions. International Journal of Eating Disorders. 2013;46:516–521. doi: 10.1002/eat.22110.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network; 2005. (FMHI Pub. No. 231).

- Flay BR. Efficacy and effectiveness trials (and other phases of research) in the development of health promotion programs. Preventive Medicine. 1986;15:451–474. doi: 10.1016/0091-7435(86)90024-1.

- Follette WC, Beitz K. Adding a more rigorous scientific agenda to the empirically supported treatment movement. Behavior Modification. 2003;27:369–386. doi: 10.1177/0145445503027003006.

- Frances A. Issues for DSM-V: The limitations of field trials: A lesson from DSM-IV. American Journal of Psychiatry. 2009a;166:1322. doi: 10.1176/appi.ajp.2009.09071007.

- Frances A. Whither DSM-V? British Journal of Psychiatry. 2009b;195:391–392. doi: 10.1192/bjp.bp.109.073932.

- Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health. 2003;93:1261–1267. doi: 10.2105/ajph.93.8.1261.

- Hayes SC, Long DM, Levin ME, Follette WC. Treatment development: Can we find a better way? Clinical Psychology Review. doi: 10.1016/j.cpr.2012.09.009. (in press)

- Henggeler SW. Decreasing effect sizes for effectiveness studies—Implications for the transport of evidence-based treatments: Comment on Curtis, Ronan, and Borduin (2004). Journal of Family Psychology. 2004;18:420–423. doi: 10.1037/0893-3200.18.3.420.

- Henggeler SW. Efficacy studies to large-scale transport: The development and validation of multisystemic therapy programs. Annual Review of Clinical Psychology. 2011;7:351–381. doi: 10.1146/annurev-clinpsy-032210-104615.

- Hoagwood K, Olin S, Cleek A. Beyond context to the skyline: Thinking in 3D. Administration and Policy in Mental Health. 2013;40:23–28. doi: 10.1007/s10488-012-0451-7.

- Humphreys K, McLellan AT. A policy-oriented review of strategies for improving the outcomes of services for substance use disorder patients. Addiction. 2012;106:2058–2066. doi: 10.1111/j.1360-0443.2011.03464.x.

- Hyman SE. The diagnosis of mental disorders: The problem of reification. Annual Review of Clinical Psychology. 2010;6:155–179. doi: 10.1146/annurev.clinpsy.3.022806.091532.

- Insel TR. Next-generation treatments for mental disorders. Science Translational Medicine. 2012;4:155ps19. doi: 10.1126/scitranslmed.3004873.

- Institute of Medicine. Improving the quality of health care for mental and substance-use conditions: Quality chasm series. Washington, DC: National Academies Press; 2006.

- Kazdin AE. Progression of therapy research and clinical application of treatment require better understanding of the change process. Clinical Psychology: Science and Practice. 2001;8:143–151.

- Kazdin AE. Mediators and mechanisms of change in psychotherapy research. Annual Review of Clinical Psychology. 2007;3:1–27. doi: 10.1146/annurev.clinpsy.3.022806.091432.

- Kazdin AE. Evidence-based treatment and practice: New opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. American Psychologist. 2008;63:146–159. doi: 10.1037/0003-066X.63.3.146.

- Kazdin AE, Nock MK. Delineating mechanisms of change in child and adolescent therapy: Methodological issues and research recommendations. Journal of Child Psychology and Psychiatry. 2003;43:1116–1129. doi: 10.1111/1469-7610.00195.

- Kendall PC. Empirically supported psychological therapies. Journal of Consulting and Clinical Psychology. 1998;66:3–6. doi: 10.1037//0022-006x.66.1.3.

- Kessler R, Glasgow RE. A proposal to speed translation of healthcare research into practice: Dramatic change is needed. American Journal of Preventive Medicine. 2011;40:637–644. doi: 10.1016/j.amepre.2011.02.023.

- Kringlen E. Natural history of obsessional neurosis. Seminars in Psychiatry. 1970;2:403–419.

- Lehman WE, Simpson DD, Knight DK, Flynn PM. Integration of treatment innovation planning and implementation: Strategic process models and organizational challenges. Psychology of Addictive Behaviors. 2011;25:252–261. doi: 10.1037/a0022682.

- Levitt EE. The results of psychotherapy with children: An evaluation. Journal of Consulting Psychology. 1957;21:189–196. doi: 10.1037/h0039957.

- Levitt EE. Psychotherapy with children: A further evaluation. Behaviour Research and Therapy. 1963;1:45–51. doi: 10.1016/0005-7967(63)90007-x.

- Lilienfeld SO, Ritschel LA, Lynn SJ, Brown AP, Cautin RL, Latzman RD. The research-practice gap: Bridging the schism between eating disorder researchers and practitioners. International Journal of Eating Disorders. 2013;46:386–394. doi: 10.1002/eat.22090.

- McFall RM. On psychological clinical science. In: Treat T, Bootzin RR, Baker TB, editors. Psychological clinical science: Recent advances in theory and practice Integrative perspectives in honor of Richard M McFall. New York, NY: Erlbaum; 2007. pp. 363–396.

- McHugh RK, Smits JAJ, Otto MW. Empirically supported treatments for panic disorder. Psychiatric Clinics of North America. 2009;32:593–610. doi: 10.1016/j.psc.2009.05.005.

- McLean CP, Foa EB. Prolonged exposure therapy for post-traumatic stress disorder: A review of evidence and dissemination. Expert Review of Neurotherapeutics. 2011;11:1151–1163. doi: 10.1586/ern.11.94.

- McLellan AT, Meyers K. Contemporary addiction treatment: A review of systems problems for adults and adolescents. Biological Psychiatry. 2004;56:764–770. doi: 10.1016/j.biopsych.2004.06.018.

- Miller WR. Motivational interviewing and the incredible shrinking treatment effect. Addiction. 2005;100:421. doi: 10.1111/j.1360-0443.2005.01035.x.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology. 2004;72:1050–1062. doi: 10.1037/0022-006X.72.6.1050.

- Murphy R, Cooper Z, Hollon SD, Fairburn CG. How do psychosocial treatments work? Investigating mediators of change. Behaviour Research and Therapy. 2009;47:1–5. doi: 10.1016/j.brat.2008.10.001.

- Olmstead TA, Abraham AJ, Martino S, Roman PM. Counselor training in several evidence-based psychosocial addiction treatments in private US substance abuse treatment centers. Drug and Alcohol Dependence. 2012;120:149–154. doi: 10.1016/j.drugalcdep.2011.07.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Onken L. PRECEDE-PROCEED and the NIDA stage model: The value of a conceptual framework for intervention research. Journal of Public Health Dentistry. 2011;71:S18–S19. doi: 10.1111/j.1752-7325.2011.00221.x.

- Onken LS, Blaine JD. Behavioral therapy development and psychological science: Reinforcing the bond. Psychological Science. 1997;8:143–144.

- Onken LS, Blaine JD, Battjes RJ. Behavioral therapy research: A conceptualization of a process. In: Henggeler SW, Santos AB, editors. Innovative approaches for difficult-to-treat populations. Washington, DC: American Psychiatric Press; 1997. pp. 477–485.

- Onken LS, Bootzin RR. Behavioral therapy development and psychological science: If a tree falls in the forest and no one hears it. Behavior Therapy. 1998;29:539–543.

- Otto MW, Tolin DF, Nations KR, Utschig AC, Rothbaum BO, Hofmann SG, Smits JA. Five sessions and counting: Considering ultra-brief treatment for panic disorder. Depression and Anxiety. 2012;29:465–470. doi: 10.1002/da.21910.

- Perepletchikova F, Treat TA, Kazdin AE. Treatment integrity in psychotherapy research: Analysis of the studies and examination of the associated factors. Journal of Consulting and Clinical Psychology. 2007;75:829–841. doi: 10.1037/0022-006X.75.6.829.

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- Roshanaei-Moghaddam B, Pauly MC, Atkins DC, Baldwin SA, Stein MB, Roy-Byrne P. Relative effects of CBT and pharmacotherapy in depression versus anxiety: Is medication somewhat better for depression, and CBT somewhat better for anxiety? Depression and Anxiety. 2011;28:560–567. doi: 10.1002/da.20829.

- Rounsaville BJ, Carroll KM, Onken LS. Methodological diversity and theory in the stage model: Reply to Kazdin. Clinical Psychology: Science and Practice. 2001a;8:152–154.

- Rounsaville BJ, Carroll KM, Onken LS. NIDA’s stage model of behavioral therapies research: Getting started and moving on from Stage 1. Clinical Psychology: Science and Practice. 2001b;8:133–142.

- Santa Ana E, Martino S, Ball SA, Nich C, Carroll KM. What is usual about “treatment as usual”: Audiotaped ratings of standard treatment in the Clinical Trials Network. Journal of Substance Abuse Treatment. 2008;35:369–379. doi: 10.1016/j.jsat.2008.01.003.

- Schoenwald SK, Mehta TG, Frazier SL, Shernoff ES. Clinical supervision in effectiveness and implementation research. Clinical Psychology: Science and Practice. 2013;20:44–49.

- Shoham V, Rohrbaugh MJ, Onken LS, Cuthbert BN, Beveridge RM, Fowles TR. Redefining clinical science training: Purpose and products of the Delaware Project. Clinical Psychological Science. 2014;2:8–21.

- Sholomskas D, Syracuse G, Ball SA, Nuro KF, Rounsaville BJ, Carroll KM. We don’t train in vain: A dissemination trial of three strategies for training clinicians in cognitive behavioral therapy. Journal of Consulting and Clinical Psychology. 2005;73:106–115. doi: 10.1037/0022-006X.73.1.106.

- Stewart RE, Chambless DL, Baron J. Theoretical and practical barriers to practitioners’ willingness to seek training in empirically supported treatments. Journal of Clinical Psychology. 2012;68:8–23. doi: 10.1002/jclp.20832.

- Stewart RE, Stirman SW, Chambless DL. A qualitative investigation of practicing psychologists’ attitudes toward research-informed practice: Implications for dissemination strategies. Professional Psychology: Research and Practice. 2012;43:100–109. doi: 10.1037/a0025694.

- Stokes DE. Pasteur’s quadrant: Basic science and technological innovation. Washington, DC: Brookings Institution; 1997.

- Weisz JR, Jensen-Doss A, Hawley KM. Evidence-based youth psychotherapies versus usual clinical care: A meta-analysis of direct comparisons. American Psychologist. 2006;61:671–689. doi: 10.1037/0003-066X.61.7.671.

- Weisz JR, Ng MY, Bearman SK. Odd couple? Reenvisioning the relation between science and practice in the dissemination-implementation era. Clinical Psychological Science. 2014;2:58–74.

- Weisz JR, Ugueto AM, Cheron DM, Herren J. Evidence-based youth psychotherapy in the mental health ecosystem. Journal of Clinical Child & Adolescent Psychology. 2013;42:274–286. doi: 10.1080/15374416.2013.764824.

- Weisz JR, Weiss B, Donenberg GR. The lab versus the clinic: Effects of child and adolescent psychotherapy. American Psychologist. 1992;47:1578–1585. doi: 10.1037//0003-066x.47.12.1578.