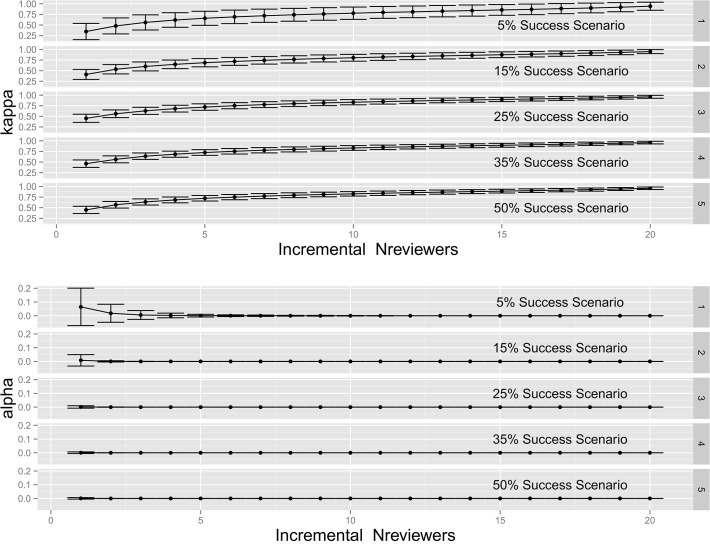

Fig 4. Bootstrapped kappa statistics of peer review decision consistency with incremental N to N+1 reviewers.

In simulations of CIHR Fellowships competition outcomes, overall decision consistency improved with each additional reviewer, regardless of the overall success rate scenario. Monte Carlo error analysis (standard deviation of the bootstrapped estimates of the kappa coefficients) [33] indicated broad overlap among the incremental kappa values as reviewers were added. Kappa values > 0.8 were interpreted as “almost perfect” consistency [32]. Kappa values were statistically significant (p < 0.05) except in the 5% success scenario at the 1–2 and 2–3 reviewer increments.
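The bootstrapped kappa and its Monte Carlo error can be illustrated with a minimal sketch. It assumes binary fund/not-fund decisions and Cohen's kappa between the decisions made with N and with N+1 reviewers; the function names `cohen_kappa` and `bootstrap_kappa`, the number of resamples, and the handling of the degenerate case are illustrative assumptions, not the procedure used in the study.

```python
import random
import statistics


def cohen_kappa(a, b):
    """Cohen's kappa for two equal-length sequences of binary decisions (0/1)."""
    n = len(a)
    p_observed = sum(x == y for x, y in zip(a, b)) / n
    p_a = sum(a) / n  # proportion funded under the first panel size
    p_b = sum(b) / n  # proportion funded under the second panel size
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if p_expected == 1.0:
        # Degenerate case (both sequences constant and identical): kappa is
        # undefined; returning 1.0 here is an illustrative convention only.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


def bootstrap_kappa(a, b, n_boot=1000, seed=0):
    """Return (mean, standard deviation) of kappa over bootstrap resamples.

    Applications are resampled with replacement; the standard deviation of
    the resampled kappas serves as the Monte Carlo error estimate.
    """
    rng = random.Random(seed)
    n = len(a)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(cohen_kappa([a[i] for i in idx], [b[i] for i in idx]))
    return statistics.mean(estimates), statistics.stdev(estimates)


if __name__ == "__main__":
    # Hypothetical toy data: decisions for 20 applications with N and N+1 reviewers.
    with_n = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0]
    with_n_plus_1 = [1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0]
    mean_kappa, mc_error = bootstrap_kappa(with_n, with_n_plus_1)
    print(f"kappa = {mean_kappa:.2f} (Monte Carlo SD = {mc_error:.2f})")
```

Under these assumptions, overlapping intervals of mean ± SD for successive reviewer increments would correspond to the "broad overlap" described above.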