Abstract

Although alcohol use continues to be a major problem, high-risk users who enter social services are often not provided with empirically supported treatments (ESTs). This study investigates predictors of successful EST implementation in agencies that do not specialize in addiction services. Fifty-four frontline workers in six organizations were enrolled in the study. After completing self-administered surveys of organizational culture and climate and of attitudes toward ESTs, workers were trained to implement a brief alcohol intervention. The results indicate that organizational factors and attitudes may not be related to implementation. Although high implementers appeared to share some traits, further research is needed to characterize successful EST implementers.

Keywords: alcohol and drug use, empirically supported treatments, implementation, organizational culture and climate, worker characteristics

BACKGROUND

Untreated alcohol and drug addiction continues to be a major health issue in the United States. Alcohol abuse alone results in about 80,000 premature deaths annually and costs the U.S. economy about $220 billion each year (Bouchery et al., 2011). About 11 percent of youth meet criteria for lifetime alcohol use disorder, and about 17 percent of adults meet the disorder criteria (Kessler et al., 2005). In 2007, about 20 million Americans needed treatment for alcohol problems (Office of Applied Studies, 2009). Although alcohol use continues to be associated with serious health risks (Rehm et al., 2009), and the number of people seeking treatment could double as a result of the Affordable Care Act (Beronio et al., 2013), most people who seek professional services are not provided with empirically supported treatments (ESTs) (Miller et al., 2006; Sorensen & Midkiff, 2002).

Because the gold standard of quality care is providing clients with ESTs, much has been written about the need to implement these evidence-informed practices in clinical health services (Patterson, 2014). For instance, several national reports urge researchers and community mental health providers to focus on implementing existing ESTs rather than adding new treatments to the list of underused evidence-based practices (Institute of Medicine, 2000, 2001, 2006; National Institute on Drug Abuse, 2009, 2012; U.S. Department of Health and Human Services, 2006). There has also been extensive discussion of why ESTs are not sufficiently implemented. Researchers have investigated numerous barriers to the implementation process, such as poor organizational context (Aarons, 2005; Burns & Hoagwood, 2005; Glisson et al., 2008; Patterson & Dulmus, 2012; Patterson et al., 2013) and worker characteristics (McGovern et al., 2004; Nelson, Steele, & Mize, 2006).

Of particular concern is understanding how organizational culture and climate erect barriers when workers try to implement ESTs in existing social services (Hemmelgarn et al., 2006). Although characterizing a specific organization’s culture and climate is often difficult (Schneider, Ehrhart, & Macey, 2011; Verbeke, Volgering, & Hessels, 1998), organizational culture is generally described as “the way things are done around here.” Workers within an organization communicate shared norms, beliefs, and behavioral expectations that the organization values (Cooke & Szumal, 1993; Hemmelgarn et al., 2006; Verbeke et al., 1998). The climate of an organization is best represented by employees’ perceptions of, and emotional responses to, the characteristics of their work environment (Glisson & James, 2002). Accordingly, organizational culture and climate are related yet distinct constructs, and both influence working conditions in organizations (Glisson et al., 2008).

Worker Characteristics

A growing literature focuses on worker attitudes toward ESTs. Providers’ attitudes toward new clinical practices may hamper or facilitate the implementation of ESTs in practice settings (Patterson, Dulmus, & Maguin, 2013). A brief measure of workers’ attitudes toward implementing ESTs, the Evidence-Based Practice Attitude Scale (EBPAS), was developed, and attitudes were investigated in relation to a set of individual differences (Aarons, 2004; Aarons & Sawitzky, 2006; Garland, Kruse, & Aarons, 2003; Stahmer & Aarons, 2009). According to Aarons (2004) and Patterson and colleagues (2013), workers’ attitudes toward ESTs can be reliably measured and vary with individual differences. Attending to these attitudes has the potential to improve the process and effectiveness of implementation efforts (Aarons, 2004).

Although the primary purpose of Aarons’s (2004) study was to develop a brief EST attitude measure, the study tested other hypotheses as well. It found no differences in attitudes toward implementation of ESTs across disciplines (e.g., social work, marriage and family therapy, psychology, psychiatry, and others); there were, however, differences associated with educational level and professional status (Aarons, 2004; Aarons & Sawitzky, 2006).

Other studies measuring workers’ attitudes toward ESTs have produced mixed results (Patterson et al., 2013), and findings on educational attainment appear inconsistent across studies. The studies that reported educational attainment (Aarons, 2004; Ogborne et al., 1998) found that workers with higher degrees conveyed more positive attitudes than those with less education. Yet a more recent study found differences between the attitudes of workers with equal levels of educational attainment (Stahmer & Aarons, 2009). Similarly, Aarons’s (2004) original EBPAS validation study did not find significant differences in EST attitudes by educational discipline, whereas a later study (Stahmer & Aarons, 2009) found attitudinal differences between workers with different educational backgrounds.

Although there is a growing literature on organizational-level and worker-level barriers to implementing ESTs, studies that specifically control for these factors are lacking. If specific organizational factors or worker characteristics erect barriers to EST implementation, agencies would be wise to address those factors before spending their limited resources on implementation efforts that are likely to fail.

Importance of Integrating Alcohol Screening and Brief Intervention throughout Community-Based Mental Health Organizations

High-risk drinking often goes undetected in community-based health and mental health organizations. To address this issue, the National Institute on Alcohol Abuse and Alcoholism (NIAAA) developed a clinician’s guide for helping patients who drink excessively (National Institutes of Health, 2005). The guide was developed and tested mainly with primary care providers, as a way to integrate an alcohol intervention into standard medical care. Its overall goal was to help medical professionals, who are in prime positions to reach potential problem drinkers, screen for at-risk drinking and provide a brief intervention.

According to Fleming and colleagues (2002), clinical trials have shown that a brief intervention can lead to significant and long-lasting reductions in drinking among at-risk drinkers. Clinical trials have also demonstrated that repeated alcohol-focused brief interventions with a health care provider can lead to significant improvements for dependent drinkers (Willenbring & Olson, 1999).

With clear evidence that alcohol screening and brief intervention in health care settings produce positive outcomes (Babor et al., 1999, 2004, 2005, 2006; Babor & Higgins-Biddle, 2000; Bien, Miller, & Tonigan, 1993; Holder & Blose, 1992; Kahan, Wilson, & Becker, 1995), understanding barriers to their implementation is the next prime area of study. Babor and colleagues (2005, p. 367) state that “implementation of both screening and brief intervention was associated with organizational factors and provider characteristics.” Although provider training and orientation have been reported as possible barriers, Roche, Horham, and Richmond (2002) call for a paradigm shift away from training obstacles and toward organizational structures. If community mental health organizations can implement an empirically supported brief alcohol screening and education intervention, high-risk drinking and its public health consequences could be greatly reduced.

The purpose of this study is to test whether the organizational culture and climate factors identified by Glisson and colleagues (2008) are related to successful implementation of a brief alcohol screening and educational intervention in community mental health organizations. The study also investigates potential relationships between worker characteristics and implementation of the brief intervention. On the basis of the literature, we hypothesized that programs with productive cultures and climates, and workers with positive attitudes toward ESTs, would have higher implementation rates.

METHODS

This pilot study recruited organizations in two U.S. locations, drawing on a pool of community-based mental health organizations in western New York State and St. Louis, Missouri, and the frontline clinicians working in them. Recruitment took a two-stage approach. First, agency executive directors were recruited through telephone calls and face-to-face meetings explaining the pilot study’s expectations and overall research goals. Second, with the directors’ support, frontline workers were given the study explanation and recruited, usually during regular staff meetings. The inclusion criterion was that frontline workers conduct at least two new assessments or intakes per week; this caseload minimum was set so that normal month-to-month variation in caseload would not unduly influence the computed intervention use rate. Organizations providing only or primarily substance abuse–specific services were excluded.

The final sample consisted of six organizations with a total of 54 workers: three student health services (two college-based and one high school–based) and three adult health and mental health agencies. All participants in this study were frontline workers (i.e., employees with direct service contact with clients). Table 1 reports the workers’ demographic characteristics. Baseline measures were collected before training and before implementation of the intervention began. At the end of the study, workers at each location were recruited to participate in a focus group to better understand their barriers and pathways to implementation.

Table 1.

Sample Demographics

| Variable | % |
|---|---|
| Position | |
| Frontline worker | 94.1 |
| Manager/other | 5.9 |
| Education | |
| High school | 2.0 |
| Associate’s degree | 2.0 |
| Bachelor’s degree | 15.7 |
| Master’s degree | 60.8 |
| Doctoral degree | 19.6 |
| Major | |
| Education | 5.9 |
| Social work | 27.5 |
| Medicine | 3.9 |
| Psychology | 27.5 |
| Other | 35.3 |
| White | 76.9 |
| Female | 76.0 |
| Years of experience | |
| Range | 0–37 |
| Mean (SD) | 10.6 (9.2) |
| Years in present agency | |
| Range | 0–30 |
| Mean (SD) | 5.8 (6.4) |
| Age (years) | |
| Range | 23–71 |
| Mean (SD) | 40.5 (11.5) |

Note. N = 54.

Measures

Organizational social context

The Organizational Social Context Measurement Model (OSC) is a measurement system guided by a model of social context that consists of both organizational-level (structure and culture) and individual-level (work attitudes and behavior) constructs. These constructs include individual and shared perceptions (climate), which are believed to mediate the impact of the organization on the individual (Glisson, 2002; Glisson et al., 2008). The OSC instrument contains 105 items forming four domains, 16 first-order factors, and 7 second-order factors, a structure confirmed in a national sample of 100 mental health service organizations with approximately 1,200 clinicians. The self-administered Likert-scale survey takes approximately 20 minutes to complete and is presented in a scannable bubble-sheet booklet.

The OSC measures a program’s culture and climate as reported by its workers; thus scores are computed for the program as a whole and not for individual workers. Scores are reported as T scores computed relative to Glisson and colleagues’ (2008) sample of agencies. The three factors that make up an organization’s culture are proficiency, rigidity, and resistance, with reported reliabilities of .94, .81, and .81, respectively (Glisson et al., 2008). In proficient cultures, the health and well-being of clients come first, and workers are expected to be proficient, responsive to the unique needs of individual clients, and up to date with the most recent knowledge (e.g., “Members of my organizational unit are expected to be responsive to the needs of each client” and “Members of my organizational unit are expected to have up-to-date knowledge”). Rigid cultures allow workers little discretion or flexibility in their activities, with most control coming from strict bureaucratic rules and regulations (e.g., “I have to ask a supervisor or coordinator before I do almost anything” and “The same steps must be followed in processing every piece of work”). In resistant cultures, workers show little interest in change or in new ways of providing services and suppress any openings for change (e.g., “Members of my organizational unit are expected to not make waves” and “Members of my organizational unit are expected to be critical”).
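By convention, a T score is a linear rescaling of a raw score to a mean of 50 and a standard deviation of 10 in the norming sample. Assuming the OSC follows this convention, the conversion can be written as below, where $x$ is a program’s raw scale score and $\bar{x}_{\text{norm}}$ and $s_{\text{norm}}$ are the mean and standard deviation of that scale in Glisson and colleagues’ (2008) sample (values not reported here):

$$
T = 50 + 10\,\frac{x - \bar{x}_{\text{norm}}}{s_{\text{norm}}}
$$

Under this convention, $T = 60$ places a program one standard deviation above the norming mean and $T = 40$ one standard deviation below it.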

The climate factors are engagement, functionality, and stress, with reported reliabilities of .78, .90, and .94, respectively (Glisson et al., 2008). Engaged climates are characterized by workers’ perceptions that they can accomplish worthwhile things and remain personally involved in their work while staying concerned about their clients (e.g., “I feel I treat some of the clients I serve as impersonal objects” [reverse coded] and “I have accomplished many worthwhile things in this job”). In functional climates, workers receive support from their coworkers and clearly understand how they fit into the organizational work unit (e.g., “This agency provides numerous opportunities to advance if you work for it” and “My job responsibilities are clearly defined”). Stressful climates are those in which workers are emotionally exhausted and overwhelmed by their work and feel unable to accomplish the necessary tasks (e.g., “I feel like I am at the end of my rope” and “The amount of work I have to do keeps me from doing a good job”). In addition to the 105 culture and climate items, the OSC contains items assessing respondent age, gender, ethnicity, education level and major, agency position, total years of experience, and years in the current agency.

Evidence-Based Practice Attitude Scale

The EBPAS (Aarons, 2004) consists of 15 items that assess four dimensions of attitudes toward implementation of evidence-based practices. Each item uses a 5-point response format (0 = not at all, 1 = to a slight extent, 2 = to a moderate extent, 3 = to a great extent, and 4 = to a very great extent). Scale scores were computed as the mean of the items composing each scale. The four scales are as follows. Requirements (three items) assesses the likelihood that the worker would implement a new EST if it were required. Appeal (four items) measures the likelihood that the worker would implement a new EST if it were intuitively appealing, made sense, and could be used correctly, or if colleagues were happy with it. Openness (four items) measures the worker’s openness to trying or actually implementing new interventions. Divergence (four items) assesses how the worker weighs the clinical value of research-based interventions against clinical experience; importantly, a higher Divergence score indicates valuing clinical experience and knowledge over research-derived knowledge. In addition, a total (mean) score was computed across all 15 items after reverse scoring the Divergence items. Internal consistency reliabilities for these data were .90 for Requirements, .81 for Openness, .81 for Appeal, .60 for Divergence, and .82 for the total scale, similar to previously reported values (e.g., Aarons, 2004; Aarons et al., 2010).
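The scoring rules above can be sketched in Python as a minimal illustration. Two assumptions are ours: the item keys and their assignment to scales are hypothetical (the text gives only the number of items per scale), and Cronbach’s alpha is assumed to be the internal consistency statistic, since the text says only “internal consistency reliability.”

```python
from statistics import mean, variance

# Hypothetical item keys: the text gives only the number of items per scale
# (3 + 4 + 4 + 4 = 15), not the actual EBPAS item order.
EBPAS_SCALES = {
    "requirements": ["q1", "q2", "q3"],
    "appeal": ["q4", "q5", "q6", "q7"],
    "openness": ["q8", "q9", "q10", "q11"],
    "divergence": ["q12", "q13", "q14", "q15"],
}
MAX_RESPONSE = 4  # 0 = not at all ... 4 = to a very great extent


def score_ebpas(responses):
    """Scale means plus a 15-item total mean with Divergence reverse scored."""
    scores = {scale: mean(responses[item] for item in items)
              for scale, items in EBPAS_SCALES.items()}
    total_items = []
    for scale, items in EBPAS_SCALES.items():
        for item in items:
            value = responses[item]
            # Reverse score Divergence so that higher totals are more favorable.
            total_items.append(MAX_RESPONSE - value if scale == "divergence" else value)
    scores["total"] = mean(total_items)
    return scores


def cronbach_alpha(item_columns):
    """Cronbach's alpha; item_columns holds one list of respondent scores per item."""
    k = len(item_columns)
    totals = [sum(row) for row in zip(*item_columns)]
    item_variance_sum = sum(variance(column) for column in item_columns)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Made-up respondent who endorses every item fully except the Divergence items.
example = {f"q{i}": (0 if i >= 12 else 4) for i in range(1, 16)}
print(score_ebpas(example))
```

Because Divergence is reversed only for the total, this respondent has a Divergence scale score of 0 but a maximal total score of 4.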

Implementation measure

The implementation measure, intervention percentage, was computed for each worker as the total number of interventions recorded as delivered divided by the total number of intakes recorded as completed, both summed over the three-month follow-up period. Zero intakes or zero interventions were treated as valid data. The agency intervention percentage was computed as the mean of the intervention percentages of the workers in each agency.
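A minimal Python sketch of the two-level computation described above. The function names and the example data are hypothetical, and one choice is our assumption because the text does not address it: a worker with no intakes at all has an undefined ratio, so the sketch returns `None` and leaves that worker out of the agency mean.

```python
from statistics import mean


def worker_intervention_percentage(monthly_intakes, monthly_interventions):
    """Total interventions / total intakes over follow-up, as a percentage.

    Months with zero intakes or zero interventions are kept as valid data.
    Assumption: if a worker recorded no intakes at all, the ratio is
    undefined and None is returned.
    """
    total_intakes = sum(monthly_intakes)
    if total_intakes == 0:
        return None
    return 100 * sum(monthly_interventions) / total_intakes


def agency_intervention_percentage(workers):
    """Unweighted mean of worker percentages.

    workers: list of (monthly_intakes, monthly_interventions) pairs.
    Workers with an undefined percentage are skipped (an assumption).
    """
    values = [worker_intervention_percentage(intakes, done) for intakes, done in workers]
    defined = [v for v in values if v is not None]
    return mean(defined) if defined else None


# Hypothetical agency with three workers over a three-month follow-up.
agency = [
    ([4, 3, 3], [4, 2, 2]),  # 8 of 10 intakes received the intervention
    ([5, 0, 5], [5, 0, 5]),  # a month with zero intakes is valid data
    ([0, 0, 0], [0, 0, 0]),  # no intakes at all: percentage undefined
]
print(agency_intervention_percentage(agency))
```

Averaging worker percentages gives every worker equal weight; pooling all intakes in an agency before dividing would instead weight high-volume workers more heavily.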

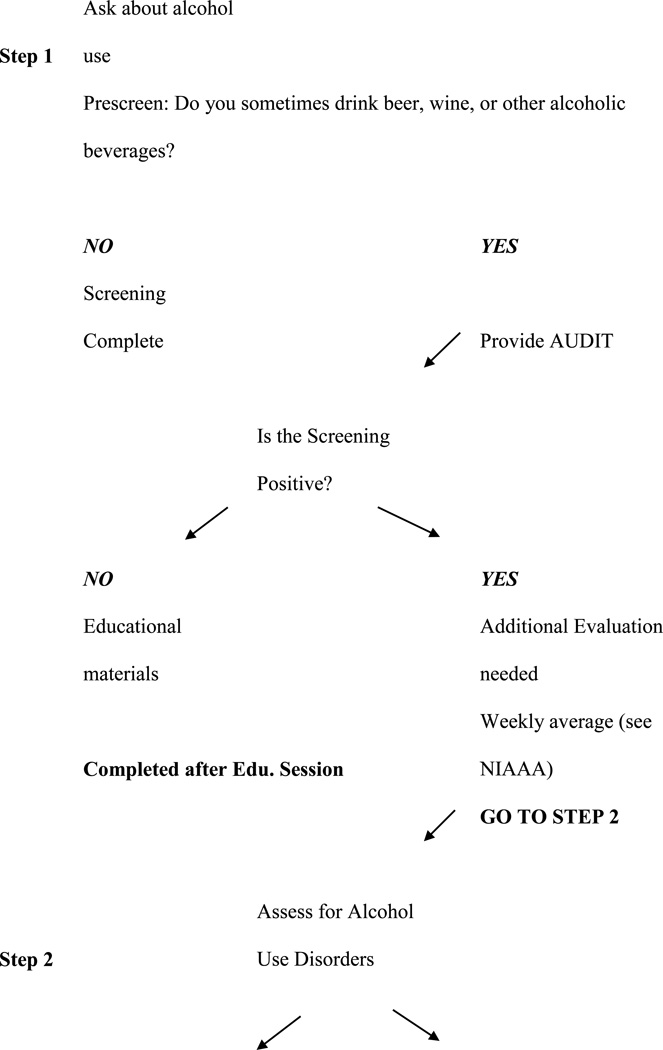

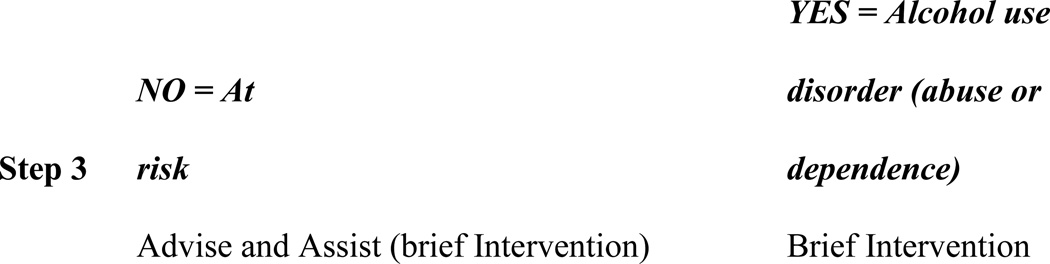

Training Staff on Intervention

Participants received a minimum of one hour of training on NIAAA’s alcohol screening and brief intervention. The training covered how to screen clients for alcohol use with the Alcohol Use Disorders Identification Test (AUDIT), followed by the brief intervention’s implementation strategies as presented in the online version (and hard-copy handouts) of Helping Patients Who Drink Too Much: A Clinician’s Guide, a simple but effective brief intervention originally developed for primary care settings (National Institutes of Health, 2005). The guide involves a number of steps and resources, including questions that help diagnose alcohol abuse or dependence. During the training, staff were introduced to the online version of NIAAA’s step-by-step clinician’s guide (Figure 1). Staff were also given hard copies of the clinician’s guide, along with clinician support materials and patient education materials. The training followed the guide’s recommendations for preparing clinicians to help patients who drink too much.

Figure 1.

Step-by-step clinician guide.

After the scheduled training was completed, each trained staff member received an intervention packet for every client seen. The packet included the NIAAA step-by-step guide for integrating the alcohol prescreening tool, the AUDIT, and information on how to use each. Staff were also trained to document each completed intervention so that its use could be measured.

Intervention Implemented

Participants were trained to implement NIAAA’s Helping Patients Who Drink Too Much: A Clinician’s Guide. Although the guide was developed for primary care, in this study mental health clinicians were asked to use the intervention with all new clients. The material was presented in three one-page documents excerpted from the NIAAA guide. The first step was to ask whether the client drinks, for example, “Do you sometimes drink beer, wine, or other alcoholic beverages?” If the client reported never drinking alcohol, the worker recorded that response on a dated one-page intervention check-off sheet, indicating a completed intervention. If the client answered in the affirmative, the clinician was instructed to show an illustration of and provide information on “What’s a Standard Drink?”, which explains, for instance, that a 12-ounce can of beer and a 1.5-ounce “shot” of liquor each count as one standard drink. This is useful for people who do not know what constitutes a standard drink and who might, for instance, count any mixed drink as a single drink even if it contains more than 1.5 ounces of liquor. Once the client understood what constitutes a standard drink, the client was asked how many drinks he or she had per day and on how many days per week. This information was used to identify any high-risk drinking pattern.
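The standard-drink arithmetic in this step can be sketched in Python. The 12-ounce and 1.5-ounce equivalences come from the text; the function names and the record returned by the hypothetical `screen_client` helper are illustrative assumptions, not part of the NIAAA materials.

```python
# Ounces per standard drink, as given in the "What's a Standard Drink?" handout.
BEER_OZ_PER_DRINK = 12.0    # a 12-ounce can of beer
LIQUOR_OZ_PER_DRINK = 1.5   # a 1.5-ounce "shot" of liquor


def standard_drinks(beer_oz=0.0, liquor_oz=0.0):
    """Convert ounces of beer and liquor into standard drinks."""
    return beer_oz / BEER_OZ_PER_DRINK + liquor_oz / LIQUOR_OZ_PER_DRINK


def screen_client(drinks_at_all, drinks_per_day=0.0, days_per_week=0):
    """Hypothetical record of the first intervention step."""
    if not drinks_at_all:
        # A non-drinker's response is recorded and the intervention is complete.
        return {"drinks": False, "completed": True}
    # For drinkers, the intervention continues with the drinking-pattern steps.
    return {"drinks": True, "completed": False,
            "drinks_per_day": drinks_per_day,
            "drinks_per_week": drinks_per_day * days_per_week}


# A mixed drink made with 3 ounces of liquor counts as two standard drinks.
print(standard_drinks(liquor_oz=3.0))
print(screen_client(True, drinks_per_day=standard_drinks(liquor_oz=3.0), days_per_week=3))
```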

With the client’s drinking pattern established, the worker showed the second illustration, “U.S. Adult Drinking Patterns,” which aimed to help clients understand their own pattern of drinking, including whether they exceeded daily or weekly limits, relative to the drinking patterns of the U.S. adult population. It also helped clients see the link between excessive drinking and alcohol use disorders; for instance, half of the people who exceed both the weekly and daily recommended limits have an alcohol use disorder. The final document, “Strategies for Cutting Down,” listed tips for limiting alcohol consumption, such as keeping track of the number of drinks, eating when drinking, and having a plan to handle urges to drink.
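The comparison with daily and weekly limits can be sketched as follows. The article does not state the limits; the defaults below are an assumption based on the low-risk limits in NIAAA’s clinician guide (no more than 4 drinks on any day and 14 per week for men, no more than 3 and 7 for women) and should be checked against the current guide before any use.

```python
# Assumed NIAAA low-risk limits as (daily, weekly); not stated in this article.
LOW_RISK_LIMITS = {"men": (4, 14), "women": (3, 7)}


def compare_to_limits(drinks_per_day, days_per_week, group):
    """Flag whether a reported pattern exceeds the daily and/or weekly limit."""
    daily_limit, weekly_limit = LOW_RISK_LIMITS[group]
    weekly_total = drinks_per_day * days_per_week
    exceeds_daily = drinks_per_day > daily_limit
    exceeds_weekly = weekly_total > weekly_limit
    return {
        "weekly_total": weekly_total,
        "exceeds_daily": exceeds_daily,
        "exceeds_weekly": exceeds_weekly,
        "exceeds_both": exceeds_daily and exceeds_weekly,
    }


print(compare_to_limits(5, 2, "men"))    # over the daily limit, under the weekly limit
print(compare_to_limits(2, 4, "women"))  # under the daily limit, over the weekly limit
```

The `exceeds_both` flag corresponds to the group that the “U.S. Adult Drinking Patterns” handout singles out, in which about half have an alcohol use disorder.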

Each intervention lasted approximately five minutes and concluded with the worker checking off the dated one-page intervention sheet to indicate a completed intervention. The implementation period was planned to last approximately three months. At the end of each month, a research team member collected records of the number of new clients each worker had seen during the prior month and counted the completed one-page intervention sheets. These data were entered into SPSS for each participant.

Data Collection Procedure

After institutional review board approval, a member of the research team traveled to each agency and administered the survey, typically during staff meetings. The OSC and a companion measure that included a set of demographic questions were administered to participants in paper-and-pencil format. Data were collected in groups, with no agency administrator present. Each group was read instructions assuring participants that their responses were anonymous and that data would be reported back to the organization only in aggregated form. All individuals participated voluntarily, signed an informed consent form, and received no compensation. The research team counted the total number of eligible frontline workers in each agency and continued recruitment until reaching a response rate of 80 percent.

Data Collection

Data were collected face-to-face through self-administered surveys with frontline staff. The completed OSC surveys (i.e., bubble sheets) were mailed in a sealed, secured envelope to Dr. Glisson’s Children’s Mental Health Services Research Center at the University of Tennessee for scanning and scoring. The center returns profiles and OSC T scores to the researchers; no raw data other than general demographics are returned. All participant names were removed before mailing. The worker demographic data were collected as part of the OSC measurement tool described previously. The EBPAS was administered face-to-face during the OSC data collection.

Alcohol Intervention Implementation

As part of the research agreement, organizations allowed research staff to review regularly, approximately monthly, all client intake forms completed by participating staff during the pilot study period. The completed one-page NIAAA intervention sheets were collected and counted for each participant. Each review consisted of counting the number of intakes each staff member had conducted since the previous review and recording the completion status and results of the brief intervention as documented on the one-page intervention sheets. To further evaluate implementation of the intervention, focus groups were conducted and recorded. The focus group protocol followed a semi-structured interview format, beginning with free-flowing, open-ended questions.

Data Analysis

Because the dimensions of culture (proficiency, rigidity, and resistance) and climate (engagement, functionality, and stress) are theorized to be program-level or agency-level constructs, their relationship with the worker-level implementation measure is multilevel. However, the number of agencies was too small to support a multilevel analysis. Instead, we computed the correlation between the agency intervention percentage and each of the culture and climate dimensions. Rather than use parametric methods to test significance, we used permutation tests (e.g., Edgington, 1987; LaFleur & Greevy, 2009). As LaFleur and Greevy (2009, p. 286) noted, permutation tests are “… often used when distributional assumptions are questionable or unmet.” Permutation tests do not, however, compensate for low statistical power due to a small sample size or a small effect size; they generally have approximately as much power as their parametric or nonparametric alternatives (Bishara & Hittner, 2012; Keller, 2012).

Permutation methods compute the test statistic, here a correlation, for every possible permutation of the culture and climate scores relative to the agency intervention percentages. With six agencies there are 6! = 720 possible arrangements of the data values, one of which is the observed arrangement. The two-tailed p value for the observed correlation is the proportion of permutations whose correlations have an absolute value greater than or equal to the absolute value of the observed correlation. Had a one-tailed test been used, the signed correlation values rather than their absolute values would have been compared.
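A minimal Python sketch of this two-tailed permutation test, enumerating all 6! arrangements with `itertools.permutations`. The function names are illustrative and this is not necessarily the software the authors used; absolute correlations are compared with a small floating-point tolerance so that the observed arrangement is always counted. The usage applies the sketch to the proficiency and intervention-percentage columns of Table 2.

```python
from itertools import permutations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def permutation_test(x, y, tol=1e-12):
    """Two-tailed permutation test of a correlation.

    Returns the observed r and the proportion of all len(y)! rearrangements
    of y whose |r| is at least the observed |r| (the observed one included).
    """
    observed = pearson_r(x, y)
    hits = total = 0
    for arrangement in permutations(y):
        total += 1
        if abs(pearson_r(x, arrangement)) >= abs(observed) - tol:
            hits += 1
    return observed, hits / total


# Agencies A-F from Table 2.
proficiency = [59.58, 48.19, 51.92, 59.28, 64.28, 58.68]
intervention_pct = [50.68, 94.83, 72.33, 69.17, 66.67, 68.43]
r, p = permutation_test(proficiency, intervention_pct)
print(f"r = {r:.2f}, permutation p = {p:.3f}")
```

The observed correlation reproduces the −.74 reported for proficiency. For a one-tailed test in the direction of the observed correlation, the comparison would use the signed r instead of `abs()`.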

We used correlations and t tests or analyses of variance, all with conventional tests of significance, to analyze the relationships of worker demographics and EBPAS scores with intervention percentage.

For the focus group analysis, the moderator and observer met after each group and applied a memory- and note-based consensus analysis strategy (Krueger & Casey, 2000). The recordings were professionally transcribed. After reviewing and immersing themselves in the data (i.e., extensive reading and rereading of the transcripts and repeated listening to the recorded interviews), the moderator and observer analyzed in detail every section of the interviews in which participants explicitly or implicitly described their experiences using the NIAAA guide, using descriptive, open coding. These codes were then grouped to form themes.

RESULTS

At recruitment, participating workers indicated that they had at least two new intakes per week, that they were willing to incorporate the intervention into their agencies’ routine intake procedures (and thus conduct it with all new intakes), and that the intervention would be sustained for at least three months. Of the 54 participating staff, 44.4 percent provided data (i.e., reported the number of new intakes or clients, even if that number was zero) for three months, 35.2 percent for two months, and 11.1 percent for one month; 9.3 percent failed to provide any data. Furthermore, of the 48 workers reporting data for at least one month, 52% did not report eight or more intakes in any month, 23% did so in one month, 17% in two months, and only 8% in all three months.

Because workers did not attain and maintain the a priori criterion intake numbers, we relaxed the criterion to conducting at least three intakes per month in two of the three months. Twenty-nine workers (53.7 percent) met this revised criterion. Among these 29 workers, the monthly mean number of intakes per worker ranged across the three months from 10.3 to 14.2 (SD: 11.5 to 13.9; range: 0 to 55), the monthly mean number of interventions per worker ranged from 6.4 to 9.1 (SD: 6.3 to 9.8; range: 0 to 43), and the monthly mean percentage of interventions completed per worker ranged from 71.1 to 82.2 (SD: 30.9 to 33.0; range: 0 to 100). Cumulated across the three months, the mean intervention completion percentage was 79.4 (SD: 29.3; range: 0 to 100), with 11 workers reporting 100% completion.
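The revised inclusion rule can be expressed as a small Python check. This is a sketch under stated assumptions: “two of the three months” is read as “at least two,” a month a worker did not report simply cannot count toward the criterion, and the a priori rule (two intakes per week) is approximated as eight intakes in each of the three months, matching the eight-per-month figure used in the text.

```python
def meets_criterion(monthly_intakes, min_intakes=3, min_months=2):
    """True if at least `min_months` reported months have >= `min_intakes` intakes.

    monthly_intakes holds only the months a worker actually reported; a month
    with no report cannot count toward the criterion (an assumption).
    """
    qualifying_months = sum(1 for n in monthly_intakes if n >= min_intakes)
    return qualifying_months >= min_months


# Revised criterion: at least 3 intakes in at least two of the three months.
print(meets_criterion([3, 0, 5]))  # True
print(meets_criterion([3, 2, 2]))  # False
# A priori criterion, approximated as >= 8 intakes in all three months.
print(meets_criterion([8, 9, 7], min_intakes=8, min_months=3))  # False
```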

Across the six agencies, the number of workers who attained the new criterion ranged from one to eleven (12.5% to 72.7%). Five of the six agencies had an agency intervention percentage greater than 50 percent and three had an intervention percentage greater than 75 percent.

Relationships with Culture and Climate

Table 2 reports the culture and climate scale scores and the agency intervention percentage for each agency. The agency intervention percentages were fairly tightly bunched, except for the low value of 50.68 percent for agency A and the high value of 94.83 percent for agency B. The culture and climate values are T scores scaled on the standardization sample (Glisson et al., 2008), so 50 corresponds to the sample mean and 10 points to one standard deviation. Although there were exceptions, about two-thirds of the scores were within one standard deviation of the mean (i.e., between 40 and 60). Of the six agencies, agency B had a less optimal profile by virtue of its high resistance and low engagement scores, whereas agency E had a more optimal profile by virtue of its high functionality and low stress scores.

Table 2.

Observed Culture and Climate Scores and Agency Intervention Percentage for the Six Agencies

| Agency | Proficiency | Rigidity | Resistance | Engagement | Functionality | Stress | Intervention percentage |
|---|---|---|---|---|---|---|---|
| A | 59.58 | 46.77 | 55.17 | 58.72 | 55.64 | 49.91 | 50.68 |
| B | 48.19 | 53.41 | 72.32 | 27.10 | 56.84 | 56.66 | 94.83 |
| C | 51.92 | 66.87 | 63.57 | 58.13 | 59.56 | 55.52 | 72.33 |
| D | 59.28 | 51.39 | 47.92 | 55.18 | 76.11 | 34.84 | 69.17 |
| E | 64.28 | 55.81 | 51.65 | 57.88 | 80.28 | 39.39 | 66.67 |
| F | 58.68 | 59.55 | 63.46 | 52.87 | 66.06 | 52.39 | 68.43 |

Note. Proficiency through Stress are culture and climate scale T scores.
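The statement that about two-thirds of the culture and climate scores lie within one standard deviation of the mean can be checked directly against Table 2: on the T-score metric (mean 50, SD 10), that means scores between 40 and 60. A short Python sketch, with variable names of our own choosing:

```python
# T scores from Table 2, in the order Proficiency, Rigidity, Resistance,
# Engagement, Functionality, Stress.
TABLE_2_T_SCORES = {
    "A": [59.58, 46.77, 55.17, 58.72, 55.64, 49.91],
    "B": [48.19, 53.41, 72.32, 27.10, 56.84, 56.66],
    "C": [51.92, 66.87, 63.57, 58.13, 59.56, 55.52],
    "D": [59.28, 51.39, 47.92, 55.18, 76.11, 34.84],
    "E": [64.28, 55.81, 51.65, 57.88, 80.28, 39.39],
    "F": [58.68, 59.55, 63.46, 52.87, 66.06, 52.39],
}
T_MEAN, T_SD = 50.0, 10.0  # conventional T-score metric


def count_within_one_sd(table):
    """Count scores with |T - 50| <= 10, i.e., between 40 and 60."""
    scores = [score for row in table.values() for score in row]
    within = sum(1 for score in scores if abs(score - T_MEAN) <= T_SD)
    return within, len(scores)


within, total = count_within_one_sd(TABLE_2_T_SCORES)
print(f"{within} of {total} scores ({within / total:.0%}) lie between T = 40 and T = 60")
```

For these values the check finds 25 of 36 scores (about 69 percent) in that band, consistent with “about two-thirds.”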

Table 3 reports the correlations between the culture and climate scales and the agency intervention percentage, with significance assessed by permutation test. Only one correlation was significant, that for proficiency, r = −.74, p = .040. The .040 value means that 4 percent of the 720 permutations yielded correlations with an absolute value of .74 or greater. The negative correlation indicates that higher proficiency was associated with a lower agency intervention percentage.

Table 3.

Correlations between Observed Culture and Climate Scores and Agency Intervention Percentage

| | Proficiency | Rigidity | Resistance | Engagement | Functionality | Stress |
|---|---|---|---|---|---|---|
| Agency intervention percentage | −.74* | .28 | .69+ | −.87 | −.13 | .36 |

Note. *p < .05 (two-tailed permutation test).

Relationships with EBPAS and Demographics

We next tested whether EBPAS scale scores or worker demographics were associated with attaining the new criterion or, among workers who attained it, with the percentage of completed interventions. Although workers meeting the criterion had numerically higher scores on the Requirements, Openness, and total scales and lower scores on the Appeal and Divergence scales, the effect sizes (absolute values) were small (.10) except for Divergence (.44) and the total scale (.21), and no scale difference was significant. We also examined whether EBPAS scores were related to the percentage of interventions completed over the three-month period for the 30 workers meeting the criterion. None of the correlations was significant; the largest, −.23, was with Requirements. Our examination of demographic variables found no relationships with attaining the new criterion.
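The text reports effect sizes without naming the index. Assuming they are standardized mean differences such as Cohen’s d computed with a pooled standard deviation, a minimal Python sketch is below; the group scores in the example are hypothetical, not study data.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference (a - b) using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical Divergence scores for workers who did and did not meet the criterion.
met = [1.25, 1.50, 1.00, 1.75, 1.25]
not_met = [1.75, 2.00, 1.50, 1.25, 2.25]
print(round(abs(cohens_d(met, not_met)), 2))
```

The text reports absolute values, which is why the example takes `abs()`; the sign only records which group scored higher.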

DISCUSSION

The purpose of the present study was to test the implementation of a brief alcohol screening and educational intervention in mental health organizations that do not specialize in addiction services. The hypothesis that programs with productive cultures and climates, and workers with positive attitudes toward ESTs, would have higher implementation rates was not supported. Implementation of the intervention varied widely both within and across the organizations. Although all frontline workers said they regularly saw at least the initial minimum number of new clients per month (eight), more than half did not report doing so. Within each agency, only some workers appeared to be engaged and committed to the intervention, as evidenced by their returning completed data sheets, seeing clients, and completing interventions with the clients they saw. Except in agency E, one or two workers typically seemed to see the majority of the clients.

Although statistical power was low for all analyses, we found it interesting that workers attaining the revised criterion of three or more clients per month for two months also had lower scores on the EBPAS divergence scale, which indicates a more favorable attitude toward research-based interventions and a lower valuing of clinical judgment. Our analysis of the agency-level implementation data found only one relationship with a culture and climate measure: proficiency. Taken at face value, this negative relationship was unexpected. One would assume that a high-proficiency organization would have high implementation measures, given responses to items such as "Members of my organizational unit are expected to be responsive to the needs of each client" and "Members of my organizational unit are expected to have up-to-date knowledge." It is surprising that the agencies whose workers reported high scores on this scale had the lowest use of an EST in their standard services. Because a large number of correlations were computed between the culture and climate measures and the implementation measures, it is possible that the one significant correlation is a chance finding.
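The chance-finding argument can be made concrete. Assuming k independent tests, each run at α = .05, the probability of at least one spurious significant result is 1 − (1 − α)^k. Independence is itself an assumption: correlations computed on the same six agencies are not independent, so the figure is only an approximation. The excerpt does not state how many correlations were computed, so the values of k below are purely illustrative, and the helper names are hypothetical.

```python
def familywise_error(k, alpha=0.05):
    """P(at least one false positive) across k independent tests at level alpha."""
    return 1 - (1 - alpha) ** k


def bonferroni_alpha(k, alpha=0.05):
    """Per-test threshold that caps the familywise error rate at alpha."""
    return alpha / k


# Illustrative numbers of correlations (hypothetical; not taken from the study).
for k in (1, 5, 10, 20):
    print(
        f"k = {k:2d}: P(>=1 false positive) = {familywise_error(k):.3f}, "
        f"Bonferroni per-test alpha = {bonferroni_alpha(k):.4f}"
    )
```

Bonferroni is shown only because it is the simplest correction; it is conservative, and the excerpt does not say whether any correction was applied.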

Several limitations should be kept in mind when considering this study. The first is the small number of participating agencies. Modifying existing procedures to incorporate a new element outside the normal protocol is a decision agency executives carefully consider, and it is also a decision that must be made by persons at every level of the organizational chart. Recruiting agencies is therefore difficult, and the decision to take part may well identify these agencies as special in some sense, so the extent to which these results are replicable is simply unknown. The primary focus of the study was to examine how organizational characteristics relate to agency-level implementation performance, and the data indicate that organizational characteristics may not be related to implementation. For this data set, the permutation tests give the probability of obtaining a correlation at least as extreme as each one observed, and all but one of these correlations were nonsignificant. However, our results almost certainly should not be generalized beyond these six agencies, because the agencies were not a random sample of all mental health agencies. Also, in the absence of very large effect sizes, a sample of six agencies yields low statistical power, however the data are analyzed.
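With six agencies, an exact permutation test can enumerate every reassignment of one variable to the agencies (6! = 720 orderings), so p-values come in steps of 1/720. The sketch below assumes the test statistic is the absolute Pearson correlation and that p is the share of the 720 orderings with a correlation at least as extreme as the observed one; the excerpt does not give the exact procedure. It also approximates two-sided power with Fisher's z transformation, which is crude at n = 6 and is included only to show the order of magnitude of the power problem. The helper names and all agency scores are hypothetical.

```python
import math
from itertools import permutations
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def exact_permutation_p(x, y, tol=1e-12):
    """Two-sided exact p-value: share of all orderings of y whose |r| >= observed |r|."""
    observed = abs(pearson_r(x, y))
    perms = list(permutations(y))  # 720 orderings when there are six agencies
    # tol guards against floating-point noise when comparing equal correlations
    extreme = sum(1 for p in perms if abs(pearson_r(x, p)) >= observed - tol)
    return extreme / len(perms)


def approx_power(rho, n, alpha=0.05):
    """Approximate two-sided power to detect a true correlation rho with n pairs (Fisher z)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = math.atanh(rho) * math.sqrt(n - 3)
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


# Hypothetical agency-level scores for six agencies (NOT study data).
culture_score = [52.0, 61.0, 48.0, 57.0, 66.0, 55.0]
implementation = [0.40, 0.25, 0.55, 0.30, 0.20, 0.35]
print(f"r = {pearson_r(culture_score, implementation):.2f}")
print(f"exact permutation p = {exact_permutation_p(culture_score, implementation):.4f}")

# Rough power with six agencies for a range of true correlations.
for rho in (0.3, 0.5, 0.7):
    print(f"rho = {rho}: approx. power with 6 agencies = {approx_power(rho, 6):.2f}")
```

Enumerating all 720 orderings is cheap at this sample size, which is one reason exact permutation tests suit very small samples; the price, as the power figures suggest, is that only very strong correlations are likely to reach significance.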

Lastly, measures of intervention fidelity were not included in this study. Adoption of the NIAAA Clinician's Guide was indicated by the frontline worker progressing through the step-by-step guide to its logical conclusion and completing the guide's form. For example, if the client answered "No" to the first prescreening question, "Do you sometimes drink beer, wine, or other alcoholic beverages?", then the EBP would be considered adopted once the worker documented that response and placed it in the research file.

In this study, adoption is not tied to fidelity, and the workers' fidelity to the EBP's specific clinical protocols was not measured. The Clinician's Guide instructions and the training materials directed workers to relate to the patient's concerns, encourage reflection, and acknowledge that change is difficult, along with other clinical engagement and rapport-building strategies. Although these are important clinical techniques (Fleming et al., 2002), measuring fidelity to them was beyond the scope of this study. Appropriately studying workers' consistency in delivering the intervention over the course of the project would have required specific fidelity methods, scales, and resources (Bond et al., 2000). This study sought to examine adoption of the intervention only.

About 20 alcohol screening and brief intervention trials have been conducted in medical settings and reported in the medical literature (Fleming & Manwell, 1999). The recommended screening and education methods available to staff during this pilot study were similar to those used in past trials. According to Fleming (2005), "The reliability and validity of these methods are similar to those of screening techniques used to detect chronic illnesses such as hypertension, diabetes, and lipid disorders" (p. 61).

Again, if worker and organizational characteristics can predict adoption of an intervention, the next logical step would be to measure fidelity and client outcomes. However, if workers cannot, at a minimum, adopt and sustain a new intervention within their current standard services, the effort and resources needed to design and measure fidelity would seem futile.

This was a first-of-its-kind study using Glisson and colleagues' (2008) culture and climate profiles as predictors of EST implementation. Although there has been speculation in the literature that poor organizational cultures and climates create barriers to implementation (Glisson et al., 2002), this study could not confirm that view. A developing literature shows that the subscales making up Glisson's concept of culture (e.g., Proficiency, Rigidity, and Resistance) and climate (e.g., Engagement, Functionality, and Stress) are not related to EST implementation in other mental health services (Patterson & Dulmus, 2012; Patterson, Dulmus, & Maguin, 2012). Although the prevailing theory has been that organizations with poor cultures and climates cannot function successfully (Glisson et al., 2002), recent studies have shown that some organizations with what have traditionally been characterized as the worst cultures and climates are among the best EST implementers (Patterson & Dulmus, 2012; Patterson et al., 2012). Given these findings, it may be that the tool Glisson and colleagues developed measures their concepts of culture and climate but yields few outcomes linking those concepts to worker behavior. Because of this study's limitations (a small, nonrandom sample of agencies), more research is needed before concluding that Glisson's culture and climate tool is not predictive of EST implementation.

In light of these findings, it is important to understand what factors contribute to successful implementation. Worker characteristics beyond those captured by Aarons's EBPAS could be better predictors of EST implementation. This project included an end-of-study qualitative evaluation. The top implementers, two women and two men, were evenly split between two programs. Each was asked one question: "What was the main reason you successfully implemented the new intervention?" Their responses centered on one personality trait: conscientiousness. One of the five basic personality traits, along with extraversion, agreeableness, emotional stability, and openness to experience (McCrae & John, 1992), conscientiousness has been shown to be a reliable predictor of worker behavior (Barrick & Mount, 2005). Conscientious workers can be described as hardworking, goal oriented, and motivated.

As an example of this trait, one of the top implementers stated, “This was something I was supposed to do… it is my mind-set.” According to this statement, the worker was given a job to do, and it was part of the worker’s internal makeup to get the task accomplished. Similarly, another successful implementer in a different program stated, “This is part of my personality…given a task to do, I do it.” Finally, when another worker was asked the reason for his or her success, the worker stated, “Frankly… [the intervention] needed to be done and I did it.” These workers saw a task that needed to be completed, and something within their personalities seemed to have carried them forward toward reaching that goal.

Although the qualitative methods and statements have limitations, it is interesting that this small cohort of successful implementers expressed a similar trait. Because it is ultimately the worker who is responsible for implementing ESTs, and because clinical practice should be empirically based, responsive to client needs, and outcome focused (Rosen, 2003), investigating worker traits related to implementation behavior would be beneficial. Identifying the characteristics of successful implementers and transferring them into practice might be a better approach than studying implementation failures and training workers to avoid those pitfalls.

REFERENCES

- Aarons GA. Mental Health Provider Attitudes toward Adoption of Evidence-Based Practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research. 2004;6:61–74. doi: 10.1023/b:mhsr.0000024351.12294.65.

- Aarons GA. Measuring Provider Attitudes toward Evidence-Based Practice: Organizational Context and Individual Differences. Child and Adolescent Psychiatric Clinics of North America. 2005;14:255–271. doi: 10.1016/j.chc.2004.04.008.

- Aarons GA, Glisson C, Hoagwood K, Kelleher K, Landsverk J, Cafri G. Psychometric Properties and United States National Norms of the Evidence-Based Practice Attitude Scale (EBPAS). Psychological Assessment. 2010;22:356–365. doi: 10.1037/a0019188.

- Aarons GA, Sawitzky AC. Organizational Culture and Climate and Mental Health Provider Attitude toward Evidence-Based Practices. Psychological Services. 2006;3:61–72. doi: 10.1037/1541-1559.3.1.61.

- Babor TF, Aguirre-Molina M, Marlatt A, Clayton R. Managing Alcohol Problems and Risky Drinking. American Journal of Health Promotion. 1999;14:98–103. doi: 10.4278/0890-1171-14.2.98.

- Babor TF, Higgins-Biddle JC. Alcohol Screening and Brief Intervention: Dissemination Strategies for Medical Practice and Public Health. Addiction. 2000;95:677–686. doi: 10.1046/j.1360-0443.2000.9556773.x.

- Babor TF, Higgins-Biddle J, Dauser D, Burleson JA, Zarkin GA, Bray J. Brief Interventions for At-Risk Drinking: Patient Outcomes and Cost-Effectiveness in Managed Care Organizations. Alcohol and Alcoholism. 2006;41:624–631. doi: 10.1093/alcalc/agl078.

- Babor TF, Higgins-Biddle J, Dauser D, Higgins P, Burleson J. Alcohol screening and brief intervention in primary care settings: Implementation models and predictors. Journal of Studies on Alcohol. 2005;66:361–269. doi: 10.15288/jsa.2005.66.361.

- Babor TF, Higgins-Biddle JC, Higgins PS, Gassman RA, Gould BE. Training medical providers to conduct alcohol screening and brief interventions. Substance Abuse Treatment. 2004;25:17–26. doi: 10.1300/J465v25n01_04.

- Barrick MR, Mount MK. Yes, Personality Matters: Moving On to More Important Matters. Human Performance. 2005;18:359–372.

- Beronio K, Po R, Skopec L, Glied S. Affordable Care Act Expands Mental Health and Substance Use Disorder Benefits and Federal Parity Protections for 62 Million Americans. ASPE Issue Brief. Washington, DC: U.S. Department of Health and Human Services; 2013.

- Bien TH, Miller WR, Tonigan SJ. Brief interventions for alcohol problems: A review. Addiction. 1993;88:315–336. doi: 10.1111/j.1360-0443.1993.tb00820.x.

- Bishara AJ, Hittner JB. Testing the significance of a correlation with nonnormal data: Comparison of Pearson, Spearman, transformation, and resampling approaches. Psychological Methods. 2012;17:399–417. doi: 10.1037/a0028087.

- Bond GR, Becker DR, Drake RE, Volger KM. A fidelity scale for the individual placement and support model of supported employment. Rehabilitation Counseling Bulletin. 2000;40:265–284.

- Bouchery EE, Harwood HJ, Sacks JJ, Simon CJ, Brewer RD. Economic Costs of Excessive Alcohol Consumption in the U.S., 2006. American Journal of Preventive Medicine. 2011;41:516–524. doi: 10.1016/j.amepre.2011.06.045.

- Burns BJ, Hoagwood KE. Evidence-Based Practice, Part II: Effecting Change. Child and Adolescent Psychiatric Clinics of North America. 2005;14:255–271. doi: 10.1016/j.chc.2004.11.001.

- Cooke RA, Szumal JL. Measuring normative beliefs and shared behavioral expectations in organizations: The reliability and validity of the Organizational Culture Inventory. Psychological Reports. 1993;72:1299–1330.

- Edgington ES. Randomization Tests. 2nd ed. New York: Dekker; 1987.

- Fleming MF. Screening and brief intervention in primary care settings. Alcohol Research & Health. 2005;28(2):57–62.

- Fleming M, Manwell LB. Brief intervention in primary care settings. Alcohol Research & Health. 1999;23(2):128–137.

- Fleming MF, Mundt MP, French MT, Manwell LB, Stauffacher EA, Barry KL. Brief physician advice for problem drinkers: Long-term efficacy and cost benefit analysis. Alcoholism: Clinical and Experimental Research. 2002;26:36–43.

- Garland AF, Kruse M, Aarons GA. Clinicians and Outcome Measurement: What's the Use? Journal of Behavioral Health Services and Research. 2003;30:393–405. doi: 10.1007/BF02287427.

- Glisson C. The organizational context of children's mental health services. Clinical Child and Family Psychology Review. 2002;5:233–253. doi: 10.1023/a:1020972906177.

- Glisson C, James LR. The cross-level effects of culture and climate in human services teams. Journal of Organizational Behavior. 2002;23:767–794.

- Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, Green P. Assessing the Organizational Social Context (OSC) of Mental Health Services: Implications for Research and Practice. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35:98–113. doi: 10.1007/s10488-007-0148-5.

- Hemmelgarn AL, Glisson C, James LR. Organizational culture and climate: Implications for services and interventions research. Clinical Psychology: Science and Practice. 2006;13:73–89.

- Holder HD, Blose JO. The Reduction of Health Care Costs Associated with Alcoholism Treatment. Journal of Studies on Alcohol. 1992;53:293–302. doi: 10.15288/jsa.1992.53.293.

- Institute of Medicine. To Err Is Human: Building a Safer Health System. Washington, DC: National Academies Press; 2000.

- Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- Institute of Medicine. Improving the Quality of Health Care for Mental and Substance-Use Conditions: Quality Chasm Series. Washington, DC: National Academies Press; 2006.

- Kahan M, Wilson L, Becker L. Effectiveness of Physician Based Interventions with Problem Drinkers: A Review. Canadian Medical Association Journal. 1995;152:851–859.

- Keller B. Detecting treatment effects with small samples: The power of some tests under the randomization model. Psychometrika. 2012;77:324–338.

- Kessler RC, Berglund P, Demler O, Jin R, Merikangas KR, Walters EE. Lifetime Prevalence and Age-of-Onset Distributions of DSM-IV Disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry. 2005;62:593–602. doi: 10.1001/archpsyc.62.6.593.

- Krueger RA, Casey MA. Focus Groups: A Practical Guide for Applied Research. 3rd ed. Thousand Oaks, CA: Sage; 2000.

- LaFleur BJ, Greevy RA. Introduction to permutation and resampling-based hypothesis tests. Journal of Clinical Child & Adolescent Psychology. 2009;38:286–294. doi: 10.1080/15374410902740411.

- McCrae RR, John OP. An introduction to the Five-Factor Model and its applications. Journal of Personality. 1992;60:175–215. doi: 10.1111/j.1467-6494.1992.tb00970.x.

- McGovern MP, Fox TS, Xie H, Drake RE. A Survey of Clinical Practices and Readiness to Adopt Evidence-Based Practices: Dissemination Research in an Addiction Treatment System. Journal of Substance Abuse Treatment. 2004;26:305–312. doi: 10.1016/j.jsat.2004.03.003.

- Miller MR, Sorensen JL, Selzer JA, Brigham GS. Disseminating Evidence-Based Practices in Substance Abuse Treatment: A Review with Suggestions. Journal of Substance Abuse Treatment. 2006;31:25–39. doi: 10.1016/j.jsat.2006.03.005.

- National Institute on Drug Abuse. Principles of Drug Addiction Treatment: A Research-Based Guide. 2nd ed. Bethesda, MD: National Institutes of Health; 2009. HHS Publication (NIH) 09-4180. http://www.drugabuse.gov/publications/principlesdrugaddictiontreatment/principleseffective-treatment.

- National Institute on Drug Abuse. Adoption of NIDA's Evidence-Based Treatments in Real World Settings: A National Advisory Council on Drug Abuse Workgroup Report. 2012. http://www.drugabuse.gov/sites/default/files/files/evidencebased_treatments_in_real_world_settings_workgroup_report.pdf.

- National Institutes of Health. Helping Patients Who Drink Too Much: A Clinician's Guide. 2005. http://pubs.niaaa.nih.gov/publications/Practitioner/CliniciansGuide2005/clinicians_guide.htm.

- Nelson TD, Steele RG, Mize JA. Practitioner Attitudes toward Evidence Based Practice: Themes and Challenges. Administration and Policy in Mental Health. 2006;33:398–409. doi: 10.1007/s10488-006-0044-4.

- Office of Applied Studies. The NSDUH Report: Alcohol Treatment: Need, Utilization, and Barriers. Rockville, MD: Substance Abuse and Mental Health Services Administration; Apr 9, 2009.

- Ogborne AC, Wild TC, Braun K, Newton-Taylor B. Measuring treatment process beliefs among staff of specialized addiction treatment services. Journal of Substance Abuse Treatment. 1998;15:301–312. doi: 10.1016/s0740-5472(97)00196-7.

- Patterson DA. What are the ideal characteristics of empirically supported treatment adopters? Journal of Human Behavior in the Social Environment. 2014;24:408–414. doi: 10.1080/10911359.2014.875343.

- Patterson DA, Dulmus CN. Organizational Barriers to Adopting an Alcohol Screening and Brief Intervention in Community-Based Mental Health Organizations. Best Practices in Mental Health: An International Journal. 2012:16–28.

- Patterson DA, Dulmus CN, Maguin E. Empirically supported treatment's impact on organizational culture and climate. Research on Social Work Practice. 2012;22(6):665–671. doi: 10.1177/1049731512448934.

- Patterson DA, Dulmus CN, Maguin E. Is Openness to Using Empirically Supported Treatments Related to Organizational Culture and Climate? Journal of Social Service Research. 2013;39:562–571. doi: 10.1080/01488376.2013.804023.

- Patterson DA, Maguin E, Dulmus CN, Nisbet BC. Individual Worker-Level Attitudes toward Empirically Supported Treatments. Research on Social Work Practice. 2013;23(1):95–99. doi: 10.1177/1049731512463442.

- Rehm J, Mathers C, Popova S, Thavorncharoensap M, Teerawattananon Y, Patra J. Global Burden of Disease and Injury and Economic Cost Attributable to Alcohol Use and Alcohol-Use Disorders. Lancet. 2009;373(9682):2223–2233. doi: 10.1016/S0140-6736(09)60746-7.

- Roche AM, Horham ED, Richmond E. The General Practitioners' Role in AOD Issues: Overcoming Individual, Professional and Systemic Barriers. Drug and Alcohol Review. 2002;21:223–230. doi: 10.1080/0959523021000002679.

- Rosen A. Evidence-Based Social Work Practice: Challenges and Promise. Social Work Research. 2003;27:197–208.

- Schneider B, Ehrhart MG, Macey WH. Perspectives on Organizational Climate and Culture. In: Zedeck S, editor. APA Handbook of Industrial and Organizational Psychology: Vol. 1. Building and Developing the Organization. Washington, DC: American Psychological Association; 2011. pp. 373–414.

- Sorensen JL, Midkiff EE. Bridging the Gap between Research and Drug Abuse Treatment. Journal of Psychoactive Drugs. 2002;32:379–382. doi: 10.1080/02791072.2000.10400239.

- Stahmer AC, Aarons GA. Attitudes toward Adoption of Evidence-Based Practices: A Comparison of Autism Early Intervention Teachers and Children's Mental Health Providers. Psychological Services. 2009;6:223–234. doi: 10.1037/a0010738.

- U.S. Department of Health and Human Services. The Road Ahead: Research Partnerships to Transform Services. A report by the National Advisory Mental Health Council's Workgroup on Services and Clinical Epidemiology Research. Bethesda, MD: National Institutes of Health, National Institute of Mental Health; 2006.

- Verbeke W, Volgering M, Hessels M. Exploring the conceptual expansion within the field of organizational behavior: Organizational climate and organizational culture. Journal of Management Studies. 1998;35:303–329.

- Willenbring ML, Olson DH. A randomized trial of integrated outpatient treatment for medically ill alcoholic men. Archives of Internal Medicine. 1999;159(16):1946–1952. doi: 10.1001/archinte.159.16.1946.