Abstract

Previous studies demonstrated that a region in the left fusiform gyrus, often referred to as the “visual word form area” (VWFA), is responsive to written words, but the precise functional role of VWFA remains unclear. In the present study, we investigated the influence of orthographic similarity and lexical factors on the multivoxel response patterns to written stimuli. Using high-resolution fMRI at 7 Tesla, we compared the organization of visual word representations in VWFA to the organization in early visual cortex and a language region in the superior temporal gyrus. Sets of four-letter words and pseudowords were presented, in which orthographic similarity was parametrically manipulated. We found that during a lexical decision task VWFA is responsive to the lexical status of a stimulus, but both real words and pseudowords were further processed in terms of orthographic similarity. In contrast, early visual cortex was only responsive to the visual aspects of the stimuli, and in the left superior temporal gyrus there was an interaction between lexical status and orthography such that only real words were processed in terms of orthographic similarity. These findings indicate that VWFA represents the word/non-word status of letter strings as well as their orthographic similarity.

Keywords: visual word form area, fMRI, orthography, lexicality

1. Introduction

Reading is an important cognitive skill, trained by extensive experience with written words. A specific word is created by putting different letters in a particular order. Beyond this visual analysis, we also process the meaning associated with this specific combination of letters. The semantic content of a written word is largely independent of the visual appearance of a word: two words can differ in only one letter, but have a completely different meaning (e.g. ‘flog’ and ‘flag’), while two other words that have a similar meaning can share not a single letter (e.g. ‘flog’ and ‘beat’). In the present study, we made use of these characteristics to try to dissociate the influence of orthography, lexical factors and semantics on the neural response patterns to written words in different regions across the brain.

Learning to read causes a region in the left fusiform gyrus to become increasingly responsive to visual words (Ben-Shachar et al., 2011; Olulade et al., 2013), often referred to as the “visual word form area” (VWFA: Cohen & Dehaene, 2004). Dehaene et al. (2005) propose a hierarchy of local combination detectors that represent progressively larger word fragments (letters to bigrams to quadrigrams) along the ventral visual pathway. VWFA is presumed to serve an orthographic lexicon function (Bruno et al., 2008; Guo & Burgund, 2010; Kronbichler et al., 2004, 2007). However, there are two important questions about the functional role of VWFA. First, is VWFA restricted to lower-level representations, e.g. characters and sublexical letter combinations (Binder et al., 2006; Dehaene et al., 2002, 2005; Vinckier et al., 2007), or does VWFA contain neurons tuned to entire words (Glezer et al., 2009; Schurz et al., 2010)? Evidence for lower-level representations is found, for example, in the sensitivity of VWFA to the degree of orthographic structure in a letter string, even when the letter sequences do not show a resemblance to words (Binder et al., 2006). In contrast, representations of whole words would predict a difference between the responses to real words and other non-word letter combinations. While some studies found a lower mean activation in VWFA when presenting real words compared with pseudowords or letter strings (Bruno et al., 2008; Kronbichler et al., 2004, 2007; Price, Wise, & Frackowiak, 1996; Woollams et al., 2010; Xu et al., 2001), others failed to find any difference in activity levels (Baker et al., 2007; Dehaene et al., 2002; Vinckier et al., 2007) or found a reverse effect (Cohen et al., 2002). However, in an fMRI adaptation study, Glezer et al. (2009) discovered a smaller release from adaptation after a one-letter change for pseudowords compared to real words. Glezer et al. (2009) proposed that this difference was due to a difference in tuning selectivity for real words and pseudowords, with more selective tuning for words.

The second question is related to the functional specialization of VWFA: is this area specific to the processing of the ‘visual word form’, an abstract representation of the sequence of letters that composes a written string (Cohen et al., 2002; Dehaene et al., 2005, Dehaene & Cohen, 2011), or is it involved in the integration of visual information with higher level information such as semantics (Brunswick, McCrory, & Price, 1999; Price & Devlin, 2003; Price & Devlin, 2011; Song, Tian, & Liu, 2012; Xue et al., 2006)? Evidence for a role in representing higher level information is derived, for example, from the priming effect of semantically related pictures and words irrespective of the stimulus type in the left ventral occipito-temporal cortex (Kherif, Josse, & Price, 2011). However, multiple other studies did not find a modulation of the responses in VWFA based on the semantic content (e.g. Dehaene et al., 2002; Glezer et al., 2009). Thus, the extent to which higher level information is represented in VWFA is still a matter of debate. In contrast, in the domain of language, it is less controversial that an area in the superior temporal gyrus is involved in semantic processing (Haldgren et al., 2002; Pylkkänen & Marantz, 2003; Simos, Basile, & Papanicolaou, 1997; Vartiainen, Parviainen, & Salmelin, 2009).

In the present study, we parametrically manipulated the orthographic similarity of four-letter real words and pseudowords: stimuli could differ by 1, 2, 3 or all letters. Further, to try to dissociate the influence of orthography and semantics on the response patterns, stimuli with no orthographic overlap were semantically related. The organization of these visual word representations was investigated in VWFA and compared to the organization in the early visual cortex region and the superior temporal gyrus. Data were analyzed using multivariate analysis techniques. These relatively recent methods (e.g. Haxby et al., 2001; Norman et al., 2006) combine the information of the responses across voxels. With these methods, we can investigate the internal organization of the visual word representations based on the pattern of information within each region. This internal organization might be missed with univariate analyses in which each voxel is treated as a separate entity (Mahmoudi et al., 2012) and in which the integration of information across voxels assumes that nearby voxels contain the same signal (e.g., through averaging across all voxels in an ROI). For example, Nestor et al. (2013) showed that VWFA can discriminate between real words and equally complex false-font controls when multivariate mapping was applied, while no difference in mean activation was found. If an area is responsive to the lexical status, a difference between the response patterns of the real words and the pseudowords should emerge. Responsiveness to the orthographic structure of the stimuli would be shown by a difference in similarity between stimuli that have no, a few or all letters in common. We hypothesized that areas that strongly represent orthography will show a linear effect of the number of differing letters on the similarity of the response patterns. Finally, if semantics are represented within an area, the response patterns of the semantically related stimuli should be more similar compared to response patterns of semantically unrelated words.

2. Method

2.1 Participants

21 native English speakers with normal or corrected-to-normal vision participated in this study as paid volunteers. All reported being right-handed. Data from five participants were excluded, three due to technical problems during scanning and two because the participants did not perform the tasks as instructed (less than 50% correct responses in total or no responses during multiple runs). The protocol for the experiments was approved by the National Institutes of Health Institutional Review Board. Participants signed an informed consent at the start of the imaging session.

2.2 Stimuli

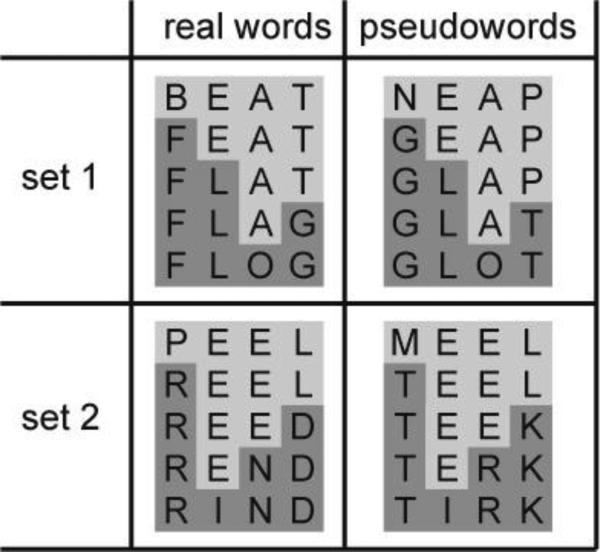

Stimuli included two sets of five real words (RW) and two sets of five pseudowords (PW) (Figure 1). In each set of real words, each word differed from the previous word in only one letter. The position of the substituted letter across words was varied over the two sets. The first and last word within each set did not have any letters in common, but were semantically related (i.e., synonyms). No close semantic relationship existed between the remaining stimuli or between words of the different sets. Two sets of pseudowords were created, consisting of pronounceable non-existing words and one uncommon word (frequency: 1 per million) unknown to all participants except one (in which case the data for this stimulus were not included in the analyses). PW sets matched the RW sets on a number of criteria. For each RW set, a PW set was created using the same vowels, in order to control for phonology between the two different lexical categories as much as possible. The position of the substituted letter across words also remained the same for matching RW and PW sets. Finally, RW and PW sets were matched for summated bigram (t(18)=.916, p=.372) and trigram (t(18)=1.364, p=.190) frequency.

Figure 1.

Real word and pseudoword stimulus sets.
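
The orthographic manipulation described above amounts to counting the letter positions at which two four-letter strings differ. The following is a minimal sketch of that count. The function name `letters_different` and the five-item chain are illustrative assumptions: the chain is a made-up example that follows the one-letter-per-step rule and is not the stimulus set of Figure 1. The pairs 'flog'/'flag' and 'flog'/'beat' are taken from the Introduction.

```python
def letters_different(a: str, b: str) -> int:
    """Count the positions at which two equal-length letter strings differ."""
    if len(a) != len(b):
        raise ValueError("stimuli must have the same number of letters")
    return sum(x != y for x, y in zip(a, b))


# Hypothetical chain in which each item differs from the previous one
# by a single substituted letter (NOT the actual stimuli of the study).
chain = ["cold", "cord", "card", "ward", "warm"]

# Pairwise orthographic distance between all items of the chain.
distances = [[letters_different(a, b) for b in chain] for a in chain]

for word, row in zip(chain, distances):
    print(word, row)
```

In such a chain the orthographic distance between two items equals the number of steps separating them, so the first and last items share no letter in the same position.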

2.3 Apparatus

Imaging data were acquired using a 7T Siemens scanner in the functional MRI facility at NIH. Functional images were acquired using a 32-channel head coil with an in-plane resolution of 1.6 × 1.6 mm and 49 1.6mm slices (0.16mm inter-slice gap, repetition time (TR) = 2s, echo time (TE) = 25ms, matrix size = 120 × 120, field of view (FOV) = 192mm). Slices were oriented approximately parallel to the base of temporal lobe and extended throughout the temporal lobe and part of the frontal and parietal lobes. Each functional run consisted of 200 T2*-weighted echoplanar images (EPIs). In addition we collected a standard high-resolution T1-weighted MPRAGE anatomical scan for each participant (192 slices). For more information on the characteristics of high field scanning, we refer to Norris (2003), Ohlman and Yacoub (2011) and Ugurbil et al. (2003); or to Lee, Kravitz and Baker (2013) which is based on the same 7T scanner. Stimuli were presented using Psychtoolbox 3 (Brainard, 1997) via an LCD projector that projected the image on a screen the participant viewed through a mirror.

2.4 Design

Experimental runs were constructed according to a rapid-presentation event-related design to schedule the presentation of the stimuli. During one run, each of the 20 stimuli (approximately 3 visual degrees) was presented four times. The order of the stimuli and the interstimulus intervals were scheduled using optseq2 (Dale, 1999) and were varied over participants. Each stimulus presentation lasted for 0.5s and the interval between stimuli was jittered with minimum 3.5s and maximum 11.5s. Total duration of each run was 400s. A red fixation dot was continuously present on the screen and the position of the stimuli was randomly scattered around the fixation dot (maximum deviation of 0.5 visual degrees horizontally and vertically from the screen centre). During experimental runs, participants performed a lexical decision task: in each trial they indicated whether the stimulus was a real word or a pseudoword by pressing one of two response buttons.

Independent localizer runs were conducted to identify regions of interest in each participant individually. Participants viewed 3 or 4 runs during which 15s blocks of English words, line drawings of objects, and Chinese characters were presented, largely overlapping with the stimuli used in Baker et al. (2007). Word stimuli in the localizers all comprised 3, 4 or 5 letters. To ensure the content of the stimuli was processed, participants reported when the word and line drawings stimuli represented a living thing (humans, animals or plants) using a response button. In each block 20 different stimuli were presented, of which 3 were living things. Since the participant could not attach meaning to the Chinese characters, this task was not possible for these stimuli. To equate motor responses between the different stimulus blocks, participants pressed a button when the stimulus was rotated 45° during Chinese character blocks (3 rotated stimuli per block). Each run contained 15 blocks (4×3 stimulus blocks and 3 fixation blocks). In both experimental and localizer runs, participants were asked to maintain central fixation.

2.5 Procedure

Before the imaging session, participants were shown sentences in which the real words were used. Their understanding of the words was checked by asking them to explain the meaning of each word. In case of an incorrect understanding, the words were explained in more detail. The participants received instructions about both tasks before entering the scanner room and the corresponding task instructions were repeated at the start of each run. Every scan session lasted 2 hours during which the participant completed between 8 and 10 experimental runs and 3 or 4 localizer runs. For one participant an insufficient number of experimental runs were collected due to scheduling issues. A second session was planned, resulting in a total of 15 experimental runs and 6 localizer runs for that participant.

2.6 Analysis

fMRI Preprocessing

Imaging data were analysed using the Statistical Parametric Mapping software package (SPM8, Wellcome Department of Cognitive Neurology, London), as well as custom MATLAB code for the selection of ROIs and the multivariate analyses. Preprocessing steps involved slice timing correction to correct for differences in acquisition time and spatial realignment to remove movement artefacts. Spatial realignment was performed using a two-step procedure: first all images were realigned to the first image, during which a mean image was computed. In the second step all images were realigned to this mean image. During motion correction, the translation and rotation parameters were extracted to include in the general linear model. Functional and anatomical images were coregistered by aligning the anatomical scan to the functional images. Preprocessing further included spatial normalization to an MNI (Montreal Neurological Institute) template using a 4th degree B-spline interpolation. Finally, functional images were smoothed using Gaussian kernels of 3 mm full-width at half maximum (FWHM).

Statistical analyses

Statistical modelling of the signal at an individual level in each voxel included a general linear model applied to the pre-processed images, with regressors for each condition (20 independent variables corresponding to the different stimuli) and six covariates (the translations and rotation parameters needed for realignment). Further analyses were performed using the parameter estimates (‘beta values’) per run obtained after fitting the general linear model.

Regions of interest

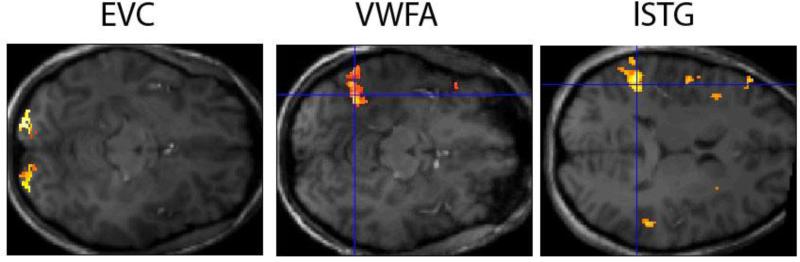

Regions of interest (ROIs) were defined by a combination of functional data from the localizer scans (using the SPM8 contrast manager) and anatomical landmarks. A custom-made MATLAB script was used for the selection of significantly activated voxels displayed on coronal sections. The visual word form area (VWFA) was defined as the cluster of voxels in the left mid-fusiform gyrus that showed significantly greater activation for words compared to objects and Chinese characters (M = 378 voxels, SD = 264). The average peak MNI coordinates for VWFA were −44 −56 −16, which is comparable to the coordinates of the VWFA in previous work (e.g. Cohen et al., 2000, 2002). Two additional ROIs were defined as control regions. An early visual cortex (EVC) region (M = 299 voxels, SD = 76) was defined by an all stimulus conditions (words, objects and Chinese characters) versus baseline contrast after applying an anatomical mask that selected BA17, which approximately corresponds to V1. The mask was constructed using the PickAtlas Toolbox in SPM (Advanced NeuroScience Imaging Research Laboratory, Wake Forest University, Winston-Salem, NC). To select a possibly semantic ROI in the temporal region, two contrasts were compared, namely ‘words and objects minus Chinese characters’ and ‘words minus Chinese characters’. Both contrasts target voxels that show larger responses to meaningful stimuli (words and objects) than to stimuli to which participants could attach no meaning (Chinese characters). The second contrast elicited on average a larger cluster of activation (M = 313 voxels, SD = 249; compared to M = 155 voxels, SD = 158 for the first contrast) in the left temporal region. This cluster of activation was located in the left superior temporal gyrus area (average peak MNI coordinates −54 −41 17), and is further referred to as lSTG. All contrasts were thresholded at p<.0001 (uncorrected for multiple comparisons). Figure 2 shows an example image of each of the ROIs. Because the ROIs were defined individually, they differ in size. To ensure that the effects found cannot be attributed to differences in size, we conducted control analyses in which the data of the two participants for whom the size of the smallest and largest ROI differed the most were removed. The resulting average number of voxels was 300, 297 and 306 for EVC, VWFA and lSTG, respectively. The overall result pattern was not affected by this control. In the Results and Supplemental Data section we report the results with the data of all participants. When there was a difference between both analyses, we report both results.

Figure 2.

Example of the ROIs for representative participants. A mask was applied for EVC and only activated voxels (p<.0001) within this mask are shown. All activated voxels were selected for the EVC ROI. For VWFA and lSTG, only the cluster that is indicated by the crosshair was included in the relevant ROIs.

Multi-voxel correlational analyses

Correlational analyses were computed similarly to previously published methods (Norman et al., 2006; Op de Beeck et al., 2010). The data were first divided into two random equally sized subsets of runs (or in the case of an odd number of runs, with only one run difference). We constructed lists as long as the number of voxels in each ROI, and each list contained the response (beta value) for all voxels for a particular condition in one subset. These responses were normalized by subtracting the mean response across all conditions for each voxel separately (“cocktail blank normalization”). The values in each list of the first subset were correlated with the values in each list of the second subset, resulting in an asymmetrical 20 × 20 correlation matrix. These correlations represent the similarity between the activation pattern for a stimulus in the first subset of the data and the activation pattern for a (same or different) stimulus in the second subset of the data. The mean correlation in the matrix was close to zero (due to the cocktail blank normalization), so that positive correlations indicate higher similarity and negative correlations less similarity than the average similarity among the 20 stimulus conditions. The procedure of dividing the runs into two random subsets was repeated 100 times, and the resulting similarity matrices were averaged across these 100 iterations. Specific hypotheses were tested by averaging over the relevant cells of the individual correlation matrices. For tests concerning orthographic similarity, only the correlations between stimuli that differed by 0, 1, 2 or 3 letters were included, since the semantic relationship between the words that differ by 4 letters (synonyms) might obscure a possible effect of orthography. Data about the investigation of a semantic effect are provided as Supplemental Data.
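
The split-half procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the custom MATLAB code used in the study: the input layout `betas[run][condition][voxel]`, the function names, and the averaging of beta values across the runs of each subset before normalization are all assumptions made for the example. The demo data are synthetic.

```python
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = (sum(a * a for a in dx) * sum(b * b for b in dy)) ** 0.5
    return num / den


def half_patterns(betas, runs):
    """Average betas over the given runs (an assumption), then subtract each
    voxel's mean across conditions ("cocktail blank normalization")."""
    n_cond, n_vox = len(betas[0]), len(betas[0][0])
    avg = [[mean(betas[r][c][v] for r in runs) for v in range(n_vox)]
           for c in range(n_cond)]
    voxel_means = [mean(avg[c][v] for c in range(n_cond)) for v in range(n_vox)]
    return [[avg[c][v] - voxel_means[v] for v in range(n_vox)]
            for c in range(n_cond)]


def split_half_similarity(betas, n_iter=100, seed=0):
    """Average asymmetric condition-by-condition correlation matrix over
    n_iter random splits of the runs into two (near-)equal subsets."""
    rng = random.Random(seed)
    n_runs, n_cond = len(betas), len(betas[0])
    total = [[0.0] * n_cond for _ in range(n_cond)]
    for _ in range(n_iter):
        runs = list(range(n_runs))
        rng.shuffle(runs)
        first = half_patterns(betas, runs[: n_runs // 2])
        second = half_patterns(betas, runs[n_runs // 2:])
        for i in range(n_cond):
            for j in range(n_cond):
                # Row index: condition in subset 1; column: condition in subset 2.
                total[i][j] += pearson(first[i], second[j])
    return [[t / n_iter for t in row] for row in total]


# Synthetic demo (made-up numbers): 4 runs, 3 conditions, 30 voxels.
rng = random.Random(1)
templates = [[rng.gauss(0, 1) for _ in range(30)] for _ in range(3)]
demo_betas = [[[t + rng.gauss(0, 0.5) for t in template]
               for template in templates] for _ in range(4)]
similarity = split_half_similarity(demo_betas, n_iter=10)
```

With the 20 stimulus conditions of the experiment, the same function would return a 20 × 20 matrix whose diagonal holds the same-stimulus (0 letters different) correlations.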

Behavioral data analyses

Percentage correct and incorrect answers were calculated for each stimulus separately (based on the categorization in Figure 1). Some individual subjects had difficulty with the categorization of a stimulus. In case the ratio of incorrect and correct responses about the lexical categorization for a stimulus was higher than 1 at an individual level, the data for that stimulus in that subject were excluded from further analyses. This resulted in a removal of 2.2% of the data, divided over six participants. The average resulting ratio of incorrect to correct responses was 0.03.
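
The exclusion rule above can be written as a simple predicate. This is a sketch only: the function name and the response counts are hypothetical, and the handling of a stimulus with no responses at all (kept here) is an assumption, since that case is not described in the text.

```python
def keep_stimulus(n_correct: int, n_incorrect: int) -> bool:
    """Keep a stimulus for a participant unless incorrect/correct exceeds 1.

    A ratio above 1 is equivalent to more incorrect than correct responses,
    which also covers the case of zero correct responses.
    """
    return n_incorrect <= n_correct


# Hypothetical (correct, incorrect) counts for one participant.
counts = {"stimulus_a": (30, 2), "stimulus_b": (10, 22), "stimulus_c": (16, 16)}
kept = {name: keep_stimulus(c, i) for name, (c, i) in counts.items()}
print(kept)
```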

3. Results

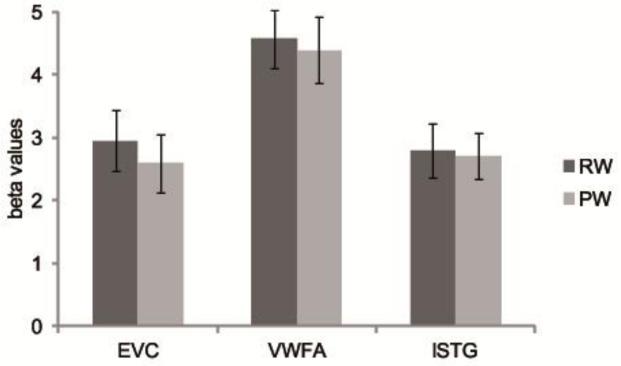

3.1 Lexical category discrimination

We first investigated whether the univariate responses in the selected ROIs could be used to discriminate between the stimuli based on their lexical status (real words vs pseudowords). We entered mean beta values into a two-way ANOVA with ROI (EVC, VWFA, lSTG) and lexical status (real words, pseudowords) as within-subject factors, revealing only a main effect of ROI (F(2,30)=10.719, p<.001) and no effects involving lexical status (all p > 0.05). These results indicate that there were no differences in mean activity for the different types of words across the ROIs, and post-hoc t-tests confirmed that no individual ROI evidenced any difference (EVC: t(15)=1.339, p=.201; VWFA: t(15)=1.108, p=.286; lSTG: t(15)=.406, p=.691; see Figure 3). The main effect of ROI stemmed from a higher response in VWFA than in either EVC (t(15)=3.528, p=.003) or lSTG (t(15)=4.603, p<.001), while no difference in mean response level was found between EVC and lSTG (t(15)=.004, p=.997).

Figure 3.

Mean beta values for real words (RW) and pseudowords (PW) grouped by region of interest. Error bars represent the standard error of the mean (SEM).

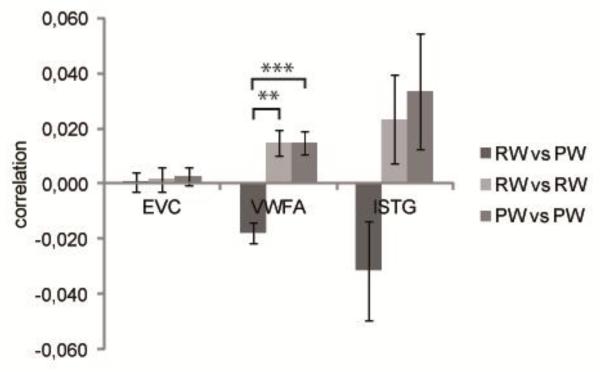

We further conducted multi-voxel correlational analyses to investigate whether the pattern of responses in the ROIs could be used to discriminate between RWs and PWs (Figure 4). To do this, within each ROI we extracted the average correlation between different RWs, different PWs, and between RWs and PWs. Note that the average number of overlapping letters between stimuli within each lexical category (RW: M=1.2, PW: M=1.4) was similar to the number of overlapping letters between lexical categories (M=1.2); thus, any effect of orthographic similarity cannot confound the current analysis. If the correlation between RWs and PWs is lower than the average correlations within those categories, this indicates that the multi-voxel patterns can be used to discriminate between the categories. In EVC, the different correlation types did not differ (F(2,30)=.110, p=.896). In contrast, in VWFA, an effect of the correlation type was found (F(2,30)=16.028, p<.001), with higher correlations within the lexical categories than between the categories of RW and PW (RWPW vs RWRW: t(15)=4.085, p=.001; RWPW vs PWPW: t(15)=4.482, p<.001; RWRW vs PWPW: t(15)=.011, p=.991). This pattern of results indicates that VWFA does categorize the stimuli based on lexical status. When comparing the patterns between VWFA and EVC using a two-way ANOVA with ROI and correlation type (RWvsPW, RWvsRW, PWvsPW), a significant interaction was revealed (F(2,30)=10.043, p<.001). Finally, in lSTG, the same pattern as described in VWFA was present, but the trend failed to reach significance (F(2,30)=2.739, p=.081). The absence of a significant interaction between correlation type and ROI (VWFA and lSTG) confirmed this trend (F(2,30)=.651, p=.529).

Figure 4.

Results of the correlational analyses considering discrimination based on lexical status, grouped by region of interest. Error bars represent the standard error of the mean (SEM). ** p<.01, *** p<.001
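
The three correlation types compared in this section (RWRW, PWPW and RWPW) are averages over different cells of a participant's similarity matrix. A minimal sketch of that averaging is given below, assuming a square matrix `sim` indexed by stimulus and a list of booleans marking the real words; both names and the toy matrix are illustrative. Same-stimulus cells on the diagonal are skipped because the text refers to correlations between different stimuli.

```python
from statistics import mean


def correlation_types(sim, is_real_word):
    """Average off-diagonal correlations within and between lexical categories."""
    cells = {"RWRW": [], "PWPW": [], "RWPW": []}
    n = len(sim)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # skip same-stimulus correlations
            if is_real_word[i] and is_real_word[j]:
                cells["RWRW"].append(sim[i][j])
            elif not is_real_word[i] and not is_real_word[j]:
                cells["PWPW"].append(sim[i][j])
            else:
                cells["RWPW"].append(sim[i][j])
    return {name: mean(values) for name, values in cells.items()}


# Toy 4 x 4 matrix (made-up values): two real words followed by two pseudowords.
toy = [
    [0.5, 0.2, -0.1, -0.1],
    [0.2, 0.5, -0.1, -0.1],
    [-0.1, -0.1, 0.5, 0.2],
    [-0.1, -0.1, 0.2, 0.5],
]
averages = correlation_types(toy, [True, True, False, False])
print(averages)
```

A region that separates the lexical categories would show a lower RWPW average than RWRW and PWPW averages, as in this toy example.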

3.2 Effect of orthographic similarity

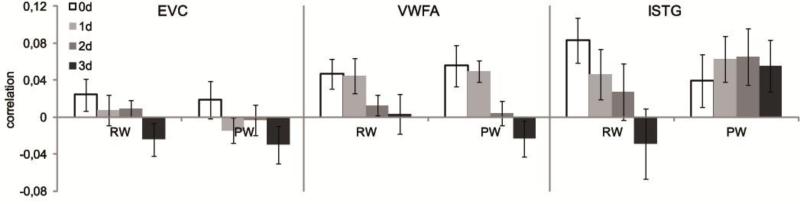

In case of an effect of orthographic similarity, we expect the neural patterns of two stimuli to be more similar the more letters these stimuli have in common. We performed a 2 (lexical status: RW or PW) × 4 (number of letters different: 0-3) repeated measures ANOVA to investigate whether orthographic similarity played a role in the response of the regions of interest and whether any effect was influenced by the lexical status of the stimuli. Results are summarized in Figure 5.

Figure 5.

Results of the correlational analyses investigating the effect of orthographic similarity: stimuli could either differ by 0, 1, 2 or 3 letters (0d, 1d, 2d or 3d). Error bars represent the standard error of the mean (SEM).
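
As an illustration of the linear contrast reported in the following paragraphs, the sketch below evaluates a linear trend over the four levels of letters different (0 to 3). It assumes that the contrast is computed as a one-sample t-test on per-participant contrast scores with the standard orthogonal polynomial weights (−3, −1, 1, 3), so that F(1, n−1) = t². The software and exact procedure used for the reported tests are not stated in the text, and the input values below are made up.

```python
from statistics import mean, stdev

# Standard linear polynomial weights for four equally spaced levels.
WEIGHTS = (-3, -1, 1, 3)


def linear_contrast(per_subject_means):
    """Return (mean contrast score, F, (df1, df2)) for a linear trend.

    per_subject_means: one row per participant holding the average
    correlation for 0, 1, 2 and 3 letters different.
    """
    scores = [sum(w * m for w, m in zip(WEIGHTS, row)) for row in per_subject_means]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / n ** 0.5)
    return mean(scores), t * t, (1, n - 1)


# Made-up averages for four hypothetical participants.
toy = [
    [0.30, 0.20, 0.10, 0.00],
    [0.25, 0.20, 0.10, 0.05],
    [0.35, 0.15, 0.10, 0.00],
    [0.20, 0.15, 0.10, 0.05],
]
score, f_value, df = linear_contrast(toy)
print(score, f_value, df)
```

A negative mean contrast score means that pattern similarity decreases as more letters differ, which is the direction predicted for a region that represents orthography.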

In EVC, there was no main effect of lexical status (F(1,15)=1.857, p=.193) but there was a trend for a main effect of orthographic similarity (F(3,45)=2.603, p=.064). As mentioned previously, we can expect an effect of orthographic similarity to be linear, which was confirmed in EVC by means of a linear contrast (F(1,15)=6.573, p=.022). There was no interaction between the lexical status and the orthographic similarity (F(3,45)=.107, p=.955).

In VWFA, there was also no main effect of lexical status (F(1,15)=.258, p=.619), but there was a highly significant main effect of orthographic similarity (F(3,45)=6.687, p=.001). The linear contrast of this effect was also significant (F(1,15)=9.702, p=.007). There was no interaction between lexical status and orthographic similarity (F(3,45)=.313, p=.816), indicating that the effect of orthographic similarity was equally large for pseudowords and real words.

Finally, in lSTG, no main effect was found of either lexical status (F(1,15)=1.780, p=.202) or orthographic similarity (F(3,45)=1.547, p=.215). However, there was a trend for an interaction between the two variables (F(3,45)=2.739, p=.054). Closer evaluation revealed that an effect of orthographic similarity was present for real words (F(3,45)=2.939, p=.043), but not for pseudowords (F(3,45)=.354, p=.786). This effect of orthographic similarity for real words was linear (F(1,15)=5.780, p=.030). When performing the control analysis using the data of only 14 participants, both the main effect of orthographic similarity (F(3,39)=3.682, p=.020) and the interaction effect (F(3,39)=2.880, p=.048) were significant. Further analysis showed that the main effect can be explained by an effect of orthographic similarity for the real words (F(3,39)=4.048, p=.013). Again no such effect was found for the pseudowords (F(3,39)=1.374, p=.265).

Given the strong effect of orthographic similarity in VWFA, we further tested, in a post-hoc manner, whether in addition to the number of letters changing between two stimuli, an effect of the characteristics of the changing letter could be found. Behavioral research suggests that the first and last letters of a word are more important than the interior letters during reading (e.g. Hammond & Green, 1982; Humphreys et al., 1990). For these tests, only correlations between stimuli that differed by 1 letter were included. We tested whether the position of the changing letter caused the neural patterns to be less or more similar to each other. There was no main effect of the position of the letter that changed between two stimuli (F(3,45)=1.016, p=.395). Next we performed a test to investigate whether the type of the changing letter was important: letters could either change from one vowel to another vowel, from one consonant to another consonant, or the letter type could change (from a vowel into a consonant or vice versa). The main effect of letter type changing was not significant (F(2,30)=1.177, p=.322).

In summary, VWFA showed a general effect of orthographic similarity, independent of the lexical status of the stimuli. A similar trend was observed in EVC. In lSTG, this effect of orthographic similarity could only be demonstrated for real words, not for pseudowords. We explicitly tested the correspondence of the functional organization of VWFA compared to the other regions of interest, by means of pair-wise three-way ANOVAs with ROI, lexical status and orthographic similarity as factors. As expected, the comparison of EVC and VWFA revealed no three-way interaction (F(3,45)=.306, p=.821; the two-way interactions with ROI were also not significant, with p > 0.15), suggesting a similar underlying functional organization. However, the direct statistical comparison of VWFA and lSTG revealed a significant three-way interaction between ROI, lexical status and orthographic similarity (F(3,45)=3.669, p=.019), which corresponds to the finding that in lSTG we only found an effect of orthographic similarity for RW but not for PW, while in VWFA both types of stimuli showed the same effect.

4. Discussion

In the present study, we studied the functional organization of written word representations. The contributions of lexical and orthographic factors were investigated in two areas within the ventral visual stream, namely EVC and VWFA, and in the superior temporal gyrus, an area generally considered to be involved in semantic processing.

4.1 Early visual cortex

In EVC, no effect of the lexical category of the stimuli was found. There was a trend towards an effect of orthographic similarity. This pattern corresponds to the characteristics of a purely visual area: the more letters two (pseudo)words share, the more visually similar the stimuli are and thus the more similar the neural response patterns should be, without discriminating based on the type of stimulus (in this case RW or PW). Compared to VWFA, the effect of orthographic similarity was less pronounced, but this was expected given the small receptive fields of neurons and voxels in EVC (De Valois & De Valois, 1988; Engel, Glover, & Wandell, 1997) and the position jitter of the stimuli presented in our experiment.

4.2 Visual word form area

According to the neuronal recycling hypothesis (Dehaene & Cohen, 2007), reading and writing evolved as a recycling of the pre-existing competence of the ventral visual cortex for extracting object features through frequent exposure and perceptual tuning. In this respect, reading words can be considered a specialized form of object processing and VWFA should thus show similar characteristics to the object-selective region LOC. For example, LOC is selective for shape similarity among objects (Op de Beeck et al., 2008), irrespective of whether the objects are meaningful or not (Kanwisher et al., 1997; Malach et al., 1995). Further, VWFA is located in more anterior parts along the ventral visual stream compared to EVC, and thus, again as in LOC (Logothetis & Sheinberg, 1996), the position jitter should influence the visual similarity less compared to EVC (Cohen et al., 2000). Both aspects are reflected in the strong effect of orthographic similarity in VWFA in our data: the more letters two (pseudo)words share, the more similar the neural response patterns are. These results are compatible with the local combination detector account proposed by Dehaene et al. (2005), in which invariant (visual) orthographic information is extracted via a hierarchical set of local combination detectors. Please note that orthographic similarity, as manipulated in the present study, cannot be distinguished from position-invariant visual similarity. A further test would include, in addition to the current changes in location, variation in the physical appearance of the letters, such as font, size and case.

The underlying mechanism for the effect of orthographic similarity in VWFA might be similar to the organization in LOC based on shape similarity (e.g. Drucker and Aguirre, 2009). In LOC, objects that have a similar shape show a more similar response pattern (Op de Beeck et al., 2008). This is explained by the clustering of neurons in a cortical map: neurons that respond to similar complex visual features are spatially clustered (Tanaka, 2003). Likewise, in our study, stimuli showing a more similar configuration of their constituting letters were associated with more similar response patterns in VWFA. Neurons in VWFA might thus be coding for the configuration of letters that constitute a (pseudo)word and similar configurations are then coded by nearby neurons.

However, the finding that the stimuli could be categorized by lexical status in VWFA suggests that more than only the visual aspects of word and word-like stimuli are represented within this area. Previously, the finding that pseudowords evoke the same amount of activation in VWFA as real words (Baker et al., 2007; Dehaene et al., 2002; Vinckier et al., 2007; also in our current findings) or even stronger activation (Bruno et al., 2008; Kronbichler et al., 2004, 2007; Woollams et al., 2010; Xu et al., 2001) was taken as evidence for pre-lexical processing, meaning that the area is tuned to letter combinations that are frequently encountered in a language system (Dehaene et al., 2002; McCandliss et al., 2003). In the present study, however, by using multivariate techniques, we show that VWFA does discriminate based on lexical status. Distributed patterns of activity were more similar among stimuli of the same lexical status than among stimuli of different lexical status (real word vs. pseudoword). This categorization was not apparent in a univariate general linear model (GLM) analysis, which showed no activation difference between words and pseudowords.
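
The contrast between the multivariate and the univariate result can be illustrated with a toy example. The sketch below, in plain Python, uses made-up voxel patterns and a correlation-based similarity measure (both are assumptions for illustration, not the data or the exact pipeline of the present study): all patterns have the same mean response, yet patterns of the same lexical status correlate more strongly with each other than with patterns of the other status.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length response patterns."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def lexicality_index(patterns, labels):
    """Mean within-category minus mean between-category pattern correlation."""
    within, between = [], []
    for i, j in combinations(range(len(patterns)), 2):
        r = pearson(patterns[i], patterns[j])
        (within if labels[i] == labels[j] else between).append(r)
    return sum(within) / len(within) - sum(between) / len(between)


# Hypothetical four-voxel patterns (made-up numbers, equal mean in every row).
patterns = [
    [2.0, 0.0, 2.0, 0.0],   # real word
    [1.8, 0.2, 2.2, -0.2],  # real word
    [0.0, 2.0, 0.0, 2.0],   # pseudoword
    [0.2, 1.8, -0.2, 2.2],  # pseudoword
]
labels = ["RW", "RW", "PW", "PW"]

means = [sum(p) / len(p) for p in patterns]   # univariate view: no difference
index = lexicality_index(patterns, labels)    # multivariate view: positive
print(means, index)
```

A positive index means that same-status patterns are more alike than different-status patterns, even though the mean response per stimulus is identical.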

The lexicality effect in VWFA in the present study might be caused or enhanced by the use of a lexical decision task. Such top-down, task-related effects have been reported in VWFA (Hellyer et al., 2011; Mano et al., 2013; Wang et al., 2011). However, even if the representation of lexical status in VWFA turns out to be task dependent, its observation under the current active task conditions still shows that lexicality is relevant to the internal organization of VWFA.

It has been suggested before that VWFA is involved in lexical processing. VWFA is activated during multiple lexical tasks (Schurz et al., 2010; Starrfelt & Gerlach, 2007; Xue et al., 2010) and damage to VWFA is associated with worse performance on tasks requiring lexical output (Hillis et al., 2004). In addition, Schurz et al. (2010) showed a length by lexicality interaction: using a phonological lexical decision task, they found a length effect for pseudowords but not for real words. Kronbichler et al. (2004) showed sensitivity to lexical frequency in VWFA. Based on these findings, they propose that the outcome of the visual orthographic processing in VWFA is not an abstract visual word form, as suggested by the local combination detector model of Dehaene et al. (2005), but a recognition pattern within an orthographic memory system. As a result, a difference can be found between high-frequency stimuli (real words), whose recognition patterns are easily activated, and low-frequency stimuli such as pseudowords. The modulation of early visual orthographic processing by higher-order factors such as semantic, phonological, morphological and lexical aspects has also been proposed in the interactive account of Price and Devlin (2003, 2011). They suggest that the integration of the abstract visual form with higher linguistic characteristics takes place in the left fusiform gyrus (Devlin et al., 2006).

The categorical distinction between words and pseudowords in our data suggests that words and pseudowords are represented distinctly (e.g., by different neural populations), but it does not necessarily imply that word representations have different properties from pseudoword representations. Such suggestions have been formulated in the literature. In particular, according to the fMRI adaptation study of Glezer et al. (2009), VWFA is involved in the storage and recognition of lexical items, i.e. whole words. In their study, they found the same gradual effect of orthographic similarity for pseudowords as in the present study, but their results differ from ours when real words are considered. For real words, they found the same amount of release from adaptation whether two words differed by only one letter or by all letters. The authors attributed this to a difference in selectivity for real words and pseudowords, with finer tuning for real words as a result of more experience with this type of stimulus. This is in contrast with our study, since we did not find a difference in selectivity between the two types of stimuli: even though discrimination based on lexical status was possible, both RW and PW stimuli were further processed in terms of orthographic similarity.

One possible explanation for the difference in findings is the use of different methods. Glezer et al. (2009) used fMRI adaptation, while we adopted multi-voxel pattern analysis (MVPA). These two techniques capture different aspects of neural selectivity, but neither directly measures neuronal selectivity. The outcome of MVPA reflects the functional organization of VWFA and how neurons are clustered. MVPA can reveal selectivity if and only if there is a mapping of functional properties. If there is such a mapping of functional properties, then there must also be selectivity at the single-neuron level. However, the reverse is not true. If no selectivity is measured with MVPA, then there is no (detectable) mapping of functional properties, but that does not say much about possible single-neuron selectivity. In contrast, fMRI adaptation measures the sensitivity of the neural responses to changes in stimulation over time. It seems straightforward to assume that neurons that are sensitive to a change in a particular stimulus property are also selective for this stimulus property. However, single-neuron recordings have shown that this assumption is not always correct. Neurons have been reported to sometimes have no selectivity for the difference between object A and object B and still show a release from adaptation when a repetition of object A is broken by object B (Sawamura, Orban, & Vogels, 2006). There is no easy way to know which of the two methods reflects single-neuron selectivity when the two methods disagree. However, the interpretation of MVPA in terms of the functional organization is clear-cut. Based on our results, it seems that the neural map in VWFA segregates words from pseudowords, at least to some degree, but follows the same principle of orthographic mapping in how neurons tuned to different words are clustered and how neurons tuned to different pseudowords are clustered.

In conclusion, the results of the present study provide evidence that VWFA processes more than visuo-orthographic aspects alone, since we found evidence of processing of lexical information. This contradicts the feedforward model of visual word processing, or local combination detector account, proposed by Dehaene et al. (2005). Nevertheless, while the lexical category of the stimulus was processed, both types of stimuli were further processed in terms of orthographic similarity. This last finding is in accordance with the local combination detector account, as it predicts an organization based on visual orthographic similarity for word and word-like stimuli (word fragments or pseudowords). Not all higher-order linguistic properties were integrated in the representations as measured by the distributed response patterns in VWFA in the present study, since we did not find evidence for an effect of semantics (see Supplemental Data). Our data thus provide evidence for certain elements of the local combination detector account and the interactive account, but do not fully correspond to either theory.

4.3 Left superior temporal gyrus

The most remarkable effect in lSTG was an interaction between lexical status and orthographic similarity. Real words, when not semantically related, were organized according to orthographic similarity, while no functional organization was found for pseudowords. Thus, while words and pseudowords were mapped according to similar principles in VWFA, despite the categorical distinction in terms of lexical status, this was not the case in lSTG. In lSTG, responses to real words were clustered according to orthographic similarity, whereas responses to pseudowords were not clustered at all as far as we could measure. This is consistent with reports of lexical representations in the left superior temporal region (Fedorenko, Nieto-Castanon & Kanwisher, 2012; Gaskell & Zwitserlood, 2011).

4.4 Conclusion

In conclusion, the findings of this study support the view of VWFA as a high-level perceptual region. This region discriminates between stimuli based on lexical status, but both stimulus types are further processed in terms of how visually (i.e. orthographically) similar they are. This is in contrast to EVC, an earlier stage of the visual hierarchy, where no effect of lexical status was found. lSTG was confirmed to be a language area, given that we found a functional organization based on orthographic similarity only for real words, and none for pseudowords.

Supplementary Material

Highlights.

The multi-voxel pattern in VWFA contains information about lexical status

Words and pseudowords are processed according to orthographic similarity in VWFA

Words, but not pseudowords, are processed according to orthographic similarity in lSTG

Table 1.

Stimulus matching information (average values and standard deviation) on psycholinguistic factors for real words and pseudowords. Values were retrieved from the MCWord (Medler & Binder, 2005) and WordGen toolboxes (Duyck, Desmet, Verbeke & Brysbaert, 2004).

| Psycholinguistic factor | Real words | Pseudowords |
|---|---|---|
| Word frequency (per million) | 32 (64) | |
| Frequency (per million) of the constrained unigrams | 14267 (4545) | 15830 (4905) |
| Summated bigram frequency | 7734 (2972) | 6616 (2461) |
| Summated trigram frequency | 1800 (1047) | 1029 (1447) |
| Number of orthographic neighbours | 13 (5) | 7 (2.36) |

Acknowledgments

This research was supported by an FWO Fellowship to A.B.; the Methusalem program (METH/08/02); ERC (ERC-2011-Stg-284101); IUAP (IUAP-P7/11) and by the Intramural Research Program of the NIMH.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Annelies Baeck, Laboratory of Biological Psychology, University of Leuven (KULeuven), Tiensestraat 102, 3000 Leuven, Belgium; Laboratory of Experimental Psychology, University of Leuven (KULeuven), Tiensestraat 102, 3000 Leuven, Belgium; Laboratory of Brain and Cognition, National Institute of Mental Health, National Institutes of Health, Bethesda, MD 30901, USA.

Dwight Kravitz, Laboratory of Brain and Cognition, National Institute of Mental Health, National Institutes of Health, Bethesda, MD 30901, USA; kravitzd@email.gwu.edu.

Chris Baker, Laboratory of Brain and Cognition, National Institute of Mental Health, National Institutes of Health, Bethesda, MD 30901, USA; bakerchris@mail.nih.gov.

Hans P. Op de Beeck, Laboratory of Biological Psychology, University of Leuven (KULeuven), Tiensestraat 102, 3000 Leuven, Belgium; Hans.opdebeeck@ppw.kuleuven.be

References

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proceedings of the National Academy of Sciences USA. 2007;104:9087–9092. doi: 10.1073/pnas.0703300104.

- Ben-Shachar M, Dougherty RF, Deutsch GK, Wandell BA. The development of cortical sensitivity to visual word forms. Journal of Cognitive Neuroscience. 2011;23:2387–2399. doi: 10.1162/jocn.2011.21615.

- Binder JR, Medler DA, Westbury CF, Liebenthal E, Buchanan L. Tuning of the human left fusiform gyrus to sublexical orthographic structure. NeuroImage. 2006;33:739–748. doi: 10.1016/j.neuroimage.2006.06.053.

- Brainard D. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Bruno JL, Zumberge A, Manis F, Lu Z, Goldberg JG. Sensitivity to orthographic familiarity in the occipito-temporal region. NeuroImage. 2008;39:1988–2001. doi: 10.1016/j.neuroimage.2007.10.044.

- Brunswick N, McCrory E, Price CJ, Frith CD, Frith U. Explicit and implicit processing of words and pseudowords by adult developmental dyslexics – A search for Wernicke's Wortschatz? Brain. 1999;122:1901–1917. doi: 10.1093/brain/122.10.1901.

- Cai Q, Paulignan Y, Brysbaert M, Ibarrola D, Nazir TA. The left ventral occipito-temporal response to words depends on language lateralization but not on visual familiarity. Cerebral Cortex. 2010;20:1153–1163. doi: 10.1093/cercor/bhp175.

- Cohen L, Dehaene S. Specialization within the ventral stream: The case for the visual word form area. NeuroImage. 2004;22:466–476. doi: 10.1016/j.neuroimage.2003.12.049.

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F. The visual word form area: Spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123:291–307. doi: 10.1093/brain/123.2.291.

- Cohen L, Lehéricy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the visual word form area. Brain. 2002;125:1054–1069. doi: 10.1093/brain/awf094.

- Dale AM. Optimal experimental design for event-related fMRI. Human Brain Mapping. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Dehaene S, Cohen L. Cultural recycling of cortical maps. Neuron. 2007;56:384–398. doi: 10.1016/j.neuron.2007.10.004.

- Dehaene S, Cohen L. The unique role of the visual word form area in reading. Trends in Cognitive Sciences. 2011;15:254–262. doi: 10.1016/j.tics.2011.04.003.

- Dehaene S, Cohen L, Sigman M, Vinckier F. The neural code for written words: A proposal. Trends in Cognitive Sciences. 2005;9:335–341. doi: 10.1016/j.tics.2005.05.004.

- Dehaene S, Le Clec'H G, Poline JB, Le Bihan D, Cohen L. The visual word form area: A prelexical representation of visual words in the fusiform gyrus. NeuroReport. 2002;13:321–325. doi: 10.1097/00001756-200203040-00015.

- Devlin JT, Jamison HL, Gonnerman LM, Matthews PM. The role of the posterior fusiform gyrus in reading. Journal of Cognitive Neuroscience. 2006;18:911–922. doi: 10.1162/jocn.2006.18.6.911.

- De Valois RL, De Valois KK. Spatial vision. Oxford University Press; New York: 1988.

- Drucker DM, Aguirre GK. Different spatial scales of shape similarity representation in lateral and ventral LOC. Cerebral Cortex. 2009;19:2269–2280. doi: 10.1093/cercor/bhn244.

- Duyck W, Desmet T, Verbeke LPC, Brysbaert M. A tool for word selection and non-word generation in Dutch, German, English, and French. Behavior Research Methods, Instruments, & Computers. 2004;36:488–499. doi: 10.3758/bf03195595.

- Engel SA, Glover GH, Wandell BA. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cerebral Cortex. 1997;7:181–192. doi: 10.1093/cercor/7.2.181.

- Fedorenko E, Nieto-Castanon A, Kanwisher N. Lexical and syntactic representations in the brain: An fMRI investigation with multi-voxel pattern analyses. Neuropsychologia. 2012;50:499–513. doi: 10.1016/j.neuropsychologia.2011.09.014.

- Gaskell G, Zwitserlood P. Lexical representation: A multidisciplinary approach. Walter de Gruyter GmbH & Co. KG.; Berlin/New York: 2011.

- Glezer LS, Jiang X, Riesenhuber M. Evidence for highly selective neuronal tuning to whole words in the visual word form area. Neuron. 2009;62:199–204. doi: 10.1016/j.neuron.2009.03.017.

- Guo Y, Burgund ED. Task effects in the mid-fusiform gyrus: A comparison of orthographic, phonological, and semantic processing of Chinese characters. Brain & Language. 2010;115:113–120. doi: 10.1016/j.bandl.2010.08.001.

- Hammond EJ, Green DW. Detecting targets in letter and non-letter arrays. Canadian Journal of Psychology. 1982;36:67–82. doi: 10.1037/h0081211.

- Halgren E, Dhond RP, Christensen N, Van Petten C, Marinkovic K, Lewine JD, Dale AM. N400-like magnetoencephalography responses modulated by semantic context, word frequency, and lexical class in sentences. NeuroImage. 2002;17:1101–1116. doi: 10.1006/nimg.2002.1268.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Hellyer PJ, Woodhead ZVJ, Leech R, Wise RJS. An investigation of twenty/20 vision in reading. Journal of Neuroscience. 2011;31:14631–14638. doi: 10.1523/JNEUROSCI.2740-11.2011.

- Hillis AE, Newhart M, Heidler J, Barker P, Herskovits E, Degaonkar M. NeuroImage. 2005;24:548–559. doi: 10.1016/j.neuroimage.2004.08.026.

- Humphreys GW, Evett LJ, Quinlan PT. Orthographic processing in visual word identification. Cognitive Psychology. 1990;22:517–560. doi: 10.1016/0010-0285(90)90012-s.

- Kanwisher N, Woods R, Iacoboni M, Mazziotta J. A locus in human extrastriate cortex for visual shape analysis. Journal of Cognitive Neuroscience. 1997;9:133–142. doi: 10.1162/jocn.1997.9.1.133.

- Kherif F, Josse G, Price CJ. Automatic top-down processing explains common left occipito-temporal responses to visual words and objects. Cerebral Cortex. 2011;21:103–114. doi: 10.1093/cercor/bhq063.

- Kronbichler M, Bergmann J, Hutzler F, Staffen W, Mair A, Ladurner G, Wimmer H. Taxi vs. Taksi: Orthographic word recognition in the left ventral occipitotemporal cortex. Journal of Cognitive Neuroscience. 2007;19:1584–1594. doi: 10.1162/jocn.2007.19.10.1584.

- Kronbichler M, Hutzler F, Wimmer H, Mair A, Staffen W, Ladurner G. The visual word form area and the frequency with which words are encountered: Evidence from a parametric fMRI study. NeuroImage. 2004;21:946–953. doi: 10.1016/j.neuroimage.2003.10.021.

- Lee SH, Kravitz DJ, Baker CI. Goal-dependent dissociation of visual and prefrontal cortices during working memory. Nature Neuroscience. 2013;16:997–999. doi: 10.1038/nn.3452.

- Logothetis NK, Sheinberg DL. Visual object recognition. Annual Review of Neuroscience. 1996;19:577–621. doi: 10.1146/annurev.ne.19.030196.003045.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RBH. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proceedings of the National Academy of Sciences USA. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: Expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences. 2003;7:293–299. doi: 10.1016/s1364-6613(03)00134-7.

- Nestor A, Behrmann M, Plaut DC. The neural basis of visual word form processing: A multivariate investigation. Cerebral Cortex. 2013;23:1673–1684. doi: 10.1093/cercor/bhs158.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: Multi-voxel pattern analysis of fMRI data. Trends in Cognitive Sciences. 2006;10:424–430. doi: 10.1016/j.tics.2006.07.005.

- Olulade OA, Flowers DL, Napoliello EM, Eden GF. Developmental differences for word processing in the visual stream. Brain and Language. 2013;125:134–145. doi: 10.1016/j.bandl.2012.04.003.

- Op de Beeck HP, Torfs K, Wagemans J. Perceived shape similarity among unfamiliar objects and the organization of the human object vision pathway. Journal of Neuroscience. 2008;28:10111–10123. doi: 10.1523/JNEUROSCI.2511-08.2008.

- Medler DA, Binder JR. MCWord: An on-line orthographic database of the English language. 2005 http://www.neuro.mcw.edu/mcword/

- Moore CJ, Price CJ. Three distinct ventral occipitotemporal regions for reading and object naming. NeuroImage. 1999;10:181–192. doi: 10.1006/nimg.1999.0450.

- Mummery CJ, Patterson K, Hodges JR, Price CJ. Functional neuroanatomy of the semantic system: Divisible by what? Journal of Cognitive Neuroscience. 1998;10:766–777. doi: 10.1162/089892998563059.

- Norris DG. High field human imaging. Journal of Magnetic Resonance Imaging. 2003;18:519–529. doi: 10.1002/jmri.10390.

- Olman CA, Yacoub E. High-field fMRI for human application: An overview of spatial resolution and signal specificity. Open NeuroImaging Journal. 2011;5:74–89. doi: 10.2174/1874440001105010074.

- Op de Beeck HP, Brants M, Baeck A, Wagemans J. Distributed subordinate specificity for bodies, faces, and buildings in human ventral visual cortex. NeuroImage. 2010;49:3414–3425. doi: 10.1016/j.neuroimage.2009.11.022.

- Price CJ, Devlin JT. The myth of the visual word form area. NeuroImage. 2003;19:473–481. doi: 10.1016/s1053-8119(03)00084-3.

- Price CJ, Devlin JT. The interactive account of ventral occipitotemporal contributions to reading. Trends in Cognitive Sciences. 2011;15:246–253. doi: 10.1016/j.tics.2011.04.001.

- Price CJ, Wise RJ, Frackowiak RS. Demonstrating the implicit processing of visually presented words and pseudowords. Cerebral Cortex. 1996;6:62–70. doi: 10.1093/cercor/6.1.62.

- Pylkkänen L, Marantz A. Tracking the time course of word recognition with MEG. Trends in Cognitive Sciences. 2003;7:187–189. doi: 10.1016/s1364-6613(03)00092-5.

- Sawamura H, Orban GA, Vogels R. Selectivity of neuronal adaptation does not match response selectivity: A single-cell study of the fMRI adaptation paradigm. Neuron. 2006;49:307–318. doi: 10.1016/j.neuron.2005.11.028.

- Schurz M, Sturm D, Richlan F, Kronbichler M, Ladurner G, Wimmer H. A dual-route perspective on brain activation in response to visual words: Evidence for a length by lexicality interaction in the visual word form area (VWFA). NeuroImage. 2010;49:2649–2661. doi: 10.1016/j.neuroimage.2009.10.082.

- Simos PG, Basile LF, Papanicolaou AC. Source localization of the N400 response in a sentence-reading paradigm using evoked magnetic fields and magnetic resonance imaging. Brain Research. 1997;762:29–39. doi: 10.1016/s0006-8993(97)00349-1.

- Song Y, Tian M, Liu J. Top-down processing of symbolic meanings modulates the visual word form area. Journal of Neuroscience. 2012;32:12277–12283. doi: 10.1523/JNEUROSCI.1874-12.2012.

- Starrfelt R, Gerlach C. The visual what for area: Words and pictures in the left fusiform gyrus. NeuroImage. 2007;35:334–342. doi: 10.1016/j.neuroimage.2006.12.003.

- Tanaka K. Columns for complex visual object features in the inferotemporal cortex: Clustering of cells with similar but slightly different stimulus selectivities. Cerebral Cortex. 2003;13:90–99. doi: 10.1093/cercor/13.1.90.

- Ugurbil K, Adriany G, Andersen P, Chen W, Garwood M, Gruetter R, Henry PG, Kim SG, Lieu H, Tkac I, Vaughan T, Van De Moortele PF, Yacoub E, Zhu XH. Ultrahigh field magnetic resonance imaging and spectroscopy. Magnetic Resonance Imaging. 2005;21:1263–1281. doi: 10.1016/j.mri.2003.08.027.

- Vartiainen J, Parviainen T, Salmelin R. Spatiotemporal convergence of semantic processing in reading and speech perception. Journal of Neuroscience. 2009;29:9271–9280. doi: 10.1523/JNEUROSCI.5860-08.2009.

- Vinckier F, Dehaene S, Jobert A, Dubus JP, Sigman M, Cohen L. Hierarchical coding of letter strings in the ventral stream: Dissecting the inner organization of the visual word-form system. Neuron. 2007;55:143–156. doi: 10.1016/j.neuron.2007.05.031.

- Wang X, Yang J, Shu H, Zevin JD. Left fusiform BOLD responses are inversely related to word-likeness in a one-back task. NeuroImage. 2011;55:1346–1356. doi: 10.1016/j.neuroimage.2010.12.062.

- Woollams AM, Silani G, Okada K, Patterson K, Price CJ. Word or word-like? Dissociating orthographic typicality from lexicality in the left occipito-temporal cortex. Journal of Cognitive Neuroscience. 2010;23:992–1002. doi: 10.1162/jocn.2010.21502.

- Xu B, Grafman J, Gaillard WD, Ishii K, Vega-Bermudez F, Pietrini P, Reeves-Tyer P, DiCamillo P, Theodore W. Conjoint and extended neural networks for the computation of speech codes: The neural basis of selective impairment in reading words and pseudowords. Cerebral Cortex. 2001;11:267–277. doi: 10.1093/cercor/11.3.267.

- Xue G, Chen C, Jin Z, Dong Q. Language experience shapes fusiform activation when processing a logographic artificial language: An fMRI training study. NeuroImage. 2006;31:1315–1326. doi: 10.1016/j.neuroimage.2005.11.055.

- Xue G, Mei L, Chen C, Lu ZL, Poldrack RA, Dong Q. Facilitating memory for novel characters by reducing neural repetition suppression in the left fusiform cortex. PLoS ONE. 2010;5:e13204–13214. doi: 10.1371/journal.pone.0013204.
