Significance

Many complex systems reduce their flexibility over time in the sense that the number of options (possible states) diminishes over time. We show that rank distributions of the visits to these states that emerge from such processes are exact power laws with an exponent −1 (Zipf’s law). When noise is added to such processes, meaning that from time to time they can also increase the number of their options, the rank distribution remains a power law, with an exponent that is related to the noise level in a remarkably simple way. Sample-space-reducing processes provide a new route to understand the phenomenon of scaling and provide an alternative to the known mechanisms of self-organized criticality, multiplicative processes, or preferential attachment.

Keywords: scaling laws, Zipf’s law, random walks, path dependence, network diffusion

Abstract

History-dependent processes are ubiquitous in natural and social systems. Many such stochastic processes, especially those that are associated with complex systems, become more constrained as they unfold, meaning that their sample space, or their set of possible outcomes, reduces as they age. We demonstrate that these sample-space-reducing (SSR) processes necessarily lead to Zipf’s law in the rank distributions of their outcomes. We show that by adding noise to SSR processes the corresponding rank distributions remain exact power laws, $p(x) \sim x^{-\lambda}$, where the exponent $\lambda$ directly corresponds to the mixing ratio of the SSR process and noise. This allows us to give a precise meaning to the scaling exponent in terms of the degree to which a given process reduces its sample space as it unfolds. Noisy SSR processes further allow us to explain a wide range of scaling exponents in frequency distributions ranging from $\alpha = 2$ to $\infty$. We discuss several applications showing how SSR processes can be used to understand Zipf’s law in word frequencies, and how they are related to diffusion processes in directed networks, or aging processes such as in fragmentation processes. SSR processes provide a new alternative to understand the origin of scaling in complex systems without the recourse to multiplicative, preferential, or self-organized critical processes.

A typical feature of aging is that the number of possible states in a system reduces as it ages. Whereas a newborn can become a composer, politician, physicist, actor, or anything else, the chances for a 65-y-old physics professor to become a concert pianist are practically zero. A characteristic feature of history-dependent systems is that their sample space, defined as the set of all possible outcomes, changes over time. Many aging stochastic systems (such as career paths) become more constrained in their dynamics as they unfold (i.e., their sample space becomes smaller over time). An example for a sample-space-reducing (SSR) process is the formation of sentences. The first word in a sentence can be sampled from the sample space of all existing words. The choice of subsequent words is constrained by grammar and context, so that the second word can only be sampled from a smaller sample space. As the length of a sentence increases, the size of the sample space of word use typically reduces.

Many history-dependent processes are characterized by power-law distribution functions in their frequency and rank distributions of their outcomes. The most famous example is the rank distribution of word frequencies in texts, which follows a power law with an approximate exponent of −1, the so-called Zipf’s law (1). Zipf’s law has been found in countless natural and social phenomena, including gene expression patterns (2), human behavioral sequences (3), fluctuations in financial markets (4), scientific citations (5, 6), distributions of city (7) and firm sizes (8, 9), and many more (see, e.g., ref. 10). (Some of these examples are, of course, not associated with SSR processes.) Over the past decades there has been a tremendous effort to understand the origin of power laws in distribution functions obtained from complex systems. Most of the existing explanations are based on multiplicative processes (11–14), preferential mechanisms (15–17), or self-organized criticality (18–20). Here we offer an alternative route to understand scaling based on processes that reduce their sample space over time. We show that the emergence of power laws in this way is related to the breaking of a symmetry in random sampling processes, a mechanism that was explored in ref. 21. History-dependent random processes have been studied generically (22, 23), however not with the rationale to understand the emergence of scaling in complex systems.

Results

The Pure SSR Process and Zipf's Law.

The essence of SSR processes can be illustrated by a set of N fair dice with different numbers of faces. The first dice has one face, the second has two faces (coin), the third one three, and so on, up to dice number N, which has N faces. The faces of a dice are numbered and have respective face values. To start the SSR process, take the dice with the largest number of faces (N) and throw it. The result is a face value between 1 and N; say it is K. We now take dice number $K-1$ (with $K-1$ faces) and throw it, to get a number i between 1 and $K-1$; say we throw L. We now take dice number $L-1$, throw it, and so forth. We repeat the process until a face value of 1 is thrown, and the process stops. We denote this directed and acyclic process by ϕ. As the process unfolds, ϕ generates a single sequence of strictly decreasing numbers i. An intuitive realization of this process is depicted in Fig. 1. The probability that the process ϕ visits the particular site i in a sequence is the visiting probability $P_N(i)$, which can easily be shown to follow an exact Zipf’s law, $P_N(i) = i^{-1}$. This is done, for example, with a proof by induction on N. Take the process ϕ and let $N = 2$. There exist two possible sequences: Either ϕ directly generates a 1 with probability $1/2$, or ϕ first generates 2 with probability $1/2$, and then a 1 with certainty. Both sequences visit 1 but only one visits 2. As a consequence, $P_2(2) = 1/2$ and $P_2(1) = 1$. Let us now suppose that $P_{N'}(i) = 1/i$ has been shown up to level $N' = N-1$. Now, if the process starts with dice N, the probability to hit i in the first step is $1/N$. Also, any other j, $N \ge j > i$, is reached with probability $1/N$. If we get $j > i$, we will visit i in one of the subsequent steps with probability $P_{j-1}(i)$, which leads us to the recursive scheme for all $i < N$,

$$P_N(i) = \frac{1}{N}\left(1 + \sum_{i<j\le N} P_{j-1}(i)\right).$$

Because by assumption $P_{j-1}(i) = 1/i$ holds for all $i < j \le N$, the sum consists of $N-i$ equal terms, and simple algebra yields $P_N(i) = \frac{1}{N}\left(1 + \frac{N-i}{i}\right) = 1/i$. Finally, as pointed out above, for $i = N$, we have $P_N(N) = 1/N$, which completes the proof that indeed the visiting probability is

$$P_N(i) = \frac{1}{i}. \tag{1}$$
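The induction argument can also be checked mechanically. The following is a minimal Python sketch, assuming the recursion $P_N(i) = \frac{1}{N}\left(1 + \sum_{i<j\le N} P_{j-1}(i)\right)$ with $P_N(N) = 1/N$; the function name `visiting_probabilities` is illustrative and not part of the original work. Exact rational arithmetic is used so that the comparison with $1/i$ is free of rounding error.

```python
from fractions import Fraction


def visiting_probabilities(n_max):
    """Build table[N][i] = P_N(i) for 1 <= i <= N <= n_max from the recursion."""
    table = {}
    for n in range(1, n_max + 1):
        row = {}
        for i in range(1, n + 1):
            # Either the first throw hits i directly (probability 1/n), or it
            # hits some j > i and the remaining process on die j-1 visits i.
            later = sum(table[j - 1][i] for j in range(i + 1, n + 1))
            row[i] = Fraction(1, n) * (1 + later)
        table[n] = row
    return table


if __name__ == "__main__":
    table = visiting_probabilities(8)
    # Print the row for N = 8; each entry can be compared with 1/i.
    for i, prob in table[8].items():
        print(i, prob)
```

For every N up to `n_max`, each entry `table[N][i]` can be compared directly with `Fraction(1, i)`.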

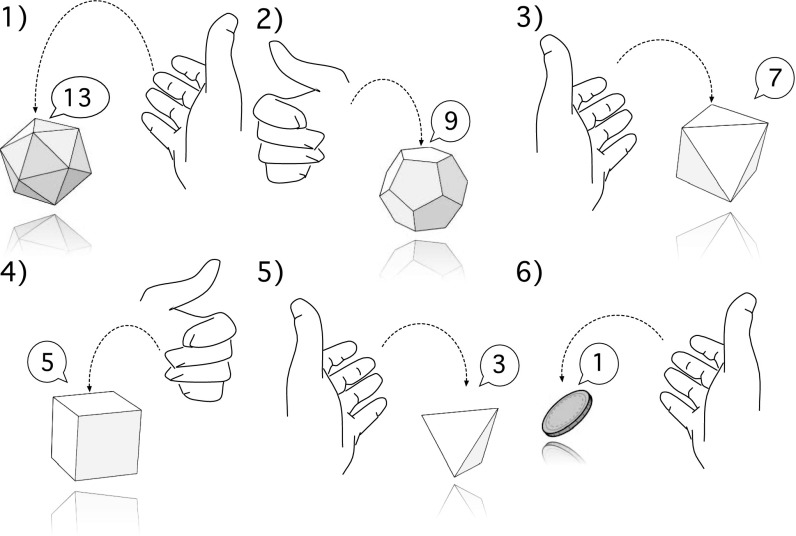

Fig. 1.

SSR process. Imagine a set of dice with different numbers of faces. We start by throwing the 20-faced dice (icosahedron). Suppose we get a face value of 13. We now have to take the 12-faced dice (dodecahedron), throw it, and get a face value of, say, 9, so that we must continue with the 8-faced dice. Say we throw a 7, forcing us to take the (ordinary) dice, with which we throw, say, a 5. With the 4-faced dice we get a 2, which forces us to take the 2-faced dice (coin). The process ends when we throw a 1 for the first time. The set of possible outcomes (sample space) reduces as the process unfolds. The sequence above was chosen to make use of the platonic dice for pictorial reasons only. If the process is repeated many times, the distribution of face values (rank-ordered) gives Zipf’s law.

If the process ϕ is repeated many times, meaning that once it reaches dice number 1 we start by throwing dice number N again, we are interested in how often a given site i is occupied on average. The occupation probability for site i, given that there are N possible sites, is denoted by $p_N(i)$. Note an important property of the process ϕ. Although in general the visiting probability and the occupation probability of a process quantify different aspects, for the particular process ϕ both probabilities only differ by a normalization factor. This is so because any sequence generated by ϕ is strictly decreasing and contains any particular site i at most once. Further, any sequence ends on site 1, meaning $P_N(1) = 1$. Therefore, it is clear that $P_N(i) = p_N(i)/p_N(1)$, where $p_N(1)$ is a normalization factor. This shows that this prototype of an SSR process exhibits an exact Zipf’s law in the (rank-ordered) occupation probabilities.
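The repeated dice process is easy to simulate. The sketch below is a hedged illustration, not the authors' simulation code: the helper names `ssr_sequence` and `estimate`, the seed, and the run counts are assumptions chosen for the example. It estimates both the visiting frequency (visits per run) and the occupation probability (visits per recorded throw).

```python
import random
from collections import Counter


def ssr_sequence(n, rng):
    """One run of the pure SSR process: throw die n, then die (value - 1), ..."""
    seq = []
    faces = n
    while faces >= 1:
        value = rng.randint(1, faces)  # fair die with `faces` faces
        seq.append(value)
        faces = value - 1              # sample space shrinks; stops after a 1
    return seq


def estimate(n, runs, seed=0):
    """Return (visiting frequency per run, occupation probability) per site."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(runs):
        counts.update(ssr_sequence(n, rng))
    total = sum(counts.values())
    visits = {i: counts[i] / runs for i in range(1, n + 1)}
    occupation = {i: counts[i] / total for i in range(1, n + 1)}
    return visits, occupation


if __name__ == "__main__":
    visits, occupation = estimate(n=50, runs=20000)
    for i in (1, 2, 5, 10):
        # visits[i] should be close to 1/i; occupation[i] is the same up to
        # a common normalization factor.
        print(i, round(visits[i], 3), round(occupation[i], 4))
```

Because every run ends on site 1, the estimated visiting frequency of site 1 is exactly 1, while the occupation probabilities sum to 1.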

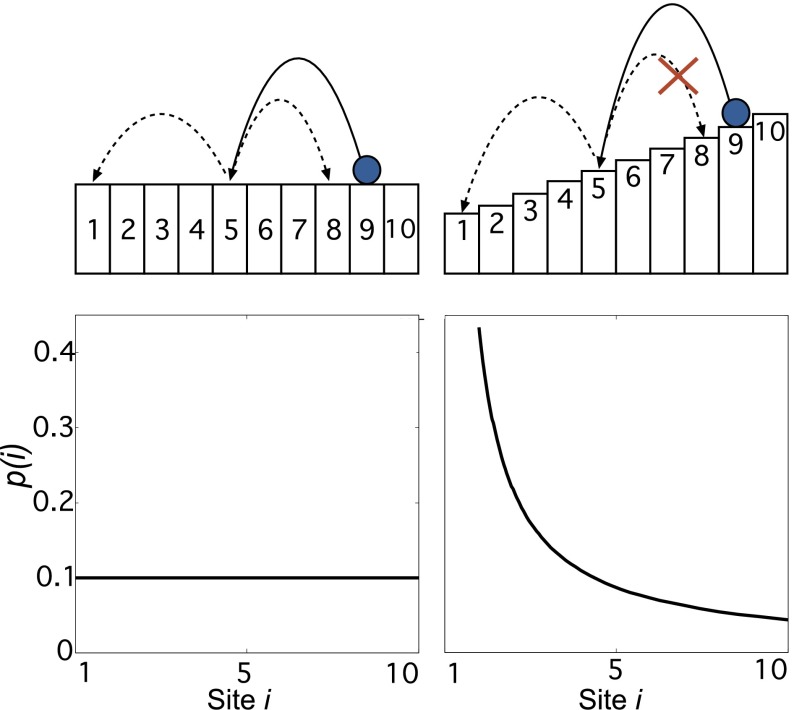

An alternative picture that illustrates the history-dependence aspect of the same SSR processes is shown in Fig. 2. In Fig. 2, Left we show an independent and identically distributed (iid) stochastic process, where the space of potential outcomes is $\Omega = \{1, \ldots, N\}$. At each time step a ball can jump from one of N sites of $\Omega$ to any other with equal probability. Because the process is independent, the conditional probability of jumping from site i to site j is $P(j|i) = P(j) = 1/N$. There is no path dependence. If we define $\Omega_i$ as the subset of those sites that can be reached from site i, we obviously find that this is constant over time,

$$\Omega_i = \Omega \quad \text{for all } i \in \Omega.$$

We refer to this process as an unconstrained random walk and denote it by $\phi_R$. The occupation distribution is $p(i) = 1/N$ (Fig. 2). To introduce path or history dependence, assume that sites are arranged in levels like a staircase. Now imagine a ball that can bounce downstairs to lower levels randomly but never can climb to higher levels (Fig. 2, Right). If at time t the ball is at level (site) i, at $t+1$ all lower levels $j < i$ can be reached with the same probability, $P(j|i) = 1/(i-1)$. Jumps to higher levels are forbidden, $P(j|i) = 0$, for $j \ge i$. The process ends at the lowest stair level 1. In this process, sample space displays a nested structure,

$$\Omega_1 \subset \Omega_2 \subset \cdots \subset \Omega_N \subset \Omega.$$

In this case, $\Omega_i = \{1, \ldots, i-1\}$, for all values of $N \ge i \ge 2$. $\Omega_1$ is the empty set. This nested structure of sample space is the defining property of SSR processes. This type of nesting breaks the left–right symmetry of the iid stochastic process. The visiting probability to sites (levels) i during a downward sequence is again $P_N(i) = 1/i$. Because this process is equivalent to ϕ, the same proof applies.

Fig. 2.

Illustration of path dependence, SSR, and nestedness of sample space. (Left) Unconstrained (iid) random walk realized by a ball randomly bouncing between all possible sites. The probability to observe the ball at a given site i is uniform, $p(i) = 1/N$. (Right) The ball can only bounce downward; the left–right symmetry is broken. When level 1 is reached the process stops and is repeated. Sample space reduces from step to step in a nested way (main feature of SSR processes). After many iterations the occupation distribution (visits to level i) follows Zipf’s law, $p(i) \propto i^{-1}$. Symmetry breaking of the sampling changes the uniform probability distribution to a power law.

The Role of Noise in SSR Processes.

It is conceivable that in many real systems nestedness of SSR processes is not realized perfectly and that from time to time the sample space can also expand during a sequence. In the above example this would mean that from time to time random upward moves are allowed, or equivalently, that the nested process ϕ is perturbed by noise. In the context of the scenario depicted in Fig. 2 we look at a superposition of the SSR ϕ and the unconstrained random walk $\phi_R$. Using λ to denote the mixing ratio, the nested SSR process with noise is written as

$$\Phi^{(\lambda)} = \lambda\,\phi + (1-\lambda)\,\phi_R, \qquad \lambda \in [0,1]. \tag{2}$$

More concretely, if the ball is at site i, with probability λ it jumps (downward) to any of the sites $j < i$ (with uniform probability), and with probability $1-\lambda$ it jumps to any of the N sites, $j \in \Omega$. In other words, each time before throwing the dice we decide with probability λ that the sample space for the next throw is $\Omega_i$ (SSR process), or with $1-\lambda$ it is $\Omega$ (iid noise $\phi_R$). We repeat this process until the face value 1 is obtained. With probability λ the process is ϕ and stops, and with probability $1-\lambda$ the process is $\phi_R$ and continues until 1 occurs again. Obviously, $\lambda = 0$ corresponds to the unconstrained random walk, and $\lambda = 1$ recovers the results for the strictly SSR processes without noise. Note that for $\lambda < 1$, $\Phi^{(\lambda)}$ may visit a given site i more than once. This implies in general that the visiting probability and the occupation probability no longer need to be proportional to each other. For that reason we now explicitly compute the occupation probability for SSR processes with a given noise level. For simplicity in notation we now suppress N and write $p^{(\lambda)}(i) \equiv p^{(\lambda)}_N(i)$.

Note that ϕ produces one realization of possible sequences of sites i, and then stops. The maximum length of such a sequence is N, the average sequence length is $\sum_{i=1}^{N} i^{-1} \sim \log N$. In contrast, the unconstrained random walk $\phi_R$ has no stopping criterion. To avoid problems with mixing processes with different lengths we replace ϕ with a process $\phi_\infty$ that is identical to ϕ, except for the case when site $i = 1$ is reached. In that case $\phi_\infty$ does not stop but continues with tossing the N-faced dice and thus restarts the process ϕ (in the numerical simulations we stop the process after M restarts). For $\phi_\infty$ site $i = 1$ becomes both the starting point of a new single-sequence process ϕ, and the end point of the previous one (see also Fig. 5A). Replacing ϕ by $\phi_\infty$ in Eq. 2 ensures that we have an infinitely long, noisy sequence, which is denoted by $\Phi^{(\lambda)}_\infty$. Successive restarting gives us the possibility to treat SSR processes as stationary, for which the consistency equation

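$$p^{(\lambda)}(i) = \sum_{j=1}^{N} p(i|j)\, p^{(\lambda)}(j) \tag{3}$$

holds. Here $p(i|j)$ is the conditional probability that site i is reached from site j in the next time step in an infinite and noisy SSR process. It reads

$$p(i|j) = \begin{cases} \dfrac{\lambda}{j-1} + \dfrac{1-\lambda}{N} & \text{for } i < j, \\[2mm] \dfrac{1-\lambda}{N} & \text{for } i \ge j > 1, \\[2mm] \dfrac{1}{N} & \text{for } i \ge j = 1. \end{cases} \tag{4}$$

The first line in the above equation accounts for the strictly SSR process, the second line for the unconstrained random walk component, and the third line takes care of the restarting once site $i = 1$ is reached. From Eqs. 3 and 4 we get

$$p^{(\lambda)}(i) = \frac{1-\lambda}{N} + \frac{\lambda}{N}\, p^{(\lambda)}(1) + \sum_{j=i+1}^{N} \frac{\lambda}{j-1}\, p^{(\lambda)}(j). \tag{5}$$

Clearly, the recursive relation $p^{(\lambda)}(i+1) - p^{(\lambda)}(i) = -\frac{\lambda}{i}\, p^{(\lambda)}(i+1)$ holds, from which one obtains

$$\frac{p^{(\lambda)}(i)}{p^{(\lambda)}(1)} = \prod_{j=1}^{i-1}\left(1+\frac{\lambda}{j}\right)^{-1} = \exp\left[-\sum_{j=1}^{i-1}\log\left(1+\frac{\lambda}{j}\right)\right] \sim \exp\left(-\sum_{j=1}^{i-1}\frac{\lambda}{j}\right) \sim i^{-\lambda}.$$

$p^{(\lambda)}(1)$ is given by the normalization condition $\sum_{i} p^{(\lambda)}(i) = 1$, and we arrive at the remarkable result

$$p^{(\lambda)}(i) \sim i^{-\lambda}. \tag{6}$$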
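The stationarity argument can be verified numerically for small N. The sketch below assumes the transition probabilities take the three-case form described above (SSR step plus uniform noise for $i < j$, noise only for $i \ge j > 1$, uniform restart from site 1) and that the occupation probabilities obey $p(i+1) = p(i)/(1 + \lambda/i)$. All function names are illustrative.

```python
def transition(i, j, n, lam):
    """Conditional probability p(i|j) of reaching site i from site j."""
    if j == 1:
        return 1.0 / n                      # restart from site 1
    noise = (1.0 - lam) / n                 # uniform noise component
    if i < j:
        return lam / (j - 1) + noise        # SSR step plus noise
    return noise


def stationary_from_recursion(n, lam):
    """Occupation probabilities from p(i+1) = p(i) / (1 + lam / i); index 0 is site 1."""
    weights = [1.0]
    for i in range(1, n):
        weights.append(weights[-1] / (1.0 + lam / i))
    norm = sum(weights)
    return [w / norm for w in weights]


def max_stationarity_error(n, lam):
    """Largest violation of p(i) = sum_j p(i|j) p(j) over all sites i."""
    p = stationary_from_recursion(n, lam)
    return max(
        abs(p[i - 1] - sum(transition(i, j, n, lam) * p[j - 1]
                           for j in range(1, n + 1)))
        for i in range(1, n + 1)
    )


if __name__ == "__main__":
    for lam in (0.0, 0.5, 1.0):
        print(lam, max_stationarity_error(40, lam))
```

For $\lambda = 1$ the recursion gives weights proportional to $1/i$ (Zipf's law), and for $\lambda = 0$ it gives the uniform distribution; in both cases the residual of the consistency equation is at the level of floating-point rounding.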

Note that λ is nothing but the mixing parameter for the noise component. For $\lambda = 1$ one recovers Zipf’s law, $p^{(1)}(i) \propto i^{-1}$; for $\lambda = 0$, the uniform distribution $p^{(0)}(i) = 1/N$ is obtained. For intermediate $0 < \lambda < 1$ one observes an asymptotically exact power law with exponent λ. Note that Eq. 6 is a statement about the rank distribution of the system. Often statistical features of systems are presented as frequency distributions, that is, the probability that a given site (state) is visited k times, $p(k)$, and not as rank distributions. These are related, however. It is well known that if the rank distribution p is a power law with exponent λ, $p(k)$ is also a power law with the exponent $\alpha = (1+\lambda)/\lambda$ (see, e.g., ref. 10). The result of Eq. 6 implies that we are able to understand a remarkable range of exponents in frequency distributions, $\alpha \in [2, \infty)$, by noisy SSR processes. Many observed systems in nature display frequency distributions with exponents between 2 and 3, which in our framework relates to a mixing ratio of $\lambda > 0.5$. We find perfect agreement of the result of Eq. 6 and numerical simulations (Fig. 3A). The slope of the measured rank distributions in log scale, $\lambda_{\mathrm{sim}}$, perfectly agrees with the theoretical prediction λ. Fitting was carried out by using the method proposed in ref. 24.
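The relation between the rank exponent and the frequency exponent follows from a short standard argument (cf. ref. 10). The following is a sketch, where $f(r)$ denotes the number of visits to the state of rank $r$; this symbol is introduced here for illustration only.

```latex
\begin{align}
f(r) &\propto r^{-\lambda}
  \;\Longrightarrow\; r(f) \propto f^{-1/\lambda}
  && \text{(the rank of a state is the number of states visited at least $f$ times)} \\
p(k) &\propto \left|\frac{dr}{df}\right|_{f=k} \propto k^{-(1+1/\lambda)}
  \;\Longrightarrow\; \alpha = 1 + \frac{1}{\lambda} = \frac{1+\lambda}{\lambda}.
\end{align}
```

For $\lambda = 1$ this gives $\alpha = 2$, for $\lambda = 1/2$ it gives $\alpha = 3$, and $\alpha$ grows without bound as $\lambda \to 0$.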

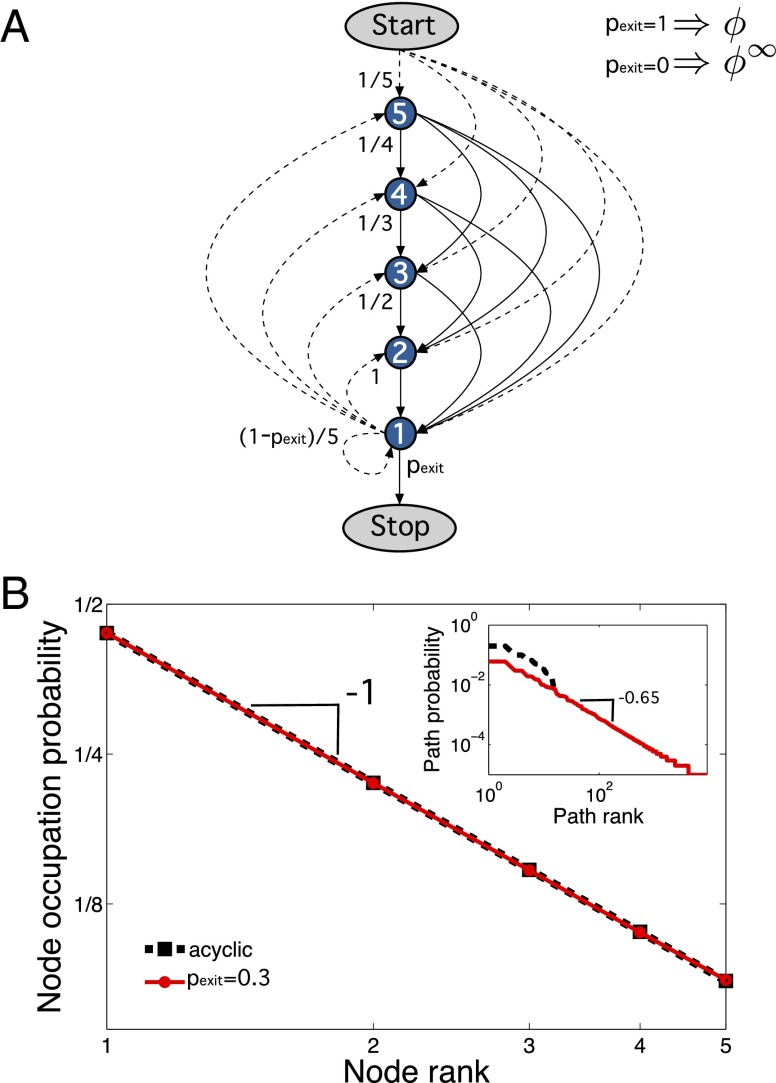

Fig. 5.

(A) SSR processes seen as random walks on networks. A random walker starts at the start node and diffuses through the directed network. Depending on the value of $p_{\mathrm{exit}}$, two types of walks are possible. For $p_{\mathrm{exit}} = 1$, the finite ($2^{N-1}$ possible paths) and acyclic process ϕ is recovered that stops after a single path; for $p_{\mathrm{exit}} = 0$, we have the infinite and cyclical process, $\phi_\infty$. For $0 < p_{\mathrm{exit}} < 1$ we have the mixed process, $\Phi_{\mathrm{mix}}$. (B) The occupation probability for $\Phi_{\mathrm{mix}}$ is unaffected by the value of $p_{\mathrm{exit}}$. The repeated ϕ (dashed black line) and the mixed process with $0 < p_{\mathrm{exit}} < 1$ (solid red line) have exactly the same occupation probability $p(i)$, which corresponds to the stationary visiting distribution of nodes in the network by random walkers. (Inset) Rank distribution of path-visit frequencies. Clearly they depend strongly on $p_{\mathrm{exit}}$. Whereas the acyclic ϕ produces a finite distribution, the cyclic one produces a power law, matching the theoretical prediction of ref. 27. For the simulation we generated sequence samples and found distinct sequences for .

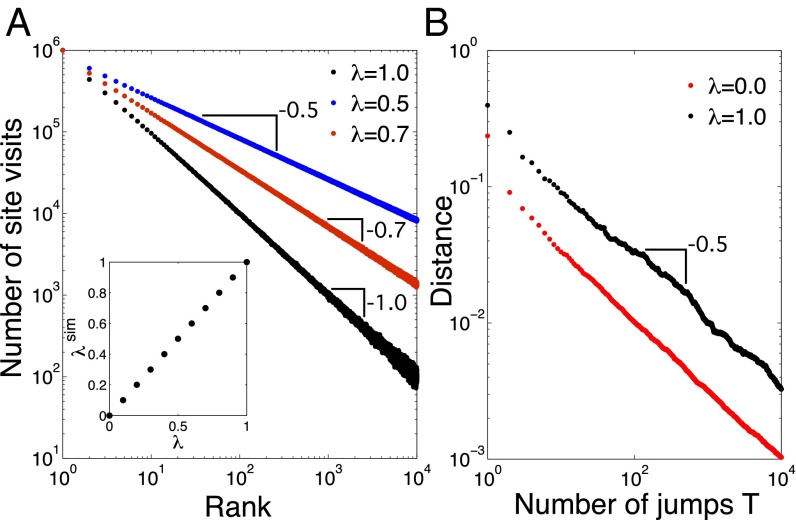

Fig. 3.

(A) Rank distributions of SSR processes with iid noise contributions from simulations of $\Phi^{(\lambda)}_\infty$, for three values of λ = 1, 0.7, and 0.5 (black, red, and blue, respectively). Fits to the distributions [obtained with a maximum-likelihood estimator (24)] yield $\lambda_{\mathrm{fit}} = 0.999$, $\lambda_{\mathrm{fit}} = 0.699$, and $\lambda_{\mathrm{fit}} = 0.499$, respectively. Clearly, an almost exact match with the expected power-law exponents is realized. The inset shows the dependence of the measured exponent $\lambda_{\mathrm{sim}}$ from the simulations (slope), on various noise levels λ. The exponent $\lambda_{\mathrm{sim}}$ is practically identical to λ. N = 10,000, numerical simulations were stopped after $M = 10^6$ restarts of the process. (B) Convergence rate. The distance (2-norm) between the simulated occupation probability (normalized histogram) after T jumps in the $\Phi^{(\lambda)}_\infty$ process, and the predicted power-law of Eq. 6, is shown for λ = 1 (black), and the pure random case, λ = 0 (red). Both distances show a power-law convergence $\sim T^{-\beta}$. MLE fits yield β = 0.512 and 0.463, for λ = 0 and 1, respectively. This means that both cases are compatible with β ∼ 1/2, and that SSR processes converge equally fast toward their limiting distributions as pure random walks do.

Convergence Speed of SSR Distributions.

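From a practical side the question arises of how fast SSR processes converge to the limiting occupation distribution given by Eq. 6. In other words, what is the distance between the sample distribution $p_T^{(\lambda)}$ of the process $\Phi^{(\lambda)}_\infty$ and $p^{(\lambda)}$, after T individual jumps? In Fig. 3B we show the Euclidean distance between the distribution after T jumps, $p_T^{(\lambda)}$, and $p^{(\lambda)}$, that is, $\lVert p_T^{(\lambda)} - p^{(\lambda)} \rVert_2$. We find that the distance decays as

$$\lVert p_T^{(\lambda)} - p^{(\lambda)} \rVert_2 \sim T^{-1/2}. \tag{7}$$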

The result does not depend on the value of λ. For the pure random case $\lambda = 0$, our result for the convergence rate is well known and is in full accordance with the Berry–Esseen theorem (25), which accounts for the rate of convergence of the central limit theorem for iid processes. The fact that for $\lambda = 1$ we see the same convergence behavior means that SSR processes converge equally fast to their underlying limiting power-law distribution.
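A convergence experiment of this kind can be sketched in a few lines of Python. This is an illustrative reimplementation under stated assumptions, not the authors' code: the walker restarts uniformly from site 1, takes an SSR step with probability λ and a uniform jump otherwise, and the reference distribution is the normalized solution of $p(i+1) = p(i)/(1+\lambda/i)$ rather than the asymptotic form $i^{-\lambda}$. The names `predicted` and `distance_after` and the chosen N, T, and seed are hypothetical.

```python
import math
import random


def predicted(n, lam):
    """Limiting occupation probabilities; index 0 corresponds to site 1."""
    weights = [1.0]
    for i in range(1, n):
        weights.append(weights[-1] / (1.0 + lam / i))
    norm = sum(weights)
    return [w / norm for w in weights]


def distance_after(t_jumps, n, lam, seed=0):
    """2-norm distance between the empirical histogram after t_jumps and the limit."""
    rng = random.Random(seed)
    counts = [0] * (n + 1)
    site = 1  # behave as if a sequence has just ended: the first jump is a restart
    for _ in range(t_jumps):
        if site == 1 or rng.random() >= lam:
            site = rng.randint(1, n)          # restart or noise: any of the n sites
        else:
            site = rng.randint(1, site - 1)   # SSR step: strictly lower site
        counts[site] += 1
    target = predicted(n, lam)
    return math.sqrt(sum((counts[i] / t_jumps - target[i - 1]) ** 2
                         for i in range(1, n + 1)))


if __name__ == "__main__":
    for lam in (0.0, 1.0):
        for t in (10**3, 10**4, 10**5):
            print(lam, t, distance_after(t, n=20, lam=lam))
```

Plotting the printed distances against T on log-log axes gives a rough estimate of the decay exponent; a careful estimate would average over many seeds.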

Examples

Sentence Formation and Zipf’s Law.

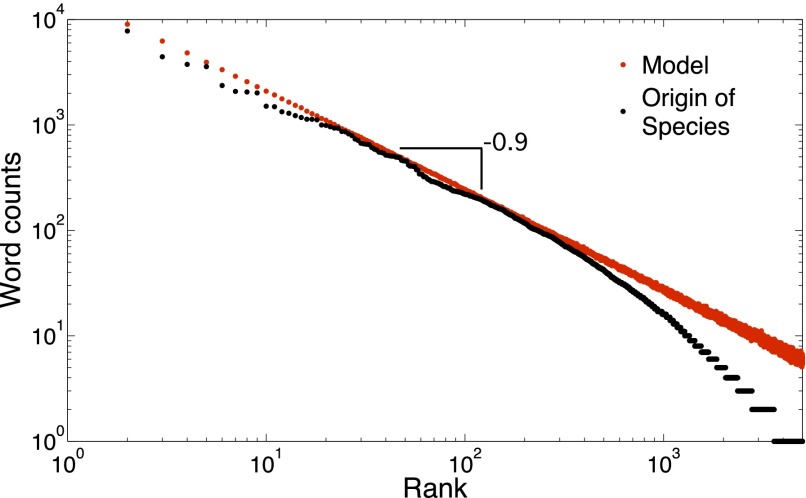

One example for an SSR process of the presented type is the process by which sentences are formed. During the creation of a sentence, grammatical and contextual constraints have the effect of a reducing sample space [i.e., the space (vocabulary) from which a successive word in a sentence can be sampled]. Clearly, the process of sentence formation is not expected to be strictly SSR, and we expect deviations from an exact Zipf’s law in the rank distribution of words in texts. In Fig. 4 we show the empirical distribution of word frequencies of Darwin’s The Origin of Species, which shows an approximate power law with a rank exponent of about 0.9. In our framework of the mixed process $\Phi^{(\lambda)}_\infty$ this corresponds to a mixing parameter $\lambda \approx 0.9$, indicating that in the process of sentence formation nesting is not perfect, and many instances occur where sample space can expand from one word to another. Note that here M corresponds to the number of sentences in a text. In the simulation we use N words and M restarts. For a more detailed model of sentence formation and SSR processes, see ref. 26.

Fig. 4.

Empirical rank distribution of word frequencies in The Origin of Species (black), showing two power-law regimes. For the most frequent words, the distribution is approximately power-law with an exponent of about 0.9. The corresponding distribution for the process $\Phi^{(\lambda)}_\infty$ with $\lambda \approx 0.9$ (red) suggests a slight deviation from perfect nesting. This means that in sentence formation, for about 90% of consecutive word pairs, the sample space is strictly reducing. Simulation: N (words), and M restarts (sentences).

SSR Processes and Random Walks on Networks.

SSR processes can be related to random walks on directed networks, as depicted in Fig. 5A. There we start the process from a start node, from which we can reach any of the N nodes with probability $1/N$. At whatever node we end up, we can successively reach nodes with lower node numbers until we reach node number 1. There, with probability $p_{\mathrm{exit}}$ we jump to a stop node that ends the process. Note that if $p_{\mathrm{exit}} = 1$, the process runs through one single path and then stops. The process is acyclic and finite; there are $2^{N-1}$ possible paths. This network diffusion process is equivalent to the process ϕ above. However, if $p_{\mathrm{exit}} = 0$, the process becomes cyclic and infinite and corresponds exactly to $\phi_\infty$. For any $0 < p_{\mathrm{exit}} < 1$ we have a mixing of the two processes, $\Phi_{\mathrm{mix}}$, which is again cyclic, and the number of possible paths is infinite. In Fig. 5B we show the result for the node occupation distribution for the process $\Phi_{\mathrm{mix}}$ for $p_{\mathrm{exit}} = 1$ (dashed black line) and for $0 < p_{\mathrm{exit}} < 1$ (solid red line). The figure is produced from independently sampled sequences generated by $\Phi_{\mathrm{mix}}$. As expected the distribution follows the exact Zipf law, irrespective of the value of $p_{\mathrm{exit}}$. The process allows us to study also the rank distribution of paths through the network. The path that is most often taken through the network has rank 1, the second most popular path has rank 2, and so on. Recent theoretical work (27) predicts a difference in the corresponding distributions for different values of $p_{\mathrm{exit}}$. According to ref. 27, acyclic processes are expected to show finite path rank distributions of no particular shape. This is seen in Fig. 5B, Inset (black dashed line), which shows the observed path rank distribution for the $2^{N-1}$ paths. For cyclic processes where at least one node participates in at least two distinct cycles ref. 27 predicts power laws, which we clearly confirm for the cyclic process with $p_{\mathrm{exit}} < 1$ (red line). Note that in our example node 1 alone is involved in five distinct cycles. The process demonstrates the mechanism that produces these power laws in its simplest form, where the probabilities of long sequences are products of the probabilities of the finite number of possible sequences that they concatenate.
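For the acyclic case the path structure can be enumerated exactly. The sketch below is an illustration under stated assumptions: the walker enters any of the N nodes with probability 1/N, moves from node k to any lower node with probability 1/(k−1), and stops at node 1. The function name `acyclic_paths` is hypothetical. It lists every path together with its exact probability, which gives the finite path rank distribution directly.

```python
from fractions import Fraction


def acyclic_paths(n):
    """Map each path (tuple of visited nodes, ending at node 1) to its probability."""
    paths = {}

    def extend(prefix, prob):
        current = prefix[-1]
        if current == 1:                 # node 1 reached: the walk exits
            paths[prefix] = prob
            return
        for nxt in range(1, current):    # any strictly lower node, uniformly
            extend(prefix + (nxt,), prob * Fraction(1, current - 1))

    for first in range(1, n + 1):        # uniform jump from the start node
        extend((first,), Fraction(1, n))
    return paths


if __name__ == "__main__":
    paths = acyclic_paths(5)
    ranked = sorted(paths.items(), key=lambda item: item[1], reverse=True)
    for rank, (path, prob) in enumerate(ranked, start=1):
        print(rank, path, prob)
```

Summing the probabilities of all paths that contain a given node i recovers the visiting probability 1/i, so the node statistics follow Zipf's law even though the path rank distribution is finite.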

SSR Processes and Fragmentation Processes.

One important class of aging systems are fragmentation processes, such as objects that repeatedly break at random sites into ever smaller pieces (see, e.g., refs. 28 and 29). A simple example demonstrates how fragmentation processes are related to SSR processes. Consider a stick of a certain initial length L, such as a spaghetto, and mark some point on the stick. Now take the stick and break it at a random position. Select the fragment that contains the mark and record its length. Then break this fragment again at a random position, take the fragment containing the mark, and again record its length. One repeats the process until the fragment holding the mark reaches a minimal length, say the diameter of an atom, and the fragmentation process stops. The process is clearly of the SSR type because fragments are always shorter than the fragment they come from. In particular, if the mark has been chosen on one of the endpoints of the initial strand of spaghetti, then the consecutive fragmentation of the marked fragment is obviously a continuous version of the SSR process ϕ discussed above. Note that even though the length sequence of a single marked fragment is an SSR process, the size evolution of all fragments is more complicated, because fragment lengths are not independent from each other: Every spaghetti fragment of length x splits into two fragments of respective lengths, $y$ and $x - y$. The evolution of the distribution of all fragment sizes was analyzed in ref. 29. Note that in the one-dimensional SSR processes introduced here we see no signs of multiscaling. However, this possibility might exist for continuous or higher-dimensional versions of SSR processes.
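The marked-fragment construction translates directly into a short simulation. The following Python sketch assumes the mark sits on one endpoint of the stick and that each break point is uniformly distributed along the current fragment; the function name `marked_fragment_lengths` and the chosen lengths are illustrative.

```python
import random


def marked_fragment_lengths(initial_length, min_length, rng):
    """Lengths of the fragment holding an endpoint mark after each break."""
    lengths = []
    length = initial_length
    while length > min_length:
        # Break at a uniform position and keep the piece attached to the marked
        # endpoint; its length is uniform on [0, length), so it always shrinks.
        length = length * rng.random()
        lengths.append(length)
    return lengths


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        run = marked_fragment_lengths(1.0, 1e-6, rng)
        print(len(run), ["%.2e" % x for x in run[:5]])
```

Each run yields a strictly decreasing sequence of lengths, the continuous analogue of the strictly decreasing face values produced by ϕ.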

Discussion

The main result of Eq. 6 is remarkable insofar as it explains the emergence of scaling in an extremely simple and hitherto unnoticed way. In SSR processes, Zipf’s law emerges as a simple consequence of breaking a directional symmetry in stochastic processes, or, equivalently, by a nestedness property of the sample space. More general power exponents are simply obtained by the addition of iid random fluctuations to the process. The relation of exponents and the noise level is strikingly simple and gives the exponent a clear interpretation in terms of the extent of violation of the nestedness property in strictly SSR processes. We demonstrate that SSR processes converge equally fast toward their limiting distributions, as uncorrelated iid processes do.

We presented several examples for SSR processes. The emergence of scaling through SSR processes can be used straightforwardly to understand Zipf’s law in word frequencies. An empirical quantification of the degree of nestedness in sentence formation in a number of books allows us to understand the variations of the scaling exponents between the individual books (26). SSR processes can be related to diffusion processes on directed networks. For a specific example we demonstrated that the visiting times of nodes follow a Zipf’s law, and could further reproduce very general recent findings of path-visit distributions in random walks on networks (27). Here we presented results for a complete directed graph; however, we conjecture that SSR processes on networks and the associated Zipf’s law of node-visiting distributions are tightly related and are valid for much more general directed networks. We demonstrated how SSR processes can be related to fragmentation processes, which are examples of aging processes. We note that SSR processes and nesting are deeply connected to phase-space collapse in statistical physics (21, 30–32), where the number of configurations does not grow exponentially with system size (as in Markovian and ergodic systems), but grows subexponentially. Subexponential growth can be shown to hold for the phase-space growth of the SSR sequences introduced here. In conclusion, we believe that SSR processes provide a new alternative view on the emergence of scaling in many natural, social, and man-made systems. It is a self-contained, independent alternative to multiplicative, preferential, self-organized criticality and other mechanisms that have been proposed to understand the origin of power laws in nature (33).

Acknowledgments

We thank two anonymous referees who made us aware of the relation to network diffusion and fragmentation and Álvaro Corral for his helpful comments. This work was supported by Austrian Science Fund FWF under Grant KPP23378FW.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1. Zipf GK. Human Behavior and the Principle of Least Effort. Addison-Wesley; Reading, MA: 1949.

- 2. Stanley HE, et al. Scaling features of noncoding DNA. Physica A. 1999;273(1-2):1–18. doi: 10.1016/s0378-4371(99)00407-0.

- 3. Thurner S, Szell M, Sinatra R. Emergence of good conduct, scaling and zipf laws in human behavioral sequences in an online world. PLoS ONE. 2012;7(1):e29796. doi: 10.1371/journal.pone.0029796.

- 4. Gabaix X, Gopikrishnan P, Plerou V, Stanley HE. A theory of power-law distributions in financial market fluctuations. Nature. 2003;423(6937):267–270. doi: 10.1038/nature01624.

- 5. Price DJ. Networks of scientific papers. Science. 1965;149(3683):510–515. doi: 10.1126/science.149.3683.510.

- 6. Redner S. How popular is your paper? An empirical study of the citation distribution. Eur Phys J B. 1998;4:131–134.

- 7. Makse HA, Havlin S, Stanley HE. Modelling urban growth patterns. Nature. 1995;377(6650):608–612.

- 8. Axtell RL. Zipf distribution of U.S. firm sizes. Science. 2001;293(5536):1818–1820. doi: 10.1126/science.1062081.

- 9. Saichev A, Malevergne Y, Sornette D. Lecture Notes in Economics and Mathematical Systems. Springer; Berlin: 2008. Theory of Zipf’s law and of general power law distributions with Gibrat’s law of proportional growth.

- 10. Newman MEJ. Power laws, Pareto distributions and Zipf’s law. Contemp Phys. 2005;46(5):323–351.

- 11. Simon HA. On a class of skew distribution functions. Biometrika. 1955;42(3-4):425–440.

- 12. Mandelbrot B. An informational theory of the statistical structure of language. In: Jackson W, editor. Communication Theory. Butterworths; London: 1953.

- 13. Solomon S, Levy M. Spontaneous scaling emergence in generic stochastic systems. Int J Mod Phys C. 1996;7(5):745–751.

- 14. Pietronero L, Tosatti E, Tosatti V, Vespignani A. Explaining the uneven distribution of numbers in nature: The laws of Benford and Zipf. Physica A. 2001;293(1-2):297–304.

- 15. Malcai O, Biham O, Solomon S. Power-law distributions and Lévy-stable intermittent fluctuations in stochastic systems of many autocatalytic elements. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 1999;60(2 Pt A):1299–1303. doi: 10.1103/physreve.60.1299.

- 16. Lu ET, Hamilton RJ. Avalanches of the distribution of solar flares. Astrophys J. 1991;380:89–92.

- 17. Barabasi A-L, Albert R. Emergence of scaling in random networks. Science. 1999;286(5439):509–512. doi: 10.1126/science.286.5439.509.

- 18. Bak P, Tang C, Wiesenfeld K. Self-organized criticality: An explanation of the 1/f noise. Phys Rev Lett. 1987;59(4):381–384. doi: 10.1103/PhysRevLett.59.381.

- 19. Corominas-Murtra B, Solé RV. Universality of Zipf’s law. Phys Rev E Stat Nonlin Soft Matter Phys. 2010;82(1 Pt 1):011102. doi: 10.1103/PhysRevE.82.011102.

- 20. Corominas-Murtra B, Fortuny J, Solé RV. Emergence of Zipf’s law in the evolution of communication. Phys Rev E Stat Nonlin Soft Matter Phys. 2011;83(3 Pt 2):036115. doi: 10.1103/PhysRevE.83.036115.

- 21. Hanel R, Thurner S, Gell-Mann M. How multiplicity determines entropy and the derivation of the maximum entropy principle for complex systems. Proc Natl Acad Sci USA. 2014;111(19):6905–6910. doi: 10.1073/pnas.1406071111.

- 22. Kac M. A history-dependent random sequence defined by Ulam. Adv Appl Math. 1989;10(3):270–277.

- 23. Clifford P, Stirzaker D. History-dependent random processes. Proc R Soc A. 2008;464(2093):1105–1124.

- 24. Clauset A, Shalizi CR, Newman MEJ. Power-law distributions in empirical data. SIAM Rev. 2009;51(4):661–703.

- 25. Feller W. An Introduction to Probability Theory and Its Applications. Vol 2. Wiley; New York: 1966.

- 26. Thurner S, Hanel R, Corominas-Murtra B. 2014. Understanding Zipf’s law of word frequencies through sample space collapse in sentence formation. arXiv:1407.4610.

- 27. Perkins TJ, Foxall E, Glass L, Edwards R. A scaling law for random walks on networks. Nat Commun. 2014;5:5121. doi: 10.1038/ncomms6121.

- 28. Tokeshi M. Species abundance patterns and community structure. Adv Ecol Res. 1993;24:111–186.

- 29. Krapivsky PL, Ben-Naim E. Scaling and multiscaling in models of fragmentation. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 1994;50(5):3502–3507. doi: 10.1103/physreve.50.3502.

- 30. Hanel R, Thurner S. A comprehensive classification of complex statistical systems and an ab initio derivation of their entropy and distribution functions. Europhys Lett. 2011;93(2):20006.

- 31. Hanel R, Thurner S. When do generalized entropies apply? How phase space volume determines entropy. Europhys Lett. 2011;96(5):50003.

- 32. Hanel R, Thurner S. Generalized (c,d)-entropy and aging random walks. Entropy. 2014;15:5324–5337.

- 33. Mitzenmacher M. A brief history of generative models for power law and lognormal distributions. Internet Mathematics. 2003;1(2):226–251.