Abstract

Like the multicellular neural networks that control human brains, even single cells are, surprisingly, able to make intelligent decisions, classifying several external stimuli or associating them. This is possible because gene regulatory networks can act as perceptrons, simple intelligent schemes known from studies of Artificial Intelligence. We study the role of genetic noise in intelligent decision making at the genetic level and show that noise can play a constructive role, helping cells to make a proper decision. We show this using the example of a simple genetic classifier able to classify two external stimuli.

Introduction

Cellular decision making and the determination of cellular fate are performed by superimposing the pattern of extracellular signalling on the intracellular state given by the locations and concentrations of chemicals within the cell. This process is regulated by the program encoded in the cellular genome. Understanding the principles of this programming and, especially, how this program is executed is a key problem of modern biology, since control of this program would provide insight into new biotechnologies and medical treatments.

Our brains are able to make intelligent decisions because they are controlled by neural networks, i.e., networks based on communication between a huge number of cells. But can intelligent solutions be executed at the level of the genome? First, we should define what we mean by intelligence. For this we will use some basic schemes developed in the field of Artificial Intelligence. One of the basic schemes of intelligence was suggested by Frank Rosenblatt, who called such systems “perceptrons”. The basic perceptron was able to classify several external stimuli and provide a binary output. Could such intelligent decision making be observed in the performance of gene regulatory networks? It has long been known that cells can adapt to and anticipate stress, but it remains unclear whether this is a result of intelligent learning or of something else. For example, in 2008 Saigusa et al. [1] showed that amoebae, single-celled organisms, can anticipate periodic events. Naturally, a fundamental question arises: can a genetic network behave intelligently in the sense that it will learn an association or classification of stimuli? Recent theoretical studies have shown that this is, in principle, possible. It was shown that a neural network can be built on the basis of chemical reactions if the reaction mechanism has neuron-like properties [2]. In these works, linked chains of chemical reactions could act as Turing machines or neural networks [3]. D. Bray has demonstrated that a cellular receptor can be considered as a perceptron with weights learned through genetic evolution [4], showing formally that protein molecules may work as computational elements in living cells. Gandhi et al. have formally shown that associative learning can also be performed in biomolecular networks [5]. In 2008 Fernando et al. suggested a formal scheme of a single-cell genetic circuit which can learn associatively within the cellular life [6]. The same team has investigated, with a positive result and using the real genomic interconnections, whether the genome of the bacterium E. coli could work as a liquid state machine, learning associatively how to respond to a wide range of environmental inputs [7]. Despite these formal proofs of principle, experimental work has fallen short of fully implementing genetic intelligence, e.g., in Synthetic Biology. To our knowledge, only L. Qian et al. have experimentally shown that neural network computations, in particular a Hopfield associative memory, can be implemented with a DNA gate architecture and DNA strand displacement cascades [8]. In their experiment, however, learning was executed in advance on a computer.

On the other hand, it has recently been demonstrated that gene expression is a very noisy process [9]. Both intrinsic and extrinsic noise in gene expression have been measured experimentally [10] and modelled either with stochastic Langevin-type differential equations or with Gillespie-type algorithms that simulate the single chemical reactions underlying this stochasticity [11]. Hence, the question arises as to what the fundamental role of noise in intracellular intelligence is. Can stochastic fluctuations only corrupt the information processing in the course of decision making, or can they also help cells to make intelligent decisions? During the last three decades it has been shown that, under certain conditions in nonlinear systems, noise can counterintuitively lead to ordering, e.g., in the effect of Stochastic Resonance (SR) [12]. SR has found many manifestations in biological systems: it improves the hunting abilities of the paddlefish [13], enhances human balance control [14], helps the brain’s visual processing [15], increases the speed of memory retrieval [16], and improves the quality of classification in a genetic perceptron [17]. Here we will show that, surprisingly, the correct amount of noise in genetic decision making can improve performance in classification tasks, demonstrating stochastic resonance in genetic decision making (SRIDM).

To show this we have designed a simple genetic network able to classify two external stimuli. The form of intelligence considered in this paper is the ability to perform linear classification tasks. Linear classification describes the ability to successfully discriminate between two sets which are linearly separable. Let S 1 and S 2 be linearly separable sets in n-dimensional space with a separation threshold T, i.e.,

Such that:

Linear separation problems represent what could be described as one of the simplest forms of intelligence that a system could display. Simple as they are, they allow a system to perform a large class of discrimination tasks. Many functions a cell may need to perform require a yes-or-no decision before proceeding. Linear classification allows a system to take in information from various sources (most likely chemical concentrations), perform some analysis on it (take a weighted sum of the inputs) and make a final decision based on this analysis, namely “does this value exceed my required threshold of activation?”. There are countless examples of cells having to perform some kind of quantitative ‘weighing up’ of information from various sources, such as in chemotaxis, regulatory checkpoints (cyclin concentrations) and apoptosis or ‘cellular suicide’ [18]. Additionally, if the separation threshold T is linked to the current cellular state, such a scheme can learn the classification rule as an intelligent perceptron.

Linear classification algorithms of this nature are named perceptrons and are formulated in the following way, for ∀x ∈ (S 1, S 2):

| (1) |

| (2) |

Here x = (x 1, x 2…, x n) represents the n-dimensional input and the weight vector w = (w 1, w 2, …, w n) and the value T describe the separation hyperplane. As can clearly be seen from (Eq 1) and (Eq 2) the value of T can be normalized to 1 by redefining,

From this we can see that our entire classification is now specified by the weight vector w. We also introduce the concept of a spoiled perceptron whereby the value of T is adjusted slightly. This will clearly have the effect of causing the misclassification of points close to the separation hyperplane.
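The classification rule and the threshold normalization described above can be illustrated with a minimal sketch. It assumes the standard perceptron form (output 1 when the weighted sum exceeds the threshold, 0 otherwise) and a rescaling of the weights by T; the function names and the numerical values are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def classify(x: Sequence[float], w: Sequence[float], threshold: float = 1.0) -> int:
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(wi * xi for wi, xi in zip(w, x))
    return 1 if weighted_sum > threshold else 0


def normalise(w: Sequence[float], threshold: float) -> List[float]:
    """Rescale the weights so that the equivalent threshold becomes 1.

    Assumes a positive threshold, so dividing does not flip the inequality.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive for this rescaling")
    return [wi / threshold for wi in w]


# Hypothetical example values (assumptions, not from the paper).
w, T = [1.4, 0.7], 2.0
w_norm = normalise(w, T)                 # same separation line, threshold 1
point = (0.9, 0.8)                       # weighted sum 1.82, below T = 2.0

original = classify(point, w, T)         # class under the original (w, T)
unspoiled = classify(point, w_norm)      # class under normalised weights, T = 1
spoiled = classify(point, w_norm, 0.9)   # class after spoiling the threshold to 0.9
```

In this sketch the chosen point lies close to the separation line, so the slightly spoiled threshold assigns it to the other class, which is the kind of misclassification the spoiled perceptron is meant to produce.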

Analysis

Model of the linear genetic classifier

The model we will consider is based on the designs of Kaneko et al. [19] for an arbitrarily connected n-node genetic circuit. Gene activity is modelled by differential equations coupled through Hill functions to describe inhibitory or activatory influence [20]. We have used this framework to produce a gene regulatory circuit capable of linear separation on two nodal concentrations, with the result exhibited as the steady-state concentration reached on a third node: maximal concentration or zero concentration, depending on the classification. The circuit diagram is shown in Fig 1a, with pointed arrowheads representing a promoting transcription factor and flat-headed arrows representing a repressive transcription factor.

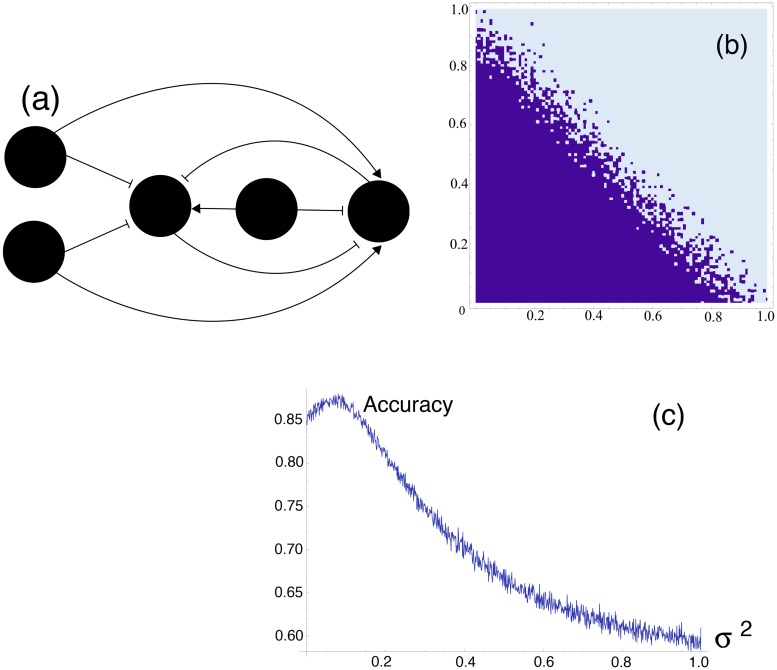

Fig 1. Design of a genetic network and effect of stochastic resonance.

(a) Design of an intracellular gene regulatory network able to perform linear classification. In each cell the classifier is based on the toggle switch (genes 3 and 4, which, if separated, organize a bistable system in state ON-OFF or OFF-ON), and gene 3 is the output. The two inputs are genes 1 and 2. Due to permanent basal expression of gene 0, gene 4 is in the ON state. Correspondingly, the output gene 3 is in the OFF state. If the joint action of inputs 1 and 2 can repress gene 4 despite the activating link from gene 0, the switch will change its state and the output will be in the ON state. In this way the scheme can classify two inputs according to a binary classification. (b) The expression of gene 0 sets the “threshold” of classification. The slope of the separation line depends on the weights with which inputs 1 and 2 inhibit gene 4 and activate gene 3, and on the expression of gene 0. Gene 0 can be one of the genes in the cellular genome; in this case, the classifier will “learn” the classification rule from its surroundings. Here an example of linear separation for inputs 1 and 2 varying between 0 and 1 is shown, demonstrating the effect of input noise on linear separation. Adding noise to inputs 1 and 2 blurs the classification line. (c) Stochastic resonance in a genetic perceptron. In a spoiled perceptron an optimal amount of noise added to the inputs improves the accuracy of classification.

Letting m(i, t) denote the ith nodal mRNA concentration and p(i, t) denote the ith nodal protein concentration the rate equations are as follows:

| (3) |

with

| (4) |

| (5) |

and

| (6) |

where β is a positive constant (taken in the following calculations to be 40) and f(x) is a sigmoid function switching from 0 to 1 as x increases. The matrix A represents the topology of the genetic network: A ij > 0 implies that the ith protein is an activating promoter for the jth gene, A ij < 0 implies that the ith protein is an inhibitor for the jth gene, and A ij = 0 implies that there is no transcriptional relationship between the ith protein and the jth gene. In addition, nodes 3 and 4 are self-promoting, although this is simply to increase the speed of convergence and does not qualitatively affect the dynamics. For example, the A matrix for the network in Fig 1a would be as follows:

| (7) |

It can clearly be seen that the dynamics of this system will be bounded, indeed we can easily show that if both m(i, 0) and p(i, 0) are in [0, 1] they will never leave this range. As a generalised chemical concentration could not be so rigorously contained we should consider these as concentrations relative to some maximal concentration level.

As discussed earlier, we wish to have some mechanism by which the weightings (as manifested by the values in the matrix in (Eq 7)) can be adjusted. As this would represent a multiplicative transcriptional strength, we consider a mechanism of phosphorylation. We now require that the proteins produced at nodes 1 and 2 be activated via phosphorylation by some external kinase. In this way we can consider the rate of production of phosphorylated protein as the product of the concentrations of the unphosphorylated protein and the phosphorylating kinase. It is by this mechanism that we justify the ability to adjust the values of w 1 and w 2 and thus the weightings for linear classification. If the weights have been trained as a result of evolution, then not only w 1 and w 2 but also other connections could have different values obtained as a result of training. It is also possible that the inputs will activate node 3 and repress node 4 at different rates. This will lead to some changes in the separation line shown in Fig 1b, but will not change the dynamics of the genetic perceptron in a qualitative way. If we consider this design as a scheme for a synthetic genetic perceptron, then it is more logical to assume that only the weights w 1 and w 2 will be adjusted.

Results

Results demonstrating effect of a stochastic resonance in the genetic classifier

In Fig 1b we demonstrate the system's ability to perform linear separation on the input initial conditions. In the current design the weightings by which the linear separation is performed are manifested in the strengths of transcriptional regulation between certain nodes, as seen in (Eq 7). By varying these we are able to perform a wide range of linear classification tasks, i.e., any linear separation possible on the domain [0, 1] × [0, 1]. This adjustment of the transcriptional strength was justified earlier by the addition of a phosphorylation stage.

From here onwards we will be interested in noisy systems where the noise is extrinsic noise added to the inputs. Hence, rather than using the stochastic simulation techniques popular in Mathematical Biology, we will use deterministic systems with a stochastic term added to the inputs. The effect of this can clearly be seen in Fig 1b: adding noise to the unspoiled threshold only decreases the accuracy of classification. The situation changes dramatically if the classifier initially had some inaccuracy, introduced by spoiling the threshold of classification. We therefore look for stochastic resonance in the case where the mechanism has been spoiled, which is realised by adjusting the initial concentration of the 0th node. As stated earlier, the system's performance without any spoiling is taken as the ideal set of results, so no improvement can be made there and noise will only decrease the accuracy; this can be observed in Fig 1b, which shows a severe decrease in accuracy with the addition of noise when there is zero spoiling.

For a spoiled perceptron, however, adding noise can surprisingly restore correct classification, manifesting the effect of stochastic resonance. The term stochastic resonance is somewhat of a misnomer, as in this case we make no reference to acoustic or vibrational resonance. Instead, what we mean is that some measure of functionality of our system is improved by the addition of noise of some optimal intensity. This optimal noise should correspond to some finite value of the variance of an additive, normally distributed noise term. This implies that our system should perform worse beyond a certain amount of noise, which makes sense intuitively, as an infinitely noisy system would not be able to facilitate any order.

The inputs to our system are generated as pairs of random numbers uniformly distributed between zero and one and then classified according to the unspoiled threshold. Next, Gaussian noise is added to the same inputs and they are classified again, this time using the spoiled threshold. The two classifications are then compared and compiled into an accuracy score as follows. Considering a set of N input sets, let

be the non-noisy unspoiled value outputted for the ith input set, and let

be the noisy, spoiled, outputted value. Define

| (8) |

and

| (9) |

Then we can define accuracy as

| (10) |

If we perform this accuracy analysis with a spoiled threshold we observe Fig 1c. Here we see that for some optimal value of input noise we witness an increase in accuracy. This result is surprising as the noise added was normally distributed and thus directionally unbiased. This is also significant as we have genuinely produced an output set which is more faithful to the original than the non-noisy case.

The governing equations for our genetic network model were simulated using a 4th-order Runge-Kutta numerical integration scheme. Normally distributed random variables were generated from uniform random variables using the Box-Muller transformation. Accuracy was calculated using a Monte Carlo style approach. We generated a set of 10000 pairs of random variables, uniformly distributed on the interval [0, 1]. These pairs became the initial concentrations of nodes 1 and 2, respectively. The network is then simulated for these initial conditions, and after a sufficient amount of time the concentration of node 3 will have converged to either 1 or 0. This value is taken as the output for that simulation. We then repeat the simulation for the same pairs of inputs, but this time with a normally distributed random variable added to each of them and the initial concentration of node 0 adjusted. We compare the results of this new simulation with those of the original, noise-free simulation of the same pair of input concentrations.
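The two numerical ingredients named above, a 4th-order Runge-Kutta step and the Box-Muller transformation, can be sketched generically as follows. This is not the authors' code: the function names are hypothetical, and the test equation dy/dt = -y is only a stand-in chosen because its exact solution is known, not the gene network model of Eqs 3 to 6.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]


def rk4_step(f: Callable[[float, Vector], Vector], t: float, y: Vector, h: float) -> Vector:
    """Advance dy/dt = f(t, y) by one classical 4th-order Runge-Kutta step."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
    return [yi + h / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def integrate(f: Callable[[float, Vector], Vector], y0: Vector, t_end: float, h: float) -> Vector:
    """Integrate from t = 0 to t_end with a fixed step h."""
    y, t = list(y0), 0.0
    for _ in range(int(round(t_end / h))):
        y = rk4_step(f, t, y, h)
        t += h
    return y


def box_muller(rng: random.Random) -> Tuple[float, float]:
    """Turn two uniform samples into two independent standard normal samples."""
    u1 = 1.0 - rng.random()          # lies in (0, 1], which avoids log(0)
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    angle = 2.0 * math.pi * u2
    return radius * math.cos(angle), radius * math.sin(angle)


# Stand-in test problem (an assumption for illustration): exponential decay.
decayed = integrate(lambda t, y: [-v for v in y], [1.0], 1.0, 0.01)

# Draw standard normal samples; scale by sigma to obtain noise of variance sigma**2.
rng = random.Random(1)
normal_samples = [v for _ in range(10000) for v in box_muller(rng)]
```

Noise of a given intensity is obtained by multiplying a standard normal sample by the desired standard deviation before adding it to an input concentration.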

Stochastic resonance in a simple perceptron

In order to examine the mechanism of this effect we will consider a simplification of this system. The perceptron is a well-established concept and the simplest manifestation of a neural network. It performs linear separation on n inputs (see Eqs 1 and 2) as described earlier, but gives us a form which is easier to examine (see Fig 2a). This figure shows a 2-input perceptron, but a similar scheme applies for n-input configurations.

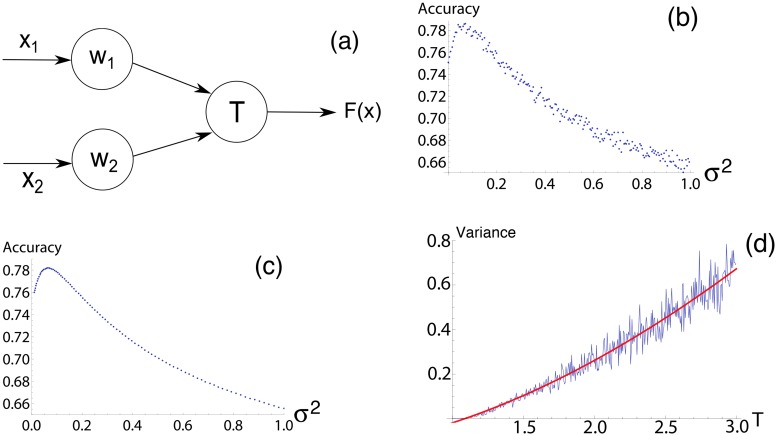

Fig 2. Stochastic resonance in a simple perceptron.

(a) Visualisation of a simple perceptron with two inputs x 1 and x 2. (b) Graph showing accuracy vs. noise intensity for a threshold spoiled from 1 to 1.3. This is the well-known bell-shaped curve of SR. (c) The analytical results displayed here closely match the numerical simulations in (b). (d) Linear correlation between optimal noise intensity and spoiled threshold value.

Here we will spoil the threshold value T (for example, by changing it from 1 to 1.3) and measure the accuracy of the output assignment functionality of the algorithm both with and without noise. The noise is represented by a random variable from a Gaussian distribution with mean μ = 0 and variance σ 2 = z, where z will be increased from 0 to 1 to represent increasing noise intensity. Our accuracy measure simply gives the success rate with which the algorithm correctly classifies the members of the test set when given the new, spoiled system to work with. In practice we calculate this using a Monte Carlo style approach: a set of N (10000 in practice) input vectors is generated, each consisting of n uniformly distributed random entries from the range [0, 1]. We classify this set according to the unspoiled threshold T 1 to get

These 10000 input vectors have a normally distributed random variable added to each element of them and are then classified again according to the spoiled threshold T 2 to give

And accuracy is calculated as in (Eq 10). Accuracy vs noise intensity is shown in Fig 2b and again shows clearly defined SR manifesting itself in restoration of classification accuracy for an optimal amount of input noise.
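The Monte Carlo procedure just described can be sketched as below. Two assumptions are made that the text does not fix: accuracy is read as the fraction of inputs whose noisy, spoiled classification matches the noise-free, unspoiled one, and both weights are set to 0.7 (the value quoted in the caption of Fig 4b). All function and parameter names are hypothetical.

```python
import random


def classify(x1: float, x2: float, w1: float, w2: float, threshold: float) -> int:
    """Two-input perceptron: 1 if the weighted sum exceeds the threshold, else 0."""
    return 1 if w1 * x1 + w2 * x2 > threshold else 0


def monte_carlo_accuracy(noise_variance: float,
                         t_unspoiled: float = 1.0,
                         t_spoiled: float = 1.3,
                         w1: float = 0.7,       # assumed weight
                         w2: float = 0.7,       # assumed weight
                         n_samples: int = 10000,
                         seed: int = 0) -> float:
    """Fraction of inputs classified identically with and without spoiling and noise."""
    rng = random.Random(seed)
    sigma = noise_variance ** 0.5
    matches = 0
    for _ in range(n_samples):
        x1, x2 = rng.random(), rng.random()
        # Reference: clean inputs, unspoiled threshold.
        reference = classify(x1, x2, w1, w2, t_unspoiled)
        # Test: Gaussian noise added to each input, spoiled threshold.
        noisy = classify(x1 + rng.gauss(0.0, sigma),
                         x2 + rng.gauss(0.0, sigma),
                         w1, w2, t_spoiled)
        matches += int(reference == noisy)
    return matches / n_samples


# Sweep the noise variance to trace an accuracy vs. noise intensity curve.
curve = [(z / 100, monte_carlo_accuracy(z / 100)) for z in range(0, 21, 2)]
```

Because the same seed is reused for every noise level, all points of the curve are computed on the same set of input pairs, so differences between them reflect the noise intensity rather than resampling of the inputs.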

Analytical Investigation

Now that we have demonstrated the existence of this stochastic resonance effect, it would be prudent to attempt to explain it analytically. In doing so we must think more rigorously about our random variables and their distribution functions. For simplicity let us first consider the 2-input system with w 1 = w 2. Our input values x 1 and x 2 are uniformly distributed random variables between 0 and 1, and as such each has a probability density function (pdf) given by:

| (11) |

But when we consider the sum of 2 uniform random variables we must adjust the pdf. Let X = x 1 w 1+x 2 w 2, then

| (12) |

Now we must consider the addition of noise. Our noise is distributed normally, always with μ = 0 but with changing variance σ 2. The normal distribution has the following pdf:

| (13) |

We wish to combine two noise terms (one for each input), and as in this case both of our weights are the same we can simply combine our noise terms together and add them to X. The sum of two independent normal variables is itself normally distributed, with mean μ = μ 1+μ 2 and variance equal to the sum of the two variances, giving us a zero-mean normal variable with variance 2σ 2. We also wish to multiply the noise by the weight value; to do this we simply multiply the standard deviation by w.

This gives:

| (14) |
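For reference, the combination of the two noise terms can be written out step by step. The symbols $n_1$, $n_2$ and $g$ are introduced here only for illustration, and the final expression is a reconstruction from the surrounding description that is assumed, not confirmed, to correspond to (Eq 14).

```latex
% Two independent noise terms with equal variance (notation assumed):
n_1,\, n_2 \sim \mathcal{N}(0, \sigma^2)
\;\Longrightarrow\;
n_1 + n_2 \sim \mathcal{N}(0,\, 2\sigma^2)

% Scaling by the common weight w multiplies the standard deviation by w:
w\,(n_1 + n_2) \sim \mathcal{N}(0,\, 2 w^2 \sigma^2)

% Resulting density of the combined, weighted noise term:
g(y) = \frac{1}{2 w \sigma \sqrt{\pi}}
       \exp\!\left( -\frac{y^2}{4 w^2 \sigma^2} \right)
```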

Now we must consider how to formulate the system as a whole. We will consider the concept of accuracy as before, where for each point which is successfully allocated before spoiling, we consider the probability of it still being successfully allocated after spoiling and noise. Now let T 1 be the pre-spoiling threshold and T 2 be the post-spoiling threshold. For a point x this probability is given by:

If x < T 1

| (15) |

And, if x > T 1

| (16) |

Now we must apply this to the entire distribution of x, remembering to use our probability density function f(x). As the distribution of x is independent of the distribution of the additive noise we can simply multiply the probabilities:

| (17) |

Gives the total likelihood of

| (18) |

which is analogous to our measure of accuracy from before but in a more continuous sense.

This expression containing double integrals can be tidied up in terms of the error function:

| $\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\,\mathrm{d}t$ | (19) |

Which is related to the cumulative distribution function of the normal distribution such that our equation now becomes:

| (20) |

As f(x) represents a probability density function we know that its integral over its whole range ([0, 2w] in this case) must be equal to 1. Using this we can simplify further:

| (21) |

By plotting this for increasing variance σ 2 and comparing it with the simulated results obtained with the same coefficients, we see very close agreement (compare Fig 2b and 2c). From this one can also find the optimal noise intensity as a function of the spoiled threshold value (Fig 2d).
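The analytical accuracy can also be evaluated numerically. The sketch below integrates, over the triangular density of X = w(x 1 + x 2), the probability that a point keeps its original class after noise and spoiling, which is the construction described in the text leading to (Eq 18). It is a reconstruction under assumptions rather than a transcription of (Eq 21): the weight w = 0.7, the midpoint quadrature and all names are choices made here for illustration.

```python
import math


def triangular_pdf(x: float, w: float) -> float:
    """pdf of w * (x1 + x2) for x1, x2 independent and uniform on [0, 1]."""
    if 0.0 <= x <= w:
        return x / w ** 2
    if w < x <= 2.0 * w:
        return (2.0 * w - x) / w ** 2
    return 0.0


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution, expressed via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def analytic_accuracy(noise_variance: float, w: float = 0.7,
                      t1: float = 1.0, t2: float = 1.3,
                      n_grid: int = 20000) -> float:
    """Probability that the noisy, spoiled class equals the clean, unspoiled class."""
    s = w * math.sqrt(2.0 * noise_variance)   # std of the combined weighted noise
    h = 2.0 * w / n_grid                      # midpoint rule on [0, 2w]
    total = 0.0
    for k in range(n_grid):
        x = (k + 0.5) * h
        if s > 0.0:
            p_below = normal_cdf((t2 - x) / s)   # P(x + noise < t2)
        else:
            p_below = 1.0 if x < t2 else 0.0     # noise-free limit
        p_correct = p_below if x < t1 else 1.0 - p_below
        total += triangular_pdf(x, w) * p_correct * h
    return total


# Accuracy for a few per-input noise variances (assumed values).
table = {z: analytic_accuracy(z) for z in (0.0, 0.02, 0.04, 0.08, 0.16)}
```

With these assumed parameters the noise-free spoiled accuracy is about 0.85, and a moderate noise variance raises it, which is the qualitative behaviour the analytical treatment is meant to capture.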

Generalising the Model

To generalise this model we must consider a system with n inputs and independent weights for each input (w 1, w 2, …, w n). First consider our input variable X ∼ w 1 X 1+w 2 X 2+…+w n X n. Each w i X i is a uniformly distributed random variable on the range (0, w i). We can generate the probability distribution function for a distribution such as this from the following function [21].

| (22) |

Where:

Theoretically it is now possible to produce the probability distribution function for any number of independent inputs but due to the nature of this equation it is difficult to treat this general formula analytically. We will proceed by generating it for the case n = 2.

If we evaluate this for n = 2 we arrive at the probability distribution function for 2 independently weighted inputs. Labelling such that w 1 < w 2

| (23) |
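For two independently weighted uniform inputs, the density of the weighted sum is the convolution of two uniform densities, which has a trapezoidal shape. The sketch below implements that standard result and checks that it integrates to one; it is assumed, not confirmed, to agree with (Eq 23), and the function name is hypothetical.

```python
def weighted_sum_pdf(x: float, w1: float, w2: float) -> float:
    """pdf of w1*x1 + w2*x2 for x1, x2 independent and uniform on [0, 1]."""
    lo, hi = min(w1, w2), max(w1, w2)
    if x < 0.0 or x > lo + hi:
        return 0.0
    if x < lo:
        return x / (lo * hi)             # rising edge
    if x <= hi:
        return 1.0 / hi                  # flat top (vanishes when w1 == w2)
    return (lo + hi - x) / (lo * hi)     # falling edge


def integral(w1: float, w2: float, n_grid: int = 20000) -> float:
    """Midpoint-rule integral of the pdf over its support [0, w1 + w2]."""
    h = (w1 + w2) / n_grid
    return sum(weighted_sum_pdf((k + 0.5) * h, w1, w2) for k in range(n_grid)) * h


# Hypothetical weights: the density should integrate to 1 in both cases.
area_unequal = integral(0.5, 1.0)
area_equal = integral(0.7, 0.7)
```

When the two weights coincide the flat top disappears and the density reduces to the triangular form used for the equal-weight case.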

Considering the addition of n independently weighted noise terms is simpler. We can simply consider a single Gaussian distribution with a modified variance due to various properties of adding normal distributions and multiplying them by constants:

Let

Then

And

From this we can see how to create our new variance.

But all σ i will be equal so we have:

We have now reduced our n input system to a single variable distributed according to f n(s) and a single noise term, defined as above.
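The variance bookkeeping described above follows from two standard properties of independent normal variables. The symbols $n_i$, $N$ and $\sigma_0$ are introduced here for illustration only; the expressions are a reconstruction assumed to match the omitted steps.

```latex
% Independent noise terms, one per input (notation assumed):
n_i \sim \mathcal{N}(0, \sigma_i^2), \qquad i = 1, \dots, n

% Scaling by a constant scales the variance by its square:
w_i\, n_i \sim \mathcal{N}(0,\, w_i^2 \sigma_i^2)

% Variances of independent terms add:
N = \sum_{i=1}^{n} w_i\, n_i \sim \mathcal{N}\!\left(0,\, \sum_{i=1}^{n} w_i^2 \sigma_i^2\right)

% With equal noise intensity on every input, \sigma_i = \sigma_0:
\sigma^2 = \sigma_0^2 \sum_{i=1}^{n} w_i^2
```

For two equal weights this reduces to a variance of $2 w^2 \sigma_0^2$, consistent with the equal-weight case treated earlier.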

Defining σ as stated and defining W as the sum of all weights we end up with a similar description for the full system as before.

| (24) |

This fully describes the accuracy vs. noise intensity curve for n inputs with independent weightings.

Optimal Spoiling

Earlier we discussed the idea of threshold spoiling acting as some sort of mechanism for providing robustness to noise. Now with this in mind we can refer back to (Eq 24) but rather than considering it as a function of σ we take T 2 to be the independent variable and σ to be a parameter.

| (25) |

Plotting this for a given value of noise intensity will show us the optimal spoiling value to achieve the greatest value of accuracy. As shown in Fig 3a we can confirm that for a non-noisy system the optimal value for T 2 is 1 and equal to T 1 as would be expected. Increasing the noise intensity also increases the optimal T 2 (Fig 3a–3d).
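The search for the optimal spoiled threshold at a fixed noise intensity can be sketched as a simple scan over candidate values of T 2. The accuracy function below is the same reconstruction under assumptions used for the equal-weight case (w 1 = w 2 = 0.7 assumed, midpoint quadrature); it is not a transcription of (Eq 25), and all names are hypothetical.

```python
import math
from typing import Sequence


def pdf(x: float, w: float) -> float:
    """Triangular pdf of w * (x1 + x2) for uniform x1, x2 on [0, 1]."""
    if 0.0 <= x <= w:
        return x / w ** 2
    if w < x <= 2.0 * w:
        return (2.0 * w - x) / w ** 2
    return 0.0


def accuracy(t2: float, noise_variance: float, w: float = 0.7,
             t1: float = 1.0, n_grid: int = 2000) -> float:
    """Accuracy as a function of the spoiled threshold t2 at fixed noise."""
    s = w * math.sqrt(2.0 * noise_variance)   # std of the combined weighted noise
    h = 2.0 * w / n_grid
    total = 0.0
    for k in range(n_grid):
        x = (k + 0.5) * h
        if s > 0.0:
            p_below = 0.5 * (1.0 + math.erf((t2 - x) / (s * math.sqrt(2.0))))
        else:
            p_below = 1.0 if x < t2 else 0.0
        total += pdf(x, w) * (p_below if x < t1 else 1.0 - p_below) * h
    return total


def optimal_threshold(noise_variance: float, candidates: Sequence[float]) -> float:
    """Candidate threshold giving the highest accuracy at this noise level."""
    return max(candidates, key=lambda t2: accuracy(t2, noise_variance))


candidates = [0.5 + 0.01 * k for k in range(91)]      # 0.50, 0.51, ..., 1.40
best_clean = optimal_threshold(0.0, candidates)       # no noise
best_noisy = optimal_threshold(0.1, candidates)       # per-input variance 0.1
```

Under these assumptions the scan returns T 2 = 1 for the noise-free system and a larger value once noise is present, in line with the shift of the optimum described above.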

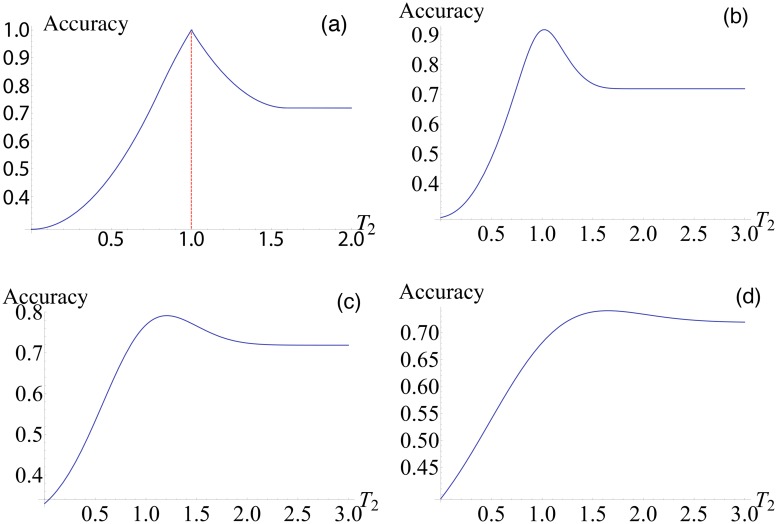

Fig 3. Accuracy vs threshold.

(a) Here the function (Eq 25) is plotted for σ 2 = 0. Examples of increasing values for σ 2 showing an increasing value for optimal T 2, this corroborates the findings of Fig 2d which showed a linear relationship between spoiling and optimal noise. Increase of noise intensity leads to the shift of the optimal threshold to the right, see (b-d). Here b: σ 2 = 0.01, c: 0.1, and d: 0.2.

If the noise is only intrinsic, re-orienting our equation in this way in fact makes more sense as the threshold value in our original model would be much more flexible than the intrinsic intensity of noise in the system. Thermodynamic considerations tell us that to expect an increase in noise in a chemical system we would require either a decrease in cell volume or an increase in temperature. In fact studies have shown [22] that an increase in environmental temperature will cause an evolutionary increase in cell volume in Drosophila melanogaster. Aside from this it seems unlikely that optimising behaviours such as those we are considering would provide significant enough evolutionary pressure on cell size for it to be sensible to consider it a variable in this sense.

In a potential synthetic biology implementation of such a linear perceptron, (Eq 25) could be invaluable for optimizing the system. It allows us to determine how best to skew the threshold parameter in order to compensate for the inescapable effects of noise in the system. Indeed, this could be applied to any application of linear classification attempting to operate in a noisy environment.

In addition to this practical application, it is of interest to explore such systems because designs of this type appear frequently in nature. For example, it has been shown that the process by which bristles in the epithelial cells of Drosophila are organised could be imagined as a perceptron-style system [23]. Delta-Notch signalling allows a cell to analyse signals from numerous incoming filopodia. From this information the cell can decide whether it should differentiate to produce a bristle.

In summary, we have outlined a design by which linear separation can be performed genetically on the concentrations of certain input proteins. In addition, we are able to contextualize this separation through the introduction of external kinases, which allow the system's weightings (and thus its line of separation) to be adjusted within the cellular life rather than evolutionarily. Considering this system in the presence of noise, we observed, demonstrated and quantified the effect of stochastic resonance in linear classification systems with input noise and threshold spoiling. To explain the mechanism of this effect we considered a simple classifying perceptron and showed that analytical results match the numerical simulations. The consideration of linear separation in noisy environments is relevant because of the inherently noisy nature of gene expression in cells, due to both extrinsic and intrinsic factors.

Discussion

Spoiled vs not spoiled perceptron in the presence of noise

Whereas the idea of spoiling the threshold of classification may look somewhat artificial, in the sense that we first corrupt a classification and then restore it, Fig 4a illustrates that for certain noise intensities spoiling is a genuine way in which a biological system could adapt to an unavoidable level of stochasticity. This figure shows that for noise intensity σ 2 > 0.1 the spoiled perceptron classifies more accurately than the unspoiled one. This could lead to the speculation that, since some noise is certainly present in gene expression, classifying genetic networks will evolve towards shifting the classification threshold to compensate for the effect of noise.

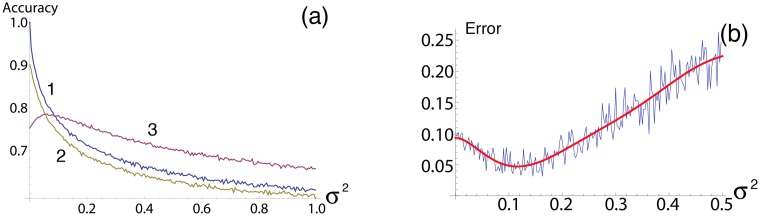

Fig 4. Accuracy and error function vs noise intensity for threshold spoiling and learning resonance.

(a) Accuracy vs. noise intensity for various values of threshold spoiling. Here we see 3 curves for various values of threshold spoiling, T = 0, 0.5, 1.1. The blue line 1 shows the case for zero threshold spoiling, here we see perfect accuracy for no noise, as would be expected but a sharp decrease with the addition of noise. The same remains for spoiling T = 0.5, line 2. The purple line 3 shows a threshold spoiling which exhibited some degree of stochastic resonance, not only do we see a peak at which it exceeds the unspoiled accuracy but we also see it far exceeding the performance of the unspoiled threshold throughout the intensity range examined. Essentially the threshold spoiling has provided some degree of robustness to the noise which is an extremely interesting property in itself. The contrary could also be inferred that the noise is providing some degree of robustness to threshold spoiling by a ‘blurring’ of the lines as seen in Fig 1b. This figure clearly demonstrates that there is a strong relationship between threshold spoiling, input noise and accuracy but how they work together can be highly variable. (b) Graph showing learning resonance for spoiled T = 1.1, w 1 = 0.7 w 2 = 0.7.

Resonance in the Learning Algorithm

The design presented allows for the ‘contextualisation’ of classification, whereby the same network implemented in a different scenario could perform an entirely different separation task depending on the stimuli present. While this allows for a great deal of flexibility, we would like to explore the idea of implementing some kind of perceptron learning in the same vein as the delta learning rule, a simple version of backpropagation learning. In this learning method weights are updated by comparing the perceptron output to some ideal result for the given inputs. Mathematically we consider it as follows. Let w = {w 1, …, w n} denote the desired weight set and denote the learning algorithm's lth iterative attempt at learning the desired weights. Also let be the learning set. Each x j is a set of input values for which we have the desired output given by O(x j). Then we update our weights as follows,

Where

While this is more computationally intensive than simply setting our weights as desired, as in the current implementation, it has a major advantage in the sense that it is results driven. With this kind of learning we are guaranteed that our system will conform to the desired outputs provided in the test sets. When setting weights directly we are not guaranteed to get the results we desire, only that we will have the weights given.

The thinking behind backpropagation learning involves the system performing a comparison between the result which it has arrived at and some ideal result. By considering discrepancies between these two results it can then make adjustments to the process by which it arrived at its result in order to move closer to the ideal result.

This kind of learning requires a large degree of reflexivity on the part of the network, and as such it is difficult to imagine a biological implementation of such a learning technique. Despite this, we feel it is worth considering whilst on the topic of perceptrons, as it is an extremely effective technique. The procedure is simple: for each element j of some training set O, where we have a set of inputs along with their desired output, we should compute the following:

Here α is a learning rate. If we choose α too large then we may overcorrect, adjusting the weights too far in each update; the smaller it is chosen, the longer it will take to arrive at the desired weights.

This improvement to w i is performed for each i and looped through for each entry in the training set. The whole process is then repeated until the error in the system is sufficiently small or a maximum number of iterations is reached. For a training set of N input/outputs we have:

With an error threshold of 0.001, α = 0.01 was found to give suitable learning speeds. In what follows, the unspoiled threshold is T = 1.
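The training loop described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the hard-threshold output function, the mean squared error used as the stopping criterion, the zero initial weights, and all function names are assumptions. Only α = 0.01, the error threshold 0.001, and T = 1 come from the text.

```python
def perceptron_output(weights, inputs, threshold=1.0):
    """Assumed hard-threshold output: 1 if the weighted sum exceeds T, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 if total > threshold else 0.0


def mean_squared_error(weights, training_set, threshold=1.0):
    """Assumed error measure: mean squared output error over the N training pairs."""
    squared = [
        (desired - perceptron_output(weights, inputs, threshold)) ** 2
        for inputs, desired in training_set
    ]
    return sum(squared) / len(training_set)


def train_delta_rule(training_set, n_weights, alpha=0.01, tol=0.001,
                     max_epochs=2000, threshold=1.0):
    """Repeat delta-rule updates until the error is below tol or max_epochs is hit."""
    weights = [0.0] * n_weights  # assumed starting point
    error = mean_squared_error(weights, training_set, threshold)
    epochs = 0
    while error >= tol and epochs < max_epochs:
        for inputs, desired in training_set:
            # Compare the current output with the desired output for this entry.
            delta = desired - perceptron_output(weights, inputs, threshold)
            # Update every weight i in proportion to its own input component.
            weights = [w + alpha * delta * x for w, x in zip(weights, inputs)]
        error = mean_squared_error(weights, training_set, threshold)
        epochs += 1
    return weights, error, epochs
```

A training set is passed as a list of `(inputs, desired_output)` pairs, for example `train_delta_rule([((0.0, 0.0), 0.0), ((2.0, 2.0), 1.0)], n_weights=2)`.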

So far we have examined only the output assignment functionality of the algorithm, but the ideas of stochastic resonance can also be applied to the learning algorithm. As in the previous case, we must spoil the system in some way and then examine a measure of accuracy for increasing noise intensity. This is implemented by spoiling the value of T in F(x), as defined in the previous section, and adding noise to each x_1 and x_2 from the test set before it is fed into F(x). The accuracy is measured by considering the error relative to the actual intended weights. This is done by finding the Euclidean distance of the algorithm's attempts at the weights from the correct values:
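Read literally, for the two weights considered here this distance would take the form below. The symbol E is a label introduced for this sketch; w_1 and w_2 are the intended weights and w_{1,new}, w_{2,new} the learned ones.

```latex
% Euclidean distance between learned and intended weights
% (E is an assumed label, not taken from the source)
E = \sqrt{ \left( w_{1,\mathrm{new}} - w_1 \right)^2 + \left( w_{2,\mathrm{new}} - w_2 \right)^2 }
```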

The algorithm was allowed to run through the test set 2000 times before arriving at its final attempt at the correct weights, w_{1,new} and w_{2,new}. As is clear from Fig 4b, we again find that the system performs optimally under some non-zero amount of noise, shown here by a minimized error (at noise intensity σ² ≈ 0.12) rather than a maximized accuracy rate as before.
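A numerical experiment of this kind could be organised along the following lines. This is a hedged sketch only: the hard-threshold form of F, the Gaussian noise model, the use of clean inputs in the weight update, the zero initial weights, and every name and parameter (including `spoiled_threshold` and `seed`) are assumptions made for illustration, and the sketch is not claimed to reproduce Fig 4b.

```python
import math
import random


def step_output(weights, inputs, threshold):
    """Assumed form of F: 1 if the weighted sum exceeds the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 if total > threshold else 0.0


def learn_with_noise(test_set, sigma2, spoiled_threshold,
                     alpha=0.01, passes=2000, seed=0):
    """Delta-rule learning with a spoiled threshold and noisy inputs to F."""
    rng = random.Random(seed)      # seeded for repeatable runs
    sigma = math.sqrt(sigma2)      # sigma2 is the noise intensity (variance)
    weights = [0.0, 0.0]           # assumed starting point
    for _ in range(passes):
        for (x1, x2), desired in test_set:
            # Noise is added to x1 and x2 before they are fed into F.
            noisy = (x1 + rng.gauss(0.0, sigma), x2 + rng.gauss(0.0, sigma))
            delta = desired - step_output(weights, noisy, spoiled_threshold)
            # Assumption: the update itself uses the clean inputs.
            weights = [w + alpha * delta * x for w, x in zip(weights, (x1, x2))]
    return weights


def weight_error(learned, intended):
    """Euclidean distance between the learned and the intended weights."""
    return math.dist(learned, intended)
```

Sweeping `sigma2` over a range of values and plotting `weight_error` against it would give a curve of the kind discussed above; whether a minimum appears depends on the chosen test set and on how T is spoiled.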

Another simulation featuring independently changed weights shows that the resonance still applies in this case. It would be of interest to perform a more thorough investigation into this effect and to try to describe it analytically if possible. A better understanding of this effect and the circumstances in which it occurs would be of great interest for applications in learning algorithms in general. Of additional interest would be to investigate the possibility of a genetic implementation of learning of this kind. It is not clear whether such an implementation would be possible; if it were, it would undoubtedly be much more complex than our linear classification network. If possible, its construction and simulation would certainly be of great interest.

Finally, the construction of intelligent intracellular gene regulatory networks is a hot topic in synthetic biology (for a review see, e.g., [24]), and here we have shown that unavoidable noise can be used constructively in such designs.

Acknowledgments

We acknowledge support from the Deanship of Scientific Research (DSR), King Abdulaziz University (KAU), Jeddah, under grant No. (86-130-35-RG), CR-UK and Eve Appeal funded project PROMISE-2016, Russian Foundation for Basic Research (14-02-01202, 13-02-00918), and partly by the grant (the agreement of August 27, 2013 N 02.B.49.21.0003 between The Ministry of education and science of the Russian Federation and Lobachevsky State University of Nizhni Novgorod).

Data Availability

All data used in the paper are fully available without restriction. All results of the simulations are displayed in the paper with the corresponding parameter values.

References

- 1. Saigusa T, Tero A, Nakagaki T, Kuramoto Y. Amoebae Anticipate Periodic Events. Physical Review Letters. 2008; 100: 018101. doi:10.1103/PhysRevLett.100.018101

- 2. Hjelmfelt A, Weinberger ED, Ross J. Chemical implementation of neural networks and Turing machines. Proc Natl Acad Sci U S A. 1991; 88: 10983–7. doi:10.1073/pnas.88.24.10983

- 3. Hjelmfelt A, Ross J. Implementation of logic functions and computations by chemical kinetics. Physica D: Nonlinear Phenomena. 1995; 84: 180–193. doi:10.1016/0167-2789(95)00014-U

- 4. Bray D, Lay S. Computer simulated evolution of a network of cell-signaling molecules. Biophys J. 1994; 66: 972–7. doi:10.1016/S0006-3495(94)80878-1

- 5. Gandhi N, Ashkenasy G, Tannenbaum E. Associative learning in biochemical networks. J Theor Biol. 2007; 249: 58–66. doi:10.1016/j.jtbi.2007.07.004

- 6. Fernando CT, Liekens AM, Bingle LE, Beck C, Lenser T, Stekel DJ, et al. Molecular circuits for associative learning in single-celled organisms. Journal of The Royal Society Interface. 2008; 6: 463–469. doi:10.1098/rsif.2008.0344

- 7. Jones B, Stekel DJ, Rowe JE, Fernando CT. Is there a Liquid State Machine in the Bacterium Escherichia Coli? In: Proceedings of IEEE Symposium on Artificial Life. 2007; p. 187–191.

- 8. Qian L, Winfree E, Bruck J. Neural network computation with DNA strand displacement cascades. Nature. 2011; 475: 368–72. doi:10.1038/nature10262

- 9. McAdams HH, Arkin A. It’s a noisy business! Genetic regulation at the nanomolar scale. Trends in Genetics. 1999; 15: 65–69. doi:10.1016/S0168-9525(98)01659-X

- 10. Elowitz MB, Levine AJ, Siggia ED, Swain PS. Stochastic Gene Expression in a Single Cell. Science. 2002; 297: 1183–1186. doi:10.1126/science.1070919

- 11. Gillespie DT. The chemical Langevin equation. The Journal of Chemical Physics. 2000; 113: 297. doi:10.1063/1.481811

- 12. Benzi R, Sutera A, Vulpiani A. The mechanism of stochastic resonance. J Phys A. 1981; 14: L453. doi:10.1088/0305-4470/14/11/006

- 13. Russell DF, Wilkens LA, Moss F. Use of behavioural stochastic resonance by paddle fish for feeding. Nature. 1999; 402: 291–294. doi:10.1038/46279

- 14. Priplata AA, Niemi JB, Harry JD, Lipsitz LA, Collins JJ. Vibrating insoles and balance control in elderly people. The Lancet. 2003; 362: 1123–1124. doi:10.1016/S0140-6736(03)14470-4

- 15. Mori T, Kai S. Noise-induced entrainment and stochastic resonance in human brain waves. Physical Review Letters. 2002; 88: 218101. doi:10.1103/PhysRevLett.88.218101

- 16. Usher M, Feingold M. Stochastic resonance in the speed of memory retrieval. Biological Cybernetics. 2000; 83: L11–6. doi:10.1007/PL00007974

- 17. Bates R, Blyuss O, Zaikin A. Stochastic resonance in an intracellular genetic perceptron. Physical Review E. 2014; 89: 032716. doi:10.1103/PhysRevE.89.032716

- 18. Alberts B. Molecular biology of the cell. New York: Garland Science; 2008.

- 19. Suzuki N, Furusawa C, Kaneko K. Oscillatory Protein Expression Dynamics Endows Stem Cells with Robust Differentiation Potential. PLoS ONE. 2011; 6: e27232. doi:10.1371/journal.pone.0027232

- 20. Weiss JN. The Hill equation revisited: uses and misuses. FASEB J. 1997; 11: 835–41.

- 21. Sadooghi-Alvandi SM, Nematollahi AR, Habibi R. On the distribution of the sum of independent uniform random variables. Statistical Papers. 2007; 50: 171–175. doi:10.1007/s00362-007-0049-4

- 22. Partridge L, Barrie B, Fowler K, French V. Evolution and Development of Body Size and Cell Size in Drosophila melanogaster in Response to Temperature. Evolution. 1994; 48: 1269–1276. doi:10.2307/2410384

- 23. Cohen M, Georgiou M, Stevenson NL, Miodownik M, Baum B. Dynamic Filopodia Transmit Intermittent Delta-Notch Signaling to Drive Pattern Refinement during Lateral Inhibition. Developmental Cell. 2010; 19: 78–89. doi:10.1016/j.devcel.2010.06.006

- 24. Lu TK, Khalil AS, Collins JJ. Next-generation synthetic gene networks. Nat Biotechnol. 2009; 27: 1139–50. doi:10.1038/nbt.1591
