Abstract

We set up a game theoretic framework to analyze a wide range of situations from team sports. A fundamental idea is the concept of potential: the probability of the offense scoring the next goal minus the probability that the next goal is made by the defense. We develop categorical as well as continuous models, and obtain optimal strategies for both offense and defense. A main result is that the optimal defensive strategy is to minimize the maximum potential of all offensive strategies.

Introduction

We consider the subset of team sports in which two opposing teams each have a goal to defend, and the team that scores the most points wins the game. This paper analyzes general situation tactics in such sports, henceforth denoted team sports. Game intelligence in team sports is usually regarded as something very incomprehensible, and excellent players are often praised for how they “read the game”. Even though most would agree on what constitutes good skills (technique, strength, agility, endurance, etc.), it is less obvious what characterizes a good player in terms of game intelligence.

We will make an attempt at analyzing the concept of game intelligence from a game theoretic perspective. To this end, we assume that a player’s overall ability can be categorized into two parts. The first is the ability to decide on a strategy, which is in some sense optimal, in each encountered game situation. The second is the ability to carry out the chosen strategy. The first category is what is typically contained in the concept of game intelligence, while the second has to do with a player’s skill set. The present paper will focus on the first category. Obviously, one can never know which choice would have worked out the best on each particular occasion. However, we show in this paper that we can find strategies which are optimal in the mean. To analyze such situations, we adopt a game theoretic framework. We will model game situations as so-called zero-sum games, i.e. games where the players have exactly opposite rewards. In our setting, a goal made by the offense has value one, which is exactly the negative of the value it has for the defense. Conversely, a goal made by the defense has the value minus one for the offense, which again is exactly the negative of the value it has for the defense. Further, we associate a utility to each player’s choices. We will define the utility function for team sports to be the potential: the probability of the attacking team scoring the next goal minus the probability that the next goal is made by the defending team. Given this setup, we can model a variety of team sport game situations, and solve these using standard game theoretic methods. An implication of our approach is that game intelligence is not something incomprehensible, but rather an acquired skill. Note that our setup is different from the so-called potential games in e.g. [1]. We have chosen to denote the utility function by potential because of its natural interpretation as a scalar potential for a conservative vector field.

The first part of the paper gives the theoretical foundation underlying our analysis, including the concept of potential fields, and derives some results with applications to game intelligence. The following sections focus on game situations where the players make decisions based on a given set of strategies. Here, we apply principles from game theory to determine which decisions are optimal. A main consequence of our problem set up is that the optimal defensive strategy is to make the best offensive choice, in terms of potential, as bad as possible. Further, the optimal strategy for the offense is to distribute shots between the players so that, for every player, a shot should be taken if and only if the potential is larger than a certain threshold. This threshold is the same for all players. It is important to note that the optimal strategy does not guarantee a successful outcome on each occasion. Rather, the optimal strategy for a specific situation gives the best outcome in terms of potential.

There is an extensive literature on the application of game theory to fixed game situations in sports. In [2], the game theoretic analysis of sports as a quantitative field is introduced by testing if professionals use the mixed Nash equilibrium when they decide on which side, forehand or backhand, to land the first serve. They found that men’s pro tennis players did not randomize their serves in an optimal way, but were rather switching the serve direction too often. Later, [3] found evidence of good strategic play among professionals in the same situation. In the same setting, [4] included in their analysis also the types of court surfaces, and found evidence that servers on faster surfaces tend to hit the serve to an opponent’s backhand too often.

In [5], a game theoretic approach yields that there is room for improvement both in pitch selection in Major League Baseball (MLB) and in play calling in the National Football League (NFL). The authors conclude that pitchers throw more fastballs than is optimal, and that football teams pass less than they ought to. Interestingly, they find in both sports a negative serial correlation in the decisions. Further, [2] find the same result in tennis, and [6] report similar findings for the NFL. This indicates that strategies, in some sports, are changed more often than we would see if the strategy choices were truly random. In [6], the authors offer the explanation that teams excessively switch play types in order not to be perceived as predictable. The paper [7] presents the idea that, in the NFL, the observed temporal dependence in strategy choice arises because the offense tries to wear down the defense.

In soccer, [8], [9], [10], and [11] all find that during soccer penalty kicks, both the strikers and the goalkeepers are making choices that are consistent with the strategies of Nash equilibria.

Even though fixed game situations play an important role in sports such as ice-hockey, team handball, basketball and soccer, these sports are primarily built up by in-game activity. However, there does not seem to be much literature on quantitative approaches to in-game activity, or to game intelligence, which is the scope of the present paper. The papers [12] and [13] are slightly related in spirit to the first part of the present paper. In [12], the performance of a basketball offense is modeled as a network problem. The paper [13] investigates when, in terms of shot quality, a basketball team should shoot. Further, [14] estimate a matching law from basketball scoring data, and are able to verify a good fit. Finally, optimal strategies for an underdog are derived in [15]. In soccer, [16] analyze the in-game decision making of shooting towards the far post as opposed to the near post.

This paper is organized as follows. In Section Potential, we introduce the concept of potential, which is of central importance to our analysis. We prove in Section Fundamental results a theorem and a lemma. These will be used extensively throughout the paper. Sections Strategic game situations and Extensive game situations present some standard game theoretic notation and results. Further, we adapt these results to our framework, and give some examples which illustrate the applicability of the theory to game intelligence. In Section Shot potential, we define the shot potential as the probability of scoring from a given position, and show how this can be used in the modeling and analysis of various situations in sports. Section Parameter estimation presents some possible approaches to estimate the model parameters from data. We conclude with a discussion.

Potential

We introduce in this section the concept of potential. This idea is fundamental to our analysis, and it is applied in all game situations that we consider.

There are two teams in each game; team A and team B. We define the stochastic expiration time T to be the time when the next goal is scored or the game ends. Further, we denote by V(T) the stochastic variable that takes the value 1 if team A scores, −1 if team B scores, and 0 if no goal is scored before the game is over.

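Definition 1 The potential, v, is defined by

$$v(t) = \mathbb{E}\left[V(T) \mid \mathcal{F}_t\right] = \mathbb{P}\left(V(T) = 1 \mid \mathcal{F}_t\right) - \mathbb{P}\left(V(T) = -1 \mid \mathcal{F}_t\right),$$

where $\mathcal{F}_t$ denotes the state of the game at time t.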

Hence, the potential is the probability of team A scoring the next goal, minus the probability that the next goal is made by team B. The potential v is a general stochastic process in continuous time which is conditioned on the present states of all players, as well as their respective strategies, skills and tactics. Unfortunately, the potential process v is too complex to model explicitly. Hence, we will in this paper analyze v only for a set of game situations which are frequently recurring in their specific sports. Given such a situation, we will be able to find the optimal player behavior in that particular setting. If the players adopt the optimal behavior, they improve the potential v for all t; higher potential from the perspective of team A, and lower from the point of view of team B. Recall that the potential v considers not just the probability of scoring, but also the effects of losing ball possession.

To illustrate the concept of potential, we consider a few short examples. In a situation where a team A player has possession with no team B players in her path to the goal, the potential is close to one. In contrast, if team A has ball possession, but there are a large number of skilled and well positioned team B players ahead of them, the potential may be close to zero. Further, a missed pass for team A yields a drop in the potential. This drop could be big for particularly bad passes, possibly down to almost −1.

We consider n categorical alternatives at which to pursue shot attempts. The defensive effort, y = (y_1, …, y_n) ∈ [0, 1]^n with ∑_i y_i = 1, is the proportional effort that team B puts on each of the categorical alternatives in order to reduce the potential of the corresponding shot alternatives. In addition, x = (x_1, …, x_n) ∈ [0, 1]^n with ∑_i x_i = 1 is the expected proportion of shot opportunities from each offensive alternative i = 1, …, n. We define the cumulative potential functions F_i, i = 1, …, n, as the integrals of the corresponding potential frequency functions f_i: [0, 1] × [0, 1] → [−1, 1], such that

$$F_i(x, y) = \int_0^x f_i(s, y)\, ds$$

for all x, y ∈ [0, 1]. We now show that the potential frequency function can be understood in terms of the distribution of the quality of the shot opportunities. Consider an offensive alternative i. We assume that under normal game conditions, the shot opportunities Y_i(y) ∈ [−1, 1], given defensive effort y in that shot alternative, are drawn randomly and independently from a known distribution G_{Y_i(y)}. Here, each value of Y_i(y) gives the potential for that shot opportunity. The potential frequency function is defined by

$$f_i(x, y) = G_{Y_i(y)}^{-1}(1 - x),$$

where (⋅)−1 denotes the inverse and x, y ∈ [0, 1]. Hence, the potential frequency function gives the potential in seeking an additional “infinitesimal” proportion of the shot opportunities at the offensive alternative i given expected shot proportion x and defense effort y. We see that, by construction, the potential frequency functions f i are monotonically decreasing in the first argument, respectively. This is natural, since a rational offensive approach is to seek shot attempts at the best shot opportunities first and next consider shot opportunities with smaller potential. Further, we will assume that the potential frequency functions are also monotonically decreasing in the second argument, respectively. This models the rational game characteristic that if more defensive effort is put on shot alternative i, the potential of that alternative is reduced.
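The "best opportunities first" reading of the potential frequency function can be sketched numerically. The following is a minimal illustration, assuming a sample of shot potentials observed for one alternative under a fixed defensive effort; the function names and the uniform sample are hypothetical and not taken from the paper.

```python
import random

def potential_frequency(samples, x):
    """Empirical f_i(x, y) for one alternative at a fixed defensive effort y:
    the potential of the marginal opportunity when the best fraction x of
    the observed opportunities is taken."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    ranked = sorted(samples, reverse=True)   # best opportunities first
    index = min(int(x * len(ranked)), len(ranked) - 1)
    return ranked[index]

def cumulative_potential(samples, x):
    """Empirical F_i(x, y): the integral of f_i over [0, x], approximated by
    adding up the best fraction x of the opportunities."""
    ranked = sorted(samples, reverse=True)
    taken = int(x * len(ranked))
    return sum(ranked[:taken]) / len(ranked)

random.seed(0)
# Hypothetical shot potentials in [-1, 1]; not real match data.
shots = [random.uniform(-0.2, 0.9) for _ in range(1000)]
levels = [potential_frequency(shots, x) for x in (0.1, 0.5, 0.9)]
print(levels)  # non-increasing: marginal shots get worse as x grows
```

Sorting in descending order is what makes the empirical function decreasing in its first argument, mirroring the monotonicity noted above.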

To exemplify, a rational defensive action in response to a high potential categorical offensive alternative i would be to increase the defensive effort y_i, and decrease one or several y_j for j ≠ i, such that f_i(x_i, y_i) is reduced at the expense of an increase in f_j(x_j, y_j).

We illustrate now the concept of potential with two brief examples, one from ice hockey and one from team handball.

When is it a good idea to break the rules in a way which causes a 2 minute penalty in ice-hockey? Assume that the probability that team A scores during a team B penalty is p ∈ (0, 1). Conversely, the probability that team B scores while being one player short is approximately 0. Further, we assume that the potential if team A has scored is 0, as is the potential if no team has scored when the penalty is over. Hence, the potential when the penalty time starts is p. This implies that for it to be a good decision for a team B player to do something which gives a 2 minute penalty, her action needs to prevent a situation which had a potential higher than p.

Consider a wing position in team handball, with index w. The wing player shoots when given possession and a free path up to at least η meters up from the short side line. The potential of shot opportunities when shooting at exactly η meters is f_w(x_w, y_w) under the offensive shot proportions x and defensive efforts y ∈ [0, 1]^n. If the wing player is supposed to increase the proportion of expected shots, x_w, then she needs to take shot opportunities from less than η meters. Further, the additional shots will have a lower potential, since it is harder to score from a wide position than from a central one. In addition, if there is an increased defensive effort put on the wing player, i.e. if y_w increases, then she needs to lower the threshold η in order to maintain the same shot proportion x_w.

Remark 2 Note that it is straightforward to extend the concept of potential to let it account for various penalties or to sports where a goal can have different value in points depending on how it was made. Basketball is one example of such a sport.

Fundamental results

Here we state and prove two results, from which a lot of interesting conclusions are drawn.

Consider an offense categorized into n alternatives. We now state the following theorem, where A C denotes the complement of the set A:

Theorem 3 (Tactical Theorem of Team Sports) Suppose that the potential frequency functions f_i(x, y), i = 1, …, n, for x, y ∈ [0, 1], are continuously differentiable and decreasing in x and y, respectively, and that ∂²F_i/∂y² is non-negative and continuous. Assume that we are given a fixed defensive effort y ∈ [0, 1]^n such that ∑_i y_i = 1. Further, assume that the expected shot proportions x* ∈ [0, 1]^n, subject to ∑_i x_i* = 1, satisfy

$$f_j(x_j^*, y_j) = f_k(x_k^*, y_k) \tag{1}$$

for all j, k in some subset 𝓚o(x*) ⊂ {1, …, n}, with x_i* > 0 if and only if i ∈ 𝓚o(x*), and where f_i(0, y_i) ≤ f_j(x_j*, y_j) for all i ∈ 𝓚o(x*)^C and any j ∈ 𝓚o(x*). Then x* maximizes the potential G defined by

$$G(x, y) = \sum_{i=1}^{n} F_i(x_i, y_i). \tag{2}$$

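Conversely, assume that we are given fixed expected shot proportions x ∈ [0, 1]^n, with ∑_i x_i = 1, and a defense y*, subject to ∑_i y_i* = 1, which satisfies

$$\frac{\partial F_j}{\partial y}(x_j, y_j^*) = \frac{\partial F_k}{\partial y}(x_k, y_k^*)$$

for all j, k in some subset 𝓚d(y*) ⊂ {1, …, n}, with y_i* > 0 if and only if i ∈ 𝓚d(y*). Further,

$$\frac{\partial F_i}{\partial y}(x_i, 0) \geq \frac{\partial F_j}{\partial y}(x_j, y_j^*)$$

for all i ∈ 𝓚d(y*)^C and j ∈ 𝓚d(y*). Then y* minimizes the potential G(x, y). Finally, suppose that we can find a point (x*, y*) for which

$$G(x, y^*) \leq G(x^*, y^*) \leq G(x^*, y)$$

for all feasible x, y, i.e. a point where x* is a maximizer for G when y is fixed at y*, and where y* is a minimizer for G when x is fixed at x*. Then

$$G(x^*, y^*) = \max_x \min_y G(x, y) = \min_y \max_x G(x, y).$$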

Proof. The first part of the proof follows by noting that a point x* which satisfies the conditions stated in the theorem allows us to apply the Karush-Kuhn-Tucker optimization principles, see e.g. [17, Lemma 14.5]. This gives us that x* is a local maximum. Further, G is concave with respect to x, since the f_i are continuously differentiable and decreasing. Hence we have a concave maximization problem, and it follows from [17, Theorem 2.1] that x* is a global maximizer.

The second part of the proof follows completely analogously.

The final conclusion is an immediate consequence of [17, Lemma 14.8].■

Intuitively, it is optimal for the offensive side to distribute shooting proportions such that all shots which are fired from any position have a potential larger than some threshold. This threshold is the same for all offensive alternatives. Hence, if the offense plays optimally, it should be indifferent to which offensive alternative to assign a small additional shot proportion to. In contrast, the defensive side should seek to distribute its effort such that if a small defensive effort was added to any defending alternative i, this would yield the same decrease in F_i.
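The common-threshold rule can be sketched as a nested bisection: for a candidate threshold, each alternative takes every opportunity whose potential is at least the threshold, and the threshold is adjusted until the shares sum to one. This is only an illustrative sketch for a fixed defense; the function names and the three linear potential frequency functions are assumptions made for the example, not part of the paper.

```python
def share_at_threshold(f, t, tol=1e-10):
    """Largest x in [0, 1] with f(x) >= t, for a decreasing function f."""
    if f(0.0) < t:
        return 0.0          # even the best opportunity is below the threshold
    if f(1.0) >= t:
        return 1.0
    lo, hi = 0.0, 1.0       # invariant: f(lo) >= t > f(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) >= t:
            lo = mid
        else:
            hi = mid
    return lo

def optimal_shares(fs, tol=1e-10):
    """Find a common threshold t such that the shares sum to one."""
    lo, hi = -1.0, 1.0      # potentials take values in [-1, 1]
    while hi - lo > tol:
        t = 0.5 * (lo + hi)
        total = sum(share_at_threshold(f, t) for f in fs)
        if total > 1.0:
            lo = t          # too many shots taken: raise the threshold
        else:
            hi = t
    t = 0.5 * (lo + hi)
    return t, [share_at_threshold(f, t) for f in fs]

# Hypothetical potential frequency functions for a fixed defense.
fs = [
    lambda x: 0.6 - 0.4 * x,   # back court
    lambda x: 0.8 - 0.8 * x,   # wing
    lambda x: 0.3 - 0.5 * x,   # pivot
]
threshold, shares = optimal_shares(fs)
print(round(threshold, 4), [round(s, 4) for s in shares])
```

With these assumed functions the threshold comes out at roughly 0.4, the back court and wing alternatives each receive about half of the shots, and the pivot alternative receives none because even its best opportunity lies below the threshold.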

We give now a simple but important lemma which helps us to understand how the defense should optimally position itself. We give the lemma for a single defender, but it is straightforward to extend it to a multi defender setting.

Lemma 4 (Positioning for Indifference) We assume that the offensive side has n alternatives, each associated with a continuous convex potential function v_i(y), i = 1, …, n, of the defensive player’s position, i.e. location and velocity, y = (y_l, y_v). The offense will pursue its best alternative, so the potential of the situation v is defined by

$$v(y) = \max_{i = 1, \ldots, n} v_i(y).$$

For a set 𝓓 := {y : g_i(y) ≥ 0, ∀i = 1, …, m} with concave functions g_i, there exists a global minimizer y* ∈ 𝓓 to v. The point y* is either a minimum to v_i for some i = 1, …, n, in which case v_j(y*) ≤ v_i(y*) for all j ≠ i, or y* satisfies

$$v_j(y^*) = v_k(y^*) \tag{3}$$

for all j, k in some subset 𝓚d ⊂ {1, …, n}, and where v_i(y*) ≤ v_j(y*) for all i ∈ 𝓚d^C and any j ∈ 𝓚d.

Proof. The first conclusion follows from [17, Theorem 2.1]. For the second part, if y* is the global minimizer and y* ≠ arg min_{y ∈ 𝓓} v_i(y) for any i = 1, …, n, then for any i such that v_i(y*) = v(y*) there is a non-zero gradient q_i such that for small λ > 0 we have that y* − λq_i ∈ 𝓓 and v_i(y* − λq_i) < v(y*). Further, since y* is the global minimizer and the potential functions are continuous and convex, there exists a j ≠ i such that v(y*) ≤ v(y* − λq_i) = v_j(y* − λq_i). Hence, since the potential functions are continuous, there exists a j ≠ i such that v_j(y*) = v(y*).

We call an offensive alternative i relevant if v_i(y*) = v(y*). If there is only a single element in the set of relevant alternatives, that option is called the dominating offensive alternative. The lemma above states that, given that there is no dominating offensive alternative, the optimal defensive position is such that the potentials of at least two, possibly several, offensive alternatives are equal.
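For a one-dimensional defensive position, the minimizer of the best offensive alternative can be sketched with a ternary search, which is valid here because a maximum of convex functions is itself convex. The function name, the interval [0, 1] and the two potential functions below are hypothetical choices for illustration only.

```python
def minimize_max(potentials, lo, hi, iters=200):
    """Ternary search for the minimizer of v(y) = max_i v_i(y) on [lo, hi],
    assuming every v_i is convex in the scalar position y."""
    def v(y):
        return max(vi(y) for vi in potentials)
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if v(m1) <= v(m2):
            hi = m2         # the minimizer cannot lie to the right of m2
        else:
            lo = m1         # the minimizer cannot lie to the left of m1
    y_star = 0.5 * (lo + hi)
    return y_star, v(y_star)

# Hypothetical potentials: one alternative gets worse and the other gets
# better as the defender moves from y = 0 to y = 1.
potentials = [
    lambda y: 0.6 - 0.5 * y,
    lambda y: 0.1 + 0.6 * y * y,
]
y_star, v_star = minimize_max(potentials, 0.0, 1.0)
relevant = [i for i, vi in enumerate(potentials)
            if abs(vi(y_star) - v_star) < 1e-6]
print(y_star, v_star, relevant)
```

In this example neither alternative is dominating, so both end up in the relevant set and their potentials coincide at the computed position.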

Remark 5 Note that in the last parts of the game, the teams may apply a different metric than the potential, such as simply maximizing the probability of scoring, irrespective of the resulting potential of the situation. The Positioning for Indifference lemma works regardless of which underlying functions v_i we choose, as long as they satisfy the conditions of the lemma.

Notable examples

In this section, we give some examples of applications of the Tactical Theorem of Team Sports and the Positioning for Indifference lemma. The applications are drawn from ice-hockey and team handball. However, the results above are not at all constrained to these two sports. Rather, the two sports were chosen based on the authors’ personal sporting backgrounds.

Team handball; shot proportions

We will in this example challenge two old “truths” in team handball. These are that an acceptable level of shot efficiency for wing and pivot players is about 80%, while an acceptable corresponding level for backcourt players is about 50%. We have, by the Tactical Theorem of Team Sports, that given a defense y, optimal or not, the optimal expected shot proportions x* are such that their potential frequency functions are equal. Intuitively, the best shot opportunities that the offensive players choose not to pursue all have equal potential.

Recall that the potential is the probability of team A scoring the next goal, minus the probability of team B scoring the next goal. Hence, it is different from the probability of team A scoring. In general, the risk of technical faults for reaching a back court or wing shot is less than for a corresponding pass to the pivot, which is more troublesome to set up.

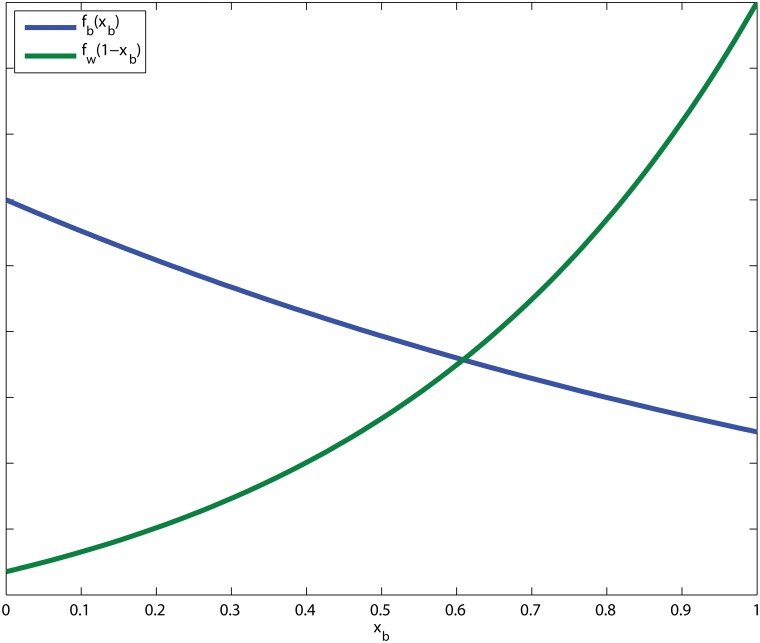

In order to illustrate the consequence of the Tactical Theorem of Team Sports, we give a simplified example of a situation with only two offensive players; a back court player and a wing player. Suppose, given the current defense, that the potential frequency functions for the back court and wing players are given by f b and f w, respectively, see Fig 1.

Fig 1. The potential frequency functions f b(x b) and f w(1 − x b).

We let x b denote the proportion of shots fired by the back court player, and thus x w = 1 − x b is the proportion of shots fired by the wing player. Further, we make the reasonable assumption that the risk of a counter attack from a missed back court attempt is the same as that of a missed wing attempt. As a consequence, the potential is equal to the shooting efficiency minus the same constant for both back court and wing players. By the Tactical Theorem of Team Sports, the optimal proportion of back court player shots is given at the point where the potential frequency functions satisfy f b(x b) = f w(1 − x b). In general, this suggests that the players with high efficiency should most likely be the ones to pursue additional shot opportunities, at the expense of the players with lower efficiency. Hence, the more efficient players should be put in shot positions more often, if that is at all possible without dramatically decreasing their efficiency to levels below those of the less efficient players. The opposite is true for less efficient players, who should take fewer shots and focus on getting their efficiency up. For a team in optimal play, all positions feature equal potential for their best shot opportunity not pursued.
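As a worked illustration of this indifference condition, assume purely hypothetical linear potential frequency functions f_b(x) = 0.5 − 0.3x and f_w(x) = 0.7 − 0.5x. These numbers are chosen for arithmetic convenience and are not estimated from data. Solving f_b(x_b) = f_w(1 − x_b) then gives:

```latex
\begin{aligned}
0.5 - 0.3\,x_b &= 0.7 - 0.5\,(1 - x_b) = 0.2 + 0.5\,x_b \\
0.8\,x_b &= 0.3 \quad\Longrightarrow\quad x_b^* = 0.375, \qquad x_w^* = 1 - x_b^* = 0.625 \\
f_b(x_b^*) &= 0.5 - 0.3 \cdot 0.375 = 0.3875 = 0.7 - 0.5 \cdot 0.625 = f_w(x_w^*)
\end{aligned}
```

Under these assumptions both players cease taking shots at the same potential of about 0.39, and the wing player takes the larger share of the shots.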

It is natural to assume that the potential frequency functions are convex, meaning in essence that it is always easier to find bad shot opportunities than good ones. Under this assumption, the efficiency for all players will be quite close to the potential frequency function threshold at which to cease taking shots. To illustrate this using the setting of the present example, the efficiency for the back court and wing players will be larger than, but close to, f b(x b). But this implies that the “truths” stated in the beginning of the example are not truths at all, but rather signs of a non-optimal team strategy.

The main argument for accepting less efficiency for back court shots is that the defense will start to move up court if expecting additional shots from those positions. This results in more space for the efficient pivot players. However, by the Positioning for Indifference lemma, an optimal defense will only re-position for offensive alternatives that have a potential equal to the relevant alternatives. Hence, shots that are fired from back court which feature a potential below some efficiency threshold should not cause the defense to adjust their positions, but rather be appreciated by the defense as a non-optimal offensive strategy.

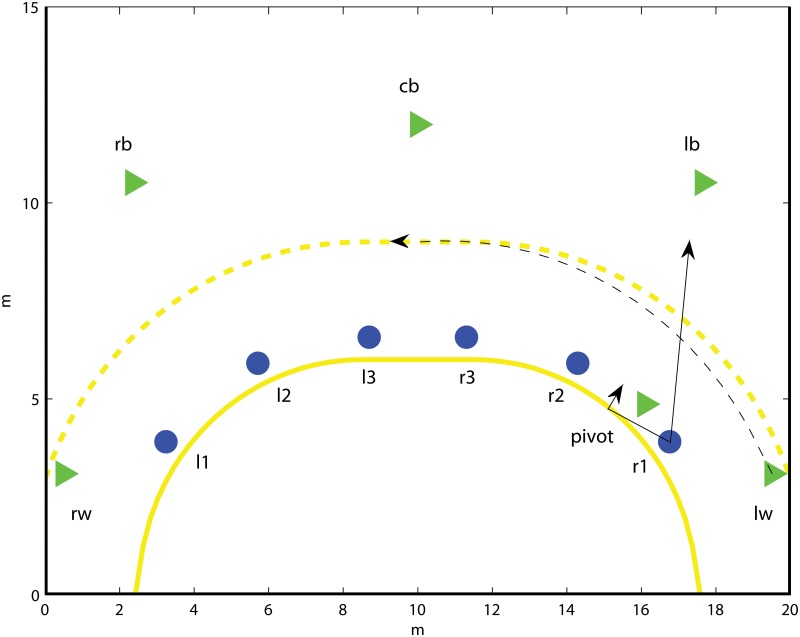

Team handball; wing change over

Consider a wing change over, which is a frequently occurring offensive opening in team handball. In a wing change over, one of team A’s wings repositions, with or without ball possession, to the opposite side of the court. The pivot subsequently screens on the inner side of r1, see Fig 2. We denote the players contributing in offensive play by: left wing (lw), left side back (lb), center back (cb), right side back (rb), right wing (rw) and pivot. The defensive positions are numbered from the side: left 1 (l1), left 2 (l2), left 3 (l3), right 3 (r3), right 2 (r2), right 1 (r1).

Fig 2. Schematic figure of a wing changeover opening in team handball.

The offensive players are denoted by triangles and the defensive players are marked by circles. The dashed line displays the movement path of lw and the solid lines refer to the possible moves for r1.

The r1 defender chooses either to advance up court, marking the lb player, or to battle the pivot in an attempt to get around the screening and remove the goalscoring threat from the screening pivot. In the first case, r2 is responsible for handling the pivot, and these two players are usually of comparable size and strengths. However, in the latter case, it is the role of r1 to take care of the pivot. This is typically a mismatch situation where the big pivot player has a physical advantage over r1. Given r1 stays flat, team A will launch the attack from the lb player. If r1 moves up court, team A decides between launching the attack from the marked lb player, or from a central position. We can model this as follows. We denote by v p the potential of the situation where the pivot is screening r1. The potential of an attack started from the lb is v b(y), where y is the position of r1 as the situation begins. Further, v c(y) is the potential of the situation where the attack is started from a central position, without lb. The potential, v b(y), decreases as r1 approaches lb. However, the farther away r1 is from the remaining part of the defense, the more sparsely they will have to position themselves to cover the whole 6m line. Hence, in this case the potential v c increases.

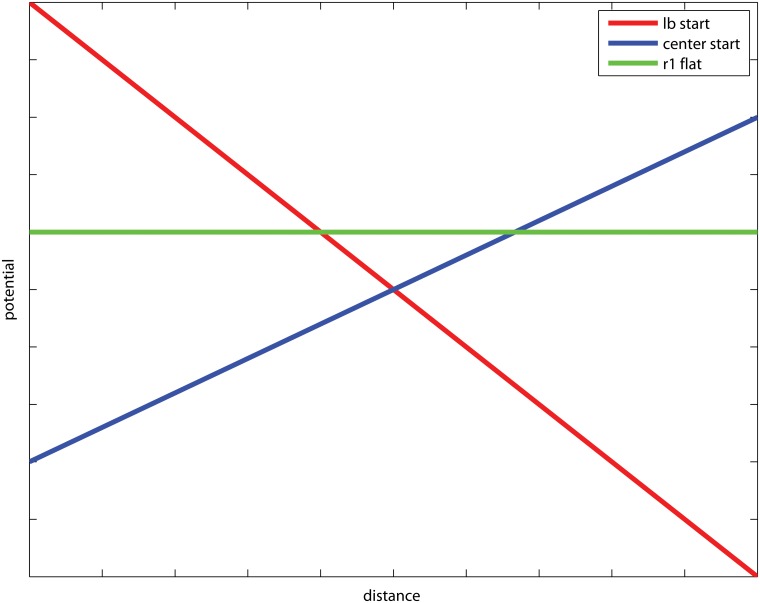

In order to illustrate the optimality principle, we let the potential functions be linear in the distance that r1 lifts from the field line, where v_b(y) decreases and v_c(y) increases with respect to how far up court r1 moves, see Fig 3.

Fig 3. The potential functions for the example Team handball; wing change over.

The potential for a lb start (red) and a center start (blue), respectively, given that r1 moves up court. For reference, the potential of the situation where r1 stays flat (green) is displayed, even though it is not a function of y.

Given that r1 lifts up court and none of the offensive alternatives is dominating, we have by the Positioning for Indifference lemma that the potential of the situation is minimized for y* such that v_b(y*) = v_c(y*). Further, r1 should always lift up court if v_b(y*) < v_p, and stay flat otherwise.
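With linear potential functions, the indifference position and the resulting decision for r1 have a closed form. The sketch below assumes that y is normalized to [0, 1], and the intercepts, slopes and the value of v_p are hypothetical numbers chosen for illustration; none of them come from Fig 3.

```python
def indifference_position(vb0, vb_slope, vc0, vc_slope):
    """Solve v_b(y) = v_c(y) for v_b(y) = vb0 + vb_slope * y (decreasing)
    and v_c(y) = vc0 + vc_slope * y (increasing), clipped to [0, 1]."""
    y = (vb0 - vc0) / (vc_slope - vb_slope)
    return min(max(y, 0.0), 1.0)

def r1_decision(vb0, vb_slope, vc0, vc_slope, vp):
    """Return (decision, position, potential) for the r1 defender."""
    y_star = indifference_position(vb0, vb_slope, vc0, vc_slope)
    # Potential of the best offensive alternative when r1 lifts to y_star.
    v_up = max(vb0 + vb_slope * y_star, vc0 + vc_slope * y_star)
    if v_up < vp:
        return "move up court", y_star, v_up
    return "stay flat", 0.0, vp

# Hypothetical numbers: v_b(y) = 0.5 - 0.4 y, v_c(y) = 0.1 + 0.4 y, v_p = 0.35.
decision, y, v = r1_decision(0.5, -0.4, 0.1, 0.4, vp=0.35)
print(decision, y, v)
```

If the intersection falls outside [0, 1], the clipped endpoint is still the minimizer of the larger of the two lines, which corresponds to the case where one offensive alternative is dominating.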

In reality, dichotomous choices to be flat or move up court are typically set at tactics sessions prior to the game. Here, team tactics are often that the speedy wing defenders should seek to avoid the screening situation associated with staying flat by choosing the alternative to move up court. Further, the offensive side attempts to hide the opening, sending the offensive wing player lw over to its right at the same time as the lb starts the attack. This has the effect that r1 may not be able to reach the optimal position before lb charges. Further, r1 needs to re-categorize the game situation: to decide to move up court but only to a limited stretch ŷ < y*, or to stay flat and challenge the screening set by the pivot. In the particular game situation displayed in Fig 3, the optimal defensive strategy is to stay flat if ŷ yields a potential v_b(ŷ) > v_p.

Note that the vast majority of wing defenders who choose to advance up court, do this all the way up to the side back court players, taking them out completely. This will only be the optimal defensive strategy given that the corresponding side back court player is the dominating alternative.

Ice hockey; one against one

In ice hockey, it is a common situation that a single offensive player with possession faces a single defender. Here, we will draw conclusions from the Positioning for Indifference lemma in a simplified such game situation. The offensive player, A 1, may choose to either shoot or to attempt to dribble past the defender, B 1. We assume that regardless of how possession is lost, either by a missed shot or a failed dribble, the resulting potential will be the same. This is a natural assumption, since in either case there are four players in team A defending the counter attack. An attempt to dribble has a certain probability of failure, resulting in a loss of possession. However, if it succeeds, A 1 has a free path up to the goal. This is a situation with a very high probability of scoring, and thus also high potential.

The defending B 1 player has to choose how to position herself in stopping each of the two offensive alternatives. If B 1 puts much pressure on A 1 early on, by seeking to close the distance between the players, the offensive choice of shooting will be bad, since A 1 is far away from the goal and will have to take a shot under pressure. On the other hand, when B 1 puts high pressure on A 1, the relative speed between the players will be large. This improves the probability of a successful dribble, since B 1 will have less time to intercept the puck before A 1 has passed by. Further, there is much space between the players and the goal for A 1 to use if she chooses this option. Hence, the dribbling alternative will be better the more pressure B 1 puts on A 1. In addition, if B 1 chooses to fall back and maintain a low relative speed versus A 1, the option to dribble will become worse. Simultaneously, the shot alternative will become better since A 1 can come closer to the goal undisturbed.

Given that neither of the two offensive alternatives is dominating, by the Positioning for Indifference lemma B_1 should put pressure on A_1 to the extent that the two options, to shoot or to dribble, yield the same potential. This is analogous to the red and blue lines in Fig 3. We call this position y*. By playing y*, B_1 has minimized the potential of the best choice for A_1, and hence plays optimally. Note that A_1 might still score regardless of what B_1 does. However, when similar situations are played many times, following the effort y* will result in the least number of goals scored by team A.

Strategic game situations

In this section, we will set up a strategic game theoretic framework to model various game situations. Again, there are two teams in the game, team A and team B.

To conduct our analysis we need some definitions and results from game theory, which we present below. These are completely standard, see e.g. [18]. We are considering strategic zero-sum game situations, which are of a one-time choice type. Zero-sum games are games for which the utility of one side is exactly the negative of that of the other side. We will define the team A utility function to be the potential, and hence the team B utility function is the negative potential.

A strategic game situation is a situation where team A and team B each have a choice to make and none of the participants know the opponent’s actions in advance. Hence, the participants make decisions simultaneously and independently, and none of the players have prior information about their counterpart’s choices.

Definition 6 (Strategic zero sum game) A strategic zero sum game is a structure (Π, Γ, v) consisting of the following components:

two sets of actions, Π and Γ, that team A and team B choose from, respectively.

a potential function v: Π × Γ → [−1, 1].

Further, we associate to a strategic game a set of team A strategies, π ∈ 𝓛(Π), on the actions in Π, i.e. a team A strategy for a strategic game is a probability distribution on the set of offensive actions. For a finite set Π with n actions, π = (π_1, π_2, …, π_n) with π_i ≥ 0 and ∑_i π_i = 1. Analogously, team B has a set of defensive strategies, γ ∈ 𝓛(Γ). The potential associated with the strategies (π, γ) is

$$v(\pi, \gamma) = \mathbb{E}\left[v(X, Y)\right],$$

where X has distribution π ∈ 𝓛(Π), Y has distribution γ ∈ 𝓛(Γ), and X and Y are independent. The notation v(π, γ) denotes the potential of the game for the pair of strategies (π, γ).

We will have use of the following definitions.

Definition 7 (Nash equilibrium) For a game situation of strategic type, a pair of strategies (π*, γ*) for team A and team B, respectively, is called a Nash equilibrium if neither side can improve its own utility by single-handedly deviating from the strategy, i.e. if

$$v(\pi, \gamma^*) \leq v(\pi^*, \gamma^*) \leq v(\pi^*, \gamma) \tag{4}$$

for all π ∈ 𝓛(Π) and γ ∈ 𝓛(Γ).

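Definition 8 (max-min strategy) A team A max-min strategy for a strategic game is a strategy π* ∈ 𝓛(Π) that maximizes the function

$$f(\pi) = \min_{\gamma \in \mathcal{L}(\Gamma)} v(\pi, \gamma).$$

The value f(π*) is called the team A safety level. Analogously, a team B max-min strategy for the same game is a strategy γ* ∈ 𝓛(Γ) that maximizes

$$g(\gamma) = \min_{\pi \in \mathcal{L}(\Pi)} \left(-v(\pi, \gamma)\right),$$

i.e. it maximizes the negative of the potential. Hence,

$$g(\gamma^*) = \max_{\gamma} \min_{\pi} \left(-v(\pi, \gamma)\right) = -\min_{\gamma} \max_{\pi} v(\pi, \gamma),$$

so we call γ* a min-max strategy. The value g(γ*) is called the team B safety level.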

It can be shown that, at a Nash equilibrium, the team A safety level coincides with the negative of the team B safety level. We get this and more from the following theorems.

Theorem 9 (Max-Min Theorem)

For each game situation G, there exists a Nash equilibrium.

A strategy vector (π*, γ*) in a game situation G is a Nash equilibrium if and only if π* is a max-min strategy for team A and γ* is a min-max strategy for team B.

The potential in a Nash equilibrium is equal to the safety level of team A, f(π*), and f(π*) = −g(γ*).
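To make the statement of the Max-Min theorem concrete, the following sketch solves a 2×2 zero-sum game in closed form and checks that the team A safety level equals the negative of the team B safety level. It is only an illustration: the function names and the numerical potential matrix are assumptions introduced here and do not come from the paper.

```python
from fractions import Fraction


def solve_2x2(v):
    """Solve a 2x2 zero-sum game. v[i][j] is the potential when team A plays
    action i and team B plays action j. Team A maximizes, team B minimizes."""
    (a, b), (c, d) = v
    # Pure saddle point: an entry that is smallest in its row and largest in its column.
    for i in range(2):
        for j in range(2):
            if v[i][j] == min(v[i]) and v[i][j] == max(v[0][j], v[1][j]):
                pi = [Fraction(int(k == i)) for k in range(2)]
                gamma = [Fraction(int(k == j)) for k in range(2)]
                return pi, gamma, Fraction(v[i][j])
    # Otherwise each team mixes so that the opponent is indifferent.
    denom = Fraction(a - b - c + d)
    x = (d - c) / denom  # probability that team A plays action 0
    y = (d - b) / denom  # probability that team B plays action 0
    return [x, 1 - x], [y, 1 - y], (a * d - b * c) / denom


def safety_level_A(v, pi):
    # f(pi): checking team B's pure actions suffices, since v is linear in gamma.
    return min(sum(pi[i] * v[i][j] for i in range(2)) for j in range(2))


def safety_level_B(v, gamma):
    # g(gamma): the minimum of the negative potential over team A's pure actions.
    return min(-sum(gamma[j] * v[i][j] for j in range(2)) for i in range(2))


# Hypothetical potentials (rows: team A actions, columns: team B actions).
v = [[Fraction(1, 2), Fraction(-1, 2)],
     [Fraction(0), Fraction(1, 4)]]
pi_star, gamma_star, value = solve_2x2(v)
print(pi_star, gamma_star, value)
print(safety_level_A(v, pi_star), safety_level_B(v, gamma_star))
```

Exact fractions are used so that the equality f(π*) = −g(γ*) can be checked without rounding error. For larger action sets one would typically use linear programming instead of the closed form.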

The Max-Min theorem shows that the Nash equilibrium is a stable and satisfactory solution concept for zero-sum games, since each team can determine its optimal strategy without considering the strategy of the other team.

The following theorem is a strategic game analogue of the Positioning for Indifference lemma.

Theorem 10 (Indifference Principle) A game situation with a Nash equilibrium (π*, γ*) and potential has safety level v* if and only if

Definition 11 (deterministic action) An action a ∈ 𝓛(Π) for which a(i) = 1 for some i = 1, …, n is called a deterministic action.

Definition 12 (strictly dominated action) A team A deterministic action a ∈ 𝓛(Π) such that v(a, γ) < v(π, γ) for all team B strategies γ and some team A strategy π, is called a strictly dominated action. Conversely, a deterministic action for team B, d ∈ 𝓛(Γ), is strictly dominated if v(π, d) > v(π, γ) for all team A strategies π and some team B strategy γ.

A consequence of the Indifference Principle is that strictly dominated actions are assigned probability 0 in a Nash equilibrium.

We have now laid out the theoretic framework for the strategic games in our setting.

The Indifference Principle applied to strategic games

We summarize now the results of the previous section in a theorem. The theorem follows directly from our model setting and standard results of game theory. It states that for a given game situation, with utility given by the potential, the optimal strategy for both the offense and the defense is a Nash equilibrium.

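Theorem 13 (Fundamental Principle of Strategic Games in Team Sports) For a strategic game situation with team A strategy π ∈ 𝓛(Π), and team B strategy γ ∈ 𝓛(Γ), the potential is the probability of team A scoring the next goal minus the probability of team B scoring the next goal,

$$v(\pi, \gamma) = P(\text{team A scores next} \mid \pi, \gamma) - P(\text{team B scores next} \mid \pi, \gamma).$$

The Nash equilibrium strategy π* for team A is the strategy that maximizes the minimal potential,

$$\pi^* = \arg\max_{\pi \in \mathcal{L}(\Pi)} \min_{\gamma \in \mathcal{L}(\Gamma)} v(\pi, \gamma).$$

Further, the Nash equilibrium strategy γ* for team B minimizes the maximal potential, such that

$$\gamma^* = \arg\min_{\gamma \in \mathcal{L}(\Gamma)} \max_{\pi \in \mathcal{L}(\Pi)} v(\pi, \gamma).$$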

Proof. The result follows immediately from the Max-Min theorem.■

The strategy γ* guarantees team B the lowest possible potential it can obtain without knowledge of the team A strategy. By the Max-Min theorem, the Nash equilibrium is given by the min-max strategy. Hence, analogously to the Positioning for Indifference lemma, the optimal defensive strategy is to make the best alternative for the offense as bad as possible.

Notable examples

In this section we aim to convey the power of applying the Fundamental Principle of Strategic Games in Team Sports to various game situations in ice hockey.

Here we will break down game situations into categorical strategic games. Recall that a strategic game is a choice situation where the participants make their decisions simultaneously and have no prior information about the opponent’s actions.

Ice hockey; chasing the puck

A frequently occurring situation in ice hockey is that a team A player shoots the puck into her offensive corner, and a player from each team, A 1 and B 1, chases after it. At some point, the two players are faced with a choice to either charge forward in an attempt to win the puck or to hold back and by doing so invite the opposing player to go first into the situation. This can be modeled as a strategic game defined by

$$G_c = \langle \{\text{charge}, \text{hold back}\}, \{\text{charge}, \text{hold back}\}, v \rangle,$$

where v is the potential defined on each pair of offensive and defensive actions. We denote by v A the potential of the situation where A 1 wins the puck when both players charge. Further, v B is the potential when B 1 wins the puck, regardless of the manner by which this happens. Finally, v h is the potential should A 1 charge and B 1 hold back. In this case, B 1 avoids tackling in the first instance, and instead positions herself, in balance, within stick-reaching distance of A 1 and the puck. Obviously, v B < v h < v A, since it is better for team A to have puck possession than to not have it, and since A 1 is in a better position to score if B 1 is not between A 1 and the goal. Additionally, p is the probability for A 1 to win the situation given that both players charge and q is the probability for A 1 to win possession given that both players hold back. The game is illustrated by the matrix given in Table 1.

Table 1. The matrix of the strategic game G c.

| | team B: charge | team B: hold back |
|---|---|---|
| team A: charge | pv A + (1 − p)v B | v h |
| team A: hold back | v B | qv h + (1 − q)v B |

Since the A 1 action to hold back is strictly dominated by the charge alternative, the Indifference Principle yields that the Nash equilibrium contains only the charge action for A 1. Given that

$$v_h < p v_A + (1 - p) v_B,$$

there is a Nash equilibrium at π* = (1, 0), γ* = (0, 1), so the optimal strategy for A 1 is to charge while the optimal B 1 strategy is to hold back. Conversely, given that

$$v_h > p v_A + (1 - p) v_B,$$

there is a Nash equilibrium at π* = (1, 0), γ* = (1, 0) so it is optimal for both players to charge in the situation.

In this game situation, intuition leads us to believe that

since the resulting scoring chance if the offensive player has possession with the defender out of the way is much higher than for any of the alternative outcomes. Further, if both players were to choose to charge, they weigh approximately the same, and they come into the situation with the same speed, then it seems to be approximately a 0.5 probability for B 1 to win the puck possession. Hence, B 1 should hold back in all such situations.
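The reasoning around Table 1 can be sketched in a few lines of code: build the matrix, confirm that holding back is strictly dominated for A 1, and let B 1 pick the column with the smaller potential in the charge row. The numerical values of v A, v B, v h, p and q below are hypothetical and chosen only for illustration; they are not estimates from the paper.

```python
def chase_matrix(v_A, v_B, v_h, p, q):
    """Potential matrix of Table 1.
    Rows: A 1 charges / holds back. Columns: B 1 charges / holds back."""
    return [[p * v_A + (1 - p) * v_B, v_h],
            [v_B, q * v_h + (1 - q) * v_B]]


def equilibrium(v_A, v_B, v_h, p, q):
    """Return (A 1 action, B 1 action, potential) at the equilibrium."""
    m = chase_matrix(v_A, v_B, v_h, p, q)
    # Holding back is strictly dominated for A 1 when v_B < v_h < v_A
    # and 0 < p, q < 1, so only the first row matters.
    if not all(m[0][j] > m[1][j] for j in range(2)):
        raise ValueError("charge does not strictly dominate hold back")
    # B 1 minimizes the potential within the "charge" row.
    b_action = "charge" if m[0][0] < m[0][1] else "hold back"
    return "charge", b_action, min(m[0])


# Hypothetical case 1: a free puck carrier is very dangerous (large v_A).
print(equilibrium(v_A=0.30, v_B=-0.05, v_h=0.05, p=0.5, q=0.5))
# Hypothetical case 2: v_h is close to v_A, so charging costs B 1 little.
print(equilibrium(v_A=0.10, v_B=-0.05, v_h=0.08, p=0.5, q=0.5))
```

In the first case B 1 holds back and in the second B 1 charges, which mirrors the two cases distinguished in the text.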

Ice hockey; pass or dribble

An important choice that players face in many team sports is when to pass and when to dribble. We consider this problem for ice hockey in a strategic game setting. However, the analysis is valid for other sports as well, e g soccer, team handball, and basketball. Consider a situation where team A has puck possession. The team A player A 1 with the puck has two choices, to pass or to dribble. Team B, on the other hand, can choose between putting pressure on A 1 and holding back until team A comes closer to the team B goal. Holding back typically allows team B to defend in a more compact and efficient manner, at the expense of letting team A advance. The game is defined by

$$G_p = \langle \{\text{pass}, \text{dribble}\}, \{\text{press}, \text{hold back}\}, v \rangle,$$

where v is the potential defined on each pair of offensive and defensive actions. The matrix for the game is given in Table 2.

Table 2. The matrix of the strategic game G p.

| | team B: press | team B: hold back |
|---|---|---|
| team A: pass | pv Ap + (1 − p)v Bp | v ph |
| team A: dribble | qv Ad + (1 − q)v Bd | v dh |

Now, we assume the typical situation that A 1 has sought protection behind her own goal, and is now advancing with the rest of team A ahead of her. We assume further that v ph and v dh are such that it is optimal for team B to put pressure on A 1. In addition, we assume that p > q, which is natural since it is most often considerably easier to succeed with a pass than with a dribble. Finally, we set v Bp > v Bd, i.e. the situation resulting from an intercepted pass, v Bp, is better for team A than the one resulting from a failed dribble, v Bd. Obviously, we also have v Ap > v Bp and v Ad > v Bd. Given the present situation, v Ap ≈ v Ad, since not much is won from a successful dribble early on in an attack. If anything, one might suspect that v Ap ≥ v Ad, as it takes a few seconds to complete a dribble, during which team B has the time to adjust its positions and put increasing pressure on the rest of team A. This implies that it would never be optimal to dribble, since pv Ap + (1 − p)v Bp > qv Ad + (1 − q)v Bd. In fact, we need v Ap ≪ v Ad for the decision to dribble to be the best choice. Note also that this simple analysis suggests that, given that team B puts pressure on A 1, she maximizes her potential by maximizing the probability of a successful pass. Since a successful pass depends not only on the passer, but also on the receiver and the opponents, the only way to reach a high pass success rate p is to pass early. The reason is of course that team B will then have little chance to intercept the pass or to force A 1 to dribble.
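As a rough numerical illustration of how much larger v Ad must be than v Ap before dribbling pays off, the sketch below evaluates the press column of Table 2 and solves the inequality qv Ad + (1 − q)v Bd ≥ pv Ap + (1 − p)v Bp for v Ad. All parameter values are hypothetical assumptions made for this example, as are the function names.

```python
def press_potentials(p, q, v_Ap, v_Bp, v_Ad, v_Bd):
    """Potentials of pass and dribble when team B presses (Table 2, first column)."""
    pass_potential = p * v_Ap + (1 - p) * v_Bp
    dribble_potential = q * v_Ad + (1 - q) * v_Bd
    return pass_potential, dribble_potential


def dribble_threshold(p, q, v_Ap, v_Bp, v_Bd):
    """Smallest v_Ad for which dribbling is at least as good as passing."""
    pass_potential = p * v_Ap + (1 - p) * v_Bp
    return (pass_potential - (1 - q) * v_Bd) / q


# Hypothetical values: passes succeed more often than dribbles (p > q),
# and a failed dribble is worse than an intercepted pass (v_Bp > v_Bd).
p, q = 0.8, 0.4
v_Ap, v_Bp, v_Bd = 0.10, -0.05, -0.15

print(press_potentials(p, q, v_Ap, v_Bp, v_Ad=0.10, v_Bd=v_Bd))
print(dribble_threshold(p, q, v_Ap, v_Bp, v_Bd))
```

With these assumed numbers, a successful dribble would have to be worth roughly four times as much as a successful pass before dribbling becomes the better choice.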

Extensive game situations

More elaborate sport situations, where the offense and defense make sequential choices, can be modeled with so-called extensive games. We need to introduce some additional game theoretic notation for this setting. Again, these are standard, see e g [18].

Definition 14 (Game tree) A game tree 〈P, p0, f〉 is a structure consisting of the following components:

a non-empty set P, the elements of P are called positions;

an element p0 ∈ P, the game starting position;

a function f:P → 𝓟(P) from the set of positions to the power set of P i.e. all subsets of P.

The positions in f(p) are called direct followers to p. Furthermore, f has the property that for each position p ≠ p0 there is a unique sequence p0, p1, …, pn with p0 the starting position, pn = p, and pk+1 a direct follower to pk for k = 0, 1, …, n − 1. Any position q which can be reached from p is called a follower to p. We call the pair (p, q), consisting of a position and any of its direct followers, a move. The set of ending positions, i.e. positions with no followers, is denoted Pe and positions in Pi = P\Pe are called inner positions.

Definition 15 (Extensive game) An extensive game is a structure G = ⟨T, t, μ, v⟩ consisting of the following components:

a game tree T = 〈P, p0, f〉,

a function t:Pi → {a, b, c} which determines the turn order, i.e. whether the choice in a position is made by team A, by team B, or by a random outcome.

probability distributions μp over the direct followers of p for all positions such that t(p) = c.

the potential, v, in the ending positions of the game tree.

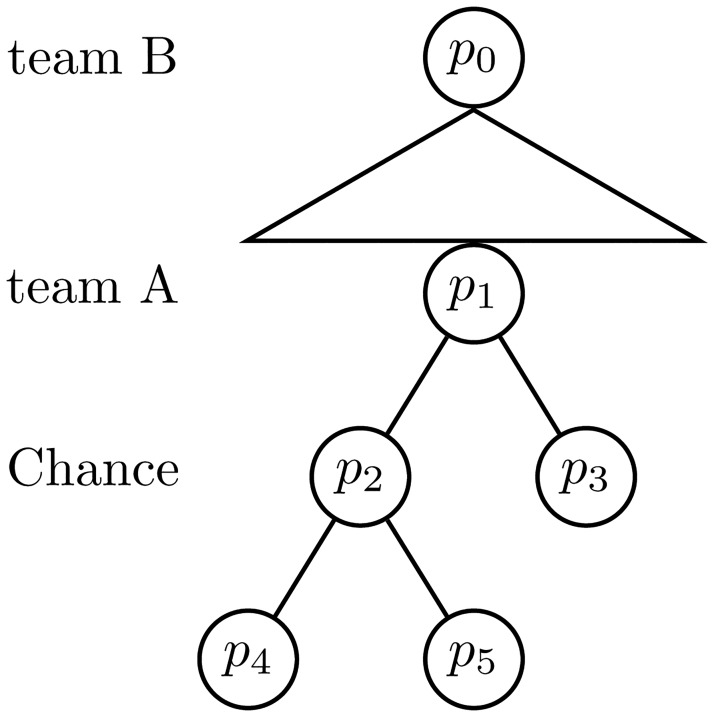

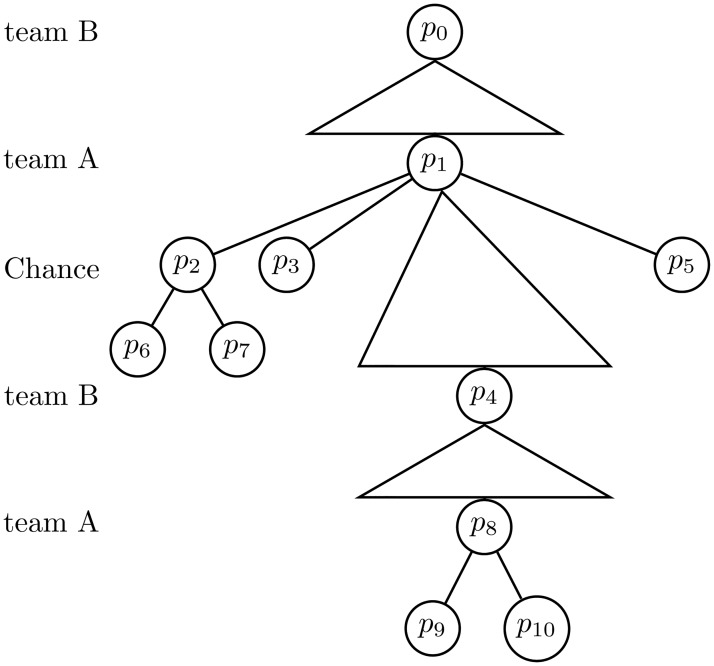

Extensive games may be illustrated in game trees where the turn order is displayed. Here, direct followers are displayed by connections between the nodes, and triangles denote actions in continuum (such as choice of position or velocity). Further, lines denote categorical actions, such as to shoot or to pass, see Fig 4.

Fig 4. A game tree.

An extensive game where p 0 is the starting position, and p 0 (team B), p 1 (team A) and p 2 (chance) are inner positions. Here, team B has a decision in continuum while the team A and chance moves are categorical.

Definition 16 (Subgame) For p ∈ P, the restriction of a game G starting in p is called a subgame Gp of G.

Definition 17 (Team positions) The positions p ∈ Pi such that t(p) = a are called team A positions and are denoted Π. Analogously, the positions in Γ = {p ∈ Pi:t(p) = b} are called team B positions, and those in Λ = {p ∈ Pi:t(p) = c} are called chance positions.

Definition 18 (Strategy) A team A strategy, σ A:Π ↦ 𝓛(P) in a game situation on extensive form is a function with the property that σA(p) assigns probabilities to all direct followers q ∈ f(p), for every team A position p. I.e. σA gives the probabilistic decision path for team A, for all its possible positions. Analogously, a team B strategy, σB, defines the corresponding probabilistic decision path of team B.

We will denote by 𝓛(Π) and 𝓛(Γ) the set of all team A and team B strategies, respectively. The potential of the game, given strategies π and γ, is set to be

$$v(\pi, \gamma) = \mathbb{E}\left[v(X, Y)\right],$$

where X has distribution π ∈ 𝓛(Π) and Y has distribution γ ∈ 𝓛(Γ).

Definition 19 (Nash equilibrium for extensive game situation) For an extensive game G, strategy vectors (π*, γ*) such that π* ∈ 𝓛(Π) and γ* ∈ 𝓛(Γ) are called a Nash equilibrium if

$$v(\pi, \gamma^*) \le v(\pi^*, \gamma^*) \le v(\pi^*, \gamma)$$

for all strategies π ∈ 𝓛(Π) and γ ∈ 𝓛(Γ). Further, if the restriction of (π*, γ*) to every subgame is a Nash equilibrium in that game, it is called a subgame perfect Nash equilibrium.

The Indifference Principle applied to extensive games

We summarize here the results of the previous section in a theorem, which is an immediate consequence of our game theoretic model. The theorem states that for a given game situation, with utility given by the potential, the optimal strategy for both the offense and the defense is a subgame perfect Nash equilibrium.

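Theorem 20 (Fundamental Principle of Extensive Games in Team Sports) For an extensive game situation the subgame perfect Nash equilibrium strategy π* for team A is the strategy that maximizes the minimal potential,

$$\pi^* = \arg\max_{\pi \in \mathcal{L}(\Pi)} \min_{\gamma \in \mathcal{L}(\Gamma)} v(\pi, \gamma),$$

for every subgame Gp. Further, the subgame perfect Nash equilibrium strategy γ* for team B minimizes the maximal potential such that

$$\gamma^* = \arg\min_{\gamma \in \mathcal{L}(\Gamma)} \max_{\pi \in \mathcal{L}(\Pi)} v(\pi, \gamma),$$

for every subgame Gp.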

Proof. The result follows immediately from the definition of subgame perfect Nash equilibrium.■
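For a finite game tree in which every position is observed by both teams, a subgame perfect equilibrium can be computed by backward induction: team A positions take the maximum over direct followers, team B positions take the minimum, and chance positions take the expectation. The sketch below is a minimal illustration under those assumptions. The dictionary layout, the helper names, and all numerical potentials are hypothetical, and team B's choice in continuum is replaced by two discrete alternatives.

```python
def subgame_potential(node):
    """Potential of the subgame starting in `node`, by backward induction."""
    turn = node["turn"]
    if turn == "end":
        return node["potential"]
    values = [subgame_potential(child) for child in node["followers"]]
    if turn == "a":  # team A maximizes the potential
        return max(values)
    if turn == "b":  # team B minimizes the potential
        return min(values)
    if turn == "c":  # chance position: expectation under mu_p
        return sum(prob * val for prob, val in zip(node["probs"], values))
    raise ValueError(f"unknown turn label: {turn!r}")


def end(potential):
    return {"turn": "end", "potential": potential}


def dribble(p_success):
    # Chance position: successful dribble or lost possession (hypothetical values).
    return {"turn": "c", "probs": [p_success, 1 - p_success],
            "followers": [end(0.30), end(-0.05)]}


def attacker(shot_potential, p_success):
    # Team A position: shoot immediately or attempt a dribble.
    return {"turn": "a", "followers": [end(shot_potential), dribble(p_success)]}


# Team B first chooses between two (discretized) defensive positions.
tree = {"turn": "b", "followers": [attacker(0.02, 0.4), attacker(0.08, 0.2)]}
print(subgame_potential(tree))
```

In this toy tree team B prefers the second position, where the attacker's best alternative (the shot) is worth less than her best alternative (the dribble) against the first position.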

Completely analogously to the strategic game setting, the strategy γ* guarantees that team B gets the lowest possible potential it can achieve without knowing anything about the team A strategy. Hence, the result above extends the Positioning for Indifference lemma such that for sequential moves, the optimal defensive strategy is to make the best alternative for the offense as bad as possible.

Notable examples

We analyze now a few examples where each team can make sequential choices.

Ice hockey; two against one

In ice hockey, the game situation where two team A players A 1 and A 2 face a single team B defender B 1 occurs often. We assume that A 1 has initial puck possession. Due to the blue line offside, the situation starts as a one against one situation where B 1 positions herself with the move (p 0, p 1) to prevent the attack. Next, A 1 can choose any of the following four moves: to dribble (p 1, p 2); to shoot (p 1, p 3); to avoid B 1, (p 1, p 4); and to pass to A 2, (p 1, p 5). If A 1 decides to dribble then she is either successful, (p 2, p 6), or loses possession, (p 2, p 7). Further, if A 1 shoots, she ends up in p 3, an ending position. If A 1 makes the move to avoid B 1, (p 1, p 4), then B 1 re-positions with the move (p 4, p 8). The subgame situation is ended by a shot from A 1, (p 8, p 9), or with a pass to A 2 for her to shoot a one timer, (p 8, p 10). Finally, if A 1 passes to A 2, (p 1, p 5), we are in a new two against one situation, but where A 2 has puck possession. The corresponding game is illustrated in Fig 5, and a description of the positions is given in Table 3.

Fig 5. The extensive game tree in the example Ice hockey; two against one.

Table 3. Description of positions for the example Ice hockey; two against one.

| Positions | Description |
|---|---|
| p 0 | team B position, chooses a position in continuum. |
| p 1 | team A position, chooses categorically to dribble, to shoot, to pass, or to take a new position to avoid confrontation. |
| p 2 | chance position, dribble move. |
| p 3 | ending position, shot by A 1. |
| p 4 | team B position, chooses a position in continuum. |
| p 5 | pass to A 2, the game starts over in p 0 with new positions. |
| p 6 | ending position, successful dribble. |
| p 7 | ending position, failed dribble. |
| p 8 | team A position, chooses categorically to shoot or to pass. |
| p 9 | ending position, shot by A 1. |
| p 10 | ending position, shot by A 2. |

Note that there is a certain amount of subjectivity regarding how many nodes to include, and which ones.

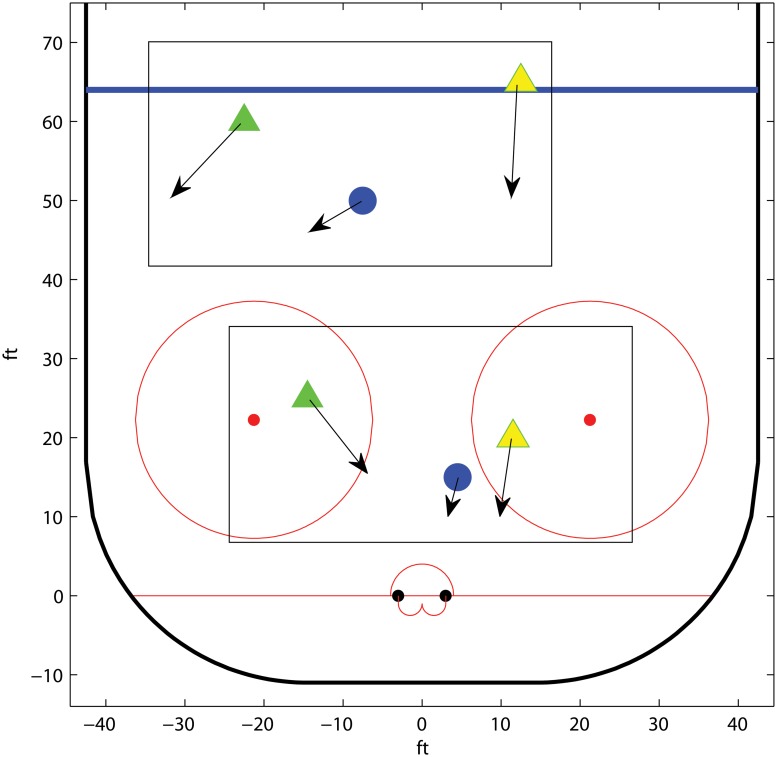

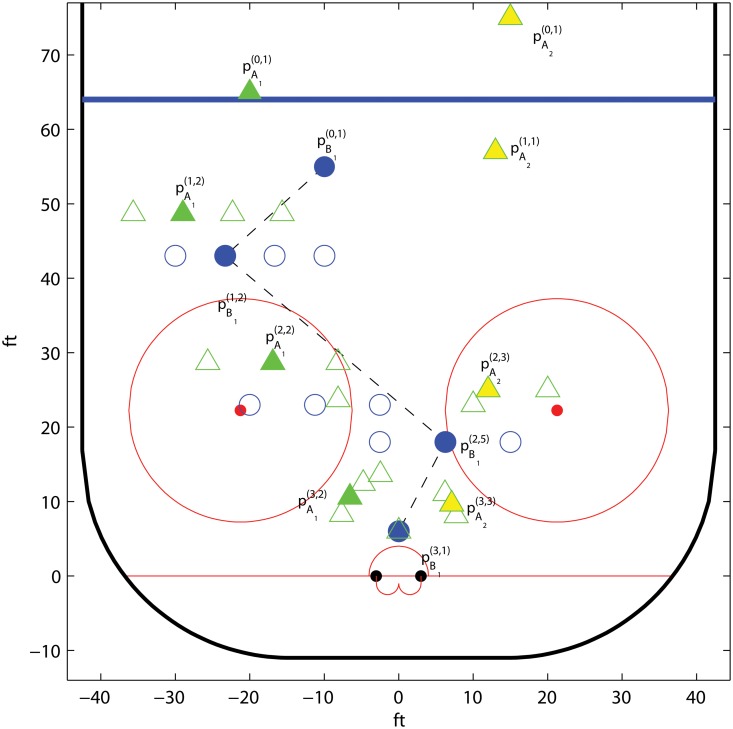

As in the one against one example, we assume that regardless of how possession is lost, the resulting potential will be the same. Note that if the subgames starting in positions p 4 and p 5 are dominated by the subgames starting in the alternatives p 2 and p 3, the present game will be played identically to the one against one example. However, in reality the opposite is true; A 1 knows that team A benefits considerably from being two against one, rather than one against one, so a rational A 1 will avoid the moves (p 1, p 2) and (p 1, p 3) in the first instance. The player B 1 knows this, too, and can therefore put more pressure on A 1 in order to force A 1 towards the side of the rink, to a position with smaller potential. The defender B 1 can do this to the extent that a pass back to A 2 will yield an equally good potential. Hence, to pass and to avoid are the relevant alternatives for A 1 in node p 1. The potential of the ending situation is strongly dependent on the position of A 1 in node p 4. The subgame started at p 4 will have smaller potential the further out to the side A 1 has been forced by B 1. Next, due to the limited mobility of the goalkeeper, the position p 10, reached by a pass to A 2, will have a very high potential. Conversely, the goalkeeper can save a large proportion of the shots coming from A 1, because she has a clear view and can attain good positioning. Hence, since it is easier for B 1 to intercept a pass if she is near A 2, the optimal position for B 1 will be close to A 2, focusing primarily on preventing the pass from A 1 from being completed. This is done to the extent that the potentials of the two alternatives are equal, by the Positioning for Indifference lemma. Consequently, the defender B 1 should always remain focused on A 2 until A 1 is close enough to the goal so that B 1 can put pressure on both A 1 and A 2 simultaneously. It follows that the optimal team B defender trajectory will be shaped like an S, see Fig 6.

Fig 6. Outline of the optimal trajectories in the example Ice hockey; two against one.

The offensive players are denoted by triangles, where a solid triangle marks the possession holder, and the defender is denoted by a circle. In the upper rectangle, B 1 puts pressure on the puck holder A 1, who avoids confrontation to await A 2. In the lower rectangle, having steered A 1 into a worse position, B 1 shifts focus to A 2, in order to decrease the greater threat posed by that player.

The player B 1 starts by putting aggressive pressure on A 1 (more so than if A 1 were to come alone in a one against one in the same position) to force her to the side. She then withdraws to devote her main attention to preventing a pass to A 2. Finally, B 1 comes back to a position in front of her goalkeeper. From here, B 1 can both intercept a pass to A 2 and stop A 1 from advancing closer to the goal with the puck at the same time.

Rationalizable beliefs

We indicate now a direction in which it is natural to expand the framework that we have developed so far.

We know that players deviating from the Nash equilibrium will invite the counterpart to improve the potential. For example, if team B knows that team A will follow a certain strategy π, then team B can lower the potential of the game situation by choosing the defense γ that is optimal against π. Hence, it is distinctly good to have the ability to choose “late” in decision situations, which is a skill that professional players practice in many sports.
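The cost of being predictable can be illustrated with a small computation: if team B knows team A's mixed strategy, it simply picks the column with the lowest expected potential. The sketch below compares a max-min strategy with a predictable deviation. The potential matrix and both strategies are hypothetical values chosen for the example.

```python
from fractions import Fraction


def potential_against_best_reply(v, pi):
    """Potential when team B knows team A's mixed strategy pi and replies optimally."""
    n_rows, n_cols = len(v), len(v[0])
    return min(sum(pi[i] * v[i][j] for i in range(n_rows)) for j in range(n_cols))


# Hypothetical potentials (rows: team A actions, columns: team B actions).
v = [[Fraction(1, 2), Fraction(-1, 2)],
     [Fraction(0), Fraction(1, 4)]]

max_min = [Fraction(1, 5), Fraction(4, 5)]      # makes team B indifferent
predictable = [Fraction(1, 2), Fraction(1, 2)]  # a known deviation

print(potential_against_best_reply(v, max_min))
print(potential_against_best_reply(v, predictable))
```

With these assumed numbers the max-min strategy secures a potential of 1/10, while the predictable deviation lets team B push the potential down to −1/8.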

To illustrate further, consider the ice hockey example of one against one. If the defender knows that the offensive player is reluctant to dribble, then she can put more pressure early on, in order to steer the offensive player further away and to the sides. This makes the potential of the game smaller than if team A played the optimal max-min strategy.

As an example from team handball, we revisit the “truth” that the wing players should have a much higher efficiency than the back court players. We argued earlier that this is likely to be a suboptimal strategy. If we assume that team A takes too few shots from some positions, then team B can focus more effort on making the remaining positions even worse, with the effect that the players who take too large a proportion of the shots will have trouble maintaining their shot efficiency.

Shot potential

We introduce in this section the concept of a shot potential, which is related to the potential. The shot potential fields can be used to make numerical analysis of various game situations.

The present paper is to a large extent based on the concept of potential i.e. the probability of team A scoring next minus the probability of team B scoring next. However, in many situations the potential will be approximately equal to the probability of team A scoring, see e.g. the 2 against 1 example in Section 1. Thus the potential depends only on the probability of scoring, given the chosen strategies for each side. We make the following definitions.

Definition 21 (Shot potential) The shot potential for A1 is the probability that A1 scores with an instant shot from her present position, given that the resulting situation if the shot is missed has potential 0.

Definition 22 We refer to the level curves of the shot potential as isolines.

Note that the isolines give sets of points for which the shot potential is equal.

Notable examples

Here we will use the shot potential to analyze several situations in ice hockey in a dynamic setting.

Ice hockey; eccentric isolines

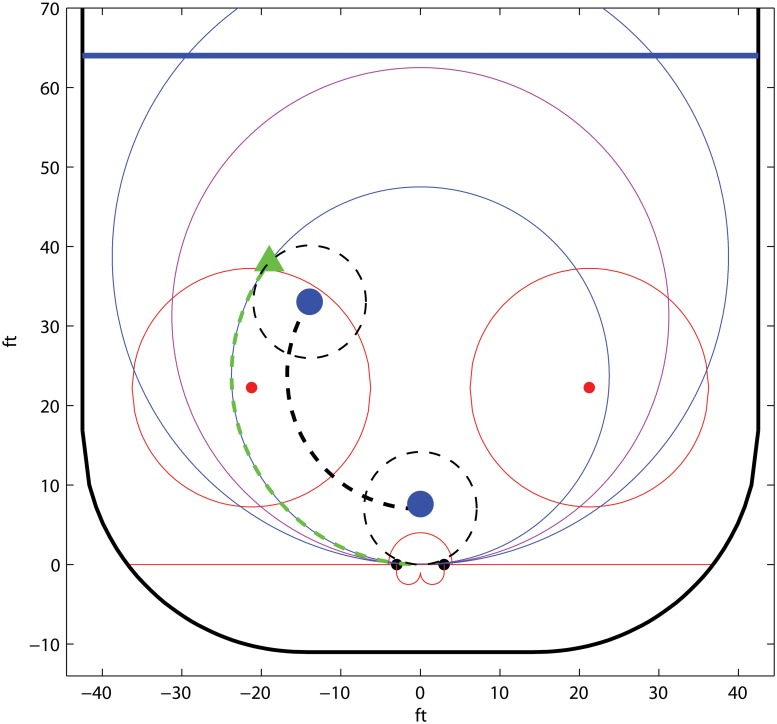

Consider a one against one situation in ice hockey. We assume that the team A attacker A 1 is skating towards the goal with speed v A. The attacker A 1 can not shoot if she is closer than r to the team B defender B 1, since B 1 can then interfere with the shot. Further, if the attacker A 1 decides to try to skate past B 1 outside her reach, and the isoline which she is presently skating along is circular with radius r A, what is the necessary speed v B of B 1 that can guarantee that A 1 will not be able to obtain a higher shot potential before reaching the goal? We see immediately that v B must satisfy

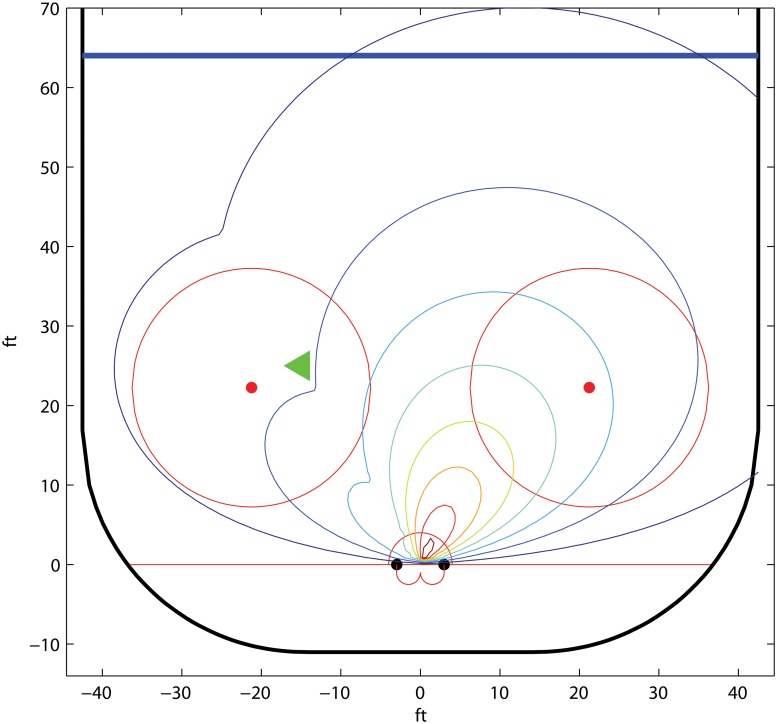

so that . If B 1 can keep this critical speed, then she should attempt to hold A 1 at the present isoline. If not, B 1 should fall back and hold an isoline associated with a larger shot potential, see Fig 7.

Fig 7. The optimal B 1 strategy for the example Ice hockey; eccentric isolines.

The offensive starting position is denoted by a triangle and the defensive positions are denoted by circles. Here B 1 has critical speed and the present isoline is circular.

Remark 23 Note that the team B strategy in the example above minimizes the maximal shot potential during the course of the situation.

Ice hockey; parametric isolines

Besides the skills of the shooter, the probability of scoring with a shot in any team sport depends on at least two factors: the distance to the goal and the firing angle. Obviously, the chance of scoring improves the closer to the goal, and the more central the position, from which the shot is fired.

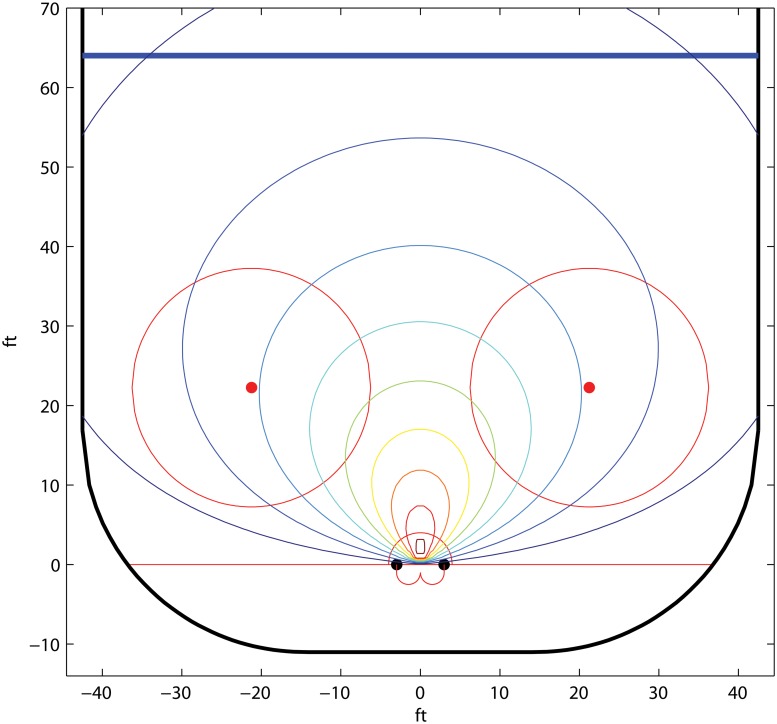

We derive here two simple parametric models of the shot potential. The first one is for a team A puck holder. The second is for another team A player who does not have puck possession and who shoots instantly when she gets the puck, a so-called one timer. We define the shot potential model for the puck holder as

and the shot potential for the player who shoots a one timer as

Here x i, i = 1, 2, are the locations for A i, (r i, θ i) is the polar representation of x i around the y-axis for a coordinate system with its origin in the middle of the goal, λ, α, β > 0, and α + βπ ≤ 1. The players’ shot potentials are illustrated in Figs 8 and 9.

Fig 8. The isolines of the shot potential of A 1 in the example Ice hockey; parametric isolines.

The parameter values λ = 0.03, α = 0.2.

Fig 9. The isolines of the shot potential of A 2, given that the pass comes from A 1 in the example Ice hockey; parametric isolines.

The assisting player A 1 is positioned at the green triangle and the parameter values are λ = 0.03, α = β = 0.2.

The motivation for the second model is that a one timer may have a considerably larger shot potential than if that shot would have been fired from a player who has had puck possession for some time. The reason is that, in the one timer case, the goalkeeper needs to make a sudden shift of position, while for a direct shot, the goalkeeper is already well positioned to save the puck as the shot is fired.

Ice hockey; optimal trajectories

Here we use the parametric shot potential model defined in Section Ice hockey; parametric isolines to derive the optimal trajectories for a simple example of a two player offense facing a single defender. All trajectories are given on a discretized grid. Recall that the game tree of this setting is given in Fig 5. We assume that the A 1 move to dribble, (p 1, p 2), is dominated by the other alternatives. The player B 1 makes the first move.

The positions for the participating players are denoted by , where X denotes the player, i is the level starting from the top, and j gives the node in that level counted from the left. We assume that B 1 is the center point of a ball with radius r and that the offensive players keep at least that distance to B 1 at all nodes. If B 1 is close to the forward who is receiving a pass, then B 1 intercepts that pass with probability q c ∈ [0, 1]. Otherwise, B 1 intercepts passes with probability q p ∈ [0, 1], where q c > q p. The potential of a shot fired by a puck possession holder X is given by . Similarly, the shot potential of a one timer from Y following a pass from player X is .

The time dynamic locations for the players may be illustrated in game trees. These trees describe the trajectories for each player, and depend on the moves of B 1. The direct followers to the inner positions of the game tree for each player are listed in Table 4. The ending positions are given in Table 5.

Table 4. The direct followers to inner positions, with location given by , for a player X.

| Inner positions | Direct followers |

|---|---|

Table 5. The ending positions for each player’s game tree.

| Player | Ending positions |
|---|---|
| B 1 | |
| A 1 | |
| A 2 | |

Note that since it is easier for B 1 to intercept a pass if she is close to the pass receiving forward, the optimal ending position for B 1 must always be such that she puts pressure on both offensive players simultaneously. For this reason, this ending position is the only one we will include for B 1 on the final level.

The grid for a specific example is given in Fig 10, where the filled circles and triangles indicate the optimal strategies. The trajectory of A 1 depends on how much pressure B 1 puts at level 1. Similarly, the trajectory of A 2 is determined by the position of B 1 at level 2. Note that the optimal strategy is S shaped, which is in line with the reasoning in Section Ice hockey; two against one.

Fig 10. Example of a grid and the corresponding optimal trajectories for A 1, A 2, and B 1, in the example Ice hockey; two against one.

The offensive players are denoted by triangles, where a solid triangle marks the possession holder, and the defender is denoted by a circle. We use the shot potential model in Section Ice hockey; parametric isolines with parameters α = β = 0.2, λ = 0.03, q p = 0.1, and q c = 0.5.

Remark 24 We have chosen a sparse grid for simplicity of exposition. However, it is straightforward to consider denser ones, which would allow for more realistic modeling. Further, our results in the example are robust to variations in how the nodes are positioned.

Parameter estimation

We have in this paper derived game theoretic models which are general enough to cover a wide range of game situations in several team sports. The applicability of the theory will depend, among other things, on how well we can statistically estimate the model parameters. To this end, we will in this section briefly describe some possible approaches to parameter estimation.

Statistical analysis of game data

We have in previous sections analyzed a number of game situations which are frequently occurring in their respective sport. By suitably categorizing entire games into a number of such situations, what choices the players made, and the outcome, it is in principle straightforward to obtain estimates of the model parameters for those particular situations. By doing this, one can conclude whether teams as a whole, or even specific players, appear to play in a non-minimax optimal way.

One against one

Previously in this paper, we have addressed this game situation in an ice hockey setting. However, it is very common in many other sports as well, including basketball, soccer, and team handball. Given a specific sport, we assume that we have observed a number of games for team B. In these games, we have n occasions which we have judged to be one against one situations. Further, we categorize the choices that the offensive players made in each situation into either shoot or dribble. We now have n s data points where the offensive player chose to shoot, and n d situations where she decided to dribble. The number of goals scored when the offensive players decided to shoot and dribble is denoted by X s and X d, respectively. Hence, X s ∼ Bin(n s, p s) and X d ∼ Bin(n d, p d). We can now apply standard statistics to draw conclusions. E g, we can test the hypothesis that the probability of scoring is the same for the shoot and the dribble alternatives. If we are able to reject this hypothesis, we can also conclude that team B does not appear to play the optimal min-max strategy.
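One standard way to test the hypothesis p s = p d is a two-proportion z-test based on the normal approximation to the binomial distribution. The sketch below is one possible choice, not necessarily the test the authors have in mind, and the goal counts are hypothetical. For small samples an exact test would be more appropriate.

```python
import math


def two_proportion_z_test(x_s, n_s, x_d, n_d):
    """Two-sided z-test of H0: p_s = p_d for X_s ~ Bin(n_s, p_s), X_d ~ Bin(n_d, p_d).

    Returns the z statistic and the two-sided p-value (normal approximation).
    """
    p_s_hat = x_s / n_s
    p_d_hat = x_d / n_d
    pooled = (x_s + x_d) / (n_s + n_d)  # common scoring probability under H0
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_s + 1 / n_d))
    z = (p_s_hat - p_d_hat) / std_err
    # Two-sided tail probability of the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: 40 goals on 100 shots versus 20 goals on 100 dribbles.
z, p_value = two_proportion_z_test(x_s=40, n_s=100, x_d=20, n_d=100)
print(z, p_value)
```

A small p-value would indicate that the two alternatives do not have equal scoring probability, which under the indifference reasoning above suggests that the defense is not playing a min-max strategy.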

Experimental design

Given a game situation, it is reasonable to aim to find the overall optimal strategy. However, due to the complexity of many game situations, it is likely to be insufficient to merely analyze game data. The reason is that this approach only has in its scope to discover if an existing strategy is better than some other existing strategy. It will be less efficient at determining what is indeed the actual optimal strategy. To be able to obtain this, we need to consider experimental design.

Parameterizing the isolines

While we, thus far, have discussed isolines mainly in relation to ice hockey, the concept is central to e g basketball, soccer and team handball as well. The reason is that the isolines are important factors in determining how to defend in one against one, two against one and many other common game situations. The isolines are particularly straightforward to estimate in a controlled experiment. Random players take shots from random positions, in relevant cases at an undisturbed goalkeeper. The outcome (goal or no goal), as well as the position from which the shot was taken, is recorded. The isolines can then be estimated by e g applying standard logistic regression techniques.
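As a minimal sketch of such an estimation, the code below fits a logistic regression of the shot outcome on the distance to the goal by Newton's method, using grouped data. It is deliberately simplified: the firing angle is ignored, so the fitted isolines are circles around the goal, and the shot counts are hypothetical. A real analysis would include the angle and would normally rely on a statistics library.

```python
import math


def fit_logistic(distances, shots, goals, iterations=25):
    """Fit logit(P(goal)) = b0 + b1 * distance by Newton's method on grouped data."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for d, n, y in zip(distances, shots, goals):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * d)))
            w = n * p * (1.0 - p)
            g0 += y - n * p          # gradient of the log-likelihood
            g1 += (y - n * p) * d
            h00 += w                 # entries of the information matrix
            h01 += w * d
            h11 += w * d * d
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system H * step = gradient.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def shot_potential(b0, b1, distance):
    """Estimated probability of scoring from a given distance."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * distance)))


def isoline_distance(b0, b1, level):
    """Distance at which the estimated shot potential equals `level`."""
    return (math.log(level / (1.0 - level)) - b0) / b1


# Hypothetical experiment: 100 shots from each of four distances (in meters).
distances = [5, 10, 20, 30]
shots = [100, 100, 100, 100]
goals = [35, 20, 8, 3]

b0, b1 = fit_logistic(distances, shots, goals)
print(b0, b1)
print(isoline_distance(b0, b1, level=0.10))
```

The function `isoline_distance` inverts the fitted model, giving the radius of the isoline for a chosen shot potential level.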

Ice hockey; chasing the puck

It is straightforward to set up a controlled experiment for the example Ice hockey; chasing the puck. In the setting of the experiment, we can for each team B defender B 1 run the game situation multiple times. For each situation, B 1 is assigned either to charge or to hold back. The offensive player A 1, who is assigned at random for each situation, will always charge, by the analysis in the example Ice hockey; chasing the puck. We can run the experiment a sufficiently large number of times to be able to conclude to what extent one of the defensive alternatives is better than the other. Examples similar to the present one can be found in several other team sports.

Discussion

In this paper, we make an attempt to develop a mathematical theory of game intelligence in team sports. It is central to this theory to value game situations by their potential. Intuitively, the potential is the probability that the offense scores the next goal minus the probability that the next goal is made by the defense. We give many examples to illustrate the breadth of the applicability of our results, but the set of chosen situations is by no means exhaustive.

In Section Team handball; shot proportions, we argue that the classical efficiency thresholds—e g that back court and wing players should have 50% and 80% mean shot efficiency, respectively—are likely to be non-optimal. It would be interesting to investigate this issue further.

One of the authors, Nicklas Lidström, relied on a set of first principles which he used to analyze how to play game situations during his career as a professional ice hockey player. His approach constitutes a cornerstone in the present paper.

In Section Ice hockey; chasing the puck, we analyzed whether a defender should charge or hold back. It appears that the vast majority of backchecking hockey players judge it optimal to charge. Nicklas thought that it was optimal for him to hold back, which consequently was how he played in such situations.

The example in Section Ice hockey; pass or dribble presents Nicklas’ analysis of why it is optimal to pass early in that situation, and in similar ones.

Further, in one against one situations, Nicklas recalls using his outstretched stick extensively as a first line of defense against opposing forwards. He believed that this increased the width with which he could operate. The operating width is equivalent to the quantity r in the examples of this paper. Hence he made the action to dribble less attractive in terms of potential for the opposing forwards. This had the effect that he could steer the forward further to the sides (preferably their backhand side) to make that alternative, too, lower in potential than what he thought he could have obtained otherwise.

We note that Nicklas’ S-shaped strategy in the two against one examples in ice hockey is different from how most defenders choose to play such situations. It seems that most defenders eventually decide to let go of the forward without the puck and focus on the forward with puck possession instead.

Acknowledgments

The authors are grateful to the academic editors and a reviewer for insightful comments which helped improve the paper considerably. The third author would also like to thank Anders Lordemyr for fruitful input concerning the applications of our results to soccer.

Data Availability

All relevant data are within the paper.

Funding Statement

JL was funded by Gothenburg University. CL was funded by the Second Swedish AP Fund. The funders had no role in study design, analysis, decision to publish, or preparation of the manuscript. NL received no funding for this work.

References

- 1. Monderer D, Shapley LS (1996) Potential games. Games and Economic Behavior 14(1): 124–143. doi:10.1006/game.1996.0044

- 2. Walker M, Wooders J (2001) Minimax play at Wimbledon. American Economic Review 91(5): 1521–1538. doi:10.1257/aer.91.5.1521

- 3. Hsu SH, Huang CY, Yang CT (2007) Minimax play at Wimbledon: Comment. American Economic Review 97(1): 517–523. doi:10.1257/aer.97.1.517

- 4. Bailey BJ, McGarrity JP (2012) The effect of pressure on mixed-strategy play in tennis: The effect of court surface on service decisions. International Journal of Business and Social Science 3(20): 11–18.

- 5. Kovash K, Levitt S (2009) Professionals do not play minimax: Evidence from Major League Baseball and the National Football League. NBER Working Papers No. 15347.

- 6. Emara N, Owens D, Smith J, Wilmer L (2014) Minimax on the gridiron: Serial correlation and its effects on outcomes in the National Football League. MPRA Paper No. 58907.

- 7. Mitchell M (2010) Dynamic matching pennies with asymmetries: An application to NFL play-calling. Working Paper.

- 8. Chiappori PA, Levitt S, Groseclose T (2002) Testing mixed-strategy equilibria when players are heterogeneous: The case of penalty kicks in soccer. American Economic Review 92(4): 1138–1151. doi:10.1257/00028280260344678

- 9. Palacios-Huerta I (2003) Professionals play minimax. Review of Economic Studies 70: 395–415. doi:10.1111/1467-937X.00249

- 10. Buzzacchi L, Pedrini S (2014) Does player specialization predict player actions? Evidence from penalty kicks at FIFA world cup and UEFA euro cup. Applied Economics 46(10): 1067–1080. doi:10.1080/00036846.2013.866205

- 11. Azar OH, Bar-Eli M (2011) Do soccer players play the mixed-strategy Nash equilibrium? Applied Economics 43(25): 3591–3601. doi:10.1080/00036841003670747

- 12. Skinner B (2010) The price of anarchy in basketball. Journal of Quantitative Analysis in Sports 6(1): 595–608. doi:10.2202/1559-0410.1217

- 13. Skinner B (2012) The problem of shot selection in basketball. PLoS ONE 7(1): e30776. doi:10.1371/journal.pone.0030776

- 14. Alferink LA, Critchfield TS, Hitt JL, Higgins WJ (2009) Generality of the matching law as a descriptor of shot selection in basketball. Journal of Applied Behavior Analysis 42(3): 595–608. doi:10.1901/jaba.2009.42-595

- 15. Skinner B (2011) Scoring strategies for the underdog: Using risk as an ally in determining optimal sports strategies. Journal of Quantitative Analysis in Sports 7(4): 11. doi:10.2202/1559-0410.1364

- 16. Moschini G (2004) Nash equilibrium in strictly competitive games: live play in soccer. Economics Letters 85: 365–371. doi:10.1016/j.econlet.2004.06.003

- 17. Nash SG, Sofer A (1996) Linear and nonlinear programming. The McGraw-Hill Companies, Inc.

- 18. Osborne MJ (2009) An Introduction to Game Theory. Oxford University Press, international edition.
