Abstract

The design and selection of 3D modeled hand gestures for human-computer interaction should follow principles of natural language while also optimizing gesture contrast and recognition. The selection should also consider the discomfort and fatigue associated with distinct hand postures and motions, especially for common commands. Sign language interpreters have extensive and unique experience forming hand gestures, and many suffer from hand pain while gesturing. Professional sign language interpreters (N=24) rated discomfort for hand gestures associated with 47 characters and words and 33 hand postures. Clear associations of discomfort with hand postures were identified. In a nominal logistic regression model, high discomfort was associated with gestures requiring a flexed wrist, discordant adjacent fingers, or extended fingers. These and other findings should be considered in the design of hand gestures to optimize the relationship between human cognitive and physical processes and computer gesture recognition systems for human-computer input.

Keywords: Gesture-based interaction, Computer interface, Hand postures, Multitouch, Computer input

Introduction

Human-computer input can be accomplished in many ways: with keyboards, mice, tablets, voice and, now, hand gestures. The design of gestures for computer input is in its early stages. Currently, 2D gesture input involves moving the fingertips across the surface of a touch-sensing display or touchpad with pinching, flicking and swiping gestures to perform relatively simple functions such as text selection and editing, zooming in and out, view rotation, and scrolling (Radhakrishnan et al., 2013). New technologies (e.g., Leap Motion, Xbox, Wii) that capture hand postures and motions in 3D space and decouple the hand from a touch-sensing surface will allow greater gesture-language complexity and thus functionality. These technologies include gloves, wearable sensors and remote optical methods (Wenhui et al., 2009; Wan and Nguyen, 2008; Wu et al., 2004; Cerveri et al., 2007; Manresa et al., 2005). They offer the potential for an improved user experience through higher productivity, easier learning and fewer postural constraints. Furthermore, 3D gestures are likely to be more complex than the simple gestures currently used for 2D input.

The assignment of 3D gestures to computer commands has generally followed principles of natural language while also considering the limitations of hand posture and motion recognition technology. Researchers have proposed gestures for some HCI tasks, such as moving objects and zooming in, that use hand motions such as flicking and shaking hand-held devices (Mistry et al., 2009; Scheible et al., 2008; Yatani et al., 2005; Yoo et al., 2010). In addition, end users have been recruited to suggest and evaluate the best 2D and 3D gestures for common computer and mobile-device tasks such as panning and zooming (Wobbrock et al., 2009; Fikkert et al., 2010; Wright et al., 2011; Kray et al., 2010; Ruiz et al., 2011). These approaches consider natural language, ease of learning and the ability of the system to detect the gesture.

However, when gestures are selected, relatively little consideration has been given to the physical effort (both fatigue and discomfort) involved in forming them, especially when a gesture is performed frequently throughout the day. While natural language and cognitive usability factors are critical for gesture selection, the selection process should also consider the discomfort and pain associated with a gesture. Nielsen et al. (2004) proposed including measures of the internal hand forces caused by posture in gesture selection. Stern et al. (2008) proposed a model that balances comfort, hand physiology and gesture intuitiveness in the selection process.

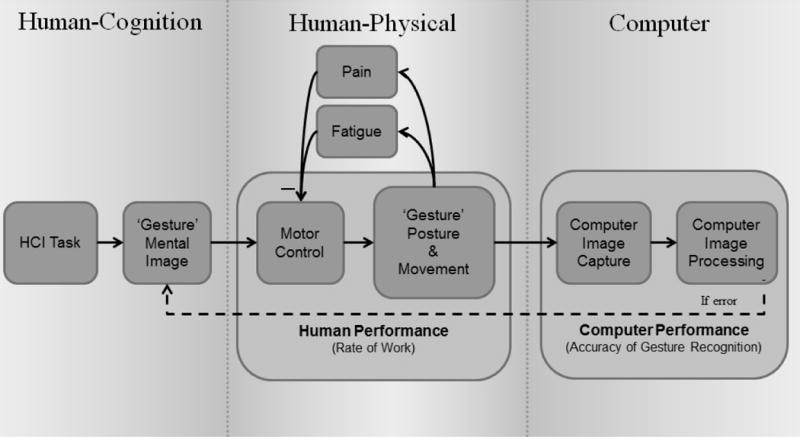

Another model of the relationship between human cognitive and motor processes and computer gesture recognition for executing an HCI task is presented in Figure 1. On the human side, system performance is limited by the cognitive and motor processes used to form gestures. On the computer side, performance is limited by the rate and fidelity of gesture capture and processing. The pace of gesture formation and external stress can cause variations in the hand postures formed (Qin et al., 2008). Pain and fatigue may reduce the rate of gesture formation and the consistency of the hand postures formed, creating a potential negative feedback loop and a decrease in human performance (i.e., reduced accuracy and rate of work performed).

Figure 1.

A model of the relationships between human cognitive and motor processes and computer gesture recognition for completion of an HCI task.

Sign language interpreters have extensive experience in forming both simple and complex hand gestures at high rates for many hours. The job usually involves translating the words of teachers or conference speakers into American Sign Language (ASL) for a hearing-impaired audience. Between 25 and 70% of sign language interpreters report persistent hand pain and hand disorders (Cohn et al., 1990; DeCaro et al., 1992; Scheuerle et al., 2000; Smith et al., 2000; Qin et al., 2008; Fischer et al., 2012). Some interpreters are unable to continue with the work because of the pain, while others have learned to modify their signing methods or to share their workload with other interpreters in order to prevent disabling pain (Feuerstein et al., 1992; Feuerstein et al., 1997). Sign language interpreters are at greater risk of hand pain than deaf signers: they sign continuously for long durations and make well-enunciated gestures so that they are clearly visible to a large audience, whereas conversational signing among deaf people allows pauses while the other person speaks or signs.

The unique experience of sign language interpreters in knowing which hand gestures are or are not associated with hand and arm pain may provide guidance for designing a gesture language for computer input. Although sign language interpreters use complex gestures for words, they also have extensive experience with the simple hand postures and motions that are likely to be adopted as 3D gestures for HCI, such as the gestures for numbers and letters (e.g., O, A, C, V; see Figure 4). These simpler gestures are attractive for HCI because their hand postures are easy to remember and distinct, and are therefore more readily discriminated by image capture.

Figure 4.

Rank order of 37 alphanumeric characters by mean discomfort(1)/comfort(−1) ratings.

The goal of this study was to determine which hand gestures are comfortable to form and which are associated with hand pain when performed repeatedly by sign language interpreters. The hypothesis was that sign language interpreters can associate specific hand gestures with different levels of hand pain when the gestures are formed repeatedly. If some gestures are painful and others are not, the findings can help guide the design of 3D hand gestures for HCI tasks in order to reduce pain and fatigue among computer users and improve productivity.

Methods

Study Design

Twenty-four experienced sign language interpreters participated in the study. Participants were recruited from among San Francisco Bay Area professional interpreters identified through the Northern California Registry of Interpreters for the Deaf. Participants were required to have more than one year of experience as paid interpreters. A demographic questionnaire assessed participants' personal characteristics and signing history. The most common signing styles were American Sign Language (ASL), Pidgin Signed English (PSE) and Signed English. Symptom patterns related to signing were assessed with questions on the quality and location of symptoms, their frequency and duration, and when they started relative to signing sessions. A second questionnaire assessed the discomfort level associated with various hand postures, motions and characters used during signing. The study was approved by the University Committee on Human Research, and participants provided signed informed consent.

Participants

Most of the participants were female, and their average experience as professional interpreters was 16 years (Table 1). Most spent more than 20 hours per week signing. All but one participant experienced symptoms in the hands or arms during or after long signing sessions. The symptoms were described primarily as pain, discomfort, fatigue or ache, and were located primarily in the wrist, forearm, elbow, shoulder and upper back. On average, symptom flares occurred on 11.4 days per month and lasted half a day. Among participants who could tell when their symptoms began, most (81%) reported onset during signing sessions, while 19% reported delayed onset after sessions. Reporting pain with signing was not related to participant age.

Table 1.

Characteristics of participating sign language interpreters and hand symptoms related to signing (N=24).

| Characteristic | N (%) | Mean (SD) |
|---|---|---|
| Gender (female) | 20 (83%) | |
| Age (years) | | 40.5 (8) |
| Years as interpreter | | 16 (9) |
| Hours of signing per week | | |
| 0 to 9 | 1 (4%) | |
| 10 to 19 | 2 (8%) | |
| 20 to 29 | 5 (21%) | |
| ≥ 30 | 13 (52%) | |
| Mean signing duration (hours) | | 2.4 (1.3) |
| Symptoms after a long signing session? (yes) | 23 (96%) | |
| If "yes," what type of symptoms? (N=23) | | |
| Discomfort | 17 (74%) | |
| Fatigue | 16 (70%) | |
| Ache | 14 (61%) | |
| Pain | 12 (52%) | |
| Numbness | 10 (43%) | |
| Burning | 9 (39%) | |
| Frequency of symptoms (occurrences per month) | | 11.4 (13.5) |
| Duration of pain (days) | | 0.5 (0.4) |
| When do symptoms occur in relation to session? | | |
| During session | 17 (71%) | |
| After session | 4 (17%) | |
| Unable to tell | 2 (8%) | |

Comfort Rating by Interpreters

Commonly used gestures, postures and motions were rated on a 6-point scale for the discomfort they would cause if repeated frequently during signing (0 = comfortable; 5 = very uncomfortable or painful). The items included specific hand postures, motions, hand locations, sizes of movement and speeds of movement. Images of shoulder, elbow, forearm, wrist, finger and thumb postures (Greene & Heckman, 1994), as well as specific signs from American Sign Language (ASL) (Gustason et al., 1980; Butterworth & Flodin, 1991), were presented to the participants. Participants also rated the ASL gestures for every letter and number (0–10) as comfortable (−1), neutral (0) or uncomfortable (1) when formed repeatedly.

Posture Classification by Researchers

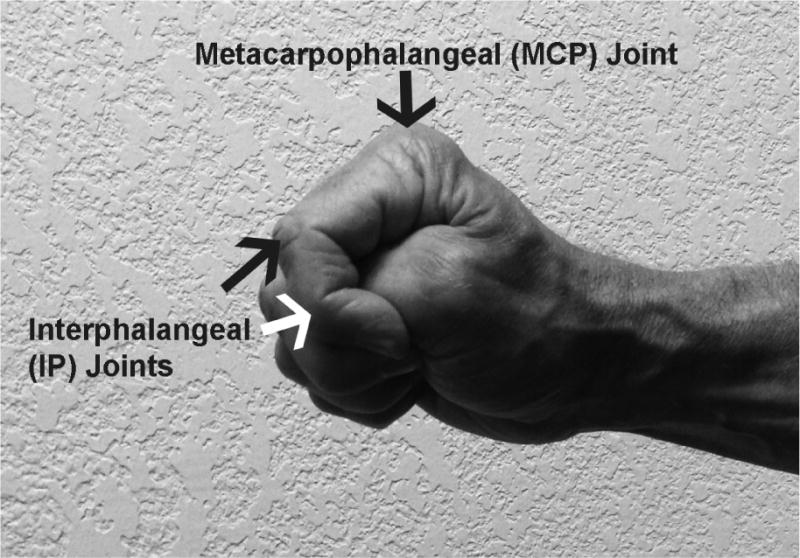

The authors classified the ASL gesture for each of the 37 alphanumeric characters (26 letters and 11 numbers) by hand posture, resolving differences by consensus. The posture of each of the 6 hand and forearm joints forming the character was assigned one of two levels: (1) wrist: flexed vs. non-flexed; (2) metacarpophalangeal and interphalangeal joints of digits 2–5: discordant vs. concordant; (3) thumb: extended vs. not extended; (4) forearm: pronated vs. non-pronated; (5) metacarpophalangeal joints of digits 2–5: extended vs. not extended; and (6) metacarpophalangeal joints of digits 2–5: abducted vs. not abducted (Figure 2). Each character was therefore coded to one of 64 (2⁶) possible posture classifications. For each joint, the two levels represented a higher or lower risk of fatigue or pain based on physiologic and biomechanical models of the hand (Keir et al., 1998; Rempel et al., 1998).
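The six two-level joint classifications act as a 6-bit posture code, which is where the 2⁶ = 64 possible classes come from. The Python sketch below illustrates that coding; the field names, the bit order and the example gesture are hypothetical illustrations, not the authors' coding sheet.

```python
from itertools import product
from typing import NamedTuple


class PostureCode(NamedTuple):
    """Six binary joint classifications; True marks the first-named level of each pair."""
    wrist_flexed: bool        # (1) wrist: flexed vs. non-flexed
    fingers_discordant: bool  # (2) MCP/IP joints, digits 2-5: discordant vs. concordant
    thumb_extended: bool      # (3) thumb: extended vs. not extended
    forearm_pronated: bool    # (4) forearm: pronated vs. non-pronated
    mcp_extended: bool        # (5) MCP joints, digits 2-5: extended vs. not extended
    mcp_abducted: bool        # (6) MCP joints, digits 2-5: abducted vs. not abducted

    def as_int(self) -> int:
        """Pack the six flags into an integer 0..63 (first field = most significant bit)."""
        value = 0
        for flag in self:
            value = (value << 1) | int(flag)
        return value


# Every combination of six two-level joints: 2**6 = 64 posture classes.
ALL_CODES = [PostureCode(*bits) for bits in product((False, True), repeat=6)]

# Hypothetical gesture: only the MCP joints of digits 2-5 are extended.
example = PostureCode(False, False, False, False, True, False)
print(len(ALL_CODES), example.as_int())  # 64 2
```

Characters that receive the same code fall into the same posturally equivalent set, which is how several characters can share one classification in Table 3.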

Figure 2.

Location of the metacarpophalangeal (MCP; knuckle) and interphalangeal (IP) joints. The IP joints of the fingers are the two joints closest to the fingertips. The three joints of the thumb, from the wrist toward the nail, are the carpometacarpal (CMC), metacarpophalangeal (MCP) and interphalangeal (IP) joints.

Statistical Analysis

Mean discomfort scores were calculated for hand shapes, word signs and postures. The discomfort levels associated with specific words, characters and motions were rank ordered, and Friedman's nonparametric test was used to test for differences. Significant findings were followed up with Wilcoxon tests to compare individual pairs.

Mean discomfort/comfort scores were also calculated for the 37 characters, then rank ordered and charted. The relationships between these scores and the hand posture classifications were evaluated with a multinomial logistic regression model (SAS 9.3, SAS Institute, Cary, NC). Both a full model including all 6 postures and a stepwise backward elimination model were generated. Joint postures were eliminated sequentially until the p-values of all remaining regression coefficients were less than 0.05 and the model QIC (quasi-likelihood under the independence model criterion) was minimized.
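The backward-elimination rule described above can be written as a short loop around any model-fitting routine. In this sketch the `fit` callback, the predictor names `x1`–`x4` and the toy p-values are hypothetical placeholders; a real analysis would refit the logistic model at every step, so the p-values would change as terms are removed.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A fitting function takes the current predictor names and returns
# (p-value per predictor, QIC of the fitted model). Hypothetical interface.
FitFn = Callable[[Sequence[str]], Tuple[Dict[str, float], float]]


def backward_eliminate(predictors: Sequence[str], fit: FitFn, alpha: float = 0.05):
    """Drop the least significant predictor until every remaining p-value < alpha.

    Returns the final predictors and the QIC of every model fitted along the way,
    so the caller can confirm that the final model also has the lowest QIC.
    """
    current: List[str] = list(predictors)
    history: List[Tuple[Tuple[str, ...], float]] = []
    while current:
        pvalues, qic = fit(current)
        history.append((tuple(current), qic))
        worst = max(current, key=lambda name: pvalues[name])
        if pvalues[worst] < alpha:
            break  # all remaining predictors are significant
        current.remove(worst)
    return current, history


def toy_fit(terms: Sequence[str]) -> Tuple[Dict[str, float], float]:
    """Toy stand-in: fixed illustrative p-values; QIC improves as terms are dropped."""
    p = {"x1": 0.001, "x2": 0.03, "x3": 0.40, "x4": 0.70}
    return {t: p[t] for t in terms}, 100.0 + len(terms)


final_terms, fit_history = backward_eliminate(["x1", "x2", "x3", "x4"], toy_fit)
print(final_terms)  # ['x1', 'x2']
```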

Results

Discomfort ratings were assessed for the location of the hands during signing, hand postures and motions, and finger postures (Table 2). The most comfortable location for the hands during signing was at lower chest height and close to the body. Smooth movements of the elbows or shoulders were generally comfortable. The least comfortable locations were at shoulder or face height, or off to one side with the hands farther from the centerline than the shoulders (e.g., with shoulder external rotation). Elbows bent (i.e., flexed) more than 90 degrees were uncomfortable.

Table 2.

Mean (SD) discomfort scores (0 = comfortable, 5 = very uncomfortable) reported for specific joint angles (Greene & Heckman, 1994) when formed repeatedly during signing (N=24).

| Joint | Angle/posture¹ | | | | |
|---|---|---|---|---|---|
| Elbow | 0° flexion | 90° flexion | 135° flexion | 160° flexion | |
| | 2.0 (0.4)ᵃ | 1.9 (0.3)ᵇ,ᶜ | 2.8 (0.4)ᵇ | 3.2 (0.4)ᵃ,ᶜ | |
| Shoulder² | Internal rotation | Neutral | External rotation | | |
| | 1.2 (0.3)ᵃ | 1.1 (0.3)ᵇ | 2.1 (0.3)ᵃ,ᵇ | | |
| Forearm | 90° pronation | 45° pronation | Neutral | 45° supination | 90° supination |
| | 1.9 (0.3)ᵃ,ᵇ | 1.3 (0.3)ᶜ | 0.8 (0.2)ᵃ,ᵈ | 1.6 (0.2)ᵉ | 2.7 (0.3)ᵇ,ᶜ,ᵈ,ᵉ |
| Wrist | Ulnar deviation | Neutral | Radial deviation | | |
| | 2.9 (0.3)ᵃ | 1.0 (0.3)ᵃ,ᵇ | 3.1 (0.3)ᵇ | | |
| Wrist | 45° extension – fist | 45° extension – fingers extended | Neutral | 45° flexion – fingers extended | 45° flexion – fist |
| | 2.9 (0.3)ᵃ | 3.2 (0.3)ᵇ | 1.0 (0.3)ᵃ,ᵇ,ᶜ,ᵈ | 2.4 (0.4)ᶜ | 3.0 (0.3)ᵈ |
| Fingers | MCP extended and DIP & PIP flexed | Fist | Slight flexion | Abduction | Extended |
| | 2.7 (0.3)ᵃ,ᵇ | 1.7 (0.3)ᵃ,ᶜ | 0.4 (0.1)ᵇ,ᶜ,ᵈ,ᵉ | 1.9 (0.3)ᵈ | 2.5 (0.3)ᵉ |
| Thumb | Flexed | Neutral | Palmar abduction | Radial abduction | |
| | 3.2 (0.3)ᵃ,ᵇ,ᶜ | 0.6 (0.2)ᵃ,ᵈ,ᵉ | 2.1 (0.3)ᵇ,ᵈ | 2.0 (0.4)ᶜ,ᵉ | |

¹ Values in a row that share a superscript letter differ significantly.

² Elbow flexed to 90 degrees.

The most comfortable hand postures and motions were those with a straight (i.e., neutral) wrist and the palms facing each other or rotated slightly toward the floor (i.e., forearm rotation between neutral, with the thumb up, and 45 degrees of pronation). The least comfortable involved extreme wrist postures such as radial or ulnar deviation, or 45 degrees of wrist extension or flexion.

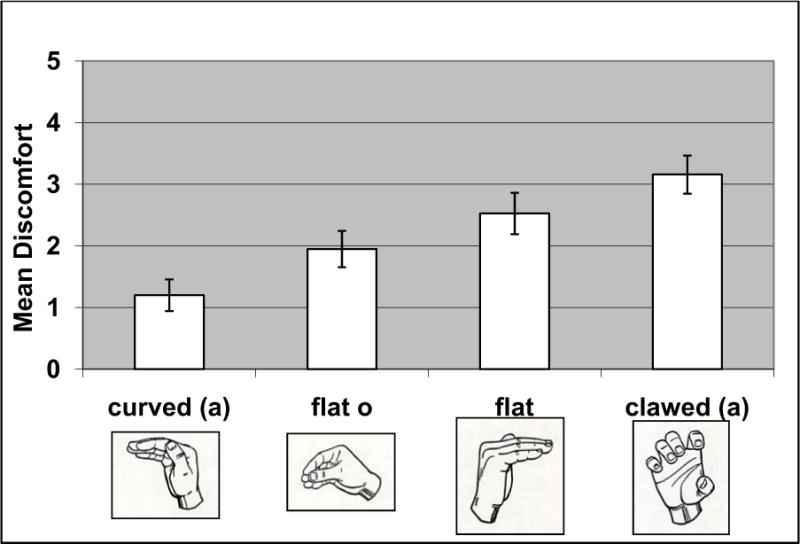

The most comfortable finger posture was with all fingers slightly bent (flexed). The least comfortable were with the fingers straight (extended) or spread apart (abducted), the fingers in a ‘claw’ shape (Figure 3), or the fingers forming a tight fist. The least comfortable thumb posture was the thumb flexed to touch the small finger. Smooth, synchronized finger motions were comfortable; fast-paced individual finger movements (e.g., finger spelling) were uncomfortable.

Figure 3.

Mean discomfort scores (0= comfortable, 5=very uncomfortable) for hand shapes when formed repeatedly during signing. Common letters indicate significant differences. Error bars = SEM.

The mean discomfort/comfort ratings for the gestures of the 37 alphanumeric characters fell into 15 distinct levels and were rank ordered (Figure 4). By this ranking, 12 characters were rated as comfortable and 25 as uncomfortable to perform repeatedly.
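Because the per-character means are listed in Table 3, the rank ordering behind Figure 4 can be re-derived. In the sketch below, counting a mean of exactly 0 as "comfortable" is our assumption; with that rule the rounded Table 3 values give 15 distinct levels and a 12/25 split, matching the counts reported above. The character printed as "I" among the unique-posture characters of Table 3 is transcribed here as "L", also an assumption, because "i" is listed separately and "L" is otherwise missing.

```python
# Per-character mean ratings transcribed from Table 3 (discomfort = 1, comfort = -1).
MEANS = {
    "d": 0.13, "1": -0.04, "P": 0.46, "4": 0.08, "2": 0.00, "10": -0.04,
    "5": 0.00, "3": 0.08, "L": 0.04, "y": 0.04,
    "c": -0.08, "o": -0.21, "0": 0.08,
    "a": -0.21, "m": -0.08, "n": -0.08, "t": -0.04, "s": 0.00,
    "b": -0.13, "e": 0.25,
    "i": 0.21, "j": 0.13, "r": 0.13, "x": 0.13, "u": 0.13, "f": 0.29, "z": 0.08,
    "k": 0.17, "v": 0.17, "w": 0.42, "8": 0.08, "6": 0.25, "9": 0.04, "7": 0.08,
    "h": 0.08, "g": 0.21, "q": 0.63,
}

ranked = sorted(MEANS, key=MEANS.get)  # most comfortable first
levels = sorted(set(MEANS.values()))   # distinct rating levels
# Assumption: a neutral mean (0.00) is counted on the comfortable side.
comfortable = [ch for ch in ranked if MEANS[ch] <= 0]
uncomfortable = [ch for ch in ranked if MEANS[ch] > 0]

print(len(levels), len(comfortable), len(uncomfortable))  # 15 12 25
```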

The researchers' hand posture classifications grouped the 37 alphanumeric characters into 16 distinct postural sets (Table 3). Ten of these sets contained only one character each; the other six contained two or more characters with equivalent posture classifications. The mean discomfort/comfort ratings within these six multi-character sets ranged from −0.08 to 0.31.

Table 3.

Posture classifications by researchers and subject discomfort(1)/comfort(−1) ratings of 37 alphanumeric characters.

Posture classification levels (one level assigned per joint for each character): Wrist (flexed / other); MCP & IP¹ (discordant / concordant); Thumb (extended / other); Forearm (pronated / other); MCP¹ (extended / other); MCP¹ (abducted / adducted).

| Group | Character(s) | Mean subject comfort/discomfort rating (SD) | Mean comfort/discomfort rating within set (SD) |
|---|---|---|---|
| Unique-posture characters | d | 0.13 (0.61) | |
| | 1 | −0.04 (0.62) | |
| | P | 0.46 (0.59) | |
| | 4 | 0.08 (0.50) | |
| | 2 | 0.00 (0.59) | |
| | 10 | −0.04 (0.62) | |
| | 5 | 0.00 (0.51) | |
| | 3 | 0.08 (0.65) | |
| | L | 0.04 (0.62) | |
| | y | 0.04 (0.62) | |
| Posturally equivalent character sets | c, o, 0 | −0.08 (0.65), −0.21 (0.66), 0.08 (0.41) | −0.07 (0.15) |
| | a, m, n, t, s | −0.21 (0.59), −0.08 (0.65), −0.08 (0.65), −0.04 (0.55), 0.00 (0.59) | −0.08 (0.08) |
| | b, e | −0.13 (0.61), 0.25 (0.61) | 0.06 (0.27) |
| | i, j, r, x, u, f, z | 0.21 (0.66), 0.13 (0.61), 0.13 (0.61), 0.13 (0.61), 0.13 (0.61), 0.29 (0.69), 0.08 (0.58) | 0.15 (0.07) |
| | k, v, w, 8, 6, 9, 7 | 0.17 (0.64), 0.17 (0.56), 0.42 (0.65), 0.08 (0.65), 0.25 (0.68), 0.04 (0.62), 0.08 (0.65) | 0.17 (0.13) |
| | h, g, q | 0.08 (0.65), 0.21 (0.66), 0.63 (0.49) | 0.31 (0.28) |

¹ Digits 2 (index) to 5 (small) finger.
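The within-set values in Table 3 appear to be the mean and sample standard deviation of the per-character means in each posturally equivalent set. The sketch below recomputes them under that assumption, using Python's standard `statistics` module.

```python
from statistics import mean, stdev

# Per-character mean ratings for the posturally equivalent sets in Table 3.
SETS = {
    "c o 0": [-0.08, -0.21, 0.08],
    "a m n t s": [-0.21, -0.08, -0.08, -0.04, 0.00],
    "b e": [-0.13, 0.25],
    "i j r x u f z": [0.21, 0.13, 0.13, 0.13, 0.13, 0.29, 0.08],
    "k v w 8 6 9 7": [0.17, 0.17, 0.42, 0.08, 0.25, 0.04, 0.08],
    "h g q": [0.08, 0.21, 0.63],
}

# Within-set mean and sample SD (n - 1 denominator), rounded to two decimals.
summary = {name: (round(mean(v), 2), round(stdev(v), 2)) for name, v in SETS.items()}

for name, (m, sd) in summary.items():
    print(f"{name:15s} {m:6.2f} ({sd:.2f})")
```

Because the inputs are already rounded to two decimals, a few recomputed values differ from Table 3 in the last digit (0.16 vs. the reported 0.15 for the i–z set, and an SD of 0.29 vs. 0.28 for h, g, q); the remaining values agree.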

The multinomial logistic regression models evaluating the relationships between hand postures and the discomfort/comfort ratings of the 37 alphanumeric characters are summarized in Table 4. The backward elimination model identified flexed wrist, discordant fingers and extended MCP joints as significant predictors of discomfort. Based on the coefficient values in this model, a flexed wrist contributes approximately 3 times more to discomfort than discordant fingers or extended MCP joints.
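The "approximately 3 times" comparison follows directly from the elimination-model coefficients in Table 4. It compares coefficients on the model's linear-predictor scale, not probabilities of discomfort:

$$
\frac{\beta_{\text{wrist flexed}}}{\beta_{\text{discordant}}} = \frac{0.95}{0.32} \approx 2.97,
\qquad
\frac{\beta_{\text{wrist flexed}}}{\beta_{\text{MCP extended}}} = \frac{0.95}{0.33} \approx 2.88
$$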

Table 4.

Summary of nominal logistic regression models for discomfort(1)/comfort(−1) ratings for 37 alphanumeric characters and postural state.

| Joint | Postural state | Gesture count | Mean discomfort/comfort rating | Full model¹ β-coefficient (p-value) | Elimination model² β-coefficient (p-value) |
|---|---|---|---|---|---|
| Wrist | Flexed / Non-flexed | 4 / 33 | 0.34 / 0.06 | 1.02 (<0.001) | 0.95 (<0.001) |
| MCP & IP, D2–D5 | Discordant / Concordant | 24 / 13 | 0.16 / −0.04 | 0.28 (0.07) | 0.32 (0.04) |
| MCP, D2–D5 | Extended / Non-extended | 24 / 13 | 0.13 / 0.03 | 0.31 (0.07) | 0.33 (0.04) |
| MCP & IP, D1 (thumb) | Extended / Non-extended | 5 / 32 | 0.03 / 0.10 | −0.02 (0.22) | |
| Forearm rotation | Pronated / Non-pronated | 23 / 14 | 0.09 / 0.10 | 0.09 (0.62) | |
| MCP, D2–D5 | Abducted / Adducted | 12 / 25 | 0.12 / 0.08 | 0.06 (0.73) | |
| Model fit | | | | QIC = 1715 | QIC = 1710 |

¹ Full model including all 6 postures.

² Stepwise backward elimination model.

Discussion

The study found that sign language interpreters, who gesture with the hands as a profession, frequently experience hand and arm pain and fatigue, a finding reported by others (Cohn et al., 1990; DeCaro et al., 1992; Scheuerle et al., 2000; Smith et al., 2000; Qin et al., 2008; Fischer et al., 2012). The symptoms associated with gesturing are not trivial; prolonged pain and fatigue were reported by more than half of the interpreters. Gesturing for HCI could pose a similar risk of hand and arm pain and fatigue if done for many hours per week. It is conceivable that gesturing will replace some or all keyboard and mouse input and be performed for 20 to 50 hours per week, many more hours than sign language interpreters gesture on the job. The risk may be reduced if the selection of gestures for HCI tasks considers the discomfort, pain or biomechanical risk associated with each gesture.

The study hypothesis was supported; sign language interpreters were able to differentiate hand postures and motions that are comfortable from those that are uncomfortable when performed repeatedly. The purpose of the study was to identify hand gestures that are comfortable to form; those gestures would be recommended for common HCI tasks. Painful gestures should be avoided for HCI tasks. However, the gestures used by sign language interpreters may differ from the gestures that will be used for computer input. Sign language interpreters use both complex and simple gestures; some of the complex gestures are unlikely to be used for HCI.

Our analysis focused on extracting findings that would be relevant for HCI by 1) evaluating the simpler gestures used for numbers and characters and not complex gestures used for words and 2) focusing on comfort related to general signing techniques such as gesturing area, arm postures, speed, etc. Gesture features most related to hand and arm pain and fatigue were the location of the hands relative to the body; the speed of motion of the fingers and arms; and the finger and wrist postures.

The location of the hands relative to the shoulders and torso is important to consider in order to avoid shoulder pain and fatigue. Generally, the hands should be near the midline of the body; during bimanual gesturing, they should be near each other and no farther apart than the shoulders. For someone standing in front of a computer monitor, the hands should be near elbow height and close to the lower chest and abdomen so that the shoulder muscles are not tense. For someone sitting, the hands may be higher, closer to chest level, to be visible to a camera, but forearm or elbow support may then be needed to reduce the shoulder load. Sustained elbow flexion of more than 90 degrees was uncomfortable and should be avoided.

Palm-up (supination) and palm-down (pronation) hand postures are achieved by rotating the forearm. Halfway between these extremes (i.e., thumb up) is the neutral forearm position. The most comfortable postures were between neutral and 45 degrees of pronation (palms rotated toward the ground). Repeated full rotations to palm up (supination) or palm down (pronation) should be avoided. The relationship between forearm rotation and discomfort was also seen with the alphanumeric characters: characters formed in full pronation (e.g., 0, D, V) were less comfortable than characters with similar finger postures formed with the forearm closer to neutral (e.g., O, 1, 2).

Gestures that included movement of the elbow or shoulder were more comfortable than gestures with just finger movements. The more comfortable gesture motions were up-down hand movements performed with motion at the elbows or side-to-side hand movements with motion at the shoulders. For example, moving the hands up and down at the elbow is more comfortable than moving the hands up and down at the wrist.

While there is no simple model that can classify the comfort level of all possible finger postures, some overriding patterns emerged. In general, the most comfortable hand gestures were those with the wrists straight and the fingers slightly flexed or in a loose fist (e.g., A, C, M, N, O). The least comfortable gestures were those involving wrist flexion, discordant adjacent fingers or extended fingers. Examples of discordant adjacent fingers are the victory or peace sign, the Hawaiian shaka sign and some ASL characters (e.g., Q, P, W, F, 6, 9). Examples of comfortable and uncomfortable hand postures are shown in Figure 5. Repeated or sustained wrist flexion and other non-neutral wrist postures have been associated with hand pain in other studies of sign language interpreters (Feuerstein & Fitzgerald 1992).

Figure 5.

Examples of comfortable (c) and uncomfortable (u) hand postures: (1c) fingers slightly flexed; (2c) hand in a loose fist; (3u) halt sign with wrist and fingers extended; (4u) wrist in ulnar deviation and fingers extended; (5c) loose hand pointing; (6c) thumb up; (7u) shaka sign with discordant adjacent finger postures; (8u) fingers extended and abducted (spread apart); (9c) forearm rotation to 45 degrees pronation; (10c) forearm rotation in neutral; (11u) forearm rotation to full pronation; (12u) forearm rotation to full supination.

The position of the thumb did not appear to have a large influence on comfort ratings, and thumb posture was not retained in the final regression model. Many alphanumeric characters, both comfortable (e.g., B, M, N) and uncomfortable (e.g., 0, 4) ones, require the thumb to be flexed. Thumb extension (e.g., L, Y, 3, 10, the hitchhiking gesture) was not associated with discomfort. Likewise, thumb palmar abduction was comfortable if the adjacent fingers were not discordant (e.g., O, C vs. F, Q).

The speed or dynamic element of a gesture can make it comfortable or uncomfortable. Smooth finger movements are comfortable; rapid finger motions, or motions with high accelerations or impact (for example, one hand striking the other hand or a surface), are uncomfortable.

The findings on hand postures associated with discomfort are supported by an understanding of hand physiology and biomechanics. There is large variability between people in the range through which they can move their forearms, wrists and fingers, a variability that is influenced by age, genetics and medical conditions (Lester 2012). The discomfort associated with discordant adjacent finger positions is likely due to the inter-tendon connections between the extensor or flexor tendons that move the fingers. The degree of linkage between the tendons (quadriga) differs between people, but the effect is that the posture of one digit can limit how far the neighboring digit can move (Horton 2007). An example is the limited degree to which the ring finger can be extended when the other fingers form a fist; only when the index and middle fingers are allowed to open and extend can the ring finger extend fully. Therefore, gestures with discordance between adjacent fingers can be comfortable if the index or small finger is not fully extended or the other fingers are not in a tight fist (e.g., 1, 2, L, Y). Hand postures at the extremes of motion, such as a tight fist or a hand with the fingers fully spread apart (i.e., abducted), require high levels of muscle activity and coordination. These postures are more fatiguing than a relaxed hand posture with gently curved fingers, which requires much less muscle activity to form.

Guidelines for the design of hand gestures for HCI

The findings from this study can be applied to the design of 3D hand gestures for HCI. Tasks that are executed frequently (e.g., next page, delete, menu, return, paste, copy, etc.) should be assigned to gestures that are comfortable to form repeatedly. If a task set is large, then infrequent commands could be assigned to gestures that are less comfortable.
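One simple way to apply this rule is to sort commands by how often they are used and gestures by how uncomfortable they are, then pair them in order. The sketch below assumes the goal is to minimize frequency-weighted discomfort with non-negative frequencies, in which case this sorted pairing is optimal (rearrangement inequality); the command names and frequencies are hypothetical, the discomfort values are Table 3 character means, and cognitive factors such as intuitiveness are ignored.

```python
from typing import Dict, List, Tuple


def assign_gestures(command_freq: Dict[str, float],
                    gesture_discomfort: Dict[str, float]) -> List[Tuple[str, str]]:
    """Pair the most frequent commands with the least uncomfortable gestures.

    With non-negative frequencies, this minimizes sum(frequency * discomfort)
    over one-to-one assignments. Leftover (least comfortable) gestures stay unused.
    """
    if len(gesture_discomfort) < len(command_freq):
        raise ValueError("need at least as many gestures as commands")
    commands = sorted(command_freq, key=command_freq.get, reverse=True)
    gestures = sorted(gesture_discomfort, key=gesture_discomfort.get)
    return list(zip(commands, gestures))


# Hypothetical uses per hour; handshape labels with their Table 3 mean ratings.
pairs = assign_gestures({"next page": 120, "copy": 40, "paste": 35},
                        {"o": -0.21, "b": -0.13, "5": 0.00, "q": 0.63})
print(pairs)  # [('next page', 'o'), ('copy', 'b'), ('paste', '5')]
```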

Optimal location of the hands for gesturing during HCI

The optimal location of the hands for gesturing is near the lower chest area, near the midline of the body and not too far from the torso. In general, the hands should not be wider or higher than the shoulders. The hands should not be further away from the body than allowed with 45 degrees of shoulder flexion unless there is comfortable forearm or elbow support. Similar recommendations apply to the seated person.
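For systems that track the hands, the outer limits of this guideline can be turned into a simple screening check. The coordinate frame, the `max_forward` limit (which would have to be calibrated per user to the reach allowed at 45 degrees of shoulder flexion) and all names below are assumptions; the study itself reports no numeric thresholds, and the check does not test for the optimal lower-chest region.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: float  # lateral position, metres (positive toward the user's right)
    y: float  # height, metres
    z: float  # forward distance from the torso, metres


def in_comfort_zone(hand: Point, left_shoulder: Point, right_shoulder: Point,
                    max_forward: float, arm_supported: bool = False) -> bool:
    """Screen one tracked hand position against the hand-location guideline."""
    left_x, right_x = sorted((left_shoulder.x, right_shoulder.x))
    within_shoulder_width = left_x <= hand.x <= right_x
    not_above_shoulders = hand.y <= min(left_shoulder.y, right_shoulder.y)
    # Reaching farther than max_forward is tolerated only with forearm/elbow support.
    within_reach = arm_supported or hand.z <= max_forward
    return within_shoulder_width and not_above_shoulders and within_reach


ls, rs = Point(-0.2, 1.4, 0.0), Point(0.2, 1.4, 0.0)
print(in_comfort_zone(Point(0.05, 1.1, 0.25), ls, rs, max_forward=0.35))  # True
```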

Rate of gesturing and impact

The speed of hand gesturing is primarily under user control; however, applications should not require high speeds for gesturing nor high accelerations of the hands or fingers. In addition, gestures should not require the forceful striking of one hand against the other or against a hard surface.
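An application could check whether a gesture it expects requires high hand accelerations by estimating acceleration from tracked positions. The sketch below uses central second differences on 3D positions sampled every `dt` seconds; the acceleration `limit` is a hypothetical, designer-chosen value, since the study gives no numeric threshold.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def peak_acceleration(positions: Sequence[Vec3], dt: float) -> float:
    """Largest acceleration magnitude (units/s^2) from central second differences."""
    peak = 0.0
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        accel = [(n - 2 * c + p) / dt ** 2 for p, c, n in zip(prev, cur, nxt)]
        peak = max(peak, math.hypot(*accel))
    return peak


def exceeds_limit(positions: Sequence[Vec3], dt: float, limit: float) -> bool:
    """Flag a tracked gesture whose peak acceleration exceeds a chosen limit."""
    return peak_acceleration(positions, dt) > limit


# Constant-velocity motion along x: acceleration is (numerically) zero.
steady = [(0.01 * i, 0.0, 0.0) for i in range(5)]
print(exceeds_limit(steady, dt=0.01, limit=1.0))  # False
```

Real tracking data are noisy, and second differences amplify noise, so positions would normally be smoothed before this check.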

Wrist and forearm postures and motions

For common tasks or commands, the wrists and forearms should be near their neutral postures, as illustrated in Figure 5 (c images). Postures and motions slightly to either side of neutral are encouraged, but extremes of wrist posture and forearm rotation should be avoided. For example, avoid the prayer or halt gestures for common tasks. The prayer or ‘Namaste’ hand posture has been proposed for the common task of navigating to the home screen, and open palms facing the screen (halt sign) moving toward or away from each other have been proposed for ‘zoom in’ and ‘zoom out’ (Mistry et al., 2009). Both gestures involve maximum wrist and finger extension and are therefore not recommended for such common HCI tasks.

Hand and finger postures and motions

Common tasks should be assigned hand and finger postures and motions with relaxed finger flexion. Avoid gestures that require the fingers to be in full extension (Figure 5) or in a tight fist. The fingers should be similarly shaped; avoid postures in which adjacent fingers are in very different postures, especially the middle, ring and small fingers. The thumb, and to some degree the index finger, have greater independence of control than the other fingers, but even for these, extremes of posture should be avoided. Common tasks can be assigned the loose fist, the loosely open hand, the hitchhiking gesture and other gestures on the left side of Figure 4. For common HCI tasks, avoid the Hawaiian ‘shaka’ sign (only the thumb and small finger extended), the ‘corona’ sign (only the index and small finger extended), the ‘peace’ or ‘victory’ sign and other gestures on the right side of Figure 4.
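Candidate gestures can be pre-screened with the three predictors retained in the backward elimination model of Table 4. The sketch below sums the reported coefficients for the features a gesture has; because intercepts and the full model parameterization are not reported, the result is only a relative ranking index, not a probability of discomfort. The feature names and example gestures are hypothetical, and postures outside the model (e.g., wrist extension, full forearm rotation; see Table 2) still have to be checked against the other guidelines.

```python
from typing import Dict

# Elimination-model coefficients from Table 4 (significant predictors only).
COEFFS: Dict[str, float] = {
    "wrist_flexed": 0.95,
    "fingers_discordant": 0.32,
    "mcp_extended": 0.33,
}


def relative_discomfort(features: Dict[str, bool]) -> float:
    """Sum the Table 4 coefficients for the high-discomfort features present."""
    return sum(beta for name, beta in COEFFS.items() if features.get(name, False))


# Hypothetical candidate gestures described by their coded features.
candidates = {
    "relaxed hand": {},
    "gesture B": {"fingers_discordant": True, "mcp_extended": True},
    "gesture C": {"wrist_flexed": True},
}
for name in sorted(candidates, key=lambda g: relative_discomfort(candidates[g])):
    print(name, round(relative_discomfort(candidates[name]), 2))
```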

There are limitations to generalizing findings from sign language interpreters to the design of gestures for HCI. Sign language interpreters need to form gestures that are distinct so that they are clearly visible to an audience. For HCI it may be possible to design gesture processors that do not require such distinct hand postures for accurate recognition. Another consideration is that sign language interpreters do not control the pace of gesturing; the pace is controlled by the speaker. Gesturing for HCI can usually be done at a pace controlled by the user. Controlling the pace may allow users to work at a comfortable gesturing rate and allow them to take breaks to prevent fatigue. However, there will be some HCI tasks where gesture rates are not controlled by the user; for example, gaming.

In conclusion, sign language interpreters can consistently differentiate hand gestures by level of comfort, and their experience can help guide the selection of comfortable gestures for HCI. No other professional group has such extensive experience with hand gestures, so those who design gestures for HCI should consider the experience of sign language interpreters. Discomfort and fatigue can be minimized by assigning comfortable gestures to frequently performed tasks and commands. However, comfort is just one factor to consider when assigning hand gestures to HCI tasks. Technology will influence accuracy, reliability and responsiveness, but technology is always improving. The other important factors in designing a gesture language are cognitive: intuitiveness of the language, learning time and mental load. Ultimately, productivity with gesture-based HCI languages will be optimized by understanding the interplay between task frequency and the cognitive and comfort factors associated with gestures.

Highlights.

When performed repeatedly, hand gestures were comfortable if the wrists were straight, the fingers were slightly flexed, and adjacent fingers were similarly shaped.

When performed repeatedly, hand gestures were uncomfortable if the wrists were flexed, the fingers were extended at the knuckles, or adjacent fingers were in markedly different postures.

When performed repeatedly, arm postures were uncomfortable if the elbows were flexed more than 90 degrees, the forearms were in full supination or pronation, or the shoulders were externally rotated.

The comfort/discomfort level associated with specific hand gestures should be one of the factors to consider when designing gesture languages for human-computer interaction.

The discomfort levels of gestures should be considered in the design of gesture languages for HCI.

Comfortable gestures have straight wrists and similarly shaped adjacent fingers.

Uncomfortable gestures have flexed wrists, extended fingers or discordant adjacent fingers.

Uncomfortable arm postures include flexed elbows, rotated forearms and externally rotated shoulders.
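The arm-posture findings can likewise be sketched as threshold checks on joint angles. Only the 90-degree elbow-flexion limit comes from the findings above. The forearm-rotation and shoulder-rotation thresholds, the sign conventions and the `ArmPosture`/`arm_flags` names are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

ELBOW_FLEXION_LIMIT_DEG = 90.0     # from the findings: flexion beyond 90 degrees
FOREARM_ROTATION_LIMIT_DEG = 70.0  # ASSUMPTION: proxy for "full" pronation/supination
SHOULDER_EXTERNAL_LIMIT_DEG = 0.0  # ASSUMPTION: any external rotation is flagged


@dataclass
class ArmPosture:
    elbow_flexion_deg: float      # 0 = elbow fully straight
    forearm_rotation_deg: float   # ASSUMED convention: 0 neutral, + supination, - pronation
    shoulder_rotation_deg: float  # ASSUMED convention: 0 neutral, + external, - internal


def arm_flags(a: ArmPosture) -> List[str]:
    """Return the arm-posture risk factors present; an empty list means none."""
    flags = []
    if a.elbow_flexion_deg > ELBOW_FLEXION_LIMIT_DEG:
        flags.append("elbow flexed beyond 90 degrees")
    if a.forearm_rotation_deg >= FOREARM_ROTATION_LIMIT_DEG:
        flags.append("forearm near full supination")
    elif a.forearm_rotation_deg <= -FOREARM_ROTATION_LIMIT_DEG:
        flags.append("forearm near full pronation")
    if a.shoulder_rotation_deg > SHOULDER_EXTERNAL_LIMIT_DEG:
        flags.append("shoulder externally rotated")
    return flags


print(arm_flags(ArmPosture(80.0, 0.0, 0.0)))     # []
print(arm_flags(ArmPosture(110.0, -80.0, 0.0)))  # ['elbow flexed beyond 90 degrees', 'forearm near full pronation']
```

The 70-degree rotation threshold is a placeholder. Normal joint-range references, such as the clinical joint-motion manual in the reference list, could inform a better-grounded value.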

Acknowledgments

The authors thank Emily Hertzer for her assistance with data collection and the sign language interpreters for participating in the project.

References

- Butterworth RR, Flodin M. The Perigee visual dictionary of signing: an A-to-Z guide to over 1,250 signs of American sign language. Perigee Books; New York, NY: 1991.

- Cerveri P, De Momi E, Lopomo N, Baud-Bovy G, Barros RM, Ferrigno G. Finger kinematic modeling and real-time hand motion estimation. Ann Biomed Eng. 2007;35:1989–2002. doi: 10.1007/s10439-007-9364-0.

- Cohn L, Lowry RM, Hart S. Overuse syndromes of the upper extremity in interpreters for the deaf. Orthopedics. 1990;13:207–9. doi: 10.3928/0147-7447-19900201-11.

- DeCaro JJ, Feuerstein M, Hurwitz TA. Cumulative trauma disorders among educational interpreters. Am Ann Deaf. 1992;137:288–92. doi: 10.1353/aad.2012.0483.

- Feuerstein M, Fitzgerald TE. Biomechanical factors affecting upper extremity cumulative trauma disorders in sign language interpreters. J Occup Med. 1992;34(3):257–64. doi: 10.1097/00043764-199203000-00009.

- Feuerstein M, Carosella AM, Burrell LM, Marshall L, Decaro J. Occupational Upper Extremity Symptoms in Sign Language Interpreters: Prevalence and Correlates of Pain, Function, and Work Disability. J Occup Rehab. 1997;7:187–205.

- Fikkert W, van der Vet P, van der Veer G, Nijholt A. Gestures for large display control. In: Kopp S, Wachsmuth I, editors. Gesture in Embodied Communication and Human-Computer Interaction. Springer; Berlin/Heidelberg: 2010. pp. 245–256. (volume 5934 of Lecture Notes in Computer Science).

- Fischer SL, Marshall MM, Woodcock K. Musculoskeletal disorders in sign language interpreters: a systematic review and conceptual model of musculoskeletal disorder development. Work. 2012;42(2):173–84. doi: 10.3233/WOR-2012-1342.

- Greene WB, Heckman JD. The Clinical Measurement of Joint Motion. 1st ed. American Academy of Orthopaedic Surgeons; Chicago, IL: 1994.

- Gustason G, Pfetzing D, Zawolkow E. Signing Exact English. Modern Signs Press; Los Alamitos, CA: 1980.

- Horton TC, Sauerland S, Davis TR. The effect of flexor digitorum profundus quadriga on grip strength. J Hand Surg Eur. 2007;32(2):130–4. doi: 10.1016/J.JHSB.2006.11.005.

- Keir PJ, Bach JM, Rempel DM. Effects of finger posture on carpal tunnel pressure during wrist motion. J Hand Surgery. 1998;23A:1004–1009. doi: 10.1016/S0363-5023(98)80007-5.

- Kray C, Nesbitt D, Dawson J, Rohs M. User-defined gestures for connecting mobile phones, public displays, and tabletops; Proc 12th international conference on Human computer interaction with mobile devices and services, MobileHCI ’10; 2010. pp. 239–248.

- Lester LE, Bevins JW, Hughes C, Rai A, Whalley H, Arafa M, Shepherd DE, Hukins DW. Range of motion of the metacarpophalangeal joint in rheumatoid patients, with and without a flexible replacement prosthesis, compared with normal subjects. Clin Biomechanics. 2012;27(5):449–52. doi: 10.1016/j.clinbiomech.2011.12.010.

- Manresa G, Varona J, Mas R, Perales FJ. Hand tracking and gesture recognition for human-computer interaction. Elect Let on Comp Vis and Image Anal. 2005;5(3):96–104.

- Mistry P, Maes P, Chang L. Wuw – wear ur world: a wearable gestural interface; Proc. 27th international conference extended abstracts on Human factors in computing systems, CHI EA ’09; 2009. pp. 4111–4116.

- Ni T, Bowman DA, North C, McMahan RP. Design and evaluation of freehand menu selection interfaces using tilt and pinch gestures. Int J Human-Computer Studies. 2011;69:551–562.

- Nielsen M, Störring M, Moeslund TB, Granum E. A Procedure for Developing Intuitive and Ergonomic Gesture Interfaces for HCI. Proc Gesture-Based Communication in Human-Computer Interaction 2004. 2004:105–106.

- Qin J, Marshall M, Mozrall J, Marschark M. Effects of pace and stress on upper extremity kinematic responses in sign language interpreters. Ergonomics. 2008;51:274–89. doi: 10.1080/00140130701617025.

- Radhakrishnan S, Ling Y, Zeid I, Kamarthi S. Finger-based multitouch interface for performing 3D CAD operations. Int J Human-Computer Studies. 2013;71:261–275.

- Rempel DM, Bach J, Gordon L, Tal R. Effects of forearm pronation/supination on carpal tunnel pressure. J Hand Surgery. 1998;23(1):38–42. doi: 10.1016/S0363-5023(98)80086-5.

- Ruiz J, Li Y, Lank E. User-defined motion gestures for mobile interaction. Proc Human factors in computing systems 2011. 2011:197–206.

- Scheible J, Ojala T, Coulton P. Mobitoss: a novel gesture based interface for creating and sharing mobile multimedia art on large public displays; Proc. 16th ACM international conference on Multimedia, MM ’08; 2008. pp. 957–960.

- Scheuerle J, Guilford AM, Habal MB. Work-related cumulative trauma disorders and interpreters for the deaf. Appl Occup Environ Hyg. 2000;15:429–34. doi: 10.1080/104732200301386.

- Smith SM, Kress TA, Hart WM. Hand/wrist disorders among sign language communicators. Am Ann Deaf. 2000;145:22–25. doi: 10.1353/aad.2012.0235.

- Stern HI, Wachs JP, Edan Y. Designing hand gestures vocabularies for natural interaction by combining psycho-physiological and recognition factors. Int J Semantic Computing. 2008;2(1):137–160.

- Wan S, Nguyen HT. Human computer interaction using hand gesture. Proc IEEE Eng Med Biol Soc 2008. 2008:2357–60. doi: 10.1109/IEMBS.2008.4649672.

- Wenhui W, Xiang C, Kongqiao W, Xu Z, Jihai Y. Dynamic gesture recognition based on multiple sensors fusion technology. Proc IEEE Eng Med Biol Soc. 2009:7014–7. doi: 10.1109/IEMBS.2009.5333326.

- Wobbrock JO, Morris MR, Wilson AD. User-defined gestures for surface computing. Proceedings of the 27th international conference on Human factors in computing systems, CHI ’09; 2009. pp. 1083–1092.

- Wright M, Lin CJ, O’Neill E, Cosker D, Johnson P. 3D Gesture Recognition: An Evaluation of User and System Performance. Proc Pervasive 2011. 2011:294–313.

- Wu A, Hassan-Shafique K, Shah M, da Vitoria Lobo N. Virtual three-dimensional blackboard: three-dimensional finger tracking with a single camera. Appl Opt. 2004;43:379–90. doi: 10.1364/ao.43.000379.

- Yatani K, Tamura K, Hiroki K, Sugimoto M, Hashizume H. Toss-it: Intuitive information transfer techniques for mobile devices. Proc CHI ’05. 2005:1881–1884.

- Yoo J, Hwang W, Seok H, Park S, Kim C, Park K. Cocktail: Exploiting bartenders’ gestures for mobile interaction. Image. 2010;2(3):44–57.