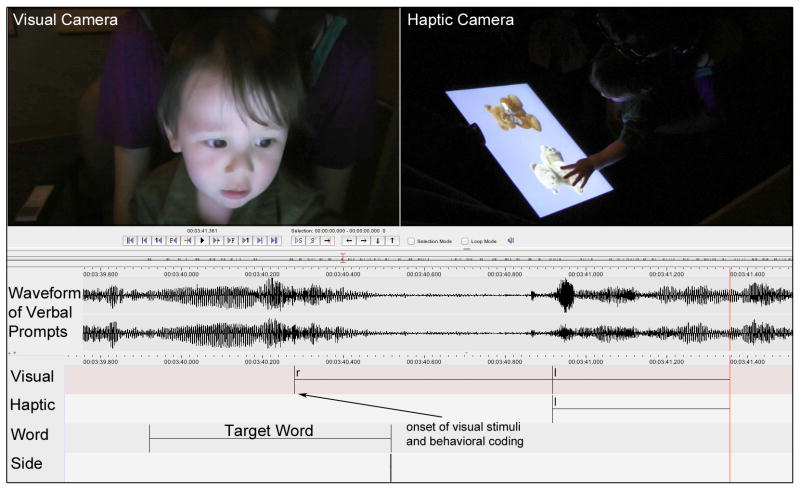

Figure 1.

Eudico Linguistic Annotator (ELAN) coding setup. The waveform of the experimenter’s prompt was extracted from the recording made by the video camera that captured visual behavior, and was then synchronized with the video from the visual and haptic cameras. Coding was done on four tiers. On the Visual tier, the onset of the visual stimuli and looking behavior were coded: right look (r), left look (l), or away look (a). On the Haptic tier, the onset and offset of the haptic response and the direction of the touch (r or l) were coded. On the Word tier, the onset and offset of the target word in the first sentence prompt were marked by viewing the waveform and using frame-by-frame auditory analysis. Finally, on the Side tier, the side (left or right) on which the target word appeared was coded and hidden from view. Behavioral coding began at the onset of the visual stimulus, which occurred ~238 ms after the target word in the first sentence prompt was uttered.