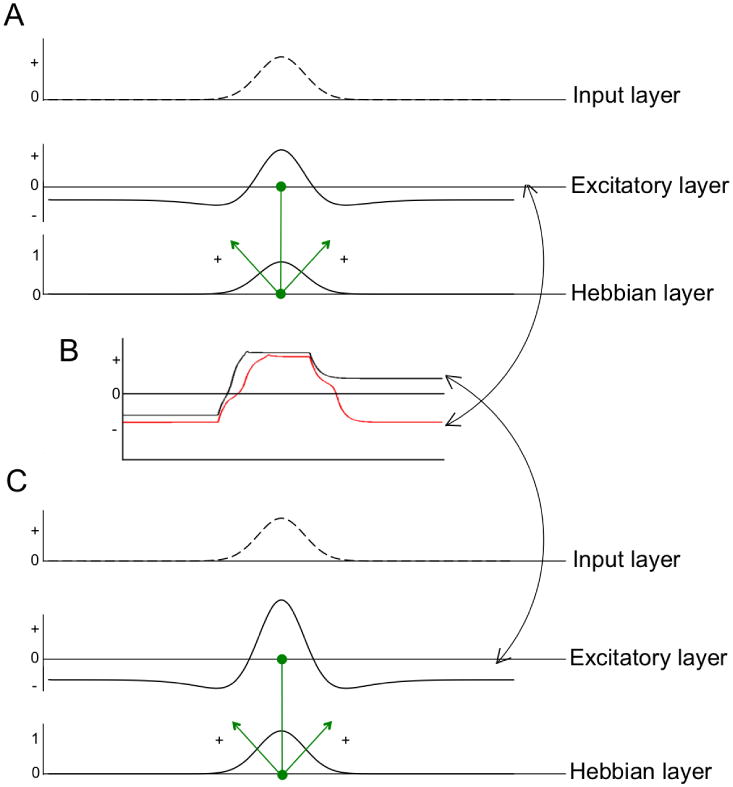

Figure 3.

A variation of the three-layer DNF architecture, showing the input to the model, a layer of excitatory neurons, and a Hebbian layer. Neurons in the excitatory layer are connected one-to-one to neurons in the Hebbian layer (green connections), and these Hebbian sites project activation back to the excitatory layer. When the excitatory layer receives a relatively weak input, activation from the Hebbian layer can help create a self-stabilized peak (panel A; red line in panel B). With slightly more Hebbian activation, the weak input can create a self-sustaining peak in the excitatory layer (panel C; black line in panel B).
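The qualitative behavior described in this caption can be illustrated with a minimal one-dimensional sketch. It is not the model used to generate the figure, and it rests on several simplifying assumptions: inhibition is folded into a single interaction kernel (local excitation minus a constant global inhibition) rather than simulated as a separate layer, the Hebbian layer is reduced to a fixed Gaussian trace at the input location, and every name and parameter value (`H_REST`, `C_EXC`, `hebb_strength`, and so on) is hypothetical and chosen only for illustration.

```python
import numpy as np

# Illustrative parameters (assumptions, not values from the source).
N = 101            # number of field sites
X = np.arange(N)   # site positions
CENTER = 50        # location of both the input and the Hebbian trace
TAU, DT = 10.0, 1.0
H_REST = -5.0      # resting level of the excitatory layer
BETA = 5.0         # steepness of the sigmoid output function
INPUT_AMP = 4.0    # "weak" input: H_REST + INPUT_AMP stays below threshold (0)
SIGMA = 3.0        # width shared by input, Hebbian trace, and excitation
C_EXC, C_INH = 1.0, 0.2


def gauss(center, sigma):
    """Unit-amplitude Gaussian profile over the field sites."""
    return np.exp(-((X - center) ** 2) / (2.0 * sigma ** 2))


def sigmoid(u):
    """Output nonlinearity: only sites near or above 0 contribute."""
    return 1.0 / (1.0 + np.exp(-BETA * u))


# Interaction kernel: local excitation minus constant global inhibition.
DIST = np.abs(X[:, None] - X[None, :])
KERNEL = C_EXC * np.exp(-(DIST ** 2) / (2.0 * SIGMA ** 2)) - C_INH


def simulate(hebb_strength, steps=500):
    """Run an input-on phase, then an input-off phase (Euler integration).

    Returns the maximum field activation at the end of each phase.
    """
    u = np.full(N, H_REST)
    hebb = hebb_strength * gauss(CENTER, SIGMA)   # fixed Hebbian trace
    stim = INPUT_AMP * gauss(CENTER, SIGMA)       # weak external input
    maxima = []
    for s in (stim, np.zeros(N)):
        for _ in range(steps):
            du = -u + H_REST + s + hebb + KERNEL @ sigmoid(u)
            u = u + (DT / TAU) * du
        maxima.append(float(u.max()))
    return tuple(maxima)


def classify(hebb_strength):
    """Label the regime by whether a peak exists with and without input."""
    with_input, after_input = simulate(hebb_strength)
    if with_input <= 0.0:
        return "no peak"
    return "self-sustaining" if after_input > 0.0 else "self-stabilized"


if __name__ == "__main__":
    for strength in (0.0, 1.5, 4.0):
        print(strength, classify(strength))
```

Under these assumed parameters, the three trace strengths are intended to fall into three regimes: no peak without Hebbian support, a peak that exists only while the input is present, and a peak that persists after the input is removed. The gap between the two nonzero strengths is wider here than "slightly more" would suggest; it was chosen to keep the sketch robust, not to match the figure.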