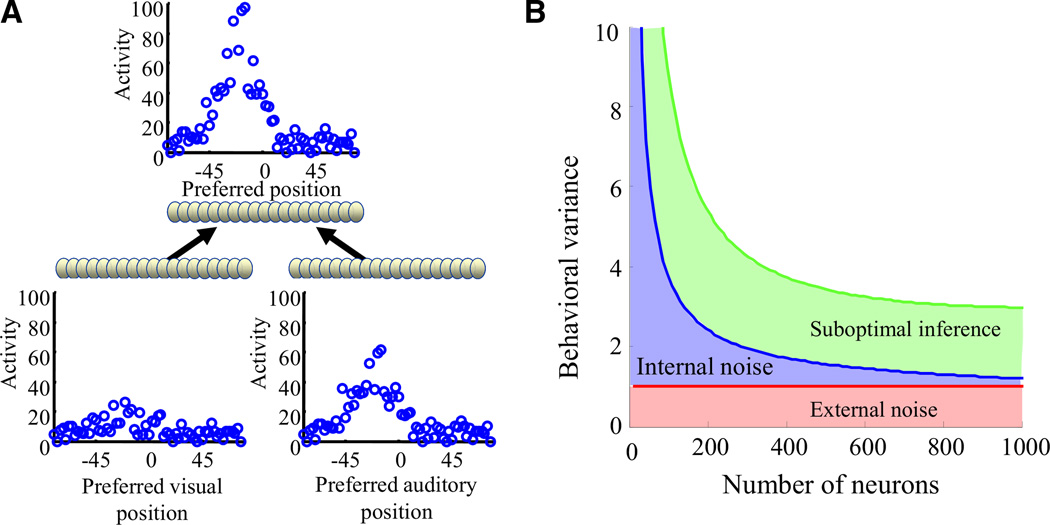

Figure 4. Suboptimal Inference Dominates over Internal Noise in Large Networks.

(A) Network architecture. Two input layers encode the position of an object from visual and auditory information, respectively, using population codes. Typical patterns of activity on a given trial are shown above each layer. These input neurons project onto an output layer that represents the position of the object based on both the visual and the auditory information.

(B) Behavioral variance of the network (modeled as the variance of the maximum likelihood estimate of position based on the output layer activity) as a function of the number of neurons in the output layer. Red line: lower bound on the variance given the information available in the input layers (based on the Cramér-Rao bound). Blue curve: network with optimal connectivity. The increase in variance relative to the red line is due to internal noise in the form of stochastic spike generation in the output layer. The blue curve eventually converges to the red line, indicating that the impact of internal noise is negligible for large networks (the noise is simply averaged out). Green curve: network with suboptimal connectivity. In a suboptimal network, the information loss can be very large. Importantly, this loss cannot be reduced by adding more neurons; that is, no matter how large the network, the variance remains well above the minimum set by the Cramér-Rao bound (red line). As a result, for large networks, the information loss is due primarily to suboptimal inference and not to internal noise.
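
The qualitative behavior in (B) can be illustrated with a toy scalar model. This is a minimal sketch and not the population-code network shown in the figure. It assumes two independent, unbiased Gaussian cues that are combined linearly, and internal noise whose variance shrinks as 1/N with the number of output neurons. The function names, the cue variances, the suboptimal weight, and `noise_scale` are all hypothetical values chosen for illustration.

```python
def combined_variance(w_visual, var_visual, var_auditory):
    """Variance of w*x_v + (1 - w)*x_a for independent, unbiased cues."""
    return w_visual**2 * var_visual + (1.0 - w_visual)**2 * var_auditory


def behavioral_variance(n_output, w_visual, var_visual, var_auditory,
                        noise_scale=1.0):
    """Toy model (assumption): inference variance plus internal noise
    that averages out as 1/N in the number of output neurons."""
    return (combined_variance(w_visual, var_visual, var_auditory)
            + noise_scale / n_output)


# Hypothetical cue variances, not values from the paper.
var_v, var_a = 1.0, 4.0

# Cramer-Rao bound for two independent Gaussian cues: Fisher information adds.
bound = 1.0 / (1.0 / var_v + 1.0 / var_a)

# Optimal (inverse-variance) weight versus an arbitrary suboptimal weight.
w_opt = var_a / (var_v + var_a)
w_sub = 0.5

for n in (10, 100, 1000, 10000):
    v_opt = behavioral_variance(n, w_opt, var_v, var_a)
    v_sub = behavioral_variance(n, w_sub, var_v, var_a)
    print(f"N={n:>5}  bound={bound:.3f}  optimal={v_opt:.3f}  suboptimal={v_sub:.3f}")
```

In this sketch the optimal curve approaches the bound as N grows, while the suboptimal curve levels off at a higher variance that adding neurons cannot remove, mirroring the blue and green curves described above.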