Abstract

Pareto optimization combines independent objectives by computing the Pareto front of its search space, defined as the set of all solutions for which no other candidate solution scores better under all objectives. This gives, in a precise sense, better information than an artificial amalgamation of different scores into a single objective, but is more costly to compute. Pareto optimization naturally occurs with genetic algorithms, albeit in a heuristic fashion. Non-heuristic Pareto optimization so far has been used only with a few applications in bioinformatics. We study exact Pareto optimization for two objectives in a dynamic programming framework. We define a binary Pareto product operator on arbitrary scoring schemes. Independent of a particular algorithm, we prove that for two scoring schemes A and B used in dynamic programming, the scoring scheme correctly performs Pareto optimization over the same search space. We study different implementations of the Pareto operator with respect to their asymptotic and empirical efficiency. Without artificial amalgamation of objectives, and with no heuristics involved, Pareto optimization is faster than computing the same number of answers separately for each objective. For RNA structure prediction under the minimum free energy versus the maximum expected accuracy model, we show that the empirical size of the Pareto front remains within reasonable bounds. Pareto optimization lends itself to the comparative investigation of the behavior of two alternative scoring schemes for the same purpose. For the above scoring schemes, we observe that the Pareto front can be seen as a composition of a few macrostates, each consisting of several microstates that differ in the same limited way. We also study the relationship between abstract shape analysis and the Pareto front, and find that they extract information of a different nature from the folding space and can be meaningfully combined.

Electronic supplementary material

The online version of this article (doi:10.1186/s13015-015-0051-7) contains supplementary material, which is available to authorized users.

Keywords: Pareto optimization, Dynamic programming, Algebraic dynamic programming, RNA structure, Sankoff algorithm

Background

In combinatorial optimization, we evaluate a search space X of solution candidates by means of an objective function . Generated from some input data of size n, the search space X is typically discrete and has size for some . Conceptually, as well as in practice, it is convenient to formulate the objective function as the composition of a choice function and a scoring function, , computing their composition as for the overall solution. The most common form of the objective function is that evaluates each candidate to a score (or cost) value, and chooses the candidate which maximizes (or minimizes) this value. One or all optimal solutions can be returned, and with little difficulty, we can also define to compute all candidates within a threshold of optimality. This scenario is the prototypical case we will base our discussion on. However, it should not go unmentioned that there are other, useful types of “choice” functions besides maximization or minimization, such as computing score sums, full enumeration of the search space, or stochastic sampling from it.

Multi-objective optimization arises when we have several criteria to evaluate our search space. Scanning the pizza space of our home town, we may be looking for the largest pizza, the cheapest, or the vegetarian pizza with the richest set of toppings. When we use these criteria in combination, the question arises exactly how we combine them.

Let us consider two objective functions and on the search space X and let us define different variants of an operator to designate particular techniques of combining the two objective functions.

- Additive combination optimizes over the sum of the candidates scores computed by and . This is a natural thing to do when the two scores are of the same type, and optimization goes in the same direction, i.e. ; we define

In fact, this recasts the problem in the form of a single objective problem with a combined scoring function. This applies, e.g. for real costs (money), which sum up in the end no matter where they come from. Gotoh’s algorithm for sequence alignment under an affine gap model can be seen as an instance of this combination [1]. It minimizes the score sum of character matches, gap openings and gap extensions. Jumping alignments are another example [2]. They align a sequence to a multiple sequence alignment. The alignment always chooses the alignment row that fits best, but charges a cost for jumping to another row. Jump cost and regular alignment scores are balanced based on test data. However, often it is not clear how scores should be combined, and researchers resort to more general combinations.1 - Parametrized additive combination is defined as

Here the extra parameter signals that there is something artificial in the additive combination of scores, and the is to be trained from data in different application scenarios, or left as a choice to the user of the approach. Such functions are often used in bioinformatics [3–7]. For example, the Sankoff algorithm scores joint RNA sequence alignment and folding by a combination of base pairing () and sequence alignment () score [3]. RNAalifold scores consensus structures by a combination of free energy () and covariance () scores [8]. Covariance scores are converted into “pseudo-energies”, and the parameter controls the relative influence of the two score components. This combination often works well in practice, but a pragmatic smell remains. Returning to our earlier pizza space example: It does not really make sense to add the number of toppings to the size of the pizza, or subtract it from the price, no matter how we choose . In a way, the parameter manifests our discomfort with this situation.2 -

Lexicographic combination performs optimization on pairs of scores of potentially different type, not to be combined into a single score.

where optimizes lexicographically on the score pairs . With the lexicographic combination, we define a primary and a secondary objective, seeking either the largest among the cheapest pizzas, or the cheapest among the largest—certainly with different outcomes. This is very useful, for example, when produces a large number of co-optimal solutions. Having a secondary criterion choose from the co-optimals is preferable to returning an arbitrary optimal solution under the first objective, maybe even unaware that there were alternatives.3 Lexicographic and parameterized additive combination are incomparable with respect to their scope. can combine scoring schemes of different types, but cannot optimize a sum of two scores even when they have the same type. does exactly this. Only when both scores have the same type, there may be a choice of big enough such that emulates .

-

Pareto combination must be used in the case when there is no meaningful way to combine or prioritize the two objectives. It may also be useful and more informative in the previous scenarios, producing a set of “optima” and letting the user decide the balance between the two objectives a posteriori.

Pareto optimality is defined via (non-)domination. An element dominates another one, if it is strictly better in one dimension, and not worse in the other. The solution set one computes is the Pareto front of . Taking and as maximization, the Pareto front operator is defined on subsets S of , ordered by and , respectively, as follows:

A set without dominated elements is called a Pareto set. Naturally, every subset of a Pareto set is a Pareto set, too. We define the Pareto combination as4

The Pareto combination is more general than both and . This is obvious for , and is also obvious for when the two scores are of different type. It even holds for when they have the same type, but becomes more subtle. It can be shown that produces all optima that can be produced by for some , but there can also be others. See Theorem 3.1, and we also refer the reader to the careful treatment of this intriguing issue in Schnattinger’s thesis [9].5

The combinations and are common practice and merit no theoretical investigation, as they reduce the problem to the single objective case. The lexicographic combination has been studied in detail in [10]. Aside from its obvious use with primary and secondary objectives, has an amazing variety of applications when of one the two objectives does not perform optimization, but enumeration or summation (cf. above). Furthermore, when computes a classification attribute from the candidates and is the identity, it gives rise to the method of classified dynamic programming used, e.g. in probabilistic RNA shape analysis [11].

Here, we will take a deeper look into Pareto optimization, which has been used in bioinformatics mainly in a heuristic fashion. It naturally arises with the use of genetic algorithms. They traverse the search space improving candidates in a certain dimension, returning a solution when (say) can no longer be increased without decreasing . A genetic algorithm uses different starting points and produces a heuristic subset of the Pareto front of the search space. This approach was used by Zhang et al. [12], who compute the Pareto front to solve a multiple sequence alignment problem. Cofolga2mo [13] is a structural RNA sequence aligner based on a multi-objective genetic algorithm. Cofolga2mo does not look strictly at the Pareto optimal solutions, but produces a heuristic subset of the (larger) set of “weak” Pareto optimal solutions. The objective function is computed on similarity sequence score and consensus structure score (under the base-pairing probabilities model). Only the score of the consensus structure is given, the structure itself is not shown.

A similar approach was used by Taneda [14] to solve the inverse RNA folding problem by computing the Pareto front between the folding energy in the first dimension and the similarity score to the target in the second dimension. In [15], the authors compute the Pareto front for multi-class gene selection. They use a genetic algorithm to avoid the statistics aggregation in gene expression, which could lead to the “siren pitfall” issue [16] when using .

Let us now turn to non-heuristic cases of Pareto optimization. A family of monotonous operators in preordered and partially ordered sets in dynamic programming were defined in [17]. The author showed the principle of optimality applies to the maximal return in the case of Markovian processes. Such processes evolve stochastically over time and do not consume any input. We are aware of only a few cases where Pareto optimization has been advocated within a dynamic programming approach. It was used by Getachew et al. [18] to find the shortest path in a network given different time cost/functions, computing the Pareto front. The Pareto-Optimal Allocation problem was solved with dynamic programming by Sitarz [19]. In the field of bioinformatics, Schnattinger et al. [20, 21] advocated Pareto optimization for the Sankoff problem. Their algorithm computes , where optimizes a sequence similarity score and optimizes base pair probabilities in the joint folding of two RNA sequences.

Libeskind-Hadas et al. [22] used an exact Pareto optimisation by using dynamic programming to compute reconciliation trees in phylogeny. They introduce two binary operators and which stand respectively for set union and set cartesian product, both followed by a Pareto filtration for their specific problem. They optimized AB, where A and B are sets, by sorting A and B in lexicographical order and keeping the resulting Pareto list sorted.

Pareto optimization in a dynamic programming approach raises four specific questions, which we will address in the body of this article.

-

(i)

Does the Pareto combination of two objectives satisfy Bellman’s principle of optimality, the prerequisite for all dynamic programming ("Pareto optimization in ADP")?

-

(ii)

How to compute Pareto fronts both efficiently and incrementally, when proceeding from smaller to larger sub-problems ("Implementation)?

-

(iii)

What is the empirical size of the Pareto front, compared to its expected size ("Applications")?

-

(iv)

What observations can de drawn from a computed Pareto front in a concrete application ("Applications")?

Heretofore, the issues (i) and (ii) had to be solved ad-hoc with every approach employing Pareto optimization. Motivated by and generalizing on the work by Schnattinger et al., we strive here for general insight in the use of Pareto optimization within dynamic programming algorithms. To maintain a well-defined class of dynamic programming algorithms to which our findings apply, we resort to the framework of algebraic dynamic programming (ADP) [23].

Here is a short preview of our findings: we can prove, in a well circumscribed formal setting, that the Pareto combination preserves Bellman’s principle of optimality (Theorem 3.3). Thus, it is amenable to implementation in a dynamic programming framework such as ADP as a “single keystroke” operation. We show that while the search space size is typically exponential, we can expect Pareto fronts of linear size. This is confirmed empirically by our implementations of (covering several algorithmic variants), and we observe that this is actually more efficient that producing a similar number of (near-optimal) solutions with other means. Finally, using our implementations in the field of RNA structure prediction in some (albeit preliminary) experiments, we find that a small Pareto front in joint alignment and folding may be indicative of a homology relationship, and elucidate differences in the MFE and MEA scoring schemes for RNA folding that could not be observed before.

Pareto sets: properties and algorithms

We introduce Pareto sets together with some basic mathematical properties and algorithms ("Pareto sets and the Pareto front operator", "Worst case and expected size of Pareto fronts"). We restrict our discussion to Pareto optimization over value pairs, rather than vectors of arbitrary dimension. Pareto optimization in arbitrary dimension is shortly touched upon in our concluding section.

The operations on Pareto sets that arise in a dynamic programming framework are threefold: taking the Pareto front of a set of sub-solutions ("Computing the Pareto front", "Pareto operator complexity, revisited"), joining alternative solution sets ("Pareto merge in linear time"), and computing new solutions from smaller subproblems by the application of scoring functions ( "Pareto set extension").

Pareto sets and the Pareto front operator

We start from two sets A and B and their Cartesian product . The sets A and B are totally ordered by relations and , respectively. This induces a partial domination relation on C as follows. We have if and , or and . In words, the dominating element must be larger in one dimension, and not smaller in the other. In , an element is dominant iff there is no other element in X that dominates it. A set without dominated elements is a Pareto set. We can restate Eq. (4) in words as: The Pareto front of X, denoted , is the set of all dominant elements in X. The definition of actually depends on the underlying total orders, and we should write more precisely , but for simplicity, we will suppress this detail until it becomes relevant.

The following properties hold by definition and are easy to verify:

| 6 |

| 7 |

| 8 |

| 9 |

Note that is not monotone with respect to . Idempotency of (Eq. 8) justifies the alternative definition: A set is a Pareto set if .

Algorithmically, we represent sets as lists, without duplicate elements. If a list represents a Pareto set, we call it a Pareto list.

A sorted Pareto list, by definition, is sorted lexicographically under in decreasing order. Naturally, on sorted lists, we can perform certain operations more efficiently, which must be balanced against the effort of keeping lists sorted.

The intersection of two Pareto sets is a Pareto set because it is a subset of a Pareto set by (9). This does not apply for Pareto set union, as elements in one Pareto set may be dominated by elements from the other. Therefore, we define the Pareto merge operation

| 10 |

Clearly, inherits commutativity from .

Observation 1

(Pareto merge associativity)

| 11 |

We show that both sides are equal to .

Let . Clearly, , and such that . This holds if and only if there is no such in , nor in C, which is equivalent to . follows by a symmetric argument.

As a consequence, we can simply write . Note that in practice, it may well make a difference in terms of efficiency whether we compute a three-way Pareto merge as or as .

Worst case and expected size of Pareto fronts

In combinatorial optimization, the search space is typically large, but finite. This allows for some statements about the maximal and the expected size of a Pareto front.

Observation 2

(Sorted Pareto lists) A Pareto list sorted on the first dimension based on (i) is also sorted lexicographically by in decreasing order, and at the same time (ii) is sorted lexicographically in increasing order based on .

This is true because when the list l is a Pareto list and , there can be no other element with . Because is a total order, one of the two would dominate the other. Therefore, (i) the overall lexicographic order is determined solely by , and (ii) looking at the values in the second dimension alone, we find them in increasing order of .

This implies a worst-case observation on the size of Pareto fronts over discrete intervals:

Observation 3

(Worst case size of Pareto set) If A and B are discrete intervals of size M, then any Pareto set over has elements.

This is true because by Observation 2, each decrease in the first dimension must come with an increase in the second component.

Observation 4

On random sets, the expected size of the Pareto front of a set of size N follows the harmonic law [24, 25],

| 12 |

Computing the Pareto front

We specify algorithms to compute Pareto fronts from unsorted and sorted lists. In our pseudocode, denotes the empty list, x : l denotes a list with first element x and remainder list l, and vice versa for l : x. The arrow indicates term rewriting or state transition.

From an unsorted list

An obvious possibility is to sort the list by an sorting algorithm, and then compute the Pareto front by one of the algorithms for sorted lists specified below. We call this implementation of the Pareto front operator .

However, it is also interesting to combine the two phases. We present a Pareto-version of insertion sort, asymptotically in , but potentially fast in practice, because it effectively decreases N already during the sorting phase by eliminating dominated elements.

Pareto front operator

The definition of the remove function makes use of the inductive property that the list l is already a (sorted) Pareto list, and by our above observation, it is increasing in the second dimension. Hence, we remove one dominated element in each application of the last rule, and terminate when the second rule is applied. All the steps of remove are productive in the sense that they reduce the list length for subsequent calls to into.

From a lexicographically sorted list

From a sorted list, the Pareto front can be extracted in linear time [26]. We describe such an algorithm by a state transition system, which transforms an input and an (initially empty) output list into empty input and the Pareto front as output and call it

Since the input list is shortened by one element in each step, this algorithm runs in O(N).

A smooth Pareto front algorithm for the general case

We can adapt the algorithm to the general case by adding two clauses for elements that appear out of order{:}

We extended by the following rules to obtain :

where the function up(x, (a, b)) inserts the new pair from the low end into the Pareto list x:

Like our first algorithm , handles the general case in quadratic time, but smoothly adapts to sorted lists, becoming the same as when all elements are in order.

Unsorted Pareto front computation

Our previous implementations all compute the Pareto front in the form of a sorted list. However, in dynamic programming, solution sets are created in various ways and arise not necessarily sorted, even when sub-solutions are given on sorted order. Hence, it may be attractive to consider an algorithm that does not bother about sorting at all, consumes and produces unsorted lists. We call this variant .

This resembles without sorting the output, and hence the resulting list must always be traversed completely for each element added. Worst case complexity is .

Pareto operator complexity, revisited

For a more detailed complexity analysis of the above algorithms, we must distinguish the size of input and output. In our dynamic programming applications, we will compute , where f is some local scoring function. If the Pareto sets X and Y have size n, then is of size , while the final result can be expected to be smaller again.

For a list of size N, the result of has size N in the worst case. In the expected case, however, output size is H(N) (Eq. 12), and because [24], we can asymptotically treat it as . Our observations are summarized in Table 1.

Table 1.

Complexities of operators

| Operator | Worst case | Expected case |

|---|---|---|

| O(N) | O(N) | |

The operator has the best complexity in both worst and expected case, but it also makes the strongest assumptions. In the expected case, and even asymptotically catch up with , whose separate sorting phase gets no benefit from the elimination of dominated elements.

In a dynamic programming approach, the operation is executed in the innermost loop of the program, and therefore, constant factors are also relevant. In particular, becomes interesting as it makes the weakest assumption by not requiring lists to be sorted at any time, in contrast to . We will return to this aspect with our applications.

Pareto merge in linear time

We now specify an implementation of the Pareto merge operation which makes use of the fact that its arguments are Pareto sets, represented as lists in decreasing order by the first component (and in increasing order by the second).

The function walks down a list l until it finds an element that does not satisfy the predicate p. It returns this element and the remaining list. We use it to eliminate elements smaller than b (resp. d) in the second dimension. At first glance, the combination of and reminds of an algorithm, but this is not true. For input lists of length and , where , the output list has at most length N. It requires at most O(N) calls to . requires calls when it deletes k elements, with . However, each element deleted by safes a subsequent call to . Overall, the number of steps remains within O(N).

Pareto set extension

Dynamic programming is governed by Bellman’s principle of optimality, which the objective function must obey. Choice must distribute over scoring, which is computed incrementally from smaller to larger sub-solutions. The score is computed by a combination of local scoring functions . In Pareto optimization, f takes for form . For each such function f,

| 13 |

must hold. (The above equation is formulated here for the simplest case: a unary function f and a choice function that returns a singleton result). This requirement implies the functions f to be strictly monotone in each argument that is a subproblem result. (The score functions may take other arguments, too, which are taken from the problem instance).

By Pareto set extension we mean the computation of , , and so on for more arguments. On the partially ordered set , we call f strictly monotone if and are strictly monotone on A and B, respectively.

Lemma 2.1

Pareto set extension. The extension of a Pareto set under a strictly monotone, unary functionfis a Pareto set.

Proof

We must show that f(X) holds no dominated elements. Assume f(X) holds a dominated element , dominated by some f((a, b)). We have or . Strict monotonicity implies or , implying in contradiction to the prerequisite that X is a Pareto set.

The same reasoning does not apply for functions f with multiple arguments. Let . We have where and this extension is not a Pareto set.

Dressing up Pareto optimization for dynamic programming, we must (i) formulate conditions under which fulfills Bellman’s principle, and (ii) show how the Pareto front of the overall solution can be computed incrementally and efficiently from Pareto fronts of sub-solutions, using a combination of the techniques introduced above. Heretofore, these issues had to be resolved with every dynamic programming algorithm that uses Pareto optimization, such as the one by Sitarz or Schnattinger et al. [19, 20]. Striving for general results for a whole class of algorithms, we resort to the framework of ADP.

Pareto optimization in ADP

Algebraic dynamic programming (ADP) is a framework for dynamic programming over sequential data. Its declarative specifications achieve a perfect separation of the issues of search space construction, tabulation, and scoring, in clear contrast to the traditional formulation of dynamic programming algorithms by matrix recurrences. Therefore, ADP lends itself to the investigation of Pareto optimization in dynamic programming in general, i.e. independent of a particular DP algorithm. The base reference on ADP is [23] and the lexicographic product was introduced in [10]. ADP in practice is supported by implementations of the framework embedded in Haskell [27] or as an independent domain-specific language and compiler in the Bellman’s GAP system [28, 29]. The results in the present article suggest to extend these systems by a generic Pareto product on evaluation algebras, i.e. to provide the operator as a language feature.

In this section, we recall the basic definitions of ADP (Signatures, evaluation algebras, and tree grammars), in order to relate the Pareto product to other product operators (Relation between Pareto and other products) and prove our main theorem (Preservation of Bellman's principle by the Pareto product). We also show a hand-crafted case of a Pareto product, and its reformulation in ADP (A hand-crafted use case of the Pareto product).

Algebraic framework

Signatures, evaluation algebras, and tree grammars

Let be an alphabet and the set of finite strings over . A signature is a set of function symbols and a data type place holder (also called a sort) S. The return type of an is S, each argument is of type S or . denotes a term language described by the signature and is the term language with variables from the set V. A -algebra or interpretationA is a mathematical structure given by a carrier set for S and functions operating on this set for each , consistent with their specific type. Interpreting by A is denoted A(t) and yields a value in . An evaluation algebraA is a -algebra augmented with an objective function, where square brackets denote multisets.

A regular tree grammar over a signature is defined as tuple , where V is the set of non-terminal symbols, is an alphabet, Z is the axiom and P a set of production rules. Each production is of form

| 14 |

The regular tree language of is the subset of that can be derived from Z by the rules in P.

ADP semantics

For input sequence z, the tree grammar defines the search space of the problem instance

| 15 |

where yield is the function returning the alphabet symbols decorating the leaves of the tree. (For our present purpose, the reader needs not worry about technical details and can take tree grammars as black-box generators of the search space).

Given a tree grammar , an evaluation algebra A with choice function , and input sequence z, an ADP problem is solved by computing

| 16 |

While this declarative formulation suggests a three-phase computation—construct X, evaluate to A(X), choose from it via —an ADP compiler adds in the amalgamation of these three phases, as it is typical for dynamic programming. For this dynamic programming machinery to work correctly, the algebra A must satisfy Bellman’s principle of optimality, stated in full generality [30] by the requirements

| 17 |

| 18 |

| 19 |

where the denote multisets (reflecting that the same intermediate result can be found several times; this is why we write instead of ) and is any function from the underlying signature. Note that for nullary functions (constants) (17) is trivially satisfied as it simplifies to the identity

| 20 |

When the choice function maximizes or minimizes over a total order, Bellman’s principle implies the strict monotonicity of the scoring functions (Lemma 3.2 below). When only some maximal or minimal solution is sought, one could relax this condition to weak monotonicity, but when all optimal solutions, or even near-optimals are desired, monotonicity must be strict [31]. The formulation given above is more general than the monotonicity requirement, as it also applies to arbitrary objective functions where there may be no maximization or minimization involved, such as candidates counting or enumeration.

Products of algebras

Combinations of multiple optimization objectives can be expressed in ADP by products of algebras. For all variants of the product operator , we define

| 21 |

These functions compute independently scores in the Cartesian product of A and B. By contrast, objective functions are combined in different ways by different product operators.

The lexicographic product, for example, is an evaluation algebra over and the objective function of is:

In this formula, set(X) reduces the multiset X to a set. So, implements the lexicographical ordering of the two independent criteria as its objective. Aside from , Bellman’s GAP also implements a Cartesian and (in a restricted form) a so-called “interleaved” product. To our knowledge, a Pareto product operator has not yet been considered for inclusion in ADP compilers.

Relation between Pareto and other products

As we show next, Pareto optimization can rightfully be considered as the most general of the combinations discussed here. This holds strictly in the sense that from the Pareto front, the solutions according to the other combinations can be extracted.

Theorem 3.1

(Pareto front subsumption) For any grammar, scoring algebrasA andB satisfying Bellman’s principle, and input sequencex, consider the algebra combinations, , and.

Proof

Let t be the optimal candidate chosen by the left-hand side. Its score is , by (7) and by the definition of (). This score is maximal. Hence, candidate t must be in the Pareto front computed on the right-hand side, represented by the Pareto-optimal pair (A(t), B(t)). It could only be missing in the Pareto front if there was another candidate dominating t, i.e. with and or vice versa. But then, we would have , contradicting the optimality of t. Conversely, no candidate in the Pareto front can score strictly higher that t, because then, this candidate would have been returned instead of t.

The same reasoning applies to the lexicographic combination.

The above argument is formulated for an application of the choice function to a complete search space of candidates. By virtue of Bellman’s principle satisfied by A, B, and their products, the argument inductively holds (by structural induction on the candidates involved) when the choice functions are applied at intermediate steps during the dynamic programming computation. That also satisfies Bellman’s principle will be shown in our main theorem.

A hand-crafted use case of the Pareto product

Any dynamic programmer can hand-craft a Pareto product from two evaluation algebras. This requires a substantial programming and debugging effort. Our intention is that this human effort can avoided by a general technique delegated to an ADP compiler. This idea was inspired by the work of Schnattinger et al. [20, 21]. Their algorithm computes via dynamic programming the Pareto front for the “Sankoff problem” of joint RNA sequence alignment and consensus structure prediction [3]. This is their algorithm in facsimile:

| 22 |

Here, is the probability that i and j be paired in the sequence X. This is computed independently of the alignment score, which is composed of , the gap penalty and , the alignment score between the jth base in X and the lth base in Y.

The authors demonstrated their algorithm respects Bellman’s principle and correctly computes the Pareto front of its search space. The proof essentially uses the fact that both scores are additive. They showed that all Pareto solutions are generated by the algorithm and that no Pareto solutions are lost during the computation. They based their proof on monotonicity in order to show that the three first terms of the computation of S(i, j, k, l) consist in summing constant vectors to the current solutions, which leads to the conservation of the dominant solutions. They also showed that the last term needs only dominant solutions contribute to the final result. So, previous deletions of dominated solutions do not lead to a loss of Pareto overall optima. However, in this problem formulation, the general nature of the proof is not easily recognized. We now reformulate this algorithm in the algebraic framework. The correctness of the Pareto optimization then follows from our main theorem below.

Sankoff problem signature and algebras

The signature and algebras used to compute base pair probabilities (PROB) and similarity between two sequences (SIM) are presented in Table 2.

Table 2.

Two evaluation algebras for the Sankoff problem

| SIGNATURE | PROB | SIM | ||

|---|---|---|---|---|

| nil | 0 | 0 | ||

| NoStr (x, y) | x + y | x + y | ||

| Split (x, y) | x + y | x + y | ||

| Pair | x + + | x + + | (*) | |

| Ins | 0 | |||

| Del | 0 | |||

| Match | 0 | |||

| Max | Mx | |||

The function returns the probability that the bases x and y be paired. These base pair probabilities are computed as a preliminary step. The functions returns 1 if and else, it returns 0. The function returns the penalties for insertion or deletion, here it is −3. The line marked (*) corresponds to the Eq. 22 of the original algorithm.

Tree grammar for the Sankoff problem

For the Sankoff problem, there are two input sequences, refered to in the form .

Calling the Sankoff program

Calling either or , we either align for maximal similarity, or for maximal base pairing. To solve the problem with Pareto optimization, we call

Preservation of Bellman’s principle by the Pareto product

In this section we present our main theorem, showing that the Pareto product always preserves Bellman’s principle. For the Pareto product to apply, we have the prerequisite that algebras A and B both maximize over a total order. In this situation, Bellman’s principle specializes as follow:

Lemma 3.2

If maximizes over a total order, Eq. (17) implies that allk-ary functionsf for are strictly monotone with respect to each argument.

Proof

Assume Eq. (17) holds and max stands for . If strict monotonicity was violated, there would be a value pair such that , but , with all other arguments of f unchanged. Then , whereas max[f(x), f(y)] is either [f(x), f(y)] if both are equal, or otherwise it is [f(y)]. In either case, (17) is violated.

Theorem 3.3

The Pareto product preserves Bellman’s principle.

Proof

Under the premise that algebras A and B satisfy Bellman’s principle of optimality, we must show that satisfies Eqs. (17–19). The algebra functions in the product algebra are , cf. (21), and the choice function is .

satisfies (19). This is a trivial consequence of Eq. (6).

satisfies (18). We have to show that

where is short for . W.l.o.g assume . The element is in if and only if it is not dominated by any other element in . This implies , and x is not dominated by an element in . Hence, . Conversely, is not in if and only if it is dominated by some element . Because of transitivity of , it will be also dominated by a dominant element in X or Y, which is a member of or , respectively. Hence, x is not in .

satisfies (17). If is a constant (nullary) function, it satisfies (17) because of (20).

For the other functions, we have to show that

with short for . It is clear that the right-hand side is a subset of the left-hand side, so we only have to show that no dominating elements are lost. From Lemma 3.2 we know that and are strictly monotone in each argument position. Now consider . With all other arguments equal, if and only if , and the same for . We conclude that if and only if , and hence is strictly monotone with respect to the partial ordering . If in and hence , then the element will not be considered on the left-hand side. But anyway, it would be dominated by and could not enter the overall result.

While our theorem guarantees that satisfies Bellman’s principle under the above prerequisites, an ADP compiler providing the operation on evaluation algebras cannot check these prerequisites. In general, it cannot prove that and maximize over a total order, nor can it ensure strict monotonicity. However, there may be obvious abuses of that a compiler can safeguard against.

Implementation

The Pareto product can be implemented simply by providing the Pareto front operator as the choice function for the algebra product . In this case, the results of can be represented as sorted or unsorted lists. A more ambitious implementation would monitor the status of intermediate results as lexicographically sorted lists, to take advantage of the more efficient Pareto front operator or on sorted lists.

We will describe these implementation options by means of an example production which covers the relevant cases. A tree grammar describing an ADP algorithm has an arbitrary number of productions, but their meaning is independent.

Let f, g, and h be a binary, an unary and a nullary scoring function from the underlying signature. A tree grammar rule such as  specifies the computation of partial results for a subproblem of type W from partial results already computed from subproblems of types X and Y, of type Z, or for an empty subproblem via a (constant) scoring function h. It is important to have a binary operation in our example, as this type of Pareto set extension does not perserve the Pareto property, and hence is a more difficult case (cf. Lemma 2.1). Beyond this, signature functions may have arbitrary arity, and trees on the right-hand side can have arbitrary height. These cases can be handled in analogy to what we do next.

specifies the computation of partial results for a subproblem of type W from partial results already computed from subproblems of types X and Y, of type Z, or for an empty subproblem via a (constant) scoring function h. It is important to have a binary operation in our example, as this type of Pareto set extension does not perserve the Pareto property, and hence is a more difficult case (cf. Lemma 2.1). Beyond this, signature functions may have arbitrary arity, and trees on the right-hand side can have arbitrary height. These cases can be handled in analogy to what we do next.

We use the nonterminal symbols also as names for the list of subproblem solutions derived from them. Hence, we compute a list of answers from and so on. Note that h denotes a constant list, in most cases a singleton, but not necessarily so. We do not have to worry about indexing subproblems and dynamic programming tables, as this is added by the standard ADP machinery.

Standard implementation

Candidate lists are created by terminal grammar rules, by extension of intermediate results with scoring functions, and by union of answers from alternative rules for the same nonterminal.

We describe the standard implementation by three operators , respectively pronounced “extend”, “combine” and “select”.

Let , and let denote the powerset of C. Elements of are simply lists over C in our implementation. denotes functions over subsets of C, such as our choice functions. Our operators have the following types:

| 23 |

| 24 |

| 25 |

| 26 |

The type of is overloaded according to the arity of its function argument, which is arbitrary in general. For our exposition, we need only arities 1 and 2. (This flexible arity overloading explains why we do not use infix notion with .) The operators are defined as follows:

| 27 |

| 28 |

| 29 |

| 30 |

Operator simply applies the choice function to a list l of intermediate results (27), generally the function , and in our specific case. We append lists of solutions with (28), and extends solutions from smaller subproblems to bigger ones (29, 30). Note that there is no requirement on the constant scoring function h. Typically, such a function generates an empty list or a single element anyway. In general however, it may produce a list of alternative answers, and this need not be a Pareto list in the standard implementation.

Using this set of definitions, our example production describes the computation of

Any of our variants can be used for , but not the linear-time , because in (28) and (29), lists come out unsorted.

Lexicographically sorted implementation

This implementation defines the operators and such that they keep intermediate lists sorted. As a consequence, the Pareto front operator can be replaced by the more efficient .

| 31 |

| 32 |

| 33 |

| 34 |

| 35 |

The function merge merges two sorted lists in linear time, such that the result is sorted, and foldrmerge does so iteratively for a list of sorted lists.

We show by structural induction that all intermediate solution lists are sorted. Eq. (35) covers the base case. It requires that a constant function such as h produces its answer list in sorted form. Now consider the recursive cases, assuming that lists X and Y are sorted. In Eq. (34), the constructed list is sorted. This follows from the prerequisite that algebras A and B satisfy Bellman’s principle, and hence g is monotonic in both algebras. Thus, given a sorted list X, the list is also sorted lexicographically (Lemma 2.5). In Eq. (33), the above monotonicity argument holds for each of the lists for fixedy. Lists for different are merged, which results in an overall sorted list. This also takes linear time. In Eq. (32), two sorted lists are merged into a sorted list. In Eq. (31), finds a sorted list and reduces it to a Pareto list, which by definition is sorted (cf. 2.2).

Pareto-eager implementation

The standard implementation applies a Pareto front operator after constructing a list of intermediate results. This list is built and combined from several sublists. By our main theorem, the Pareto front operation distributes over combinations of sublists, so we can integrate the operator into the operator. This has the effect that sizes of intermediate results are reduced as early as possible. We define our operators as follows:

| 36 |

| 37 |

| 38 |

| 39 |

| 40 |

Again we argue by structural induction over the candidates in the search space. As the base case, h must produce Pareto lists as initial answers (Eq. 40). The operation in Eq. (37) can assume argument lists to be Pareto lists already. In Eq. (39), the new list must be a Pareto list due to the extension Lemma 2.5. In Eq. (38), the same holds for each intermediate list for each y, and we can merge them successively. Finally, the operator skips the computation of the Pareto front, as by induction, all the lists that arise at this point are Pareto lists already.

In "Pareto merge in linear time", we showed that can be implemented in O(N), and therefore, each step in the Pareto-eager implementation takes linear time. This means that Pareto optimization incurs no intrinsic overhead, compared to a single objective which returns a comparable number of results. This is an encouraging insight, but leaves us one aspect to worry about: The size N of the Pareto front which is computed from an input sequence of length n.

Runtime impact of Pareto front size

For a typical dynamic programming problem in sequence analysis, an input sequence of length n creates an exponential search space of size . Still, by tabulation and re-use of intermediate subproblem solutions, dynamic programming manages to solve such a problem in polynomial time, say . The value of r depends on the nature of the problem, and when encoded in ADP, it is apparent as a property of the grammar which describes the problem decomposition [23]. We have for simple sequence alignment, for simple RNA structure prediction, to for RNA structures including various classes of pseudoknots, and so on. This all applies when a single, optimal result is returned.

For ADP algorithms returning the k best results, complexity must be stated more precisely as . As long as k is a constant, such as in k-best optimization, this does not change the asymptotics. However, computing all answers within p percent of the optimal score may well incur exponential growth of k. Probabilistic shape analysis of RNA has a runtime of with , because the number of shape classes grows exponentially with sequence length [11, 32].

With Pareto optimization, the size k of the answer set is not fixed in beforehand. The size of the Pareto front, for a set of size N, is expected to be H(N) (cf. "Pareto sets and the Pareto front operator"). Using and [24], we can expect an effective size of the result sets in O(n). Taking all things together, we can compute the Pareto front for an (algebraic) dynamic programming problem in expected time, where n is input length and r reflects the complexity of the search space.

In applications, the size of the Pareto front needs not to follow expectation. We may achieve efficiency of where . Fortunately, in the application scenario of the next section, we find ourselves in this positive situation.

Applications

Evaluation goals

In our applications reported here, we persue a twofold goal. (i) Our foremost goal is to determine whether Pareto optimization is practical in some real-world applications. This includes the assessment of constant factors of alternative implementations of the Pareto front operator . And (ii), we want to demonstrate that Pareto optimization allows us to draw interesting observations about the relative behaviour of two scoring schemes competing for the same purpose. Our applications are taken from the domain of RNA secondary structure prediction. One is the Sankoff problem of simultaneous alignment and folding of two RNA sequences, introduced already above. The two scoring systems are sequence similarity versus base pair probabilities. Our second application choice is the single sequence structure prediction problem, using two alternative scoring functions. One is MFE, the classical minimum free energy folding approach, based on a thermodynamic nearest-neighbour model with about thousand parameters. The other one is MEA, maximum expected accuracy folding, which is a recent refinement of MFE folding. By this method, the MFE approach is first used to calculate base pair probabilities for the folding space of the given sequence, and in a second phase, the structure is determined which maximises the accumulated base pair probabilities.

Our first hypothesis addresses efficiency issues.

Hypothesis A

Pareto optimization in a realistic scenario is not more expensive than other approaches calculating a similar amount of alternative answers.

We assess hypothesis A in "Runtime and memory measurements" by computing the trade-off between the different Pareto front implementations described in "Implementation". Emprical Pareto front sizes are reported in "Pareto front size". We use the MFE versus MEA application for all these measurements. An interesting occurence of worst case behaviour for Schnattinger’s variant of the Sankoff algorithm is analyzed in "Anti-correlation and real worst case behaviour".

For a more biologically inspired assessment, we chose the Sankoff problem to test

Hypothesis B

A small Pareto front is indicative of a strong biological signal of homology.

This assessment is shown in "Pareto solutions in the Sanko algorithm".

We try to get insight into the relationship of the structures that make up the Pareto front, coming back to the application of MFE versus MEA folding.

Hypothesis C

The Pareto front of MFE versus MEA is comprised of a small number of macrostates, accompanied by essentially the same corona of microstates.

Akin to Pareto optimization, abstract shape analysis of RNA can give us an “interesting” set of alternative foldings [11]. They are characterized by the best-scoring structures having different abstract shapes. This idea being perfectly orthogonal to Pareto optimization, we give some attention to the question how abstract shapes and Pareto optima are related. Here we test the

Hypothesis D

Abstract shape analysis and Pareto optimization produce about the same set of alternative “interesting” structures.

In the evaluation of hypotheses A–D, our test data for the MFE/MEA application consists of 331 RNA sequences of length 12–356 nucleotides, extracted from the full data set used in [33]. The data set is available with the supplementary material. For the Sankoff problem, we use sequences from two Rfam families. We use PreQ1 RNA sequences () and IRE RNA sequences () extracted from the core data set of the Rfam database [34].

Algorithms implemented

We give a short sketch of how our algorithms are implemented. For each application, we can re-use grammars and algebras from the RNAshapes repository [35]. It is just the Pareto optimization which is new.

For the standard implementation, we tested the variants , , , and (cf. "Computing the Pareto front"). Fortunately, the standard implementation can be mimicked in GAP-L without changing language or compilera. However, we can not evaluate the Pareto-eager implementation based on with Bellman’s GAP, as this would imply extension of GAP-L and modification of the sophisticated code generation in the Bellman’s GAP compiler.

In order to compare the Pareto-eager implementation to the others, we resorted to an implementation of ADP as a Haskell-embedded combinator language [23]. First, we added the variants , , and for the standard implementation. Then, we designed a modified set of combinators, corresponding to the outline in "Pareto-eager implementation". (For the expert: The key idea is to exploit monotonicity and compute the set of intermediate results represented as nested lists in the form . For fixed , the sublist is sorted if the list is. This is ensured by structural induction and strict monotonicity of f on its second argument position). While this implementation is significantly slower than Bellman’s GAP code, it suffices to compare the Pareto-eager implementation to its alternatives.

We use available building blocks for the independent optimizations: the RNA folding grammar OverDangle (avoiding lonely base pairs) and the evaluation algebras MFE and MEA. In MFE and MEA, we replace their objective functions by ones that report the k best (near-optimal) structures, where k is a parameter. This allows us to run OverDangle(MFE(k), x) and OverDangle(MEA(k), x), with the choice of k explained further below.

The size of the folding space X for a given sequence x is independent of the optimization we perform. The Bellman’s GAP compiler can automatically produce a counting algebra COUNT, that determines the size of the folding space. We run OverDangle(COUNT, x) on all our test data to get concrete folding space sizes, to be related to the sizes of their Pareto fronts.

The choice of k for a fair evaluation is not obvious. Selecting for MFE and MEA would be unfair, as the Pareto front provides much deeper information. We considered using , but the expected size of the search space is not a good predictor, as |X| varies strongly with the sequence content of x. Therefore, we first run Pareto optimization on x, record the size of the Pareto front for this call, and then set k to this number when computing OverDangle(MFE(k), x) and OverDangle(MEA(k), x).

Runtime and memory measurements

This section and the next are devoted to our

Hypothesis A

Pareto optimization in a realistic scenario is not more expensive than other approaches calculating a similar amount of alternative answers.

We evaluate the performances of the Pareto front computation, using , , , and . Note that all compute the same Pareto front, and hence have the same k in their asymptotics. For a fair comparison with two single-objective algorithms MFE and MEA, we use their versions MFE(k) and MEA(k), computing the k best structures under each objective. Here, k is set to the actual Pareto front size for the given input (which, of course, is only known because before we also compute the Pareto front with the other algorithms). All programs are compiled by the Bellman’s GAP compiler using the same optimization options [29].

In Table 3 we show computation time and memory consumption, accumulated over all sequences and specifically for the longest sequence. These are our main observations:

In terms of runtime, we find that the Pareto optimization performs not only better than the sum of the two independent optimizations, but also better than each of them individually. We attribute this to the fact that the Pareto algorithm adjusts itself to the size of the Pareto front, and this size tendsb to be smaller than k for small sub-problems. The search space itself, however, is exponentially larger than the Pareto front, and even on small sub-words it provides k near-optimals for MFE(k) and MEA(k) to spend computation on. This effect is strongest for our longest sequence, where and the ratio of .

The average case behaviour of is superior to all the sorting implementations of . This is an unexpected and interesting observation. We attribute this to a positive randomization effect. Comparing a new element to the extremal points of the Pareto front, maximal in one but minimal in the other dimension, is unlikely to establish domination. This what always happens first with sorted intermediate lists, and the element will walk along towards the middle of the list until it eventually is found to be dominated. In unsorted lists, a non-extremal element that dominates the new entry will, on average, be encountered earlier.

For evaluating the Pareto-eager strategy, we used the Haskell-embedded implementation. In the functional setting, required the least garbage collections and performed best. Somewhat unexpectedly, the eager strategy was consistently a bit slower than and close to , slower only by a factor varying between 1.0 and 1.2. It was faster than , in turn by a factor between 1.1 and 1.5.

Memory consumption of Pareto optimization is consistent over different implementations of . It is higher than either MFE(k) or MEA(k) alone, but clearly less than the sum of MFE(k) and MEA(k). This is better than expected, because after all, it solves both problems simultaneously.

Table 3.

Runtimes and memory requirements for MFE(k), MEA(k) (where k is the empirical Pareto front size for a given input), and their Pareto product , accumulated over 331 sequences (left) and for the longest sequence (, right)

| Algebra | Time (min) | Memory (GB) | Time (min) | Memory (GB) |

|---|---|---|---|---|

| MFE(k) alone | 71 | 163.68 | 5 | 1.16 |

| MEA(k) alone | 61 | 153.51 | 5 | 1.05 |

| MFE(k) + MEA(k) | 132 (+) | 163.68 (max) | 10 | 1.16 (max) |

| 8 | 197.28 | 0.22 | 1.28 | |

| 9.5 | 192.79 | 0.5 | 1.28 | |

| 18 | 271.21 | 1 | 1.28 | |

| 32 | 250.21 | 3 | 2.11 | |

The computations were performed by using Bellman’s GAP.

Note that the above values are measurements of constant factors, and averaged over many runs. So, is not always faster than . In fact, we have seen cases where is faster than for , but slower for (where in the latter case, we switch from maximization to minimization in algebra B).

Pareto front size

The size of the Pareto front is of critical practical importance. Pareto front sizes in the hundreds, even for sequences of moderate length, would be prohibitive. The number of solutions in the Pareto front depends on the data. Not only on the sequence length and the size of the search space in our RNA folding scenario, but also on the actual structures found. For example, if there is a very prominent structure in the folding space, it will dominate many other solutions in both objectives, and the Pareto front will be small. On the other hand, in one case we observed a Pareto front of size with the program of Schnattinger et al. on a Sankoff-style algorithm, an effect we will study in detail below.

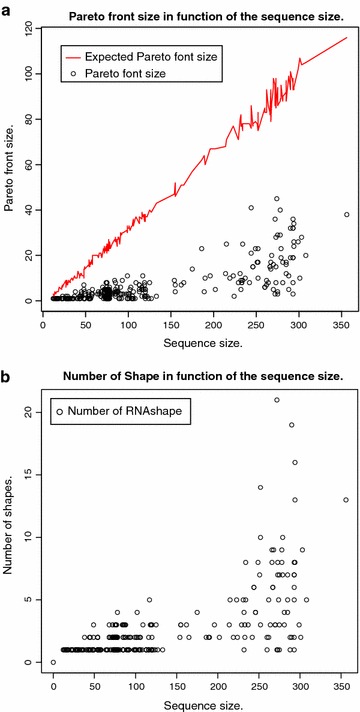

Figure 1 shows our measurements. We observe the following:

Pareto front sizes are quite moderate, ranging round 10 for , 15 for , up to 45 for . Specifically, our longest sequence () has a Pareto front of size 38.

Variance is high (as expected), and because of the strong variation, we did not fit a line through our measurement points. However, they are all dominated by the expected size of the Pareto front (red line).

We did not smooth the graph for H(|X|), such that it also demonstrates the variance in the search space sizes; just read the y-axis as a logarithmic scale for . The roughly linear behavior conforms with the theoretical analysis.

Figure 1.

a Empirical Pareto front size of as a function of |x|. The red line corresponds the H(|X|), the expected Pareto front size according to the harmonic law, applied to the empirical value of |X| for each x. b Number of abstract RNA shapes [11] in the Pareto front, in function of the sequence size.

The moderate sizes of Pareto fronts in our applications also imply that no benefit is to be expected from using quad-tree data structures in place of our sorted list representation. According to measurements in [36], population sizes in the thousands are required to make the more sophisticated data structure pay off.

Summing up our empirical data, we state that Hypothesis A has been confirmed in general, which does not rule out that there are problematic cases. One of these is discussed next.

Anti-correlation and real worst case behaviour

Two scoring functions are correlated to the extent by which they rank the candidates of the search space in the same order. Perfect correlation or anti-correlation would render a combined application of both objectives meaningless. Perfect positive correlation implies that an optimal candidate under is also optimal under , so nothing is to be gained from optimizing with respect to . Perfect anti-correlation means that the optimal candidates under are the worst candidates under . Hence, they can also be obtained as the optimal candidates optimizing under alone. In interesting scenarios, we can expect the two scoring schemes to correlate in some of the local scoring functions, and anti-correlate in others.

Anti-correlation can make the worst case real, where the size of the Pareto front does not follow the Harmonic law, but is linear the size of the interval of score values actually occurring (cf. Observation 3). There is a minor flaw in the objective function used in [20], harmless at first sight, but provoking worst case behaviour on some inputs. It is instructive to look at the situation in detail.

The objective functions in [20] are adopted from the amalgamated score in [8]. Schnattinger et al. took RNAalifold’s parametrized combination of energy and covariance scoring, dissecting ( literally into (. However, Hofacker et al. had chosen for their combination an engineered variant of similarity scoring, where base pair columns were not scored for sequence similarity (see equation line marked 22). Our algorithm corrects this case, see label in Table 3, making algebra SIM a proper similarity score. Literal dissection of this combination in [20] led to two scoring schemes that are negatively correlated in the following case: Choosing a base pair increases the covariance score but decreases the sequence similarity score in the case where the individual bases in the paired alignment columns actually match. Amusingly, the worst case occurs when solving the Sankoff problem for two identical sequences! While we would expect a Pareto front of size 1, with no gaps, no mismatches, and a maximal number p of base pairs, what we actually get is a worst case Pareto front of p elements, because every base pair omitted increases the sequence score.

This observation teaches us that a large Pareto front can result from inadvertent anti-correlation in the scoring functions.

Pareto solutions in the Sankoff algorithm

We now consider Pareto optimization for the Sankoff problem. The two optimization objectives combined are sequence similarity (SIM) and base pair probability of the consensus structure (PROB). One can expect both measures to be more correlated when the sequences are in fact closely related and have a conserved consensus structure. We investigate our

Hypothesis B

A small Pareto front is indicative of a strong biological signal of homology.

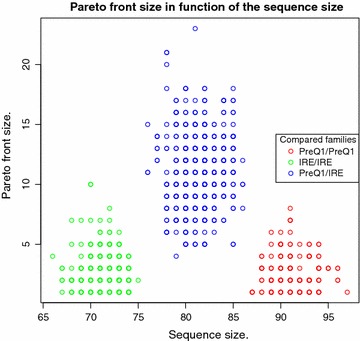

A thorough assessment of this hypothesis is outside of the scope of the present article, but we give some evidence that supports it. Our test data consists of PreQ1 RNA sequences () and IRE RNA sequences () extracted from the core data set of the Rfam database [34]. We perform all intra-family and inter-family alignments. The results are shown in Figure 2.

Figure 2.

Empirical Pareto front size of . The red plots correspond to the alignment of two PreQ1 sequences, the green ones to the alignment of two IRE sequences and the blue ones to the alignment between a IRE sequence and a PreQ1 sequence. Sequence size is the sum of the two aligned sequences size.

We observe the following:

The Pareto front size is reduced when comparing sequences from the same family (average Pareto front size of 2.56 for the IRE/IRE and 2.50 for the PreQ1/PreQ1).

The Pareto front size is larger when aligning two sequences from different families (average Pareto front size of 11). Note that the two families are unrelated, so this can be taken as an experiment on random RNA sequences.

However, there is some overlap between the extreme cases of both scenarios.

The size of the Pareto front could be useful for deciding family membership, not by itself but as a third criterion in addition to the two scores obtained separately. In fact, one might also be interested in the similarity between the structures that occur in the Pareto front. This is what we consider next.

Internal structure of the Pareto front of MFE and MEA folding

We now consider each structure in the Pareto front of some sequences extracted from the MFE/MEA dataset with respect to our

Hypothesis C

The Pareto front is comprised of a small number of macrostates, accompanied by essentially the same corona of microstates.

By using the RNA movies software [37], we can illustrate the transitions between the different structures in the Pareto front. In the Movie 1, Movie 2, Movie 3 (cf. Additional files 1, 2, 3, respectively; these are animated GIFs best viewed with a browser), we can see that there is a single dominating structure (macrostate). The different structures in the Pareto front are all minor modifications of this macrostate. They are local helix modifications or show the formation of small new helices inside big loops. For larger Pareto fronts, we can observe such microstates arranged around several macrostates, indicating very different structures (Additional file 4: Movie 4, Additional file 5: Movie 5, Additional file 6: Movie 6). Each macrostate has a different abstract shape.

Hypothesis C was confirmed in all the cases we studied. This means that one could condense the information in the Pareto front to a small set of macrostate structures, which would have different shapes. This leads us to further explore the relationship between Pareto optimization (based on MFE and MEA) and abstract shape analysis (based on shape abstraction and eitherMFE or MEA alone).

Pareto optimization versus abtract shape analysis

Our Hypothesis D is a somewhat radical statement:

Abstract shape analysis and Pareto optimization produce about the same set of alternative “interesting” structures.

It would make Pareto optimization less attractive in the domain of RNA structure analysis, as well as in other domains where the idea of shape abstraction can be replicated. But our observations refute this hypothesis. Overall, the relation between the two approaches appears to be non-trivial.

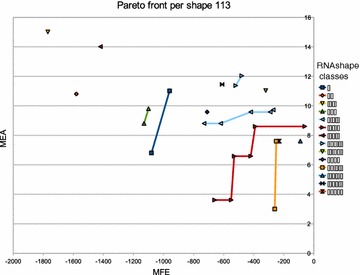

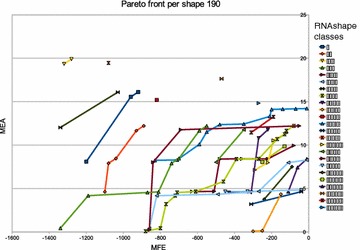

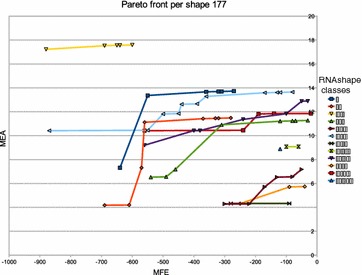

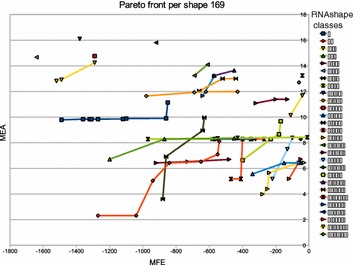

We checked the ratio of the number of structures in the Pareto front, and the number of different abstract shapes they represent, but this ratio, ranging from 1 to , did no exhibit an obvious pattern. Taking a SHAPE algebra from the RNAshapes repository, an experiment with has been performed. This call computes the Pareto front for each shape. The computation was performed for six sequences extracted from the dataset used for computing the Pareto front between MFE/MEA. The results are presented in the Figures 3, 4, 5 and 6. We see different scenarios occuring:

Figure 3.

Pareto front per shape for the RNA structure of a hammerhead ribozyme ().

Figure 4.

Pareto front per shape for the structures of a tRNA .

Figure 5.

Pareto front per shape for the structures of a tRNA ().

Figure 6.

Pareto front per shape for the structures of a tRNA ().

In Figure 3, we find a dominating shape and a singleton Pareto front. In Figure 4, we find a dominating shape and a Pareto front which holds exactly the MFE and MEA optima of this shape. In Figure 5, we see a more fine-grained Pareto front with all elements residing in the dominant shape. Figure 6 shows a two-element Pareto front composed of different shapes.

Note that in a case like Figure 4, Pareto optimization with MFE and MEA will only produce the dominant shape (“[[][]]”, yellow), while in combination with abstract shape analysis, we also see two further macrostates that might be of interest: “[][]” (pink) and “[[][][]]” (dark green). From these observations, we conclude that Hypothesis D is to be refuted. Shape abstraction and Pareto optimization are independent techniques that allow for even deeper analysis in combination.

Conclusion

Let us review our results, referring back to the questions (i)–(iv) formulated in the introduction. We have shown (i) that the exact Pareto front of two independent objectives can be computed by dynamic programming. The theoretical prerequisite for this is the preservation of Bellman’s principle by the Pareto product operator , established in our main theorem.

We have shown (ii) that by the Pareto-eager implementation, one can achieve Pareto optimization without an asymptotic penalty, compared to other optimizations which return a comparable number of results. We have shown (iii) that empirically, for the case of RNA folding under different objectives, the size of the Pareto front remains within moderate bounds, clearly lower than theoretical expectation. All in all, this says the Pareto optimization is practical for sequence analysis and moderate sequence sizes.

We demonstrated (iv) that Pareto optimization allows us to study in depth the relative behaviour of two competing objectives, minimum-free-energy and maximum-expected-accuracy in our application domain. We found in pairwise sequence analysis that, as to be expected, a small Pareto front in the Sankoff problem indicates a potential family relationship.

These findings, of course, create new work items and open questions. Foremost, the implementation of the operator together with its Pareto-eager implementation is up as a challenge to all who work on frameworks supporting dynamic programming in sequence analysis. It will be interesting to see if the Pareto-eager implementation can beat in terms of constant factors in such implementations.

Many established bioinformatics tools, which so far rely on an ad-hoc combination of different objectives, could be re-evaluated using Pareto optimization. Specifically for RNA structure analysis, in a recent review Rivas argues that in order to further improve predictions, different types of informations must be taken into account [38]. She advocates the conversion of all data sources into a probabilistic framework as a unifying solution. Pareto optimization opens up an alternative route, as it allows to combine multiple objectives without such conversion. This includes the Pareto-style combination of stochastic grammars with other (non-probabilistic) types of information.

Eventually, Pareto optimization may be useful in development to avoid it in production! After computing a set of Pareto-optimal answers, the user is left with the problem to draw conclusions from this set. Often, what users want is a single answer. This holds in particular when the “user” is a high-throughput pipeline. This calls for product operations such as or , as we used to provide in the past. But now, the designers of such a program can use Pareto optimization in the design stage to make a well-informed choice of the combination of objective functions eventually offered for production use.

Let us end this introduction with a word on Pareto optimization in higher dimensions than two. Pareto optimization can be defined over score vectors of any dimension. Here we deal only with two dimensions, providing the operator that turns two scoring schemes A and B into their Pareto combination . This may suggest the idea that with we have Pareto optimization in three dimensions, and in four with . But No!, Pareto optimization is not modular in this sense. The prerequisite for is that A and B optimize over a total order, while their Pareto combination optimizes over the partial order . Hence, is not admissible for further Pareto combinations. To arrive at higher dimensions of Pareto optimization, one must define a Pareto combination operator of flexible arity. Complexity of algorithms changes, and more sophisticated data structures, such as the quad-trees studied in [36] may come into focus. This is remains a challenge for future research.

Endnotes

aFor GAP-L experts: We supply the grammar with the algebra product , where , and add application of via a semantic filter where appropriate.

bThis is only a tendency—a final Pareto front of size k does not rule out intermediate results with Pareto fronts larger than k.

Authors' contributions

The authors closely cooperated on all aspects of this work. Both authors read and approved the final manuscript.

Acknowledgements

The authors would like to thank T. Schnattinger and H.A. Kestler for the discussions which inspired this generalization of their work. Thanks go to Stefan Janssen for help with the Bellman’s GAP system, and to the anonymous reviewers of this journal for helpful comments and criticism. We acknowledge support of the publication fee by Deutsche Forschungsgemeinschaft and the Open Access Publication Funds of Bielefeld University.

Compliance with ethical guidelines

Competing interests The authors declare that they have no competing interests.

Additional files

Movie 1. Transitions between the different structures in the Pareto front.

Movie 2. Transitions between the different structures in the Pareto front.

Movie 3. Transitions between the different structures in the Pareto front.

Movie 4. Transitions between the different structures in the Pareto front.

Movie 5. Transitions between the different structures in the Pareto front.

Movie 6. Transitions between the different structures in the Pareto front.

Contributor Information

Cédric Saule, Email: cedric.saule@uni-bielefeld.de.

Robert Giegerich, Email: robert@techfak.uni-bielefeld.de.

References

- 1.Gotoh O. An improved algorithm for matching biological sequences. J Mol Biol. 1982;162:705–708. doi: 10.1016/0022-2836(82)90398-9. [DOI] [PubMed] [Google Scholar]

- 2.Spang R, Rehmsmeier M, Stoye J. A novel approach to remote homology detection: jumping alignments. J Comput Biol. 2002;9(5):747–760. doi: 10.1089/106652702761034172. [DOI] [PubMed] [Google Scholar]

- 3.Sankoff D. Simultaneous solutions of the RNA folding, alignment and proto-sequences problems. SIAM J Appl Math. 1985;45(5):810–825. doi: 10.1137/0145048. [DOI] [Google Scholar]

- 4.Gorodkin J, Heyer LJ, Stormo GD. Finding the most significant common sequence and structure motifs in a set of RNA sequences. Nucleic Acid Res. 1997;25(18):3724–3732. doi: 10.1093/nar/25.18.3724. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Havgaard JH, Lyngsø RB, Gorodkin J. The FOLDALIGN web server for pairwise structural RNA alignment and mutual motif search. Nucleic Acid Res. 2005;33:650–653. doi: 10.1093/nar/gki473. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Mathews DH. Predicting a set of minimal free energy RNA secondary structures common to two sequences. Bioinformatics. 2005;21(10):2246–2253. doi: 10.1093/bioinformatics/bti349. [DOI] [PubMed] [Google Scholar]

- 7.Wexler Y, Zilberstein C, Ziv-Ukelson M. A study of accessible motifs and RNA folding complexity. J Comput Biol. 2007;14(6):856–872. doi: 10.1089/cmb.2007.R020. [DOI] [PubMed] [Google Scholar]

- 8.Hofacker IL, Bernhart SHF, Stadler PF. Alignment of RNA base pairing probabilities matrices. Bioinformatics. 2004;20(14):2222–2227. doi: 10.1093/bioinformatics/bth229. [DOI] [PubMed] [Google Scholar]

- 9.Schnattinger T (2014) Multi-objective optimization for RNA folding, alignment and phylogeny. PhD thesis, Fakultät für Ingenieurwissenschaften und Informatik der Universität Ulm

- 10.Steffen P, Giegerich R. Versatile and declarative dynamic programming using pair algebras. BMC Bioinform. 2005;6(1):224. doi: 10.1186/1471-2105-6-224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Voß B, Giegerich R, Rehmsmeier M. Complete probabilistic analysis of RNA shapes. BMC Biol. 2006;4(1):5. doi: 10.1186/1741-7007-4-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Zhang C, Wong AKC. Toward efficient molecular sequence alignment: a system of genetic algorithm and dynamic programming. Trans Syst Man Cybern Part B Cybern. 1997;27(6):918–932. doi: 10.1109/3477.650054. [DOI] [PubMed] [Google Scholar]

- 13.Taneda A. Multi-objective pairwise RNA sequence alignment. Bioinformatics. 2010;26(19):2383–2390. doi: 10.1093/bioinformatics/btq439. [DOI] [PubMed] [Google Scholar]

- 14.Taneda A. MODENA: a multi-objective RNA inverse folding. Adv Appl Bbioinform Chem. 2011;4:1–12. doi: 10.2147/aabc.s14335. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Rajapakse JC, Mundra PA. Multiclass gene selection using Pareto-fronts. IEEE/ACM Trans Comput Biol Bioinform. 2013;10(1):87–97. doi: 10.1109/TCBB.2013.1. [DOI] [PubMed] [Google Scholar]

- 16.Forman G (2004) A pitfall and solution in multi-class feature selection for text classification. In: Proceedings of the 21th International Conference on Machine Learning

- 17.Henig MI. The principle of optimality in dynamic programming with returns in partially ordered states. Inst Op Res Manag Sci. 1985;10(3):462–470. [Google Scholar]

- 18.Getachew T, Kostreva M, Lancaster L (2000) A generalization of dynamic programming for Pareto optimization in dynamic networks. Revue Française d’Automatique, d’Informatique et de Recherche opérationnelle. Recherche Opérationelle 34(1):27–47

- 19.Sitarz S. Pareto optimal allocation and dynamic programming. Ann Op Res. 2009;172:203–219. doi: 10.1007/s10479-009-0558-8. [DOI] [Google Scholar]

- 20.Schnattinger T, Schöning U, Marchfelder A, Kestler HA. Structural RNA alignment by multi-objective optimization. Bioinformatics. 2013;29(13):1607–1613. doi: 10.1093/bioinformatics/btt188. [DOI] [PubMed] [Google Scholar]

- 21.Schnattinger T, Schöning U, Marchfelder A, Kestler HA. RNA-Pareto: interactive analysis of Pareto-optimal RNA sequence-structure alignments. Bioinformatics. 2013;29(23):3102–3104. doi: 10.1093/bioinformatics/btt536. [DOI] [PubMed] [Google Scholar]

- 22.Libeskind-Hadas R, Wu Y-C, Bansal MS, Kellis M. Pareto-optimal phylogenetic tree reconciliation. Bioinformatics. 2014;30(12):87–95. doi: 10.1093/bioinformatics/btu289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Giegerich R, Meyer C, Steffen P. A discipline of dynamic programming over sequence data. Sci Comput Program. 2004;51(3):215–263. doi: 10.1016/j.scico.2003.12.005. [DOI] [Google Scholar]

- 24.Graham RL, Knuth DE, Patashnik O. Concrete mathematics: a foundation for computer science. 2. Boston: Addison-Wesley Longman Publishing Co., Inc; 1994. [Google Scholar]

- 25.Yukish MA (2004) Algorithms to identify Pareto points in multi-dimensional data sets. PhD thesis, Pennsylvania State University, Graduate School, College of Engineering

- 26.Kung H, Luccio F, Preparata F. finding on the maxima of a set of vectors. J Assoc Comput Mach. 1975;4(4):469–476. doi: 10.1145/321906.321910. [DOI] [Google Scholar]

- 27.zu Siederdissen CH (2012) Sneaking around concatMap: efficient combinators for dynamic programming. In: Proceedings of the 17th ACM SIGPLAN International Conference on Functional Programming. ICFP ’12, pp 215–226. ACM, New York, NY, USA. doi:10.1145/2364527.2364559

- 28.Sauthoff G, Möhl M, Janssen S, Giegerich R (2013) Bellman’s GAP—a language and compiler for dynamic programming in sequence analysis. Bioinformatics 29(5):551–560. doi:10.1093/bioinformatics/btt022. http://bioinformatics.oxfordjournals.org/content/early/2013/01/25/bioinformatics.btt022.full.pdf+html [DOI] [PMC free article] [PubMed]

- 29.Sauthoff G, Giegerich R. Yield grammar analysis and product optimization in a domain-specific language for dynamic programming. Sci Comput Program. 2014;87:2–22. doi: 10.1016/j.scico.2013.09.011. [DOI] [Google Scholar]