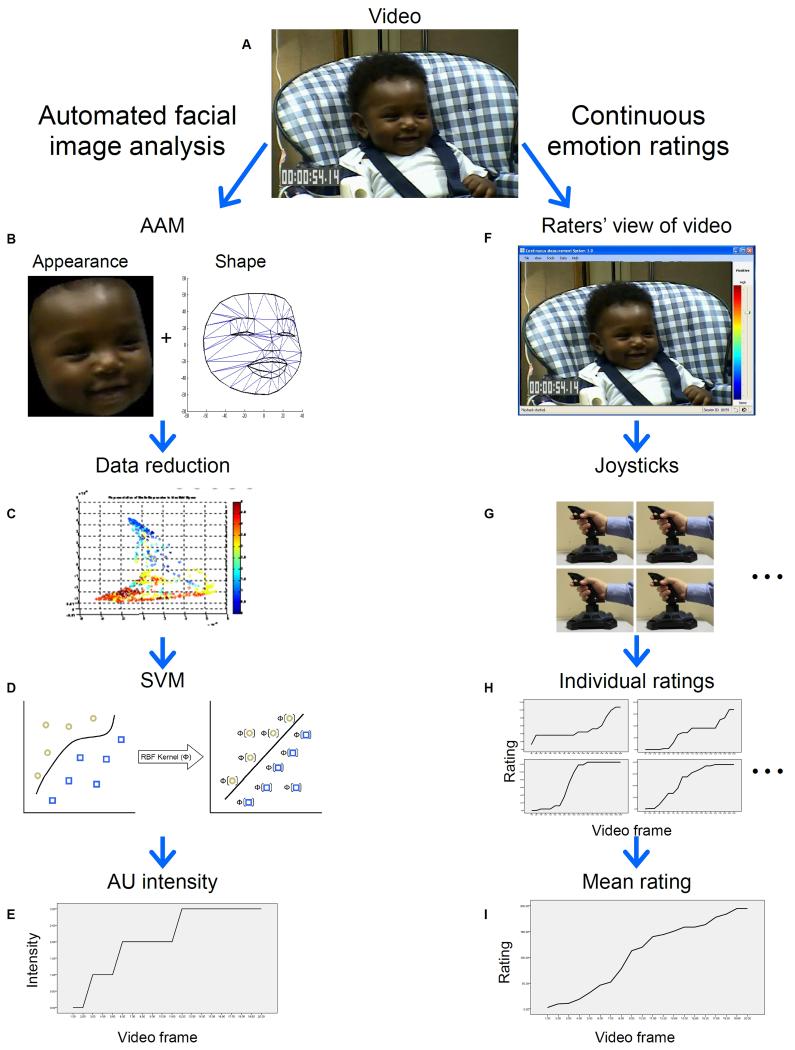

Figure 2.

The measurement approach. (A) Video is recorded at 30 fps. (B) Appearance and shape features of the face are distinguished and tracked using an Active Appearance and Shape Model (AAM). (C) Nonlinear mapping reduces the appearance and shape features to a set of 12 data points per video frame. (D) A separate support vector machine (SVM) classifies the occurrence and intensity of each FACS Action Unit (AU). (E) This yields the presence and intensity of AUs 6, 12, and 20 in each video frame. (F) Raters view the video in real time. (G) Raters use joysticks to continuously rate the infant’s affective valence. (H) Individual ratings are collected and (I) combined to produce a mean rating of affective valence for each video frame.
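Steps (H) and (I) reduce several raters' continuous joystick traces to one mean valence value per video frame. The sketch below is one way to do that. It assumes each rater's trace has already been resampled to one value per video frame, so all traces have the same length. The function name `mean_rating_per_frame` and the input layout are hypothetical and do not come from the original study.

```python
from statistics import fmean


def mean_rating_per_frame(ratings_by_rater: list[list[float]]) -> list[float]:
    """Average continuous valence ratings across raters, frame by frame.

    Hypothetical input layout: one inner list per rater, each holding that
    rater's joystick value for every video frame (already aligned to frames).
    """
    if not ratings_by_rater:
        raise ValueError("need at least one rater")
    n_frames = len(ratings_by_rater[0])
    if any(len(trace) != n_frames for trace in ratings_by_rater):
        raise ValueError("all raters must cover the same frames")
    # zip(*...) groups the i-th frame's value from every rater together,
    # so each tuple is averaged into that frame's mean rating.
    return [fmean(frame_values) for frame_values in zip(*ratings_by_rater)]


# Two hypothetical raters over three frames
print(mean_rating_per_frame([[0.0, 1.0, 2.0], [2.0, 1.0, 0.0]]))
```

With the two example traces, each frame's mean is 1.0. Rejecting traces of unequal length makes misaligned ratings fail loudly instead of being silently truncated by `zip`.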