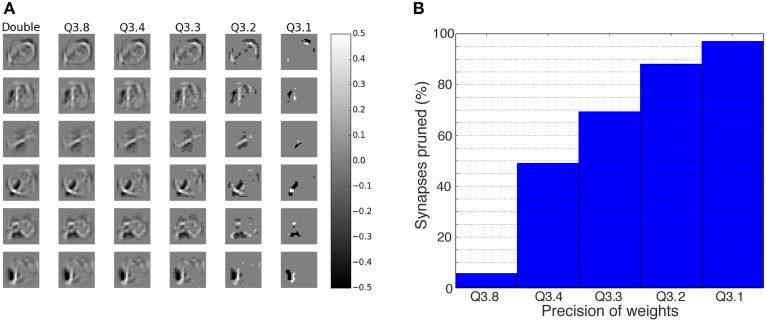

Figure 3.

Impact of weight bit precision on the representations within a DBN. (A) Receptive fields of the first six neurons (rows) in the first hidden layer. The first column shows the weights at double precision; the remaining columns show the weights under different fixed-point schemes in the format Qm.f, where m is the number of bits representing the integer part and f is the number of bits representing the fractional part. The effect of truncating the synaptic weights is visible to the eye for f ≤ 4 bits. (B) Percentage of synapses, across all layers, that are set to zero as the bit precision of the fractional part is reduced. At lower precisions, the majority of synapses are set to zero and thus become obsolete.
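The Qm.f format and the zeroing effect in panel (B) can be illustrated with a short sketch. It assumes signed weights with a separate sign bit, truncation toward zero (which sends every weight with |w| < 2^-f to exactly zero), and saturation of out-of-range values. The function names `quantize_qmf` and `percent_zeroed` and the random layer shapes in the demo are hypothetical; this is not the authors' code, and the demo does not reproduce the data in the figure.

```python
import numpy as np

def quantize_qmf(w, m, f):
    """Map real-valued weights onto a signed Qm.f fixed-point grid.

    Assumptions (not from the source): an extra sign bit, truncation
    toward zero, and saturation at the largest representable magnitude.
    """
    step = 2.0 ** -f                  # resolution set by the f fractional bits
    max_val = 2.0 ** m - step         # largest magnitude with m integer bits
    q = np.trunc(np.asarray(w, dtype=float) / step) * step  # drop bits below 2^-f
    return np.clip(q, -max_val, max_val)                   # saturate the integer part

def percent_zeroed(layers, m, f):
    """Percentage of all synapses (over all layers) that are nonzero at
    full precision but become exactly zero after Qm.f quantization."""
    w = np.concatenate([np.ravel(x) for x in layers])
    zeroed = (w != 0) & (quantize_qmf(w, m, f) == 0)
    return 100.0 * np.count_nonzero(zeroed) / w.size

if __name__ == "__main__":
    # Hypothetical weight matrices; shapes and scale are illustrative only.
    rng = np.random.default_rng(0)
    layers = [rng.normal(0.0, 0.05, (784, 500)), rng.normal(0.0, 0.05, (500, 500))]
    for f in (8, 4, 2, 1):
        print(f"Q2.{f}: {percent_zeroed(layers, 2, f):.1f}% of synapses set to zero")
```

Truncating toward zero, rather than flooring, is what makes small negative weights collapse to zero as well, which matches the caption's description of synapses being set to zero as fractional bits are removed.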