Abstract

We demonstrate a system that combines a tracking scanning laser ophthalmoscope (TSLO) with an adaptive optics scanning laser ophthalmoscope (AOSLO), providing both optical (hardware) and digital (software) eye-tracking capabilities. The hybrid system uses the TSLO for active eye-tracking at rates up to 960 Hz to stabilize the AOSLO in real time. With active eye-tracking, AOSLO videos showed an amplitude of motion of at most 0.20 arcminutes for horizontal motion and 0.14 arcminutes for vertical motion. Subsequent real-time digital stabilization limited residual motion to an average of only 0.06 arcminutes (a 95% reduction). By correcting for high-amplitude, low-frequency drifts of the eye, the active TSLO eye-tracking system enabled the AOSLO to capture high-resolution retinal images over a larger range of motion than was previously possible with the AOSLO imaging system alone.

OCIS codes: (170.3890) Medical optics instrumentation, (170.4460) Ophthalmic optics and devices, (330.2210) Vision - eye movements

1. Introduction

Adaptive optics (AO) imaging systems allow for the visualization of individual photoreceptors in the living human eye [1]. This technology, when combined with a scanning laser ophthalmoscope (AOSLO), can record high-resolution movies of the retina in real time [2]. Recent advances in AOSLO design have showcased the system's resolution by resolving both foveal cones and rods in the human retina [3,4]. Apart from imaging, the AOSLO can stimulate single cone photoreceptors for microperimetry [5–7] and monitor retinal disease progression and treatment over time [8]. While the AOSLO is increasingly used for these tasks in both basic research and the clinic, one major obstacle remains when working at such a fine scale: eye motion.

The human eye is always in motion. Even when fixating on a single spot, the eye drifts and makes microsaccades, moving the target across many light-detecting cells on the retina [9]. These eye movements hinder both the recording of high-fidelity, high-resolution videos or images and the delivery of light to targeted retinal locations. Online tracking based on eye motion recorded from the AOSLO video alone [10] has previously been effective for anesthetized monkeys [7] and trained psychophysical observers with normal vision [5,6]. However, only one publication reports the use of online tracking for targeted visual function testing in patients with eye disease [11], and the patients in that study had normal visual acuity and fixation. Many subjects with retinal diseases, particularly those affecting the macula, demonstrate compromised fixational stability [12] and exhibit larger fixational eye movements than normal subjects [13]. Compromised fixation may include one or more of the following abnormal eye movements: fixation nystagmus, slow re-fixation saccades, saccadic intrusions and oscillations, superior oblique myokymia, ocular paralysis, or increased drift or scarcity of microsaccades in amblyopia [12]. Patients with retinal diseases, particularly age-related macular degeneration (AMD), typically have a larger range of motion than normal subjects, with a mean extent of motion of just over 4° in the horizontal direction and 3° in the vertical [13], compared with an average fixational extent of 0.3° in normal subjects [14].

To enable reliable high-resolution imaging of patients, and to make tracking more robust for smaller fields of view in healthy eyes, we have built and characterized a hybrid tracking scanning laser ophthalmoscope (TSLO) and AOSLO system capable of both optical and digital eye-tracking. The system acts as a woofer-tweeter eye-tracking system, using both software (digital tracking) and an active tip/tilt mirror (optical tracking) to achieve real-time image stabilization and correction. Previous work with the TSLO system has shown that the maximum eye velocity that digital tracking can follow scales linearly with the system's field size [15]. Therefore, using an external tracking system with a larger field of view (FOV) to steer the AOSLO imaging beam allows more eye motion to be accommodated than with the AOSLO system alone. For example, an AOSLO FOV of 0.75° corresponds to an eye velocity threshold of 2.6 °/s, whereas the larger 3.5° TSLO FOV tolerates velocities of up to 12 °/s. Previously, if the fixational extent of motion exceeded roughly 50% of the AOSLO field size, digital tracking would fail. With a larger-FOV external eye-tracker, this is no longer a constraint.
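The linear scaling quoted above can be checked with a few lines of Python. This is a minimal sketch that assumes strict proportionality to field size, anchored to the AOSLO figure from the text (2.6 °/s at 0.75°); the function names are illustrative, and the 50% extent limit is the rough rule of thumb stated above rather than a precise bound.

```python
# Minimal sketch: digital-tracking limits assumed to scale linearly with field size.
# Calibration point taken from the text: 2.6 deg/s at a 0.75 deg AOSLO field.
REF_FOV_DEG = 0.75
REF_VELOCITY_DEG_PER_S = 2.6


def velocity_threshold(fov_deg: float) -> float:
    """Estimated maximum trackable eye velocity (deg/s) for a given field of view."""
    return REF_VELOCITY_DEG_PER_S * fov_deg / REF_FOV_DEG


def max_fixation_extent(fov_deg: float) -> float:
    """Rough limit on fixational extent (deg): about 50% of the field size."""
    return 0.5 * fov_deg


for fov in (0.75, 3.5):
    print(f"{fov} deg field: {velocity_threshold(fov):.1f} deg/s, "
          f"extent limit ~{max_fixation_extent(fov):.2f} deg")
```

For the 3.5° TSLO field this gives about 12.1 °/s, consistent with the ~12 °/s figure above.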

Eye-trackers have previously been used with AOSLO systems. Stevenson and Roorda compared a dual Purkinje image eye-tracker [16] with an AOSLO system to study the frequency content of fixational eye motion at a fine scale. Burns et al. described a closed-loop tracker that used a dithering probe beam and a servo tracking system to lock onto a bright retinal feature; this system was integrated with an AOSLO, and a tracking bandwidth of up to ~1 kHz was reported [17–19]. Yang et al. described the use of a tip/tilt mirror for active closed-loop eye-tracking with an AOSLO over a 1.5° field of view (FOV) [20]. Eye-trackers have also been added to OCT [21–24] and AO-OCT systems [25] in both clinical and basic research settings, some using the same TSLO technology described herein; in particular, the eye-tracker used by both Vienola et al. and Braaf et al. was a TSLO system designed and built in our lab [21,22]. Most recently, Kocaoglu et al. used hardware-based eye-tracking to limit image distortion and blur in AO-OCT images [25]. These experiments and set-ups represent the current state of the art in eye-tracking for high-resolution imaging modalities. Our system combines several advantages of prior technologies, such as a larger field of view and higher accuracy, in a novel woofer-tweeter eye-tracking set-up with an AOSLO.

The system we describe herein uses both software and active hardware to achieve real-time, open-loop, high-resolution retinal eye-tracking. This is the first time a confocal SLO imaging system has been used to record eye motion and actively steer an AOSLO system to correct for it. The system retains fine digital eye-tracking while extending it to smaller imaging fields and to subjects with larger amounts of eye motion. With a tracking accuracy finer than a single foveal cone photoreceptor, the TSLO-AOSLO combination system captures high-resolution retinal images over a larger range of eye motion than previously possible with the AOSLO imaging system alone.

2. Methods

2.1 System hardware

The hybrid system comprises two modular systems, the TSLO and the AOSLO, combined via a notch filter before the eye. We used the 808 nm StopLine single-notch filter (Semrock, Rochester, NY), which, when used at 45°, reflects nearly 100% of the 730 nm TSLO beam into the eye while transmitting the other wavelengths of the AOSLO. The TSLO design and set-up have been described in detail in a previous publication [15]. Briefly, a series of three telescopes relays the pupil of the eye onto both the horizontal and vertical scanners, and a photomultiplier tube (PMT) captures the reflected, scanned retinal image. The pupil at the eye for the TSLO system was 3.1 mm. Slight modifications were made to the original system design, including an off-the-shelf diode laser (Thorlabs, Newton, NJ), modified focal lengths of the individual mirrors, and the replacement of the final spherical mirror with a lens. The lens was used for two reasons. First, a lens instead of a mirror made the system orientation more flexible and allowed easier coupling into the AOSLO system. Second, the lens had a shorter focal length than the mirror preceding it in the final telescope, giving the TSLO a larger FOV than the original design, which had unity magnification; system beam magnification and scan angle are inversely proportional [26]. The TSLO field of view is adjustable (up to 10°), but 3.5° was used for these experiments because the size of the notch filter combining the two systems was limiting. The diffraction-limited optical design and system optimization for the TSLO were completed using optical design software (Radiant ZEMAX LLC, Bellevue, WA). An out-of-plane design, similar to that described by Gomez-Vieyra et al. [27] and Dubra and Sulai [3], was used to limit system astigmatism.
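The inverse relation between beam magnification and scan angle can be illustrated with a paraxial model of a single afocal (Keplerian) telescope. The focal lengths, input beam diameter and input scan angle below are hypothetical numbers chosen only to show the trend; they are not the TSLO's actual design values.

```python
def relay(beam_diameter_mm, scan_angle_deg, f1_mm, f2_mm):
    """Paraxial model of a scanned, collimated beam through an afocal (4f) telescope.

    f1_mm: focal length on the scanner side; f2_mm: focal length on the eye side.
    The beam diameter is multiplied by M = f2/f1 and the (small) scan angle is
    divided by M, so the product of diameter and angle is conserved.
    """
    m = f2_mm / f1_mm
    return beam_diameter_mm * m, scan_angle_deg / m


# Hypothetical final telescope whose eye-side focal length is half the scanner-side one.
d_eye, fov_eye = relay(beam_diameter_mm=6.2, scan_angle_deg=1.75, f1_mm=100.0, f2_mm=50.0)
print(d_eye, fov_eye)  # 3.1 3.5
```

Halving the eye-side focal length halves the beam diameter and doubles the scan angle, which is why a shorter final focal length enlarges the field at the eye.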
Note that an out-of-plane design can rotate the TSLO imaging raster. System alignment was optimized to minimize this rotation, and the tracking software corrected the remaining rotation. This ensured that eye motion logged in the TSLO was sent to the correct axis of the tip/tilt mirror in the AOSLO system (see Fig. 1).
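One simple way to correct a residual raster rotation in software is to rotate each displacement vector by a calibration angle before splitting it into mirror axes. This is a sketch only: the angle, its sign convention and the function name are assumptions, not the tracking software's actual implementation.

```python
import math


def rotate_motion(dx_px: float, dy_px: float, rotation_deg: float) -> tuple[float, float]:
    """Rotate a TSLO displacement (pixels) counter-clockwise by rotation_deg.

    rotation_deg is a hypothetical calibration angle mapping the TSLO raster axes
    onto the tip/tilt mirror axes; its sign would be fixed during calibration.
    """
    a = math.radians(rotation_deg)
    c, s = math.cos(a), math.sin(a)
    return c * dx_px - s * dy_px, s * dx_px + c * dy_px


# Example: a purely horizontal 10-pixel shift seen through a raster rotated by 2 deg.
print(rotate_motion(10.0, 0.0, 2.0))
```

A pure rotation preserves the magnitude of the displacement, so only its split between the two mirror axes changes.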

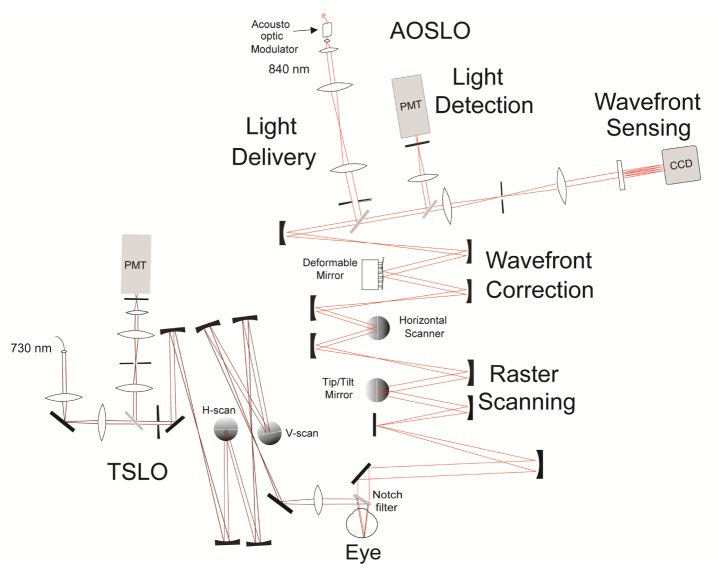

Fig. 1.

A 2-D optical design schematic of the TSLO-AOSLO combination system. AOSLO: light exiting the supercontinuum laser is fiber-coupled into the acousto-optic modulator (AOM) before entering the system. The light is collimated and sent through a 4f lens relay onto an adjustable aperture, then travels through four mirror-based telescope assemblies to the human eye. TSLO: collimated light exiting the 730 nm laser diode is sent through a 4f system, followed by a 50/50 beamsplitter, and then leaves the delivery arm through an adjustable aperture. It travels through a series of three telescopes and joins the AOSLO beam at the notch filter before entering the eye. Light reflected off the retina propagates back through each system into its respective detection arm, where another 4f lens relay directs it to a photomultiplier tube (PMT). A pinhole placed at a retinal conjugate plane in front of each PMT provides confocality. The PMT signals are read out by two separate PCs (one for the TSLO and one for the AOSLO). Note: this is a schematic layout; the actual components are not aligned in a single plane.

The AOSLO experiments described herein used a 0.75° FOV, smaller than the 1.2° FOV routinely used for experiments in our lab. The AOSLO imaging system itself is almost identical to that reported previously by our lab [28], except that a piezo tip/tilt mirror (Physik Instrumente (PI), Germany) replaces the original galvanometer slow-scanning mirror. The role of the tip/tilt mirror is threefold: (1) to generate the ramp signal needed for the slow scan (30 Hz), (2) to apply the y-motion of the eye, and (3) to apply the x-motion of the eye. All motion displacements are sent as voltage signals directly from the TSLO to compensate for eye motion. This process is described in more detail in the next section.
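The threefold role of the tip/tilt mirror can be pictured as two axis commands: the slow-scan axis carries the 30 Hz sawtooth plus the vertical eye motion, and the other axis carries the horizontal eye motion. The sketch below assumes an ideal sawtooth with instantaneous fly-back, angles expressed in degrees at the eye, and a sign convention in which the mirror follows the measured eye displacement; the function name and parameters are hypothetical, and in the real system the sum is formed in hardware.

```python
import numpy as np


def tip_tilt_commands(t_s, eye_x_deg, eye_y_deg, ramp_amplitude_deg, frame_rate_hz=30.0):
    """Return (horizontal, vertical) commands for a mirror that both scans and tracks.

    Vertical axis: ideal sawtooth slow scan (instantaneous fly-back) plus vertical eye motion.
    Horizontal axis: horizontal eye motion only.
    All angles are in degrees at the eye; signs and amplitudes are assumptions.
    """
    t_s = np.asarray(t_s, dtype=float)
    phase = (t_s * frame_rate_hz) % 1.0            # position within each frame, 0 to 1
    ramp = ramp_amplitude_deg * (phase - 0.5)      # sawtooth centred on zero
    horizontal = np.zeros_like(t_s) + eye_x_deg    # tracking only
    vertical = ramp + eye_y_deg                    # slow scan plus tracking
    return horizontal, vertical


# Example: 0.75 deg slow scan sampled at the start and middle of a frame,
# with the eye displaced by 0.02 deg horizontally and 0.01 deg vertically.
h, v = tip_tilt_commands([0.0, 0.5 / 30.0], 0.02, 0.01, ramp_amplitude_deg=0.75)
print(h, v)
```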

2.2 Software and hardware for open-loop eye tracking

The eye-tracking concepts and the software used for digital eye-tracking and reference frame selection in both the TSLO and AOSLO have been reported previously [10,15,29,30], so they are described only briefly here. First, a reference frame is chosen for each system; unless otherwise selected, this is typically the first frame of a recorded movie. Each subsequent frame is then divided into a series of strips, and each strip is cross-correlated with the reference frame. Any translation of the eye relative to the reference frame is logged as horizontal (x) and vertical (y) displacements, which measure the relative motion of the eye at a specific point in time. Both the TSLO and AOSLO data are recorded using a custom-programmed field programmable gate array (FPGA) board [29]. The cross-correlation with the reference is computed by a standard FFT-based algorithm on a graphics board (Nvidia, Santa Clara, CA) in each host PC. For the data presented here, 32 overlapping strips per frame are recorded, which at the 30 Hz frame rate provides a tracking signal of up to 960 Hz from the TSLO [15].
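A generic version of the strip-based registration step can be sketched with NumPy's FFT. This is not the authors' GPU code: it uses non-overlapping strips (the real system uses 32 overlapping strips), whole-pixel shifts, circular correlation, and no quality checks, and the function name is hypothetical. With 32 strips per frame at 30 frames/s, such a scheme yields 32 × 30 = 960 displacement samples per second.

```python
import numpy as np


def strip_displacements(reference, frame, n_strips=32):
    """Estimate (dx, dy) in pixels for each horizontal strip of `frame` vs `reference`.

    Each strip is placed in a zero image the size of the reference and
    cross-correlated with the whole reference via FFT; the correlation peak
    gives how far the strip's content has moved relative to the reference.
    """
    h, w = reference.shape
    strip_h = h // n_strips
    ref = reference.astype(float)
    ref_f = np.fft.fft2(ref - ref.mean())
    shifts = []
    for k in range(n_strips):
        top = k * strip_h
        strip = frame[top:top + strip_h].astype(float)
        padded = np.zeros((h, w))
        padded[top:top + strip_h] = strip - strip.mean()
        # Circular cross-correlation: c[n] = sum_m padded[m] * ref[m - n]
        xcorr = np.fft.ifft2(np.fft.fft2(padded) * np.conj(ref_f)).real
        dy, dx = np.unravel_index(np.argmax(xcorr), xcorr.shape)
        # Map peak indices to signed shifts.
        dy = dy - h if dy > h // 2 else dy
        dx = dx - w if dx > w // 2 else dx
        shifts.append((int(dx), int(dy)))
    return shifts
```

For example, a frame produced by `np.roll(reference, (3, -5), axis=(0, 1))` returns `(-5, 3)` for every strip: content moved 5 pixels left and 3 pixels down.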

To provide active hardware-based eye-tracking to the AOSLO, the TSLO must send signals to the AOSLO's tip/tilt mirror to compensate for the eye's motion. The optical correction we employ here is open-loop. First, the TSLO software tracks the eye's motion over a specified field of view (3.5° in this experiment). The eye's x and y displacements are logged as motion traces, generating a real-time record of where the eye has moved. Next, the TSLO motion signal is thresholded in software to eliminate noise that could send erroneous motion signals to the tip/tilt mirror. To do this, eye motion values are monitored over time so that spikes, which would result in error if sent, are rejected. Two thresholding criteria have been implemented in our system. First, if a large difference is detected between the currently received pixel position and the previously received one, the new location is not sent, and the tip/tilt mirror remains at its current position until a new, valid signal arrives. This type of error can occur during a large saccade or blink, when the pixel differences become very large. Experimentally, an 8-pixel difference was found to be the most appropriate threshold value for this scenario. Second, consecutive "0-pixel" differences can trigger software thresholding. For up to and including 4 consecutive "0" values, the mirror does not update and remains at its current position. However, if there are more than 4 consecutive "0" values, the software assumes that image stabilization has been lost entirely, and the active mirror is updated so that image stabilization can be re-established. These thresholding constraints are applied to the horizontal and vertical mirror axes simultaneously.
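The two thresholding rules can be sketched as a small stateful filter. The text leaves some details open, so this sketch makes assumptions that should be read as such: a "0" value is taken to mean a reported displacement of exactly zero on both axes, the 8-pixel rule rejects jumps strictly greater than 8 pixels on either axis relative to the previous non-zero sample, and both axes are held together. The function names are hypothetical.

```python
def make_threshold_filter(max_jump_px=8, max_zero_run=4):
    """Build a stateful filter deciding which (x, y) samples are sent to the mirror.

    Assumed interpretation of the two rules:
      * a sample differing from the previous non-zero sample by more than
        max_jump_px on either axis is rejected (saccade or blink), and the
        mirror holds its position;
      * a reported (0, 0) is treated as a dropout and held for up to
        max_zero_run consecutive samples; beyond that, tracking is assumed
        lost and (0, 0) is passed through to the mirror.
    step(x, y) returns the position to send, or None to hold.
    """
    state = {"prev": None, "zeros": 0}

    def step(x, y):
        if x == 0 and y == 0:
            state["zeros"] += 1
            return None if state["zeros"] <= max_zero_run else (0, 0)
        state["zeros"] = 0
        prev, state["prev"] = state["prev"], (x, y)
        if prev is not None and max(abs(x - prev[0]), abs(y - prev[1])) > max_jump_px:
            return None  # large jump: hold both axes at their current position
        return (x, y)

    return step


f = make_threshold_filter()
samples = [(1, 1), (2, 1), (20, 1), (21, 2), (0, 0), (0, 0), (0, 0), (0, 0), (0, 0), (22, 2)]
print([f(x, y) for x, y in samples])
```

In this example the 18-pixel jump and the first four zeros are held (`None`), the fifth zero is passed through as a reset, and normal updates resume afterwards.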

Eye motion is initially recorded in pixel units, which are then converted into two 14-bit voltage signals output by the FPGA. To do this, we first measure the pixels per degree of the TSLO system using a model eye with a calibrated grid as its retina. Next, we apply DC offset values of varying amplitude to the PI mirror of the AOSLO system to record how many volts are needed to move one degree (volts/degree). The product of the TSLO degrees/pixel and the AOSLO volts/degree gives the volts/pixel conversion needed to generate the 14-bit signal for the FPGA. Analog gain amplification then scales the voltage signal from the FPGA to match the range required by the tip/tilt mirror [21]. Because the tip/tilt mirror also generates the 30 Hz slow scan signal, a summing junction was used to send the filtered signals to the active mirror.
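The pixel-to-voltage chain can be written out explicitly. In this sketch the DAC full-scale voltage (±10 V), the signed two's-complement 14-bit coding, the analog gain and the example calibration values (a 512-pixel raster spanning 3.5°, 2 V per degree) are all hypothetical placeholders for values measured on the real system.

```python
def pixels_to_dac_code(displacement_px, deg_per_px, volts_per_deg,
                       analog_gain=1.0, dac_full_scale_v=10.0, bits=14):
    """Convert a TSLO displacement (pixels) to a signed DAC code for the mirror.

    volts/pixel = deg/pixel (TSLO, model-eye grid) * volts/deg (AOSLO mirror, DC offsets).
    A downstream analog amplifier multiplies the DAC output by analog_gain, so the
    DAC only needs to produce mirror_volts / analog_gain.
    """
    mirror_volts = displacement_px * deg_per_px * volts_per_deg
    dac_volts = mirror_volts / analog_gain
    max_code = 2 ** (bits - 1) - 1                       # 8191 for a signed 14-bit DAC
    code = round(dac_volts / dac_full_scale_v * max_code)
    return max(-max_code - 1, min(max_code, code))       # clip to the range -8192 to 8191


deg_per_px = 3.5 / 512      # hypothetical: 512-pixel raster across a 3.5 deg field
volts_per_deg = 2.0         # hypothetical mirror calibration
print(pixels_to_dac_code(10, deg_per_px, volts_per_deg))  # 112
```

Clipping keeps an out-of-range displacement from wrapping around in the DAC; in practice the thresholding step would normally reject such samples first.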

The system was set up with a model eye equipped with a galvanometric scanner, creating a "moving retina" that was used to fine-tune the conversion parameters digitally until no residual motion was seen in the video of the model eye. The scanner itself was placed between the model eye lens and its retinal plane. To characterize the system latency, the delay between the TSLO motion trace and the corresponding movement of the tip/tilt mirror was measured in real time with an oscilloscope; it was found to be 2.5 ms (also reported in [21]). For digital tracking, any residual eye motion artifacts remaining in the raw AOSLO video are corrected using the same strip- and image-based eye-tracking software described above for the TSLO system; i.e., the AOSLO system software acquires a reference frame at the beginning of the imaging session in parallel with the TSLO system. This digital tracking step is performed entirely within the AOSLO software, separately from the TSLO [15,29].

3. Results

The experiment was approved by the University of California, Berkeley, Committee for the Protection of Human Subjects. All protocols adhered to the tenets of the Declaration of Helsinki.

3.1 System bandwidth analysis

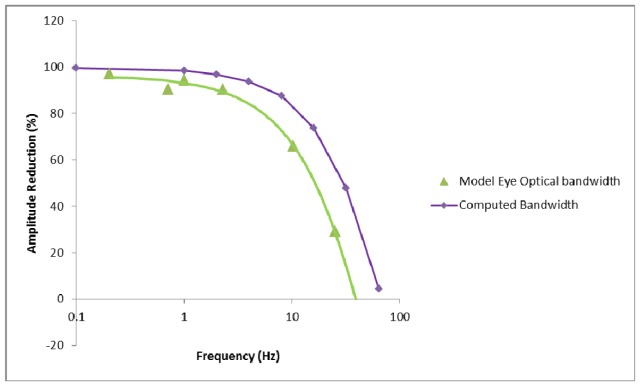

A galvanometric-scanning model eye was placed at the final pupil plane of the combined TSLO and AOSLO system to test the maximum amount of motion that could be successfully imaged in the AOSLO system with and without active eye-tracking. To do this, sinusoidal waveforms of varying frequency and amplitude were applied to the model eye (moving retina) using a waveform generator. Six frequencies were chosen (Fig. 2), and at each frequency the amplitude was increased until the AOSLO system alone could no longer digitally track and stabilize the retinal image. The raw AOSLO videos were then recorded. Next, the same frequency and amplitude values were applied with active tracking enabled. Amplitudes that had caused AOSLO-only stabilization to fail could now be tracked, with the active tip/tilt mirror keeping the AOSLO imaging beam on target. Residual motion in the raw AOSLO video with optical tracking enabled was recorded, and the motion reduction as a function of frequency was calculated using an offline program that analyzes the videos with the same strip-based approach, but at higher temporal resolution [16]. The offline program was set to compute eye motion traces at 64 strips per frame, or 1920 Hz. The green curve in Fig. 2 shows the percent amplitude reduction with tracking enabled in the model eye. At lower frequencies, which mainly encompass drift, optical tracking substantially reduces the amplitude of motion; by 10 Hz, however, the amplitude reduction has fallen to 65%.
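Given the input and residual motion traces, the percent amplitude reduction at a known drive frequency can be estimated by fitting a sinusoid at that frequency. This least-squares fit is our illustrative choice, not necessarily what the offline program does, and the traces below are synthetic (a 10 Hz input and a 0.35-amplitude residual) rather than measured data.

```python
import numpy as np


def sinusoid_amplitude(trace, fs_hz, freq_hz):
    """Least-squares amplitude of a sinusoid of known frequency in a motion trace."""
    trace = np.asarray(trace, dtype=float)
    t = np.arange(trace.size) / fs_hz
    w = 2.0 * np.pi * freq_hz
    design = np.column_stack([np.sin(w * t), np.cos(w * t), np.ones_like(t)])  # sin, cos, offset
    (a, b, _offset), *_ = np.linalg.lstsq(design, trace, rcond=None)
    return float(np.hypot(a, b))


def percent_reduction(input_trace, residual_trace, fs_hz, freq_hz):
    """Percent amplitude reduction of the residual motion relative to the input motion."""
    return 100.0 * (1.0 - sinusoid_amplitude(residual_trace, fs_hz, freq_hz)
                    / sinusoid_amplitude(input_trace, fs_hz, freq_hz))


fs = 64 * 30  # 64 strips per frame at 30 frames/s = 1920 Hz
t = np.arange(10 * fs) / fs
drive = np.sin(2 * np.pi * 10 * t)                  # synthetic 10 Hz input motion
residual = 0.35 * np.sin(2 * np.pi * 10 * t + 0.3)  # synthetic residual motion
print(round(percent_reduction(drive, residual, fs, 10.0), 1))  # 65.0
```

Fitting sine and cosine terms together makes the estimate insensitive to the phase lag between input and residual.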
We then compared the model-eye data with a computed system bandwidth based on (1) the bandwidth of the strip-based tracking algorithm in the TSLO (50% amplitude correction at 400 Hz [15]), (2) a measured TSLO motion trace latency of 2.5 ms, and (3) the frequency response curve of the active tip/tilt tracking mirror (50% drop in amplitude at 80 Hz). The frequency response curve of the tip/tilt mirror was measured experimentally by recording the mirror's scan amplitude in response to a fixed-amplitude sine wave input as a function of temporal frequency. The overall computed performance is shown as the purple curve in Fig. 2. At 10 Hz, the computed amplitude reduction is 84%, with a 50% correction cutoff just above 30 Hz. The experimental bandwidth is slightly lower but compares reasonably well with the computed bandwidth.
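A useful sanity check on the computed curve is a simplified model in which the only imperfection is the 2.5 ms latency; the strip-algorithm and mirror frequency responses are omitted. Correcting a sinusoid with a copy delayed by τ leaves a residual of 2|sin(πfτ)| times the input amplitude. This delay-only sketch is our simplification, not the full model behind the purple curve; it gives about 84% reduction at 10 Hz and a 50% point near 32 Hz, close to the figures quoted above.

```python
import numpy as np


def delay_only_reduction(freq_hz, latency_s=2.5e-3):
    """Fractional amplitude reduction for an otherwise ideal tracker lagging by latency_s.

    Correcting a sinusoid with a delayed copy of itself leaves a residual of
    |1 - exp(-i 2 pi f tau)| = 2 |sin(pi f tau)| times the input amplitude.
    """
    return 1.0 - 2.0 * np.abs(np.sin(np.pi * np.asarray(freq_hz) * latency_s))


def half_correction_frequency(latency_s=2.5e-3):
    """Frequency at which the delay-only model removes 50% of the amplitude."""
    return float(np.arcsin(0.25) / (np.pi * latency_s))


print(round(float(delay_only_reduction(10.0)), 3))  # 0.843
print(round(half_correction_frequency(), 1))        # 32.2
```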

Fig. 2.

Amplitude reduction (%) versus frequency for the model eye (measured experimentally, green) and for the computed system bandwidth (modeled from TSLO performance with a 2.5 ms motion trace latency and the tip/tilt mirror's frequency response curve, purple).

3.2 Human eye motion frequency analysis

Human eye frequency data are reported here for four normal subjects aged 26 to 45 years, all experienced with AOSLO imaging protocols. A bite bar was used to minimize head motion in all human eye experiments.

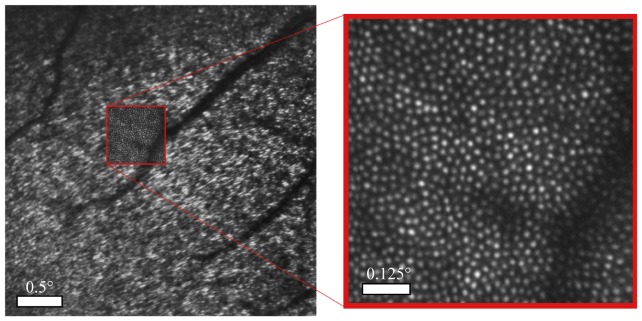

Subjects were instructed to fixate on three corners of the 3.5° TSLO raster (upper left, lower left, and lower right; ±1.75°), so that the same three retinal locations surrounding the fovea were imaged. TSLO and AOSLO videos of 300 frames (10 seconds) each were captured simultaneously to compare the raw eye motion recorded by the TSLO with the residual eye motion in the resulting AOSLO videos. All images were recorded in conventional fundus view. Figure 3 shows the average of a 300-frame AOSLO video overlaid on an average of 300 frames of the TSLO video.

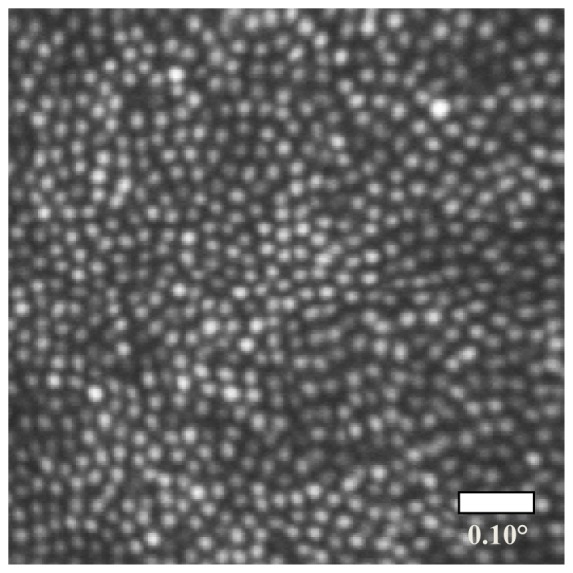

Fig. 3.

The TSLO image is shown on the left (the subject is fixating on a corner of the TSLO raster and, in this case, the fovea is at the lower left of the image), with the smaller AOSLO field outlined by a red box. Note that the TSLO image is rich in structure: high-contrast blood vessels, cones at larger eccentricities (upper right of the image), and interference artifacts at lower eccentricities. On the right is the high-resolution AOSLO image, in which each white spot represents light scattered from an individual cone photoreceptor. The fields of view of the TSLO and AOSLO were 3.5° and 0.75°, respectively.

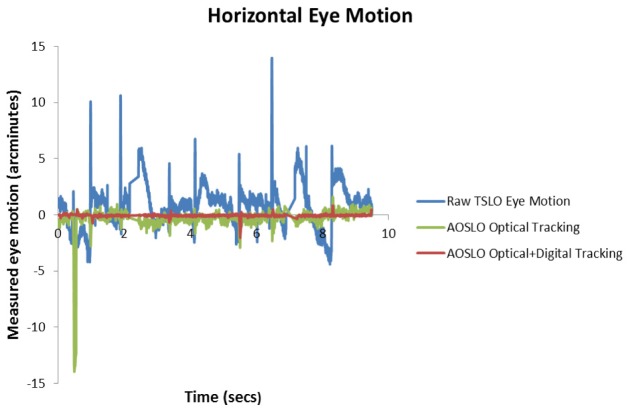

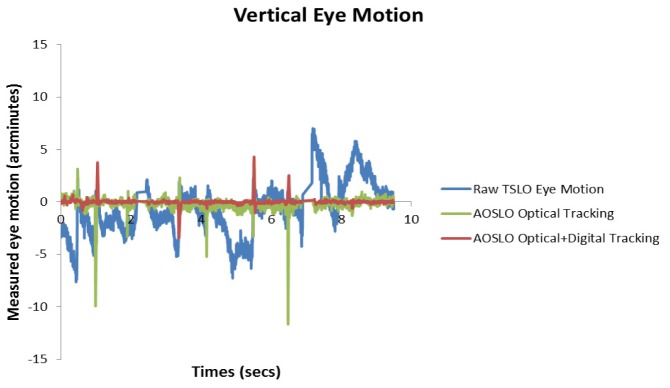

To make the percent reduction of eye motion at each stage clear, we first define the different stages of tracking. A “raw video” refers to a TSLO video that has had no motion correction and contains the actual eye motion of the subject over the imaging session. “Optical tracking” refers to active tracking with the tip/tilt mirror in the AOSLO; its result is seen in a raw AOSLO video, which appears mostly stabilized. “Optical + digital tracking” refers to correction by both the active tracking of the tip/tilt mirror (optical tracking) and the final stabilization of the AOSLO video using the image-based eye-tracking software (digital tracking). The videos in Fig. 4 show real-time movies of the TSLO (not tracked) and AOSLO (tracked) systems before and after digital and optical tracking, while Figs. 5 and 6 show the eye motion traces generated from these videos.

Fig. 4.

Image generated from the registered sum of 300 frames from the AOSLO movie with both optical and digital tracking enabled. Note that roughly halfway down the image, a slight compression is present due to eye motion captured in the reference frame. The steps to obtaining the final image are best shown by a sequence of videos. Visualization 1 (8.5MB, MP4) shows the raw 3.5° TSLO video with the natural fixational eye motion. Visualization 2 (8.5MB, MP4) shows the TSLO video after online image-based stabilization. The byproduct of this stabilization is the eye motion trace, which is sent to the tip/tilt mirror in the AOSLO. Visualization 3 (8.3MB, MP4) shows the AOSLO video with correction from the TSLO (optical stabilization). The features in the video remain relatively stable, although high-frequency artifacts are present. Visualization 4 (8.3MB, MP4) shows the same 0.75° AOSLO video after online digital stabilization. It is cropped to show only the portion of the frame that is not affected by tip/tilt mirror artifacts. Aside from some frames where tracking failed, the features remain stable to within a fraction of a cone diameter.

Fig. 5.

Measured horizontal eye motion traces from the media files in Fig. 4. The blue curve depicts the natural fixational eye motion of the subject over the imaging session (as measured in the TSLO system); its large spikes occur during microsaccades and blinks, as seen in the videos. The green curve represents the remaining eye motion after optical tracking was enabled (as measured in the AOSLO system). Finally, the red curve shows the remaining motion with both optical and digital software tracking enabled in the AOSLO system. Any spikes in the green or red curves result from tracking errors.

Fig. 6.

Measured vertical eye motion traces from the subject in the provided media files.

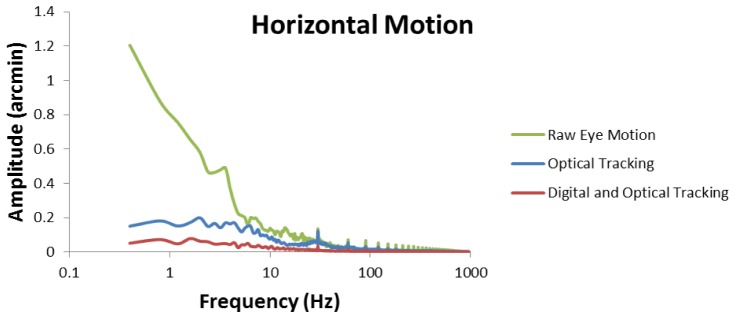

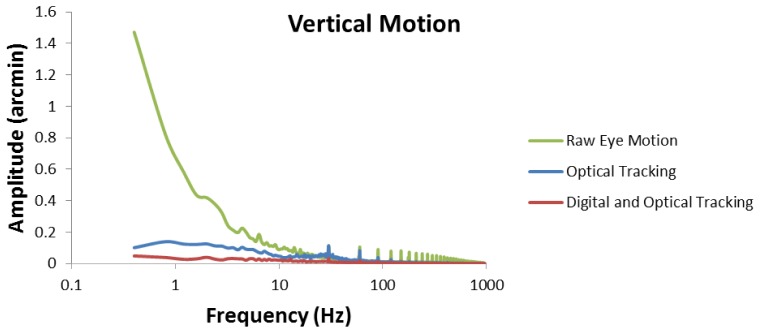

Of the 12 videos recorded at the three locations (four subjects × three locations), 9 were analyzed for residual motion using an offline stabilization program set to generate eye motion traces at 64 strips per frame, or 1920 Hz [16]. The analyzed videos were those in which at least 285 of the 300 recorded frames (10 s) were usable (i.e., videos without multiple blinks and/or large saccades that caused frame rejection). AOSLO videos were cropped to eliminate the mirror response delay at the top of each frame. The eye motion data were then resampled to a uniformly sampled 960 Hz time series and Fourier-transformed to generate amplitude vs. frequency spectra. The spectra were calculated for each of the nine videos separately (so that no subject or location was weighted more than another) and then averaged to show the overall frequency content across the four subjects. Figures 7 and 8 show the average frequency content of horizontal and vertical motion, respectively, for the raw eye motion from the TSLO videos, the residual motion in the raw AOSLO videos after optical tracking, and the final residual motion with both optical and digital eye tracking. The “Optical Tracking” curve, shown in blue, has an amplitude of motion of at most 0.2 arcminutes for horizontal motion and 0.14 arcminutes for vertical motion. The “Digital + Optical Tracking” curve shows that the amplitude of eye motion is kept below 0.08 arcminutes at all frequencies for horizontal motion and below 0.05 arcminutes for vertical motion. This corresponds to an average amplitude reduction of 95%. The peaks at the 30 Hz frame rate and its harmonics are artifacts arising from torsion of the eye and from distortions in the TSLO and AOSLO reference frames; these artifacts are explained further in the Discussion.
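The spectral steps described above (resampling to a uniform 960 Hz series, Fourier transforming, and averaging one spectrum per video) can be sketched with NumPy. Linear interpolation, mean removal, single-sided amplitude scaling and the function names are our assumptions; the authors' offline program is not reproduced here.

```python
import numpy as np


def amplitude_spectrum(t_s, x_arcmin, n_samples, fs_hz=960.0):
    """Resample one motion trace to fs_hz and return (freqs_hz, amplitude_arcmin).

    t_s may be unevenly spaced; linear interpolation onto a uniform grid of
    n_samples points is an assumption of this sketch.
    """
    t_s = np.asarray(t_s, dtype=float)
    t_uniform = t_s[0] + np.arange(n_samples) / fs_hz
    if t_uniform[-1] > t_s[-1]:
        raise ValueError("trace is shorter than n_samples / fs_hz")
    x = np.interp(t_uniform, t_s, x_arcmin)
    x = x - x.mean()                                # remove the DC offset
    amp = 2.0 * np.abs(np.fft.rfft(x)) / n_samples  # single-sided amplitude
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs_hz)
    return freqs, amp


def mean_spectrum(traces, n_samples, fs_hz=960.0):
    """Average the spectra of several (t_s, x_arcmin) traces, one weight per video."""
    spectra = [amplitude_spectrum(t, x, n_samples, fs_hz)[1] for t, x in traces]
    return np.fft.rfftfreq(n_samples, d=1.0 / fs_hz), np.mean(spectra, axis=0)
```

Using the same `n_samples` for every video keeps the frequency bins aligned so the spectra can be averaged directly; it must not exceed the shortest trace (for example, 285 usable frames at 30 Hz is 9.5 s, or 9120 samples at 960 Hz).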
Bandwidth estimates were not computed directly from these data, since the average spectra from only 9 videos were too noisy to yield sensible results at frequencies above 5 Hz. A basic analysis shows that the bandwidth is close to, but lower than, both the computed bandwidth and that measured in the model eye.

Fig. 7.

The reduction of horizontal eye motion as a function of frequency. Raw eye motion represents the actual eye motion recorded by the TSLO system. Optical tracking refers to the raw AOSLO video, in which the tip/tilt mirror actively corrected for eye motion. Digital and optical tracking represents active eye tracking plus subsequent software correction. Reference-frame and torsion artifacts at 30 Hz (and its harmonics) are visible in the plot.

Fig. 8.

The reduction of vertical eye motion as a function of frequency.

4. Discussion

The TSLO can be combined with the AOSLO system to provide real-time optical and digital eye-tracking. Motion traces from the TSLO were sent as voltage signals to the AOSLO tip/tilt mirror to compensate for eye motion. An average amplitude reduction of 95% was measured in the final stabilized AOSLO videos (optical + digital tracking).

The advantages of using the TSLO for eye-tracking are five-fold.

1.) Although the bandwidth of the TSLO is ultimately limited by the motion trace reporting latency of 2.5 ms, the correction is more than sufficient to track the motion of the eye, whose power spectrum of motion falls off with approximately a 1/frequency dependence [16]. The stability of the optically stabilized AOSLO raster makes it better suited for subsequent digital correction of the residual, uncorrected motion.

2.) The stability of the optically stabilized AOSLO raster is also expected to yield better adaptive optics correction, since the wavefront beacon signal originates from a more stable retinal location. This may be especially beneficial in patients with age-related macular degeneration, a disease that results in variable topography (drusen) and reflectance (geographic atrophy) across the retinal surface.

3.) By compensating for motion artifacts, stimulus delivery to specific retinal locations is expected to be more repeatable and therefore more reliable. This could prove highly beneficial in patient imaging and vision testing, since many subjects with retinal diseases demonstrate compromised fixation stability, with larger fixational eye movements than normal subjects [12,13].

4.) The TSLO offers a larger and adjustable field of view compared with AOSLO systems. This can help operators orient themselves during an imaging session by locating larger retinal landmarks, such as blood vessels or certain cones. A FOV of 3.5° was used in these experiments, but it can easily be enlarged by exchanging the final lens of the TSLO system.

5.) The TSLO and AOSLO are modular: they can be used together or separately simply by inserting or removing the notch filter. This allows a laboratory to run experiments on either system alone or on both together.

The TSLO system also has disadvantages for external eye-tracking. Eye motion traces sent to the AOSLO are based on the displacements of the eye from a reference frame. Because the retina is scanned over time, every frame in a movie, including the reference frame, has its own unique distortions. The use of a distorted reference frame gives rise to periodic artifacts in the eye motion trace (at the frame rate and its higher harmonics) that are sent to the tip/tilt mirror. However, the operator receives real-time feedback when a poor reference frame has been chosen: the stabilized image appears warped, and a new reference frame can be selected. These artifacts are also readily apparent in the AOSLO with optical tracking. In fact, since the frame rate of the periodic TSLO reference frame artifact and that of the AOSLO are very close but not necessarily identical, the distortion often appears as a scrolling distortion in the AOSLO video at the beat frequency between the two frame rates. These reference frame artifacts are visible in the frequency spectrum as peaks at 30 Hz and higher harmonics. Therefore, the frequency contributions at 30, 60, 90 Hz, etc. shown in this paper are not solely due to eye motion and should not be interpreted as such. Next, whenever the eye makes movements orthogonal to the fast scan direction, the TSLO loses its continuous track, and the frame acquired after the vertical movements will contain strips that do not overlap with the reference frame, as stated in Sheehy et al. [15]. At present, the software holds the last recorded eye position during these blank intervals until new, reliable eye-tracking data are generated. Another disadvantage is that TSLO tracking is performed in open loop. Open-loop tracking requires a one-time initial calibration so that the motion traces from the larger-field TSLO are correctly scaled for the tip/tilt mirror in the AOSLO system.
Calibration is not required, however, for closed-loop tracking with the same active tip/tilt mirror, as described by Yang et al. [20]. Additionally, the extent of eye motion correction has a hard limit set by the size of the current system optics. Eye motion larger than the maximum FOV that the AOSLO system optics can accommodate (in this case, up to 4°) will vignette the beam, and data may be lost. Lastly, proper alignment of the TSLO and AOSLO pupil planes at the eye is crucial for high-resolution imaging in both systems simultaneously. We observed that if the TSLO alignment drifted even slightly during an imaging session, image quality was severely affected.
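The period of the scrolling distortion follows from the beat between the TSLO and AOSLO frame rates. The frame rates below are hypothetical values close to 30 Hz, chosen only for illustration, and the function name is ours.

```python
def scroll_period_s(tslo_fps: float, aoslo_fps: float) -> float:
    """Period (s) of the scrolling reference-frame artifact: 1 / |f_TSLO - f_AOSLO|."""
    beat_hz = abs(tslo_fps - aoslo_fps)
    return float("inf") if beat_hz == 0 else 1.0 / beat_hz


# Hypothetical frame rates: a 0.2 Hz beat scrolls through the frame about every 5 s.
print(scroll_period_s(30.0, 29.8))
```

When the two frame rates are exactly equal, the beat vanishes and the artifact no longer scrolls, appearing instead as a stationary distortion.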

In the AOSLO system, active tracking was achieved with a single tip/tilt mirror. Replacing the system's galvanometer with a single element that both provides the slow scan and corrects for the eye's motion is a clear benefit, since no additional telescope is needed for another scanning element. However, we had many difficulties using the tip/tilt mirror for active tracking. Chief among them, the mirror's frequency bandwidth is inadequate for a 30 Hz sawtooth ramp signal. Monitoring the mirror's response to the input signal on an oscilloscope, we saw that the linear portion of the ramp was reproduced adequately, but large “ringing” artifacts followed each fly-back of the scanner and generated significant distortion at the top of each frame. Electronic tuning of the scanner reduced these artifacts substantially but could not eliminate them completely. For users who are interested only in imaging, this is not an issue, since the affected top portion of each image can simply be cropped and the remaining portion used. For tracking applications, however, a continuous eye motion record can be crucial, and a portion of the image cannot simply be ignored. Given the limits of current tip/tilt mirror technology, using two galvanometers instead of a single tip/tilt mirror could be a better solution.

5. Conclusion

The TSLO-AOSLO hybrid system provides both optical and digital eye-tracking capabilities, allowing the retina to be tracked over a larger range of motion than was previously possible with our AOSLO system alone. The larger FOV of the TSLO also helps the operator orient and locate retinal landmarks more easily. We demonstrated high-resolution eye tracking with an accuracy down to the scale of a single cone photoreceptor. In the future, this should allow more robust imaging and functional testing both of normal subjects imaged with smaller fields and of patients with fixational instability.
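As a rough check on the cone-scale claim, the residual motions reported in the abstract can be converted to distances on the retina. The sketch assumes a retinal magnification of about 291 µm per degree (a common approximation for a typical adult eye; the true value varies with axial length) and a foveal cone center-to-center spacing of roughly 2 µm. Both constants are approximations introduced for illustration, not values measured in this study.

```python
UM_PER_DEG = 291.0     # assumed retinal magnification (approximate, typical adult eye)
CONE_SPACING_UM = 2.0  # assumed foveal cone center-to-center spacing (approximate)


def arcmin_to_um(arcmin, um_per_deg=UM_PER_DEG):
    """Convert a visual angle in arcminutes to a distance on the retina in micrometers."""
    return arcmin / 60.0 * um_per_deg


# Residual motion values taken from the abstract (arcmin).
residuals = {
    "max horizontal, optical tracking": 0.20,
    "max vertical, optical tracking": 0.14,
    "mean, after digital stabilization": 0.06,
}
for label, arcmin in residuals.items():
    um = arcmin_to_um(arcmin)
    print(f"{label}: {arcmin:.2f} arcmin = {um:.2f} um "
          f"({um / CONE_SPACING_UM:.2f} of a cone spacing)")
```

Under these assumptions, even the largest residual amplitude (0.20 arcmin, just under 1 µm) is smaller than one foveal cone spacing.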

Disclosure Statement

Roorda, Sheehy and Tiruveedhula hold intellectual property related to the TSLO. Roorda and Sheehy have a financial interest in TSLO technology. Roorda holds a patent and has a financial interest in Canon USA, Inc. Both he and the company stand to benefit from publication of the results of this research.

Acknowledgments

This research was supported by grants from the Macula Vision Research Foundation (AR, CKS), the National Institutes of Health EY014735 and EY023591 (AR), and Fight for Sight (RS). Ramkumar Sabesan holds a Career Award at the Scientific Interface from the Burroughs Wellcome Fund. Scott Stevenson and Girish Kumar provided the offline motion analysis software. This paper was presented as a poster at the 2014 ARVO Annual Meeting, Orlando, FL, May 2014.

References and links

- 1. Liang J., Williams D. R., Miller D. T., “Supernormal vision and high-resolution retinal imaging through adaptive optics,” J. Opt. Soc. Am. A 14(11), 2884–2892 (1997). 10.1364/JOSAA.14.002884

- 2. Roorda A., Romero-Borja F., Donnelly W., III, Queener H., Hebert T., Campbell M., “Adaptive optics scanning laser ophthalmoscopy,” Opt. Express 10(9), 405–412 (2002). 10.1364/OE.10.000405

- 3. Dubra A., Sulai Y., “Reflective afocal broadband adaptive optics scanning ophthalmoscope,” Biomed. Opt. Express 2(6), 1757–1768 (2011). 10.1364/BOE.2.001757

- 4. Dubra A., Sulai Y., Norris J. L., Cooper R. F., Dubis A. M., Williams D. R., Carroll J., “Noninvasive imaging of the human rod photoreceptor mosaic using a confocal adaptive optics scanning ophthalmoscope,” Biomed. Opt. Express 2(7), 1864–1876 (2011). 10.1364/BOE.2.001864

- 5. Harmening W. M., Tuten W. S., Roorda A., Sincich L. C., “Mapping the Perceptual Grain of the Human Retina,” J. Neurosci. 34(16), 5667–5677 (2014). 10.1523/JNEUROSCI.5191-13.2014

- 6. Tuten W. S., Tiruveedhula P., Roorda A., “Adaptive optics scanning laser ophthalmoscope-based microperimetry,” Optom. Vis. Sci. 89(5), 563–574 (2012). 10.1097/OPX.0b013e3182512b98

- 7. Sincich L. C., Zhang Y., Tiruveedhula P., Horton J. C., Roorda A., “Resolving single cone inputs to visual receptive fields,” Nat. Neurosci. 12(8), 967–969 (2009). 10.1038/nn.2352

- 8. Williams D. R., “Imaging single cells in the living retina,” Vision Res. 51(13), 1379–1396 (2011). 10.1016/j.visres.2011.05.002

- 9. Martinez-Conde S., Macknik S. L., Hubel D. H., “The role of fixational eye movements in visual perception,” Nat. Rev. Neurosci. 5(3), 229–240 (2004). 10.1038/nrn1348

- 10. Arathorn D. W., Yang Q., Vogel C. R., Zhang Y., Tiruveedhula P., Roorda A., “Retinally stabilized cone-targeted stimulus delivery,” Opt. Express 15(21), 13731–13744 (2007). 10.1364/OE.15.013731

- 11. Wang Q., Tuten W. S., Lujan B. J., Holland J., Bernstein P. S., Schwartz S. D., Duncan J. L., Roorda A., “Adaptive optics microperimetry and OCT images show preserved function and recovery of cone visibility in macular telangiectasia Type 2 retinal lesions,” Invest. Ophthalmol. Vis. Sci. 56(2), 778–786 (2015). 10.1167/iovs.14-15576

- 12. Martinez-Conde S., “Fixational eye movements in normal and pathological vision,” Prog. Brain Res. 154, 151–176 (2006).

- 13. Kumar G., Chung S. T., “Characteristics of fixational eye movements in people with macular disease,” Invest. Ophthalmol. Vis. Sci. 55(8), 5125–5133 (2014). 10.1167/iovs.14-14608

- 14. Cherici C., Kuang X., Poletti M., Rucci M., “Precision of sustained fixation in trained and untrained observers,” J. Vis. 12(6), 1–16 (2012).

- 15. Sheehy C. K., Yang Q., Arathorn D. W., Tiruveedhula P., de Boer J. F., Roorda A., “High-speed, image-based eye tracking with a scanning laser ophthalmoscope,” Biomed. Opt. Express 3(10), 2611–2622 (2012). 10.1364/BOE.3.002611

- 16. Stevenson S. B., Roorda A., “Correcting for miniature eye movements in high resolution scanning laser ophthalmoscopy,” Proc. SPIE 5688, 145–151 (2005).

- 17. Burns S. A., Tumbar R., Elsner A. E., Ferguson D., Hammer D. X., “Large-field-of-view, modular, stabilized, adaptive-optics-based scanning laser ophthalmoscope,” J. Opt. Soc. Am. A 24(5), 1313–1326 (2007). 10.1364/JOSAA.24.001313

- 18. Ferguson R. D., Zhong Z., Hammer D. X., Mujat M., Patel A. H., Deng C., Zou W., Burns S. A., “Adaptive optics scanning laser ophthalmoscope with integrated wide-field retinal imaging and tracking,” J. Opt. Soc. Am. A 27(11), A265–A277 (2010). 10.1364/JOSAA.27.00A265

- 19. Hammer D. X., Ferguson R. D., Bigelow C. E., Iftimia N. V., Ustun T. E., Burns S. A., “Adaptive optics scanning laser ophthalmoscope for stabilized retinal imaging,” Opt. Express 14(8), 3354–3367 (2006). 10.1364/OE.14.003354

- 20. Yang Q., Zhang J., Nozato K., Saito K., Williams D. R., Roorda A., Rossi E. A., “Closed-loop optical stabilization and digital image registration in adaptive optics scanning light ophthalmoscopy,” Biomed. Opt. Express 5(9), 3174–3191 (2014). 10.1364/BOE.5.003174

- 21. Vienola K. V., Braaf B., Sheehy C. K., Yang Q., Tiruveedhula P., Arathorn D. W., de Boer J. F., Roorda A., “Real-time eye motion compensation for OCT imaging with tracking SLO,” Biomed. Opt. Express 3(11), 2950–2963 (2012). 10.1364/BOE.3.002950

- 22. Braaf B., Vienola K. V., Sheehy C. K., Yang Q., Vermeer K. A., Tiruveedhula P., Arathorn D. W., Roorda A., de Boer J. F., “Real-time eye motion correction in phase-resolved OCT angiography with tracking SLO,” Biomed. Opt. Express 4(1), 51–65 (2013). 10.1364/BOE.4.000051

- 23. Ferguson R. D., Hammer D. X., Paunescu L. A., Beaton S., Schuman J. S., “Tracking optical coherence tomography,” Opt. Lett. 29(18), 2139–2141 (2004). 10.1364/OL.29.002139

- 24. Hammer D., Ferguson R. D., Iftimia N., Ustun T., Wollstein G., Ishikawa H., Gabriele M., Dilworth W., Kagemann L., Schuman J., “Advanced scanning methods with tracking optical coherence tomography,” Opt. Express 13(20), 7937–7947 (2005). 10.1364/OPEX.13.007937

- 25. Kocaoglu O. P., Ferguson R. D., Jonnal R. S., Liu Z., Wang Q., Hammer D. X., Miller D. T., “Adaptive optics optical coherence tomography with dynamic retinal tracking,” Biomed. Opt. Express 5(7), 2262–2284 (2014). 10.1364/BOE.5.002262

- 26. Porter J., Queener H., Lin J., Thorn K., Awwal A. A., Adaptive Optics for Vision Science: Principles, Practices, Design and Applications (John Wiley & Sons, 2006), Chap. 10.

- 27. Gómez-Vieyra A., Dubra A., Malacara-Hernández D., Williams D. R., “First-order design of off-axis reflective ophthalmic adaptive optics systems using afocal telescopes,” Opt. Express 17(21), 18906–18919 (2009). 10.1364/OE.17.018906

- 28. Harmening W. M., Tiruveedhula P., Roorda A., Sincich L. C., “Measurement and correction of transverse chromatic offsets for multi-wavelength retinal microscopy in the living eye,” Biomed. Opt. Express 3(9), 2066–2077 (2012). 10.1364/BOE.3.002066

- 29. Yang Q., Arathorn D. W., Tiruveedhula P., Vogel C. R., Roorda A., “Design of an integrated hardware interface for AOSLO image capture and cone-targeted stimulus delivery,” Opt. Express 18(17), 17841–17858 (2010). 10.1364/OE.18.017841

- 30. Mulligan J. B., “Recovery of motion parameters from distortions in scanned images,” in Proceedings of the NASA Image Registration Workshop (IRW97) (NASA Goddard Space Flight Center, MD, 1997).