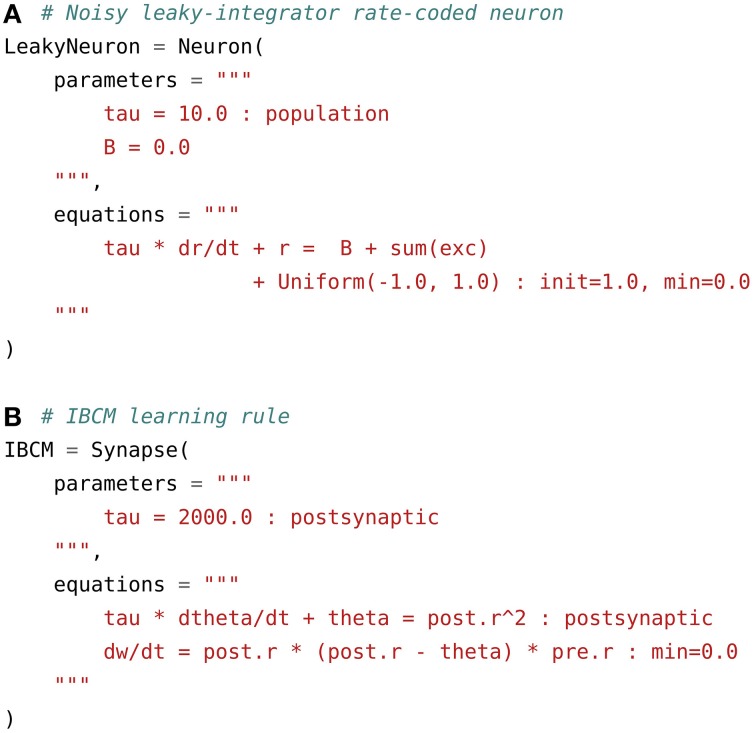

Figure 2.

Examples of rate-coded neuron and synapse definitions. (A) Noisy leaky-integrator rate-coded neuron. It defines a global parameter tau for the time constant and a local parameter B for the baseline firing rate. The evolution of the firing rate r over time is governed by an ODE integrating the weighted sum of excitatory inputs sum(exc) and the baseline. Noise is introduced by the Uniform(-1.0, 1.0) term, so that a value is drawn from the uniform range [−1, 1] at each time step and for each neuron. The initial value of r at t = 0 is set to 1.0 through the init flag, and the minimal value of r is set to zero. (B) Rate-coded synapse implementing the IBCM learning rule. It defines a global parameter tau, which is used to compute the sliding temporal mean of the square of the post-synaptic firing rate in the variable theta. This variable has the flag postsynaptic, as it needs to be computed only once per post-synaptic neuron. The connection weights w are then updated according to the IBCM rule and kept non-negative through the min=0.0 flag.
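To make panel (A) more concrete, the following plain-Python sketch reproduces what such a neuron definition computes, integrated with the explicit Euler method. It assumes the ODE has the form tau · dr/dt + r = sum(exc) + B + Uniform(−1, 1), which is one plausible reading of the caption rather than the exact definition shown in the figure. The function name `simulate_leaky_integrator`, the callable `exc_input` standing in for sum(exc), the time step `dt`, and all default values are hypothetical, and the sketch does not use the simulator's own API.

```python
import random
from typing import Callable, List, Optional


def simulate_leaky_integrator(
    n_neurons: int,
    n_steps: int,
    tau: float = 10.0,                        # global: one value for the whole population (assumed default)
    baselines: Optional[List[float]] = None,  # local: one baseline B per neuron
    exc_input: Optional[Callable[[int, int], float]] = None,  # hypothetical stand-in for sum(exc)
    dt: float = 1.0,
    seed: Optional[int] = None,
) -> List[List[float]]:
    """Euler integration of the ASSUMED ODE
        tau * dr/dt + r = sum(exc) + B + Uniform(-1, 1)
    Returns the rates of all neurons at every step, including t = 0.
    """
    rng = random.Random(seed)
    B = baselines if baselines is not None else [0.0] * n_neurons
    r = [1.0] * n_neurons  # init=1.0: value of r at t = 0
    history = [list(r)]
    for t in range(n_steps):
        for i in range(n_neurons):
            exc = exc_input(t, i) if exc_input is not None else 0.0
            noise = rng.uniform(-1.0, 1.0)  # fresh draw for each neuron and each step
            drdt = (exc + B[i] + noise - r[i]) / tau
            r[i] = max(0.0, r[i] + dt * drdt)  # min=0.0: clamp after each update
        history.append(list(r))
    return history


if __name__ == "__main__":
    rates = simulate_leaky_integrator(3, 200, baselines=[0.0, 2.0, 5.0], seed=42)
    print(rates[-1])  # each rate settles near its baseline (plus noise), never below zero
```

Clamping after every update is the simplest way to mimic a lower bound on r; the noise term is redrawn independently per neuron and per step, matching the caption's description of the random variable.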
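Panel (B) can be sketched in the same way. The snippet below is a hypothetical plain-Python Euler step, not the figure's definition: it assumes that theta follows tau · dθ/dt + θ = r_post², and that the weights follow dw/dt = eta · r_post · (r_post − θ) · r_pre. The learning rate `eta`, the function name `ibcm_step`, and all default values are assumptions not given in the caption. A single theta is passed per call, mirroring the postsynaptic flag (one value per post-synaptic neuron shared by all its incoming synapses).

```python
from typing import List, Tuple


def ibcm_step(
    w: List[float],
    pre_r: List[float],
    post_r: float,
    theta: float,
    tau: float = 2000.0,  # assumed default
    eta: float = 0.01,    # hypothetical learning rate, not named in the caption
    dt: float = 1.0,
) -> Tuple[List[float], float]:
    """One explicit Euler step of an ASSUMED IBCM rule for the synapses
    converging onto a single post-synaptic neuron:

        tau * dtheta/dt + theta = post_r**2              (one theta per post neuron)
        dw/dt = eta * post_r * (post_r - theta) * pre_r  (clamped at min=0.0)

    Both updates use the values from the start of the step.
    """
    # Sliding temporal mean of the squared post-synaptic rate.
    new_theta = theta + dt * (post_r ** 2 - theta) / tau
    # Potentiation when post_r > theta, depression when post_r < theta.
    new_w = [
        max(0.0, wi + dt * eta * post_r * (post_r - theta) * ri)
        for wi, ri in zip(w, pre_r)
    ]
    return new_w, new_theta
```

Because theta tracks the square of the post-synaptic rate, sustained high activity raises the threshold and turns potentiation into depression. For this to stabilize the weights, theta generally has to adapt quickly enough relative to eta.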