Abstract

Expertise effects for nonface objects in face-selective brain areas may reflect stable aspects of neuronal selectivity that determine how observers perceive objects. However, bottom-up (e.g., clutter from irrelevant objects) and top-down manipulations (e.g., attentional selection) can influence activity, affecting the link between category selectivity and individual performance. We test the prediction that individual differences expressed as neural expertise effects for cars in face-selective areas are sufficiently stable to survive clutter and manipulations of attention. Additionally, behavioral work and work using event related potentials suggest that expertise effects may not survive competition; we investigate this using functional magnetic resonance imaging. Subjects varying in expertise with cars made 1-back decisions about cars, faces, and objects in displays containing one or 2 objects, with only one category attended. Univariate analyses suggest car expertise effects are robust to clutter, dampened by reducing attention to cars, but nonetheless more robust to manipulations of attention than competition. While univariate expertise effects are severely abolished by competition between cars and faces, multivariate analyses reveal new information related to car expertise. These results demonstrate that signals in face-selective areas predict expertise effects for nonface objects in a variety of conditions, although individual differences may be expressed in different dependent measures depending on task and instructions.

Keywords: attention, competition, expertise, fMRI, fusiform

Introduction

Our experience with the world changes our brains. In particular, gaining visual expertise with a category of objects can change the way we perceive these objects (Diamond and Carey 1986; Bukach et al. 2010) and affect their representations in visual cortex (Gauthier et al. 1999; Op de Beeck et al. 2006). Scientists have studied expertise effects for objects in face-selective regions of the ventral visual pathway to test the hypothesis that expertise with faces can account for face-selectivity in these regions. Expertise has been found to increase activity in face-selective areas for objects in domains as varied as birds, cars, chess, and radiological images (Gauthier et al. 2000; Xu 2005; Harley et al. 2009; Harel et al. 2010; Bilalic et al. 2011). Using high-resolution imaging and analyses in individual regions of interest in flattened cortex, some of us reported that expertise effects in the fusiform face area (FFA) were obtained in a small area centered on the peak of face selectivity (McGugin, Gatenby, et al. 2012). But even outside of the debate regarding the origins of face selectivity, expertise effects can help us understand the functional organization of the brain. Here and elsewhere (Gauthier et al. 1999; 2000; McGugin, Gatenby, et al. 2012), we use the term “expertise effect” to refer to a correlation between individual behavioral performance for objects of a given category with activity in the brain for other objects from this same category, sometimes in a different task (Gauthier et al. 2005). Such expertise effects provide us with a window into how our experience with objects shapes category-selectivity in the brain.

While such expertise effects are correlational, they are generally interpreted as reflecting stable aspects of neuronal selectivity for objects. These stable aspects of selectivity in turn determine how an observer perceives these objects, thereby accounting for behavioral advantages in expert performance. This interpretation is supported by converging evidence from a variety of studies. In one study, the FFA's response to cars predicted the behavioral performance with other cars measured months later (Gauthier et al. 2005) suggesting a stable skill was measured. In training experiments, the degree of holistic processing subjects acquired with objects from a novel category was associated with activity for these objects in (Gauthier and Tarr 2002) or near (Wong et al. 2009) the FFA. In addition, lesions in the fusiform gyrus can result in an inability to acquire expertise normally (Behrmann et al. 2005) or at least to use the same strategies as controls (Bukach et al. 2012). These studies converge with those reporting expertise effects in familiar domains to suggest that perceptual expertise in domains where visually similar objects are individuated depends on specialization in occipitotemporal cortex, particularly in FFA.

However, an alternative is that expertise effects do not reveal stable, trait-like aspects of functional specialization that are only changed by considerable training, but rather reflect state-like aspects of functional activity associated with how experts look at objects in their domain of expertise. Indeed, experts are likely to be more interested in objects in their domain of expertise and attention can boost signals at virtually all levels of the visual system (Wojciulik et al. 1998; Murray and Wojciulik 2004). Thus, Harel et al. (2010) suggested that expertise effects for nonface objects such as cars can be largely explained by such differences in attention. This is not a new question. Gauthier et al. (2000) reported that attending to object identity recruited the FFA more than attention to object location, but still found an effect of expertise during a location task. Car expertise effects have been obtained during judgments of identity, location, or during attention to local parts (Gauthier et al. 2000; 2005). One idea was that these effects would reflect attentional modulation specific to blocked designs, but a replication using an event-related paradigm obtained similar expertise effects (Xu 2005). Harel et al. revisited this question by asking car novices and experts to attend to either cars or planes in an alternating sequence, and they observed expertise effects only when car experts attended to cars. These expertise effects were found in several brain areas, which was not surprising given that face selectivity is also found across the brain (e.g., Hoffman and Haxby 2000; Rossion et al. 2012). However, Harel et al. found effects of expertise in putative V1 (or early visual cortex, EVC), which they took as evidence that expertise effects reflect top-down voluntary engagement. However, other expertise studies with cars or birds had not obtained similar expertise effects in EVC (Gauthier et al. 2000; McGugin et al. submitted), and in recent work we argued that the EVC activation observed by Harel et al. was due to their car images occupying significantly more pixels than their plane images. When low-level visual properties of images are controlled, no expertise effects are found in EVC (McGugin et al. submitted). There is, however, another interesting aspect of Harel et al.'s design that helps motivate the present work, which is the possibility that competition between planes and cars limited the expertise effects observed in that study. At least in some cases, expertise with cars is linearly correlated with expertise for planes (McGugin, Gatenby, et al. 2012; McGugin, Richler, et al. 2012). In a number of paradigms, showing faces among nonface objects of expertise reduced behavioral hallmarks of expertise or ERP responses for faces, whether the 2 categories were shown simultaneously or sequentially, and regardless of whether objects were task-relevant or not (Gauthier et al. 2003; Rossion et al. 2004, 2007; Behrmann et al. 2005; McKeeff et al. 2010; McGugin et al. 2011). The presence of objects from another potential category of expertise for car experts may have made it particularly difficult to measure car expertise effects in these conditions. This conjecture is supported by the fact that car experts in Harel et al. were slower than novices, whether they attended or ignored cars.

Here, we explore the influences of attentional manipulations and competition on expertise effects in and outside face-selective areas. In addition to the effects of attention and competition, we wanted to explore the effect of “clutter” (showing multiple objects at once, Reddy and Kanwisher 2007) on expertise effects. Prior work found that multivariate patterns of activity for nonpreferred categories in FFA and the parahippocampal place area (PPA) were not stable enough to survive clutter or reduced attention but, in contrast, the responses for the preferred category (e.g., faces in FFA) were robust to clutter and to some extent to a reduction of attention (Reddy and Kanwisher 2007). Therefore, we measured how behavioral expertise for cars predicted activity in the brain 1) when cars were attended and shown in isolation (a replication of prior expertise studies); 2) when cars were attended but presented in the context of other objects (to test the effect of clutter); 3) when cars were to be ignored and presented in the context of other objects that were attended (to test the effect of attentional selection, or diverted attention, which we will refer to for short as attention), and 4) when cars were attended or ignored in the context of faces, which were also either ignored or attended (to test for the effect of competition). As our main hypothesis, we propose that if the responses to cars in face-selective areas are, like responses to faces, a stable reflection of the visual representation of cars used by car experts, then car expertise effects may be minimally affected by clutter and relatively robust to reductions of attention, but based on prior work on competition, they may be most sensitive to concurrent competition from faces.

Materials and Methods

Subjects

Thirty-three subjects initially took part in the study. Four were excluded from all analyses due to severe motion artifacts (motion greater than the voxel size of 3 mm; Formisano et al. 2005). The remaining 29 subjects were healthy right-handed adults (3 females), 26 ± 4.5 years of age. According to the car expertise index described below, the 3 females were ranked 7th, 8th, and 17th. Informed written consent was obtained from each subject in accordance with guidelines of the institutional review board of Vanderbilt University and Vanderbilt University Medical Center. All participated for monetary compensation and had normal or corrected-to-normal vision.

Behavioral Tests, Stimuli, and Expertise Index

All subjects completed 3 behavioral tasks outside the scanner: a sequential matching expertise test used to quantify individual skill at matching cars (Gauthier et al. 2000, 2005; Grill-Spector et al. 2004; Rossion et al. 2004; Xu 2005; Curby et al. 2009), the Cambridge Face Memory Test (CFMT; Duchaine and Nakayama 2006), and the Vanderbilt expertise test (VET; McGugin, Richler, et al. 2012).

The matching test included 12 blocks of 28 sequential matching trials on cars, planes, and birds (56 images/category). On each trial, the first stimulus appeared for 1000 ms, followed by a 500-ms mask. A second stimulus then appeared and remained visible until subjects made a same or different response, or 5000 ms elapsed. Subjects determined whether the 2 images showed cars/planes of the same make and model regardless of year, or birds of the same species.

In the CFMT, subjects first studied frontal views of 6 target faces for a total of 20 s, followed by an 18-trial introductory learning phase. They were then presented with 30 forced-choice test displays each containing one target face and 2 distractor faces. Subjects were instructed to select the face that matched one of the original 6 target faces. The matching faces varied from their original presentation by means of lighting, pose, or both. Next, subjects were again presented with the 6 target faces to study, followed by 24 test displays presented in Gaussian noise. For a complete description of the CFMT, see Duchaine and Nakayama (2006).

The VET, structurally modeled after the CFMT, included 8 object categories: leaves, owls, butterflies, wading birds, mushrooms, cars, planes, and motorcycles. For each category, target images consisted of 4 exemplars from 6 unique species/models, and distractor images showed 48 exemplars from novel species/models. Before a category block, subjects studied a display with one exemplar from each of 6 species/models. For each test trial, one of the studied exemplars (identical images for the first 12 trials, or transfer images requiring generalization across viewpoint, size, and settings for the subsequent 36 trials) was presented with 2 distractors from another species/model in a forced-choice paradigm. The target image could occur in any of the 3 positions, and subjects indicated which image of the triplet was the studied target. For a complete description of the VET, see McGugin, Richler et al. (2012).

Magnetic Resonance Imaging (MRI) Acquisition

Scanning was performed using a Philips 3-Tesla Intera Achieva MRI scanner with an 8-channel head coil located at the Vanderbilt University Institute for Imaging Science. High-resolution (HR) T1-weighted anatomical volumes were acquired (time repetition [TR], 8.93 ms; time echo [TE], 4.6 ms; flip angle, 9°; field of view [FOV], 256 × 256; slice thickness, 1 mm, no gap; in-plane resolution, 1 × 1 mm; 170 slices acquired in the sagittal plane). For all functional scanning (1 face-localizer scan plus 8 experimental scans) we used standard gradient-echo echoplanar T2*-weighted imaging to obtain functional images (TR, 2000 ms; TE, 35 ms; flip angle, 79°; FOV, 192 × 192; slice thickness, 3 mm, no gap; in-plane resolution, 3 × 3 mm; 34 ascending interleaved slices acquired axially).

Functional Magnetic Resonance Imaging (fMRI) Stimuli and Design

All images were presented with an Apple Macintosh computer running Matlab (MathWorks, Natick, MA) using the Psychophysics Toolbox extension (Brainard 1997). Stimuli were displayed on a rear-projection screen using an Eiki LC-X60 LDP projector with a Navitar zoom lens.

A face-localizer scan used 72 grayscale images (36 faces, 36 objects) in a 1-back detection task with 18 alternating blocks of faces or objects (16 images shown for 1 s) with a 2 s fixation at the beginning and end of each block. Sensitivity did not differ for Face and Object blocks: (hit rate, false alarm rate) Face (0.92, 0.008), Object (0.93, 0.004).

Following the face-localizer scan, subjects completed 8 runs of the main experiment. We used a blocked fMRI design with a modified 1-back memory task in which the presence of distractors and task-relevance of different object categories varied across runs and blocks. Images from 3 different categories (70 images each of faces, cars, and butterflies) were used in the main experiment. Butterflies were selected as a category with which car experts were unlikely to have considerable expertise (in contrast to planes, which have been used in the past [Harel et al. 2010] but have also shown a strong correlation with cars [McGugin, Gatenby, et al. 2012]). The correlation between performance for cars and butterflies in the matching task was r = −0.17, ns. In addition, special effort was taken to equate stimuli within and across categories for low-level image properties using the functions from the Spectrum, Histogram, and Intensity Normalization and Equalization (SHINE) program (Williams et al. 2009; Willenbockel et al. 2010), which equates luminance distribution and Fourier amplitude at each spatial frequency.

All images were presented with the inner edge at 3 degrees on either side of fixation and subtended 6 degrees of visual angle. Images from a given category alternately appeared on the left and right side of fixation, and subjects looked for an immediate image repeat (regardless of location). Repeats occurred either once or twice per block, never in the first or last trial of a block. Subjects pressed their right index or middle finger when they detected a repeat on the left or right side of the screen, respectively.

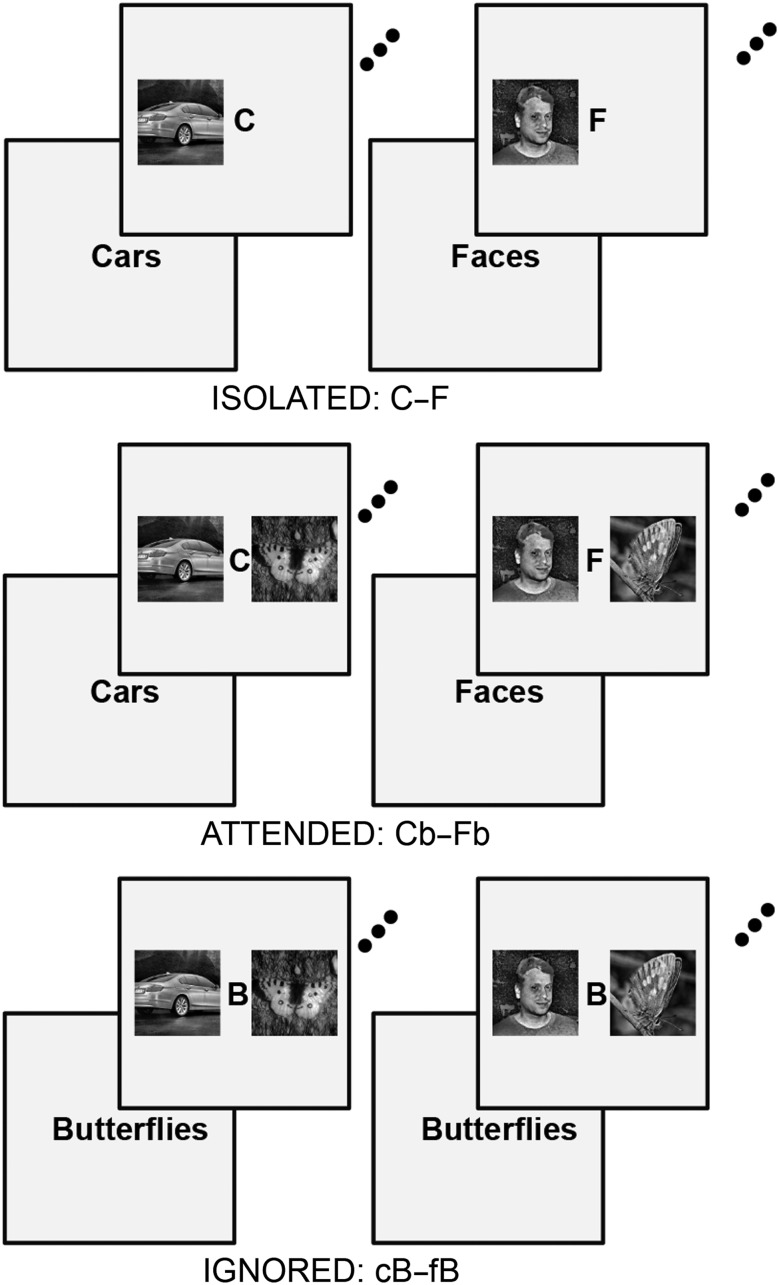

Subjects were instructed to maintain central fixation while searching for an immediate image repeat in either a stream of target images shown in isolation (“Isolated” runs) or target images shown in the context of distractor images from a second category that should be ignored (“Attend/Ignore” runs). At fixation, a letter/number appeared reminding the subjects of the target category: “F”, “C”, “B” = search for a face, car, or butterfly repeat, respectively (Fig. 1). All runs contained 18 blocks with 16 trials per block. Each block was 18 s in duration, containing a 2-s instruction screen (announcing which category to attend) followed by 16 1-s trials. All runs began with a 2-s fixation, for a total run duration of 5 min 26 s. Run order and block order were the same for all subjects.

Figure 1.

Example trial structure from fMRI runs where cars and faces were shown in isolation (top), attended in the presence of another to-be-ignored category (middle), or ignored while subjects were instructed to attend to another category (bottom).

In the first 2 runs (“Isolated” runs) subjects viewed 6 blocks each of the 3 stimulus categories, seeing images in isolation. In the next 4 runs (“Attended/Ignored” runs) subjects still looked for an immediate image repeat from a single target category, but now a distractor image from an irrelevant category always appeared along with the target category image (Fig. 1). Each run contained 3 blocks of 6 unique conditions. Throughout this paper, attended/ignored block names will be represented by a full spelling or uppercase letter for the attended category and a lowercase first letter for the ignored category.

Data Analysis

The HR T1-weighted structural scans were normalized to Talairach space.

Functional data were analyzed using Brain Voyager (www.brainvoyager.com) and in-house Matlab scripts. Preprocessing included registration to the original (nontransformed) structural scan, slice scan time correction (cubic spline), 3D motion correction (trilinear/sinc interpolation) and temporal filtering (high-pass criterion of 2 cycles per run) with linear trend removal.

Regions of interest (ROIs) were defined using the Face > Object contrast from the face-localizer scan, without any spatial smoothing applied. We localized bilateral ROIs that responded more to faces than objects in the posterior fusiform gyrus (FFA1), middle fusiform gyrus (FFA2), and occipital face area (OFA), and more to objects than faces in the parahippocampal gyrus (PHG). We localized 2 discrete peaks of face selectivity in the bilateral fusiform gyri of 27 subjects (FFA1 and FFA2); the other 2 subjects had a single peak in each hemisphere, which corresponded to FFA1 (Pinsk et al. 2009; Weiner et al. 2010, Table 1). We had no specific prediction about the difference between FFA1 and FFA2, but they were clearly distinct anatomically. In addition, we defined in each subject bilateral control clusters in the EVC of a fixed size and position based on Talairach coordinates corresponding to the medial aspect of the striate and extrastriate cortex.

Table 1.

For 5 bilateral regions, mean peak Talairach coordinates, mean volume (mm3), and results from a one-sample t-test (P-value) on the mean PSC values for faces relative to butterflies from the Isolated runs

| Region | Mean Talairach coordinates for peak voxel (± SD) | Average volume in mm3 (± SD) | One-sample t-test, t (P-value), on mean face PSC (relative to butterflies) from Isolated runs |
|---|---|---|---|
| Right FFA1 (N = 29) | 40.3, −58.4, −23.1 (4, 7.5, 5.2) | 1786 (259) | 7.0 (<0.0001) |
| Right FFA2 (N = 27) | 39.9, −37.6, −22.6 (3.2, 7.1, 4.1) | 1680 (308) | 9.6 (<0.0001) |
| Right OFA (N = 29) | 30.2, −84, −21.7 (8.6, 7.3, 7) | 955 (289) | 4.1 (0.0003) |
| Right PHG (N = 29) | 27.1, −54, −18.8 (2.6, 6.6, 3.8) | 3034 (760) | −10.8 (<0.0001) |
| Right EVC (N = 29) | 10.1, −79.2, −1.64 (0, 0, 0) | 1593 (0) | 0.3 (ns) |
| Left FFA1 (N = 29) | −39.5, −59.2, −24 (4.1, 7.5, 4.5) | 1569 (460) | 5.1 (<0.0001) |
| Left FFA2 (N = 27) | −40.6, −39.7, −23.8 (4.2, 6.7, 5.3) | 1593 (404) | 6.8 (<0.0001) |
| Left OFA (N = 27) | −33.6, −80.6, −24.1 (8.2, 8.1, 5.1) | 1028 (383) | 3.6 (0.002) |
| Left PHG (N = 29) | −28.9, −52.6, −19.2 (3.8, 7, 4) | 2817 (503) | −11.4 (<0.0001) |
| Left EVC (N = 29) | −10.1, −79.2, −1.64 (0, 0, 0) | 1728 (0) | −0.2 (ns) |

All ROIs were initially defined based on the 1 mm (interpolated) statistical maps using a fixed millimeter spread of activation to ensure consistency with reported sizes of these functional ROIs in the literature as well as consistency across subjects (Table 1). However, to ensure that the signal was weighted per functional voxel, ROIs were subsequently down-sampled to functional (3 mm) resolution. Any functional voxel containing one or more 1 mm voxels from the initial ROI was considered to be part of the final ROI, thus leading to larger final ROIs relative to those initially defined. This procedure also ensured that identical ROIs were used for univariate and multivariate analyses (see below).
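The down-sampling rule can be illustrated with a minimal sketch. It assumes that the 1 mm and 3 mm grids are axis-aligned and share an origin, which is a simplification; the function name `downsample_roi` and the toy coordinates are hypothetical and are not taken from the in-house scripts.

```python
def downsample_roi(voxels_1mm, factor=3):
    """Return the set of functional voxels touched by at least one 1 mm voxel.

    voxels_1mm: iterable of (x, y, z) integer indices on the 1 mm grid.
    factor: edge length of a functional voxel in 1 mm units (3 here).
    Assumes both grids are axis-aligned and share an origin (illustration only).
    """
    return {(x // factor, y // factor, z // factor) for x, y, z in voxels_1mm}


# Toy ROI: a 4 x 4 x 4 mm cube starting at the grid origin.
roi_1mm = [(x, y, z) for x in range(4) for y in range(4) for z in range(4)]
roi_3mm = downsample_roi(roi_1mm)

initial_volume = len(roi_1mm)          # volume in mm^3 before down-sampling
final_volume = len(roi_3mm) * 3 ** 3   # volume in mm^3 after down-sampling
print(initial_volume, final_volume)
```

In this toy case the cube straddles two functional voxels along each axis, so the final ROI is larger than the initial one, which is the inflation noted in the text.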

Functional voxels that were members of multiple initial ROIs were dropped from all final ROIs. This latter qualification avoided partial-volume effects with regard to functional region membership. For full-brain group analyses only, 3D spatial smoothing was applied to all functional data using a Gaussian filter of 8 mm full-width at half-maximum, and preprocessed functional slice-based data were aligned to the Talairach-transformed brain. For visualization purposes only, the maps were projected onto a representative subject's segmented and flattened, Talairach-normalized right and left hemisphere. All correlations between neural activation and behavioral expertise were tested for bivariate outliers, which were denoted as points whose externally studentized residual was >3.5 or less than −3.5. The distribution of car expertise was positively skewed (skewness = 0.64), which should not bias our correlations since Pearson's r does not require normality (Nefzger and Drasgow 1957).
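The bivariate outlier screen can be sketched as follows, assuming a simple linear regression of the neural measure on behavioral expertise and the textbook definition of the externally studentized residual. The function names and toy data are hypothetical; only the ±3.5 cutoff comes from the text.

```python
import math


def externally_studentized_residuals(x, y):
    """Externally studentized residuals for the simple regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x

    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    leverages = [1 / n + (xi - mean_x) ** 2 / sxx for xi in x]
    sse = sum(e ** 2 for e in residuals)

    out = []
    for e, h in zip(residuals, leverages):
        # Residual variance re-estimated with the current point left out
        # (n - 3 degrees of freedom: two regression parameters plus one point).
        s2_deleted = (sse - e ** 2 / (1 - h)) / (n - 3)
        out.append(e / math.sqrt(s2_deleted * (1 - h)))
    return out


def flag_outliers(x, y, cutoff=3.5):
    """Indices of points whose studentized residual lies beyond +/- cutoff."""
    return [i for i, t in enumerate(externally_studentized_residuals(x, y))
            if abs(t) > cutoff]


# Toy data (not from the study): a near-linear relation with one aberrant point.
expertise = [float(i) for i in range(10)]
activity = [0.1 * v + (0.01 if i % 2 else -0.01) for i, v in enumerate(expertise)]
activity[4] += 1.0
print(flag_outliers(expertise, activity))
```

Because each point is excluded when estimating its own error variance, a single aberrant subject cannot mask itself by inflating the residual spread.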

Univariate Activation Maps

For each voxel, a Michelson contrast ratio (CR) as described below was computed for stimulus blocks over 8–18 s after trial onset, an interval selected to account for the rise and fall times of the blood oxygen level-dependent (BOLD) response. The responses from all voxels within a predefined ROI were then averaged for a given condition. Expertise effects were computed by correlating behavioral expertise with the voxel-by-voxel differences across conditions. The isolated conditions of interest were defined as (Car − Face)/(Car + Face), which will be referred to as C − F, and (Car − Butterfly)/(Car + Butterfly), which will be referred to as C − B. In the attend conditions, we tested expertise effects for cars versus faces in the context of ignored butterflies (CARb − FACEb)/(CARb + FACEb), or Cb − Fb, and cars versus butterflies in the context of ignored faces, (CARf − BUTTERFLYf)/(CARf + BUTTERFLYf), or Cf − Bf. The comparisons of interest in the ignored conditions were cars versus faces when both categories were ignored amongst attended butterflies, (cBUTTERFLY − fBUTTERFLY)/(cBUTTERFLY + fBUTTERFLY), or cB − fB, and cars versus butterflies when both categories were ignored amongst attended faces (cFACE − bFACE)/(cFACE + bFACE), or cF − bF. Thus, we can compare car expertise effects on univariate BOLD activation for cars relative to faces to that for cars relative to butterflies when images are shown in isolation, are attended with distractors, or are ignored.
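As a minimal sketch of the contrast ratio and the resulting expertise effect, the snippet below computes (Car − Face)/(Car + Face) per subject and correlates it with an expertise score. All values are hypothetical, the responses are assumed to be positive-valued ROI averages, and the helper names are illustrative rather than those of the analysis scripts.

```python
import math


def contrast_ratio(a, b):
    """Michelson contrast ratio between two mean responses: (a - b) / (a + b)."""
    return (a - b) / (a + b)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical ROI-averaged responses (arbitrary units) for four subjects.
car_response = [1.0, 1.2, 1.5, 1.9]
face_response = [2.0, 2.0, 2.0, 2.0]
car_expertise = [-1.0, -0.3, 0.4, 1.1]

# C - F contrast per subject, then its correlation with behavioral expertise.
c_minus_f = [contrast_ratio(c, f) for c, f in zip(car_response, face_response)]
expertise_effect = pearson_r(car_expertise, c_minus_f)
print(expertise_effect)
```

The same two helpers apply unchanged to the other contrasts (C − B, Cb − Fb, Cf − Bf, cB − fB, cF − bF) by swapping the pair of conditions entered into `contrast_ratio`.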

Separately for each whole-FOV contrast, an initial contrast with an uncorrected threshold of P < 0.05 was corrected for multiple comparisons using Brain Voyager's Cluster Threshold Estimator (Forman et al. 1995; Goebel et al. 2006), which uses a Monte Carlo simulation with iterative Gaussian filtering to calculate the cluster size that yields a corrected threshold of P < 0.05 separately for each contrast of interest (C–F [162 mm3], C–B [142 mm3], Cb–Fb [137 mm3], Cf–Bf [137 mm3], cB–fB [132 mm3], and cF–bF [126 mm3]).

Multi-Voxel Pattern Analysis (MVPA)

For each univariate contrast in each ROI, we carried out a parallel MVP analysis (e.g., Car vs. Face). MVPA was performed using LIBSVM (Chang and Lin 2011) and custom Matlab code. All MVPA used a linear support vector machine with the C-parameter fixed at 1. Classification was performed using a leave-one-run-out approach in which one run was held out as test data, and the remaining data were used as the training set. All data were z-transformed and mean-subtracted within ROI to ensure that we did not simply duplicate the univariate analysis.
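The cross-validation scheme and the removal of mean amplitude can be sketched as below. This is one reading of the normalization step (subtracting the mean across voxels from each block pattern), and the classifier itself is omitted; the function names and the toy patterns are hypothetical.

```python
def remove_roi_mean(pattern):
    """Subtract the mean across voxels so that overall amplitude is removed."""
    m = sum(pattern) / len(pattern)
    return [v - m for v in pattern]


def leave_one_run_out(run_labels):
    """Yield (held_out_run, train_indices, test_indices) for each run."""
    for held_out in sorted(set(run_labels)):
        train = [i for i, r in enumerate(run_labels) if r != held_out]
        test = [i for i, r in enumerate(run_labels) if r == held_out]
        yield held_out, train, test


# Hypothetical layout: 2 runs, 2 blocks per run, 3 voxels per block pattern.
patterns = [[1.0, 2.0, 3.0], [2.0, 2.0, 5.0], [0.0, 1.0, 2.0], [4.0, 4.0, 1.0]]
runs = [1, 1, 2, 2]

centered = [remove_roi_mean(p) for p in patterns]
folds = list(leave_one_run_out(runs))
for held_out, train, test in folds:
    print(held_out, train, test)
```

In the toy data, the first and third patterns differ only in mean amplitude and become identical after centering, which is why a classifier trained on centered patterns cannot simply reproduce a univariate difference.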

Rather than using the standard SVM categorization scheme, in which prediction values are binarized to yield trial- or block-level categorization, we used LIBSVM's ability to generate block-level probabilities. That is, the SVM did not output a single prediction for each block (e.g., “Face, not Car”) but a probability for each category (e.g., “Face: 72%; Car: 28%”). This probability estimate was averaged across all blocks for a given classifier to yield a final prediction result for each combination of subject, ROI, and classification comparison. Because the predicted category is the category with higher probability in the SVM, this procedure effectively gives the classifier's confidence in its categorization, yielding greater information than standard binary categorization. Many ROIs yielded ceiling-level classification in at least some subjects when using conventional categorization; thus, this MVPA confidence measure (which was not at ceiling even when categorization was at or near 100%) allowed us to correlate MVPA performance with behavioral expertise in a manner parallel to that by which we correlated univariate activation with behavioral expertise.
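The difference between binary accuracy and the confidence measure can be illustrated with a short sketch. It assumes that the averaged quantity is the probability assigned to the correct category of each test block; the probabilities below are made up, and the function names are hypothetical.

```python
def mean_confidence(block_probabilities, true_labels):
    """Average probability assigned to the correct category across blocks.

    block_probabilities: list of dicts mapping category name -> probability.
    true_labels: the correct category for each block.
    """
    probs = [p[label] for p, label in zip(block_probabilities, true_labels)]
    return sum(probs) / len(probs)


def binary_accuracy(block_probabilities, true_labels):
    """Proportion of blocks whose most probable category is the correct one."""
    hits = [max(p, key=p.get) == label
            for p, label in zip(block_probabilities, true_labels)]
    return sum(hits) / len(hits)


# Made-up classifier output for three held-out blocks.
block_probs = [
    {"Face": 0.72, "Car": 0.28},
    {"Face": 0.35, "Car": 0.65},
    {"Face": 0.90, "Car": 0.10},
]
labels = ["Face", "Car", "Face"]

accuracy = binary_accuracy(block_probs, labels)
confidence = mean_confidence(block_probs, labels)
print(accuracy, confidence)
```

Here every block is categorized correctly, so accuracy is at ceiling, while the confidence measure still leaves room for variation across subjects.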

Results

Behavioral Results From the Lab

In the behavioral matching test expertise sensitivity scores were computed for each subject for cars (car d′, range 0.23–4.21), planes (plane d′, range 0.45–3.00) and birds (bird d′, range 0.45–2.14).
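For readers unfamiliar with the sensitivity index, d′ is conventionally the difference between the z-transformed hit rate and the z-transformed false alarm rate. The sketch below uses that conventional definition with hypothetical rates; any correction applied to rates of exactly 0 or 1 is not specified here and is therefore not modeled.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    Both rates must lie strictly between 0 and 1; no correction for
    extreme rates is applied in this sketch.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


# Hypothetical rates for one subject on one category.
print(d_prime(0.8, 0.2))
```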

Principal component analysis has revealed that the underlying structure of the 8-category VET can be largely explained by 2 independent factors that map onto living and nonliving objects. Therefore, we reduced VET performance to a Living Objects score (average of butterflies, leaves, mushrooms, owls, and wading birds) and a NonLiving Objects score (average of motorcycles and planes, excluding cars).

An aggregate car expertise index was calculated based on the standardized performance for cars in the matching test and standardized car performance on the VET Car category (r = 0.68). An aggregate noncar performance index was calculated based on the standardized performance for planes and birds in the matching test, and the standardized VET Living Objects and NonLiving Objects scores (average r = 0.29). In the following analyses, we regress out the influence of the noncar aggregate scores from the car aggregate score. Henceforth, all mention of car expertise refers to the car aggregate residualized by the noncar aggregate.
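The construction of the residualized index can be sketched as follows. The sketch assumes that each aggregate is the mean of z-scored measures and that the noncar aggregate is removed by simple linear regression; the scores are hypothetical and the helper names are illustrative.

```python
from statistics import mean, stdev


def zscore(values):
    """Standardize scores across subjects (sample standard deviation)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def aggregate(*measures):
    """Average the z-scored measures subject by subject."""
    return [mean(scores) for scores in zip(*(zscore(m) for m in measures))]


def residualize(y, x):
    """Residuals of y after removing its linear dependence on x."""
    mx, my = mean(x), mean(y)
    slope = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
             / sum((xi - mx) ** 2 for xi in x))
    return [yi - (my + slope * (xi - mx)) for xi, yi in zip(x, y)]


# Hypothetical scores for five subjects (not data from the study).
car_matching = [0.5, 1.2, 2.0, 3.1, 4.0]       # car d'
car_vet = [0.40, 0.55, 0.60, 0.80, 0.95]       # VET Car accuracy
plane_matching = [0.6, 1.0, 1.1, 2.0, 2.4]     # plane d'
bird_matching = [1.0, 0.7, 1.4, 0.9, 1.6]      # bird d'
vet_living = [0.55, 0.60, 0.50, 0.65, 0.70]    # VET Living Objects score
vet_nonliving = [0.45, 0.50, 0.65, 0.60, 0.80] # VET NonLiving Objects score

car_aggregate = aggregate(car_matching, car_vet)
noncar_aggregate = aggregate(plane_matching, bird_matching,
                             vet_living, vet_nonliving)
car_expertise = residualize(car_aggregate, noncar_aggregate)
print(car_expertise)
```

By construction the residualized index is uncorrelated with the noncar aggregate, so correlations with it reflect car-specific skill rather than general object recognition ability.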

Behavioral Results From the Scanner

In the isolated fMRI runs, sensitivity was high for all categories (hit rate, false alarm rate): Face (0.84, 0.10), Car (0.76, 0.12), Butterfly (0.74, 0.13). However, paired t-tests revealed significantly better detection of faces relative to cars (t = 5.5, P < 0.001) or butterflies (t = 4.51, P < 0.001), with no difference between cars and butterflies. Subjects were also able to perform the task in the Attended/Ignored fMRI runs (hit rate, false alarm rate): 1) Attend Car, Ignore Face (0.71, 0.12); 2) Attend Car, Ignore Butterfly (0.74, 0.13); 3) Attend Face, Ignore Car (0.84, 0.11); 4) Attend Face, Ignore Butterfly (0.84, 0.10); 5) Attend Butterfly, Ignore Face (0.75, 0.11); 6) Attend Butterfly, Ignore Car (0.72, 0.12). Paired t-tests on hit rates revealed an advantage for face detection (FACEc > all comparisons, t > 3.0, P < 0.001; FACEb > all comparisons, t > 3.01, P < 0.001) with no difference between FACEc and FACEb (t = −0.12, ns) or between Attend Butterfly and Attend Car conditions.

MRI Results

Most of the analyses conducted below consist of a correlation with behavioral car expertise. We based our sample size on what we can expect to give sufficient power to detect the typical car expertise effect in right FFA. A meta-analysis of all published fMRI studies of expertise with cars (Gauthier et al. 2000; Xu 2005; Harel et al. 2010; McGugin, Gatenby, et al. 2012) using the correlation between the percent signal change (PSC) to cars (relative to a high-level baseline) and a behavioral measure of car expertise yielded a value of r = 0.54, 95% CI [0.4; 0.652]. With 29 subjects, our power to find such an effect is 0.96 for a one-tailed test at an alpha of 0.05, which we use for correlations with univariate contrasts in face-selective ROIs. This relatively liberal threshold was chosen because car expertise effects in these ROIs are replications using univariate analyses, at least in the Isolated runs. Our main goal is to test if these effects are still observed under various conditions, so we chose to test them all at the same threshold. The multivariate ROI analyses are thresholded using 2-tailed tests with an alpha of 0.05, so that the pattern of significant effects is comparable to the univariate contrasts. We favor a focus on effect sizes (here, Pearson's r) rather than on a specific statistical threshold, because treating any given threshold as a cliff can lead to unjustified conclusions (Rosnow and Rosenthal 1989).

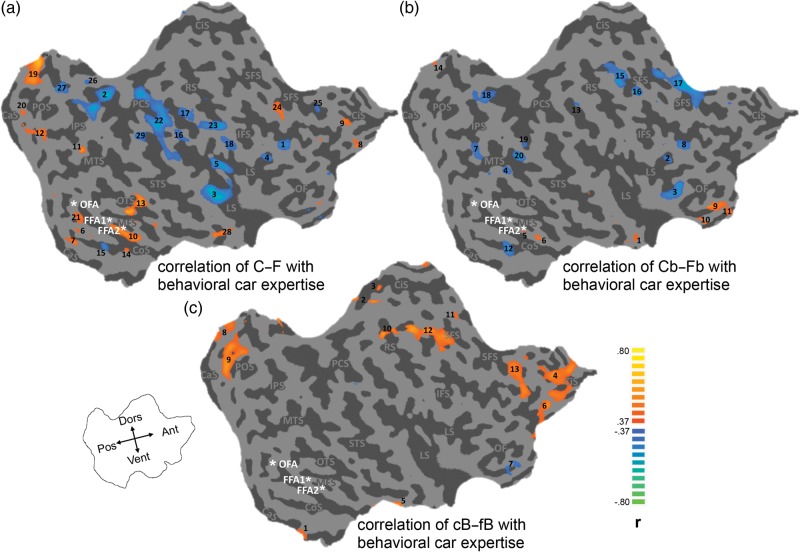

We use a corrected 2-tailed alpha of 0.05 for whole-brain analyses. These analyses are reported for completeness, but they are less powerful owing both to the multiple comparison correction and to the greater variance expected when subjects are compared in regions aligned according to gross anatomical landmarks rather than functional ones. For simplicity's sake, we report whole-brain analyses by showing and describing the maps for the right hemisphere (Fig. 2; Table 2; Supplementary Table 1), but foci of activity for the left hemisphere are shown in Supplementary Figure 1 and reported in Table 2 and Supplementary Table 1.

Figure 2.

Group-average partial correlation maps depicting the BOLD response to cars relative to faces in (a) isolated, (b) attended, or (c) ignored conditions, with car expertise, regressing out the influence of noncar performance, overlaid on an individual's flattened right hemisphere. The maps are shown at a corrected threshold of 0.05 based on an alpha of 0.05. See Table 3 for names, peak r-values, and peak Talairach coordinates of each activation cluster. Group-average coordinates for FFA1, FFA2, and OFA are marked on the flattened hemisphere. Sulci labels: calcarine sulcus, CaS; collateral sulcus, CoS; middle fusiform sulcus, MFS; occipitotemporal sulcus, OTS; parieto-occipital sulcus, POS; intraparietal sulcus, IPS; middle temporal sulcus, MTS; superior temporal sulcus, STS; postcentral sulcus, PCS; Rolandic sulcus, RS; cingulate sulcus, CiS; superior frontal sulcus, SFS; inferior frontal sulcus, IFS; orbitofrontal sulcus, OF; lateral sulcus, LS.

Table 2.

Results from group-average full-brain partial correlations maps between the BOLD response to cars (relative to faces) in isolated, attended, and ignored runs, with behavioral car expertise (regressing out the influence of noncar performance)

| C–F × car expertise | | | | Cb–Fb × car expertise | | | | cB–fB × car expertise | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| | Name | Cluster peak r-value | Mean Talairach coordinates for peak voxel | | Name | Cluster peak r-value | Mean Talairach coordinates for peak voxel | | Name | Cluster peak r-value | Mean Talairach coordinates for peak voxel |

| Right hemisphere | | | | Right hemisphere | | | | Right hemisphere | | | |

| 1 | inf front s | −0.51 | 44, 34, 15 | 1 | hipp | 0.43 | 20, 4, −24 | 1 | ca s | 0.49 | 8, −38, 5 |

| 2 | intra par s | −0.61 | 20, −56, 45 | 2 | inf front g | −0.47 | 49, 23, 15 | 2 | cing g | 0.63 | 1, −41, 54 |

| 3 | lat s | −0.66 | 47, −2, −6 | 3 | inf front g | −0.56 | 35, 22, −6 | 3 | cing s | 0.5 | 14, −35, 42 |

| 4 | lat s | −0.51 | 50, 22, 12 | 4 | inf temp g | −0.55 | 47, −56, 18 | 4 | front g | 0.59 | 14, 46, 23 |

| 5 | lat s | −0.63 | 35, −17, 21 | 5 | med fus g | 0.41 | 34, −43, −20 | 5 | hipp | 0.59 | 45, −5, −39 |

| 6 | ling g | 0.41 | 5, −68, −11 | 6 | med fus g | 0.46 | 32, −29, −25 | 6 | med front g | 0.57 | 17, 64, 6 |

| 7 | ling g | 0.53 | 1, −62, 3 | 7 | mid temp g | −0.45 | 44, −80, 18 | 7 | orb front g | −0.51 | 14, 37, −15 |

| 8 | med front g | 0.6 | 8, 67, 3 | 8 | min front g | −0.51 | 48, 26, 18 | 8 | post cing g | 0.48 | 2, −41, 15 |

| 9 | med front g | 0.53 | 2, 55, 24 | 9 | orb front 1 | 0.51 | 12, 31, −15 | 9 | post occ s | 0.56 | 8, −59, 15 |

| 10 | med fus g | 0.53 | 32, −32, −18 | 10 | orb front 2 | 0.49 | 8, 13, −9 | 10 | ro s | 0.57 | 20, −29, 54 |

| 11 | mid temp g | 0.58 | 46, −76, 27 | 11 | orb front 3 | 0.48 | 8, 40, −6 | 11 | supp front g | 0.43 | 11, 25, 57 |

| 12 | mid temp g | 0.49 | 2, −86, 24 | 12 | parahipp g | −0.56 | 23, −41, −6 | 12 | supp front s | 0.58 | 20, −5, 54 |

| 13 | occ temp s | 0.64 | 59, −41, −18 | 13 | post cent g | −0.44 | 47, −26, 51 | 13 | supp front s | 0.53 | 18, 50, 24 |

| 14 | parahipp g | 0.48 | 31, −30, −17 | 14 | post cent g | 0.42 | −2, −38, 18 | ||||

| 15 | parahipp g | −0.51 | 26, −47, −6 | 15 | pre cent g | −0.59 | 32, −11, 54 | ||||

| 16 | post cent g | −0.45 | 65, −23, 35 | 16 | sup front g | −0.59 | 35, −11, 54 | ||||

| 17 | post cent g | −0.5 | 56, −20, 42 | 17 | sup front g | −0.54 | 20, 28, 42 | ||||

| 18 | post cent g | −0.46 | 53, −2, 12 | 18 | sup par g | −0.48 | 11, −68, 48 | ||||

| 19 | post cing | 0.63 | 1, −41, 21 | 19 | sup temp g | −0.49 | 51, −54, 20 | ||||

| 20 | post cing | 0.49 | 1, −61, 6 | 20 | sup temp g | −0.56 | 50, −53, 18 | ||||

| 21 | post co s | 0.48 | 23, −71, −9 | ||||||||

| 22 | pre cent g | −0.6 | 29, −41, 45 | ||||||||

| 23 | ro s | −0.56 | 56, −5, 21 | ||||||||

| 24 | sup front s | 0.46 | 44, 17, 36 | ||||||||

| 25 | sup front s | −0.47 | 15, 43, 31 | ||||||||

| 26 | sup par g | −0.46 | 5, −62, 62 | ||||||||

| 27 | sup par g | −0.56 | 2, −71, 36 | ||||||||

| 28 | sup temp g | 0.56 | 29, 9, −33 | ||||||||

| 29 | sup temp s | −0.51 | 55, −54, 41 | ||||||||

| Left hemisphere | | | | Left hemisphere | | | | Left hemisphere | | | |

| 1 | cing s | 0.45 | −1, 61, 3 | 1 | fus g | 0.54 | −43, −41, −18 | 1 | ant cing g | 0.51 | −1, 36, 9 |

| 2 | evc | 0.46 | −4, −63, 12 | 2 | intra par s | −0.49 | −22, −68, 51 | 2 | ant cing g | 0.47 | −13, 25, 24 |

| 3 | evc | 0.55 | −4, −92, 0 | 3 | orb front g | 0.55 | −22, 19, −15 | 3 | evc | 0.46 | −1, −65, 21 |

| 4 | fus g | 0.61 | −34, −29, −15 | 4 | post cing | 0.43 | −7, −38, 15 | 4 | evc | 0.55 | −7, −38, 9 |

| 5 | hipp | 0.62 | −19, −5, −24 | 5 | sup temp g | 0.46 | −58, −47, 12 | 5 | mid front g | 0.59 | −34, 55, 3 |

| 6 | inf front s | 0.47 | −46, 4, 54 | 6 | sup temp g | 0.45 | −67, −17, 0 | 6 | parahipp g | 0.51 | −16, −77, −18 |

| 7 | inf front s | 0.47 | −48, 28, 5 | 7 | sup temp s | 0.51 | −40, 1, −27 | 7 | sup front g | 0.47 | −4, 28, 54 |

| 8 | inf par g | −0.64 | −63, −31, 39 | 8 | sup temp s | 0.46 | −52, −38, 3 | 8 | sup front g | 0.47 | −7, 52, 33 |

| 9 | intra par s | −0.52 | −19, −75, 51 | 9 | sup front g | 0.64 | −22, 40, 30 | ||||

| 10 | intra par s | −0.53 | −40, −41, 33 | ||||||||

| 11 | lat s | 0.53 | −28, 16, −9 | ||||||||

| 12 | lat s | 0.48 | −42, 28, 3 | ||||||||

| 13 | ling g | 0.49 | −25, −86, −25 | ||||||||

| 14 | mid temp g | 0.46 | −55, −38, 0 | ||||||||

| 15 | mid temp g | 0.5 | −52, −11, −18 | ||||||||

| 16 | orb front | 0.46 | −49, 22, −9 | ||||||||

| 17 | parahipp g | 0.46 | −7, −77, −18 | ||||||||

| 18 | parahipp g | 0.65 | −7, −59, 0 | ||||||||

| 19 | parahipp g | 0.62 | −16, −17, −12 | ||||||||

| 20 | post occ g | 0.63 | −7, −47, 12 | ||||||||

| 21 | ros | −0.47 | −55, −2, 24 | ||||||||

| 22 | sup front g | 0.59 | −10, 61, 24 | ||||||||

| 23 | sup front g | 0.55 | −16, 31, 54 | ||||||||

| 24 | sup front g | 0.54 | −28, 43, 42 | ||||||||

| 25 | sup front s | 0.54 | −31, 40, 38 | ||||||||

| 26 | sup temp g | 0.52 | −37, 16, −21 | ||||||||

| 27 | sup temp g | −0.52 | −49, −8, 0 | ||||||||

| 28 | sup temp s | 0.47 | −43, 7, −24 | ||||||||

Note: The table gives the name, peak r-value, and peak Talairach coordinates for each cluster of activation.

Univariate Activation Across all Subjects

First, we analyzed the data averaged over all voxels within each ROI, without considering car expertise. To confirm that the localizer-defined ROIs (OFA, FFA1, FFA2, and PHG ROI in both hemispheres) were face selective, we report the results for the faces versus butterflies contrast. As expected, the OFA and FFAs from the localizer showed a higher response to faces, the PHG showed a higher response to objects than to faces, and no category preference was observed in the anatomically defined EVC (Table 1).

Multivariate Decoding of Category Across all Subjects

MVPA demonstrated high levels of decoding between all pairs of categories (Faces, Cars and Butterflies) in the Isolated conditions in all ROIs except in EVC (Table 3). As expected, decoding outside of EVC was greater between isolated faces and either object category than between cars and butterflies. Though this difference did not achieve statistical significance in bilateral PHG when we compared decoding performance for Cars versus Faces to that for Cars versus Butterflies (t28 < 1.1, P > 0.28), all other non-EVC comparisons achieved statistical significance (t > 2.22, P < 0.035). In spite of this, Cars versus Butterflies achieved above-chance decoding in all non-EVC ROIs (Table 3). Notably, Cars and Butterflies were most robustly decoded in the PHG and least in FFA2. Decoding was also significant in all ROIs (except EVC in several cases) for all pairs of categories in the Clutter Attended conditions. Finally, decoding was much more limited in the Clutter Ignored conditions, showing a significant decrease in all non-EVC ROIs for all conditions relative to the corresponding Clutter Attended conditions. Most striking was the Ignored cars versus Ignored butterflies (among attended faces) condition, which could only be decoded above chance in the left PHG and right OFA.

Table 3.

Multivariate results for all regions and all category comparisons in isolated, attended, and ignored conditions

| Isolated | | | | | | | | | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | | Car versus face | | | | Car versus butterfly | | | | Face versus butterfly | | | |

| Region | N | Mean | SD | t | P | Mean | SD | t | P | Mean | SD | t | P |

| Left OFA | 27 | 83.2 | 18.3 | 9.43 | 0.0000 | 74.1 | 16.6 | 7.55 | 0.0000 | 86.1 | 16.9 | 11.09 | 0.0000 |

| Left FFA1 | 29 | 89.1 | 13.6 | 15.41 | 0.0000 | 78.5 | 16.6 | 9.27 | 0.0000 | 94.2 | 11.3 | 21.11 | 0.0000 |

| Left FFA2 | 26 | 84.8 | 17.6 | 10.08 | 0.0000 | 65.2 | 19.2 | 4.03 | 0.0005 | 90.2 | 15.8 | 12.96 | 0.0000 |

| Left PHG | 29 | 91.5 | 9.1 | 24.71 | 0.0000 | 89.0 | 10.0 | 21.06 | 0.0000 | 94.5 | 7.9 | 30.42 | 0.0000 |

| Left EVC | 29 | 53.6 | 12.2 | 1.57 | 0.13 | 50.2 | 9.0 | 0.09 | 0.93 | 55.6 | 13.0 | 2.33 | 0.03 |

| Right OFA | 28 | 79.1 | 16.2 | 9.47 | 0.0000 | 69.2 | 17.7 | 5.72 | 0.0000 | 80.0 | 18.4 | 8.62 | 0.0000 |

| Right FFA1 | 29 | 91.6 | 10.5 | 21.24 | 0.0000 | 84.8 | 13.8 | 13.62 | 0.0000 | 97.3 | 4.1 | 62.08 | 0.0000 |

| Right FFA2 | 27 | 88.5 | 13.1 | 15.26 | 0.0000 | 69.2 | 19.4 | 5.13 | 0.0000 | 95.0 | 8.6 | 27.22 | 0.0000 |

| Right PHG | 29 | 89.3 | 11.6 | 18.33 | 0.0000 | 87.6 | 10.3 | 19.67 | 0.0000 | 92.4 | 12.2 | 18.71 | 0.0000 |

| Right EVC | 29 | 51.8 | 12.1 | 0.81 | 0.43 | 55.2 | 12.0 | 2.32 | 0.03 | 53.2 | 9.7 | 1.80 | 0.08 |

| Clutter attended | |||||||||||||

| | | Cb versus Fb | | | | Cf versus Bf | | | | Fc versus Bc | | | |

| Region | N | Mean | SD | t | P | Mean | SD | t | P | Mean | SD | t | P |

| Left OFA | 27 | 79.8 | 17.5 | 8.84 | 0.0000 | 63.2 | 16.3 | 4.21 | 0.0003 | 80.7 | 19.6 | 8.16 | 0.0000 |

| Left FFA1 | 29 | 90.0 | 11.3 | 19.04 | 0.0000 | 68.2 | 17.8 | 5.50 | 0.0000 | 92.2 | 12.2 | 18.63 | 0.0000 |

| Left FFA2 | 26 | 89.0 | 9.9 | 20.04 | 0.0000 | 56.2 | 15.1 | 2.10 | 0.05 | 86.4 | 15.8 | 11.77 | 0.0000 |

| Left PHG | 29 | 86.3 | 13.1 | 14.90 | 0.0000 | 76.8 | 17.6 | 8.23 | 0.0000 | 90.9 | 11.1 | 19.93 | 0.0000 |

| Left EVC | 29 | 56.4 | 12.1 | 2.83 | 0.01 | 56.1 | 11.7 | 2.83 | 0.0084 | 51.5 | 13.9 | 0.59 | 0.56 |

| Right OFA | 28 | 68.1 | 19.2 | 4.99 | 0.0000 | 62.5 | 15.2 | 4.34 | 0.0002 | 74.4 | 23.7 | 5.46 | 0.0000 |

| Right FFA1 | 29 | 94.0 | 8.5 | 27.96 | 0.0000 | 66.4 | 18.2 | 4.84 | 0.0000 | 95.0 | 10.5 | 23.03 | 0.0000 |

| Right FFA2 | 27 | 89.0 | 13.7 | 14.81 | 0.0000 | 56.5 | 16.0 | 2.10 | 0.05 | 90.0 | 15.3 | 13.55 | 0.0000 |

| Right PHG | 29 | 85.1 | 15.6 | 12.14 | 0.0000 | 76.6 | 14.8 | 9.65 | 0.0000 | 88.4 | 12.4 | 16.63 | 0.0000 |

| Right EVC | 29 | 51.3 | 16.4 | 0.41 | 0.68 | 47.8 | 11.8 | −1.03 | 0.31 | 50.3 | 15.7 | 0.10 | 0.92 |

| Clutter ignored | |||||||||||||

| | | cB versus fB | | | | cF versus bF | | | | fC versus bC | | | |

| Region | N | Mean | SD | t | P | Mean | SD | t | P | Mean | SD | t | P |

| Left OFA | 27 | 60.7 | 15.7 | 3.54 | 0.0015 | 51.9 | 17.7 | 0.56 | 0.58 | 58.3 | 16.7 | 2.60 | 0.02 |

| Left FFA1 | 29 | 72.7 | 20.1 | 6.09 | 0.0000 | 49.9 | 11.5 | −0.07 | 0.95 | 66.4 | 17.2 | 5.14 | 0.0000 |

| Left FFA2 | 26 | 65.2 | 16.0 | 4.86 | 0.0001 | 51.2 | 15.4 | 0.38 | 0.71 | 55.3 | 16.2 | 1.66 | 0.11 |

| Left PHG | 29 | 67.6 | 19.5 | 4.87 | 0.0000 | 55.9 | 12.9 | 2.46 | 0.02 | 61.8 | 15.0 | 4.22 | 0.0002 |

| Left EVC | 29 | 50.9 | 13.8 | 0.37 | 0.71 | 49.3 | 12.5 | −0.28 | 0.78 | 50.4 | 10.9 | 0.21 | 0.83 |

| Right OFA | 28 | 53.8 | 18.7 | 1.07 | 0.29 | 44.3 | 10.4 | −2.89 | 0.01 | 53.7 | 14.3 | 1.38 | 0.18 |

| Right FFA1 | 29 | 77.2 | 19.9 | 7.36 | 0.0000 | 47.8 | 11.3 | −1.03 | 0.31 | 67.7 | 15.9 | 5.97 | 0.0000 |

| Right FFA2 | 27 | 66.5 | 18.5 | 4.64 | 0.0001 | 48.2 | 9.6 | −0.97 | 0.34 | 64.0 | 14.3 | 5.09 | 0.0000 |

| Right PHG | 29 | 54.1 | 16.1 | 1.38 | 0.18 | 53.4 | 16.3 | 1.12 | 0.27 | 62.8 | 15.1 | 4.57 | 0.0001 |

| Right EVC | 29 | 50.3 | 13.0 | 0.11 | 0.92 | 49.3 | 9.8 | −0.41 | 0.69 | 51.4 | 10.5 | 0.74 | 0.47 |
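
As an aid to reading the t-values in Table 3, the following minimal sketch computes a one-sample t statistic from a tabled mean, SD, and N. It assumes that each t tests mean decoding accuracy against a chance level of 50% for pairwise classification; the chance level and the function name `t_vs_chance` are assumptions made for illustration and are not stated in the text.

```python
import math

def t_vs_chance(mean_acc, sd_acc, n, chance=50.0):
    """One-sample t statistic for mean decoding accuracy (in %) against chance.

    Assumes a two-category classifier, so chance defaults to 50%.
    """
    standard_error = sd_acc / math.sqrt(n)
    return (mean_acc - chance) / standard_error

# Left OFA, isolated car versus face decoding (values from Table 3).
t_left_ofa = t_vs_chance(mean_acc=83.2, sd_acc=18.3, n=27)
print(f"t({27 - 1}) = {t_left_ofa:.2f}")
```

Under this assumption, the Left OFA entry comes out at about 9.43, in line with the tabled value. Other rows agree only approximately because the tabled means and SDs are rounded.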

Car Expertise Effects in Isolated Conditions

Next, we sought to replicate prior work showing effects of car expertise in face-selective regions with cars and control objects viewed in isolation (Gauthier et al. 2000; 2005; Xu 2005; McGugin, Gatenby, et al. 2012; McGugin et al. submitted). To maximize power and because at least one study reported a car expertise effect in each of these ROIs (including in the EVC in Harel et al. 2010), we used one-tailed tests for positive correlations of car-evoked activity and car expertise. With faces used as baseline (Gauthier et al. 2005; Xu 2005; Furl et al. 2011; McGugin et al. submitted), we computed the partial correlation between the BOLD response to cars and the behavioral car aggregate measure, regressing out the noncar aggregate measure (Materials and Methods; McGugin et al. submitted). Behavioral car expertise predicted the neural selectivity to cars relative to faces in bilateral FFA1, FFA2, and OFA, as well as in the right PHG ROI (Table 4). ROI analyses were supported by whole-brain group analyses (Fig. 2a; Supplementary Fig. 1a; Table 2). Similar results were obtained when butterflies were used as a baseline (Supplementary Figs 2a and 3a; Supplementary Table 1).
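
The partial correlation described above can be sketched as follows. This is an illustration under assumptions, not the analysis code used for the paper: it uses the standard first-order partial correlation formula, in which the covariate is removed from both variables, and the variable names and toy values (`car_bold`, `car_expertise`, `noncar_perf`) are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))

# Hypothetical toy data for four subjects (not from the study). Both measures
# share a component with the covariate and have no other relationship.
noncar_perf = [-1, -1, 1, 1]      # covariate: noncar aggregate
car_bold = [-2, 0, 0, 2]          # e.g., C-F response in an ROI
car_expertise = [-2, 0, 2, 0]     # behavioral car aggregate

raw_r = pearson(car_bold, car_expertise)
partial_r = partial_corr(car_bold, car_expertise, noncar_perf)
print(f"raw r = {raw_r:.2f}, partial r = {partial_r:.2f}")
```

In this toy case the raw correlation is 0.5, but it disappears once the covariate is partialled out. This is the situation the noncar aggregate is meant to guard against: a correlation driven by general performance rather than by car expertise specifically.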

Table 4.

Univariate results for all regions during conditions where cars are presented in isolated, attended, and ignored attention conditions, or in attended and ignored competition conditions

| | | Attention | | | | Competition | |

|---|---|---|---|---|---|---|---|

| Region | Critical r values, P < 0.05, 1-tail | Isolated C–F | Isolated C–B | Attended Cb–Fb | Ignored cB–fB | Attended Cf–Bf | Ignored cF–bF |

| Right FFA1 | r(29) = 0.32 | 0.40 | 0.29 | 0.57 | 0.30 | 0.09 | 0.02 |

| Right FFA2 | r(27) = 0.33 | 0.51 | 0.55 | 0.41 | 0.26 | 0.07 | −0.12 |

| Right OFA | r(29) = 0.32 | 0.52 | 0.24 | 0.25 | 0.14 | 0.13 | 0.00 |

| Right PHG | r(29) = 0.32 | 0.38 | 0.47 | 0.25 | 0.25 | 0.03 | 0.01 |

| Right EVC | r(29) = 0.32 | 0.27 | 0.34 | 0.28 | 0.22 | 0.10 | −0.08 |

| Left FFA1 | r(29) = 0.32 | 0.33 | 0.48 | 0.34 | 0.28 | 0.21 | −0.10 |

| Left FFA2 | r(27) = 0.33 | 0.60 | 0.43 | 0.61 | 0.36 | 0.42 | −0.08 |

| Left OFA | r(27) = 0.33 | 0.51 | 0.13 | 0.37 | 0.30 | 0.20 | −0.10 |

| Left PHG | r(29) = 0.32 | 0.29 | 0.40 | 0.09 | 0.14 | 0.07 | 0.00 |

| Left EVC | r(29) = 0.32 | 0.22 | 0.30 | 0.08 | 0.29 | 0.15 | −0.23 |

Note: Significant correlations are shown in bold.
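
The critical r values in Table 4 can be related to sample size with the usual conversion from a critical t to a critical r. The sketch below is hedged on two assumptions: the degrees of freedom are taken as N − 3 (N − 2, minus one for the partialled-out covariate), which is not stated in the text but reproduces the tabled values, and the one-tailed critical t values are hard-coded from a standard t table rather than computed.

```python
import math

# One-tailed critical t at alpha = 0.05, copied from a standard t table.
T_CRIT_ONE_TAILED_05 = {24: 1.711, 26: 1.706}

def critical_r(n, n_covariates=1):
    """Smallest r that is significant (one-tailed, alpha = 0.05) for n subjects.

    Assumes df = n - 2 - n_covariates, as for a partial correlation.
    """
    df = n - 2 - n_covariates
    t = T_CRIT_ONE_TAILED_05[df]
    return t / math.sqrt(t * t + df)

for n in (29, 27):
    print(f"N = {n}: critical r = {critical_r(n):.2f}")
```

A tabled correlation can then be flagged as significant when it meets or exceeds the critical value for its ROI, which is the comparison the bold type in the original table encodes.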

We explored the effect of defining right and left FFA1 and FFA2 ROIs of different sizes, starting from the single most face-selective voxel in the localizer, and growing the ROI size up to 64 functional (3 mm isotropic) voxels following the spread of face > object activation. Strikingly, changing the size of the ROI had virtually no effect at all on the expertise effects we report below for both the right and left FFA2. The peak of face selectivity shows expertise effects that are as large as those in the larger ROI (as in McGugin, Gatenby, et al. 2012). Expertise effects in the left FFA1 were also relatively insensitive to ROI size and virtually unchanged across sizes from 4 to 64 voxels. In contrast, expertise effects in the right FFA1 showed a near-linear increase with size, reaching significance only for sizes of 32 and 64 voxels.
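
One way to picture ROIs that grow from the peak voxel is a greedy region-growing procedure. The sketch below is only an illustration: the text does not specify the growth rule, so the choice of face-adjacent (6-connected) neighbors, the greedy selection of the highest-valued neighbor, and the names `grow_roi` and `stat_map` are all assumptions.

```python
import heapq

NEIGHBOR_OFFSETS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))

def grow_roi(stat_map, seed, max_voxels):
    """Grow an ROI from a seed voxel by repeatedly adding the adjacent voxel
    with the highest localizer statistic (e.g., face > object t-value).

    stat_map maps (x, y, z) voxel coordinates to statistic values.
    Returns voxels in the order they were added, so smaller ROIs are
    prefixes of larger ones.
    """
    roi = [seed]
    in_roi = {seed}
    frontier = []  # max-heap emulated by pushing negated statistics

    def push_neighbors(voxel):
        x, y, z = voxel
        for dx, dy, dz in NEIGHBOR_OFFSETS:
            nb = (x + dx, y + dy, z + dz)
            if nb in stat_map and nb not in in_roi:
                heapq.heappush(frontier, (-stat_map[nb], nb))

    push_neighbors(seed)
    while frontier and len(roi) < max_voxels:
        _, voxel = heapq.heappop(frontier)
        if voxel in in_roi:
            continue  # the same voxel can be queued by several neighbors
        in_roi.add(voxel)
        roi.append(voxel)
        push_neighbors(voxel)
    return roi

# Hypothetical toy statistic map; the peak voxel is at the origin.
toy_map = {(0, 0, 0): 5.0, (1, 0, 0): 4.0, (2, 0, 0): 1.0, (-1, 0, 0): 3.0, (0, 1, 0): 2.0}
roi_3 = grow_roi(toy_map, seed=(0, 0, 0), max_voxels=3)
print(roi_3)
```

Because voxels are returned in the order added, an expertise correlation can be recomputed on successive prefixes of the list (for example the first 1, 4, 8, 16, 32, and 64 voxels) to examine sensitivity to ROI size.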

The Influence of Clutter and Attention on Expertise Effects

In the Cb–Fb (Attended) comparison, that is, when clutter was added to the displays, car expertise effects were obtained in bilateral FFA1/FFA2 and left OFA, but relative to their corresponding isolated conditions, these effects were no longer observed in right OFA and right PHG (Table 4). Whole-brain analyses looking for areas where the Cb–Fb contrast correlated with car expertise revealed expertise effects in the fusiform gyrus, and areas that are recruited in novices more than experts in middle temporal, parietal, and frontal areas (Fig. 2b; Supplementary Fig. 1b). Showing cars in clutter (among butterflies) produced less extensive expertise effects in ventral cortex, but the effects remained robust in several face-selective ROIs.

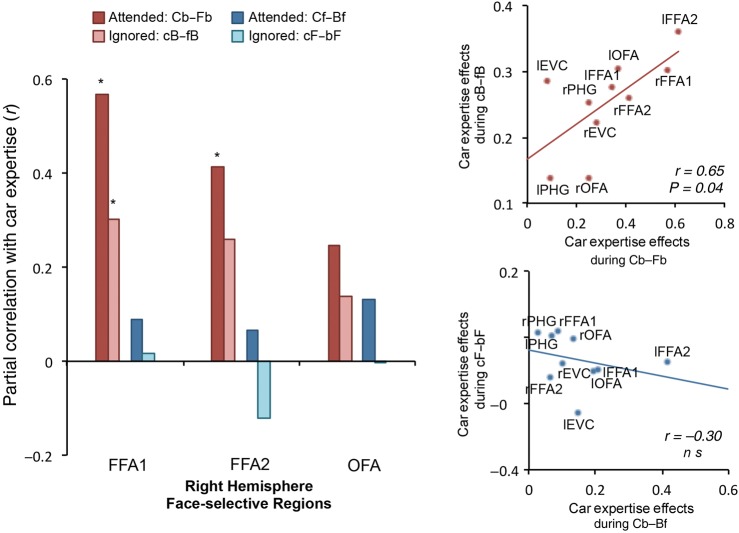

In the Ignored condition, cB–fB, that is, when we looked for changes in expertise effects when attention was diverted from cars, we found marginal effects of car expertise in bilateral FFA1/FFA2 and left OFA ROIs, although the effect was only significant in left FFA2 (Table 4). In a whole-brain analysis the cB–fB contrast correlated with car expertise in bilateral areas very distal from the OFA/FFA region, including in the posterior cingulate and superior frontal gyrus as well as a left parahippocampal region (Fig. 2c; Supplementary Fig. 1c). Critically, it may be premature to conclude that limiting attention to cars fully abolished car expertise effects. Indeed, the pattern of expertise effects across our different ROIs in the ignored cB–fB condition replicates what is observed in the attended condition (Cb–Fb): r = 0.65, P = 0.04 (Fig. 3). Likewise, the pattern of expertise effects across ROIs in Cb–Fb replicates what is observed in C–F: r = 0.69, P = 0.03. In other words, across our ROIs, there is no question that clutter or diverted attention dampens the effect sizes of car expertise effects, but the effects do not seem to be abolished. This is in contrast to what we observed next, when we consider the effect of competition on expertise effects.
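
The across-ROI comparison above can be illustrated directly from the values in Table 4. The sketch below assumes that all ten ROIs in the table (including the two EVC ROIs) enter the correlation; the text says only "across our different ROIs", so this is an inference. The variable names are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Expertise effects (partial r) transcribed from Table 4, one value per ROI:
# right FFA1, FFA2, OFA, PHG, EVC, then left FFA1, FFA2, OFA, PHG, EVC.
isolated_c_f = [0.40, 0.51, 0.52, 0.38, 0.27, 0.33, 0.60, 0.51, 0.29, 0.22]
attended_cb_fb = [0.57, 0.41, 0.25, 0.25, 0.28, 0.34, 0.61, 0.37, 0.09, 0.08]
ignored_cb_fb = [0.30, 0.26, 0.14, 0.25, 0.22, 0.28, 0.36, 0.30, 0.14, 0.29]

# Does the profile of effects across ROIs survive diverted attention and clutter?
r_attention = pearson(attended_cb_fb, ignored_cb_fb)
r_clutter = pearson(isolated_c_f, attended_cb_fb)
print(f"attended vs ignored: r = {r_attention:.2f}")
print(f"isolated vs attended: r = {r_clutter:.2f}")
```

With the tabled (rounded) values, both correlations come out close to the reported r = 0.65 and r = 0.69, which suggests that the profile of effects across ROIs is preserved even where individual ROI effects fall below significance.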

Figure 3.

Bar graphs show the partial correlations between the BOLD response to cars relative to either butterflies or faces shown attended or ignored in the presence of either faces or butterflies, respectively, with behavioral car expertise regressing out the influence of noncar performance. Right hemisphere face-selective regions—FFA1, FFA2, and OFA—are shown. The effect sizes are reduced but the pattern is similar for conditions where cars are ignored in the presence of butterflies relative to when they are attended. In conditions of competition, where cars are shown with competing faces, the expertise effect is no longer present. Asterisks denote significant correlations (one-tail, P < 0.05). The top scatterplot depicts a significant relationship for car expertise effects when cars are attended versus ignored in the context of noncompeting butterflies when considering all functionally defined regions. However, when competing faces are shown alongside cars, the pattern breaks down (bottom scatterplot).

The Influence of Competition on Expertise Effects

To test whether car expertise effects were robust to competition from to-be-ignored faces, we turn to the Cf–Bf condition. Interestingly, in contrast to the widespread car expertise effects in Cb–Fb, only a single ROI (left FFA2) showed an effect of expertise for Cf–Bf (Table 4). Since car expertise effects were clearly obtained in the C–B condition, this cannot be attributed to the butterfly baseline. Nonetheless, in a whole-brain analysis, Cf–Bf showed a positive correlation with car expertise in several areas near the average coordinates for right FFA1 and FFA2 (Supplementary Fig. 2b).

Competition from faces also appears to limit expertise effects when cars are ignored and faces are attended (cF–bF): none of the ROIs show evidence of expertise effects in this condition (Table 4), and in whole-brain analyses, attending faces led to expertise effects in posterior fusiform and lingual gyri, near the average coordinates for bilateral OFA and FFA2 (Supplementary Figs 2c and 3c).

Perhaps most telling, while we found that the magnitude of car expertise effects across ROIs was robust to clutter and to diverted attention when cars were presented among butterflies, this is not the case for cars amongst faces. Here, the pattern breaks down; there was no correlation between the car expertise effects in various ROIs for C–B and Cf–Bf (r = −0.07, ns) nor between Cf–Bf and cF–bF (r = −0.30, ns, Fig. 3). Altogether, these results suggest that car expertise effects are quite robust to clutter (high similarity of effects between C–F and Cb–Fb) and are dampened by reducing attention to cars (reduction of expertise effects in cB–fB relative to Cb–Fb), but are nonetheless more robust to a manipulation of attention than to one that induces competition with another domain of expertise (cB–fB more similar to Cb–Fb than cF–bF is to Cf–Bf).
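
To make the contrast between the attention and competition cases concrete, the sketch below converts the across-ROI correlations reported above into t statistics and two-tailed P values. It assumes that each correlation is computed over n = 10 ROIs with n − 2 = 8 degrees of freedom, which is not stated explicitly in the text. The P value is obtained by numerically integrating the Student t density with Simpson's rule, chosen here only to keep the example free of external libraries.

```python
import math

def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return norm * (1 + t * t / df) ** (-(df + 1) / 2)

def corr_two_tailed_p(r, n, steps=2000):
    """Return (t, p) for a Pearson r over n points (two-tailed test, |r| < 1)."""
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    h = t / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 < T < t)
    return t, 1 - 2 * central_area

reported = [("Cb-Fb vs cB-fB", 0.65), ("C-B vs Cf-Bf", -0.07), ("Cf-Bf vs cF-bF", -0.30)]
for label, r in reported:
    t, p = corr_two_tailed_p(r, n=10)
    print(f"{label}: r = {r:+.2f}, |t|(8) = {t:.2f}, p = {p:.3f}")
```

Under these assumptions, the attention comparison falls just below the 0.05 threshold, consistent with the reported P = 0.04, whereas the two competition comparisons are far from significance.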

Comparing Univariate and Multivariate Analyses of Car Expertise Effects

In the analyses above, when we first only considered correlations of expertise with activity in ROIs, car expertise seemed to be abolished by reducing attention to cars. However, considering the correlation between relative effect sizes across ROIs in different conditions revealed that car experts are still responding to cars differently than car novices. Indeed, distributed patterns of brain activity can be informative, and multivariate analyses often prove to be more sensitive than univariate methods (Kriegeskorte et al. 2006). However, some authors have reported that the 2 approaches can sometimes produce markedly different results (Jimura and Poldrack 2012; also see, e.g., Tamber-Rosenau et al. 2011, 2013). Only a subset of fMRI studies using multivariate methods focus on individual differences (e.g., Carp et al. 2011; Clithero et al. 2011; Tong et al. 2012) and still fewer have explicitly compared these methods (Coutanche et al. 2011; Hoeft et al. 2011; Coutanche 2013).

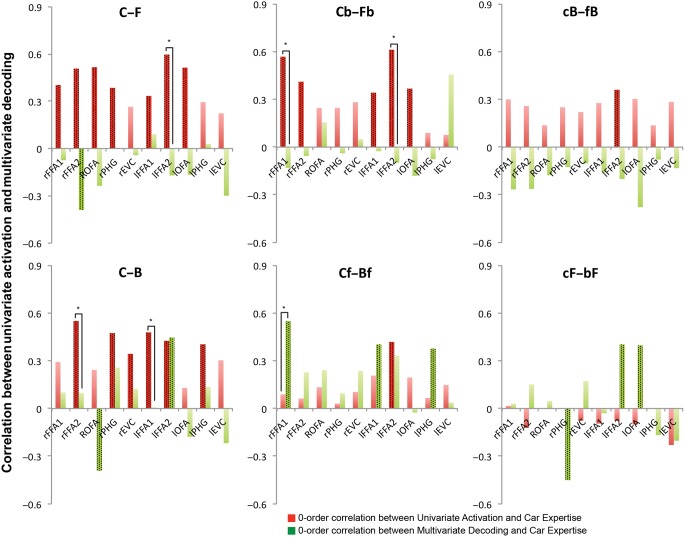

Here our goal was to explore how the 2 approaches can help relate brain activity to behavioral measures of expertise. Specifically, we asked 1) do MVP analyses also predict behavioral expertise for cars?; 2) are these effects qualitatively similar to those obtained using univariate methods?; and 3) are MVPA correlations with car expertise dependent on attention and competition? To compare univariate and multivariate effects, the correlations between car expertise and univariate effects from Table 4 are graphed in Figure 4 alongside the correlations between car expertise and MVP effects. We first report MVPA correlations with expertise (question 1) and then compare these results to those obtained using univariate activation (questions 2 and 3).

Figure 4.

Bar graphs depict the correlations between car expertise and univariate or multivariate effects plotted side-by-side for isolated, attended, and ignored conditions. The stippled bars are those that are significant, using one-tailed P < 0.05 as in Table 4 for the univariate analyses, and 2-tailed P < 0.05 for the MVP analyses. Brackets indicate Steiger's Z tests comparing the absolute values of the expertise effects for univariate and multivariate analyses, 2-tailed P < 0.05.

In the Isolated runs, correlations for C versus F MVPA with car expertise were predominantly negative, achieving significance only in right FFA2 (r = −0.39, P = 0.04). Thus, pattern representations of faces and cars in right FFA2 are more similar to one another in car experts than in novices, consistent with a shared representational mechanism for faces and other categories of perceptual expertise, here cars. In contrast, the correlations of MVPA for C versus B with car expertise were positive in most ROIs, although this was only significant in left FFA2 (r = 0.45, P = 0.022). Thus, pattern representations of cars and butterflies were more distinct in car experts than in car novices in this region, a result that is also consistent with the expertise account's suggestion that car representations are more face-like in car experts. We note however that the only other correlation of behavior with the C versus B MVPA to achieve statistical significance was a negative correlation in right OFA, a result that is more puzzling.

MVP analyses of Ignored categories led to results that were qualitatively similar to those obtained in the Isolated runs. Interestingly, while expertise effects in univariate analyses were generally dampened by reducing attention, the magnitude of several correlations with pattern representations was not reduced (see Fig. 4, rightmost column), perhaps suggesting that attention enhances perceptual signals that are present in ventral cortex regardless of stimulus task-relevance, as opposed to gating signals prior to arriving in these brain regions. Under this account, less-sensitive univariate effects are more prominent under conditions of attention while pattern effects are equally prominent for attended and ignored items. Correlations between decoding and behavioral expertise were generally negative in the cB versus fB condition, again consistent with the expertise account because greater car expertise yields reduced dissimilarity between cars and faces, though the only significant correlation was in the left OFA (r = −0.38, P = 0.05). In the cF versus bF condition, 2 ROIs showed a significant positive correlation with car expertise, the left OFA and left FFA2 (both r = 0.40, P = 0.04), and one area showed a negative correlation with car expertise, the right PHG (r = −0.45, P = 0.01). These results are consistent with an account in which, for experts, cF reflects 2 items of expertise with similar representations, leading to an enhanced representational pattern compared with that for a single item of expertise plus a nonexpert item (bF).

Interestingly, in the Clutter runs with and without competition, a somewhat different pattern emerged: whereas univariate analyses had suggested that competition abolished almost all effects of expertise except in the left FFA2, multivariate analyses reveal expertise effects under competition that are not observed otherwise. For Cb versus Fb, no ROI exhibited a significant correlation of MVPA performance with behavioral car expertise, but for Cf versus Bf, bilateral FFA1 (left: r = 0.40, P = 0.030; right: r = 0.55, P = 0.002) and left PHG (r = 0.38, P = 0.044) achieved significant positive correlations of MVPA with expertise. An additional region, left FFA2, approached significance (r = 0.33, P = 0.097). Surprisingly, MVPA decoding in the Cf versus Bf condition yields more expertise effects than both the other clutter condition (Cb vs. Fb) and the corresponding C versus B comparison. This is especially salient in the right FFA1, wherein Cb versus Fb leads to a strong correlation with behavior for univariate analyses and virtually no effect for MVPA, with the opposite result in the Cf versus Bf comparison. In other words, under competition between cars and faces, we find evidence that new information related to car expertise emerges in patterns of activity.

Discussion

The present work represents the most systematic effort to date in looking at how individual differences in performance for an object category are expressed in the responses to objects from that same category across a range of different situations. Our results converge with a number of prior studies reporting expertise effects in face-selective areas and beyond under relatively standard testing conditions in which objects are presented alone on the screen and attended (Gauthier et al. 2000; Xu 2005; Harley et al. 2009; Harel et al. 2010; Bilalic et al. 2011; McGugin, Gatenby, et al. 2012). As in prior work that looked at very small ROIs (Gauthier et al. 2000; McGugin, Gatenby, et al. 2012), we find these expertise effects even in the single most face-selective voxel in most of our FFA ROIs, in particular in left and right FFA2. Consistent with another study (McGugin et al. submitted), we find that when the low-level visual content of images is controlled for, individual differences in car expertise are not consistently expressed in EVC. We did find expertise effects in the EVC only in the RH and only in the condition where butterflies were used as a baseline. Lacking an explanation for why this effect was lateralized and dependent on the choice of baseline, we assume it to be spurious. However, it should be noted that effects of expertise in bilateral OFA were also dependent on baseline (obtained with a face baseline and not with the butterfly baseline). Exploring different baselines was not a primary goal of the present work, but these effects could potentially result from more long-term competition between car and face expertise, as has been observed between the representations of faces and words (Dehaene et al. 2010).

It is important to note that we were not concerned here with showing statistical evidence of reductions of expertise effects in any given area. Testing for such reductions can require a relatively large sample (as discussed in McGugin et al. submitted) because of the moderate effect size (r ≈ 0.5) of the expertise effect in isolated conditions. In addition, our goal is not to show that clutter or attention can influence selectivity, but rather to test the hypothesis that some areas carry information about expertise despite these manipulations. The general conclusion that clutter or attention influences responses in the visual system, consistent with other work (Reddy and Kanwisher 2007; Harel et al. 2010), is broadly supported by the reduced number of ROIs (or foci in the whole-brain analyses) in extrastriate cortex that show expertise effects during these conditions.

We investigated how expertise effects for cars were affected by the presence of task-irrelevant clutter (images of butterflies). While both our ROI and whole-brain analyses found some reductions of expertise effects in extrastriate areas, it is notable that all FFA ROIs (FFA1 and 2 bilaterally) revealed car expertise effects under clutter conditions, without any evidence of being dampened.

We also investigated how expertise effects for cars were affected by reduced attention to cars. In this case, the dependent measure of interest matters: when expertise effects are investigated in ROIs or in univariate whole-brain analyses, reduced attention appears to almost entirely abolish car expertise effects. One exception was the left FFA2, where car expertise effects were still observed (and were significant even in the single most face-selective voxel, r = 0.50, P = 0.01). Despite a severe dampening of local expertise effects, the relative pattern of expertise effects across regions observed in isolated conditions was found to be preserved under conditions where cars were unattended, revealing that the response to cars across ventral areas still expressed individual differences in car expertise.

Finally, we investigated how expertise effects are affected by competition between cars and faces, which behaviorally has been found to reduce effects of expertise (Behrmann et al. 2005; McKeeff et al. 2010; McGugin et al. 2011), and in ERP studies leads to reduced neural markers of expertise (Gauthier et al. 2003; Rossion et al. 2004; 2007). We originally suggested that Harel et al. (2010) mistook an effect of competition (between planes and cars) for an effect of attention on expertise. Given that Harel et al. only used ROIs and univariate analyses, our results in the attentional manipulation with butterflies suggest that they may in fact have captured a dampening of expertise effects with attention. However, our results in competition conditions suggest that if there was indeed competition between cars and planes in Harel et al.'s study (as suggested by their behavioral results), it may also account in part for the reduced expertise effects. ROI analyses did not reveal any effect of car expertise when faces were present, with the single exception of the left FFA2, and only when cars were attended. In contrast to the effect of attention, competition also abolished the relative pattern of expertise effects across regions that we observed in the Isolated conditions. However, the patterns of activity for attended cars relative to attended butterflies, both in the context of faces, revealed significant expertise effects in regions that did not otherwise show such expertise-related decoding.

Thus, pattern representations of cars and butterflies, when shown in the presence of faces, were more distinct in car experts than in car novices in these regions. One interpretation is that in car experts, cars (but not butterflies) will compete with faces, such that the pattern of activity may actually be less face-like in the Cf condition. This would increase the difference between Cf and Bf, because the face representation elicited by Bf would be more easily distinguished from that of objects in car experts. An alternative explanation is that at least in some brain areas, categorical pattern representations of cars and faces reinforce or summate in car experts, for whom these categories lead to similar representations, thus increasing the strength of the categorical pattern, allowing MVPA to differentiate it from Bf. While this latter explanation remains highly speculative, if correct, it raises an interesting avenue for further exploration: the reconciliation of category-level patterns, which may be more similar across categories when both categories are domains of expertise, with item-level patterns, which presumably become more differentiable as a product of expertise, both within- and between-category, rather than more similar. In addition, behavioral studies and ERP studies (Gauthier et al. 2003; Rossion et al. 2007; McGugin et al. 2011) have consistently found that presenting faces and cars together results in a reduction of behavioral hallmarks of expertise. What this suggests is that the abolition of expertise effects in univariate analyses when cars are shown among faces may be more relevant to behavioral performance than the correlation with multivariate effects.
Regardless of the preferred explanation for the multivariate expertise effects under competition, one thing is clear: more work is required to understand how univariate and multivariate effects relate to stable individual differences in a given domain, as well as to performance under specific bottom-up and top-down conditions.
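
The contrast between the two dependent measures can be made concrete with a toy computation. The sketch below is an illustration only, not the analysis pipeline used in this study: the three subjects, their four-voxel patterns, and the expertise scores are invented, the names `pearson_r` and `pattern_distinctness` are hypothetical, and a simple correlation distance (1 minus the Pearson correlation between the Cf and Bf patterns) stands in for classifier-based MVPA. It shows how the mean ROI response to Cf can be unrelated to expertise while the distinctness of Cf from Bf still tracks it.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


def pattern_distinctness(pattern_a, pattern_b):
    """Correlation distance between two voxel patterns (assumed stand-in for MVPA)."""
    return 1.0 - pearson_r(pattern_a, pattern_b)


# Invented data: one behavioral expertise score and two 4-voxel ROI patterns
# per subject (Cf = cars attended among faces, Bf = butterflies attended among faces).
subjects = [
    {"expertise": 0.3, "Cf": [1.0, 0.6, 0.7, 0.2], "Bf": [1.0, 0.5, 0.8, 0.2]},
    {"expertise": 1.2, "Cf": [0.9, 0.7, 0.6, 0.4], "Bf": [1.0, 0.5, 0.8, 0.2]},
    {"expertise": 2.4, "Cf": [0.4, 0.9, 0.3, 0.9], "Bf": [1.0, 0.5, 0.8, 0.2]},
]

expertise = [s["expertise"] for s in subjects]

# Univariate measure: mean response across voxels in the Cf condition.
mean_cf = [sum(s["Cf"]) / len(s["Cf"]) for s in subjects]

# Multivariate measure: how different the Cf pattern is from the Bf pattern.
distinctness = [pattern_distinctness(s["Cf"], s["Bf"]) for s in subjects]

# An "expertise effect" is the across-subject correlation with behavior.
r_univariate = pearson_r(expertise, mean_cf)
r_multivariate = pearson_r(expertise, distinctness)

print(f"univariate expertise effect:   r = {r_univariate:.2f}")
print(f"multivariate expertise effect: r = {r_multivariate:.2f}")
```

With these made-up values the mean response is nearly identical across subjects, so only the pattern-based measure correlates with expertise. Three subjects are far too few for any inference; the example is meant only to show how the two measures can dissociate.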

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org.

Funding

This work was supported by the NSF (SBE-0542013), the Vanderbilt Vision Research Center (P30-EY008126), and the NIH (R01 EY013441-06A2).

Notes

Conflict of Interest: None declared.

References

- Behrmann M, Marotta J, Gauthier I, Tarr MJ, McKeeff TJ. 2005. Behavioral change and its neural correlates in visual agnosia after expertise training. J Cogn Neurosci. 17:554–568.

- Bilalic M, Langner R, Ulrich R, Grodd W. 2011. Many faces of expertise: fusiform face area in chess experts and novices. J Neurosci. 31:10206–10214.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis. 10:433–436.

- Bukach CM, Gauthier I, Tarr MJ, Kadlec H, Barth S, Ryan E, Turpin J, Bub DN. 2012. Does acquisition of Greeble expertise in prosopagnosia rule out a domain-general deficit? Neuropsychologia. 50:289–304.

- Bukach CM, Phillips WS, Gauthier I. 2010. Limits of generalization between categories and implications for theories of category specificity. Atten Percept Psychophys. 72:1865–1874.

- Carp J, Park J, Polk TA, Park DC. 2011. Age differences in neural distinctiveness revealed by multi-voxel pattern analysis. NeuroImage. 56:736–743.

- Chang C-C, Lin C-J. 2011. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol. 2:1–27.

- Clithero JA, Smith DV, Carter RM, Huettel SA. 2011. Within- and cross-participant classifiers reveal different neural coding of information. NeuroImage. 56:699–708.

- Coutanche MN. 2013. Distinguishing multi-voxel patterns and mean activation: why, how, and what does it tell us? Cogn Affect Behav Neurosci. 13:667–673.

- Coutanche MN, Thompson-Schill SL, Schultz RT. 2011. Multi-voxel pattern analysis of fMRI data predicts clinical symptom severity. NeuroImage. 57:113–123.

- Curby KM, Glazek K, Gauthier I. 2009. A visual short-term memory advantage for objects of expertise. J Exp Psychol Hum Percept Perform. 35:94–107.

- Dehaene S, Pegado F, Braga LW, Ventura P, Nunes Filho G, Jobert A, Dehaene-Lambertz G, Kolinsky R, Morais J, Cohen L. 2010. How learning to read changes the cortical networks for vision and language. Science. 330:1359–1364.

- Diamond R, Carey S. 1986. Why faces are and are not special: an effect of expertise. J Exp Psychol Gen. 115:107–117.

- Duchaine B, Nakayama K. 2006. The Cambridge face memory test: results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia. 44:576–585.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. 1995. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn Reson Med. 33:636–647.

- Formisano E, Di Salle F, Goebel R. 2005. Fundamentals of data analysis methods in functional MRI. In: Landini L, Positano V, Santarelli MF, editors. Advanced imaging processing in magnetic resonance imaging. Boca Raton (FL): CRC Press, Taylor & Francis; pp 481–503.

- Furl N, Garrido L, Dolan RJ, Driver J, Duchaine B. 2011. Fusiform gyrus face selectivity relates to individual differences in facial recognition ability. J Cogn Neurosci. 23:1723–1740.

- Gauthier I, Curby KM, Skudlarski P, Epstein RA. 2005. Individual differences in FFA activity suggest independent processing at different spatial scales. Cogn Affect Behav Neurosci. 5:222–234.

- Gauthier I, Curran T, Curby KM, Collins D. 2003. Perceptual interference supports a non-modular account of face processing. Nat Neurosci. 6:428–432.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. 2000. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 3:191–197.

- Gauthier I, Tarr MJ. 2002. Unraveling mechanisms for expert object recognition: bridging brain activity and behavior. J Exp Psychol Hum Percept Perform. 28:431–446.

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. 1999. Activation of the middle fusiform “face area” increases with expertise in recognizing novel objects. Nat Neurosci. 2:568–573.

- Goebel R, Esposito F, Formisano E. 2006. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: from single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Hum Brain Mapp. 27:392–401.

- Grill-Spector K, Knouf N, Kanwisher N. 2004. The fusiform face area subserves face perception, not generic within-category identification. Nat Neurosci. 7:555–562.

- Harel A, Gilaie-Dotan S, Malach R, Bentin S. 2010. Top-down engagement modulates the neural expressions of visual expertise. Cereb Cortex. 20:2304–2318.

- Harley EM, Pope WB, Villablanca JP, Mumford J, Suh R, Mazziotta JC, Enzmann D, Engel SA. 2009. Engagement of fusiform cortex and disengagement of lateral occipital cortex in the acquisition of radiological expertise. Cereb Cortex. 19:2746–2754.

- Hoeft F, McCandliss BD, Black JM, Gantman A, Zakerani N, Hulme C, Lyytinen H, Whitfield-Gabrieli S, Glover GH, Reiss AL, et al. 2011. Neural systems predicting long-term outcome in dyslexia. Proc Natl Acad Sci U S A. 108:361–366.

- Hoffman EA, Haxby JV. 2000. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 3:80–84.

- Jimura K, Poldrack RA. 2012. Analyses of regional-average activation and multivoxel pattern information tell complementary stories. Neuropsychologia. 50:544–552.

- Kriegeskorte N, Goebel R, Bandettini P. 2006. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 103:3863–3868.

- McGugin RW, Gatenby C, Gore J, Gauthier I. 2012. High-resolution imaging of expertise reveals reliable object selectivity in the FFA related to perceptual performance. Proc Natl Acad Sci U S A. 109:17063–17068.

- McGugin RW, McKeeff TJ, Tong F, Gauthier I. 2011. Irrelevant objects of expertise compete with faces during visual search. Atten Percept Psychophys. 73:309–317.

- McGugin RW, Newton AT, Gore JC, Gauthier I. Submitted. Robust expertise effects in right FFA.

- McGugin RW, Richler JJ, Herzmann G, Speegle M, Gauthier I. 2012. The Vanderbilt expertise test reveals domain-general and domain-specific sex effects in object recognition. Vision Res. 69:10–22.

- McKeeff TJ, McGugin RW, Tong F, Gauthier I. 2010. Expertise increases the functional overlap between face and object perception. Cognition. 117:355–360.

- Murray SO, Wojciulik E. 2004. Attention increases neural selectivity in the human lateral occipital complex. Nat Neurosci. 7:70–74.

- Nefzger MD, Drasgow J. 1957. The needless assumption of normality in Pearson's r. Am Psychol. 12:623–625.

- Op de Beeck HP, Baker CI, DiCarlo JJ, Kanwisher NG. 2006. Discrimination training alters object representations in human extrastriate cortex. J Neurosci. 26:13025–13036.

- Pinsk MA, Arcaro M, Weiner KS, Kalkus JF, Inati SJ, Gross CG, Kastner S. 2009. Neural representations of faces and body parts in macaque and human cortex: a comparative fMRI study. J Neurophysiol. 101:2581–2600.

- Reddy L, Kanwisher N. 2007. Category selectivity in the ventral visual pathway confers robustness to clutter and diverted attention. Curr Biol. 17:2067–2072.

- Rosnow R, Rosenthal R. 1989. Statistical procedures and the justification of knowledge in psychological science. Am Psychol. 44:1276–1284.

- Rossion B, Collins D, Goffaux V, Curran T. 2007. Long-term expertise with artificial objects increases visual competition with early face categorization processes. J Cogn Neurosci. 19:543–555.

- Rossion B, Hanseeuw B, Dricot L. 2012. Defining face perception areas in the human brain: a large-scale factorial fMRI face localizer analysis. Brain Cogn. 79:138–157.

- Rossion B, Kung C-C, Tarr MJ. 2004. Visual expertise with nonface objects leads to competition with the early perceptual processing of faces in the human occipitotemporal cortex. Proc Natl Acad Sci U S A. 101:14521–14526.

- Tamber-Rosenau BJ, Dux PE, Tombu MN, Asplund CL, Marois R. 2013. Amodal processing in human prefrontal cortex. J Neurosci. 33:11573–11587.

- Tamber-Rosenau BJ, Esterman M, Chiu Y-C, Yantis S. 2011. Cortical mechanisms of cognitive control for shifting attention in vision and working memory. J Cogn Neurosci. 23:2905–2919.

- Tong F, Harrison SA, Dewey JA, Kamitani Y. 2012. Relationship between BOLD amplitude and pattern classification of orientation-selective activity in the human visual cortex. NeuroImage. 63:1212–1222.

- Weiner KS, Sayres R, Vinberg J, Grill-Spector K. 2010. fMRI-adaptation and category selectivity in human ventral temporal cortex: regional differences across time scales. J Neurophysiol. 103:3349–3365.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW. 2010. Controlling low-level image properties: the SHINE toolbox. Behav Res Methods. 42:671–684.

- Williams NR, Willenbockel V, Gauthier I. 2009. Sensitivity to spatial frequency and orientation content is not specific to face perception. Vision Res. 49:2353–2362.

- Wojciulik E, Kanwisher N, Driver J. 1998. Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. J Neurophysiol. 79:1574–1578.

- Wong AC-N, Palmeri TJ, Rogers BP, Gore JC, Gauthier I. 2009. Beyond shape: how you learn about objects affects how they are represented in visual cortex. PLoS ONE. 4:e8405.

- Xu Y. 2005. Revisiting the role of the fusiform face area in visual expertise. Cereb Cortex. 15:1234–1242.
