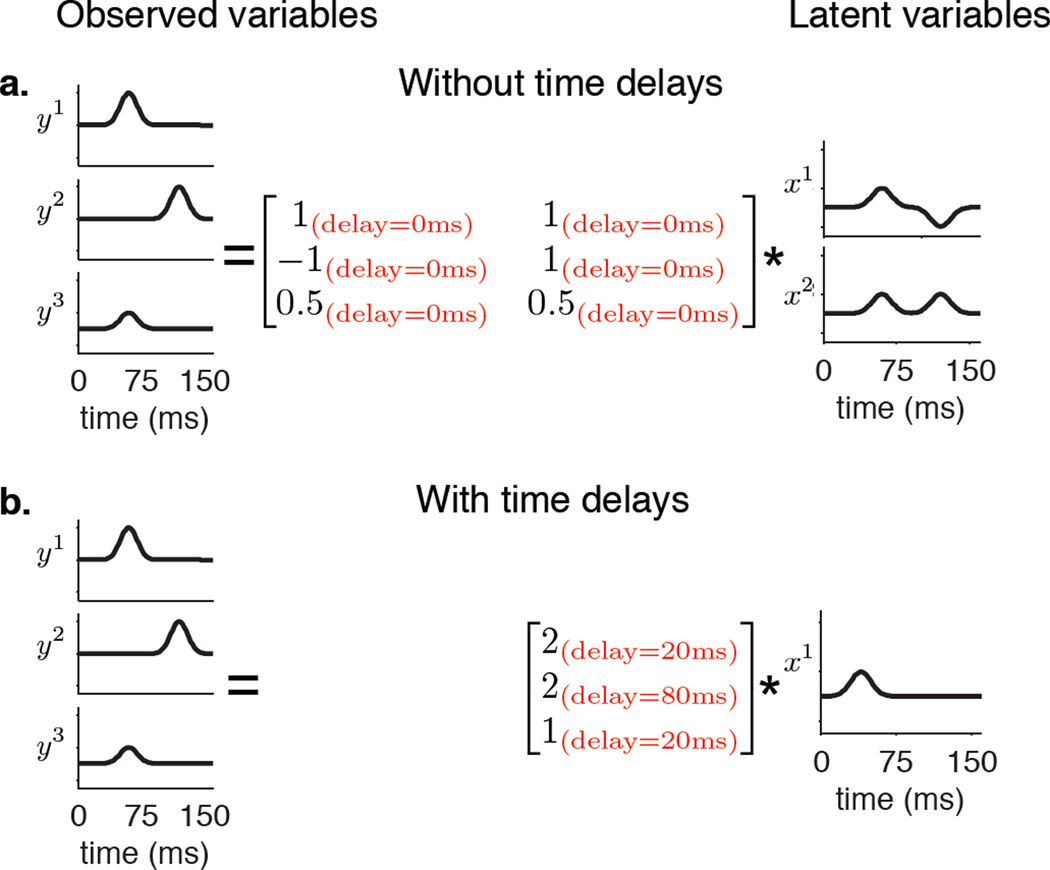

Figure 1.

Conceptual illustration of how dimensionality reduction algorithms that account for time delays can provide a more compact view of the latent space. a. Three observed variables, y1, y2 and y3, each show a single hill of activity, but at different times. If only instantaneous relationships between latent and observed variables are considered, two latent variables are needed to explain these observed variables. b. If non-zero time delays between latent and observed variables are allowed, a single latent variable with one hill of activity is enough to explain the same observed variables. The matrices show the delays, as well as the weights that linearly combine the latent variables to reconstruct the observed variables.
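The idea in panel b can be checked numerically with a small sketch. The sketch assumes a delayed linear model, y_i(t) = c_i · x(t − d_i), driven by one latent x(t). The Gaussian "hill" shape, the time grid, and the particular weights `c_i` and delays `d_i` are hypothetical values chosen for illustration only. The instantaneous comparison uses an uncentered rank-1 SVD as a stand-in for a one-latent model without delays. It is not the fitting procedure of any specific method, and the delays are treated as known rather than estimated.

```python
import numpy as np

# Hypothetical time axis and latent shape, chosen only for illustration.
t = np.linspace(0.0, 10.0, 1001)
x = np.exp(-0.5 * ((t - 3.0) / 0.5) ** 2)  # one latent with a single hill of activity

# Hypothetical loading weights c_i and delays d_i for y1, y2, y3.
weights = np.array([1.0, 0.8, 1.2])
delays = np.array([0.0, 1.5, 3.0])

def delayed(signal, d):
    """Return signal(t - d) on the same grid, zero outside the observed window."""
    return np.interp(t - d, t, signal, left=0.0, right=0.0)

# Observed variables: y_i(t) = c_i * x(t - d_i), stacked as a 3 x T matrix.
Y = np.stack([c * delayed(x, d) for c, d in zip(weights, delays)])

# Instantaneous view: best rank-1 approximation Y ~ c x^T (uncentered SVD).
U, s, Vt = np.linalg.svd(Y, full_matrices=False)
Y_rank1 = s[0] * np.outer(U[:, 0], Vt[0])
err_instant = np.linalg.norm(Y - Y_rank1) / np.linalg.norm(Y)

# Delayed view: the same single latent, shifted by each variable's (assumed known)
# delay, with one weight per observed variable fit by least squares.
X_shifted = np.stack([delayed(x, d) for d in delays])
c_hat = np.sum(Y * X_shifted, axis=1) / np.sum(X_shifted ** 2, axis=1)
Y_delayed = c_hat[:, None] * X_shifted
err_delayed = np.linalg.norm(Y - Y_delayed) / np.linalg.norm(Y)

print(f"relative error, 1 instantaneous latent: {err_instant:.3f}")
print(f"relative error, 1 delayed latent:       {err_delayed:.3f}")
print("recovered weights:", np.round(c_hat, 3))
```

With these settings, a single instantaneous component leaves a large fraction of the signal unexplained. Once each observed variable may see the latent at its own delay, one latent reconstructs all three variables up to floating-point error. In a real model, the delays would have to be estimated jointly with the weights and the latent.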