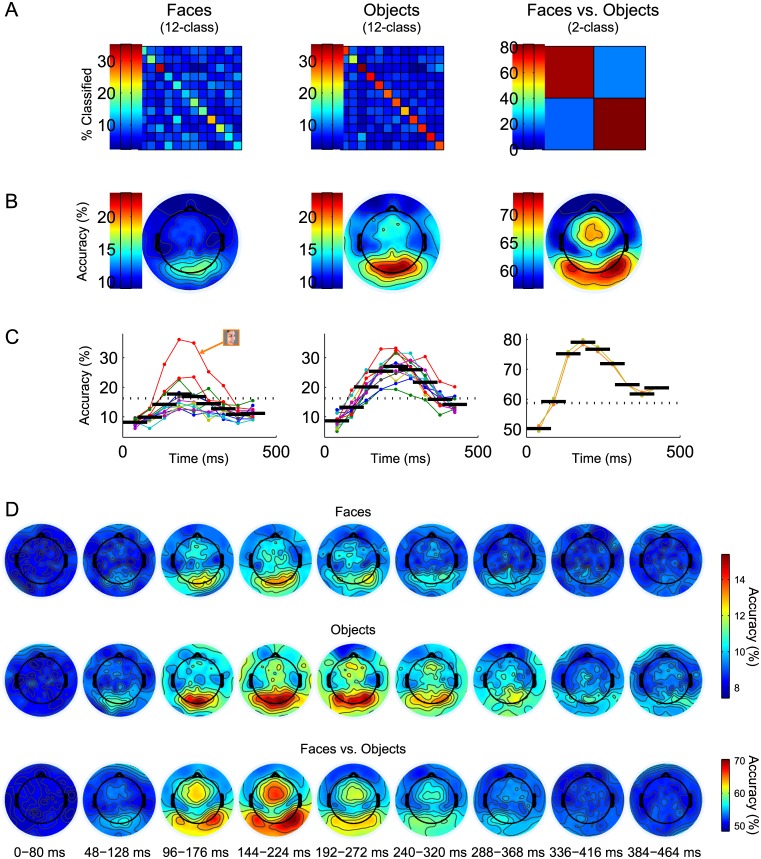

Fig 11. Within-category classification results.

Separate within-category classifications were performed on Human Face and Inanimate Object responses. A two-class face-versus-object classification was also performed for reference. (A) Confusion matrices from classifications using data from all electrodes and time points together for Human Face (Left), Inanimate Object (Center), and face versus object (Right). Matrix layout is as described in Fig 3. Classifier accuracy was 18.30% for Human Face, 28.87% for Inanimate Object, and 81.06% for face versus object (summarized in Fig 2). (B) Maps of single-electrode classifier accuracies for Human Face (Left), Inanimate Object (Center), and face versus object (Right). Significance thresholds (α = 0.01) are 16.28% for the twelve-class classifications and 58.72% for the two-class classification. (C) Classification rates as a function of time, with exemplar or category accuracies overlaid (as described in Fig 5) for Human Face (Left), Inanimate Object (Center), and face versus object (Right). Dotted horizontal lines designate the α = 0.01 significance thresholds. The best-classifying Human Face image (Left) is highlighted. (D) Classifier accuracy maps for temporally resolved single-electrode Human Face (Top), Inanimate Object (Middle), and face-versus-object (Bottom) classifications, using the spatiotemporal subsets described in Fig 6.
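The caption quotes the α = 0.01 significance thresholds but does not say how they were obtained. One common way to set such a threshold is sketched below: take the (1 − α) quantile of a binomial null distribution in which every test trial is classified correctly with chance probability 1/(number of classes). The function name `binomial_threshold` and the trial counts 86 and 172 are hypothetical. The counts were chosen only because they make this calculation agree with the quoted percentages; they are not stated in the caption.

```python
from math import comb


def binomial_threshold(n_trials, n_classes, alpha=0.01):
    """Accuracy at the (1 - alpha) quantile of a chance-level binomial null.

    Assumption (not from the caption): each of the n_trials test
    observations is an independent draw that is correct with
    probability 1 / n_classes.
    """
    p = 1.0 / n_classes
    cdf = 0.0
    for k in range(n_trials + 1):
        # Probability of exactly k correct classifications under the null
        cdf += comb(n_trials, k) * p**k * (1.0 - p) ** (n_trials - k)
        if cdf >= 1.0 - alpha:
            # Smallest count whose cumulative probability reaches 1 - alpha
            return k / n_trials
    return 1.0


# Hypothetical trial counts, for illustration only
twelve_class = binomial_threshold(n_trials=86, n_classes=12)
two_class = binomial_threshold(n_trials=172, n_classes=2)

print(f"{100 * twelve_class:.2f}%")  # 16.28%
print(f"{100 * two_class:.2f}%")     # 58.72%
```

Under these assumptions the twelve-class threshold sits well above the 8.33% chance level because few test trials give a wide null distribution. The two-class threshold lies closer to its 50% chance level.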