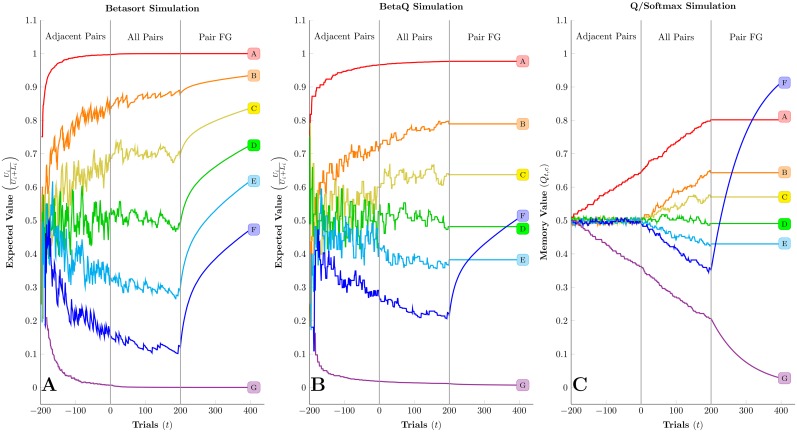

Fig 7. Visualization of the contents of memory for the three algorithms under simulated conditions.

Three phases were included for each algorithm: 200 trials of adjacent pairs only, followed by 200 trials of all pairs, and then 200 massed trials of only the pair FG. (A) Expected value for each stimulus under the betasort algorithm, given parameters of τ = 0.05 and ξ = 0.95. Not only is learning during adjacent pair training faster, but massed trials of FG do not disrupt the algorithm’s representation of the order, because occasional erroneous selection of stimulus G increases the value of all stimuli, not just stimulus F. (B) Expected value for each stimulus under the betaQ algorithm, given parameters of τ = 0.05 and ξ = 0.95. Although the algorithm derives an ordered inference by the time the procedure switches to all pairs, that order is not preserved during the massed trials of FG, as a result of the lack of inferential updating. (C) Expected value Q for each stimulus under the Q/softmax algorithm, given parameters of α = 0.03 and β = 10. Values for non-terminal items remain fixed at 50% throughout adjacent pair training, and only begin to diverge when all pairs are presented in a uniformly intermixed fashion. Subsequent massed training on the pair FG disrupts the ordered representation because rewards drive the value of stimulus F up (and the value of stimulus G down) while the other stimuli remain static.
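The three-phase procedure in panel (C) can be illustrated with a minimal Python sketch of a Q/softmax learner. This is not the authors' code: the function name `simulate_q_softmax`, the starting value of 0.5 for every stimulus, the 0/1 reward, and the choice to update only the selected stimulus are all assumptions made for illustration, so the traces it produces need not match the figure in detail. Only α = 0.03, β = 10, the seven stimuli A to G, and the three 200-trial phases are taken from the caption.

```python
import math
import random
from itertools import combinations

STIMULI = "ABCDEFG"  # assumed order: A ranked highest, G lowest


def simulate_q_softmax(alpha=0.03, beta=10.0, trials_per_phase=200, seed=0):
    """Return the Q values after every trial across the three phases."""
    rng = random.Random(seed)
    n = len(STIMULI)

    # Each pair is (higher-ranked index, lower-ranked index).
    adjacent = [(i, i + 1) for i in range(n - 1)]   # AB, BC, ..., FG
    all_pairs = list(combinations(range(n), 2))     # all 21 pairs
    fg_only = [(n - 2, n - 1)]                      # massed FG trials

    q = [0.5] * n  # assumption: every stimulus starts at 0.5
    history = []

    for pairs in (adjacent, all_pairs, fg_only):
        for _ in range(trials_per_phase):
            hi, lo = rng.choice(pairs)
            # Two-option softmax: probability of choosing the correct item.
            p_hi = 1.0 / (1.0 + math.exp(-beta * (q[hi] - q[lo])))
            chosen = hi if rng.random() < p_hi else lo
            # Assumption: reward is 1 for a correct choice, 0 otherwise.
            reward = 1.0 if chosen == hi else 0.0
            # Assumption: only the chosen stimulus is updated.
            q[chosen] += alpha * (reward - q[chosen])
            history.append(list(q))

    return history
```

Calling `simulate_q_softmax()` returns 600 snapshots of the seven Q values. Under these assumptions, stimulus F is rewarded whenever it is chosen during the final phase, so its value can only rise there, while the values of A through E do not change because those stimuli are never presented.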