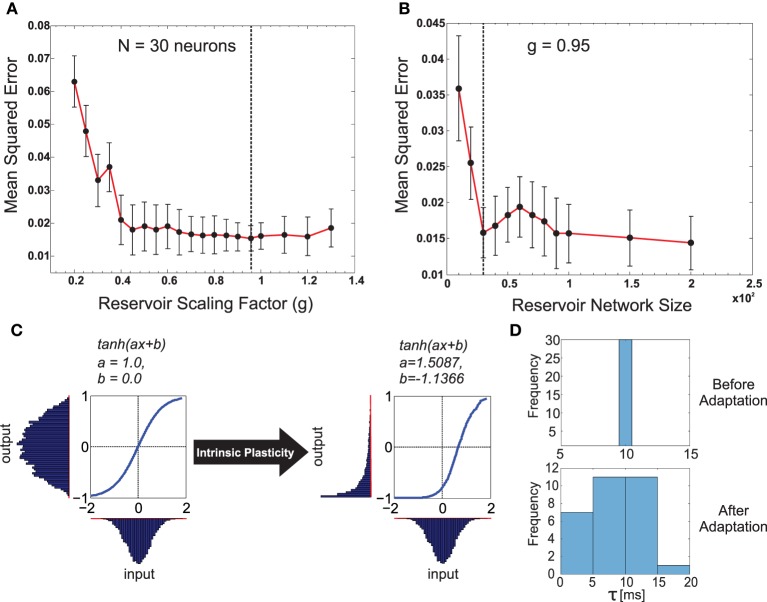

Figure 3.

(A) Change in the mean squared error (MSE) on the forward model task for one of the front legs (R1) of the walking robot as a function of the scaling g applied to the recurrent-layer synaptic weights Wrec. Very small values of g degrade performance, whereas values closer to one yield lower error. Interestingly, performance did not change significantly for g > 1.0 (the chaotic regime). This is mainly due to the homeostasis introduced by intrinsic plasticity in the network. The optimal value g = 0.95 selected for our experiments is indicated by a dashed line. (B) Change in MSE as a function of reservoir size N, with g fixed at its optimal value. Although increasing the reservoir size generally tends to improve performance, a smaller reservoir of N = 30 gave the same level of performance as N = 100. Accordingly, considering the trade-off between network size and learning performance, we set the forward model reservoir size to 30 neurons. Results were averaged over 10 trials with different parameter initializations on the forward model task for a single leg and a fixed walking gait. (C) Example of intrinsic plasticity adjusting the reservoir neuron non-linearity parameters a and b. Initially, the reservoir neuron fires with a Gaussian-shaped output distribution that matches the input distribution. After adaptation by the intrinsic plasticity mechanism (Dasgupta et al., 2013), however, the neuron has adjusted a and b such that, for the same Gaussian input distribution, its output follows a maximum-entropy, exponential-like distribution. (D) Distribution of the individual neuron time constants of the reservoir forward model before and after adaptation.
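Panel (C) describes intrinsic plasticity reshaping a single neuron's output distribution. The sketch below illustrates that idea under stated assumptions: it uses a logistic neuron y = 1/(1 + exp(-(a·x + b))) and the gradient rule of Triesch (2005), which drives the output toward an exponential distribution with mean mu. The exact rule of Dasgupta et al. (2013) may differ. The function name `intrinsic_plasticity` and the values mu = 0.2 and eta = 0.001 are hypothetical choices for illustration.

```python
import math
import random
import statistics


def sigmoid(u):
    """Logistic non-linearity used as the (assumed) reservoir neuron model."""
    return 1.0 / (1.0 + math.exp(-u))


def intrinsic_plasticity(inputs, mu=0.2, eta=0.001, a=1.0, b=0.0):
    """Hypothetical online IP sketch: adapt gain a and bias b of y = sigmoid(a*x + b).

    Assumption: Triesch-style rule whose target output distribution is
    exponential with mean mu (maximum entropy for a fixed mean on y >= 0).
    """
    for x in inputs:
        y = sigmoid(a * x + b)
        # Bias update: pushes the output statistics toward the exponential target.
        db = eta * (1.0 - (2.0 + 1.0 / mu) * y + (y * y) / mu)
        # Gain update: eta / a keeps the gain away from zero; db * x couples it to the input.
        da = eta / a + db * x
        a += da
        b += db
    return a, b


# Gaussian input, as in panel (C); fixed seed for reproducibility.
rng = random.Random(0)
train = [rng.gauss(0.0, 1.0) for _ in range(50_000)]
test = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

before = [sigmoid(x) for x in test]  # initial parameters a = 1, b = 0
a, b = intrinsic_plasticity(train)
after = [sigmoid(a * x + b) for x in test]

print(f"adapted a={a:.2f}, b={b:.2f}")
print(f"before: mean={statistics.mean(before):.3f}, median={statistics.median(before):.3f}")
print(f"after:  mean={statistics.mean(after):.3f}, median={statistics.median(after):.3f}")
```

Before adaptation, the output is roughly symmetric around 0.5. After adaptation, the bias is driven negative. The sigmoid then compresses the left tail of the Gaussian input and stretches the right tail, so the output mean falls and exceeds the median. That right skew is the qualitative signature of the exponential-like distribution shown in panel (C).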