Supplemental Digital Content is Available in the Text.

This article outlines the conceptualization, development, and implementation of the Public Health Workforce Interests and Needs Survey, as well as considerations and limitations.

Keywords: public health systems, public health workforce, Public Health Workforce Interests and Needs Survey (PH WINS), state health agencies, workforce development

Abstract

The Public Health Workforce Interests and Needs Survey (PH WINS) has yielded the first-ever nationally representative sample of state health agency central office employees. The survey represents a step forward in rigorous, systematic data collection to inform the public health workforce development agenda in the United States. PH WINS is a Web-based survey and was developed with guidance from a panel of public health workforce experts including practitioners and researchers. It draws heavily from existing and validated items and focuses on 4 main areas: workforce perceptions about training needs, workplace environment and job satisfaction, perceptions about national trends, and demographics. This article outlines the conceptualization, development, and implementation of PH WINS, as well as considerations and limitations. It also describes the creation of 2 new data sets that will be available for public use by public health officials and researchers: a nationally representative data set for permanently employed state health agency central office employees comprising over 10 000 responses, and a pilot data set with approximately 12 000 local and regional health department staff responses.

Identifying a Need

Workforce development has been a major focus of governmental public health for the better part of a quarter century, and especially since the landmark 1988 report of the Institute of Medicine (now the National Academy of Medicine [NAM]).1–9 The early 1990s saw significant progress in workforce development, hand in hand with the formalization of the Ten Essential Services.8,10,11 Because of the siloed nature of public health funding and thus the organization of public health itself at federal, state, and local levels, experts in the field identified 2 major challenges central to workforce development during those years.

First, the governmental public health enterprise needed to establish how many people worked in the field. No comprehensive data had been collected to address this, limiting the ability to characterize the field, monitor trends, or conduct research.7,12–16 Divided responsibilities under the US federalist system allowed states to develop state and local public health systems that sometimes looked very different from one state to the next, leading to the adage, “If you've seen one health department, you've seen one health department.”17,18 However, larger and more complex systems such as health care delivery and education managed to measure the size of their workforces, and so too could public health. Thus began enumeration efforts that have continued to this day.

A second challenge caused by the disciplinary and funding silos was an inability to identify systems-level workforce development and training needs in public health.10 Speculation has long existed that a very small proportion of the workforce has any formal training in public health; this has made on-the-job training critical to the field.1 Major efforts have been undertaken to create a set of core competencies for public health professionals generally, as well as specifically by discipline and seniority.19,20 While this set of core competencies has been critical in workforce development, 2 challenges have persisted: (1) these competencies are more widely accepted in academia than in public health practice; and (2) the competencies represent a universe of training needs, without explicit prioritization. Establishing priorities among many training needs was a key reason behind the creation of the Public Health Workforce Interests and Needs Survey (PH WINS).

Similar to the excellent and critical training needs assessments conducted by the Public Health Training Centers in recent years, PH WINS was created to help better understand the perceptions and needs of the public health workforce. However, unlike the Public Health Training Centers' previous surveys, which focused on varying types of public health practitioners within a specific jurisdiction, PH WINS was meant to attain a nationally representative sample of permanently employed (ie, not temporary staff or interns) central office employees at state health agencies (SHAs) and to take initial steps toward obtaining responses from local public health department employees.

Beyond training needs and enumeration, little was known about the motivations of the public health workforce, as well as perceptions of workplace environment and job satisfaction.1 This gap was addressed somewhat in the course of workplace surveys, which a small number of state and local health departments (LHDs) conducted among varying staff populations at varying time points.8,9 Systematic collection and analysis of these sorts of data from multiple health departments were tremendously difficult and occurred infrequently.8 This was another key motivation for the creation of PH WINS.

Survey Development

The idea for PH WINS grew out of a summit held by the Association of State and Territorial Health Officials and the de Beaumont Foundation in 2013. This summit convened leaders of public health membership organizations, discipline-specific affiliate groups, federal partners, and other public health workforce experts to identify crosscutting training needs for governmental public health. Many training needs had been identified in recent years, but leaders in public health had yet to identify which needs were most immediate.10 The 31 organizations represented at the summit prioritized systems thinking, communicating persuasively, change management, information and analytics, problem solving, and working with diverse populations as the major crosscutting training needs. However, there was significant interest as to whether the public health workforce agreed with these leaders about the greatest training needs in the field.

After the summit, a technical expert panel was convened to develop the Web-based survey. The panel comprised 30 public health scientists, researchers, academics, and policy makers. The panel established the goal of PH WINS to “collect perspectives from the field on workforce issues, to validate responses from leaders on workforce development priorities, and to collect data to monitor over time.” This yielded three concrete aims:

- To inform future workforce development initiatives.

- To establish a baseline of key workforce development metrics.

- To explore workforce attitudes, morale, and climate.

Sample Frames

PH WINS includes multiple, distinct sample frames. Major considerations included the size of the jurisdiction served, the geographic location of the respondents' jurisdictions, and the governance classification of the state in which the jurisdictions were located. Governance classification refers to the relationship between the SHA and LHDs (ie, centralized, decentralized, shared, and mixed as outlined by Meit et al21).

The first frame is a nationally representative sample of permanent, central office employees in SHAs. The second frame consists of employees of members of the Big Cities Health Coalition (BCHC), a membership group of the largest LHDs in the country. The third is a pilot frame of LHD employees; it was decided that it would be too difficult to get a nationally representative sample of LHD employees in the first fielding of PH WINS.

The “state” frame involved stratified sampling of staff working at the central office of SHAs. Stratification occurred over 5 regions (paired, contiguous US Department of Health and Human Services regions), and a potential respondent was selected at random with probability proportional to the SHA's total staff as a percentage of the total number of staff from all participating SHAs in the region. Practically, this meant each SHA had a target number of needed responses. Staff directories were used to constitute the sample, and selected staff were contacted directly by e-mail. A number of states elected to increase their sample size (in line with other large national surveys that provide for sample augmentation, such as the National Adult Tobacco Survey).22 Twenty-four of 37 participating states elected to field the survey to their entire staff. A significant complication was that a number of states were unable to separate contact information for staff who worked in the central office from that of staff working in local or regional health departments. As such, a sampling-without-replacement approach was used to ensure that we received enough completed surveys from individuals who identified themselves as central office employees to constitute a nationally representative sample. This was planned and accounted for in the complex sampling design; weighting approaches are discussed in more detail later. State health agency employees who indicated they worked at local or regional departments were moved to the local pilot data set, discussed later.
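The per-agency response targets described above can be illustrated with a small proportional-allocation sketch. This is not the PH WINS sampling code: the function name, the agency labels, the staff counts, the regional target, and the largest-remainder rounding rule are all assumptions made for illustration.

```python
from math import floor


def allocate_proportional(staff_by_sha, regional_target):
    """Split a regional target of completed surveys across SHAs
    in proportion to each SHA's share of regional staff.

    staff_by_sha: dict mapping a (hypothetical) SHA label to its staff count.
    regional_target: total number of completed surveys wanted in the region.
    """
    total_staff = sum(staff_by_sha.values())
    if total_staff <= 0:
        raise ValueError("regional staff total must be positive")

    # Exact (fractional) share of the target for each SHA.
    exact = {sha: regional_target * n / total_staff
             for sha, n in staff_by_sha.items()}

    # Round down, then hand out the leftover units to the SHAs with the
    # largest fractional remainders so the allocation sums to the target.
    alloc = {sha: floor(x) for sha, x in exact.items()}
    leftover = regional_target - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda s: exact[s] - alloc[s], reverse=True)
    for sha in by_remainder[:leftover]:
        alloc[sha] += 1
    return alloc


# Hypothetical region with three participating SHAs.
region = {"SHA_A": 1200, "SHA_B": 600, "SHA_C": 200}
needed = allocate_proportional(region, 500)
print(needed)
```

In this sketch an agency with 60% of the region's staff receives 60% of the regional target. A state that chose to survey its entire staff would simply exceed its target, and the weights would account for the different selection probability.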

Pilots for LHD Employees

The BCHC and “local” frames may be thought of as pilots: different fielding methods were used to ascertain best practices for a potential future iteration of PH WINS and related studies. Although the data have importance for the localities in which they were collected, they are not intended to constitute a nationally representative sample. Respondents from 14 BCHC LHDs and more than 50 other LHDs participated. In most cases, a staff directory-based approach was used, where staff were contacted directly and asked to participate in PH WINS. In a few cases, the local health official distributed a survey link to their entire staff by e-mail.

The local pilot used several different (state-based) approaches to gather information for the next iteration of PH WINS.

The majority of respondents in the local pilot data set come from states where the SHA was unable to distinguish between central office and local/regional employees. Staff from more than 400 LHDs participated in this way. In “centralized” states, this implies equal probability of selection among all LHD employees. In other states, this may not be the case—while these staff are SHA employees, they may work in local or regional health departments with staff not employed by the SHA. As such, only a subset of states can create state-based estimates for LHD employees. National estimates cannot be constructed from the LHD respondent data set.

- Respondents from 4 states were sampled differently, all using a variant of clustering-based sampling.

- In 2 states (1 centralized and 1 shared), we drew a stratified random sample of LHDs, based on the size of jurisdiction served and type of jurisdiction (city, city-county, county, and multicounty). E-mails were sent to all staff members of selected LHDs directly.

- In 2 states (both decentralized), we enlisted local health officials to e-mail survey links to their entire staff (32 LHDs in total across both states).

Weights for all approaches were calculated appropriately and are discussed in detail later.

Development of the Survey Instrument

The survey was guided by 2 primary principles. The first was brevity, to minimize the burden on practitioners/respondents. With a target length of 15 minutes, PH WINS addresses 4 major domains: training needs, workplace environment/job satisfaction, perceptions of national trends, and demographics. The full instrument is available in the Appendix (see Supplemental Digital Content, available at: http://links.lww.com/JPHMP/A163). The first domain assessed training needs broadly, including organizational support for continuing education and training and perceptions of the importance of, and ability related to, training needs. The second related to workplace environment, relationships with peers and supervisors, and satisfaction with one's job, pay, organization, and job security. The third domain related to perceptions of national trends, including whether staff had heard about a number of major national issues in public health (for example, implementation of the Patient Protection and Affordable Care Act [ACA]). Questions in this section also addressed how important the trend was to public health and to the staff's day-to-day work, and whether more emphasis should be placed on the issue going forward. The final section related to demographics and allowed for enumeration of staff by race/ethnicity, educational attainment, supervisory status, and a number of other measures.

The second guiding principle in creating the PH WINS instrument was to maximize data quality by using previously fielded items and questions wherever possible. As such, workforce-related questions were gathered from the peer-reviewed literature, workforce development surveys, and validated scales. The final version of PH WINS draws heavily from the Centers for Disease Control and Prevention Technical Assistance and Service Improvement Initiative: Project Officer Survey; the 2009 Epidemiology Capacity Assessment; the Federal Employee Viewpoint Survey; the Public Health Foundation Worker Survey; the Bowling Green State University Job in General Scale; and the University of Michigan Public Health Workforce Schema.23–28 Cognitive interviews were conducted, and the instrument was pretested among 3 groups of state and local public health practitioners. After each round of pretesting, the survey was streamlined and a small number of items were modified for accessibility and clarity. The pretests and the final version of the instrument were created in Qualtrics (Qualtrics, LLC, Salt Lake, Utah).

Institutional Review Board Approval and Outreach

PH WINS began development in spring 2013 and was fielded approximately a year and a half later, in fall 2014. The survey received a judgment of “exempt” from the Chesapeake institutional review board (Pro00009674) because of its focus on professional experiences and perceptions and its low risk to participants. PH WINS was fielded such that contact information was retained only to aid in nonrespondent follow-up. That is, identifiers were used only during fielding to see whether a potential respondent had completed the survey, and no identifiers are included in the final PH WINS data sets. Only the project team had access to identifiers used in fielding follow-up, and participating agencies received only summary statistics and cross-tabulations; individual records were not shared.

Several months prior to the launch of the survey, one “workforce champion” was identified in each state health department. The workforce champion was the human resources director, workforce development director, or another member of SHA staff with interest, expertise, or responsibility for workforce-related issues. The workforce champion was nominated by the SHA to serve as the point of contact for the PH WINS project, assisting in providing the staffing lists used to generate the final sample and also partnering in the agency-wide promotion and administration of the survey. However, to protect participant confidentiality and the integrity of the project, respondent information (eg, e-mail addresses) about who was invited to participate, who participated, who did not participate, or who declined to participate in the survey was not shared with the workforce champions.

Survey Fielding

The PH WINS Web-based survey was fielded from September through December 2014.

State frame

Workforce champions helped promote the survey in their respective SHAs prior to the launch. Using centrally developed material, workforce champions posted PH WINS flyers, published blurbs in their internal newsletters, distributed PH WINS FAQs, and sent launch date announcements via agency-wide e-mails. In some cases, SHA deputy directors and deputy commissioners also e-mailed announcements, urging their workforce to participate. These prelaunch exercises helped raise awareness of the survey among potential participants and reduced the possibility of survey e-mails being deleted or left unattended.

In total across all 3 PH WINS frames, approximately 54 000 invitations to participate in the Web-based survey were sent, about 25 000 of which went to central office employees. The primary launch e-mail campaign and subsequent reminder e-mail campaigns were reviewed to assess the percentage of e-mail bounces, initial response rates, unopened rates, partial completes, and refusals. Overall, about 4.1% of the e-mails were undeliverable; in 3.3% of cases, potential respondents opened the survey but did not answer any questions; 1% declined to participate; and in 7.2% of cases, potential respondents answered at least 1 question but did not complete the survey. Analysis of partial completes did not suggest systematic differences in perceptions of workplace environment or training needs; the majority of partial completes did not fill in demographic information, including whether they were permanently employed by their agency and at which level (eg, SHA central office or LHD). These 2 items were needed for weight calculation and so were used as requirements to count the response as completed.

We also monitored sporadic technical difficulties with the survey and provided technical assistance to workforce champions and survey takers by phone and e-mail. Participants contacted us with questions about privacy, technical malfunctions, and other matters. In exceptional cases, we worked directly with an SHA's information technology department to fix any potential gatekeeping issues. We monitored sample characteristics in real time, including state, region, population size, governance, permanent vs temporary/contractual status, full-time/part-time status, and central/regional office setting. The eligibility and fielding rates from real-time monitoring were used to estimate and select additional sample for states with fewer completed cases than desired among central office staff. The selected sample size was also increased to account for undeliverable e-mails, declines, and noncentral office responses in each participating noncensus SHA.
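The arithmetic behind selecting additional sample can be sketched as follows. The source does not give the formula used, so this is only one plausible way to do it: the function name and all rates below are hypothetical, and the calculation assumes that deliverability, eligibility, and completion act as independent multiplicative rates.

```python
from math import ceil


def additional_invitations(target_completes, completes_so_far,
                           deliverable_rate, eligible_rate, completion_rate):
    """Estimate how many more invitations to send so that the expected
    number of completed, eligible (central office) responses reaches the target.

    All three rates are proportions in (0, 1], estimated from fielding so far:
      deliverable_rate: share of e-mails that do not bounce
      eligible_rate:    share of respondents who are central office staff
      completion_rate:  share of delivered invitations that end in a complete
    """
    for name, rate in (("deliverable_rate", deliverable_rate),
                       ("eligible_rate", eligible_rate),
                       ("completion_rate", completion_rate)):
        if not 0 < rate <= 1:
            raise ValueError(f"{name} must be in (0, 1]")

    shortfall = max(target_completes - completes_so_far, 0)
    # Expected eligible completes produced by one additional invitation.
    expected_yield = deliverable_rate * eligible_rate * completion_rate
    return ceil(shortfall / expected_yield)


# Hypothetical state: 250 of 400 needed completes are in hand.
extra = additional_invitations(
    target_completes=400, completes_so_far=250,
    deliverable_rate=0.96, eligible_rate=0.80, completion_rate=0.50,
)
print(extra)  # 391
```

Because the rates are themselves estimates that change during fielding, a calculation of this kind would be rerun as monitoring data accumulate.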

To increase the response rate, we continued outreach and promotions while the survey was in the field. In general, reminder e-mails were sent every other week. We also repeated most of the prelaunch promotion exercises during the survey administration phase—that is, we partnered with workforce champions to campaign for their workforce participation in the survey. Phone calls were also placed to about 5700 staff members in an attempt to boost response rates—about one-third were reached directly, one-third were left a voicemail, and one-third were not in their position anymore, had inaccurate contact information, or were otherwise unreachable.

Local pilot and BCHC frames

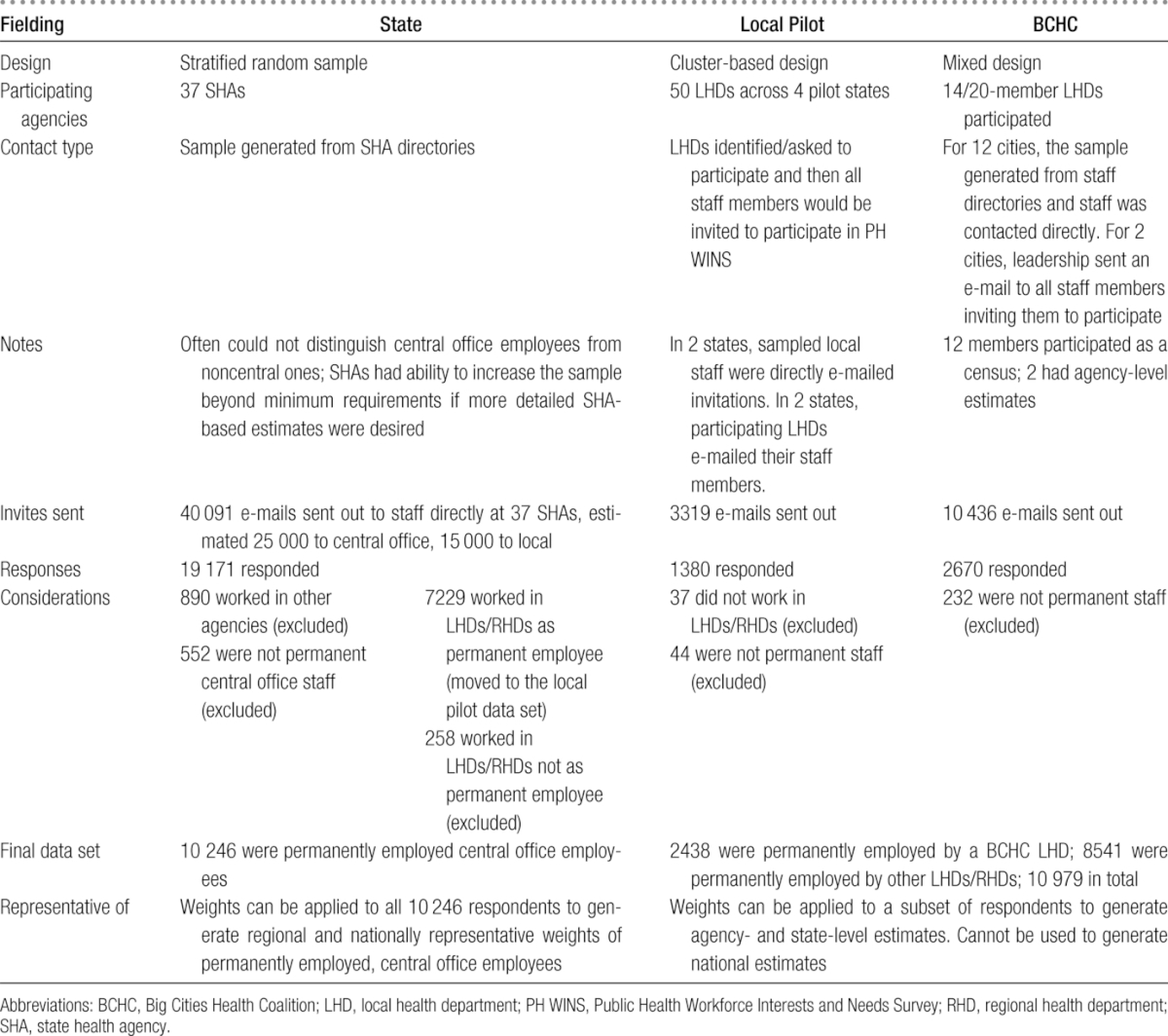

The outreach and promotional efforts for the BCHC and local pilot frames were similar to those for the state frame. However, because these frames were pilots, a mix of fielding approaches was tried to ascertain what worked best (Table).

TABLE. Overview of PH WINS Fielding and Data Set Creation.

Data Set Preparation and Weighting Approach

Data preparation for PH WINS involved edits, logic checks, creation of composite variables, and cleaning of survey responses to produce final analytic files for the national and local pilot samples and a national public use file. Data cleaning procedures included univariate and descriptive analyses to identify outliers and assess missing data and inconsistencies. When appropriate, new variables were created by collapsing multiple survey items or calculating new variables. Procedures to address issues of missing data were applied such as recoding extreme observations as missing and recoding “missing” as appropriate to account for logic skips. Sample weighting procedures were implemented both to provide sample design base weights reflecting probabilities of selection and to provide the final weights that included adjustments to account for nonresponse. The state frame yielded a nationally representative sample of permanently employed central office employees of SHAs. The local pilot data were weighted differently. Both approaches are described later.

State frame

For the state frame, to compensate for differential unit nonresponse, the sampling weights of employees with a completed survey were adjusted to account for the estimated number of employees who failed to complete a survey in each state. The nonresponse adjustment for the state frame of the PH WINS sample is a nonresponse cell adjustment of the base weights. The nonresponse cell procedure used state control totals for central office/noncentral office staff initially obtained from the 2012 Association of State and Territorial Health Officials Profile Survey, which were validated by participating states. The nonresponse cell procedure applies a proportional adjustment to the current weights of the employees who belong to the same category of the variable (ie, central office staff and noncentral office staff in each state). This approach ensures that the new weights have employee totals that match the desired control totals for central office and noncentral office staff in each state. The nonresponse-adjusted weights for the state PH WINS sample constitute the final sampling weights. Finalized regional weights were calculated by poststratifying the state-based weights described earlier to marginal national distributions of paired HHS geographic region (5 levels), governance type (4 levels as previously described), and population size served (3 levels). Regional weights are appropriate for calculating estimates for central office, permanent public health employees for the entire United States. For calculating sampling error for survey outcomes in the state frame, balanced repeated replication (BRR) variance methodology was used.29 To support BRR variance estimation for the PH WINS data, replicate weight variables required for the BRR variance methodology were produced and are included as variables in the analysis and public use files. Assessment of nonresponse bias was limited because the contact information provided for sampling did not include other information about employees, such as role, supervisory status, or demographic characteristics.
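One common way to adjust weights to several marginal distributions at once is raking (iterative proportional fitting). The source does not say which algorithm was used for the regional weights, so the sketch below is an assumption offered only to make the idea concrete. The function name, the records, and the margin totals are hypothetical, and the example uses 2 variables with 2 levels each, whereas PH WINS used region (5 levels), governance type (4 levels), and population size served (3 levels).

```python
def rake(records, weights, margins, max_iter=100, tol=1e-8):
    """Adjust weights so weighted totals match each variable's target margin.

    records: list of dicts, one per respondent, e.g. {"region": "R1", ...}
    weights: starting weights (e.g. nonresponse-adjusted weights)
    margins: {variable: {level: target weighted total}}
    """
    w = list(weights)
    for _ in range(max_iter):
        max_change = 0.0
        for var, targets in margins.items():
            # Current weighted total for each level of this variable.
            totals = {}
            for rec, wi in zip(records, w):
                totals[rec[var]] = totals.get(rec[var], 0.0) + wi
            # Scale every weight by target / current for its level.
            for i, rec in enumerate(records):
                factor = targets[rec[var]] / totals[rec[var]]
                w[i] *= factor
                max_change = max(max_change, abs(factor - 1.0))
        if max_change < tol:  # every margin already matched in this pass
            break
    return w


# Hypothetical respondents and control totals.
records = [
    {"region": "R1", "governance": "centralized"},
    {"region": "R1", "governance": "decentralized"},
    {"region": "R2", "governance": "centralized"},
    {"region": "R2", "governance": "decentralized"},
]
margins = {
    "region": {"R1": 60.0, "R2": 40.0},
    "governance": {"centralized": 30.0, "decentralized": 70.0},
}
raked = rake(records, [10.0, 10.0, 10.0, 10.0], margins)
print([round(x, 3) for x in raked])
```

After raking, the weighted counts by region and by governance type both match their targets, even though no joint region-by-governance totals were supplied.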

Local pilot and BCHC frames

Local/BCHC base weights were calculated on the basis of the type of sample design (eg, systematic sampling of employees or “probability proportional to size” sampling of LHDs) and reflect the selection probabilities. The nonresponse adjustment for the PH WINS local pilot samples is a simple poststratification cell adjustment of the base weights. The nonresponse cell procedure used state sample frame staff totals as a benchmark. The nonresponse cell procedure applies a ratio adjustment to the current base weights of the employees to inflate the number of respondents (using the base weight) to match the staff totals for each participating state/agency. These nonresponse-adjusted weights for the PH WINS local pilot sample constitute the final sampling weights.
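The ratio adjustment described above can be sketched in a few lines. This is a minimal illustration rather than the PH WINS weighting code; the function name, cell labels, base weights, and staff totals are hypothetical.

```python
from collections import defaultdict


def ratio_adjust(respondents, control_totals):
    """Inflate respondents' base weights so that, within each cell,
    the weights sum to the cell's known staff total.

    respondents: list of (cell, base_weight) pairs, one per completed survey
    control_totals: {cell: staff total from the sample frame}
    """
    # Sum of base weights among respondents in each cell.
    weighted = defaultdict(float)
    for cell, base_weight in respondents:
        weighted[cell] += base_weight

    # Adjustment factor = control total / weighted respondent total.
    return [base_weight * control_totals[cell] / weighted[cell]
            for cell, base_weight in respondents]


# Hypothetical respondents from two participating agencies.
respondents = [
    ("Agency A", 2.0), ("Agency A", 2.0), ("Agency A", 4.0),
    ("Agency B", 5.0), ("Agency B", 5.0),
]
controls = {"Agency A": 16.0, "Agency B": 30.0}
final_weights = ratio_adjust(respondents, controls)
print(final_weights)  # [4.0, 4.0, 8.0, 15.0, 15.0]
```

Within each cell the relative sizes of the base weights are preserved; only their total changes, so respondents stand in for nonrespondents from the same agency.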

Methodology Strengths and Limitations

PH WINS has a number of strengths—and limitations—tied to its first-of-its-kind status.

A heavily pretested instrument focusing on critical issues in public health

The survey instrument was developed on the basis of the recommendations of a range of experts from practice, academia, training, and national partner organizations. Furthermore, the majority of survey items were drawn from previously used instruments. Cognitive interviews and preliminary pretesting were conducted to help understand how respondents interpret questions and their ability to select a given response option.

The survey was also pretested with 3 groups that worked in a variety of positions in 20 different state and local health departments. Respondents were asked to complete the survey and respond to a series of open-ended questions to help determine its strengths and weaknesses. The results were used by the survey team to refine the directions, item wording, formatting, sequencing, and other issues that may warrant attention.

Representative and generalizable

PH WINS is the first nationally and regionally representative survey of staff working in central offices at SHAs. These data represent all regions, governance structures, and population sizes. With appropriate weighting, findings are generalizable to all permanently employed SHA central office employees in the United States. The participation of more than 10 000 state central office public health workers from diverse demographics, role classifications, program areas, and educational levels enhances generalizability.

Insights into the collection of survey data from local practitioners

A core component of PH WINS was the local pilot. The pilot was significant in size and scope with more than 10 000 responses. It allowed an examination of the efficacy of varying sampling and fielding approaches while providing data useful to participating agencies and researchers. The pilot shows higher response rates associated with direct e-mail contact with staff versus an approach in which local health officials are asked to distribute a survey link. This suggests that any future fieldings of PH WINS or comparable studies would need to use staff directories to directly contact potential participants or increase sample size requirements.

Independent and comprehensive

PH WINS offers insight into priorities within potential training and professional development needs and quality improvement efforts. It also provides a significant advance in enumerating the governmental public health workforce and its distribution and focuses on topics such as organizational and supervisory support, employee engagement and satisfaction, and impact of the ACA. More importantly, the survey was administered directly to state public health workers at all levels by an outside entity without gatekeeping by the organization's leadership. The identities and individual responses of public health workers remain confidential and will not be shared with their employers. This offers greater integrity and independence to the survey findings. It also offers pilot data on respondents from LHDs, large and small, that may offer insight into that component of the workforce.

Methodology limits

Despite participation from all geographic regions, governance types, and population sizes, the participation of the remaining 13 states in the state frame would have further strengthened PH WINS' generalizability. The state sample frame was developed on the basis of staff directories from SHAs. While largely unproblematic, some directories did not contain the most up-to-date records of the employees, did not always provide valid e-mail addresses, and did not always filter central office and noncentral office employees (the latter of which was accounted for in our complex sampling design). While these issues posed challenges to the methodology, these weaknesses were addressed by cleaning and standardizing the data sets and via sampling adjustments as mentioned earlier. By design, responses from staff working at local or regional health departments are not nationally generalizable.

Future Direction and Use of PH WINS in Workforce Development

Workforce development is a critical area of public health. Yet, there is very little prior research that comprehensively brings the interests, needs, and challenges of public health workers into focus. Because PH WINS was designed with both practitioner and researcher use in mind (while protecting respondent confidentiality), it serves as a vast reservoir and a baseline to develop and expand research in areas related to core competencies, workplace environment, and workforce preparedness to confront major initiatives such as accreditation and the implementation of the ACA. Furthermore, PH WINS data can be used in concert with other data sets to make more meaningful and conclusive policy recommendations—PH WINS can be a resource to further explore the impact of policy, governance, and organizational structures on the state of the public health workforce. State health agencies can use aggregate findings from PH WINS data to validate and improve their own surveys and develop follow-up surveys to combine information gained from micro-level insight with macro-level findings. With information gathered as part of the local pilot and BCHC frames in this fielding of PH WINS, organizations interested in future fieldings of this or similar studies should be able to draw a nationally representative sample of LHD employees in addition to the nationally representative sample of central office employees. In combination with efforts by the federal agencies to assess training needs of the federal public health workforce, PH WINS will be able to contribute to data-driven workforce development decisions at the local, state, and federal levels.

Footnotes

PH WINS was funded by the de Beaumont Foundation. The de Beaumont Foundation and the Association of State and Territorial Health Officials acknowledge Brenda Joly, Carolyn Leep, Michael Meit, the Public Health Workforce Interests and Needs Survey (PH WINS) technical expert panel, and state and local health department staff for their contributions to PH WINS.

The authors declare no conflicts of interest.

Supplemental digital content is available for this article. Direct URL citation appears in the printed text and is provided in the HTML and PDF versions of this article on the journal's Web site (http://www.JPHMP.com).

REFERENCES

- 1. Gebbie KM, Rosenstock L, Hernandez LM. Who Will Keep the Public Healthy? Educating Public Health Professionals for the 21st Century. Washington, DC: National Academies Press; 2002.

- 2. Institute of Medicine, Committee for the Study of the Future of Public Health. The Future of Public Health. Washington, DC: National Academies Press; 1988.

- 3. Reid WM, Beitsch LM, Brooks RG, Mason KP, Mescia ND, Webb SC. National Public Health Performance Standards: workforce development and agency effectiveness in Florida. J Public Health Manag Pract. 2001;7(4):67–73.

- 4. Lichtveld MY, Cioffi JP. Public health workforce development: progress, challenges, and opportunities. J Public Health Manag Pract. 2003;9(6):443–450.

- 5. Cioffi JP, Lichtveld MY, Tilson H. A research agenda for public health workforce development. J Public Health Manag Pract. 2004;10(3):186–192.

- 6. Tilson H, Gebbie KM. The public health workforce. Annu Rev Public Health. 2004;25:341–356.

- 7. Bureau of Health Professions. Public Health Workforce Study. Rockville, MD: Bureau of Health Professions; 2005.

- 8. Hilliard TM, Boulton ML. Public health workforce research in review: a 25-year retrospective. Am J Prev Med. 2012;42(5)(suppl 1):S17–S28.

- 9. Place J, Edgar M, Sever M. Assessing the Needs for Public Health Workforce Development. Public Health Training Center Annual Meeting; 2011.

- 10. Kaufman NJ, Castrucci BC, Pearsol J, et al. Thinking beyond the silos: emerging priorities in workforce development for state and local government public health agencies. J Public Health Manag Pract. 2014;20(6):557–565.

- 11. Corso LC, Wiesner PJ, Halverson PK, Brown CK. Using the essential services as a foundation for performance measurement and assessment of local public health systems. J Public Health Manag Pract. 2000;6(5):1–18.

- 12. Gebbie KM. The Public Health Work Force: Enumeration 2000. Washington, DC: US Department of Health and Human Services, Health Resources and Services Administration, Bureau of Health Professions, National Center for Health Workforce Information and Analysis; 2000.

- 13. Gebbie KM, Raziano A, Elliott S. Public health workforce enumeration. Am J Public Health. 2009;99(5):786–787.

- 14. Boulton M, Beck A. Enumeration and Characterization of the Public Health Nurse Workforce. Ann Arbor, MI: University of Michigan Center of Excellence in Public Health Workforce Studies; 2013.

- 15. Beck AJ, Boulton ML, Coronado F. Enumeration of the governmental public health workforce, 2014. Am J Prev Med. 2014;47(5)(suppl 3):S306–S313.

- 16. Public Health Workforce Development Consortium. Consortium Proceedings. Washington, DC: Government Printing Office; 1989.

- 17. Russo P, Kuehnert P. Accreditation: a lever for transformation of public health practice. J Public Health Manag Pract. 2014;20(1):145–148.

- 18. Mays GP, Scutchfield FD, Bhandari MW, Smith SA. Understanding the organization of public health delivery systems: an empirical typology. Milbank Q. 2010;88(1):81–111.

- 19. Council on Linkages Between Academia and Public Health Practice. Core Competencies for Public Health Professionals. Washington, DC: Public Health Foundation; 2010.

- 20. Honore PA. Aligning public health workforce competencies with population health improvement goals. Am J Prev Med. 2014;47(5)(suppl 3):S344–S345.

- 21. Meit M, Sellers K, Kronstadt J, et al. Governance typology: a consensus classification of state-local health department relationships. J Public Health Manag Pract. 2012;18(6):520–528.

- 22. Centers for Disease Control and Prevention. 2009-2010 National Adult Tobacco Survey. Atlanta, GA: Centers for Disease Control and Prevention; 2011.

- 23. Boulton ML, Hadler J, Beck AJ, Ferland L, Lichtveld M. Assessment of epidemiology capacity in state health departments, 2004-2009. Public Health Rep. 2011;126(1):84.

- 24. Centers for Disease Control and Prevention. CDC's Technical Assistance & Service Improvement Initiative: Summary of Assessment. Atlanta, GA: Centers for Disease Control and Prevention; 2011.

- 25. Office of Personnel Management. Federal Employee Viewpoint Survey Results. Washington, DC: Office of Personnel Management; 2014.

- 26. Public Health Foundation. Public Health Workforce Survey Instrument. Washington, DC: Public Health Foundation; 2010.

- 27. Ironson GH, Smith PC, Brannick MT, Gibson W, Paul K. Construction of a Job in General scale: a comparison of global, composite, and specific measures. J Appl Psychol. 1989;74(2):193.

- 28. Boulton ML, Beck AJ, Coronado F, et al. Public health workforce taxonomy. Am J Prev Med. 2014;47(5):S314–S323.

- 29. Kolenikov S. Resampling variance estimation for complex survey data. Stata J. 2010;10(2):165–199.