Abstract

Context

Distressing symptoms interfere with quality of life in patients with lung cancer. Algorithm-based clinical decision support (CDS) to improve evidence-based management of isolated symptoms appears promising but no reports yet address multiple symptoms.

Objectives

This study examined the feasibility of CDS for a Symptom Assessment and Management Intervention targeting common symptoms in patients with lung cancer (SAMI-L) in ambulatory oncology. The study objectives were to evaluate completion and delivery rates of the SAMI-L report and clinician adherence to the algorithm-based recommendations.

Methods

Patients completed a Web-based symptom assessment, and SAMI-L created tailored recommendations for symptom management. Completion of assessments and delivery of reports were recorded. Medical record review assessed clinician adherence to recommendations. Feasibility was defined as ≥ 75% report completion and delivery rates and ≥ 80% clinician adherence to recommendations. Descriptive statistics and generalized estimating equations were used for data analyses.

Results

Symptom assessment completion was 84% (95% CI: 81–87%). Delivery of completed reports was 90% (95% CI: 86–93%). Depression (36%), pain (30%) and fatigue (18%) occurred most frequently, followed by anxiety (11%) and dyspnea (6%). On average, overall recommendation adherence was 57% (95% CI: 52–62%) and was not dependent on the number of recommendations (P = 0.45). Adherence was higher for anxiety (66%; 95% CI: 55–77%), depression (64%; 95% CI: 56–71%), pain (62%; 95% CI: 52–72%), and dyspnea (51%; 95% CI: 38–64%) than for fatigue (38%; 95% CI: 28–47%).

Conclusion

CDS systems, such as SAMI-L, have the potential to fill a gap in promoting evidence-based care.

Keywords: Palliative care, symptom management, lung cancer, clinical decision support, clinical practice guidelines

Introduction

The majority of patients with lung cancer have multiple symptoms and high degrees of distress at presentation, and these symptoms change with the burden of disease and the cancer treatments themselves.1–5 To date, most studies have addressed treatment of single symptoms, but oncology clinicians may benefit from assistance in assessing and managing multiple symptoms, which are common in their patients.6–9 Palliative care clinicians have the expertise but either may not be present in significant numbers or are not consulted early enough in the course of a patient’s disease.10 Innovative ways are needed to integrate palliative care into oncology care.11 Patients with lung cancer are an ideal group to test new approaches to aiding clinicians in their efforts to manage multiple symptoms.

The use of computerized questionnaires to gather symptom and quality of life (SQL) information in the outpatient setting has been established. Previous studies found that these systems are associated with accurate assessment, improved communication, and decreased symptom distress;12–17 however, no change was noted in clinical management of symptoms.18 Clinical studies and review articles have identified targets for expanding the impact of SQL questionnaire use on care processes and health outcomes, including using salient assessment instruments,19,20 equipping clinicians to interpret SQL reports,19,21 and providing specific recommendations for clinical management of SQL problems.22

Clinical practice guidelines (CPGs) have been developed as tools to assist clinicians in the management of cancer-related symptoms.23–31 However, such guidelines are not applied consistently in care delivery.32 A variety of barriers exist for implementation of these guidelines including the lack of: 1) a belief that guidelines will lead to better care, 2) time, 3) a system that reports symptoms over time, and 4) access to guideline-based recommendations that are sufficiently specific to guide patient care.33–36

A few studies have examined the impact of applying CPGs for pain or depression as part of cancer care.6, 7, 37, 38 The results from these studies appear promising, but further research is needed. Our study extends the literature by examining the feasibility of an algorithm-based clinical decision support (CDS) system, a Symptom Assessment and Management Intervention targeting the most common symptoms in patients with lung cancer (SAMI-L): fatigue, pain, dyspnea, and depression/anxiety.

The use of CDS systems may help in the dissemination of and adherence to CPGs. CDS is defined as computerized programs that provide clinicians with person-specific information, intelligently filtered and presented at the appropriate time to enhance health care. A variety of tools are available to provide CDS and enhance decision making in real time: computerized alerts, reminders, condition-specific order sets, documentation templates, and clinical guidelines.39 Features of CDS that improve clinical practice include providing a) CDS as part of the workflow, b) recommendations rather than assessment alone, c) CDS at the time and location of decision making, and d) ongoing computer-based CDS.40, 41

Computer technology exists that can provide individually tailored, guideline-based recommendations at the time of each patient visit.33, 42 We reported previously on the modified ADAPTE process and nominal group technique we used to develop and approve locally adapted computable algorithms for the management of multiple symptoms in patients with lung cancer and on the pre-clinical testing of the SAMI-L system.43 The objectives of this phase of the study were to evaluate: 1) patient completion rates for the symptom assessment tool, 2) delivery rates for the tailored report, and 3) clinician adherence to guideline-based recommendations tailored to individual patients and generated by computable algorithms for the management of fatigue, pain, dyspnea, and depression/anxiety.

Methods

Study Design and Participants

The primary aim of this study was to examine the feasibility of using an algorithm-based CDS system to generate symptom management recommendations in an outpatient setting. A single-arm study design was used for this aspect of the study. The study was conducted between December 2010 and August 2012 in two settings, Dana-Farber Cancer Institute, a comprehensive cancer center (CCC), and Boston Medical Center, an urban safety net hospital, with approval by the respective Institutional Review Boards.

Eligible clinicians were thoracic oncologists and nurse practitioners working in these sites. Eligible patients were 21 years of age or older; English-speaking; with a diagnosis of Stage III or IV non-small cell lung cancer (NSCLC), recurrence of NSCLC, or any stage small cell lung cancer; and receiving chemotherapy or targeted therapy (+/− any additional modality of therapy) with clinic visits at least once per month. Research assistants (RAs) obtained written consent from interested clinicians and patients.

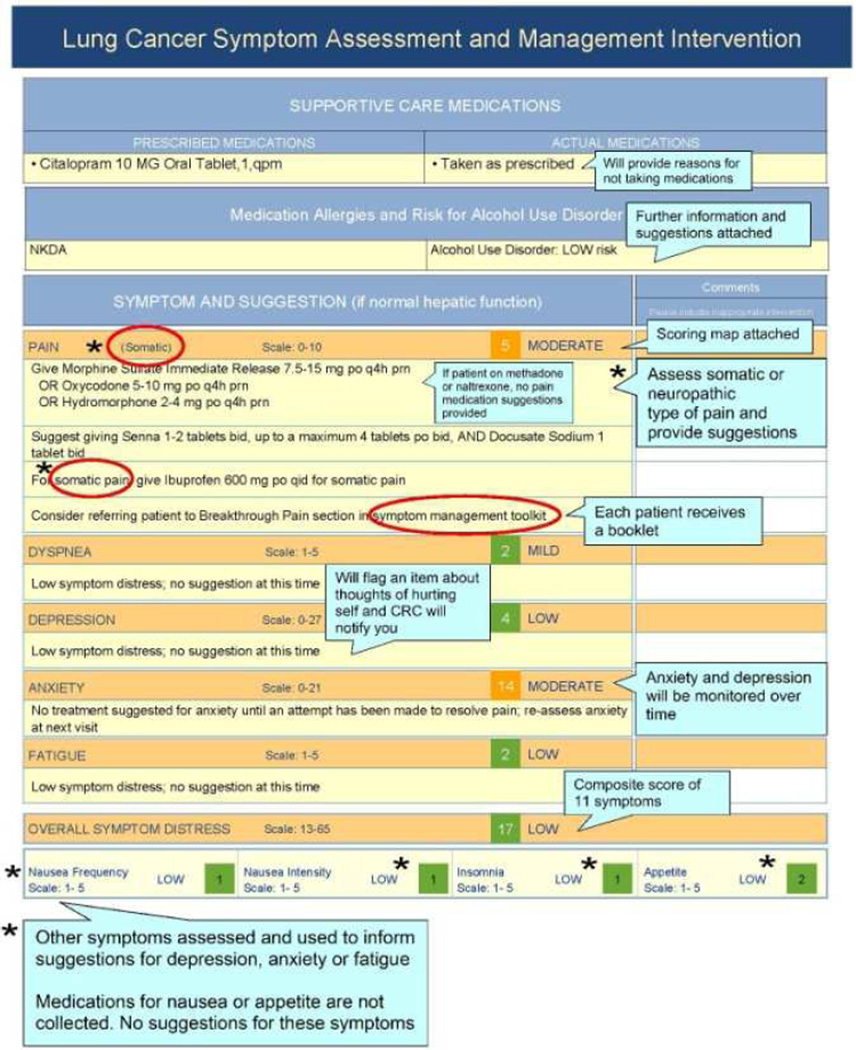

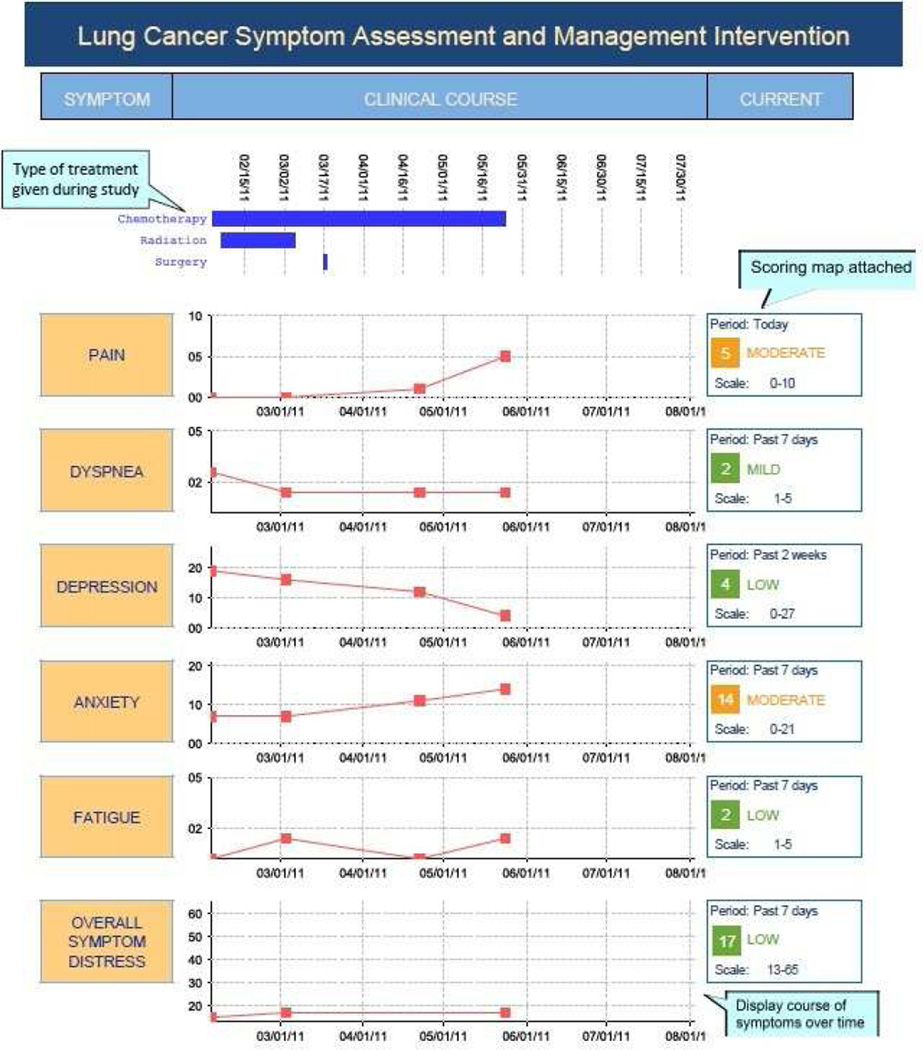

Prior to using SAMI-L, clinicians met with the principal investigator (M.E.C.) and palliative care co-investigator (J.L.A.) for orientation to the validated patient symptom assessment tool,44–48 the computable algorithms that generated care recommendations, and the clinician summary reports (described below). Fig. 1 illustrates the summary reports that were used to orient clinicians to the intervention.

Fig. 1.

Annotated sample SAMI-L clinician summary report, used to orient clinicians to intervention.

Patients and their caregivers received instruction in how to use a touch screen notebook computer to answer the computerized questionnaires. Patients and their clinicians used the CDS system at each visit over six months after patient enrollment. Patients also received a written evidence-based symptom management “toolkit”49 that included behavioral suggestions to enhance symptom management.

Description of the Clinical Decision Support Intervention

Data Collection

RAs met patients prior to each clinic visit (maximum of once per week) to conduct the assessment. Patients self-reported their symptom severity, comorbidities, and alcohol use. RAs interviewed patients on their use of prescribed and over-the-counter supportive care medications. These data, along with receipt of cancer therapy and selected laboratory values on the visit date (creatinine, platelet count, and hemoglobin level), were entered by the RAs into SAMI-L. Table 1 describes the instruments and data collected at each visit. Shortened assessment forms, collecting only the essential information needed by the algorithms, were available for patients who were feeling ill and unable to complete the regular-length questionnaire.

Table 1.

Patient-Reported and Clinical Data Collected at Each Visit

| Data category | Data collected (patient-reported data and clinical data from medical record) |
|---|---|
| Symptoms | Pain intensity (Pain Numeric Rating Scale, ref. 26), quality, and pattern (investigator-developed items) |
| | Dyspnea, fatigue, insomnia, anorexia, and overall symptom distress (Symptom Distress Scale, ref. 44) |
| | Depression (Personal Health Questionnaire-9, ref. 45) |
| | Anxiety (Hospital Anxiety and Depression Scale, ref. 46) |
| | Opioid-induced constipation (Constipation severity measure, ref. 47, and Patient Reported Outcomes version of the Common Terminology Criteria for Adverse Events, PRO-CTCAE v3, refs. 73, 74) |
| Alcohol risk and drug allergies a | Risk for hazardous alcohol use (Alcohol Use Disorders Identification Test, ref. 48) |
| | Drug allergies |
| Comorbidities a | History of peptic ulcer disease |
| Cancer therapy | Start and end dates of all regimens of chemotherapy, radiation, or targeted therapy |
| Lab values for visit date | Platelet count, serum creatinine, hemoglobin |
| Patient characteristics | Age, weight on visit date |
| Supportive care medications | Prescribed medications with dose and frequency (opioid and non-opioid pain medications, antidepressants, anxiolytics, hypnotics, psychostimulants, laxatives) |
| | Actual use of prescribed and over-the-counter supportive care medications |

a Data collected once at the beginning of the study and used at each visit.

SAMI-L Intervention

SAMI-L is a Web-based system that collects, processes and then presents the data to clinicians at the point of care. First, data are collected as described above. The de-identified patient data are transmitted outside the study settings across the Internet to a remote CDS system, SEBASTIAN (System for Evidence-Based Advice through Simultaneous Transaction with an Intelligent Agent across a Network),50 in which the symptom management algorithms were encoded in computer language. Processing the data through the algorithms yielded tailored recommendations for symptom management for each patient at each visit.

Subsequently, a graphical clinician summary report (Fig. 1) was generated at each visit. It showed the patient’s symptom severity longitudinally and at each visit for five targeted common symptoms: pain, dyspnea, depression, anxiety and fatigue; receipt of cancer treatment over the course of the entire study; supportive care medication use; alcohol use and drug allergy warnings; and tailored recommendations to enhance symptom management. Recommendations included specific medications from appropriate drug classes to introduce, or dose adjustments calculated by the system based on current use of medications, and referrals to supportive services with reasons for referral. RAs printed and delivered reports to clinicians prior to each visit and collected reports after the visit.

Measures

Feasibility of the intervention was pre-defined as ≥75% completion rate for the symptom assessment tool across all visits, ≥75% pre-visit delivery rate of completed reports to clinicians and ≥ 80% clinician adherence to the guideline-based recommendations provided through the computerized algorithms. Feasibility levels for completion and delivery rates were based on a study by Wright et al. that demonstrated a 70% completion rate for longitudinal patient-reported symptom assessment in an outpatient oncology setting.51 Feasibility levels for clinician adherence were chosen based on the voluminous patient-related adherence literature in which 80% adherence is considered the standard.52 There is scant literature and no accepted standard for clinician adherence to guideline-based care at the current time because it is recognized that variation in care is influenced by patient characteristics, patient preferences and clinician judgment.35, 53, 54
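The three pre-defined benchmarks can be written down as a simple threshold check. The sketch below is illustrative only: the helper name `meets_benchmarks` and the example rates are hypothetical, and a comparison of point estimates is not the one-sided statistical testing described under Statistical Analyses.

```python
# Feasibility benchmarks as pre-defined in the Measures section.
THRESHOLDS = {
    "completion": 0.75,  # completion rate for the symptom assessment tool
    "delivery": 0.75,    # pre-visit delivery rate of completed reports
    "adherence": 0.80,   # clinician adherence to recommendations
}


def meets_benchmarks(observed):
    """Return {measure: bool}, True when the observed rate reaches its benchmark.

    Hypothetical helper: compares point estimates only and ignores sampling error.
    """
    return {name: observed[name] >= cutoff for name, cutoff in THRESHOLDS.items()}


# Placeholder rates for illustration, not study results.
example_rates = {"completion": 0.78, "delivery": 0.74, "adherence": 0.80}
print(meets_benchmarks(example_rates))
```

A check of this kind only flags whether an observed rate reaches its cutoff; whether a rate is reliably above the benchmark still requires a formal test.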

Completion rates for the symptom assessment tool were derived from documentation at each clinic visit of patient survey completion or reasons for non-completion. Delivery rates of the summary report were derived from documentation at each clinic visit of whether the report was generated and delivered to the clinician on time (before the exam visit), or reasons it was not.

Clinician adherence was defined as following the guideline-based recommendations. Adherence was measured by medical record review and written comments provided on the report by clinicians. Each of 40 possible recommendations was coded as present or absent, and clinician responses were initially categorized into one of 16 adherence categories, which were then combined into a binary measure of complete/partial adherence or no adherence (Table 2). The coding scheme and operational definitions for each code category were developed and pilot-tested to ensure the scheme was clear and easy to implement. Three coders were trained over two months through practice coding and discussion. Coding occurred over 10 weeks, with weekly discussions. A random selection of cases (n=17, 20%) was double-coded for reliability. Three sets of coding forms were not assessed because they were inadvertently recycled prior to calculation of inter-rater reliability. In reviewing records of 101 visits by the remaining 14 patients (17 selected minus the 3 whose forms were lost), coders reached a mean of 98.9% (SD 2.5) and a median of 100% agreement per visit (range: 85.4–100%) in coding which recommendations were present. Percent agreement in coding adherence outcomes per recommendation had a mean of 80.6% (SD 26.9) and a median of 100% (range: 0–100%) per visit in 51 visits for which recommendations were made.
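Per-visit percent agreement of the kind reported above can be computed as the share of coded items on which two coders match, then summarized across visits. The following is a minimal sketch with made-up codes; the function name `percent_agreement` and the example data are assumptions for illustration, not the study's coding forms.

```python
from statistics import mean, median


def percent_agreement(coder_a, coder_b):
    """Percent of items on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical visits: 1 = recommendation coded as present, 0 = absent.
visits = [
    ([1, 0, 0, 1], [1, 0, 0, 1]),  # coders agree on all four items
    ([0, 1, 0, 0], [0, 1, 1, 0]),  # coders disagree on one of four items
]
per_visit = [percent_agreement(a, b) for a, b in visits]
print(per_visit, mean(per_visit), median(per_visit))
```

Simple percent agreement does not correct for agreement expected by chance, which is one reason it can be high when most recommendations are coded as absent.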

Table 2.

Outcomes Coded as Adherent or Non-Adherent, and Reasons for Non-Adherence

| Adherent (fully or partially) | Non-Adherent |
|---|---|
| 1. The recommendation was followed exactly | 13. The health care provider did not follow any of the above strategies to manage the symptom |
| 2. The recommended medication was given at a lower dose | Reasons for non-adherence |
| 3. An alternative or additional medication was given | 13a. The recommendation was not appropriate or was contraindicated |
| 4. An alternative or additional referral to a specialist was made | 13b. The patient’s report of the symptom to the health care provider did not match their self-report in the computerized assessment |
| 5. The recommended prescription or referral was already in place for the symptom | 13c. No reason given |
| 6. The recommended prescription was already in place for another indication | |
| 7. Another specified health care provider or supportive care service was managing the symptom | |
| 8. Cancer therapy was held or dose reduced due to severity of the symptom | |
| 9. A behavioral strategy was advised | |
| 10. The health care provider offered the recommended medication, referral, or behavioral strategy; it was refused by the patient | |
| 11. The patient was sent to the emergency department or hospitalized in response to the symptom | |
| 12. “Other” responses categorized as adherent: The recommended medication was given at a higher dose | |
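The collapse of the Table 2 outcome codes into a binary adherence measure can be sketched as a lookup. The code labels follow Table 2 (1–12 adherent; 13 and 13a–13c non-adherent); the function name `is_adherent` and the string representation of the codes are assumptions made for this example.

```python
# Outcome codes as numbered in Table 2.
ADHERENT_CODES = {str(n) for n in range(1, 13)}   # outcomes 1-12: fully or partially adherent
NON_ADHERENT_CODES = {"13", "13a", "13b", "13c"}  # outcome 13 and its recorded reasons


def is_adherent(code):
    """Map a Table 2 outcome code to the binary adherence measure."""
    code = str(code).strip().lower()
    if code in ADHERENT_CODES:
        return True
    if code in NON_ADHERENT_CODES:
        return False
    raise ValueError(f"unknown outcome code: {code!r}")


print(is_adherent(5), is_adherent("13b"))
```

Raising an error on unknown codes, rather than defaulting to non-adherent, keeps data-entry mistakes from silently lowering the adherence estimate.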

Statistical Analyses

Completion and delivery rates were analyzed using generalized estimating equations (GEE), clustering on the patient to account for possible repeated measurements for each patient. One-sided tests were performed to assess whether the proportion of completed (or delivered) reports was greater than the benchmark of 0.75.
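For intuition, a one-sided test of a proportion against the 0.75 benchmark can be sketched with a normal approximation. This is a deliberately naive illustration: it treats every visit as independent and therefore is not the GEE analysis with clustering on the patient that the study used. The function name is hypothetical, and the counts in the example are the completed and total assessments reported in Table 5.

```python
import math


def one_sided_p_greater(successes, n, benchmark=0.75):
    """Naive one-sided z-test of H0: p <= benchmark against H1: p > benchmark.

    Assumes independent observations (no clustering), unlike the study's GEE models.
    """
    p_hat = successes / n
    se = math.sqrt(benchmark * (1 - benchmark) / n)  # standard error under H0
    z = (p_hat - benchmark) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))      # upper-tail normal probability
    return z, p_value


# Completed assessments out of total, as reported in Table 5.
z, p = one_sided_p_greater(651, 774)
print(f"z = {z:.2f}, one-sided p = {p:.2g}")
```

Because repeated visits by the same patient are correlated, a test that ignores clustering will generally understate the uncertainty, which is why a clustered method such as GEE is preferable for data of this kind.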

Definitions for overall adherence were pre-determined prior to analyses. An adherence score was computed for each visit and defined as the proportion of recommendations to which the clinician fully or partially adhered. For example, if 10 recommendations were made for a visit and the clinician fully/partially adhered to eight, then the adherence score for that visit would be equal to 0.8. Adherence was analyzed with two methods to ensure consistent results regardless of the analysis method. First, the mean adherence score was estimated using a linear mixed model that accounted for the clinician and potential repeated measurements from a patient. The response variable was the adherence score, which ranged from 0 to 1. The second method fit the mean proportion of recommendations adhered to using a GEE model with a binomial probability distribution and logit link function to estimate the parameters. The response variable in the GEE method was the ratio of number of recommendations adhered to, to the total number of recommendations given in a single visit. Each method allowed for an adjustment for the total number of recommendations.
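The per-visit adherence score defined above is a simple proportion. The sketch below reproduces the worked example from the text (eight of ten recommendations adhered to); the function name `adherence_score` and the choice to return `None` for visits without recommendations are assumptions for illustration.

```python
def adherence_score(outcomes):
    """Proportion of a visit's recommendations that were fully or partially adhered to.

    `outcomes` holds one boolean per recommendation made at the visit.
    Returns None when no recommendations were made, since the score is undefined.
    """
    if not outcomes:
        return None
    return sum(outcomes) / len(outcomes)


# Worked example from the text: 10 recommendations, 8 fully or partially adhered to.
visit = [True] * 8 + [False] * 2
print(adherence_score(visit))
```

Visits with no recommendations contribute no score, so only visits with at least one recommendation enter the adherence models.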

Once it was determined that there were consistent results across analysis methods, only the GEE model was used to explore further specific symptoms of interest (pain, depression, anxiety, fatigue, dyspnea) and specific recommendations of interest within a symptom (specifically, all fatigue recommendations). All analyses were conducted using SAS v. 9.2 and R; all statistical tests were at a significance level of 0.05.

Results

Sample

Tables 3 and 4 provide characteristics of the clinician (n=14) and patient (n=88) participants receiving the SAMI-L intervention. The majority of clinicians were white, male, and practiced in the cancer center. The typical patient was 61 years old, white, and female, had NSCLC, and received combination chemotherapy.

Table 3.

Characteristics of Clinician Participants in the SAMI-L Intervention (N=14)

| Characteristics | N (%) |
|---|---|
| Clinical Site | |
| Boston Medical Center | 3 (21) |
| Dana-Farber Cancer Institute | 11 (79) |
| Role | |
| Physician | 7 (50) |
| Nurse Practitioner | 7 (50) |
| Gender | |
| Female | 6 (43) |
| Male | 8 (57) |
| Race | |
| White | 11 (79) |
| Other | 3 (21) |
| Age, yrs, median (range) | 41 (28–61) |
| Years in oncology, median (range) | 11 (4–30) |

Table 4.

Characteristics of Patient Participants in the SAMI-L Intervention (N=88)

| Characteristics | N (%) |
|---|---|
| Clinical Site | |
| Boston Medical Center | 3 (3) |
| Dana-Farber Cancer Institute | 85 (97) |
| Gender | |
| Male | 33 (38) |
| Female | 55 (62) |
| Age, yrs, median (range) | 61 (39–81) |
| Ethnicity | |
| Hispanic/Latino | 5 (6) |
| Non-Hispanic/Latino | 83 (94) |
| Race | |
| White/Caucasian | 76 (86) |
| Other | 9 (10) |
| Missing | 3 (3) |
| Marital Status | |
| Single, never married | 15 (17) |
| Married/living together | 48 (55) |
| Separated, divorced, widowed | 24 (27) |
| Missing | 1 (1) |
| Computer Use | |
| Never/Rare | 14 (16) |
| Sometimes | 10 (11) |
| Often/Very Often | 61 (69) |
| Missing | 3 (3) |
| Education | |
| ≤ HS | 22 (25) |
| > HS | 64 (73) |
| Missing | 2 (2) |
| Disease type and stage | |
| SCLC | 9 (10.2) |
| SCLC, limited stage (% of SCLC) | 1 (11.1) |
| SCLC, advanced stage (% of SCLC) | 8 (88.9) |
| NSCLC, adenocarcinoma | 50 (56.8) |
| NSCLC, favor adenocarcinoma | 4 (4.6) |
| NSCLC, squamous | 16 (18.2) |
| NSCLC, favor squamous | 0 |
| NSCLC, not otherwise specified | 7 (8.0) |
| Other (e.g., carcinoid) | 2 (2.3) |
| Stage I (including 0, IA, IB) a | 2 (2.3) |
| Stage II (including IIA, IIB) a | 2 (2.3) |
| Stage IIIA | 9 (10.2) |
| Stage IIIB | 7 (7.9) |
| Stage IV | 59 (67.0) |
| Treatment types received b | |
| Chemotherapy only | 26 (29.5) |
| Investigational therapy only | 14 (16.0) |
| Combined therapy | 48 (54.5) |

SCLC=small cell lung cancer; NSCLC=non-small cell lung cancer; HS=high school.

a Recurrent disease.

b Treatments received beginning one month prior to study entry until end of study.

Completion and Delivery Rates for SAMI-L

Eighty-eight patient participants had a total of 777 visits. As Table 5 indicates, the mean rate of patient completion of the symptom assessment tool overall was 84% (95% CI: 81–87%) with little fluctuation in the completion of the reports (82–87% in every two-month period) over the course of the study. Of 123 assessments not completed, reasons included staff missing the patient’s appointment because of schedule changes (n= 48, 39%), not enough time before the appointment (n= 27, 22%), patient refused (n=26, 21%) or was too sick (n=16, 13%), and technical problems (n=3, 2%). Only one patient on one visit requested the short-form assessment.

Table 5.

Reports Completed by Patients and Delivered to Clinicians, Clustering on Patient

| Time frame | Total N | Completed N (%) | 95% CI for Completed (%) | P-value a | Delivered N (%) | 95% CI for Delivered (%) | P-value a |
|---|---|---|---|---|---|---|---|
| Overall time frame | 774 | 651 (84) | (81, 87) | < 0.0001 | 580 (76) | (71, 80) | 0.39 |
| 0–2 months | 333 | 275 (82) | (78, 86) | 0.0001 | 243 (73) | (67, 79) | 0.76 |
| 2–4 months | 251 | 214 (85) | (80, 90) | < 0.0001 | 191 (77) | (71, 84) | 0.24 |
| 4–6 months | 190 | 162 (87) | (82, 92) | < 0.0001 | 146 (80) | (73, 86) | 0.10 |

CI = confidence interval.

a One-sided P-value for the test of whether the proportion of completed or delivered reports is greater than the predefined benchmark of 0.75.

Once patients completed the symptom assessment tool and the clinical information required by the algorithms was entered into the SAMI-L system, data could be submitted to the local server, sent through the Web service to the SEBASTIAN decision engine, and returned to the client server for printing in 0.4–2.1 (median 0.9) seconds. It took another one to two minutes for RAs to walk to the printing station, log in, and print the report. There were 580 SAMI-L reports printed and delivered. The mean rate of timely delivery of the clinician report was 76% (95% CI: 71–80%), with consistent delivery rates (73–80% in every two-month period) over the six months of the study. The most common reasons that the SAMI-L reports were not delivered were questionnaires not completed (n=123, 63%), technical problems (n=37, 19%), and no time before the appointment (n=20, 10%). Overall, almost all of the completed reports were delivered (90%; 95% CI: 86–93%) (Table 6).

Table 6.

Completed Reports Delivered to Clinicians, Clustering on Patient

| Time frame | Completed N | Delivered N (%) | 95% CI for Delivered (%) | P-value a |
|---|---|---|---|---|
| Overall time frame | 651 | 580 (90) | (86, 93) | < 0.0001 |
| 0–2 months | 275 | 243 (88) | (83, 93) | < 0.0001 |
| 2–4 months | 214 | 191 (90) | (85, 95) | < 0.0001 |
| 4–6 months | 162 | 146 (90) | (85, 96) | < 0.0001 |

CI = confidence interval.

a One-sided P-value for the test of whether the proportion of completed reports delivered to clinicians is greater than 0.75.

Clinician Adherence to Algorithm-Generated Recommendations

The median number of SAMI-L outpatient visits/patient was 7 (range, 1–14). There were 580 SAMI-L reports delivered representing 83 patients and 872 total recommendations. Of the 872 recommendations, clinicians completely or partially adhered to 494 and did not adhere to 378. Of the 580 reports, 270 (47%) included at least one recommendation and 214 (37%) included two or more recommendations. Using either the linear mixed model or GEE method, the adjustment for the total number of recommendations was not statistically significant (P=0.61 and 0.45, respectively). The intercept-only models for the average adherence score yielded similar results; 56% (95% CI: 50–63%) for the linear mixed model and 57% (95% CI: 52%–62%) for the GEE. Ninety-five percent of the time clinicians did not indicate a reason for failing to adhere to the recommendations; recommendations were perceived as not appropriate or contraindicated in 4% (n=14) of cases, and the clinician judged that the patient’s report of symptom severity during the visit did not match the electronic assessment in 1% (n=4) of cases where recommendations were made.
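The counts in this paragraph can be cross-checked with simple arithmetic. The sketch below computes raw pooled proportions from the reported counts; these are unadjusted figures and are distinct from the model-based estimates, which account for repeated measurements within patients and clinicians. Variable names are chosen for this example only.

```python
# Counts reported in the text.
adhered, not_adhered = 494, 378
total_recommendations = adhered + not_adhered  # should equal the 872 reported

reports_delivered = 580
reports_with_one_or_more = 270
reports_with_two_or_more = 214

# Raw pooled proportion of recommendations adhered to (no adjustment for clustering).
raw_adherence = adhered / total_recommendations

print(total_recommendations)
print(f"raw adherence: {raw_adherence:.1%}")
print(f"reports with >=1 recommendation: {reports_with_one_or_more / reports_delivered:.0%}")
print(f"reports with >=2 recommendations: {reports_with_two_or_more / reports_delivered:.0%}")
```

The raw pooled proportion weights every recommendation equally, whereas the adherence score averages per-visit proportions, so the two need not coincide in general.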

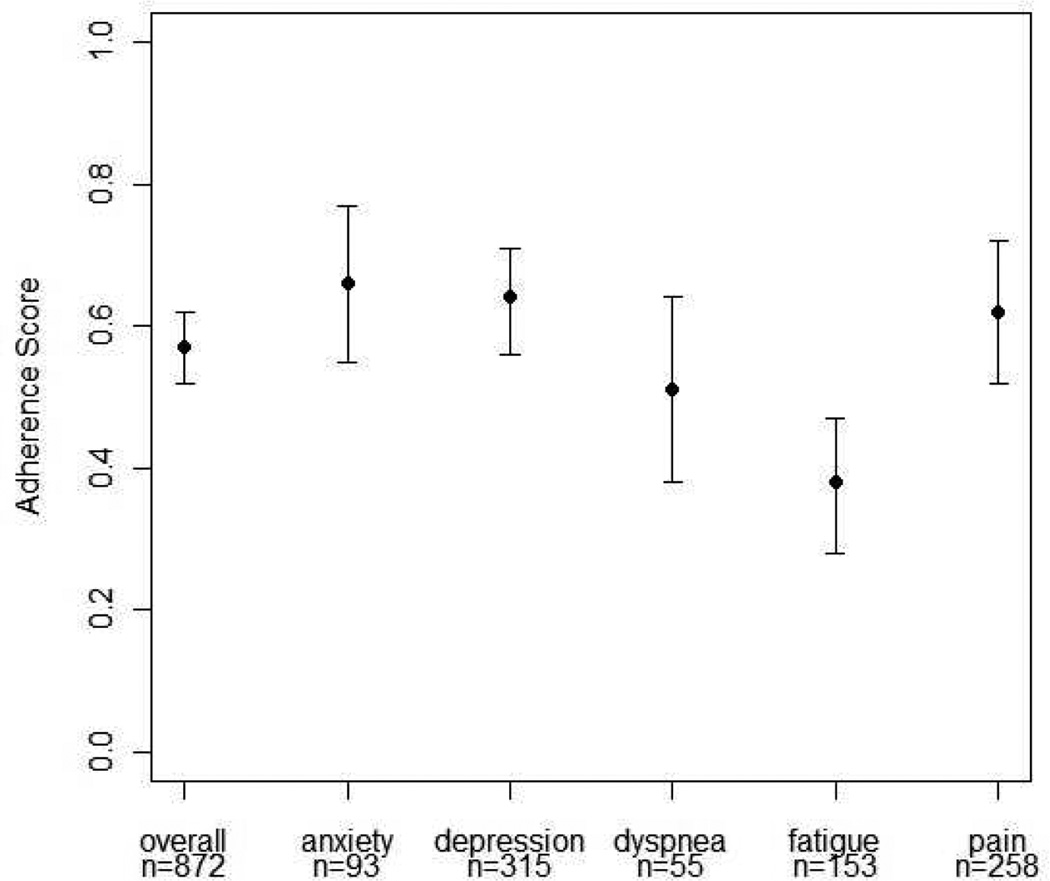

Recommendations were given at the following unadjusted rates: depression (n=315, 36%), pain (n=258, 30%) and fatigue (n=153, 18%), followed by anxiety (n= 93, 11%) and dyspnea (n=55, 6%). Adherence score estimates varied by individual symptoms as seen in Fig. 2. Adherence also varied by type of recommendation. For example, among recommendations for fatigue, adherence to recommendations for stimulant (44%, 95% CI: 27–61%) or hypnotic (44%, 95% CI: 26–62%) medications was higher than for referral to an exercise specialist/physical therapist (PT) (16%, 95% CI: 3–29%).

Fig. 2.

Estimates and 95% CIs for the average adherence to recommendations scores across all visits by symptom (GEE model).

Discussion

Our study extends the literature by providing information on the feasibility of using longitudinal assessment of symptoms and generating real-time reports that include tailored, evidence-based recommendations for common symptoms experienced by patients with lung cancer. We also provide data on adherence to those recommendations by clinicians in the ambulatory setting.

Patient completion rates for the symptom assessment tool were high, exceeding the criteria set to establish feasibility, and remained consistent over six months. These rates are consistent with or higher than those in other studies reporting longitudinal (three or more times) computerized SQL assessment in clinical trials.17, 51, 55–57 Wright et al.,51 comparing a prospective cohort trial to a study offering assessment routinely to all comers, concluded that overall compliance with assessment completion was higher when assessment was integrated into routine care. This result is relevant to our findings on the reasons for non-completion. Seventy-five (61%) of the 123 non-completed assessments resulted from appointment changes of which RAs were not aware or from patients not having enough time between arrival in clinic and the exam visit, two problems that would be largely obviated if the assessment were part of the clinical patient flow.

This is the first study to deliver CDS recommendations to enhance symptom management in “real-time” for more than one symptom. Although overall delivery rates of the summary reports were 76%, delivery rates for completed reports were 90% and remained consistent over time. The main reason that reports, including completed reports, were not delivered before the visit was lack of time, usually related to the patient arriving in clinic with insufficient time for the whole intervention process to be completed. This finding is not surprising, as the RAs worked outside the routine flow of the clinic and were trained to minimize any disruption to patient visits with their clinicians. Despite these limitations, in the majority of cases, we were able to gather complete, accurate and timely information needed for the SAMI-L algorithms and to integrate the summary reports successfully into the workflow of clinicians.

Although overall adherence to the algorithm-generated recommendations was lower than the target set for the study, we found that the overall adherence score was not dependent on the number of recommendations that were delivered. This observation is an important finding, suggesting that clinicians considered each recommendation separately and that addressing multiple symptoms does not adversely affect adherence. Thus, assessment and CDS for multiple symptoms appear to be a feasible approach for future interventions in patients who often experience more than one troublesome symptom.5, 8, 9

The rates of clinician adherence to recommendations for individual symptoms were below our targeted level of 80%. Adherence to guideline-based care often depends on a variety of factors related to the guidelines themselves, the users and the organizational environment.33,35,58 Further evaluation of the reasons for non-adherence will be informative to determine whether issues contributing to lower adherence can be addressed to improve future iterations of the algorithms. In addition, understanding whether certain patient, clinician and organizational characteristics are related to increased adherence to the recommendations would

Adherence to the recommendations for the fatigue algorithm was lower than for other symptoms. To our knowledge, only one other study62 evaluated adherence to guideline-based symptom management for fatigue among patients with cancer. Borneman and colleagues62 described usual care as compared with National Comprehensive Cancer Network (NCCN) cancer-related fatigue guidelines in 69 patients with heterogeneous types of cancer in an outpatient comprehensive cancer center. A medical chart review was conducted assessing adherence to guideline-based care among those who had scores ≥ 4 (0–10 Likert-type scale). Percent adherence was assessed for individual NCCN cancer-related fatigue recommendations, some of which were similar to the recommendations provided to clinicians in our study. Our post-hoc analysis of adherence to fatigue recommendations revealed a higher rate of adherence for specific interventions: referral to exercise/PT (0% in the Borneman et al. study62 vs. 14% in our study) and use of pharmacotherapy to treat fatigue (3% in the Borneman et al. study62 vs. 36% in our study). It is possible clinicians were unfamiliar with the exercise/PT referral service.

Further study assessing clinician adherence to guideline-based care for various symptoms is warranted using more rigorous methods. It is important to note that as advances are made in integrating guidelines into clinical practice, it is imperative to standardize measurement for adherence to guideline-based care so that findings can be compared across studies.63, 64

Limitations

There were several limitations to our study. First, we assessed feasibility of only a subset of factors relevant to using real-time CDS for guideline-based symptom management, namely the willingness and ability of patients and clinicians to use the system at each clinic visit. This approach was necessary because of the complexity of implementing this novel approach in the clinical setting. We relied on RAs, whereas a similar role would be taken by clinic assistants or clinicians if the intervention were routinely implemented. Therefore, results cannot be generalized outside a research setting to clinical practice. Similarly, integration of the SAMI-L system into the electronic medical record was not possible for this phase of the study because the main purpose was to establish feasibility for implementing the intervention in real time. Second, the overwhelming majority of participants were recruited, and their data collected, in a single National Cancer Institute-designated comprehensive cancer center, which has a different patient population, clinical volume, and supportive care resource structure than other cancer centers or many community cancer hospitals. Although we aimed to enroll equal numbers of participants at a community hospital, we were limited by its clinical volume and the trial timeline. Further study would be required to determine if findings would be comparable outside the setting of this study. Third, we did not require clinicians to specify reasons for non-adherence to clinical management suggestions, because participants in pre-clinical testing stated this would be too burdensome. As a consequence, we have limited data on why specific recommendations were not followed.

Conclusion

Innovative ways of integrating evidence-based palliative care into the front-line care of cancer patients are urgently needed.65 Computerized point-of-care palliative care recommendations might be an important component of a broad approach aimed at overcoming barriers at the levels of patient, clinician and healthcare system (e.g., as a part of patient and health professional education and clinical audits66). Voluntary, practice-based, quality improvement programs, using performance measurement and benchmarking among oncology practices across the U.S., are underway67–70 and palliative care-focused quality improvement models also have been proposed.71, 72 The use of CDS systems, such as SAMI-L, in combination with these initiatives has the potential to fill an important gap in promoting evidence-based care. Further research is needed to assess the impact of the SAMI-L system on improving such clinical outcomes as decreased symptom severity and decreased utilization of services, for example, emergency room visits.

Acknowledgments

This study was supported by National Cancer Institute grant R01 CA125256 (PI: Mary E. Cooley). The R01 CA125256 grant was prepared as part of a Mentored Career Development Award (1 K07 CA92696; M.E. Cooley), with Karen M. Emmons, PhD, and Bruce E. Johnson, MD, as mentors.

Disclosures

The authors declare no conflicts of interest.

References

- 1. Cooley ME, Short TH, Moriarty HJ. Symptom prevalence, distress, and change over time in adults receiving treatment for lung cancer. Psychooncology. 2003;12:694–708. doi: 10.1002/pon.694.

- 2. Hopwood P, Stephens RJ. Depression in patients with lung cancer: prevalence and risk factors derived from quality-of-life data. J Clin Oncol. 2000;18:893–903. doi: 10.1200/JCO.2000.18.4.893.

- 3. Wang XS, Fairclough DL, Liao Z, et al. Longitudinal study of the relationship between chemoradiation therapy for non-small-cell lung cancer and patient symptoms. J Clin Oncol. 2006;24:4485–4491. doi: 10.1200/JCO.2006.07.1126.

- 4. Boyes AW, Girgis A, D'Este CA, et al. Prevalence and predictors of the short-term trajectory of anxiety and depression in the first year after a cancer diagnosis: a population-based longitudinal study. J Clin Oncol. 2013;31:2724–2729. doi: 10.1200/JCO.2012.44.7540.

- 5. Koczywas M, Cristea M, Thomas J, et al. Interdisciplinary palliative care intervention in metastatic non-small-cell lung cancer. Clin Lung Cancer. 2013;14:736–744. doi: 10.1016/j.cllc.2013.06.008.

- 6. Cleeland CS, Portenoy RK, Rue M, et al. Does an oral analgesic protocol improve pain control for patients with cancer? An intergroup study coordinated by the Eastern Cooperative Oncology Group. Ann Oncol. 2005;16:972–980. doi: 10.1093/annonc/mdi191.

- 7. Passik SD, Kirsh KL, Theobald D, et al. Use of a depression screening tool and a fluoxetine-based algorithm to improve the recognition and treatment of depression in cancer patients. A demonstration project. J Pain Symptom Manage. 2002;24:318–327. doi: 10.1016/s0885-3924(02)00493-1.

- 8. Cooley ME. Symptoms in adults with lung cancer. A systematic research review. J Pain Symptom Manage. 2000;19:137–153. doi: 10.1016/s0885-3924(99)00150-5.

- 9. Cleeland CS. Symptom burden: multiple symptoms and their impact as patient-reported outcomes. J Natl Cancer Inst Monogr. 2007;37:16–21. doi: 10.1093/jncimonographs/lgm005.

- 10. Abrahm JL. Integrating palliative care into comprehensive cancer care. J Natl Compr Canc Netw. 2012;10:1192–1198. doi: 10.6004/jnccn.2012.0126.

- 11. Von Roenn JH, Voltz R, Serrie A. Barriers and approaches to the successful integration of palliative care and oncology practice. J Natl Compr Canc Netw. 2013;11(Suppl 1):S11–S16. doi: 10.6004/jnccn.2013.0209.

- 12. Bennett AV, Jensen RE, Basch E. Electronic patient-reported outcome systems in oncology clinical practice. CA Cancer J Clin. 2012;62:337–347. doi: 10.3322/caac.21150.

- 13. Berry DL, Blumenstein BA, Halpenny B, et al. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29:1029–1035. doi: 10.1200/JCO.2010.30.3909.

- 14. Sarna L. Effectiveness of structured nursing assessment of symptom distress in advanced lung cancer. Oncol Nurs Forum. 1998;25:1041–1048.

- 15. Cleeland CS, Wang XS, Shi Q, et al. Automated symptom alerts reduce postoperative symptom severity after cancer surgery: a randomized controlled clinical trial. J Clin Oncol. 2011;29:994–1000. doi: 10.1200/JCO.2010.29.8315.

- 16. Ruland CM, Andersen T, Jeneson A, et al. Effects of an internet support system to assist cancer patients in reducing symptom distress: a randomized controlled trial. Cancer Nurs. 2013;36:6–17. doi: 10.1097/NCC.0b013e31824d90d4.

- 17. Berry DL, Hong F, Halpenny B, et al. Electronic self-report assessment for cancer and self-care support: results of a multicenter randomized trial. J Clin Oncol. 2014;32:199–205. doi: 10.1200/JCO.2013.48.6662.

- 18. Griffin JP, Koch KA, Nelson JE, Cooley ME. Palliative care consultation, quality-of-life measurements, and bereavement for end-of-life care in patients with lung cancer: ACCP evidence-based clinical practice guidelines (2nd ed). Chest. 2007;132(3 Suppl):404S–422S. doi: 10.1378/chest.07-1392.

- 19. Luckett T, Butow PN, King MT. Improving patient outcomes through the routine use of patient-reported data in cancer clinics: future directions. Psychooncology. 2009;18:1129–1138. doi: 10.1002/pon.1545.

- 20. Marshall S, Haywood K, Fitzpatrick R. Impact of patient-reported outcome measures on routine practice: a structured review. J Eval Clin Pract. 2006;12:559–568. doi: 10.1111/j.1365-2753.2006.00650.x.

- 21. Frost MH, Bonomi AE, Cappelleri JC, et al. Applying quality-of-life data formally and systematically into clinical practice. Mayo Clin Proc. 2007;82:1214–1228. doi: 10.4065/82.10.1214.

- 22. Rosenbloom SK, Victorson DE, Hahn EA, Peterman AH, Cella D. Assessment is not enough: a randomized controlled trial of the effects of HRQL assessment on quality of life and satisfaction in oncology clinical practice. Psychooncology. 2007;16:1069–1079. doi: 10.1002/pon.1184.

- 23. Mock V, Atkinson A, Barsevick A, et al. NCCN practice guidelines for cancer-related fatigue. Oncology (Williston Park). 2000;14:151–161.

- 24. Benedetti C, Brock C, Cleeland C, et al. NCCN practice guidelines for cancer pain. Oncology (Williston Park). 2000;14:135–150.

- 25. Sheldon LK, Swanson S, Dolce A, Marsh K, Summers J. Putting evidence into practice: evidence-based interventions for anxiety. Clin J Oncol Nurs. 2008;12:789–797. doi: 10.1188/08.CJON.789-797.

- 26. Gordon DB, Dahl JL, Miaskowski C, et al. American Pain Society recommendations for improving the quality of acute and cancer pain management: American Pain Society Quality of Care Task Force. Arch Intern Med. 2005;165:1574–1580. doi: 10.1001/archinte.165.14.1574.

- 27. Dy SM, Lorenz KA, Naeim A, et al. Evidence-based recommendations for cancer fatigue, anorexia, depression, and dyspnea. J Clin Oncol. 2008;26:3886–3895. doi: 10.1200/JCO.2007.15.9525.

- 28. Del Fabbro E, Dalal S, Bruera E. Symptom control in palliative care--Part III: dyspnea and delirium. J Palliat Med. 2006;9:422–436. doi: 10.1089/jpm.2006.9.422.

- 29. Caraceni A, Hanks G, Kaasa S, et al. Use of opioid analgesics in the treatment of cancer pain: evidence-based recommendations from the EAPC. Lancet Oncol. 2012;13:e58–e68. doi: 10.1016/S1470-2045(12)70040-2.

- 30. National Collaborating Centre for Cancer. National Institute for Health and Clinical Excellence: guidance, in opioids in palliative care: safe and effective prescribing of strong opioids for pain in palliative care of adults. Cardiff, UK: National Collaborating Centre for Cancer; 2012.

- 31. Cancer Council Australia, Australian Adult Cancer Pain Management Working Group. Cancer pain management in adults. 2014. Available from: http://wiki.cancer.org.au/australiawiki/index.php?oldid=72536. Accessed March 19, 2014.

- 32. Borneman T, Piper BF, Sun VC, et al. Implementing the Fatigue Guidelines at one NCCN member institution: process and outcomes. J Natl Compr Canc Netw. 2007;5:1092–1101. doi: 10.6004/jnccn.2007.0090.

- 33. Latoszek-Berendsen A, Tange H, van den Herik HJ, Hasman A. From clinical practice guidelines to computer-interpretable guidelines. A literature overview. Methods Inf Med. 2010;49:550–570. doi: 10.3414/ME10-01-0056.

- 34. Cabana MD, Rand CS, Powe NR, et al. Why don't physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

- 35. Kenefick H, Lee J, Fleishman V. Improving physician adherence to clinical practice guidelines: barriers and strategies for change. Cambridge, MA: New England Healthcare Institute; 2008.

- 36. Somerfield MR, Einhaus K, Hagerty KL, et al. American Society of Clinical Oncology clinical practice guidelines: opportunities and challenges. J Clin Oncol. 2008;26:4022–4026. doi: 10.1200/JCO.2008.17.7139.

- 37. Bertsche T, Askoxylakis V, Habl G, et al. Multidisciplinary pain management based on a computerized clinical decision support system in cancer pain patients. Pain. 2009;147:20–28. doi: 10.1016/j.pain.2009.07.009.

- 38. Du Pen SL, Du Pen AR, Polissar N, et al. Implementing guidelines for cancer pain management: results of a randomized controlled clinical trial. J Clin Oncol. 1999;17:361–370. doi: 10.1200/JCO.1999.17.1.361.

- 39. Downing GJ, Boyle SN, Brinner KM, Osheroff JA. Information management to enable personalized medicine: stakeholder roles in building clinical decision support. BMC Med Inform Decis Mak. 2009;9:44. doi: 10.1186/1472-6947-9-44.

- 40. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 41. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

- 42. Lobach DF, Hammond WE. Computerized decision support based on a clinical practice guideline improves compliance with care standards. Am J Med. 1997;102:89–98. doi: 10.1016/s0002-9343(96)00382-8.

- 43. Cooley ME, Lobach DF, Johns E, et al. Creating computable algorithms for symptom management in an outpatient thoracic oncology setting. J Pain Symptom Manage. 2013;46:911–924.e1. doi: 10.1016/j.jpainsymman.2013.01.016.

- 44. McCorkle R, Cooley ME, Shea JA. A user's manual for the Symptom Distress Scale. Philadelphia: University of Pennsylvania School of Nursing; 1998.

- 45. Fann JR, Berry DL, Wolpin S, et al. Depression screening using the Patient Health Questionnaire-9 administered on a touch screen computer. Psychooncology. 2009;18:14–22. doi: 10.1002/pon.1368.

- 46. Zigmond AS, Snaith RP. The Hospital Anxiety and Depression Scale. Acta Psychiatr Scand. 1983;67:361–370. doi: 10.1111/j.1600-0447.1983.tb09716.x.

- 47. Varma MG, Wang JY, Berian JR, et al. The constipation severity instrument: a validated measure. Dis Colon Rectum. 2008;51:162–172. doi: 10.1007/s10350-007-9140-0.

- 48. Bradley KA, Bush KR, McDonell MB, Malone T, Fihn SD. Screening for problem drinking: comparison of CAGE and AUDIT. J Gen Intern Med. 1998;13:379–388. doi: 10.1046/j.1525-1497.1998.00118.x.

- 49. Given C, Given B, Rahbar M, et al. Effect of a cognitive behavioral intervention on reducing symptom severity during chemotherapy. J Clin Oncol. 2004;22:507–516. doi: 10.1200/JCO.2004.01.241.

- 50. Kawamoto K, Lobach DF. Design, implementation, use, and preliminary evaluation of SEBASTIAN, a standards-based Web service for clinical decision support. AMIA Annu Symp Proc. 2005:380–384.

- 51. Wright EP, Selby PJ, Crawford M, et al. Feasibility and compliance of automated measurement of quality of life in oncology practice. J Clin Oncol. 2003;21:374–382. doi: 10.1200/JCO.2003.11.044.

- 52. Dunbar-Jacob J, Mortimer-Stephens MK. Treatment adherence in chronic disease. J Clin Epidemiol. 2001;54(Suppl 1):S57–S60. doi: 10.1016/s0895-4356(01)00457-7.

- 53. McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348:2635–2645. doi: 10.1056/NEJMsa022615.

- 54. Dilts DM. Practice variation: the Achilles' heel in quality cancer care. J Clin Oncol. 2005;23:5881–5882. doi: 10.1200/JCO.2005.05.034.

- 55. Abernethy AP, Herndon JE, 2nd, Wheeler JL, et al. Feasibility and acceptability to patients of a longitudinal system for evaluating cancer-related symptoms and quality of life: pilot study of an e/Tablet data-collection system in academic oncology. J Pain Symptom Manage. 2009;37:1027–1038. doi: 10.1016/j.jpainsymman.2008.07.011.

- 56. Detmar SB, Muller MJ, Schornagel JH, Wever LD, Aaronson NK. Health-related quality-of-life assessments and patient-physician communication: a randomized controlled trial. JAMA. 2002;288:3027–3034. doi: 10.1001/jama.288.23.3027.

- 57. Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–724. doi: 10.1200/JCO.2004.06.078.

- 58. Trivedi MH, Kern JK, Marcee A, et al. Development and implementation of computerized clinical guidelines: barriers and solutions. Methods Inf Med. 2002;41:435–442.

- 59. Smolders M, Laurant M, Verhaak P, et al. Which physician and practice characteristics are associated with adherence to evidence-based guidelines for depressive and anxiety disorders? Med Care. 2010;48:240–248. doi: 10.1097/MLR.0b013e3181ca27f6.

- 60. Fantini MP, Compagni A, Rucci P, Mimmi S, Longo F. General practitioners' adherence to evidence-based guidelines: a multilevel analysis. Health Care Manage Rev. 2012;37:67–76. doi: 10.1097/HMR.0b013e31822241cf.

- 61. Green CJ, Fortin P, Maclure M, Macgregor A, Robinson S. Information system support as a critical success factor for chronic disease management: necessary but not sufficient. Int J Med Inform. 2006;75:818–828. doi: 10.1016/j.ijmedinf.2006.05.042.

- 62. Hopwood P, Stephen R. Symptoms at presentation for treatment in patients with lung cancer: implications for the evaluation of palliative treatment. Brit J Cancer. 1995;71:633–636. doi: 10.1038/bjc.1995.124.

- 63. Dykes PC. Practice guidelines and measurement: state-of-the-science. Nurs Outlook. 2003;51:65–69. doi: 10.1016/s0029-6554(02)05459-3.

- 64. van de Klundert J, Gorissen P, Zeemering S. Measuring clinical pathway adherence. J Biomed Inform. 2010;43:861–872. doi: 10.1016/j.jbi.2010.08.002.

- 65. Riley WT, Glasgow RE, Etheredge L, Abernethy AP. Rapid, responsive, relevant (R3) research: a call for a rapid learning health research enterprise. Clin Transl Med. 2013;2:10. doi: 10.1186/2001-1326-2-10.

- 66. Brink-Huis A, van Achterberg T, Schoonhoven L. Pain management: a review of organisation models with integrated processes for the management of pain in adult cancer patients. J Clin Nurs. 2008;17:1986–2000. doi: 10.1111/j.1365-2702.2007.02228.x.

- 67. McNiff KK, Neuss MN, Jacobson JO, et al. Measuring supportive care in medical oncology practice: lessons learned from the quality oncology practice initiative. J Clin Oncol. 2008;26:3832–3837. doi: 10.1200/JCO.2008.16.8674.

- 68. Desch CE, McNiff KK, Schneider EC, et al. American Society of Clinical Oncology/National Comprehensive Cancer Network quality measures. J Clin Oncol. 2008;26:3631–3637. doi: 10.1200/JCO.2008.16.5068.

- 69. Jacobson JO, Neuss MN, McNiff KK, et al. Improvement in oncology practice performance through voluntary participation in the Quality Oncology Practice Initiative. J Clin Oncol. 2008;26:1893–1898. doi: 10.1200/JCO.2007.14.2992.

- 70. Mallory GA. Professional nursing societies and evidence-based practice: strategies to cross the quality chasm. Nurs Outlook. 2010;58:279–286. doi: 10.1016/j.outlook.2010.06.005.

- 71. Campion FX, Larson LR, Kadlubek PJ, Earle CC, Neuss MN. Advancing performance measurement in oncology: quality oncology practice initiative participation and quality outcomes. J Oncol Pract. 2011;7(3 Suppl):31s–35s. doi: 10.1200/JOP.2011.000313.

- 72. De Roo ML, Leemans K, Claessen SJ, et al. Quality indicators for palliative care: update of a systematic review. J Pain Symptom Manage. 2013;46:556–572. doi: 10.1016/j.jpainsymman.2012.09.013.

- 73. Bruner DW, Hanisch LJ, Reeve BB, et al. Stakeholder perspectives on implementing the National Cancer Institute's patient-reported outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Transl Behav Med. 2011;1:110–122. doi: 10.1007/s13142-011-0025-3.

- 74. Basch E, Iasonos A, McDonough T, et al. Patient versus clinician symptom reporting using the National Cancer Institute Common Terminology Criteria for Adverse Events: results of a questionnaire-based study. Lancet Oncol. 2006;7:903–909. doi: 10.1016/S1470-2045(06)70910-X.