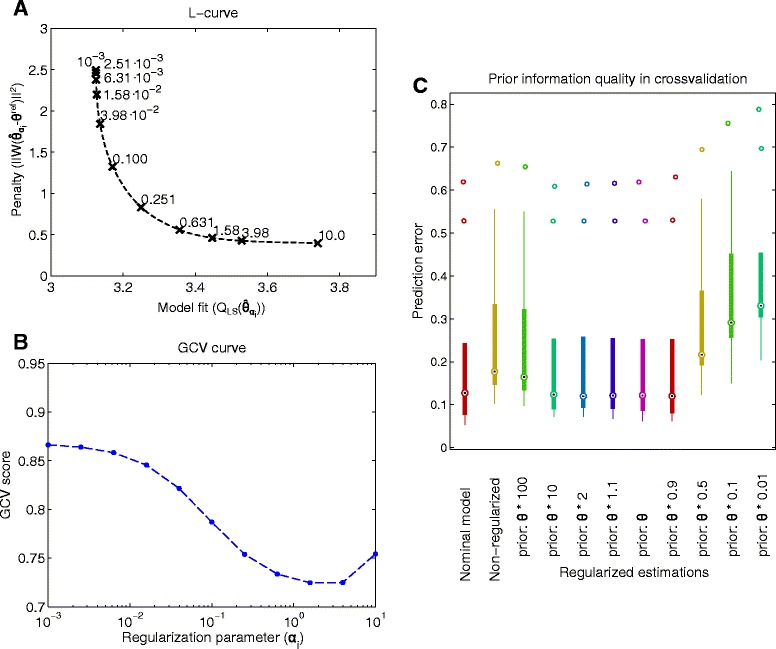

Fig. 5. Tuning the regularization method for the BBG case study. Figure a shows the trade-off between the two terms of the regularized objective function, i.e., the model fit and the regularization penalty, for a set of regularization parameters (values shown next to the symbols). A larger regularization parameter results in a worse fit to the calibration data, whereas a smaller regularization parameter results in a larger penalty. Figure b compares the candidates based on their generalized cross-validation (GCV) scores. A larger score indicates a worse model prediction for the cross-validation data; the curve has its minimum at 1.58. Figure c shows the normalized root mean square prediction error of the calibrated model for 10 sets of cross-validation data, for regularizations that use prior information (initial guesses of the parameters) of different quality. For a wide range of priors (initial guesses based on the reference parameter vector), the regularized estimation gives a good cross-validation error. Small priors lead to worse predictions.
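As an illustration of the quantities in Figures a to c, the sketch below assumes a *linear* Tikhonov problem, minimizing ||y − Aθ||² + λ||θ − θ0||², where θ0 is the prior (initial guess). The actual BBG model and estimator are not reproduced here. The matrix `A`, the synthetic data, and the helpers `gcv_score`, `fit_and_penalty` and `nrmse` are hypothetical. Normalizing the RMSE by the range of the observations is one common convention and is also an assumption. The sketch computes the fit/penalty pairs (Figure a), the GCV score V(λ) = n‖(I − H)(y − Aθ0)‖² / tr(I − H)² with H = A(AᵀA + λI)⁻¹Aᵀ (Figure b), and an NRMSE (Figure c).

```python
import numpy as np

def fit_and_penalty(A, y, theta0, lam):
    """Hypothetical linear Tikhonov fit with prior theta0; returns (misfit, penalty)."""
    p = A.shape[1]
    # theta = theta0 + (A^T A + lam I)^{-1} A^T (y - A theta0)
    theta = theta0 + np.linalg.solve(A.T @ A + lam * np.eye(p), A.T @ (y - A @ theta0))
    misfit = float(np.sum((y - A @ theta) ** 2))      # model-fit term
    penalty = float(np.sum((theta - theta0) ** 2))    # regularization term
    return misfit, penalty

def gcv_score(A, y, theta0, lam):
    """Generalized cross-validation score for the same (assumed linear) problem."""
    n, p = A.shape
    # Influence ("hat") matrix H = A (A^T A + lam I)^{-1} A^T
    H = A @ np.linalg.solve(A.T @ A + lam * np.eye(p), A.T)
    # Residual of the regularized fit: (I - H)(y - A theta0)
    r = (np.eye(n) - H) @ (y - A @ theta0)
    return float(n * (r @ r) / (n - np.trace(H)) ** 2)

def nrmse(y_obs, y_pred):
    """RMSE normalized by the range of the observations (assumed convention)."""
    rmse = np.sqrt(np.mean((y_obs - y_pred) ** 2))
    return float(rmse / (np.max(y_obs) - np.min(y_obs)))

# Synthetic example: a reference parameter vector and noisy data.
rng = np.random.default_rng(0)
A = rng.normal(size=(40, 5))
theta_ref = np.array([1.0, -2.0, 0.5, 3.0, 0.0])
y = A @ theta_ref + 0.5 * rng.normal(size=40)
theta0 = np.zeros(5)                       # a deliberately poor prior

lams = np.logspace(-2, 3, 11)              # candidate regularization parameters
pairs = [fit_and_penalty(A, y, theta0, lam) for lam in lams]   # Figure a
scores = [gcv_score(A, y, theta0, lam) for lam in lams]        # Figure b
best = lams[int(np.argmin(scores))]        # GCV choice of lambda
```

Because the penalty uses the identity matrix, the misfit grows and the penalty shrinks monotonically as λ increases. This is the trade-off traced in Figure a. GCV then picks the λ that balances the two terms without holding out data explicitly.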