Abstract

Gaze direction, a cue of both social and spatial attention, is known to modulate early neural responses to faces (e.g. the N170). However, findings in the literature have been inconsistent, likely reflecting differences in stimulus characteristics and task requirements. Here, we investigated the effect of task on neural responses to dynamic gaze changes: transitions away from and toward the observer (resulting or not in eye contact). Subjects performed, in counterbalanced order, social (away from/toward them) and non-social (left/right) judgment tasks on these stimuli. Overall, in the non-social task, the N170 was larger to gaze aversion than to gaze motion toward the observer. In the social task, however, this difference was no longer present in the right hemisphere, likely reflecting an enhanced N170 to gaze motion toward the observer. Our behavioral and event-related potential data indicate that performing social judgments enhances the saliency of gaze motion toward the observer, even when this motion does not result in gaze contact. These data and those of previous studies suggest two modes of processing visual information: a ‘default mode’ that may focus on spatial information, and a ‘socially aware mode’ that might be activated when subjects are required to make social judgments. The exact mechanism that allows switching from one mode to the other remains to be clarified.

Keywords: direct gaze, averted gaze, N170, task modulation, social and non-social context

Introduction

As social primates, we continually monitor the behaviors of others so that we can respond appropriately in social interactions. Our ability to do so depends critically on decoding our visual environment, including the important information carried by the face, the eyes and gaze changes. An individual’s gaze direction transmits a wealth of information, not only about their focus of spatial attention but also about their intention to approach or withdraw, thereby conveying both visuospatial and social information to the observer. In this respect, it is important to note crucial differences between direct and averted gaze. Direct gaze mainly signals that the observer is the likely recipient of a directed behavior, and indicates the intention to start a communicative interaction; thus, direct gaze mainly conveys social information to the observer (Senju and Johnson, 2009). By contrast, averted gaze transmits both social and spatial information to the observer. On the one hand, gaze cueing experiments indicate that averted gaze is a powerful stimulus for shifting the observer’s focus of visuospatial attention, although the brain appears to treat eye and arrow cues differently: lesions to the right superior temporal sulcus or the amygdala disrupted gaze cueing but not arrow cueing (Akiyama et al., 2006, 2007). On the other hand, unlike arrows, averted gaze also conveys a range of social meanings, including shyness, dishonesty, the intentionality of the gazer and their emotional state (Adams and Kleck, 2005; Fox, 2005; Calder et al., 2007). Consequently, modulations of brain activity by gaze direction have been accounted for by changes in social attention (Puce and Schroeder, 2010; Caruana et al., 2014) and/or visuospatial attention (Grossmann et al., 2007; Hadjikhani et al., 2008; Straube et al., 2010).
This suggests that gaze processing may be sensitive to task-based manipulations of participants’ attention toward either a social or a spatial dimension. Consistent with this, the task performed by participants is increasingly recognized as an important factor in the processing of social stimuli such as gaze or facial expressions (Graham and Labar, 2012).

Not surprisingly, it is believed that the human brain possesses specialized mechanisms for processing gaze and other important information conveyed by the eyes (Langton et al., 2000; Itier and Batty, 2009). Evidence for specialized processes dedicated to gaze perception comes from functional magnetic resonance imaging (fMRI) and event-related potential (ERP) studies (Puce et al., 1998, 2000; Wicker et al., 1998; George et al., 2001). Notably, the face-sensitive N170 (Bentin et al., 1996) is also sensitive to static eyes, typically being larger and later for eyes shown in isolation (e.g. Bentin et al., 1996; Itier et al., 2006; Nemrodov and Itier, 2011). Furthermore, the N170 response to eyes matures more rapidly than that to faces (Taylor et al., 2001a), leading researchers to describe the N170 as a potential early marker of eye gaze processing (Taylor et al., 2001a,b; Itier et al., 2006; Nemrodov and Itier, 2011). Interestingly, while early brain activity in 4-month-old infants is greater to gaze contact than to averted gaze (Farroni et al., 2002), in adults the modulation of the N170 by gaze direction seems to vary as a function of task demands and stimuli (Puce et al., 1998; Conty et al., 2007; Ponkanen et al., 2011). Indeed, studies that have measured N170 modulations by gaze direction in adults have reported inconsistent results (Itier and Batty, 2009). Some studies reported a larger N/M170 to averted gaze (Puce et al., 2000; Watanabe et al., 2002; Itier et al., 2007a; Caruana et al., 2014), some to direct gaze (Conty et al., 2007; Ponkanen et al., 2011), whereas others reported no modulation of the N170 by gaze direction (Taylor et al., 2001b; Schweinberger et al., 2007; Brefczynski-Lewis et al., 2011; Myllyneva and Hietanen, 2015). Inconsistent results have also been reported in fMRI studies of gaze perception (Calder et al., 2007; Nummenmaa and Calder, 2009).
Discrepancies between studies have been attributed mainly to task and stimulus factors (Itier and Batty, 2009; Nummenmaa and Calder, 2009; Puce and Schroeder, 2010). Gaze perception studies have used either passive viewing tasks (Puce et al., 2000; Watanabe et al., 2002; Caruana et al., 2014) or ‘social’ judgment tasks, in which participants report whether the gaze was oriented away from or toward them (Conty et al., 2007; Itier et al., 2007a).

Moreover, gaze perception studies have used a diversity of stimuli with varying head orientations, front views (e.g. Puce et al., 2000) and/or ¾ views (e.g. Kawashima et al., 1999; Conty et al., 2007; Itier et al., 2007a), and varying angles of gaze deviation (from 5° to 30°; e.g. Schweinberger et al., 2007). Importantly, the majority of studies manipulated gaze in static displays, even though gaze is rarely static in natural situations and social information important for non-verbal communication is often conveyed by dynamic gaze changes. Dynamic stimuli can pose a challenge in neurophysiological studies because they may lack clear onsets and can elicit a continuous, evolving neural response (see Ulloa et al., 2014). To overcome this problem, apparent face motion stimuli, which elicit clear ERPs to dynamic stimulation, were developed (Puce et al., 2000; Conty et al., 2007). Such stimuli have a precise onset, allowing traditional ERP analyses, while preserving the dynamic and more ecological aspects of perception.

The purpose of this study was to investigate whether modulations of the N170 by gaze direction depend on task demands, using a varied set of eye gaze transitions. To that end, we used an apparent motion paradigm with a trial structure identical to that of Conty et al. (2007) and a subset of their stimuli. We generated six viewing conditions: three motions away from the participants and three motions toward the participants. These comprised two full gaze transitions between an extreme and a direct gaze, mimicking the conditions used in Puce et al. (2000); two transitions starting at an intermediate gaze position, mimicking those of Conty et al. (2007); and two additional transitions ending at the intermediate gaze position, to ensure a balanced stimulus design. Importantly, we ran two task versions on the same subject group, using the same stimuli in the same experimental session. In Task 1, subjects made a ‘social’ judgment, identifying whether the gaze moved toward or away from them, as in Conty et al. (2007). In Task 2, subjects made a ‘non-social’ judgment, indicating whether the gaze change was to their left or right. We thus explicitly examined how ERPs to eye gaze changes were influenced by the task performed by the participants. We hypothesized that gaze transitions away from the participants would elicit a larger N170 than gaze motion toward the participants, at least in the non-social task, regardless of the size of the gaze transition.

Materials and methods

Subjects

Twenty-six subjects from the Indiana University (Bloomington) community took part in the experiment. All provided written informed consent in a study approved by the Indiana University Institutional Review Board (IRB 1202007935). All subjects were paid US$25 for their participation. Four individuals generated electroencephalographic (EEG) data that contained excessive head or eye movement artifacts and were therefore excluded from subsequent data analysis. A total of 22 subjects (11 female; mean age ± s.d.: 26.23 ± 3.44 years) thus contributed data to this study. All but one subject were right-handed (mean handedness score ± s.d.: +54.77 ± 31.94), as assessed by the Edinburgh Handedness Inventory (Oldfield, 1971). All subjects were free from a history of neurological or psychiatric disorders and had normal, or corrected-to-normal, vision.

Stimuli

Stimuli consisted of the frontal face views from Conty et al. (2007), presented using an identical trial structure. Forty 8-bit RGB color frontal-view faces (20 male) were presented with direct gaze (direct) or with gaze averted by 15° (intermediate) or 30° (extreme). There were six images per face: one with direct gaze, one with gaze averted horizontally by 15° to the right, one with gaze averted horizontally by 30° to the right, and mirror images of each. Apparent gaze motion was created from the static images by presenting two images in succession (Figure 1A).

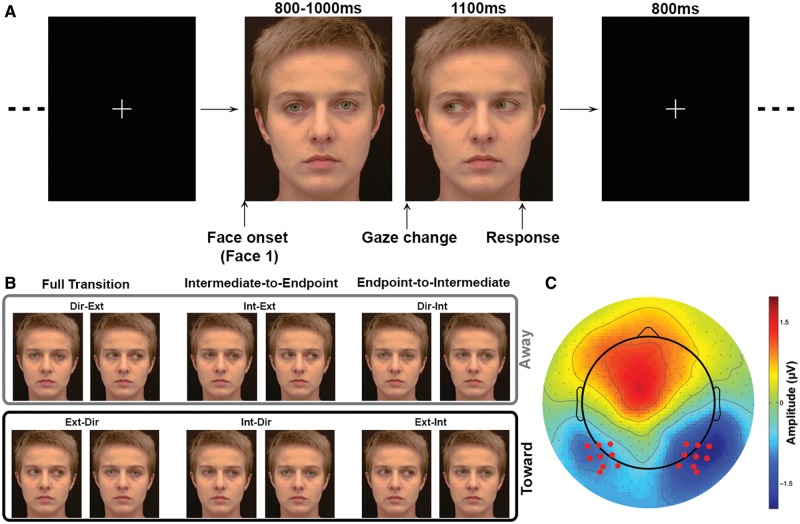

Fig. 1.

Methods. (A) Timeline of an individual trial. A first static face is displayed on the screen; gaze direction in this first face can be direct (illustrated), intermediate or extreme. The first face is then replaced by a second static face, whose gaze direction differs from that of the first face and can be direct, intermediate or extreme (illustrated), creating apparent gaze motion. Subjects were instructed to respond while the second face was still on the screen. (B) Examples of the different apparent motion conditions. The light gray box highlights gaze transitions away from the subjects; the dark gray box highlights gaze transitions toward the subjects. (C) Location of the electrodes of interest (red dots). The data illustrated are the ERPs averaged across conditions at the latency of the N170.

A total of six apparent gaze motion conditions were generated (see Figure 1A and B):

1. Direct to extreme gaze [Dir-Ext].

2. Extreme to direct gaze [Ext-Dir].

3. Intermediate to extreme gaze [Int-Ext].

4. Intermediate to direct gaze [Int-Dir].

5. Direct to intermediate gaze [Dir-Int].

6. Extreme to intermediate gaze [Ext-Int].

Including this many conditions was intended to help reconcile potential differences between previously published studies. Conditions (1) and (2) were identical to those studied in Puce et al. (2000), whereas (3) and (4) were a subset of those used in Conty et al. (2007). Conditions (5) and (6) were not used in either previous study, but were added here to create a balanced experimental design. For brevity, throughout the manuscript we refer to these pairs of conditions as ‘full transition’, ‘intermediate-to-endpoint’ and ‘endpoint-to-intermediate’, respectively. Note that conditions (1), (3) and (5) correspond to gaze transitions away from the subjects, whereas conditions (2), (4) and (6) correspond to gaze transitions toward the subjects, resulting in eye contact in (2) and (4) but not in (6).

Design

Each subject completed two tasks in one recording session. In the social task, subjects pressed one of two response buttons to indicate whether the viewed gaze transition moved away from or toward them. In the non-social task, they indicated whether the gaze transition moved toward their left or their right. The order of the two tasks was counterbalanced across subjects. For each task, 480 trials were divided into four runs of 120 trials, allowing subjects to rest between runs so that they could keep face and eye movements to a minimum during recording.

A single trial consisting of the presentation of two stimuli had the following structure: the first image of each trial was presented for 800, 900 or 1000 ms (randomized) on a black background. It was immediately replaced by a second image, which differed from the first one only by its gaze direction, creating an apparent motion stimulus. The second image remained on the screen for 1100 ms. Trials were separated by an 800 ms white fixation cross appearing on a black background (Figure 1A). Each of the six stimulus conditions was presented a total of 80 times in randomized order, for a total of 480 trials per task.
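The trial structure above can be sketched as a schedule generator. This is a minimal illustration under stated assumptions, not the authors' Presentation script: it assumes a full shuffle of the 480 trials before they are split into four runs, and an independent, uniform draw of the 800/900/1000 ms first-face duration on every trial; the source does not say whether condition order or jitter was constrained. All names are hypothetical.

```python
import random

CONDITIONS = ["Dir-Ext", "Ext-Dir", "Int-Ext", "Int-Dir", "Dir-Int", "Ext-Int"]
REPS_PER_CONDITION = 80          # 6 x 80 = 480 trials per task
RUN_LENGTH = 120                 # four runs of 120 trials
FIRST_FACE_MS = (800, 900, 1000) # jittered first-face duration
SECOND_FACE_MS = 1100            # second face (apparent-motion onset)
FIXATION_MS = 800                # inter-trial fixation cross


def build_task_schedule(seed=None):
    """Return four runs, each a list of (condition, first_face_ms) tuples.

    Assumption (not in the source): all 480 trials are fully shuffled and the
    first-face duration is drawn independently and uniformly for each trial.
    """
    rng = random.Random(seed)
    conditions = [c for c in CONDITIONS for _ in range(REPS_PER_CONDITION)]
    rng.shuffle(conditions)
    trials = [(c, rng.choice(FIRST_FACE_MS)) for c in conditions]
    return [trials[i:i + RUN_LENGTH] for i in range(0, len(trials), RUN_LENGTH)]


def run_duration_s(run):
    """Stimulation time of one run in seconds (first face + second face + fixation)."""
    return sum(first + SECOND_FACE_MS + FIXATION_MS for _, first in run) / 1000


runs = build_task_schedule(seed=1)
print(len(runs), [len(r) for r in runs])  # 4 [120, 120, 120, 120]
print(round(sum(run_duration_s(r) for r in runs) / 60, 1), "min of stimulation per task")
```

With a mean first-face duration of 900 ms, a trial lasts 2.8 s on average, so the 480 trials of one task amount to roughly 22.4 min of stimulation, excluding rest breaks.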

Data acquisition

Each subject was fitted with a 256-electrode HydroCel Geodesic Sensor Net (EGI Inc., Eugene, OR). Electrodes were adjusted as needed to keep impedances below 60 kΩ, consistent with the manufacturer’s recommendations. Halfway through the experimental session, an additional impedance check was performed and impedances were adjusted as needed. Continuous EEG was recorded during both tasks with a set of EGI Net Amps 300 neurophysiological amplifiers (gain 5000) and NetStation 4.4 data acquisition software, and stored for off-line analysis. EEG data were recorded with respect to the vertex at a sampling rate of 500 Hz with a band-pass filter of 0.1–200 Hz.

Once the EEG set-up was complete, subjects sat in a comfortable chair in a darkened room, 2.75 m from a 160 cm monitor (Samsung SyncMaster P63FP; refresh rate 60 Hz) mounted on a wall at eye level. Stimuli subtended a visual angle of 7.0 × 8.6 deg (horizontal × vertical) and were presented using Presentation V14 software (Neurobehavioral Systems, San Francisco, CA).
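The viewing geometry can be checked with a short calculation. As a sketch, assuming the reported visual angles refer to the full stimulus extent viewed centrally at 2.75 m, the relation size = 2 · d · tan(θ/2) gives the physical stimulus size on the screen; the source does not report that size, so the printed values are derived rather than measured. The frame-count helper assumes durations were realized as whole 60 Hz frames, which the source also does not state. Helper names are hypothetical.

```python
import math

VIEWING_DISTANCE_M = 2.75
REFRESH_HZ = 60


def size_from_visual_angle(angle_deg, distance_m):
    """Extent (m) that subtends angle_deg at distance_m, centred on the line of sight."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)


def frames_for_duration(duration_ms, refresh_hz=REFRESH_HZ):
    """Whole number of video frames closest to a requested duration."""
    return round(duration_ms * refresh_hz / 1000)


width_m = size_from_visual_angle(7.0, VIEWING_DISTANCE_M)
height_m = size_from_visual_angle(8.6, VIEWING_DISTANCE_M)
print(f"{width_m * 100:.1f} cm x {height_m * 100:.1f} cm")  # 33.6 cm x 41.4 cm
print([frames_for_duration(ms) for ms in (800, 900, 1000, 1100)])  # [48, 54, 60, 66]
```

All trial durations are whole multiples of the 16.7 ms frame period at 60 Hz, so they could in principle be shown without rounding.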

Data analysis

Behavioral data

Response time (RT) and accuracy data collected with Presentation were exported to MATLAB 2012 (The MathWorks Inc., Natick, MA). Mean RTs and accuracy were calculated for each condition, task and subject.
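The per-cell summary can be sketched in plain Python (the authors used MATLAB). The `Trial` record and `summarize` helper are hypothetical, and averaging RTs over correct trials only is an assumption, since the source does not say whether error trials were excluded from the RT means.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple


class Trial(NamedTuple):
    subject: str
    task: str        # "social" or "non-social"
    condition: str   # e.g. "Dir-Ext"
    correct: bool
    rt_ms: float


def summarize(trials):
    """Mean accuracy (%) and mean RT (ms) for each (subject, task, condition) cell.

    RTs are averaged over correct trials only: an assumption, not stated in the source.
    """
    cells = defaultdict(list)
    for t in trials:
        cells[(t.subject, t.task, t.condition)].append(t)
    summary = {}
    for key, ts in cells.items():
        accuracy = 100.0 * sum(t.correct for t in ts) / len(ts)
        correct_rts = [t.rt_ms for t in ts if t.correct]
        summary[key] = (accuracy, mean(correct_rts) if correct_rts else float("nan"))
    return summary


demo = [
    Trial("s01", "social", "Dir-Ext", True, 520.0),
    Trial("s01", "social", "Dir-Ext", True, 540.0),
    Trial("s01", "social", "Dir-Ext", False, 700.0),
    Trial("s01", "social", "Dir-Ext", True, 560.0),
]
print(summarize(demo))  # {('s01', 'social', 'Dir-Ext'): (75.0, 540.0)}
```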

EEG data preprocessing

EEG data preprocessing was performed in NetStation EEG software (EGI Inc., Eugene, OR) and EEGLAB (Delorme and Makeig, 2004), following recommended guidelines; details are presented in the Supplementary Material. Continuous EEG data were segmented into 1.6 s epochs, including 518 ms before the onset of the second face of the apparent motion stimulus.¹
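At a 500 Hz sampling rate each sample spans 2 ms, so a 1.6 s epoch contains 800 samples, 259 of them before second-face onset. The sketch below (plain Python with hypothetical helper names, not the NetStation or EEGLAB implementation) shows that bookkeeping; rounding the 518 ms baseline to whole samples is an assumption.

```python
SFREQ_HZ = 500
EPOCH_S = 1.6
PRESTIM_MS = 518


def epoch_bounds(onset_sample, sfreq=SFREQ_HZ, epoch_s=EPOCH_S, prestim_ms=PRESTIM_MS):
    """[start, stop) sample indices of an epoch around a second-face onset sample."""
    n_pre = round(prestim_ms * sfreq / 1000)  # 518 ms -> 259 samples
    n_total = round(epoch_s * sfreq)          # 1.6 s -> 800 samples
    start = onset_sample - n_pre
    return start, start + n_total


def extract_epoch(signal, onset_sample):
    """Slice one channel of continuous data; return None if the epoch is out of range."""
    start, stop = epoch_bounds(onset_sample)
    if start < 0 or stop > len(signal):
        return None
    return signal[start:stop]


def epoch_times_ms(sfreq=SFREQ_HZ, epoch_s=EPOCH_S, prestim_ms=PRESTIM_MS):
    """Latency (ms) of each epoch sample relative to second-face onset."""
    n_pre = round(prestim_ms * sfreq / 1000)
    step_ms = 1000 / sfreq
    return [(i - n_pre) * step_ms for i in range(round(epoch_s * sfreq))]


print(epoch_bounds(10_000))  # (9741, 10541)
times = epoch_times_ms()
print(times[0], times[259], times[-1])  # -518.0 0.0 1080.0
```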

Event-related potentials

For each task, an average ERP was generated for each subject and condition. The average number of trials included was greater than 60 in all conditions and tasks, and did not differ between conditions or tasks (repeated-measures ANOVAs, all P > 0.05). ERP peak analyses were conducted on individual subject averages for each of the 12 conditions (6 apparent motions × 2 tasks). N170 latencies and amplitudes were measured from ERPs averaged over a nine-electrode cluster (Figure 1C), centred on the electrode at which the grand average (collapsed across conditions) was maximal between 142 and 272 ms post-stimulus. To investigate ERP effects other than the N170, we further tested for experimental effects at all time points and electrodes using the LIMO EEG toolbox (spatiotemporal analyses, Pernet et al., 2011; presented in the Supplementary Material).
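The peak measurement step can be sketched as taking the most negative value of the cluster-averaged ERP within the 142–272 ms window. This is an illustration under assumptions: the source does not state whether a simple window minimum or a local-minimum criterion was used, and selecting the nine electrodes around the maximal channel depends on the 256-channel net layout, which is not reproduced here. The helper names are hypothetical and the ERP in the example is synthetic.

```python
import math


def cluster_average(erps_by_electrode):
    """Average equal-length ERPs (µV) across the electrodes of a cluster, sample by sample."""
    n = len(erps_by_electrode)
    return [sum(samples) / n for samples in zip(*erps_by_electrode)]


def n170_peak(cluster_erp_uv, times_ms, window=(142, 272)):
    """Return (amplitude in µV, latency in ms) of the most negative sample in the window."""
    in_window = [(amp, t) for amp, t in zip(cluster_erp_uv, times_ms)
                 if window[0] <= t <= window[1]]
    if not in_window:
        raise ValueError("no samples fall inside the search window")
    # min() on (amplitude, time) tuples picks the most negative amplitude;
    # ties are resolved in favour of the earliest latency.
    return min(in_window)


# Synthetic example: 2 ms sampling from -518 ms, with a negative deflection at 196 ms.
times = [-518 + 2 * i for i in range(800)]
erp = cluster_average([
    [-4.0 * math.exp(-((t - 196) / 20) ** 2) for t in times],
    [-6.0 * math.exp(-((t - 196) / 20) ** 2) for t in times],
])
print(n170_peak(erp, times))  # (-5.0, 196)
```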

Statistical analysis

Statistical analyses of behavioral data and ERP peak amplitudes and latencies were performed using IBM SPSS Statistics V20 (IBM Corp., Armonk, NY).

Behavioral data

To compare our results directly with those of previous studies, we ran three separate two-way repeated-measures ANOVAs, explicitly comparing the conditions identical to Puce et al. (2000) (Dir-Ext/Ext-Dir), those of Conty et al. (2007) (Int-Ext/Int-Dir) and new conditions not previously tested (Ext-Int/Dir-Int; Figure 1B); these ANOVAs are referred to as the full transition, intermediate-to-endpoint and endpoint-to-intermediate ANOVAs, respectively. All three ANOVAs had two within-subjects factors: task and condition (Dir-Ext/Ext-Dir, Int-Ext/Int-Dir or Dir-Int/Ext-Int). Effects were considered significant at P < 0.05; significant interactions were further explored using paired t-tests. Results of an omnibus ANOVA including all conditions are presented in the Supplementary Material.
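Because every factor in these ANOVAs has two levels, each effect has one degree of freedom, and its F(1, n − 1) equals the square of a one-sample t on per-subject contrast scores. The sketch below illustrates that equivalence in plain Python; it is not the SPSS procedure used in the study, the data keys and helper names are hypothetical, and the example scores are made up.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values):
    """t statistic testing the mean of values against zero."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


def rm_anova_2x2(data):
    """Task x condition repeated-measures ANOVA computed from per-subject contrasts.

    data: one dict per subject, keyed by (task, condition) with tasks
    "non-social"/"social" and conditions "away"/"toward".
    Returns {effect: (F, df_error)}, with F distributed as F(1, n - 1).
    """
    contrasts = {
        "task": lambda d: (d["non-social", "away"] + d["non-social", "toward"])
        - (d["social", "away"] + d["social", "toward"]),
        "condition": lambda d: (d["non-social", "away"] + d["social", "away"])
        - (d["non-social", "toward"] + d["social", "toward"]),
        "task x condition": lambda d: (d["non-social", "away"] - d["non-social", "toward"])
        - (d["social", "away"] - d["social", "toward"]),
    }
    df_error = len(data) - 1
    # F(1, n - 1) for a single-df within-subject effect is t squared.
    return {name: (one_sample_t([c(d) for d in data]) ** 2, df_error)
            for name, c in contrasts.items()}


def subject(ns_away, ns_toward, s_away, s_toward):
    """Build one subject's cell means (made-up accuracy values, %)."""
    return {("non-social", "away"): ns_away, ("non-social", "toward"): ns_toward,
            ("social", "away"): s_away, ("social", "toward"): s_toward}


demo = [subject(98, 95, 94, 95), subject(97, 94, 93, 94),
        subject(99, 96, 95, 94), subject(96, 95, 92, 93)]
for effect, (f, df) in rm_anova_2x2(demo).items():
    print(f"{effect}: F(1,{df}) = {f:.2f}")
```

With these made-up scores the sketch gives F(1,3) = 75.00, 6.00 and 27.00 for task, condition and their interaction; the follow-up paired t-tests are the same `one_sample_t` applied to away minus toward differences within each task.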

Event-related potentials

A mixed-design ANOVA with within-subject factors of task, condition and hemisphere and a between-subjects factor of gender was performed to identify significant differences in N170 amplitude and latency. Effects were considered significant at P < 0.05, with a Greenhouse-Geisser correction for violations of sphericity where relevant; pairwise comparisons were Bonferroni corrected. Statistical analyses of N170 latency are reported in the Supplementary Material.
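The Greenhouse-Geisser correction multiplies both degrees of freedom of a within-subject effect by an estimated epsilon. A minimal plain-Python sketch of the usual sample estimate, computed from the double-centred covariance matrix of the factor levels, is shown below; it is an illustration rather than the SPSS implementation, and the helper names are hypothetical.

```python
def greenhouse_geisser_epsilon(scores):
    """Greenhouse-Geisser epsilon for one within-subject factor with k levels.

    scores: one list per subject, holding that subject's value at each of the k levels.
    epsilon = tr(S)^2 / ((k - 1) * tr(S @ S)), where S is the double-centred sample
    covariance matrix of the levels; it ranges from 1 / (k - 1) to 1.
    """
    n, k = len(scores), len(scores[0])
    means = [sum(row[j] for row in scores) / n for j in range(k)]
    cov = [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in scores) / (n - 1)
            for j in range(k)] for i in range(k)]
    row_means = [sum(r) / k for r in cov]  # equal to column means (cov is symmetric)
    grand = sum(row_means) / k
    s = [[cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
         for i in range(k)]
    trace = sum(s[i][i] for i in range(k))
    trace_of_square = sum(s[i][j] ** 2 for i in range(k) for j in range(k))  # S symmetric
    return trace ** 2 / ((k - 1) * trace_of_square)


def gg_corrected_df(epsilon, k, n_subjects, n_groups=1):
    """Corrected (effect, error) df for a k-level within-subject factor in a mixed design."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n_subjects - n_groups)
```

With six conditions, 22 subjects and two gender groups, the nominal degrees of freedom for condition are (5, 100), so the omnibus F(4.09, 81.83) reported in the Results corresponds to an epsilon of about 0.82.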

Following the same logic as for the behavioral data, we additionally ran three separate three-way ANOVAs on the condition subgroups, i.e. the full transition, intermediate-to-endpoint and endpoint-to-intermediate ANOVAs. All three ANOVAs had three within-subjects factors: task, condition and hemisphere (left, right). Effects were considered significant at P < 0.05; significant interactions were further explored using paired t-tests.

Results

Behavioral results

Accuracy

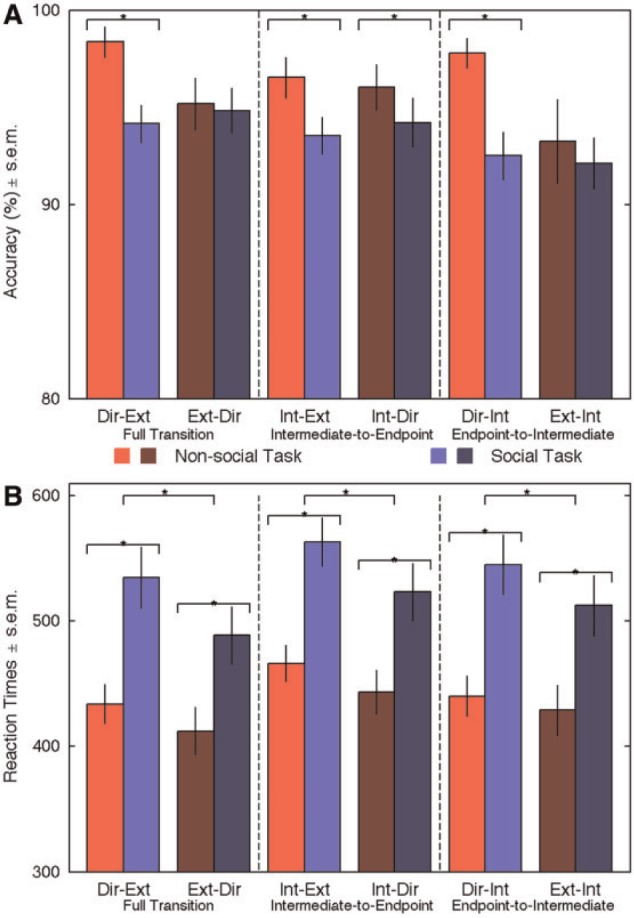

Accuracy results are displayed in Figure 2A and Table 1. The full transition ANOVA, i.e. the comparison between direct-to-extreme and extreme-to-direct gaze changes, revealed a main effect of task (F(1,21) = 5.34; P = 0.031; η2 = 0.20) and a task × condition interaction (F(1,21) = 12.13; P = 0.002; η2 = 0.37). Subjects performed better in the non-social than in the social task, in particular for gaze aversions. In the non-social task, accuracy was higher for gaze aversion than for gaze changes toward the participants (t(21) = 3.82; P = 0.001); in the social task, accuracy was not modulated by the direction of the gaze transition (t(21) = −0.751; P = 0.461). The intermediate-to-endpoint ANOVA, i.e. the comparison between Int-Ext and Int-Dir, again showed a main effect of task (F(1,21) = 4.58; P = 0.044; η2 = 0.17): accuracy was better in the non-social than in the social task, regardless of gaze direction. No other effect or interaction was found. Finally, the endpoint-to-intermediate ANOVA, i.e. the comparison between Ext-Int and Dir-Int changes, showed main effects of task (F(1,21) = 7.48; P = 0.012; η2 = 0.26) and condition (F(1,21) = 5.49; P = 0.029; η2 = 0.21), and a task × condition interaction (F(1,21) = 7.20; P = 0.014; η2 = 0.26). Again, accuracy differed as a function of gaze transition direction in the non-social task (higher for gaze aversion; t(21) = 2.82; P = 0.01) but not in the social task (t(21) = 0.44; P = 0.666).
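The effect sizes reported here can be related to the F statistics. Assuming the η2 values are partial eta squared (the source does not name the measure, but the reported values are consistent with it), partial η2 = (F × df_effect) / (F × df_effect + df_error). The hypothetical helper below applies this to the full transition ANOVA on accuracy as a consistency check.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


# F values reported for the full transition ANOVA on accuracy, df = (1, 21)
for effect, f in [("task", 5.34), ("task x condition", 12.13)]:
    print(effect, round(partial_eta_squared(f, 1, 21), 2))
# task 0.2
# task x condition 0.37
```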

Fig. 2.

Behavioral results. Red bars: non-social task; blue bars: social task. Light colored bars illustrate gaze transitions away from the subjects; dark colored bars illustrate transitions toward the subjects. Error bars represent the standard error of the mean (SEM). (A) Accuracy; *P < 0.05. Note that the accuracy axis spans 80 to 100%. (B) RTs; *P < 0.01.

Table 1.

Behavioral Results (group mean ± SEM) as a function of task and condition

| | Dir-Ext | Ext-Dir | Int-Ext | Int-Dir | Dir-Int | Ext-Int |
|---|---|---|---|---|---|---|
| Accuracy (% ± SEM) | | | | | | |
| Non-social | 98.35 ± 0.79 | 95.17 ± 1.33 | 96.53 ± 1.04 | 96.02 ± 1.18 | 97.78 ± 0.76 | 93.24 ± 2.17 |
| Social | 94.15 ± 0.97 | 94.83 ± 1.16 | 93.52 ± 0.95 | 94.20 ± 1.27 | 92.50 ± 1.22 | 92.10 ± 1.31 |
| RTs (ms ± SEM) | | | | | | |
| Non-social | 434 ± 16 | 412 ± 19 | 466 ± 14 | 443 ± 18 | 440 ± 16 | 429 ± 20 |
| Social | 535 ± 24 | 489 ± 23 | 563 ± 19 | 523 ± 23 | 545 ± 24 | 512 ± 24 |

Response times

RT data are displayed in Figure 2B and Table 1. The full transition ANOVA revealed main effects of task (F(1,21) = 33.42; P < 0.001; η2 = 0.61) and condition (F(1,21) = 19.09; P < 0.001; η2 = 0.48), and a task × condition interaction (F(1,21) = 7.65; P = 0.012; η2 = 0.267). Overall, RTs were shorter in the non-social than in the social task, and shorter for gaze changes toward the subjects. The interaction showed that the RT advantage for gaze transitions toward the subjects was larger in the social task (t(21) = 4.64; P < 0.001) than in the non-social task (t(21) = 2.76; P = 0.012). The intermediate-to-endpoint ANOVA revealed main effects of task (F(1,21) = 30.81; P < 0.001; η2 = 0.59) and condition (F(1,21) = 20.62; P < 0.001; η2 = 0.49): again, RTs were shorter in the non-social task and for gaze changes toward the subjects. Finally, the endpoint-to-intermediate ANOVA showed effects similar to those of the full transition ANOVA: main effects of task (F(1,21) = 31.94; P < 0.001; η2 = 0.60) and condition (F(1,21) = 12.83; P = 0.002; η2 = 0.38), and a task × condition interaction (F(1,21) = 5.58; P = 0.028; η2 = 0.21). RTs were shorter in the non-social task and for gaze changes toward the subjects, but the advantage for gaze transitions toward the subjects was significant only in the social task (t(21) = 4.07; P = 0.001; non-social task: t(21) = 1.56; P = 0.13).
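The size of the RT advantage for toward transitions can be read directly from the group means in Table 1. Because the same 22 subjects contributed to every cell, the difference between two group means equals the mean of the per-subject differences; the sketch below (plain Python, hypothetical variable names) computes that difference for each task and condition pair. It does not reproduce the significance tests, and the table values are rounded to the nearest millisecond.

```python
# Group-mean RTs (ms) from Table 1.
RT_MS = {
    "non-social": {"Dir-Ext": 434, "Ext-Dir": 412, "Int-Ext": 466,
                   "Int-Dir": 443, "Dir-Int": 440, "Ext-Int": 429},
    "social": {"Dir-Ext": 535, "Ext-Dir": 489, "Int-Ext": 563,
               "Int-Dir": 523, "Dir-Int": 545, "Ext-Int": 512},
}

# (away, toward) condition pair compared in each ANOVA.
PAIRS = {
    "full transition": ("Dir-Ext", "Ext-Dir"),
    "intermediate-to-endpoint": ("Int-Ext", "Int-Dir"),
    "endpoint-to-intermediate": ("Dir-Int", "Ext-Int"),
}


def toward_advantage(task, pair):
    """RT (ms) for the away condition minus RT for the toward condition."""
    away, toward = PAIRS[pair]
    return RT_MS[task][away] - RT_MS[task][toward]


for pair in PAIRS:
    print(pair, {task: toward_advantage(task, pair) for task in RT_MS})
# full transition {'non-social': 22, 'social': 46}
# intermediate-to-endpoint {'non-social': 23, 'social': 40}
# endpoint-to-intermediate {'non-social': 11, 'social': 33}
```

The advantage is larger in the social task for all three pairs, although the task × condition interaction reached significance only for the full transition and endpoint-to-intermediate pairs.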

Event-related potentials

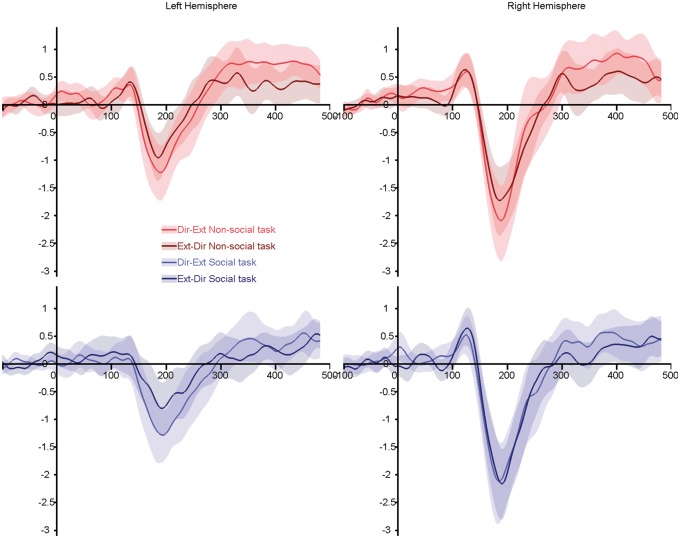

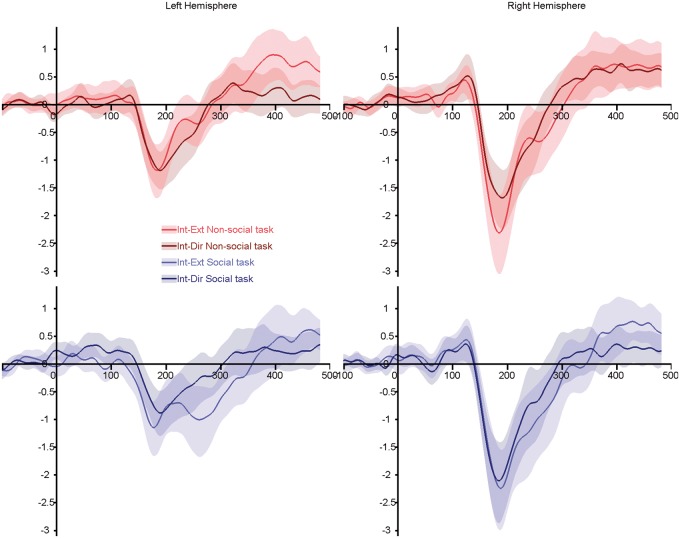

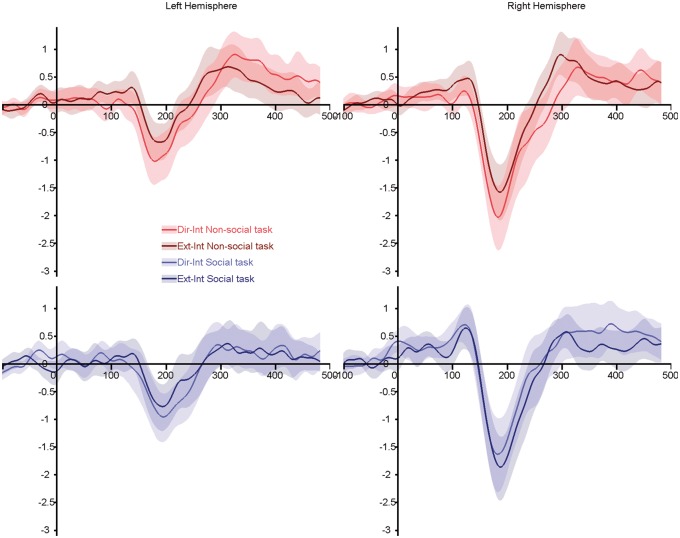

Overall, a very clear triphasic positive–negative–positive ERP complex was observed bilaterally over the posterior scalp in all conditions (Figures 3–5). The negative component corresponded to the N170, consistent with previous studies (Puce et al., 2000; Conty et al., 2007), and peaked around 200 ms. To facilitate comparison with previous studies, ERPs averaged over nine-electrode occipitotemporal clusters in each hemisphere were computed for all conditions (Figures 3–5 and Supplementary Figure S1). In all conditions, the N170 was earlier (see Supplementary Material) and larger over the right electrode cluster. Below, we describe the results of statistical tests on ERP amplitudes as a function of task and condition.

Fig. 3.

Grand average ERPs for the full transitions (direct-to-extreme and extreme-to-direct; conditions similar to Puce et al. (2000)). Top panels, red lines: non-social task; bottom panels, blue lines: social task. Light colored lines illustrate gaze transitions away from the subjects; dark colored lines illustrate transitions toward the subjects. Shaded areas represent 95% confidence intervals obtained by bootstrapping the data with replacement (1000 resamples) under H1.

Fig. 4.

Grand average ERPs for the intermediate-to-endpoint transitions (Int-Ext and Int-Dir; conditions similar to Conty et al. (2007)). Top panels, red lines: non-social task; bottom panels, blue lines: social task. Light colored lines illustrate gaze transitions away from the subjects; dark colored lines illustrate transitions toward the subjects. Shaded areas represent 95% confidence intervals obtained by bootstrapping the data with replacement (1000 resamples) under H1.

Fig. 5.

Grand average ERPs for the endpoint-to-intermediate transitions (direct-to-intermediate and extreme-to-intermediate). Top panels, red lines: non-social task; bottom panels, blue lines: social task. Light colored lines illustrate gaze transitions away from the subjects; dark colored lines illustrate transitions toward the subjects. Shaded areas represent 95% confidence intervals obtained by bootstrapping the data with replacement (1000 resamples) under H1.
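The confidence bands in Figures 3–5 can be sketched as a percentile bootstrap of the grand-average ERP. This is an assumption-laden illustration: the captions specify 1000 resamples with replacement under H1 (i.e. the data are resampled as observed, not centred), but not whether subjects or trials were resampled or which interval method was used. The sketch resamples subjects and uses the percentile method; the helper name and the example data are hypothetical.

```python
import random


def bootstrap_ci(subject_erps, n_boot=1000, alpha=0.05, seed=None):
    """Percentile bootstrap CI of the grand-average ERP at each time point.

    subject_erps: one ERP per subject (equal-length lists of µV values).
    Subjects are resampled with replacement; the data are not centred (H1).
    Returns (lower, upper), each a list with one bound per time point.
    """
    rng = random.Random(seed)
    n_subj, n_time = len(subject_erps), len(subject_erps[0])
    boot_means = []
    for _ in range(n_boot):
        sample = [subject_erps[rng.randrange(n_subj)] for _ in range(n_subj)]
        boot_means.append([sum(erp[t] for erp in sample) / n_subj for t in range(n_time)])
    lo_idx = round(n_boot * alpha / 2)            # 25 for 1000 resamples
    hi_idx = round(n_boot * (1 - alpha / 2)) - 1  # 974 for 1000 resamples
    lower, upper = [], []
    for t in range(n_time):
        dist = sorted(b[t] for b in boot_means)
        lower.append(dist[lo_idx])
        upper.append(dist[hi_idx])
    return lower, upper


# Made-up data: 22 subjects, 3 time points.
rng = random.Random(0)
erps = [[rng.gauss(-5.0, 2.0) for _ in range(3)] for _ in range(22)]
lower, upper = bootstrap_ci(erps, seed=1)
```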

N170 amplitude: omnibus ANOVA

Histograms of N170 amplitudes and latencies as a function of condition are presented in the Supplementary Material (Supplementary Figure S1). The omnibus ANOVA had factors of task, condition, hemisphere and participant gender. Participant gender affected N170 amplitude differently in the two tasks (task × gender interaction: F(1,20) = 5.577; P = 0.028; η2 = 0.218): there was no difference in N170 amplitude between male and female participants in the social task, whereas the N170 was larger in male participants in the non-social task. The omnibus mixed-design ANOVA also revealed main effects of condition (F(4.09,81.83) = 13.017; P < 0.001; η2 = 0.394) and hemisphere (F(1,20) = 15.515; P = 0.001; η2 = 0.437), and a three-way interaction between task, condition and hemisphere (F(4.05,81.11) = 5.125; P = 0.001; η2 = 0.204).

The N170 was larger for eye motion toward an extreme position, i.e. away from the observer (Dir-Ext and Int-Ext changes, which did not differ), than for eye motion from an extreme position, i.e. toward the observer (Ext-Dir and Ext-Int, which did not differ). Other gaze motion directions (Dir-Int and Int-Dir) elicited N170s of intermediate amplitude. The three-way interaction between task, condition and hemisphere was further explored with two two-way repeated-measures ANOVAs, one per hemisphere. In the left hemisphere (LH), there was a main effect of condition (F(4.09,86.08) = 11.31; P < 0.001; η2 = 0.35): the N170 was largest for motions away from the participants, which did not differ significantly from one another (Dir-Ext, Int-Ext, Dir-Int; all P > 0.29), and smallest for motions toward the participants (Ext-Int and Ext-Dir, which did not differ significantly). In the right hemisphere (RH), there was a main effect of condition (F(4.21,88.35) = 7.65; P < 0.001; η2 = 0.27) and a significant interaction between task and condition (F(3.74,78.67) = 3.83; P = 0.008; η2 = 0.15). Overall, N170 amplitudes were larger for gaze aversion to an extreme averted position than for gaze motion ending in gaze contact; motion toward an intermediate position led to the smallest N170s, although the N170 was larger for the motion away from the observer (Dir-Int).

We further explored the interaction in the RH by running a one-way ANOVA for each task; both revealed a significant effect of condition (non-social task: F(3.58,75.07) = 6.46; P < 0.001; η2 = 0.24; social task: F(3.53,73.99) = 6.09; P < 0.001; η2 = 0.23). In the non-social task, N170s were larger for motion away from the participants and smaller for motion toward the participants. In the social task, N170 amplitudes were smallest for gaze changes toward an intermediate position, with gaze aversion to an intermediate averted position (Dir-Int) yielding the smallest N170. Consequently, whereas in the non-social task the RH N170 appears larger for gaze transitions away from the subjects, in the social task the picture is less clear-cut, with the smallest N170 elicited by an away condition (Dir-Int).

N170 amplitude: ANOVAs on condition groupings based on previous studies

In the full transition ANOVA (Figure 3), the N170 was overall larger over the RH (F(1,20) = 14.98; P = 0.001; η2 = 0.43) and for gaze aversions (Dir-Ext; F(1,20) = 13.70; P = 0.001; η2 = 0.41). A three-way interaction between task, condition and hemisphere (F(1,20) = 4.69; P = 0.042; η2 = 0.19) indicated that, whereas the away condition evoked a larger N170 than the toward condition in both hemispheres when subjects made non-social judgments (LH: t(21) = −2.79; P = 0.011; RH: t(21) = −2.88; P = 0.009), this difference disappeared in the RH in the social task (LH: t(21) = −5.30; P < 0.001; RH: t(21) = −0.96; P = 0.35). This absence of a difference in the RH during social judgments likely reflected an enhanced N170 to stimuli showing a gaze change toward the subjects. A task × gender interaction (F(1,20) = 4.75; P = 0.041; η2 = 0.19) revealed a larger N170 for male than female subjects in the social task, whereas no differences were observed in the non-social task.

The intermediate-to-endpoint ANOVA (Figure 4) again showed a larger N170 over the RH (F(1,20) = 14.74; P = 0.001; η2 = 0.42) and for gaze changes away from the subjects (F(1,20) = 7.54; P = 0.012; η2 = 0.27). A three-way interaction between task, condition and hemisphere (F(1,20) = 10.35; P = 0.004; η2 = 0.34) showed that the modulation of N170 amplitude by gaze direction was significant in the RH when subjects performed the non-social task (t(21) = −2.78, P = 0.011) but not the social task (t(21) = −1.10, P = 0.284), likely reflecting an enhanced N170 amplitude for gaze changes toward the subjects. In the LH, the opposite was true: the difference between conditions was not significant during the non-social task (t(21) = −0.11, P = 0.916), but the N170 was significantly smaller for gaze changes toward the subjects in the social task (t(21) = −3.30, P = 0.003). There was no interaction between task and gender (F(1,20) = 3.10; P = 0.094).

Finally, the endpoint-to-intermediate ANOVA (Figure 5) again showed that the N170 was larger over the RH (F(1,20) = 14.80; P = 0.001; η2 = 0.43) and for motion away from the subjects (F(1,20) = 11.72; P = 0.003; η2 = 0.37). As in the full transition ANOVA, a three-way interaction between task, condition and hemisphere (F(1,20) = 4.70; P = 0.042; η2 = 0.19) revealed no difference in the RH between the N170 amplitudes evoked by away and toward gaze changes when subjects performed social judgments (t(21) = 1.116; P = 0.28). A task × gender interaction (F(1,20) = 6.70; P = 0.018; η2 = 0.25) revealed a larger N170 for male than female subjects in the social task, whereas no differences were observed in the non-social task.

Discussion

Our aim in this study was to test whether brain activity to gaze changes, as indexed mainly by N170 characteristics, is sensitive to task demands. This question is important given the inconsistencies in the gaze perception literature, irrespective of whether EEG/MEG or fMRI was used as the imaging modality (Itier and Batty, 2009; Nummenmaa and Calder, 2009). Differences between studies could have arisen from differences in task requirements or in the stimuli used (e.g. the size of the gaze transition). Hence, we tested the same group of subjects while varying the task (social vs non-social judgment), using the trial structure and a subset of the stimuli of Conty et al. (2007), with stimulus conditions analogous to those of two previous studies. Our data showed clear main effects of task and gaze direction (away/toward), as well as interactions between these two variables. These effects were present irrespective of the gaze position at onset and the size of the gaze transition, and irrespective of whether motion toward the viewer involved eye contact.

Discrepancies between studies may potentially be explained by differences in the degree of gaze aversion, which has ranged from 5° to 30° (Puce et al., 2000; Conty et al., 2007; Schweinberger et al., 2007). In the current study, gaze aversion varied in magnitude; nonetheless, when all conditions were compared in a single statistical analysis, N170 amplitude was not modulated by the size of the motion transition: N170 amplitudes did not differ significantly between conditions with the same direction of motion but different motion excursions. Rather, the N170 response pattern was more ‘categorical’, with modulations of N170 amplitude occurring when gaze changed to look (further) away from the observer. This observation is consistent with an fMRI study showing that the anterior superior temporal sulcus is sensitive to overall gaze direction independently of gaze angle (Calder et al., 2007).

We performed separate statistical analyses on the current data, based on the groups of conditions used in each of our previous studies (Puce et al., 2000; Conty et al., 2007), to help interpret the observed differences. We replicated the finding of Puce et al. (2000), obtained in a passive viewing task, that extreme gaze aversions elicited larger N170s than changes to direct gaze (using the non-social task here as a comparison). For non-social judgments, we observed similar N170 amplitude modulations for all away and all toward conditions, irrespective of the starting or ending position of the gaze transition. Gaze direction facilitates target detection by directing attention toward the surrounding space (Itier and Batty, 2009). Larger N170s to averted gaze could reflect a shift of attention toward the surrounding space, cueing the observer to a potentially more behaviorally relevant part of visual space. Spatial cueing has previously been shown to modulate early ERPs (P1/N1; Holmes et al., 2003; Jongen et al., 2007); notably, the N170 is enhanced for cued/attended targets (McDonald et al., 2003; Pourtois et al., 2004; Carlson and Reinke, 2010). Thus, our results suggest an increased salience of spatial cueing over social processing, at least with the frontal face views used here, in situations where no explicit social judgment is required. It should be noted that these cueing effects occur earlier than other ERP effects related to spatial cueing reported in the literature. Two known ERP negativities are elicited by spatial cues in Posner-like (Posner et al., 1980) visuospatial cueing paradigms: the posterior early directing attention negativity (EDAN) and the anterior directing attention negativity (ADAN) (Harter et al., 1989; Yamaguchi et al., 1994; Hopf and Mangun, 2000; Nobre et al., 2000), which typically occur in the 200–400 ms and 300–500 ms ranges, respectively.

Surprisingly, task had no effect on the N170 measured in the LH: left N170s were always larger for gaze aversion, irrespective of whether a social or non-social decision was being made, consistent with previous reports (Hoffman and Haxby, 2000; Itier et al., 2007b; Caruana et al., 2014). Notably, however, in the social task the modulation of the N170 by gaze aversion disappeared in the RH: N170s did not differ significantly between conditions because the N170 to gaze transitions toward the participants was enhanced. This effect was seen for all gaze transitions toward participants, regardless of whether the gaze change ended in direct eye contact (and independent of the reference electrode; see Supplementary Materials). Our results are consistent with those of Itier et al. (2007b), who used a social task with static face-onset displays and reported larger N170s to averted than to direct gaze, for front-view faces only and mainly in the LH. However, our results are inconsistent with those of Conty et al. (2007), who reported significantly larger N170s to direct gaze than to gaze aversion, particularly for deviated head views. It should be noted that these two studies included stimuli with different head positions in addition to gaze changes, and it is possible that these additional conditions further modulated N170 activity (e.g. see Itier et al., 2007b).

Head orientation has been shown to modulate gaze perception in behavioral paradigms (Langton et al., 2000). In particular, incongruence between head and gaze direction can decrease participants’ performance in spatial judgments (Langton et al., 2000). Thus, including different head views may have placed greater emphasis on the processing of gaze direction in Conty et al. (2007): because the gaze transitions were displayed in different head configurations, judging their direction (away/toward them) required deeper processing of the eye region. In line with Conty et al.’s hypothesis, our results indicate that, when explicit social judgments are made, N170 modulations reflect the processing of toward motion transitions, even though the observer does not necessarily become the focus of attention. It should be noted that social attention is particularly difficult to define, and its definition varies between research groups: some would argue that both of our tasks are social, as a human face is present in all conditions, whereas others would consider both tasks spatial. Task differences could also reflect a self-referential processing effect, as the spatial computation may appear to be made relative to the self in the ‘social’ task, but in absolute terms in the non-social task. However, we believe that the left/right judgment could also be regarded as self-centered, as it depends on the participant’s own right and left.

Overall, our observations are consistent with previous studies showing that the N170 is modulated by top-down influences (Bentin and Golland, 2002; Jemel et al., 2003; Latinus and Taylor, 2006). Our spatiotemporal analysis showed that task-related ERP effects began as early as 148 ms and lasted for 300 ms. This modulation was mostly seen at frontal electrodes, in line with the idea that the task engages top-down influences arising from higher-order regions. Modulation of an ERP as a function of social decision might indicate whether the brain is in a ‘socially aware’ mode or not. In the non-social task, involving visuospatial judgments, we observed larger N170s to transitions away from the observer, irrespective of whether the transition started from gaze contact. This augmented N170 to gaze aversion replicates previous results obtained under passive viewing (Puce et al., 2000; Caruana et al., 2014), suggesting that, when viewing eye gaze, the implicit working mode of the brain may not be social. Changes in gaze direction, in particular aversion from a direct gaze position, can signal a shift in spatial attention toward a specific location, inducing the observer to shift his/her attention toward the same location (Puce and Perrett, 2003; Hadjikhani et al., 2008; Straube et al., 2010). Our data seem to indicate that the ‘default’ mode of the brain might be to process gaze as an indication of a shift in attention toward a specific visuospatial location. In contrast, in an explicit ‘socially aware’ mode, the N170 is augmented for toward gaze transitions. Our data suggest that the social meaning of direct gaze arises from being in an explicit social mode, in which social context is the most salient stimulus dimension. This study raises the question of what exactly constitutes an explicit social context. Pönkänen et al. (2011) reported larger N170s to direct than to averted gaze with real persons, but not with photographs; yet the same team further demonstrated that these effects depended on the observer’s mental attributions (Myllyneva and Hietanen, 2015). Taken together with our results, this may suggest that in ecological situations, such as a face-to-face conversation, the presence of real faces rather than photographs may be sufficient to generate a social context, allowing the brain to switch from a default to an explicit socially aware processing mode.

Consistent with the proposal that gaze processing is non-social by default, participants were more accurate and faster in the non-social task. As stated previously, gaze direction is thought to facilitate target detection, and target detection is more likely to occur in the non-social task than in the social task, in which the observer is the target of a directed behavior. Alternatively, social judgments may result in slower attentional disengagement from the face, leading to slower RTs and increased error rates in the social task (Itier et al., 2007b). Indeed, slower RTs have been reported for faces with direct gaze, and were thought to reflect enhanced processing of faces with direct gaze (Vuilleumier et al., 2005) or slower attentional disengagement from them (Senju and Hasegawa, 2005). Consistent with this explanation, in the social task RTs were faster for ‘toward’ gaze transitions than for gaze aversions, which may reflect a smaller attentional disengagement cost for gaze changes toward the participants. These shorter RTs were consistent with our neurophysiological data, in that social judgments produced relatively enhanced N170 amplitudes to gaze transitions directed toward the subject.

We have claimed here that our data are consistent with a ‘socially aware’ and a ‘non-social’ bias for information processing in the brain, and that these biases can be conferred via top-down mechanisms (e.g. task demands) or via bottom-up mechanisms (e.g. redeployment of visuospatial attention). An alternative possibility, which requires future testing, is that non-social mechanisms related to the reallocation of visual attention to another part of visual space account for our findings. Studies that systematically investigate the hypothesis that social context influences the perception of gaze transition direction are needed. One way of providing an explicitly social context might be to include multisensory stimulation, in which auditory cues such as speech and non-speech vocalizations, and other (non-human) environmental sounds, are presented as a face generates gaze transitions away from the viewer. This would also make the stimuli more ecologically valid. Multisensory cues with explicitly social and non-social dimensions could potentially differentiate between different ‘top-down’ processing modes (including social and non-social ones). To tease this issue apart, participants would complete a series of tasks, each focusing on one particular type of auditory cue at a time. If our claim that communicative intent in incoming stimuli switches the brain into a ‘socially aware’ mode is true, then, when subjects are explicitly given a social task (e.g. detecting a target word such as ‘hello’), we should observe the largest N170s to gaze transitions accompanied by speech stimuli, smaller N170s to those accompanied by non-speech vocalizations, and smaller N170s still to those accompanied by environmental sounds.

Conclusions

This dataset reconciles data from two studies of apparent gaze motion with seemingly opposite results. We report modulations of the N170 by gaze transition direction that depend on the task performed by the subjects. The N170 was larger to gaze aversions in both hemispheres when subjects performed a non-social task, mimicking previous results with passive viewing and suggesting that the brain’s default mode may not be ‘social’. Focusing subjects’ attention on social aspects of the stimuli, by requiring explicit social judgments, led to an enhanced N170 to toward gaze transitions in the RH, irrespective of the ending position of the gaze motion. This could reflect an increased salience of toward gaze motion in a ‘social’ context, with the brain operating in a ‘socially aware’ mode.

Funding

This study was supported by NIH grant R01 NS049436, and the College of Arts and Sciences at Indiana University Bloomington.

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

Footnotes

1 An advisory notice from EGI, the EEG system manufacturer, informed us of an 18 ms delay between real-time acquisition (to which events are synchronized) and the EEG signal. Consequently, a post hoc latency correction of 18 ms was applied to all ERP latencies.

References

- Adams R.B., Jr, Kleck R.E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5(1), 3–11.

- Akiyama T., Kato M., Muramatsu T., Saito F., Umeda S., Kashima H. (2006). Gaze but not arrows: a dissociative impairment after right superior temporal gyrus damage. Neuropsychologia, 44(10), 1804–10.

- Akiyama T., Kato M., Muramatsu T., Umeda S., Saito F., Kashima H. (2007). Unilateral amygdala lesions hamper attentional orienting triggered by gaze direction. Cerebral Cortex, 17(11), 2593–600.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8, 551–65.

- Bentin S., Golland Y. (2002). Meaningful processing of meaningless stimuli: the influence of perceptual experience on early visual processing of faces. Cognition, 86(1), B1–14.

- Brefczynski-Lewis J.A., Berrebi M.E., McNeely M.E., Prostko A.L., Puce A. (2011). In the blink of an eye: neural responses elicited to viewing the eye blinks of another individual. Frontiers in Human Neuroscience, 5, 68.

- Calder A.J., Beaver J.D., Winston J.S., et al. (2007). Separate coding of different gaze directions in the superior temporal sulcus and inferior parietal lobule. Current Biology, 17(1), 20–5.

- Carlson J.M., Reinke K.S. (2010). Spatial attention-related modulation of the N170 by backward masked fearful faces. Brain and Cognition, 73(1), 20–7.

- Caruana F., Cantalupo G., Lo Russo G., Mai R., Sartori I., Avanzini P. (2014). Human cortical activity evoked by gaze shift observation: an intracranial EEG study. Human Brain Mapping, 35(4), 1515–28.

- Conty L., N'Diaye K., Tijus C., George N. (2007). When eye creates the contact! ERP evidence for early dissociation between direct and averted gaze motion processing. Neuropsychologia, 45(13), 3024–37.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21.

- Farroni T., Csibra G., Simion F., Johnson M.H. (2002). Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences of the United States of America, 99(14), 9602–5.

- Fox E. (2005). The role of visual processes in modulating social interactions. Visual Cognition, 12(1), 1–11.

- George N., Driver J., Dolan R.J. (2001). Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage, 13(6 Pt 1), 1102–12.

- Graham R., Labar K.S. (2012). Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia, 50(5), 553–66.

- Grossmann T., Johnson M.H., Farroni T., Csibra G. (2007). Social perception in the infant brain: gamma oscillatory activity in response to eye gaze. Social Cognitive and Affective Neuroscience, 2(4), 284–91.

- Hadjikhani N., Hoge R., Snyder J., de Gelder B. (2008). Pointing with the eyes: the role of gaze in communicating danger. Brain and Cognition, 68(1), 1–8.

- Harter M.R., Miller S.L., Price N.J., Lalonde M.E., Keyes A.L. (1989). Neural processes involved in directing attention. Journal of Cognitive Neuroscience, 1(3), 223–37.

- Hoffman E.A., Haxby J.V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience, 3(1), 80–4.

- Holmes A., Vuilleumier P., Eimer M. (2003). The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Brain Research Cognitive Brain Research, 16(2), 174–84.

- Hopf J.M., Mangun G.R. (2000). Shifting visual attention in space: an electrophysiological analysis using high spatial resolution mapping. Clinical Neurophysiology, 111(7), 1241–57.

- Itier R.J., Alain C., Kovacevic N., McIntosh A.R. (2007a). Explicit versus implicit gaze processing assessed by ERPs. Brain Research, 1177, 79–89.

- Itier R.J., Batty M. (2009). Neural bases of eye and gaze processing: the core of social cognition. Neuroscience and Biobehavioral Reviews, 33(6), 843–63.

- Itier R.J., Latinus M., Taylor M.J. (2006). Face, eye and object early processing: what is the face specificity? Neuroimage, 29(2), 667–76.

- Itier R.J., Villate C., Ryan J.D. (2007b). Eyes always attract attention but gaze orienting is task-dependent: evidence from eye movement monitoring. Neuropsychologia, 45(5), 1019–28.

- Jemel B., Pisani M., Calabria M., Crommelinck M., Bruyer R. (2003). Is the N170 for faces cognitively penetrable? Evidence from repetition priming of Mooney faces of familiar and unfamiliar persons. Brain Research Cognitive Brain Research, 17(2), 431–46.

- Jongen E.M., Smulders F.T., Van der Heiden J.S. (2007). Lateralized ERP components related to spatial orienting: discriminating the direction of attention from processing sensory aspects of the cue. Psychophysiology, 44(6), 968–86.

- Kawashima R., Sugiura M., Kato T., et al. (1999). The human amygdala plays an important role in gaze monitoring. A PET study. Brain, 122(Pt 4), 779–83.

- Langton S.R., Watt R.J., Bruce V. (2000). Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Sciences, 4(2), 50–9.

- Latinus M., Taylor M.J. (2006). Face processing stages: impact of difficulty and the separation of effects. Brain Research, 1123(1), 179–87.

- McDonald J.J., Teder-Salejarvi W.A., Di Russo F., Hillyard S.A. (2003). Neural substrates of perceptual enhancement by cross-modal spatial attention. Journal of Cognitive Neuroscience, 15(1), 10–9.

- Myllyneva A., Hietanen J.K. (2015). There is more to eye contact than meets the eye. Cognition, 134, 100–9.

- Nemrodov D., Itier R.J. (2011). The role of eyes in early face processing: a rapid adaptation study of the inversion effect. British Journal of Psychology, 102(4), 783–98.

- Nobre A.C., Gitelman D.R., Dias E.C., Mesulam M.M. (2000). Covert visual spatial orienting and saccades: overlapping neural systems. Neuroimage, 11(3), 210–16.

- Nummenmaa L., Calder A.J. (2009). Neural mechanisms of social attention. Trends in Cognitive Sciences, 13(3), 135–43.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

- Pernet C.R., Chauveau N., Gaspar C., Rousselet G.A. (2011). LIMO EEG: a toolbox for hierarchical LInear MOdeling of ElectroEncephaloGraphic data. Computational Intelligence and Neuroscience, 2011, 831409.

- Ponkanen L.M., Alhoniemi A., Leppanen J.M., Hietanen J.K. (2011). Does it make a difference if I have an eye contact with you or with your picture? An ERP study. Social Cognitive and Affective Neuroscience, 6(4), 486–94.

- Posner M.I., Snyder C.R., Davidson B.J. (1980). Attention and the detection of signals. Journal of Experimental Psychology: General, 109(2), 160–74.

- Pourtois G., Grandjean D., Sander D., Vuilleumier P. (2004). Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex, 14(6), 619–33.

- Puce A., Allison T., Bentin S., Gore J.C., McCarthy G. (1998). Temporal cortex activation in humans viewing eye and mouth movements. The Journal of Neuroscience, 18(6), 2188–99.

- Puce A., Perrett D. (2003). Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 358(1431), 435–45.

- Puce A., Schroeder C.E. (2010). Multimodal studies using dynamic faces. In: Curio C., Bulthoff H.H., Giese M.A., editors. Dynamic Faces: Insights from Experiments and Computation. Cambridge, MA: MIT Press, 123–40.

- Puce A., Smith A., Allison T. (2000). ERPs evoked by viewing facial movements. Cognitive Neuropsychology, 17, 221–39.

- Schweinberger S.R., Kloth N., Jenkins R. (2007). Are you looking at me? Neural correlates of gaze adaptation. Neuroreport, 18(7), 693–6.

- Senju A., Hasegawa T. (2005). Direct gaze captures visuospatial attention. Visual Cognition, 12(1), 127–44.

- Senju A., Johnson M.H. (2009). The eye contact effect: mechanisms and development. Trends in Cognitive Sciences, 13(3), 127–34.

- Straube T., Langohr B., Schmidt S., Mentzel H.J., Miltner W.H. (2010). Increased amygdala activation to averted versus direct gaze in humans is independent of valence of facial expression. Neuroimage, 49(3), 2680–6.

- Taylor M.J., Edmonds G.E., McCarthy G., Allison T. (2001a). Eyes first! Eye processing develops before face processing in children. Neuroreport, 12(8), 1671–6.

- Taylor M.J., Itier R.J., Allison T., Edmonds G.E. (2001b). Direction of gaze effects on early face processing: eyes-only versus full faces. Brain Research Cognitive Brain Research, 10(3), 333–40.

- Ulloa J.L., Puce A., Hugueville L., George N. (2014). Sustained neural activity to gaze and emotion perception in dynamic social scenes. Social Cognitive and Affective Neuroscience, 9(3), 350–7.

- Vuilleumier P., George N., Lister V., Armony J., Driver J. (2005). Effects of perceived mutual gaze and gender on face processing and recognition memory. Visual Cognition, 12(1), 85–101.

- Watanabe S., Miki K., Kakigi R. (2002). Gaze direction affects face perception in humans. Neuroscience Letters, 325(3), 163–6.

- Wicker B., Michel F., Henaff M.A., Decety J. (1998). Brain regions involved in the perception of gaze: a PET study. Neuroimage, 8(2), 221–7.

- Yamaguchi S., Tsuchiya H., Kobayashi S. (1994). Electroencephalographic activity associated with shifts of visuospatial attention. Brain, 117(Pt 3), 553–62.