Abstract

BACKGROUND

The quality of cancer care has become a national priority; however, there are few ongoing efforts to assist medical oncology practices in identifying areas for improvement. The Florida Initiative for Quality Cancer Care is a consortium of 11 medical oncology practices that evaluates the quality of cancer care across Florida. Within this practice-based system of self-assessment, we determined adherence to colorectal cancer quality of care indicators (QCIs) in 2006, disseminated results to each practice and reassessed adherence in 2009. The current report focuses on evaluating the direction and magnitude of change in adherence to QCIs for colorectal cancer patients between the 2 assessments.

STUDY DESIGN

Medical records were reviewed for all colorectal cancer patients seen by a medical oncologist in 2006 (n = 489) and 2009 (n = 511) at 10 participating practices. Thirty-five indicators were evaluated individually and changes in QCI adherence over time and by site were examined.

RESULTS

Significant improvements were noted from 2006 to 2009, with large gains in surgical/pathological QCIs (eg, documenting rectal radial margin status, lymphovascular invasion, and the review of ≥12 lymph nodes) and medical oncology QCIs (documenting planned treatment regimen and providing recommended neoadjuvant regimens). Documentation of perineural invasion and radial margins significantly improved; however, adherence remained low (47% and 71%, respectively). There was significant variability in adherence for some QCIs across institutions at follow-up.

CONCLUSIONS

The Florida Initiative for Quality Cancer Care practices conducted self-directed quality-improvement efforts during a 3-year interval and overall adherence to QCIs improved. However, adherence remained low for several indicators, suggesting that organized improvement efforts might be needed for QCIs that remained consistently low over time. Findings demonstrate how efforts such as the Florida Initiative for Quality Cancer Care are useful for evaluating and improving the quality of cancer care at a regional level.

The importance of quality of care for cancer patients was highlighted by the Institute of Medicine report recommending that cancer care quality be monitored using a core set of quality of care indicators (QCIs).1 The QCIs can encompass structural, process, and outcomes measures1; however, process QCIs have several advantages, such as being closely related to outcomes, easily modifiable, and providing clear guidance for quality-improvement efforts.2 The American Society of Clinical Oncology established the National Initiative for Cancer Care Quality (NICCQ) to develop and test a validated set of core process QCIs3,4 and the Quality Oncology Practice Initiative (QOPI) to conduct ongoing assessments of these QCIs within individual practices.5-9 Since 2006, QOPI has been an opportunity for oncology practices to participate in practice-based quality of care self-assessments that have identified areas in need of improvement.9,10 Although QOPI has been successful at improving performance within QOPI sites,8,10 improvement of cancer care outside of QOPI might require local or regional efforts that are physician or practice driven.1

The Florida Initiative for Quality Cancer Care (FIQCC) consortium was established in 2004 with the overall goal of evaluating and improving the quality of cancer care at the regional level in Florida.11-15 Based on a collaborative approach, all FIQCC sites participated in identifying quality measures for breast, colorectal, and non–small cell lung cancer consistent with evidence-, consensus-, and safety-based guidelines that could be abstracted from medical records.4,9,16-21 Using standardized methods, medical records of breast, colorectal, and non–small cell lung cancer patients first seen by a medical oncologist at 11 participating practices in 2006 were abstracted to measure adherence to QCIs.13,22,23 All results were then shared and individual practices were charged with implementing site-specific quality-improvement efforts in areas where performance lagged. Using identical procedures to select cases across the 3 cancer types and measure quality, 10 of the 11 founding practices conducted a second round of medical record abstractions for patients first seen by a medical oncologist in 2009. The current report focuses on 35 QCIs for colorectal cancer (CRC). The objectives were to examine the overall difference in adherence between the 2 assessments, to determine if the change over time was independent of other factors (such as payor mix), and to determine if there was variability in change across practice sites.

METHODS

Study sites

The FIQCC was founded with 11 medical oncology practices in Florida at or affiliated with Space Coast Medical Associates (Titusville), North Broward Medical Center (Pompano Beach), Center for Cancer Care and Research (Lakeland), Florida Cancer Specialists (Sarasota), Ocala Oncology Center (Ocala), Robert & Carol Weissman Cancer Center (Stuart), Cancer Centers of Florida (Orlando), Tallahassee Memorial Cancer Center (Tallahassee), University of Florida Shands Cancer Center (Gainesville), Mayo Clinic Cancer Center (Jacksonville), and Moffitt Cancer Center (Tampa) (Appendix Figure 1, online only).15,24 Each practice met the following criteria for initial participation in the initiative: medical oncology services provided by more than one oncologist; availability of a medical record abstractor; and estimate of ≥40 cases each of colorectal, breast, and non–small cell lung cancer for calendar year 2006. Ten of these practices still met eligibility criteria and were willing to participate in the 2009 abstraction of cases. The project received approval from Institutional Review Boards at each institution. Based on exempt status, informed consent from patients was not required to access medical records. To maintain patient privacy, records were coded with a unique project identifier before transmission to the central data-management site.

Quality of care indicators

Representatives from the 11 oncology sites participating in FIQCC identified quality measures consistent with evidence-, consensus-, and safety-based guidelines that could be abstracted from medical records of breast, colorectal, and non–small cell lung cancer patients. As part of this process, oncology experts from Moffitt Cancer Center and collaborating sites met monthly for 4 months to formulate or select indicators based on group input as well as American Society of Clinical Oncology guidelines,16 National Comprehensive Cancer Network guidelines,17-19 National Quality Forum,20 American College of Surgeons,21 American Society of Clinical Oncology QOPI,9 and NICCQ indicators.4 Consensus from the principal investigator and co-investigators at Moffitt Cancer Center and the site principal investigators was required for a QCI to be used in the study. The resulting indicators were organized by diagnosis (breast cancer, colorectal cancer, and non–small cell lung cancer), as well as by domains of care (eg, symptom management). In this article, we focus only on results of 35 QCIs based on abstraction of charts for CRC patients (Appendix Table 1, online only).

Medical record selection and review

Medical chart reviews were conducted on all patients diagnosed with CRC with a medical oncology appointment in 2006 and 2009. Patients 18 years old or younger or those diagnosed with anal/rectosigmoid carcinoma, synchronous, or nonadenocarcinoma malignancies were excluded. Chart review and quality-control procedures were conducted throughout the study as reported previously.14,15,25 In brief, the chief medical record abstractor conducted on-site training of data abstractors at each site using a comprehensive abstraction manual. Abstraction did not begin until an inter-rater agreement was 70% on 5 consecutive cases. Quality control of the data retrieved was maintained through 2 audits, which were performed by the abstractor trainer when each site had completed abstraction of one third and two thirds of the total number of charts for review.

Disclosure of 2006 findings/initiation of quality-improvement plans

In June 2007, a conference was held with representatives from each consortium site to discuss results of the 2006 chart abstraction of CRC cases. Each consortium site was given a unique site name (eg, site A) and all results were revealed in a blinded fashion, with each site knowing only its own identity. Particular attention was given to performance indicators with <85% adherence and those quality indicators with considerable variance in performance among the sites. Potential explanations for variance among the sites, such as age distribution of a site's patient population, community vs academic center, and large vs small volume practice, were analyzed and discussed.13,22,23 After all results were disclosed, each site representative had the opportunity to present suggestions for improvements and describe what works well in their practice. The conference concluded with the future strategic plan that the representative from each site would disclose these results to their respective practices. Each site was also encouraged to develop and implement a quality-improvement plan for any performance indicators with <85% adherence. Site representatives were informed that the same survey would be repeated for cases first seen in 2009 to assess changes in all indicators.

Statistical analysis

Statistical comparisons in case characteristics between 2006 and 2009 were made with the Pearson chi-square exact test, using Monte Carlo estimation. Descriptive statistics and graphical illustrations were used to summarize all indicator variables. The adherence proportion with its 95% confidence interval was calculated based on the exact binomial distribution. Statistical comparisons between 2006 and 2009 data and across practice sites were also made with the Pearson chi-square exact test. Multivariable logistic regression models were used to determine if the effects of time (2006 vs 2009) on adherence to QCIs were independent of practice site. The interaction between practice site and time was tested in logistic regression models to evaluate whether the change over time varied by practice site. Demographic variables that differed significantly between the 2 time points were also included in the logistic regression models as potential confounders. Firth's penalized maximum likelihood approach was used to fit the logistic regression models for small sample sizes.26,27 A 2-sided p value <0.05 was considered significant. All analyses were conducted using SAS 9.3 (SAS Institute Inc.).
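An exact binomial confidence interval of the kind described above is commonly obtained with the Clopper-Pearson method. The following Python sketch assumes that method; the source states only that the exact binomial distribution was used and gives no code, and the analyses themselves were run in SAS. The function names `binom_cdf` and `clopper_pearson` are illustrative, and the example counts (161 adherent of 340 eligible) come from the 2009 perineural invasion row of Table 2.

```python
import math
from typing import Callable, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), with terms computed in log space."""
    if k < 0:
        return 0.0
    if k >= n:
        return 1.0
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    total = 0.0
    for i in range(k + 1):
        log_term = (
            math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
            + i * math.log(p) + (n - i) * math.log1p(-p)
        )
        total += math.exp(log_term)
    return min(total, 1.0)


def _bisect_decreasing(f: Callable[[float], float], iterations: int = 100) -> float:
    """Root of a function that decreases from positive to negative on [0, 1]."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Two-sided exact interval for x adherent charts among n eligible charts."""
    half = alpha / 2.0
    # Lower limit solves P(X >= x | p) = alpha/2; it is 0 when x == 0.
    lower = 0.0 if x == 0 else _bisect_decreasing(
        lambda p: half - (1.0 - binom_cdf(x - 1, n, p)))
    # Upper limit solves P(X <= x | p) = alpha/2; it is 1 when x == n.
    upper = 1.0 if x == n else _bisect_decreasing(
        lambda p: binom_cdf(x, n, p) - half)
    return lower, upper


# Example: perineural invasion documented in 161 of 340 eligible charts (2009).
low, high = clopper_pearson(161, 340)
print(f"adherence = {161 / 340:.1%}, 95% CI = ({low:.1%}, {high:.1%})")
```

Bisection on the binomial tail probabilities keeps the sketch free of third-party dependencies; statistical packages usually compute the same limits from beta distribution quantiles.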

RESULTS

Case characteristics

A total of 1,000 CRC cases from 10 FIQCC sites were included in this analysis (489 reviewed in 2006 and 511 in 2009). The number of cases reviewed at each site ranged from 31 to 97 in 2006 and 27 to 98 in 2009. Table 1 presents the characteristics of the 1,000 CRC cases evaluated in this study. Mean age of patients did not differ between 2006 and 2009 (p = 0.73; mean ± SD 64.2 ± 12.7 years in 2006 and 63.2 ± 13.1 years in 2009). A majority of the patients were male (52%) and white (80%), with no statistical differences between the 2 assessments. There was a significant difference in the distribution of health insurance when comparing 2006 and 2009 (p < 0.001). Compared with 2006, the number of patients receiving charity care or covered by Medicaid was higher in 2009, and the other insurance types were decreased. Overall, of the 743 colon and 200 rectal cases with documented stage, there were no differences in the distribution of pathologically confirmed stage between the 2 assessments.

Table 1.

Characteristics of 1,000 Colorectal Cancer Cases within Florida Initiative for Quality Cancer Care Consortium

| Variable | Total (n = 1,000), n | Total, % | 2006 Cases (n = 489), n | 2006 Cases, % | 2009 Cases (n = 511), n | 2009 Cases, % | p Value* |
|---|---|---|---|---|---|---|---|
| Age, y | | | | | | | 0.725 |
| 55 or younger | 268 | 26.8 | 127 | 26 | 141 | 27.6 | |
| 56–65 | 262 | 26.2 | 124 | 25.4 | 138 | 27 | |
| 66–75 | 268 | 26.8 | 133 | 27.2 | 135 | 26.4 | |
| Older than 75 | 202 | 20.2 | 105 | 21.5 | 97 | 19 | |
| Sex | | | | | | | 0.314 |
| Female | 483 | 48.3 | 228 | 46.6 | 255 | 49.9 | |
| Male | 517 | 51.7 | 261 | 53.4 | 256 | 50.1 | |
| Race | | | | | | | 0.382 |
| White | 798 | 79.8 | 384 | 78.5 | 414 | 81 | |
| Black | 86 | 8.6 | 41 | 8.4 | 45 | 8.8 | |
| American Indian | 3 | 0.3 | 0 | 0 | 3 | 0.6 | |
| Asian | 10 | 1 | 6 | 1.2 | 4 | 0.8 | |
| Missing | 103 | 10.3 | 58 | 11.9 | 45 | 8.8 | |
| Health insurance | | | | | | | <0.0001 |
| Private | 374 | 37.4 | 191 | 39.1 | 183 | 35.8 | |
| Medicare | 507 | 50.7 | 252 | 51.5 | 255 | 49.9 | |
| Medicaid/charity | 79 | 7.9 | 20 | 4.1 | 59 | 11.5 | |
| None/unclassified | 40 | 4 | 26 | 5.3 | 14 | 2.7 | |
| Pathological stage | | | | | | | |
| Colon | 743 | 74.3 | 364 | 74.4 | 379 | 74.2 | 0.140 |
| Stage I | 61 | 6.1 | 20 | 4.1 | 41 | 8 | |
| Stage II | 162 | 16.2 | 80 | 16.4 | 82 | 16 | |
| Stage III | 288 | 28.8 | 145 | 29.7 | 143 | 28 | |
| Stage IV | 232 | 23.2 | 119 | 24.3 | 113 | 22.1 | |
| Rectal | 200 | 20 | 89 | 18.2 | 111 | 21.7 | |
| Stage I | 21 | 2.1 | 11 | 2.2 | 10 | 2 | |
| Stage II or III | 123 | 12.3 | 51 | 10.4 | 72 | 14.1 | |
| Stage IV | 56 | 5.6 | 27 | 5.5 | 29 | 5.7 | |
| Missing† | 57 | 5.7 | 36 | 7.4 | 21 | 4.1 | |

*p Values were calculated using the chi-square test (exact method with Monte Carlo estimation); the missing level was excluded from the p value calculation.

†57 cases did not have a pathology report documenting stage.

Surgical and pathology quality indicators change over time

Table 2 presents the mean adherence and percent change for surgical and pathological quality indicators in 2006 and 2009. A similar proportion of CRCs was detected by colonoscopy screening at both time points (14.5% and 17.6% in 2006 and 2009, respectively; p = 0.200). Adherence was high in 2006 for documentation of tumor stage (American Joint Committee on Cancer, Dukes, or TNM status), and there was a statistically significant increase in 2009 (3.3%; p = 0.029). The percentage of cases that had a barium enema or colonoscopy within 6 months before or after surgical resection to rule out synchronous lesions18,19 was significantly lower in 2009 by 5.9% (p = 0.025).

Table 2.

Percent Change in Adherence to Surgical and Pathological Colorectal Cancer Quality Indicators between 2006 and 2009

| Indicator | 2006 Cases: adherence, % | 2006 Cases: n/eligible cases | 2009 Cases: adherence, % | 2009 Cases: n/eligible cases | Change, %* | p Value† |
|---|---|---|---|---|---|---|
| CRC detection by screening | 14.5 | 71/489 | 17.6 | 90/511 | 3.1 | 0.200 |
| Documentation of staging | 92.6 | 453/489 | 95.9 | 490/511 | 3.3 | 0.029 |
| Barium enema or colonoscopy within 6 months | 86.7 | 345/398 | 80.8 | 366/453 | –5.9 | 0.025 |
| Copy of pathology report in chart | 98.7 | 303/307 | 97.7 | 340/348 | –1.0 | 0.394 |
| Depth of invasion (T level) | 95.4 | 289/303 | 97.6 | 332/340 | 2.3 | 0.137 |
| Lymphovascular invasion | 74.9 | 227/303 | 88.8 | 302/340 | 13.9 | <0.001 |
| Perineural invasion | 36.3 | 110/303 | 47.4 | 161/340 | 11.0 | 0.005 |
| Tumor differentiation (grade) | 92.4 | 280/303 | 96.2 | 327/340 | 3.8 | 0.043 |
| Radial margin for rectal cancer | 41.7 | 25/60 | 70.9 | 56/79 | 29.2 | <0.001 |
| Distal and proximal margin status | 96.7 | 293/303 | 96.8 | 329/340 | 0.1 | 1.000 |
| Removal of LNs | 96.4 | 292/303 | 97.6 | 332/340 | 1.3 | 0.364 |
| ≥12 LNs examined for nonmetastatic colon cancer | 73.0 | 176/241 | 84.7 | 221/261 | 11.6 | 0.002 |
| Serum CEA pretreatment for nonmetastatic CRC | 78.5 | 241/307 | 79.0 | 275/348 | 0.5 | 0.925 |
| Serum CEA post-treatment for nonmetastatic CRC | 81.8 | 251/307 | 84.2 | 293/348 | 2.4 | 0.466 |

*Difference in percent adherence between 2009 and 2006.

†Exact Pearson chi-square p values computed using Monte Carlo estimation.

CRC, colorectal cancer; LN, lymph node.

Medical oncology practices were consistently highly adherent (98.7% in 2006 and 97.7% in 2009) in maintaining a copy of the surgical pathology reports confirming malignancy in their office charts over time. Pathology reports maintained high adherence for the documentation of depth of tumor invasion, grade, and status of resection margins. In 2006, lymphovascular (LVI) and perineural invasion (PNI) were documented in 74.9% and 36.3% of charts, respectively. In 2009, there was a significant 13.9% and 11% increase in the documentation of LVI and PNI, respectively; however, the overall adherence in 2009 was still only 47.4% for PNI. Notably, the largest increase observed for surgical QCIs was in the reporting of radial margin status for nonmetastatic rectal cancer patients. In 2006, adherence was 41.7%; however, in 2009, adherence increased to 70.9% (29.2% increase; p < 0.001). A significant improvement was observed for the assessment of ≥12 lymph nodes (LNs) in 2009 compared with 2006 (11.6% change; p = 0.002) among nonmetastatic colon cancer patients. Among nonmetastatic CRC patients who had surgery, assessment of CEA before treatment occurred in 79% of patients in both 2006 and 2009 (p = 0.925). Adherence was slightly better in 2009 for CEA assessment 6 months post treatment (81.8% in 2006 and 84.2% in 2009); however, there was no significant change over time (p = 0.466).
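The Monte Carlo estimate of an exact Pearson chi-square p value, as used for the comparisons in Table 2, can be illustrated with a short resampling sketch. This is not the SAS procedure the analysis used: the function names, the number of resamples (`n_sim`), the seed, and the add-one correction in the estimate are all assumptions made for illustration. The counts are taken from the lymphovascular invasion row of Table 2 (227/303 in 2006, 302/340 in 2009).

```python
import random


def chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square statistic for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        return 0.0
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)


def monte_carlo_p(adh_2006: int, n_2006: int, adh_2009: int, n_2009: int,
                  n_sim: int = 2000, seed: int = 0) -> float:
    """Estimate the exact p value by resampling tables with fixed margins."""
    rng = random.Random(seed)
    observed = chi_square_2x2(adh_2006, n_2006 - adh_2006,
                              adh_2009, n_2009 - adh_2009)
    # 1 = adherent chart, 0 = non-adherent chart, pooled over both years.
    total_adherent = adh_2006 + adh_2009
    pooled = [1] * total_adherent + [0] * (n_2006 + n_2009 - total_adherent)
    extreme = 0
    for _ in range(n_sim):
        rng.shuffle(pooled)
        a = sum(pooled[:n_2006])      # adherent charts randomly assigned to 2006
        c = total_adherent - a        # the remainder fall in 2009
        stat = chi_square_2x2(a, n_2006 - a, c, n_2009 - c)
        if stat >= observed - 1e-12:  # at least as extreme as the observed table
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_sim + 1)


# Example: lymphovascular invasion documentation, 2006 vs 2009.
print(f"estimated p = {monte_carlo_p(227, 303, 302, 340):.4f}")
```

With a finite number of resamples the smallest reportable estimate is 1/(n_sim + 1), so a very small p value such as the one for this row can only be bounded by the sketch, not pinned down.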

Medical and radiation oncology quality indicators change over time

At both time points, there was documentation in the chart that medical oncologists considered 95% of eligible patients for chemotherapy treatment (Table 3). Among 642 CRC patients who received chemotherapy treatment (n = 308 in 2006 and n = 334 in 2009), adherence was 99% at both time points for documenting body surface area and 87% and 90% in 2006 and 2009, respectively, for having chemotherapy flow sheets in the medical records. The documentation of informed consent for treatment changed from 69.2% in 2006 to 72.2% in 2009; however, this change was not statistically significant (p = 0.433). In 2009, 67.7% of charts had the patient's planned treatment regimen dose (eg, name of drug and number of cycles) documented, which was a significant increase of 9.5% from 2006 (p = 0.017). Medical oncologists in 2009 were more likely to document a planned dose of chemotherapy that fell within a range consistent with recommended regimens compared with 2006 (82.8% vs 66.7%, respectively; p = 0.004). The percentage of patients treated with recommended adjuvant chemotherapy for nonmetastatic CRC increased from 83.3% in 2006 to 98.5% in 2009 (15.2% increase; p < 0.001). However, there were no improvements in providing adjuvant chemotherapy within 8 weeks post surgery (67.7% in 2006 and 62.1% in 2009; p = 0.287). Among the nonmetastatic rectal cancer cases (n = 32 in 2006 and n = 55 in 2009), there was no significant difference over time in the proportion of patients receiving recommended drug regimens for neoadjuvant chemotherapy (31.3% in 2006 vs 49.1% in 2009; p = 0.121) or in the initiation of treatment within 8 weeks of a positive biopsy (87.5% in 2006 and 76.4% in 2009; p = 0.263).
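The changes reported here and in Table 3 are absolute differences in percent adherence (percentage points), not relative changes. The sketch below recomputes three of them from the n/eligible counts in Table 3. The function name `adherence_change` is illustrative, and the assumption that the difference is taken before rounding is inferred from the table (for example, 58.1% to 67.7% is reported as a 9.5 change), not stated in the source.

```python
from typing import Tuple


def adherence_change(adh_2006: int, n_2006: int,
                     adh_2009: int, n_2009: int) -> Tuple[float, float, float]:
    """Return (2006 %, 2009 %, change in percentage points), rounded to 1 decimal.

    The change is computed from the unrounded percentages and rounded last.
    """
    pct_2006 = 100.0 * adh_2006 / n_2006
    pct_2009 = 100.0 * adh_2009 / n_2009
    return round(pct_2006, 1), round(pct_2009, 1), round(pct_2009 - pct_2006, 1)


# Counts (adherent/eligible) taken from Table 3.
rows = {
    "Accepted adjuvant regimen (nonmetastatic)": (155, 186, 195, 198),
    "Adjuvant chemotherapy within 8 weeks": (126, 186, 123, 198),
    "Documented planned treatment dose": (179, 308, 226, 334),
}
for label, counts in rows.items():
    p06, p09, change = adherence_change(*counts)
    print(f"{label}: {p06}% -> {p09}% (change {change:+.1f} points)")
```

Rounding after subtraction explains why a reported change can differ by 0.1 from the difference of the two rounded percentages shown in the same row.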

Table 3.

Percent Change in Adherence to Medical and Radiation Oncology Colorectal Cancer Quality Indicators between 2006 and 2009

| Indicator | 2006 Cases: adherence, % | 2006 Cases: n/eligible cases | 2009 Cases: adherence, % | 2009 Cases: n/eligible cases | Change, %* | p Value† |
|---|---|---|---|---|---|---|
| Medical oncology indicators | | | | | | |
| Consideration of adjuvant chemotherapy (all stages) | 94.8 | 400/422 | 94.8 | 416/439 | 0.0 | 1.000 |
| Explanation for not considering for treatment (all stages) | 95.5 | 21/22 | 73.9 | 17/23 | –21.5 | 0.096 |
| Consent for chemotherapy | 69.2 | 213/308 | 72.2 | 241/334 | 3.0 | 0.433 |
| Flow sheet for chemotherapy | 86.7 | 267/308 | 90.4 | 302/334 | 3.7 | 0.174 |
| Documentation of BSA | 99.4 | 306/308 | 99.7 | 333/334 | 0.3 | 0.621 |
| Documented planned adjuvant chemotherapy treatment dose | 58.1 | 179/308 | 67.7 | 226/334 | 9.5 | 0.017 |
| Planned dose and cycles acceptable for chemotherapy regimens (nonmetastatic) | 66.7 | 70/105 | 82.8 | 111/134 | 16.2 | 0.004 |
| Treated with accepted regimen of adjuvant chemotherapy (nonmetastatic) | 83.3 | 155/186 | 98.5 | 195/198 | 15.2 | <0.001 |
| Adjuvant chemotherapy within 8 weeks (nonmetastatic) | 67.7 | 126/186 | 62.1 | 123/198 | –5.6 | 0.287 |
| Accepted regimen of neoadjuvant chemotherapy (nonmetastatic rectal cancer) | 31.3 | 10/32 | 49.1 | 27/55 | 17.8 | 0.121 |
| Receipt of neoadjuvant chemotherapy within 8 weeks of diagnosis (nonmetastatic rectal cancer) | 87.5 | 28/32 | 76.4 | 42/55 | –11.1 | 0.263 |
| Radiation oncology indicators for rectal cancer | | | | | | |
| Consideration of radiation therapy | 100 | 51/51 | 97.2 | 70/72 | –2.8 | 0.506 |
| Consultation with radiation oncologist | 92.2 | 47/51 | 98.6 | 69/70 | 6.4 | 0.160 |
| Receipt of neoadjuvant radiation treatment | 80.9 | 38/47 | 85.5 | 59/69 | 4.7 | 0.615 |
| Receipt of adjuvant radiation treatment | 21.3 | 10/47 | 14.5 | 10/69 | –6.8 | 0.456 |
| Accepted dosage of radiation treatment | 70.2 | 33/47 | 87.0 | 60/69 | 16.7 | 0.033 |

*Difference in percent adherence between 2009 and 2006.

†Exact Pearson chi-square p values computed using Monte Carlo estimation.

BSA, body surface area.

Adherence to radiation oncology quality indicators for rectal cancer patients is also presented in Table 3. The vast majority of patients (>98.4%) with locally advanced rectal cancer (T3 and/or node positive) who had surgery (n = 123) were referred for and received radiation treatment. The percentage of patients who received neoadjuvant radiation instead of adjuvant radiation increased from 80.9% in 2006 to 85.5% in 2009; however, this did not reach statistical significance (p = 0.615). Adherence improved significantly from 70.2% in 2006 to 87.0% in 2009 (p = 0.033) for delivery of a recommended radiation therapy regimen.

Multivariable logistic regression models were used to determine if the changes over time in adherence to QCIs were independent of payor mix, which differed over time. Overall, significant differences in adherence to QCIs in 2009 compared with 2006 remained after adjusting for differences in payor mix between the 2 time points. Exceptions were that the change in adherence between 2006 and 2009 became marginally significant for documentation of stage (p = 0.066) and delivery of a recommended radiation therapy regimen (p = 0.062) (data not shown).

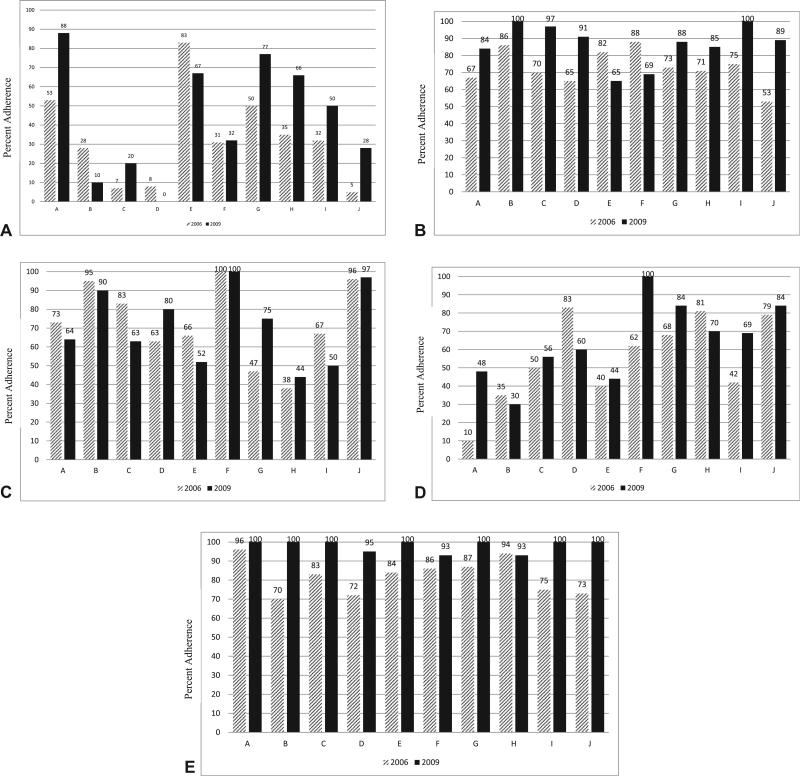

Variability in adherence across practice sites

To examine if adherence changes over time were consistent across the 10 medical oncology practice sites, adherence was visualized graphically (Fig. 1) and an interaction term of practice site and time was tested in logistic regression models (data not shown). There was variability in the magnitude and direction of the change in adherence over time for some QCIs across practice sites. As demonstrated in Figure 1A, documentation of PNI in 2006 and 2009 varied by oncology practice, with change within a site ranging from a decrease of 17.8% to an increase of 34.6%. This variability in adherence over time across sites was statistically significant in logistic regression modeling of the interaction between time and practice site (p = 0.01, data not shown). In contrast, the change for documenting LVI was consistent across oncology practices (site by time interaction, p = 0.473). The magnitude of the change in adherence to evaluating ≥12 LNs in 2009 varied by oncology practice (site by time interaction, p = 0.008; Fig. 1B). Overall, there was not a significant change in adherence for documentation of informed consent for treatment in 2009; however, there was significant variability in adherence over time by oncology practice (site by time interaction, p = 0.041). As evident in Figure 1C, adherence for documenting informed consent increased at some practice sites (eg, site G had the largest change of 28%) and decreased at other sites (eg, site C had a −20% change). The percent change in adherence between 2006 and 2009 in the documentation of planned chemotherapy dose varied by oncology practice (site by time interaction, p < 0.001) for both the magnitude and direction of the change (range of change −23% to 38%; Fig. 1D). In contrast, the increases in adherence with documenting planned dose and cycles that fell within accepted guidelines and with treating with accepted regimens (Fig. 1E) were consistent across all sites (site by time interaction, p = 0.778 and p = 0.795, respectively).

Figure 1.

Variability in adherence to select quality of care indicators over time across medical oncology practices. The magnitude of the change in adherence from 2006 (hatched bars) to 2009 (black bars) varied significantly across medical oncology practice sites for (A) perineural invasion (site by time interaction, p < 0.001), (B) examination of ≥12 lymph nodes (site by time interaction, p ≤ 0.001), (C) informed consent (site by time interaction, p = 0.04), and (D) documentation of planned chemotherapy regimen (site by time interaction, p < 0.001); however, (E) treatment with approved chemotherapy regimens improved consistently across sites (site by time interaction, p = 0.80).

DISCUSSION

The FIQCC conducted successive quality of cancer care assessments within 10 medical oncology practices in Florida. Overall, we observed numerous improvements in adherence to quality indicators during the 3-year interval. The success of this program highlights the dedication of each medical oncology practice site to conduct self-directed improvement efforts. In addition, improvements in surgical, pathology, and radiation oncology QCIs provide evidence that medical oncology practices serve as a good hub for quality of cancer care evaluation and can be relied on to disseminate findings to other specialties, such as pathology and surgery.

The FIQCC monitored and reported on adherence to QCIs at each site, and the individual practice sites took the lead on quality-improvement efforts. As outlined in the methods, FIQCC conferences included reporting of blinded results and practice site discussions about quality initiatives and/or targets for improvement. Consistently across practice sites, results were shared with respective Institutional Quality Review committees (either established or inaugural) and/or at multidisciplinary tumor board meetings. Therefore, improvement efforts were not only practice driven, but also physician driven. For example, both pathologists and surgeons took the initiative to improve communication and documentation of LN harvesting within one FIQCC site. Practice-driven improvement plans included development of treatment consent processes, altering pathology reporting to include missing elements, or improving CEA testing. However, some sites did report challenges in implementing improvement efforts for some QCIs, including a lack of control to select external pathology services due to insurance requirements, no access to the College of American Pathologists synoptic pathology-reporting templates, and institutional delays in implementing informed consent for treatment. The individual practice-driven improvement efforts, as well as challenges encountered, might explain, in part, the variability in improvements by practice site for some QCIs.

Auditing and monitoring the completeness of pathology reports has been reported to improve report quality.28-31 Therefore, it is possible that the monitoring completed as part of FIQCC might have had an impact on the improvements in pathology report quality in 2009 after the 2006 assessment. For pathological QCIs, there was a significant increase in the documentation of LVI and PNI in 2009, with documentation of LVI now surpassing the adherence standard of 85%. However, overall adherence for PNI was still only 47%, and the percent change was inconsistent across practice sites. Although use of synoptic pathology reporting might have contributed to the increased documentation, PNI remains an optional field; therefore, although PNI appears to be an important predictor of prognosis,32 some pathologists continued not to document this optional parameter. Another QCI that has been shown to increase in documentation with synoptic pathologic reporting was the status of radial margins.33 In 2009, the largest increase observed for surgical and pathological QCIs was in the reporting of radial margin status for nonmetastatic rectal cancer patients. However, with 71% adherence, there is still a need to improve adherence to meet the standard benchmark of 85% and achieve adherence similar to previous reports.33 Rectal radial margin status is highly predictive of local recurrence34 and continued improvements in adherence to this QCI should lead to increased identification of patients at risk of recurrence and potentially improved patient outcomes.

The adequate harvest of LNs among nonmetastatic colon cancer patients improves staging and leads to improved outcomes.35,36 Although the significance of the 12-node benchmark remains controversial,37,38 the National Quality Forum has supported this cut point as a QCI20 and it was selected as a required quality benchmark by the American College of Surgeons for hospital accreditation in 2014.39 During a 3-year period of time, we observed considerable improvements in LN harvesting to meet the benchmark of success for the QCI. These improvements, which did vary by practice site, might reflect improved communication between surgeons and pathologists within FIQCC sites or increased awareness of this quality measure by the clinical community at large.

Medical oncologists made substantial improvements in the planning and administration of chemotherapy during the 3-year period between quality of care assessments. These improvements were consistent across medical oncology practices for 2 of 3 QCIs. Although the direct reason for these improvements is unknown, we speculate that raised awareness from the 2006 assessment of the importance of reporting not only the regimen but the drug name, number of cycles, and treatment dose might have played a role in the improvements. In addition, improvements might have been higher for these medical oncology QCIs, as opposed to surgical or pathology QCIs, due to the fact that the primary assessment and reporting of adherence was directly to medical oncology practices. Several practice sites adopted electronic medical record systems during this period of time, which might explain the improved documentation of chemotherapy planning. However, this would not explain improvements in knowledge of National Comprehensive Cancer Network–recommended therapies, which also improved over time. There were, however, no improvements in providing adjuvant chemotherapy within 8 weeks post surgery. Factors associated with delay of treatment are unknown and this lack of improvement suggests that larger organized quality-improvement efforts are needed to address this QCI. There are ongoing intervention studies examining whether oncology nurse navigators improve the quality of early cancer care between diagnosis and initiation of treatment,40 which in turn can assist in reducing the time between diagnosis and initiation of chemotherapy.

The FIQCC followed Institute of Medicine recommendations to monitor quality of care using a core set of QCIs with a focus on process measures.1 Process QCIs have several advantages, such as being closely related to outcomes, easily modifiable, and providing clear guidance for quality-improvement efforts.2 Process QCIs include issues related to patient safety (eg, use of chemotherapy flow sheets), application of evidence-based treatment (eg, use of recommended chemotherapy regimens), and patient-centric care (eg, consent for chemotherapy treatment). Adherence to process QCIs has been the primary focus of ongoing national and regional quality of care efforts, including FIQCC,13,22,23 and these indicators are now being incorporated into hospital accreditation and physician pay-for-performance models.39,41 For example, accreditation by the American College of Surgeons Commission on Cancer currently includes monitoring and compliance with 6 QCIs (3 breast, 3 CRC).41 Despite the many benefits of examining processes of care, it is unknown how adherence to process QCIs relates to patient outcomes, such as disease-free and overall survival. Regional quality efforts, such as FIQCC, that have assessed adherence to process quality indicators are now uniquely poised to address this gap by leveraging the existing quality of care data and linking them to hospital or state-wide cancer registry outcomes data. The next steps of this research are to assess the long-term outcomes data on the patients included in FIQCC evaluations and identify which process QCIs deserve a higher level of focus for meaningful quality-improvement efforts.

This study presents a regional assessment, at 2 time points, of the quality of CRC care at 10 medical oncology practices that differ in many respects (eg, patient volume, geographic location, community/academic status, and degree of physician specialization in CRC). There was very little overlap in patient catchment area across FIQCC practice sites, with each site representing 1 to 3 hospitals or health systems; 2 sites shared surgical/pathology services. We evaluated a comprehensive set of 35 validated QCIs in CRC cases using standardized methods and case identification criteria in 2006 and 2009. The chart abstractions were systematically conducted by trained abstractors, and data collection was audited. Along with these strengths, there are limitations that should be considered. Colorectal cancer cases from 2006 and 2009 might not represent current practice patterns; all QCIs were specific for year of assessment (eg, recommended treatment regimens). The small sample size for some indicators might have influenced the interpretation of adherence, especially for rectal cancer patients. The improvement efforts varied across practice sites for several reasons (eg, site-specific results, priorities, and/or funding) and were not formally tracked by FIQCC; therefore, the direct cause of quality improvements over time cannot be fully determined, and the improvements might be due to secular trends.

CONCLUSIONS

The FIQCC practices conducted self-directed quality-improvement efforts during a 3-year interval, and overall improvements in adherence to quality indicators were observed. However, adherence remained low for several indicators (such as PNI documentation, detection of cancer by screening, and timing of adjuvant therapy), suggesting that organized improvement efforts, such as quality-focused physician continuing education,42 might be needed for QCIs that remained consistently low across sites. Although we have demonstrated improvements in process QCIs, additional studies are critical to determine the direct impact of improved adherence on patient outcomes. The FIQCC serves as a model for evaluating and improving the quality of cancer care in regional treatment networks.

Acknowledgment

The authors wish to acknowledge the assistance provided by Tracy Simpson, Christine Marsella, Suellen Sachariat, and Joe Wright.

Abbreviations and Acronyms

- CRC: colorectal cancer
- FIQCC: Florida Initiative for Quality Cancer Care
- LN: lymph node
- LVI: lymphovascular invasion
- NICCQ: National Initiative for Cancer Care Quality
- PNI: perineural invasion
- QCI: quality of care indicator
- QOPI: Quality Oncology Practice Initiative

Footnotes

Disclosure Information: This study was supported by a research grant and funds for travel from Pfizer, Inc. Dr Cartwright receives payment as a consultant for Genentech and as a speaker for Amgen BMS.

Abstract presented at the American College of Surgeons 98th Annual Clinical Congress, Chicago, IL, October 2012.

Author Contributions

Study conception and design: Siegel, Jacobsen, Lee, Malafa, Fulp, Fletcher, Shibata

Acquisition of data: Fletcher, Smith, Brown, Levine, Cartwright, Abesada-Terk, Kim, Alemany, Faig, Sharp, Markham, Lee, Fulp

Analysis and interpretation of data: Siegel, Jacobsen, Lee, Fulp, Fletcher, Shibata

Drafting of manuscript: Siegel, Jacobsen, Lee, Fulp, Fletcher, Shibata

Critical revision: Siegel, Jacobsen, Lee, Malafa, Fulp, Fletcher, Smith, Brown, Levine, Cartwright, Abesada-Terk, Kim, Alemany, Faig, Sharp, Markham, Shibata

REFERENCES

- 1. Hewitt M, Simone JV. Ensuring Quality Cancer Care. Institute of Medicine and National Research Council; Washington, DC: 1999.

- 2. Patwardhan M, Fisher DA, Mantyh CR, et al. Assessing the quality of colorectal cancer care: do we have appropriate quality measures? (A systematic review of literature). J Eval Clin Pract. 2007;13:831–845. doi: 10.1111/j.1365-2753.2006.00762.x.

- 3. Schneider EC, Malin JL, Kahn KL, et al. Developing a system to assess the quality of cancer care: ASCO's national initiative on cancer care quality. J Clin Oncol. 2004;22:2985–2991. doi: 10.1200/JCO.2004.09.087.

- 4. Malin JL, Schneider EC, Epstein AM, et al. Results of the National Initiative for Cancer Care Quality: how can we improve the quality of cancer care in the United States? J Clin Oncol. 2006;24:626–634. doi: 10.1200/JCO.2005.03.3365.

- 5. Neuss MN, Jacobson JO, McNiff KK, et al. Evolution and elements of the quality oncology practice initiative measure set. Cancer Control. 2009;16:312–317. doi: 10.1177/107327480901600405.

- 6. Blayney DW, McNiff K, Hanauer D, et al. Implementation of the Quality Oncology Practice Initiative at a university comprehensive cancer center. J Clin Oncol. 2009;27:3802–3807. doi: 10.1200/JCO.2008.21.6770.

- 7. McNiff KK, Neuss MN, Jacobson JO, et al. Measuring supportive care in medical oncology practice: lessons learned from the quality oncology practice initiative. J Clin Oncol. 2008;26:3832–3837. doi: 10.1200/JCO.2008.16.8674.

- 8. Jacobson JO, Neuss MN, McNiff KK, et al. Improvement in oncology practice performance through voluntary participation in the Quality Oncology Practice Initiative. J Clin Oncol. 2008;26:1893–1898. doi: 10.1200/JCO.2007.14.2992.

- 9. Neuss MN, Desch CE, McNiff KK, et al. A process for measuring the quality of cancer care: the Quality Oncology Practice Initiative. J Clin Oncol. 2005;23:6233–6239. doi: 10.1200/JCO.2005.05.948.

- 10. Neuss MN, Malin JL, Chan S, et al. Measuring the improving quality of outpatient care in medical oncology practices in the United States. J Clin Oncol. 2013;31:1471–1477. doi: 10.1200/JCO.2012.43.3300.

- 11. Jacobsen P, Shibata D, Siegel EM, et al. Measuring quality of care in the treatment of colorectal cancer: the Moffitt Quality Practice Initiative. J Oncol Pract. 2007;3:60–65. doi: 10.1200/JOP.0722002.

- 12. Jacobsen PB, Shibata D, Siegel EM, et al. Initial evaluation of quality indicators for psychosocial care of adults with cancer. Cancer Control. 2009;16:328–334. doi: 10.1177/107327480901600407.

- 13. Gray JE, Laronga C, Siegel EM, et al. Degree of variability in performance on breast cancer quality indicators: findings from the Florida Initiative for Quality Cancer Care. J Oncol Pract. 2011;7:247–251. doi: 10.1200/JOP.2010.000174.

- 14. Jacobsen PB, Shibata D, Siegel EM, et al. Evaluating the quality of psychosocial care in outpatient medical oncology settings using performance indicators. Psychooncology. 2011;20:1221–1227. doi: 10.1002/pon.1849.

- 15. Malafa MP, Corman MM, Shibata D, et al. The Florida Initiative for Quality Cancer Care: a regional project to measure and improve cancer care. Cancer Control. 2009;16:318–327. doi: 10.1177/107327480901600406.

- 16. Desch CE, Benson AB, 3rd, Somerfield MR, et al. Colorectal cancer surveillance: 2005 update of an American Society of Clinical Oncology practice guideline. J Clin Oncol. 2005;23:8512–8519. doi: 10.1200/JCO.2005.04.0063.

- 17. Desch CE, McNiff KK, Schneider EC, et al. American Society of Clinical Oncology/National Comprehensive Cancer Network Quality Measures. J Clin Oncol. 2008;26:3631–3637. doi: 10.1200/JCO.2008.16.5068.

- 18. Engstrom PF, Benson AB, 3rd, Chen YJ, et al. Colon cancer clinical practice guidelines in oncology. J Natl Compr Canc Netw. 2005;3:468–491. doi: 10.6004/jnccn.2005.0024.

- 19. Engstrom PF, Benson AB, 3rd, Chen YJ, et al. Rectal cancer clinical practice guidelines in oncology. J Natl Compr Canc Netw. 2005;3:492–508. doi: 10.6004/jnccn.2005.0025.

- 20. National Quality Forum Endorsed Standards. Available at: http://www.qualityforum.org/MeasureDetails.aspx?SubmissionId=455#k=colon+cancer. Accessed March 8, 2011.

- 21. O'Leary J. Report from the American College of Oncology Administrators' (ACOA) liaison to the American College of Surgeons Commission on Cancer (ACoS-CoC). New cancer program standards highlighted. J Oncol Manag. 2003;12:7.

- 22. Siegel EM, Jacobsen PB, Malafa M, et al. Evaluating the quality of colorectal cancer care in the state of Florida: results from the Florida Initiative for Quality Cancer Care. J Oncol Pract. 2012;8:239–245. doi: 10.1200/JOP.2011.000477.

- 23. Tanvetyanon T, Corman M, Lee JH, et al. Quality of care in non-small-cell lung cancer: findings from 11 oncology practices in Florida. J Oncol Pract. 2011;7:e25–e31. doi: 10.1200/JOP.2011.000228.

- 24. Jacobsen PB. Measuring, monitoring, and improving the quality of cancer care: the momentum is building. Cancer Control. 2009;16:280–282. doi: 10.1177/107327480901600401.

- 25. Donovan KA, Jacobsen PB. Fatigue, depression, and insomnia: evidence for a symptom cluster in cancer. Semin Oncol Nurs. 2007;23:127–135. doi: 10.1016/j.soncn.2007.01.004.

- 26. Firth D. Bias reduction of maximum likelihood estimates. Biometrika. 1993;80:27–38.

- 27. Heinze G, Schemper M. A solution to the problem of separation in logistic regression. Stat Med. 2002;21:2409–2419. doi: 10.1002/sim.1047.

- 28. Idowu MO, Bekeris LG, Raab S, et al. Adequacy of surgical pathology reporting of cancer: a College of American Pathologists Q-Probes study of 86 institutions. Arch Pathol Lab Med. 2010;134:969–974. doi: 10.5858/2009-0412-CP.1.

- 29. Onerheim R, Racette P, Jacques A, Gagnon R. Improving the quality of surgical pathology reports for breast cancer: a centralized audit with feedback. Arch Pathol Lab Med. 2008;132:1428–1431. doi: 10.5858/2008-132-1428-ITQOSP.

- 30. Imperato PJ, Waisman J, Wallen MD, et al. Improvements in breast cancer pathology practices among medicare patients undergoing unilateral extended simple mastectomy. Am J Med Qual. 2003;18:164–170. doi: 10.1177/106286060301800406.

- 31. Imperato PJ, Waisman J, Wallen M, et al. Results of a cooperative educational program to improve prostate pathology reports among patients undergoing radical prostatectomy. J Community Health. 2002;27:1–13. doi: 10.1023/a:1013823409165.

- 32. Poeschl EM, Pollheimer MJ, Kornprat P, et al. Perineural invasion: correlation with aggressive phenotype and independent prognostic variable in both colon and rectum cancer. J Clin Oncol. 2010;28:e358–e360; author reply e361–e362. doi: 10.1200/JCO.2009.27.3581.

- 33. Vergara-Fernandez O, Swallow CJ, Victor JC, et al. Assessing outcomes following surgery for colorectal cancer using quality of care indicators. Can J Surg. 2010;53:232–240.

- 34. Compton CC, Fielding LP, Burgart LJ, et al. Prognostic factors in colorectal cancer. College of American Pathologists Consensus Statement 1999. Arch Pathol Lab Med. 2000;124:979–994. doi: 10.5858/2000-124-0979-PFICC.

- 35. Compton C, Fenoglio-Preiser CM, Pettigrew N, Fielding LP. American Joint Committee on Cancer Prognostic Factors Consensus Conference: Colorectal Working Group. Cancer. 2000;88:1739–1757. doi: 10.1002/(sici)1097-0142(20000401)88:7<1739::aid-cncr30>3.0.co;2-t.

- 36. Chang GJ, Rodriguez-Bigas MA, Skibber JM, Moyer VA. Lymph node evaluation and survival after curative resection of colon cancer: systematic review. J Natl Cancer Inst. 2007;99:433–441. doi: 10.1093/jnci/djk092.

- 37. Wang J, Kulaylat M, Rockette H, et al. Should total number of lymph nodes be used as a quality of care measure for stage III colon cancer? Ann Surg. 2009;249:559–563. doi: 10.1097/SLA.0b013e318197f2c8.

- 38. Wong SL. Lymph node evaluation in colon cancer: assessing the link between quality indicators and quality. JAMA. 2011;306:1139–1141. doi: 10.1001/jama.2011.1318.

- 39. Clinical Quality Measures (CQMs). Baltimore, MD. Available at: http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/ClinicalQualityMeasures.html. Accessed May 31, 2013.

- 40. Horner K, Ludman EJ, McCorkle R, et al. An oncology nurse navigator program designed to eliminate gaps in early cancer care. Clin J Oncol Nurs. 2013;17:43–48. doi: 10.1188/13.CJON.43-48.

- 41. National Quality Forum Endorsed Commission on Cancer Measures for Quality of Cancer Care for Breast and Colorectal Cancers. Chicago. Available at: http://www.facs.org/cancer/qualitymeasures.html. Accessed July 20, 2013.

- 42. Uemura M, Morgan R, Jr, Mendelsohn M, et al. Enhancing quality improvements in cancer care through CME activities at a nationally recognized cancer center. J Cancer Educ. 2013;28:215–220. doi: 10.1007/s13187-013-0467-z.
