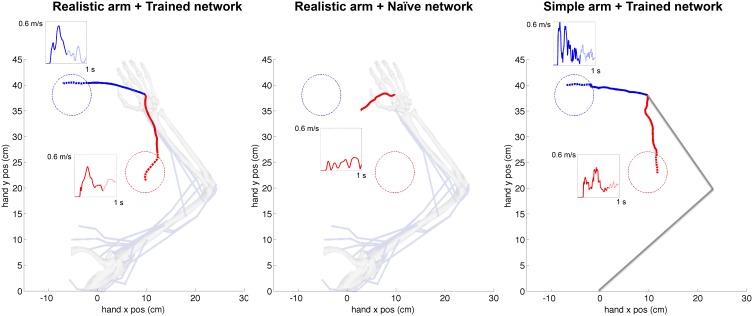

Figure 6.

Representative example of Cartesian trajectories and velocity profiles of the virtual arm during a reaching task to two targets for the realistic arm with a trained network (left), the realistic arm with a naive network (center), and the simple arm with a trained network (right). Trained networks used STDP-based reinforcement learning to adapt their synaptic weights to drive the virtual arm to each of the two targets. The naive network drives the arm using its initial random weights and therefore produces random movements independent of the target location. The hand trajectories of the realistic musculoskeletal arm are smoother than those of the simple arm and show velocity profiles consistent with physiological movement. The initial reaching trajectory from the starting point to the target area is shown as a solid line, whereas the final part of the trajectory within the target area is shown as a dotted line. The starting configurations of the simple and musculoskeletal arms are shown in the background.