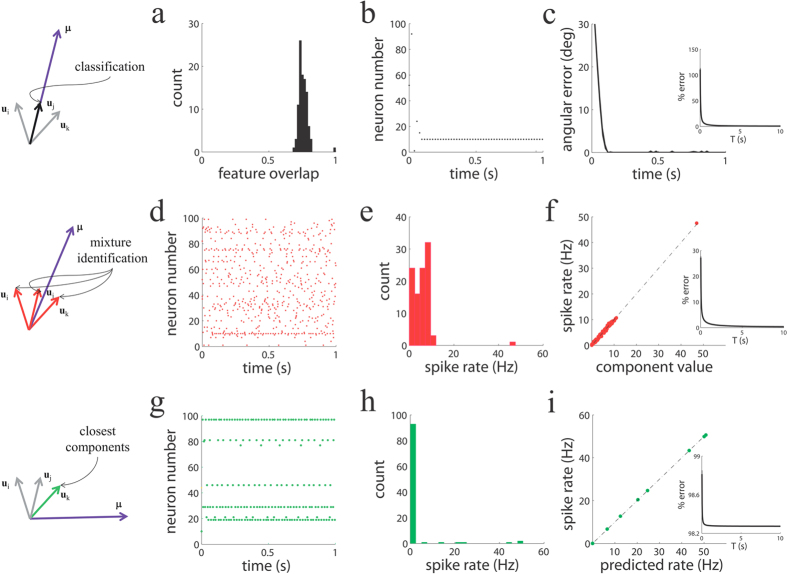

Figure 2. The spiking network efficiently solves a hard discrimination task in a few spikes (top row), identifies all the components of a complex mixture (middle row), and finds the causes closest to an odd observation (bottom row).

Schematics for the three tasks are shown on the left. The causes that need to be identified are marked in the same color as the panels to their right. For instance, in the classification task, the input vector is proportional to feature vector j (j = 10), and this is the cause that has to be identified. (a) Distribution of overlaps between feature vectors, centered around 0.75 (Methods). (b) Response of the spiking network upon stimulation with the 10th cause. After a brief transient consisting of a handful of spikes from other neurons, only the neuron that encodes the cause used as an input (the 10th neuron) is active. (c) Angular error decays to close to zero in about 100 ms (T = 20 ms time windows). Inset: percentage error (see Methods) decays to zero as the integration window T increases. (d,g) Population activity of the network stimulated by a strong cause buried under a strong random background (d) or by an input vector that cannot be exactly represented by the feature vectors (g). (e,h) Distribution of firing rates. (f) Observed firing rate of the spiking network vs. the components of the mixture. The spiking network finds the mixture of features that composes the input vector. Inset: percentage error decays to zero as the integration window T increases. (i) Observed firing rate of the spiking network vs. the rate predicted by a non-spiking algorithm for the same problem (rate-based network algorithm) in the approximation task. Inset: percentage error decays to the minimum (optimum) value as the integration window T increases. In this case the error does not approach zero because, in the approximation task, the input vector lies outside the convex hull formed by the set of all feature vectors.
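The caption defers the exact error definitions to Methods. As a rough illustration of the quantity plotted in panel (c), the sketch below assumes that the angular error is the angle between the vector of firing rates measured in a window T and the target vector of cause strengths. This definition, the function names `rates_from_counts` and `angular_error_deg`, the 90-degree convention for a silent window, and the spike counts are all hypothetical and are not taken from the paper.

```python
import math

def rates_from_counts(spike_counts, window_s):
    """Convert per-neuron spike counts in one window into firing rates (Hz)."""
    return [count / window_s for count in spike_counts]

def angular_error_deg(estimate, target):
    """Angle in degrees between an estimated vector and a target vector."""
    dot = sum(e * t for e, t in zip(estimate, target))
    norm_e = math.sqrt(sum(e * e for e in estimate))
    norm_t = math.sqrt(sum(t * t for t in target))
    if norm_e == 0.0 or norm_t == 0.0:
        # Assumed convention: a silent window carries no directional information.
        return 90.0
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cosine = max(-1.0, min(1.0, dot / (norm_e * norm_t)))
    return math.degrees(math.acos(cosine))

# Hypothetical example: 12 neurons, the 10th cause (index 9) is presented.
target = [0.0] * 12
target[9] = 1.0

window_s = 0.020  # T = 20 ms, as in panel (c)

# Made-up counts: an early window with a few spikes from other neurons,
# and a late window in which only the 10th neuron fires.
early_counts = [1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0]
late_counts = [0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0]

early_error = angular_error_deg(rates_from_counts(early_counts, window_s), target)
late_error = angular_error_deg(rates_from_counts(late_counts, window_s), target)
print(f"early window: {early_error:.1f} deg, late window: {late_error:.1f} deg")
```

Because the angle depends only on direction, it is insensitive to the overall firing-rate scale, which is one reason such a measure suits a task where the input is merely proportional to a feature vector.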