Abstract

Qualitative research was conducted to adapt and develop an mHealth app for HIV patients with histories of substance abuse. The app provides reactive visual representations of adherence rates, viral load and CD4 counts. Two sets of focus groups were conducted with 22 participants. The first concentrated on participants' current use of reminder systems and their opinions about ideal adherence features. Results informed adaptation of an existing system, which was then presented to participants in the second set of focus groups. We describe participant responses to candidate app characteristics and their understanding of the HIV disease state based on these changing images. Qualitative results indicate that a balance of provided and requested information is important to maintain interest and support adherence. App characteristics and information can provoke positive and negative reactions, and these emotional responses may affect adherence. Conclusion: User understanding of, and reaction to, app visual content was essential to adaptation and design.

1. Introduction

The ubiquity of mobile technologies, such as smartphones, enhances the ability to assess and improve health. There are over 327 million wireless subscribers in the United States; as of January 2014, 90% of U.S. adults owned a cell phone and 58% owned a smartphone [1, 2, 3]. Given that mobile technologies have penetrated minority and low socioeconomic populations more rapidly than the Internet did [3], the “digital divide” appears to be largely absent for smartphones. Mobile technologies are also already widely used for health: 52% of smartphone owners and 31% of cell phone owners report using their phones to find health and medical information [4]. The high penetration and computing capacity of even basic cell phones potentially allow the assessment of risky health behaviors as they occur, as well as the delivery of interventions precisely at the moment of greatest need. Furthermore, smartphones’ multiple modes of communication now allow delivery of a broad range of intervention content. The use of mobile technologies for healthcare (known as ‘mHealth’) therefore creates striking opportunities for the treatment of many common, intractable, and expensive medical conditions.

In this tech-saturated culture, mHealth interventions must offer considerable variety and intrinsic appeal to maximize end-user engagement and reduce boredom and fatigue. Despite the enormous potential of mobile technologies in health promotion research, several features threaten the acceptability of cell phone-delivered behavioral interventions.

Mobile devices are disruptive technologies, both because they are replacing old methods for delivering health behavior interventions and because they can interrupt recipients at any time. Interventions that arrive when recipients are least able to attend to them (for example, on the bus, at work, or with friends) pose a serious threat to acceptability. This may be particularly important for HIV-related interventions if patients feel a need to keep their HIV status private.

Many existing text-based behavioral interventions are simply too long for use in a mobile space. Text messages are limited to 160 characters. Twitter posts are limited to 140 characters, which Sagolla argues is the length of a message that a recipient will read in full without first considering whether to do so [5].

Many behavioral interventions are purely cognitive in origin and contain little if any affective content that would improve resonance with recipients. The didactic tone of existing mHealth treatments does more than dampen messages’ resonance with recipients and diminish long-term acceptability. It can potentially contribute to the observed deterioration of treatment effect that occurs within weeks of completion of clinical trials.

Finally, using “canned” interventions or those developed by experts can often miss the mark and be perceived as wrong, ill fitted, or just plain aggravating—with detrimental effects on long-term usability.

iHAART is part of a research program that is seeking to create effective, patient-centered, mHealth-delivered behavioral interventions to improve HIV-medication adherence. To address the above concerns and design a product that is both acceptable and effective, we collected qualitative data from participants representing the target population of the intervention.

An effective HIV medication adherence app requires long-term use and must be deliverable in a variety of locations. Therefore, the needs of the affected population have been central to the development process, which included three stages: 1) focus groups with the target population to discuss their existing adherence strategies and ideal reminder app characteristics; 2) participation in the MIT Media Lab’s Health and Wellness Innovations 2013 conference, which further developed and designed the app’s interface and interaction features; 3) a second round of focus groups in which the redesigned app was demonstrated.

What became clear from this process is that participants were already using a variety of adherence and reminder systems. They had strong opinions about what strategies and app characteristics currently worked for them, and what they would want in a new system. Because the app design incorporated several innovative pictorial interfaces, each of which changed based on information provided by the user, it was essential to understand the meanings imputed to the images in order to determine if patients understood them in the ways the designers intended.

This paper describes the app and the conceptual and visual innovations that informed it. We describe the formative focus groups and report their findings, then discuss the relevance of patient-centered research for mHealth intervention development and design.

2. The iHAART app

HIV is a chronic disease which requires strict, lifelong adherence to medication [6]. The iHAART app is named using the acronym for Highly Active Antiretroviral Therapy, a phrase describing the complex, multiple-drug regimens required to treat HIV. Ideal adherence interventions therefore need to support adherence indefinitely [7]. Interventions to improve HAART adherence, however, lose effectiveness within months [7, 8]. The iHAART app incorporates several elements that address user fatigue while also providing education about how medication adherence affects long-term health and wellbeing.

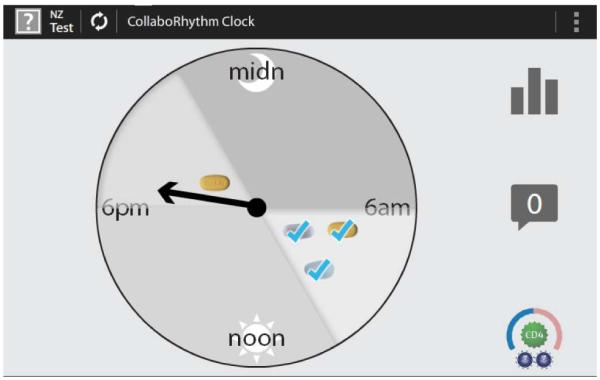

iHAART was built on a platform previously developed by John Moore and colleagues at the MIT Media Lab [9]. It includes a daily medication “clock” icon (Figure 1), which represents the 24 hours of a day and shows the patient’s medication schedule, including the time window in which each medicine should ideally be taken. In Figure 1, the fictional patient has indicated that s/he has taken the prescribed morning medications: the three pills were taken in the recommended window of 6-10 AM. This patient has not yet taken the one pill prescribed for the 6-10 PM window. The arrow indicates that the current time is just after 6 PM.

Figure 1. The clock screen.
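The clock's window logic can be sketched in a few lines. This is a minimal illustration, not the iHAART implementation: the `DoseWindow` structure, the `dose_status` function and its status labels are hypothetical, and windows are assumed not to cross midnight.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional


@dataclass(frozen=True)
class DoseWindow:
    """One slot on the 24-hour clock (hypothetical structure, not the app's schema)."""
    label: str
    start: time   # earliest recommended time for this dose
    end: time     # latest recommended time for this dose
    pills: int    # number of pills drawn inside the window


def dose_status(window: DoseWindow, taken_at: Optional[time], now: time) -> str:
    """Classify one scheduled dose; assumes the window does not cross midnight."""
    if taken_at is not None:
        # A reported dose counts as adherent only if it falls inside the window.
        return "taken" if window.start <= taken_at <= window.end else "outside_window"
    if now < window.start:
        return "upcoming"   # window not yet open
    if now <= window.end:
        return "due"        # window open, dose not yet reported
    return "missed"         # window closed without a report


# The fictional patient in Figure 1: morning pills taken, evening pill still due.
morning = DoseWindow("morning", time(6), time(10), pills=3)
evening = DoseWindow("evening", time(18), time(22), pills=1)
now = time(18, 5)
print(dose_status(morning, time(7, 30), now))  # taken
print(dose_status(evening, None, now))         # due
```

Reporting a window's status rather than a single alarm time mirrors the "range" that participants later said they valued.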

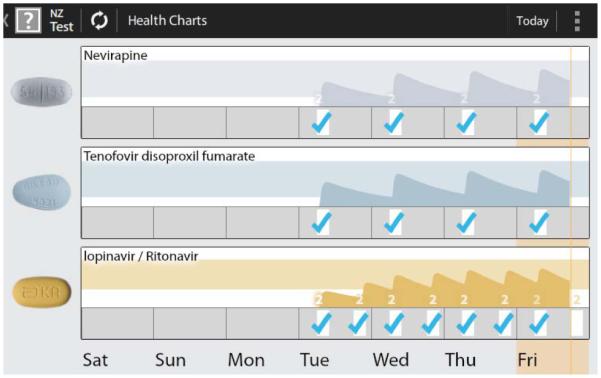

In addition to the clock, several other screens provide a dynamic disease-state simulation of HIV viral load and CD4 cell count, which change based on the patient’s reported adherence [9]. The charts screen (Figure 2) shows days on which medications were taken correctly (blue checks) or not (no checks), along with a shaded graph representing an estimate of each medication’s blood plasma concentration based on that self-reported medication taking. The colored bars behind the shaded area represent target blood concentrations for each medication, while the white area below them indicates sub-therapeutic (ineffective) concentrations.

Figure 2. The charts screen.
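The paper does not specify the pharmacokinetic model behind the charts screen (the platform is described in [9]). As a hedged illustration only, the sketch below assumes a one-compartment model with first-order elimination, in which each self-reported dose adds a normalized unit of concentration that decays with a drug-specific half-life, and contributions from successive doses are summed. The half-life, dose size and `target_low` threshold are hypothetical values chosen for illustration.

```python
import math
from typing import Iterable


def estimated_concentration(dose_times_h: Iterable[float], t_h: float,
                            half_life_h: float, dose_conc: float = 1.0) -> float:
    """Sum the decaying contribution of every self-reported dose taken at or before t_h.

    Assumes instantaneous absorption and first-order elimination
    (a simplification chosen for illustration, not the app's documented model).
    """
    k = math.log(2) / half_life_h  # elimination rate constant
    return sum(dose_conc * math.exp(-k * (t_h - td))
               for td in dose_times_h if td <= t_h)


def band(conc: float, target_low: float) -> str:
    """Map a concentration to the colored target band or the white sub-therapeutic area."""
    return "target" if conc >= target_low else "sub-therapeutic"


# Once-daily dosing with a hypothetical 12 h half-life, evaluated at hour 60.
adherent = [0, 24, 48]   # all three doses reported
missed = [0, 24]         # the dose at 48 h was not reported
print(band(estimated_concentration(adherent, 60, 12), target_low=0.3))  # target
print(band(estimated_concentration(missed, 60, 12), target_low=0.3))    # sub-therapeutic
```

Evaluating `estimated_concentration` over a grid of times would produce the kind of shaded curve the charts screen draws; a single missed dose is enough to drop this toy curve into the white area.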

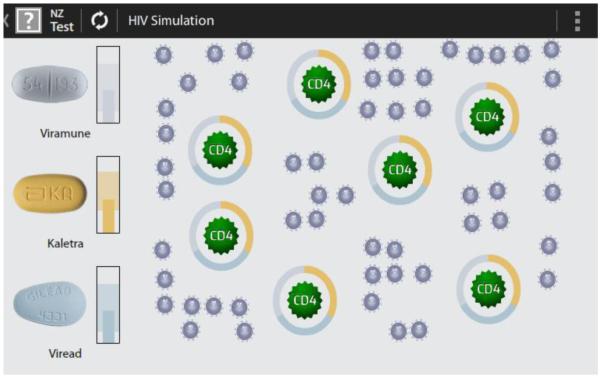

The simulation screen (Figure 3) provides another graphic image of the impact of medication adherence and non-adherence by showing how medication taking affects CD4 counts and viral loads. In this image the large CD4 immune cells are dark green and the smaller HIV particles are grey. Complete circles surrounding CD4 cells indicate HAART protection; that is, the medication is present at a blood plasma level such that CD4 cells are protected from the virus. Incomplete circles, the result of non-adherence, represent incomplete protection of those CD4 immune cells. The protecting circles are made from three colors representing the three medications in this fictional patient’s HAART regimen. As adherence increases, the proportion of dark green (effective) CD4 cells increases, while the proportion of grey viral particles decreases. Extremely high, long-term adherence removes viral particles from the graphic. Future investigations may use actual biological data from patients to determine the starting point for this graphic. Because graphics are updated on the smartphone from individual participants’ reported adherence data, they are personalized, adaptive, and focused on the direct effects of HAART adherence.
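The ring and particle behavior can also be sketched. In this hypothetical version, each medication contributes one colored arc when its estimated concentration is at or above a target, a cell's ring is complete only when all arcs are present, and a toy daily update shrinks the number of drawn viral particles while the ring is complete and grows it otherwise. The function names, target values and update rates are assumptions, not parameters of the iHAART simulation.

```python
from typing import Dict, List, Tuple


def ring_arcs(concs: Dict[str, float], targets: Dict[str, float]) -> List[str]:
    """Drugs whose colored arc is drawn: estimated concentration at or above target."""
    return [drug for drug in targets if concs.get(drug, 0.0) >= targets[drug]]


def ring_complete(concs: Dict[str, float], targets: Dict[str, float]) -> bool:
    """The protective ring is complete only if every drug in the regimen contributes its arc."""
    return len(ring_arcs(concs, targets)) == len(targets)


def simulate_day(cd4: int, virions: int, protected: bool,
                 rate: float = 0.2, cd4_max: int = 20) -> Tuple[int, int]:
    """Toy daily update of the number of icons drawn (hypothetical rule, not the app's model).

    While protected, viral particles shrink by `rate` (rounded down, so they eventually
    reach zero) and CD4 icons recover toward `cd4_max`; otherwise viral particles grow
    and CD4 icons decline.
    """
    if protected:
        return min(cd4 + 1, cd4_max), int(virions * (1 - rate))
    return max(cd4 - 1, 0), virions + max(1, int(virions * rate))


targets = {"drug_a": 0.3, "drug_b": 0.3, "drug_c": 0.3}
today = {"drug_a": 0.5, "drug_b": 0.4, "drug_c": 0.1}
print(ring_arcs(today, targets))      # ['drug_a', 'drug_b']
print(ring_complete(today, targets))  # False

cd4, virions = 10, 10
for _ in range(10):                   # ten days of complete protection
    cd4, virions = simulate_day(cd4, virions, protected=True)
print(cd4, virions)                   # 20 0
```

Rounding down is what lets sustained adherence clear the particles entirely, matching the description of long-term adherence removing them from the graphic.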

The automatic adaptation of these images based on users’ reports of their medication adherence is designed to reinforce improved HIV medication adherence or forestall worsening adherence. This process provides updated content for a health intervention that is framed by evidence-based practice, and it is particularly relevant for supporting long-term adherence to therapies for chronic medical conditions like HIV. The app adapts the potency of the intervention message to the current state of adherence while creating a constant stream of fresh prevention content. These features were deliberately designed to prevent intervention fatigue, promote ongoing engagement with therapy, minimize attrition, and prevent non-adherence.

3. Focus Groups

The first step in adapting the initial concept was to conduct formative focus groups. These were held at the University of Massachusetts Medical Center in November and December 2012. The research agenda for these groups included questions about participants’ current phone use and the characteristics of apps they currently used and liked, both generally and specifically for medication reminders. We also asked about barriers and facilitators to using a reminder app; security and privacy; ideal relational agent and interface characteristics; and the acceptable frequency of initial medication reminders, follow-up reminders, and other interruptions, including how reminders should escalate if a participant does not respond to a medication-taking prompt.

Focus group participants (n=22) ranged in age from 32 to 67 years; 50% were female. They described their ethnic and racial identities as follows: 32% were Hispanic and 67% were non-Hispanic. Additionally, 41% identified themselves as Black or African American, 27% as White, 18% as other, 5% as Asian, and 5% as American Indian or Alaskan Native. In terms of educational attainment, 5% had attended only elementary school, 14% had attended some high school, 27% were high school graduates or held General Educational Development (GED) credentials, 36% had attended some college, and 18% were college graduates.

Focus groups were audio recorded and transcribed. A qualitative codebook was created reflecting topics from the research agendas as well as topics and concerns raised by participants during the discussion. Two coders (RKR and MLR) coded each transcript. Coding was compared, reconciled and entered into NVivo 10 software for analysis.

Participants were enthusiastic about the idea of an app designed specifically to help with medication taking. They also agreed, however, that reminder-based programs can be aggravating when reminders become too frequent, come at the wrong time, or become annoying for some other reason. Privacy was identified as a concern by many participants, but there was some disagreement about how much information people would be willing to share via the app. Participants made it clear that they lived busy, complex lives and that this complexity made it harder to remember to take their medicines. This was especially true for people taking medicines for several chronic illnesses.

Participants reported using a variety of existing reminder and medication adherence systems, including pen-and-paper diaries, beepers timed to prompt pill taking, and electronic medication reminder systems such as electronic pill bottle caps (e.g., the MEMS® cap [10], which reports to a provider how often the pill bottle is opened). Some of these systems also allow receipt of information from providers, for instance a proprietary program used by a specific clinic to communicate with its own patients.

4. Developing the App Based on Participant Feedback

Knowledge obtained from the focus groups informed further app development in early 2013 at the MIT Media Lab’s Health and Wellness Innovations conference. During this two-week ‘hackathon’, a team that included clinical and outreach workers representing the targeted patient population, doctors, a medical anthropologist, computer system program designers, and industry representatives added several elements to the concept. These included:

A changing photo or picture that comes more into focus when medications are being taken properly. This dynamic, momentary reflection of adherence enables users to personalize the app. The image is selected by the user and can represent anything that might motivate them to take their medications, such as pictures of people or places. It can also be a more anonymous and potentially neutral image, such as a team icon. Importantly, it increases user privacy because it is user selected and contains neither HIV-related content nor the program name.

A sliding toolbar on the simulation screen to illustrate and teach what happens when a user is more or less adherent. The toolbar functions as both an educational tool and an explanation of the changing CD4 and viral load image.

Refinements to the changing adherence graph on the charts screen, which illustrates fluctuation in the plasma concentration of each medication in order to provide a longitudinal view of adherence.
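The focus-sharpening photo reduces to a mapping from recent adherence to an amount of blur. A minimal sketch, assuming a 14-day rolling window and a linear mapping (both hypothetical choices); the resulting radius could then be passed to an image library's Gaussian blur, such as Pillow's `ImageFilter.GaussianBlur`.

```python
from typing import Sequence


def rolling_adherence(daily_on_time: Sequence[bool], window_days: int = 14) -> float:
    """Fraction of the most recent `window_days` days on which every dose was reported on time."""
    recent = list(daily_on_time)[-window_days:]
    if not recent:
        return 0.0  # no reports yet: treat as no demonstrated adherence
    return sum(recent) / len(recent)


def blur_radius(adherence: float, max_radius: float = 12.0) -> float:
    """Linear map: perfect adherence -> sharp photo (radius 0), none -> maximum blur."""
    adherence = min(max(adherence, 0.0), 1.0)  # clamp to [0, 1]
    return max_radius * (1.0 - adherence)


print(blur_radius(rolling_adherence([True] * 14)))               # 0.0
print(blur_radius(rolling_adherence([True] * 7 + [False] * 7)))  # 6.0
```

A rolling window lets the photo recover after a lapse instead of carrying old missed doses forever; a non-linear mapping would be an equally reasonable design choice.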

At this phase, it became clear that we needed specific information about how users would interpret the images and interactive changes in these graphs. Understanding users’ reaction to the toolbar and adherence graph was essential. While certain elements of the image respond to self-reported adherence, the base image would not be updated until after blood work or point of care testing with a clinician. The image could not reflect real-time blood levels or viral loads. This realization informed the questions asked in our second round of focus groups.

5. User understandings of the images

In June 2013 this second set of focus groups was held with 17 of the 22 people who attended the first groups. To elucidate the dilemmas raised during the hackathon, specific questions were asked about how participants understood the various components of visual images. In particular, we wanted to know how essential elements like the protecting rings were understood by participants. We also needed to know how users would react to a changing image that was updated based on information they input into the system, but that was NOT representative of their actual blood values.

The second round of groups began with a summary of our findings from the first focus groups. We then showed a video that demonstrated the entire app. We asked “What does this mean to you? How would you use it? Do you have any concerns about it?” We also specifically probed about participants’ reactions “if reality doesn’t reflect what is in the app?”

Finally, we asked about how the app should communicate with the user, and how/when they would use the app for communication with providers. This area of inquiry included asking general questions about how the app should deliver bad news and good news.

5.1. Clock Screen

The clock screen and its features were well received by participants, most of whom found it easy to understand. Most comments about the image were positive, and some participants particularly liked that it covered a 24-hour period: “Yeah, it makes perfect sense to me because this is how my medication is. I need to take it every 24 hours, within that window. It’s shaded, and it connects as soon as I look at it” (Ppt 9). Participants also liked that the clock provided a “range” or “window” (participant terms) for taking medication (e.g., 6-10 AM). For example: “I think that the clock is cool because since it's a range, like I said, I use an alarm clock, which is... annoying. As a range, that would be good because if it reminded me at 6:00 a.m., I know I got until 10:00 a.m. to take my meds. Not that that would be a habit, but every now and then, that would be okay. I like the fact of the range, if the clock has the range. I like that” (Ppt 20). Another participant appreciated that the range had images of the pills within it, which made knowing what to take, and when, “common sense” (Ppt 11). Some suggested that the image looked like a weather-related interface, which was welcome, since it did not telegraph that it was a system for medication taking. Some participants were less enthusiastic: one said it was too complicated and that she preferred a system that would sound an alarm right at 9 AM or 9 PM. Another suggested that understanding the clock would require some training.

5.2. Chart Screen

When asked what the chart screen meant, participants understood that the lines represent “the levels of the HIV medicine in your body” (Ppt 4). This excerpt provides a sense of how these conversations went, including that the information on the screens was understood to be interrelated:

Fac: This is the chart screen. What does this mean to you?

2: How good am I doing.

Fac: It means how good am I doing in terms of taking my medications?

7: How good it’s working for me.

Fac: How good it’s working? Now, why do you say that?

7: I say it because the lines I can visually see, and then plus I can go on the other CD4 screen you had to see how it’s working in my body.

Fac: Do you have any concerns about this?

3: I love that idea.

Fac: You love this idea?

3: It’s almost like the app is showing you what your medication is doing inside of your body, which—pretty much we don’t know that until we go to the hospital to get our blood works done.

In some of the conversations it was not clear whether the ideal medication levels were those in the chart’s colored bands or in the white bands (as designed, the white represented sub-optimal levels). To address this some suggested darkening the colors or having a red band identify when levels become problematic.

5.3. Simulation screen

Many participants understood the simulation screen images immediately and correctly: “It means I'm taking my meds. The force shield is around all of them, right?” (Ppt 18). These participants clearly understood that the rings around the CD4 cells represented a form of protection. They described them as a “deflector shield,” a “force shield,” and “the Star Trek force field.” Some also wanted to know what the image would look like if the virus became undetectable in their bodies.

There were participants who found the image confusing. Consider this interchange:

Fac: Alright, this is a simulation screen. What does this mean to you?

10: It’s the CD4, your viral load, all that. ...

9: That my cells are being protected. There’s nothing penetrating that medication.

Fac: Okay. 6?

6: It’s a little bit confusing to me. All those dots. I don’t know what the hell that means.

8: I don’t either....I don’t understand. What is it?

Following this excerpt, participants who understood the image articulately explained both the image and the process of HIV viral reproduction and its inhibition to the other participants. One participant then made this suggestion: “I’m educated, and I was an educator so I know the life cycle, what they look like on different kinda screens and whatever. What do you think if when you first sign into the app it tells you the HIV life cycle? It has ... explain the fusion and the reverse transcriptor stage and what it does so that when people see it, they’re not—‘cuz a lot of people are not educated. Some people just take HIV meds” (Ppt 4).

5.4. Representing Adherence

There were a variety of responses to the hypothetical nature of the adherence images. Participants did seem to understand that the images were representations based on recorded adherence. And many were not at all concerned about a mismatch between representation and reality. For example:

20: The app can say one thing and your blood work says another thing. I don't think it's the fault of the app. The app is, like [Ppt 18] said, it's a guide. It's a guideline, general guideline. That's all. It's not my doctor.

18: I wouldn't be terribly upset if the numbers were off at all. I would still use the app. I wouldn't toss it or delete it or anything because it is quite useful. Like he said, I'm not gonna... lose sleep over it.

20: Right. Exactly.

Fac: People recognize it's not like a speedometer on a car.

18: Exactly.

A participant even suggested that there could be a benefit to such a mismatch: “I think that could be good, actually, because if you have perfect adherence and your numbers are going down, then maybe you need to change up your meds... or there's something going on” (Ppt 11).

In this excerpt, a participant who is not enthusiastic about the possibility that blood levels of viral load and CD4 cells might differ from the representations suggests a possible fix for the problem:

15: Of course, it's a calculation. What else would it be? It's not static, right? ...

Fac: You're right. It's not static, but it's also not reality. It's a calculation. It's an estimation of what's actually going on in your body.

19: A guesstimate.

20: Right. That's it.

Fac: Here's the issue, as far as I'm concerned. This is where I really need a lot of help. What do you do when you come in to have your viral loads measured and the viral loads in reality are higher than what you think they should be based on this? What do you do? ...

18: You say your app sucks, pretty much. Is there a way that you guys could be more precise with the app based on the medications that we take, or is there a way ... that we could put our personal numbers in this app somehow for maybe a series of maybe six months to a year so it kind of gets the gist of the way my personal body works. Then it would be close to being more accurate.

5.5. Communication with and through the app

Participants told us that they wanted a variety of forms of communication from the app, especially when they were not taking their medication. Text messages, images of frowning doctors, emails, alerts, and pop-up notifications were all suggested. Participants wanted both ‘good job’ reminders when things are going well and messages indicating that they needed to improve. One person suggested that phone calls were better for delivering bad news because texting was too impersonal. Another suggested that it was particularly important that a social worker contact them in person if recreational drug use was an element of their non-adherence. One person cautioned us: “Can you stop pointing out the obvious and give me solutions on how to deal with it instead of [just] telling me that I have it?” (Ppt 3).

5.6. Emotional responses

A surprising finding in our analysis was a series of references to the emotional responses app components or delivered messages could provoke. The qualitative coders captured this in two codes simply titled ‘it would make me feel bad about myself’ and ‘it would make me feel good about myself.’ One theme in these codes is that when participants know there is a problem they may not want to be contacted by medical staff because they anticipate getting the message that they are not doing well and do not want to be scolded about that. Several suggested that they knew themselves when they were not doing well and did not need the app or their doctor to tell them this.

One participant described a telemonitoring program in which his doctor was notified when his condition worsened. He said the notification “just made me more reluctant to talk to my doctor sometimes. I mean I got a great doctor. I'm happy with him and everything [but] I didn't want to get lectured every time I talked to him. ... The bottom line is, what I'm saying is, when it's an interactive device like that, it might have—it might not have the effect that you want it to have. It might not make you more compliant. It might make you more likely to kind of back away, and say ‘the hell with it’” (Ppt 7).

Several other participants echoed this sentiment, suggesting that the app telling users through images and messages that they are getting sicker could, for some, be a reason to stop engaging with the app: “what if someone's sickly? Every time you're reminding them, ‘Look, your CD4 count's going down. You're dying 'em all.’ [i.e. killing the immune system] ... could you imagine someone being sick and every day that damn phone telling me, ‘Your T count's going' down’? Well thanks for sharing! I'd wanna smash that phone.”

6. Discussion

User understanding of, and interactions with, technology are essential to mHealth design. In the current app market, however, developmental speed and a rush to deliver on the enormous potential of this rapidly developing field may leave little room for deliberate analysis of user perspectives. These qualitative data illustrate why such analysis is essential for an mHealth app for HIV adherence. During one of the focus groups, a participant noted that the HIV epidemic has resulted in many tragic deaths, of which there are powerful reminders, such as the AIDS Memorial Quilt. She explained that she did not need another reminder of the mortality associated with HIV. Yet mortality is one potential consequence of poor adherence. The intent of this research was to develop awareness of such participant perspectives and strategies to address them.

One possible strategy was suggested by participants who said that while ‘good job’ messages were useful, what they really wanted was an actual reward: extra lifelines on a favorite game app like Candy Crush or Angry Birds, or additional coins for playing bingo. Humor, or even factoids related to HIV, were also suggested as ways to deliver messages.

Another strategy is illustrated by a participant using the automated MEMS® cap, which records when a medication bottle is opened. He described how important it was to him that someone else would know whether and when his medications were taken. Knowing that this information was shared was a factor that motivated his adherence. The iHAART app can fulfill a similar function by providing adherence information to clinicians along with the adapted images that participants see in the app.

While we have learned much from this research, it does have some limitations. The sample was recruited from one hospital and included patients whose medication adherence strategies were influenced by the clinicians there, some of whom participated in the project. Our focus group questions were related to specific design elements: we asked participants what they thought of an existing system rather than asking them to design their own systems. Participants’ strong opinions about prior reminder systems may have influenced their reactions to the iHAART app even where those opinions were not particularly relevant to that app’s features. Participants responded to images on projected slides rather than on an actual phone. Subsequent research will include qualitative interviews with additional participants who use the actual app on a mobile device.

Most, if not all, mHealth behavioral interventions use a model in which a researcher determines the type, content, and timing of interventions that are “pushed” to the patient irrespective of user preferences; “push” approaches are associated with poor user experiences and, hence, early extinction of use [11]. The platform for this app was designed in the belief that mHealth information should be exchanged not in a purely automated way, but with the knowledge that there are patient-specific data and a provider behind these exchanges [9]. This requires both information that is pulled from the user, in the form of adherence reports, and information that is pushed to the user based on that information. The developers believe that together these two components can support better medication adherence.
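The pull-then-push exchange can be illustrated with a small dispatcher: an adherence report is pulled from the user, and the category of message pushed back depends on it. The `AdherenceReport` fields, thresholds and message categories here are hypothetical; they loosely echo participants' requests for both ‘good job’ messages and practical suggestions rather than bare warnings.

```python
from dataclasses import dataclass


@dataclass
class AdherenceReport:
    """Information pulled from the user (hypothetical fields)."""
    doses_scheduled: int
    doses_on_time: int


def push_category(report: AdherenceReport,
                  praise_at: float = 0.95, nudge_at: float = 0.80) -> str:
    """Choose what to push back, based on the pulled report (thresholds are assumptions)."""
    if report.doses_scheduled <= 0:
        return "none"              # nothing scheduled, nothing to say
    rate = report.doses_on_time / report.doses_scheduled
    if rate >= praise_at:
        return "praise"            # e.g. a 'good job' message or a game reward
    if rate >= nudge_at:
        return "practical_tip"     # a suggestion, not just a statement of the problem
    return "offer_support"         # e.g. offer contact with a clinician or social worker


print(push_category(AdherenceReport(14, 14)))  # praise
print(push_category(AdherenceReport(14, 12)))  # practical_tip
print(push_category(AdherenceReport(14, 7)))   # offer_support
```

Keeping the pushed content conditional on the pulled report is the point of the sketch; the specific wording and escalation path would come from further user research.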

A consequence of this perspective, however, is that interactions between the user and the app, and between the user and the health care providers to whom the app connects them, are both imbued with meaning. Negative emotional responses to communicated information have the potential to derail use of the app, while understanding positive emotional responses may be essential to both the app’s and the patients’ success.

7. Conclusion

If information or interventions are to be “pushed” to the user, then their acceptability needs to be maximized. While we feel that an iterative approach to mHealth intervention design is critical to making an app intrinsically acceptable, we also believe that the standard iterative approach of convening focus groups to guide mHealth app design requires rethinking. Our approach was typical of a ‘waterfall’ method in intervention development: we assembled a focus group, obtained data, conducted qualitative analyses to identify themes, and then met again with the focus group to see ‘if we got it right.’ Although effective and predictable, this process takes months. A more rapid approach involves the use of ‘agile’ methods, in which a small group comprising an interventionist, a programmer, a medical anthropologist, and a typical recipient of the behavioral intervention meets for a period of time (often approximately 2 weeks) during which the intervention is programmed. This product is tested, briefly, among members of the target population. After learning the impressions of the target population, the small group reconvenes to refine the programming. This process repeats until a final product is produced. Whereas development of a programmed intervention using waterfall methods can take months (or years), agile methods can produce the same result in a much shorter time.

To be successful, an agile approach requires effective, timely collaboration between developers, HCI experts, behavioral health researchers, clinicians and users. Translating abstract concepts to the computer screen requires understanding of the images’ valence and meaning to each of these constituencies in order to improve them. There have been important recent efforts to increase communication between and among these different disciplines [12, 13] and we hope that the methods developed during this project can contribute to that discussion.

A 30-person open trial of the iHAART app is planned for late 2014-2015. It will use a version of the app updated on the basis of this formative research.

Figure 3. The simulation screen.

Contributor Information

Rochelle K. Rosen, Behavioral & Preventive Medicine, The Miriam Hospital, Brown School of Public Health, Providence, RI

Megan L. Ranney, Emergency Medicine, Alpert School of Medicine, Brown University, Providence, RI

Edward W. Boyer, Emergency Medicine, UMASS Medical School

8. References

- [1] Fox S, Duggan M. Mobile Health 2012. Pew Internet & American Life Project. Pew Charitable Trusts; Washington, DC: 2012.

- [2] CTIA. Wireless Quick Facts. http://www.ctia.org/your-wireless-life/how-wireless-works/wireless-quick-fact/ Accessed June 13, 2014.

- [3] Pew Research Internet Project. Mobile Technology Fact Sheet. Pew Research Center; Washington, DC: http://www.pewinternet.org/data-trend/mobile/cell-phone-and-smartphone-ownership-demographics/

- [4] Pew Research Internet Project. Health Fact Sheet. Pew Research Center; Washington, DC: http://www.pewinternet.org/fact-sheets/health-fact-sheet/ Accessed June 14, 2014.

- [5] Sagolla D. 140 Characters: A Style Guide for the Short Form. John Wiley & Sons; Hoboken, NJ: 2009.

- [6] DHHS Panel on Antiretroviral Guidelines for Adults and Adolescents. Guidelines for the use of antiretroviral agents in HIV-1-infected adults and adolescents. Department of Health and Human Services; Dec 1, 2009.

- [7] Osterberg L, Blaschke T. Adherence to Medication. New England Journal of Medicine. 2005;353:487–92. doi: 10.1056/NEJMra050100.

- [8] Rigsby M, Rosen M, Beauvais J. Cue-dose training with monetary reinforcement: Pilot study of an antiretroviral adherence intervention. Journal of General Internal Medicine. 2000;15:841–7. doi: 10.1046/j.1525-1497.2000.00127.x.

- [9] Moore JO, Hardy H, Skolnik PR, Moss FH. A collaborative awareness system for chronic disease medication adherence applied to HIV infection. Conf Proc IEEE Eng Med Biol Soc. 2011:1523–7.

- [10] Aardex, Ltd. Medication Event Monitoring System cap. http://www.aardexgroup.com/upload/pdf/MEMS6_TrackCap_datasheet.pdf Accessed June 14, 2014.

- [11] Theofanos M, Stanton B, Wolfson C. Usability and Biometrics. National Institute of Standards and Technology, U.S. Department of Commerce; Gaithersburg, MD: 2008.

- [12] Klasnja P, Consolvo S, Pratt W. How to Evaluate Technologies for Health Behavior Change in HCI Research. CHI 2011; Vancouver, BC. May 7-12, 2011.

- [13] Hekler EB, Klasnja P, Froehlich JE, Buman MP. Mind the Theoretical Gap: Interpreting, Using and Developing Behavioral Theory in HCI Research. CHI 2013; Paris, France. April 27-May 2, 2013.