Science is an enterprise driven fundamentally by social relations and dynamics (1). Thanks to comprehensive bibliometric datasets on scientific production and to new tools developed in network science over the past decade, traces of these relations can now be analyzed in the form of citation and coauthorship networks, shedding light on the complex structure of scientific collaboration patterns (2, 3), on reputation effects (4), and even on the development of entire fields (5, 6). What about funding, however? How do the available funding options influence with whom we collaborate? Are there elite institutions that receive more funding than others? And how is the funding landscape changing? In PNAS, Ma et al. (7) explore a dataset of 43,000 projects funded by the Engineering and Physical Sciences Research Council, a major government research funding body in the United Kingdom, offering a unique perspective on these questions. In a longitudinal analysis covering three decades, Ma et al. (7) shed light on the relations between funding landscapes and scientific collaborations. The study finds inequality increasing over time on two levels: first, an elite circle of academic institutions tends to attract a disproportionate share of funding, and, second, the very same institutions prefer to collaborate with each other.

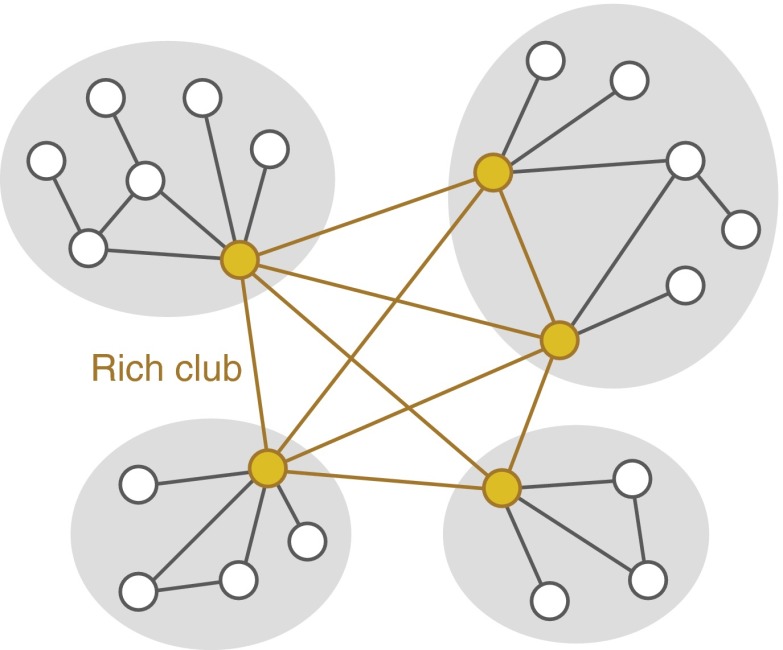

To quantify elitism in academic collaborations, Ma et al. (7) use methods from network science that have become indispensable in the analysis of complex systems (8). Their main metric captures the so-called “rich club” phenomenon (9), the tendency of nodes with many connections to form a tightly interconnected community (Fig. 1). Because rich clubs appear in many real-world systems, such as the physical structure of the Internet (10), various social systems, and the neural connections of the brain (11), finding one in a collaboration network is not alarming per se, provided its consequences are understood. On the one hand, membership in a rich club implies easy access to the other elite members of the system; on the other hand, it can provide a strategic brokerage position between nonelite members of different communities. Using an appropriate network measure, Ma et al. (7) indeed find increasing brokerage by top-funded universities in the United Kingdom over time, potentially giving them, beyond being well connected, greater power to control access to opportunities.
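To make the rich-club idea concrete, here is a minimal Python sketch of the standard, unnormalized rich-club coefficient φ(k) = 2E(>k) / [N(>k)(N(>k) − 1)], where N(>k) is the number of nodes with degree greater than k and E(>k) is the number of edges among those nodes (9, 10). It is an illustration under stated assumptions, not necessarily the exact variant used by Ma et al. (7); the toy edge list and the institution labels A to D are hypothetical.

```python
from collections import Counter


def clean_edges(edges):
    """Return undirected edges as frozensets, dropping self-loops and duplicates."""
    return {frozenset((u, v)) for u, v in edges if u != v}


def degree_counts(edges):
    """Degree of every node in a cleaned, undirected edge set."""
    deg = Counter()
    for edge in edges:
        for node in edge:
            deg[node] += 1
    return deg


def rich_club_coefficient(edges, k):
    """Unnormalized rich-club coefficient phi(k).

    phi(k) = 2 * E_k / (N_k * (N_k - 1)), where N_k counts nodes with
    degree > k and E_k counts edges whose two endpoints both have degree > k.
    Returns NaN when fewer than two nodes have degree > k.
    """
    edges = clean_edges(edges)
    deg = degree_counts(edges)
    rich = {node for node, d in deg.items() if d > k}
    n_k = len(rich)
    if n_k < 2:
        return float("nan")
    e_k = sum(1 for edge in edges if edge <= rich)  # both endpoints are rich
    return 2 * e_k / (n_k * (n_k - 1))


# Hypothetical toy collaboration network: four hub institutions A-D that all
# collaborate with each other, each with two peripheral partners.
HUBS = ["A", "B", "C", "D"]
EDGES = [(u, v) for i, u in enumerate(HUBS) for v in HUBS[i + 1:]]
EDGES += [(hub, f"{hub.lower()}{j}") for hub in HUBS for j in (1, 2)]

if __name__ == "__main__":
    for k in range(5):
        print(k, round(rich_club_coefficient(EDGES, k), 3))
```

Because the four hypothetical hubs form a complete clique, φ(k) = 1 for 1 ≤ k ≤ 4. Colizza et al. (9) caution that the raw coefficient tends to increase with k even in random networks, so in practice it is compared with the same quantity computed on degree-preserving randomized networks before concluding that a rich club is present.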

Fig. 1.

Schematic illustration of a rich club, adapted from Sporns (11). This network structure illustrates the tendency of nodes with high centrality (yellow) to form a tightly interconnected community. Members of the rich club can act as brokers between nodes that belong to different communities (white). Rich clubs are found in diverse systems, including the network of scientific collaborations between academic institutions studied by Ma et al. (7). In this case, the rich club implies a tight circle of elite institutions with increased power to control access to information or to funding opportunities.
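The brokerage role sketched in Fig. 1 can be made concrete with betweenness centrality, which counts how many shortest paths between other pairs of nodes pass through a given node. The Python sketch below implements Brandes' algorithm for unweighted, undirected graphs. Betweenness is used here only as a common stand-in for brokerage; it is not necessarily the measure used by Ma et al. (7), and the two-community network and its node names are hypothetical.

```python
from collections import defaultdict, deque


def build_adjacency(edges):
    """Undirected adjacency sets from an edge list (self-loops ignored)."""
    adj = defaultdict(set)
    for u, v in edges:
        if u != v:
            adj[u].add(v)
            adj[v].add(u)
    return dict(adj)


def betweenness(adj):
    """Unnormalized betweenness centrality via Brandes' algorithm.

    For an unweighted, undirected graph given as {node: set_of_neighbors},
    returns, for each node, the sum over unordered pairs (s, t) of the
    fraction of shortest s-t paths that pass through that node.
    """
    bc = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Breadth-first search from s, counting shortest paths (sigma).
        order, preds = [], {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in order of decreasing distance from s.
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Every unordered pair was counted once from each endpoint.
    return {v: c / 2 for v, c in bc.items()}


# Hypothetical network: elite hubs A and B collaborate directly; each has a
# small community of nonelite partners that reach the rest only via its hub.
EDGES = [("A", "B"),
         ("A", "a1"), ("A", "a2"), ("a1", "a2"),
         ("B", "b1"), ("B", "b2"), ("b1", "b2")]

if __name__ == "__main__":
    scores = betweenness(build_adjacency(EDGES))
    for node, score in sorted(scores.items(), key=lambda item: -item[1]):
        print(node, score)
```

In this toy network, each hub lies on every shortest path linking its own partners to the rest of the network, so A and B each score 6 while every peripheral node scores 0. A rise in such a score over time is the kind of signal the commentary describes as increasing brokerage.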

Should we be worried about these developments? The answer is not yet clear, because elite institutions such as Imperial College London not only attract more funding but also produce research with higher impact (7). It is clear, however, that the approach of Ma et al. (7) is an important step toward informing policy makers about the consequences of their funding strategies. Such insight matters in an era of “Big Science” projects with highly concentrated investments, such as the Human Brain Project, the European Commission’s €1-billion flagship program, where failure can lead to a more abrupt loss of resources (12).

By bringing institutions into the spotlight, Ma et al. (7) complement our previous understanding of collaboration patterns, which was derived mainly from coauthorship and citation data (2–6). Especially now, with research teams growing in size and interdisciplinarity (13, 14), their approach paves the way toward a more comprehensive framework of success and team formation in research. With scientists spending considerable time on grant applications (15), greater transparency and scrutiny of the structure of research funding seem a reasonable path toward improving the scientific enterprise. Like the open access movement in research publishing, such transparency might reduce unnecessary obstacles and wasteful spending, and it could help to identify and remove the bottlenecks that prevent resources from reaching the scientific talents most in need of financial support.

The quantitative and systematic assessment of research funding is a new approach that has recently brought to light worrisome phenomena in resource allocation, with the potential to stifle important scientific developments. For example, evaluators in grant committees systematically give lower scores to proposals that are closer to their own areas of expertise and to proposals that are highly novel (16). On the positive side, better peer review scores in grant proposal evaluations are consistently associated with better research outcomes, indicating that, overall, peer review does generate reliable information about the quality of applications that may not be available otherwise (17). In any case, continued large-scale analyses of the funding process will help us uncover hidden biases and provide the tools to correct them.

Mining datasets of funded research proposals is just one of many essential steps toward this goal. To uncover the socioeconomic mechanisms behind knowledge production in science, we must connect the dots between several phenomena, from the mobility of scientists (18) to the spread of ideas (19). We must also understand the dynamics of success in science: How and why do we cite others? Are there regional, gender-based, or other biases that influence the way we hire faculty (20) or quantify impact, thereby distorting the fair allocation of funding? If the new data sources and data mining advances of the past decade are any indication, answers to these questions will soon arrive, to the significant benefit of science as a whole.

Footnotes

The authors declare no conflict of interest.

See companion article on page 14760.

References

- 1. Merton RK. The Sociology of Science: Theoretical and Empirical Investigations. Univ of Chicago Press; Chicago: 1973.

- 2. Newman ME. The structure of scientific collaboration networks. Proc Natl Acad Sci USA. 2001;98(2):404–409. doi: 10.1073/pnas.021544898.

- 3. Moody J. The structure of a social science collaboration network: Disciplinary cohesion from 1963 to 1999. Am Sociol Rev. 2004;69(2):213–238.

- 4. Petersen AM, et al. Reputation and impact in academic careers. Proc Natl Acad Sci USA. 2014;111(43):15316–15321. doi: 10.1073/pnas.1323111111.

- 5. Redner S. Citation statistics from 110 years of Physical Review. Phys Today. 2005;58(6):49–54.

- 6. Sinatra R, et al. A century of physics. Nat Phys. 2015;11(10):791–796.

- 7. Ma A, Mondragón RJ, Latora V. Anatomy of funded research in science. Proc Natl Acad Sci USA. 2015;112:14760–14765. doi: 10.1073/pnas.1513651112.

- 8. Boccaletti S, Latora V, Moreno Y, Chavez M, Hwang DU. Complex networks: Structure and dynamics. Phys Rep. 2006;424(4):175–308.

- 9. Colizza V, Flammini A, Serrano MA, Vespignani A. Detecting rich-club ordering in complex networks. Nat Phys. 2006;2(2):110–115.

- 10. Zhou S, Mondragón RJ. The rich-club phenomenon in the Internet topology. IEEE Commun Lett. 2004;8(3):180–182.

- 11. Sporns O. Structure and function of complex brain networks. Dialogues Clin Neurosci. 2013;15(3):247–262. doi: 10.31887/DCNS.2013.15.3/osporns.

- 12. Theil S. Why the Human Brain Project went wrong—and how to fix it. Scientific American. 2015. Available at www.scientificamerican.com/article/why-the-human-brain-project-went-wrong-and-how-to-fix-it. Accessed October 29, 2015.

- 13. Wuchty S, Jones BF, Uzzi B. The increasing dominance of teams in production of knowledge. Science. 2007;316(5827):1036–1039. doi: 10.1126/science.1136099.

- 14. Börner K, et al. A multi-level systems perspective for the science of team science. Sci Transl Med. 2010;2(49):49cm24. doi: 10.1126/scitranslmed.3001399.

- 15. von Hippel T, von Hippel C. To apply or not to apply: A survey analysis of grant writing costs and benefits. PLoS One. 2015;10(3):e0118494. doi: 10.1371/journal.pone.0118494.

- 16. Boudreau K, Guinan E, Lakhani K, Riedl C. Looking across and looking beyond the knowledge frontier: Intellectual distance and resource allocation in science. Social Science Research Network. 2015. Available at ssrn.com/abstract=2478627. Accessed October 29, 2015.

- 17. Li D, Agha L. Big names or big ideas: Do peer-review panels select the best science proposals? Science. 2015;348(6233):434–438. doi: 10.1126/science.aaa0185.

- 18. Deville P, et al. Career on the move: Geography, stratification, and scientific impact. Sci Rep. 2014;4:4770. doi: 10.1038/srep04770.

- 19. Kuhn T, Perc M, Helbing D. Inheritance patterns in citation networks reveal scientific memes. Phys Rev X. 2014;4(4):041036.

- 20. Clauset A, Arbesman S, Larremore DB. Systematic inequality and hierarchy in faculty hiring networks. Sci Adv. 2015;1(1):e1400005. doi: 10.1126/sciadv.1400005.