Abstract

Purpose

This study examined vowel characteristics in adult-directed (AD) and infant-directed (ID) speech to children with hearing impairment who received cochlear implants or hearing aids compared with speech to children with normal hearing.

Method

Mothers' AD and ID speech to children with cochlear implants (Study 1, n = 20) or hearing aids (Study 2, n = 11) was compared with mothers' speech to controls matched on age and hearing experience. The first and second formants of vowels /i/, /ɑ/, and /u/ were measured, and vowel space area and dispersion were calculated.

Results

In both studies, vowel space was modified in ID compared with AD speech to children with and without hearing loss. Study 1 showed larger vowel space area and dispersion in ID compared with AD speech regardless of infant hearing status. The pattern of effects of ID and AD speech on vowel space characteristics in Study 2 was similar to that in Study 1, but depended partly on children's hearing status.

Conclusion

Given previously demonstrated associations between expanded vowel space in ID compared with AD speech and enhanced speech perception skills, this research supports a focus on vowel pronunciation in developing intervention strategies for improving speech-language skills in children with hearing impairment.

The nature of spoken language input typically experienced by children with normal hearing has a strong influence on the development of linguistic and cognitive abilities (Hart & Risley, 1995; Hurtado, Marchman, & Fernald, 2008; Kaplan, Bachorowski, Smoski, & Hudenko, 2002; Kaplan, Bachorowski, & Zarlengo-Strouse, 1999; Liu, Kuhl, & Tsao, 2003; Soderstrom, 2007). Infant-directed (ID) speech, often referred to as hyperspeech (Fernald, 2000), is characterized by a number of prosodic, phonetic, morphological, syntactic, and semantic modifications compared with adult-directed (AD) speech (Bernstein Ratner, 1986; Burnham, Kitamura, & Vollmer-Conna, 2002; Fernald, 1989; Kuhl et al., 1997; Papousek, Papousek, & Haekel, 1987; Soderstrom, 2007; Stern, Spieker, Barnett, & MacKain, 1983). Such modifications are suggested to fulfill three main functions: modulating children's attention and arousal levels, communicating maternal affect, and facilitating language acquisition (Fernald, 1989; Stern et al., 1983).

Despite evidence regarding the importance of ID speech for development in children with normal hearing, researchers have only recently started to examine the properties of linguistic input to children with hearing loss (Bergeson, Miller, & McCune, 2006; Kondaurova & Bergeson, 2011; Kondaurova, Bergeson, & Dilley, 2012; Kondaurova, Bergeson, & Xu, 2013; C. Lam & Kitamura, 2010). The effect of child hearing status on maternal speech input may serve an important role as a predictor of the enormous variability observed in language acquisition skills in pediatric populations with hearing impairment (Houston, Ying, Pisoni, & Kirk, 2003; McKinley & Warren, 2000; Miyamoto, Svirsky, & Robbins, 1997; Niparko et al., 2010; Pisoni et al., 2008; Svirsky, Robbins, Kirk, Pisoni, & Miyamoto, 2000). Depending on the degree of hearing loss and the amount of residual hearing, children with hearing impairment may receive a hearing aid (for mild to moderate hearing loss) or a cochlear implant (for severe to profound hearing loss; Joint Committee on Infant Hearing, 2007). The present study examined the phonetic characteristics of ID speech to children with hearing impairment who received an assistive device (i.e., cochlear implant or hearing aid) compared with ID speech to matched children with normal hearing, focusing specifically on the vowel space.

Substantial evidence demonstrates that one of the major phonetic modifications in ID compared with AD speech concerns the articulation of point vowels /i/, /ɑ/, and /u/, as reflected in an expanded acoustic vowel space, which is indexed by first and second formant frequencies (F1 and F2, respectively; Burnham et al., 2002; Kuhl et al., 1997; Liu et al., 2003; Liu, Tsao, & Kuhl, 2009; Uther, Knoll, & Burnham, 2007). An expanded vowel space in ID relative to AD speech has now been documented in several languages and dialects, including American and Australian English, Swedish, Russian, and Mandarin Chinese (Burnham et al., 2002; Kuhl et al., 1997; Liu et al., 2009). Kuhl et al. (1997) proposed that the increased acoustic distance between vowel categories promotes language acquisition by more effectively separating sounds into contrastive categories and highlighting between-categories differences in ID relative to AD speech. Since this “hyperarticulation hypothesis” was first put forward (Kuhl et al., 1997), several studies have demonstrated evidence in support of the idea that the increased acoustic distance between vowels facilitates language acquisition in children (Liu et al., 2003; Song, Demuth, & Morgan, 2010). Liu et al. (2003) found a positive correlation between the degree of the expansion of mothers' acoustic vowel space in speech to 6- to 8-month-old and 10- to 12-month-old children and the children's ability to discriminate native Mandarin Chinese consonant contrasts. In a more recent study, Song et al. (2010) showed that vowel space expansion facilitated 19-month-old children's word recognition in American English.

Recent studies have presented contradictory results on the degree of vowel space expansion in ID speech to populations both with and without hearing impairment (Cristia & Seidl, 2014; Englund & Behne, 2006; Kondaurova et al., 2012; C. Lam & Kitamura, 2010, 2012). Several studies have demonstrated no enhancement of the distance between vowel categories and/or expansion of the acoustic vowel space area in speech to children with normal hearing compared with AD speech (Cristia & Seidl, 2014; Englund & Behne, 2006). Although evidence of vowel space expansion exists when a talker is aware that an adult listener has a hearing impairment (Ferguson & Kewley-Port, 2002; Picheny, Durlach, & Braida, 1986), partial or no vowel space expansion was observed in speech to children with hearing impairment (Kondaurova et al., 2012; C. Lam & Kitamura, 2010).

A case study by C. Lam and Kitamura (2010) demonstrated that the vowel space area in speech to a 15.5-month-old child with hearing impairment fitted bilaterally with hearing aids was smaller than the vowel space area in speech to his twin brother with normal hearing and in speech to an adult experimenter. Research with children who were prelingually deaf prior to cochlear implantation demonstrated an expanded vowel space in ID speech for only a subset of vowels (/u/ and /ɪ/; Kondaurova et al., 2012). In order to investigate the effect of hearing impairment on vowel space in ID speech with more participants, C. Lam and Kitamura (2012) simulated hearing loss in infants with normal hearing. They placed mothers and infants in separate rooms and used closed-circuit television to allow visual contact between the mother and infant while manipulating the volume of the auditory signal delivered to the child. The authors found that mothers expanded their vowel space when the infants could hear them at full or partial volume but that mothers did not expand their vowel space when the infants could not hear their speech.

Several considerations suggest the need to better understand the phonetic characteristics of speech to children with hearing impairment. First, given the prior links established between vowel space expansion and speech-language development (Kuhl et al., 1997; Liu et al., 2003), it is noteworthy that children with hearing impairment demonstrate lower responsiveness during mother–child interaction compared with children with normal hearing (Koester, 1995; Pressman, Pipp-Siegel, Yoshinaga-Itano, Kubicek, & Emde, 1998; Schlesinger & Meadow, 1972; Wedell-Monning & Lumley, 1980). For this reason, mothers might face greater demand for engaging and maintaining the attention of children with hearing impairment, which could be achieved by sacrificing vowel space expansion in favor of acoustic characteristics of ID speech that function primarily to modulate children's attention (e.g., pitch, vowel duration, speech rate; C. Lam & Kitamura, 2012). Recent research also suggests that mothers adjust prosodic characteristics of their speech to their child with hearing impairment on the basis of the child's amount of hearing experience rather than the child's chronological age (Bergeson et al., 2006; Kondaurova & Bergeson, 2011; Kondaurova et al., 2013). However, mothers also produced more syllables in speech overall to both age-matched and experience-matched children than to the children's peers with hearing impairment at 3, 6, and 12 months after cochlear implantation. These findings further support the possibility that a child's hearing status may affect the segmental properties of ID speech (Kondaurova et al., 2013). 
Overall, further investigation of phonetic characteristics of ID speech to sizeable groups of children with hearing impairment who received assistive devices (both cochlear implants and hearing aids) is needed in order to understand how properties of maternal speech vary as a function of child hearing status and how this input may contribute to language delays (and their prevention) in pediatric populations with hearing impairment.

The present research examines acoustic characteristics of three point vowels—/i/, /ɑ/, and /u/—in speech to children with hearing impairment who received either cochlear implants (Study 1) or hearing aids (Study 2). Investigating speech to both groups of children with hearing impairment allows us to separately examine the characteristics of ID speech specific to each of these groups that differ in age and type of assistive device (which may be related to amount of residual hearing). These acoustic properties of speech to children with hearing impairment were then compared with those produced in speech to children with normal hearing, matched by either chronological age or hearing experience, and to an adult experimenter. This study design permitted a determination of whether mothers tailor their speech input to the amount of hearing experience of a child with hearing impairment, as has been found in previous studies (Bergeson et al., 2006; Kondaurova & Bergeson, 2011; Kondaurova et al., 2013). We predicted that the acoustic properties of the point vowels /i/, /ɑ/, and /u/ would be more distinctive in ID than in AD speech in the population with normal hearing (Burnham et al., 2002; Kuhl et al., 1997; Liu et al., 2003, 2009; Uther et al., 2007). We also predicted that there would be less vowel space expansion in input directed to children with hearing impairment than in that directed to control groups for ID relative to AD speech (Kondaurova et al., 2012; C. Lam & Kitamura, 2010, 2012).

Study 1

Study 1 investigated how a child's hearing status affected the vowel space characteristics associated with the point vowels /i/, /ɑ/, and /u/ between ID and AD speech. In this study, we focused on vowels in speech directed to children with profound hearing loss who were fitted with cochlear implants (HI-CI), compared with speech directed to children with normal hearing matched on chronological age (NH-CAM) or amount of hearing experience (NH-HEM).

Method

Participants

All mothers were native speakers of American English. Twenty dyads of mothers with normal hearing and their children with hearing impairment fitted with cochlear implants (HI-CI; five girls, 15 boys) were recruited from the clinical population at the Indiana University School of Medicine Department of Otolaryngology—Head and Neck Surgery. The HI-CI group of participants was invited for two visits at approximately 3 and 6 months after cochlear implant stimulation. Table 1 shows the means, standard deviations, and ranges for the ages of the children with hearing impairment. Table 2 provides available information on communication method, deafness etiology, and the type of cochlear implant device for each child in the HI-CI group.

Table 1.

Age (in months) and hearing experience of participants with hearing impairment fitted with cochlear implants (HI-CI) or hearing aids (HI-HA), their chronological-age matches with normal hearing (NH-CAM), and of their hearing-experience matches with normal hearing (NH-HEM).

| Group and age classification | First session: Range | First session: M (SD) | Second session: Range | Second session: M (SD) |
|---|---|---|---|---|
| Study 1 | | | | |
| HI-CI group: chronological age | 11.2–27.6 | 18.2 (4.9) | 15.8–32.2 | 21.6 (5.0) |
| NH-CAM group: chronological age | 11.4–27.5 | 18.2 (4.8) | 15.9–32.0 | 21.6 (4.9) |
| HI-CI group: hearing experience | 2.8–3.6 | 3.1 (0.3) | 5.6–8.0 | 6.5 (0.6) |
| NH-HEM group: chronological age | 2.8–4.3 | 3.2 (0.5) | 5.0–9.3 | 6.7 (1.2) |
| Study 2 | | | | |
| HI-HA group: chronological age | 5.6–22.3 | 11.9 (5.6) | 8.7–24.2 | 14.8 (5.2) |
| NH-CAM group: chronological age | 5.5–21.6 | 11.5 (5.3) | 8.6–23.8 | 14.7 (5.0) |
| HI-HA group: hearing experience | 2.3–4.5 | 3.3 (0.5) | 4.7–7.9 | 6.2 (0.8) |
| NH-HEM group: chronological age | 2.3–6.6 | 3.4 (1.1) | 5.0–8.0 | 6.2 (0.8) |

Table 2.

Hearing assistive device type and processor, communication method, and deafness etiology for participants with hearing impairment fitted with cochlear implants (Study 1) or hearing aids (Study 2)

| Code | Device | Processor | Communication method<sup>a</sup> | Etiology |
|---|---|---|---|---|
| Study 1 | | | | |
| 2514 | Nucleus 24 K | Sprint | Total | Genetic |
| 2515 | Nucleus 24 Contour | Sprint | Cued speech | Auditory neuropathy |
| 2518 | Nucleus 24 Contour | Sprint | Oral | Genetic |
| 2519 | Med-El C 40+ | Tempo + | Oral | Waardenburg syndrome |
| 2523 | Nucleus 24 Contour | Sprint | Oral | Unknown |
| 2528 | Nucleus 24 Contour | Sprint | Total | Unknown |
| 2529 | Med-El C 40+ | Tempo + | Oral | Branchio-oto-renal syndrome |
| 2533 | Nucleus 24 Contour | Sprint | Oral | Genetic |
| 2534 | Nucleus 24 Contour | Sprint | Oral | Auditory neuropathy/LVA |
| 2535 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Mondini |
| 2536 | Clarion HiRes 90 k | PSP | Total | Unknown |
| 2537 | Nucleus Freedom—Straight | Freedom | Total | VATER syndrome |
| 2540 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| 2542 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| 2543 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| 2795 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Genetic |
| 2813 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| 3029 | Advanced Bionics HiRes 90 K | PSP/Harmony | Oral | Unknown |
| 3058 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| 3098 | Nucleus Freedom—Contour Adv. | Freedom | Oral | Unknown |
| Study 2 | | | | |
| 2114 | Oticon Gaia BTEs | | Oral | Genetic |
| 2489 | Phonak Maxx 211 BTE | | Total | Unknown |
| 2491 | Phonak Maxx 311 BTEs | | Unavailable | Unavailable |
| 2493 | Oticon Gaia BTEs | | Unavailable | Unavailable |
| 2744 | Phonak Power Maxx 411 BTEs | | Unavailable | Unavailable |
| 2769 | Phonak Maxx 311 BTEs | | Oral/Signed Exact English | Mitochondrial myopathy/LVA |
| 2884 | Phonak Maxx 311 BTEs | | Total | Unknown |
| 2891 | Oticon Gaia Power BTEs | | Oral | Leigh's disease |
| 3029 | Oticon Tego Pro BTEs | | Oral | Unknown |
| 3031 | Oticon Sumo BTE (L), Oticon Tego Pro BTE | | Oral/American Sign Language | Unknown |
| 3699 | Oticon Tego Pro BTEs | | Oral | Unknown |

Note. LVA = large vestibular aqueduct; Adv. = advanced; VATER = vertebral, anus, trachea, esophagus, and/or renal abnormalities; BTE = behind-the-ear.

<sup>a</sup>Communication method describes exclusively spoken language (oral) or a combination of spoken language with Signed Exact English (total).

Twenty dyads of mothers with normal hearing and their chronological age–matched children with normal hearing (NH-CAM; 13 girls, seven boys) were recruited from the local community (see Table 1 for details). The NH-CAM children had a chronological age that was matched to that of the HI-CI children at the time of each of the two visits. Twenty dyads of mothers with normal hearing and their hearing experience–matched children with normal hearing (NH-HEM; eight girls, 12 boys) were recruited from the local community (see Table 1 for details).1 They were invited for two sessions that coincided with the HI-CI children's amount of hearing experience (i.e., time poststimulation) at the time of the visit. Thus, NH-HEM children were approximately 3 and 6 months of age at the two visits.

This research and the recruitment of human participants were approved by the Indiana University Institutional Review Board. All mothers were paid $10 per visit, and most mothers of HI-CI children received reimbursement for mileage and lodging.

Procedure

Recordings

Mothers were digitally recorded speaking to their children and to an adult experimenter in a double-walled, copper-shielded sound booth (Industrial Acoustics Co., New York, NY). In the ID condition the mother was asked to sit with her child on a chair or blanket on the floor and was instructed to speak to her child as she normally would at home. The mother was provided with quiet toys (i.e., green key, pink ball, green turtle, brown dog, blue button, and black cat) but was not explicitly told to say the toy names. In the AD condition each mother gave responses in a semistructured short interview, with an adult experimenter utilizing open-ended questions. Both tasks used spontaneous speech in order to generate a sample that was as naturalistic as possible. ID sessions averaged 5.1 min in length (SD = 0.9; range = 2.5–9.6) and AD sessions averaged 4.6 min in length (SD = 2.7; range = 1.3–20.5). The order of ID and AD recordings was counterbalanced across mothers.

Mothers' speech was recorded in one of two ways. The initial system used a hypercardioid microphone (ES933/H, Audio-Technica, Tokyo, Japan) powered by a phantom power source and linked to an amplifier (DSC 240) and digital audio tape recorder (DTC-690, Sony, Tokyo, Japan). Partway through this longitudinal project, the equipment was updated to an SLX Wireless Microphone System (Shure, Niles, IL). This system included an SLX1 Bodypack transmitter with a built-in microphone and an SLX4 wireless receiver, which was connected to a 3CCD Digital Video Camcorder GL2 NTSC (Canon, Tokyo, Japan) and recorded the speech samples directly onto a Macintosh computer (OSX Version 10.4.10; Apple Inc., Cupertino, CA) via Hack TV (Version 1.11) software. No systematic differences were found across recording sessions or participant groups in terms of recording technology. Recordings were made at a sampling rate of 22050 Hz with a 16-bit quantization rate.

Mothers were recorded speaking to their children at two sessions and to an adult experimenter at one or both of these sessions. In total, there were 238 recordings (ID condition: HI-CI group = 40 recordings, NH-CAM group = 40 recordings, NH-HEM group = 40 recordings; AD condition: HI-CI group = 38 recordings, NH-CAM group = 40 recordings, NH-HEM group = 40 recordings).2

Token Identification

For each ID and AD condition, instances of target /i/, /ɑ/, and /u/ vowels were identified. We included only those vowels that occurred in stressed syllables, defined as monosyllabic content words and primary-stressed syllables of polysyllabic words. Because vowels were extracted from spontaneous speech, they occurred in a variety of segmental contexts; however, the segmental contexts were comparably varied across ID and AD conditions. Vowels in onomatopoeic speech were included only if the onomatopoeic words were part of a standard vocabulary of imitation sounds (e.g., beep, boo, moo). Due to a vowel merger between /ɑ/ and /ɔ/ in progress in Indiana (Labov, Ash, & Boberg, 2006), it was impossible to reliably determine for a given speaker whether a particular token of a low back vowel reflected a single phonemic category or two, given the expectation of substantial within-category variation in the vowel space for the ID register overall. As a result, all instances of low back vowels were treated as a single category. Furthermore, vowel tokens immediately followed by /l/ and /r/ were excluded from the study to prevent any effects of /l/- and /r/-coloring on formant measurements.

The acoustic analysis included measurements of formant frequencies for the three point vowels /i/, /ɑ/, and /u/, which also formed the basis for calculations of vowel space area and vowel space dispersion. The spontaneous nature of the speech led to a low number of analyzable tokens of these vowels in many of the mothers' speech samples. Therefore, speech samples from both recording intervals were combined and treated as a single speech sample in order to ensure that enough data were available for analysis. In addition, the following procedures were applied in the collection of tokens from participants: (a) If a mother in any group (HI-CI, NH-CAM, NH-HEM) produced fewer than three tokens of a point vowel that met inclusion criteria in either speech condition (AD, ID), the mother's data for that vowel would be excluded from the following acoustic analysis; (b) if a mother produced more than 20 tokens of a given vowel in either speech condition (AD, ID), a random sample of 20 vowel tokens was used for the analysis; (c) if a mother was excluded from the analysis of any point vowel on the basis of criterion a, this mother's data was also excluded from measurements of vowel space area and dispersion because these measures are dependent on having samples of all three point vowels; and (d) tokens from speech to children with normal hearing were included only in the analyses in which the HI-CI match child had met the previous inclusion criteria and vice versa. On the basis of these standards, 57 participants (19 per group: HI-CI, NH-CAM, and NH-HEM) were included for /ɑ/ formant measures, 45 participants (15 per group) for /i/, and 42 participants (14 per group) for /u/. Thirty-three participants (11 per group) were included in measures of vowel space area and dispersion.
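The participant-level inclusion rules (a), (c), and (d) can be sketched in code. The following is a minimal illustration rather than the procedure actually used: it assumes that each mother of a child with hearing impairment is grouped with her two matched control mothers in a triad, and the data layout, function names, and example counts are all hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

MIN_TOKENS = 3               # criterion (a): fewer than three tokens excludes the vowel
VOWELS = ("i", "a", "u")     # ASCII stand-ins for /i/, /ɑ/, /u/
STYLES = ("AD", "ID")

# counts[mother][style][vowel] -> number of usable tokens (hypothetical layout)
Counts = Dict[str, Dict[str, Dict[str, int]]]
Triad = Tuple[str, str, str]  # (HI-CI, NH-CAM, NH-HEM) mother IDs, assumed matching


def vowel_ok(counts: Counts, mother: str, vowel: str) -> bool:
    """Criterion (a): at least MIN_TOKENS tokens in both AD and ID speech."""
    return all(counts[mother][style].get(vowel, 0) >= MIN_TOKENS for style in STYLES)


def triads_for_vowel(counts: Counts, triads: Sequence[Triad], vowel: str) -> List[Triad]:
    """Criterion (d): keep a matched triad only if every member passes (a)."""
    return [t for t in triads if all(vowel_ok(counts, m, vowel) for m in t)]


def triads_for_area(counts: Counts, triads: Sequence[Triad]) -> List[Triad]:
    """Criterion (c) with (d): area and dispersion need all three point vowels."""
    return [t for t in triads
            if all(vowel_ok(counts, m, v) for m in t for v in VOWELS)]


# Made-up token counts for one triad; "ci01" has only two ID tokens of /u/.
example_counts: Counts = {
    "ci01":  {"AD": {"i": 5, "a": 9, "u": 3}, "ID": {"i": 4, "a": 12, "u": 2}},
    "cam01": {"AD": {"i": 6, "a": 8, "u": 4}, "ID": {"i": 3, "a": 7, "u": 5}},
    "hem01": {"AD": {"i": 7, "a": 9, "u": 6}, "ID": {"i": 5, "a": 6, "u": 4}},
}
example_triads: List[Triad] = [("ci01", "cam01", "hem01")]
```

In this made-up example the triad is retained for /ɑ/ but dropped for /u/, and therefore also dropped from vowel space area and dispersion. Excluding whole triads is one way to obtain equal group sizes such as those reported above.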

On average, 11 (SD = 5.2) tokens of each vowel (/i/, /ɑ/, and /u/) for each speaker were analyzed in the ID speech condition and 16 (SD = 5.6) tokens of each vowel for each speaker were analyzed in the AD speech condition. A total of 929 tokens in the ID speech condition and 1,609 tokens in the AD speech condition were analyzed. The PRAAT 5.0.21 editor (Boersma & Weenink, 2012) and MATLAB (MathWorks, 2009) software were used to identify and segment each vowel in the recorded speech on the basis of a combination of waveform and spectral cues.

Acoustic Analysis

Formant frequencies. Phonetic analysts trained in formant analysis first identified the onset and offset of each randomly selected vowel token via visual inspection of spectrogram and waveform information using segmentation criteria established for the Buckeye Corpus (Pitt et al., 2007). Measurements of F1 and F2 were then taken at the vowel midpoint using a combination of spectral slices, visual inspection of spectrograms, and linear predictive coding estimates; all F1 and F2 measurements were checked by hand for correctness. Analysts identified individual tokens of the target vowels as usable if the first two formants were reasonably clear and measurements fell within an expected range of the mean, plus or minus 3 SD, as determined by mean formant values for female talkers across multiple studies tabulated in Kent and Read (1992). Tokens that fell outside the expected range, that had strongly stratified harmonics, or for which F1 or F2 could not be determined due to high fundamental frequency (F0), coarticulation, poor sound quality, background noise, and so on were checked by one of the authors for usability before being included in or excluded from the analysis. All tokens with an F0 of 350 Hz or higher were excluded due to the quantization of the spectrum (i.e., the trade-off between F0 and formant resolution in time-frequency analysis under source-filter theory; Johnson, 2004; Stevens, 2000); this provided a means of reducing variability and unreliability associated with measurements of high-F0 tokens (Vallabha & Tuller, 2002). If a randomly selected token of a given vowel was excluded for any reason, it was replaced by another token of that vowel randomly selected from among the remaining tokens produced by that mother in the same speech condition. A total of 12% of selected tokens were excluded for various reasons (5% AD condition, 19% ID condition); of these, 65% were excluded for high F0 (33% AD condition, 76% ID condition).
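The token-level selection described above (a random draw of up to 20 tokens, with excluded tokens replaced by other randomly chosen tokens) can be illustrated as follows. This is a sketch under stated assumptions, not the authors' script: the token fields `f0_hz` and `formants_clear` are hypothetical, and `formants_clear` stands in for the analysts' manual usability judgment.

```python
import random
from typing import Dict, List, Sequence

F0_CUTOFF_HZ = 350.0   # tokens with F0 at or above this value were excluded
MAX_TOKENS = 20        # cap per vowel, speaker, and speech condition


def draw_tokens(tokens: Sequence[Dict], rng: random.Random) -> List[Dict]:
    """Randomly draw up to MAX_TOKENS usable tokens of one vowel.

    Walking through the tokens in shuffled order and skipping unusable ones
    has the same effect as replacing each excluded token with another
    randomly selected token from the remaining pool.
    """
    order = list(tokens)
    rng.shuffle(order)
    usable = [t for t in order
              if t["f0_hz"] < F0_CUTOFF_HZ and t["formants_clear"]]
    return usable[:MAX_TOKENS]


# Made-up pool: F0 values 200, 210, ..., 490 Hz, all with clear formants.
example_pool = [{"f0_hz": 200.0 + 10.0 * k, "formants_clear": True}
                for k in range(30)]
example_draw = draw_tokens(example_pool, random.Random(0))
```

Here only the 15 tokens below 350 Hz survive, so the draw returns 15 tokens rather than 20; a speaker with fewer than three survivors would fall under the exclusion rule for that vowel.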

Formant values in Hertz were then converted to mel units. The mel scale is based on psychophysical studies of pitch distance and reflects human perception of frequency more directly than linear Hertz. The relationship between the mel scale and Hertz is a nonlinear, strictly monotonic increasing function, such that above 500 Hz larger and larger intervals are judged by listeners to produce equal pitch increments. The mel scale has been used in many prior studies of vowel space and formant characteristics (e.g., Bradlow, Torretta, & Pisoni, 1996; Englund & Behne, 2005; Kuhl et al., 1997; C. Lam & Kitamura, 2010, 2012). The following equation was used for the conversion of Hertz (Hz) to mels (Fant, 1973, in Bradlow et al., 1996):
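The conversion equation does not appear in the text above. The form generally attributed to Fant (1973) is M = (1000 / log 2) × log(F / 1000 + 1), where F is frequency in Hertz and M is the value in mels. The sketch below assumes that form; the function names are illustrative.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Hertz to mels, assuming Fant's form M = (1000 / log 2) * log(F / 1000 + 1)."""
    # 1000 * log2(x) is algebraically identical to (1000 / log 2) * log(x).
    return 1000.0 * math.log2(1.0 + f_hz / 1000.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel under the same assumed formula."""
    return 1000.0 * (2.0 ** (mel / 1000.0) - 1.0)


if __name__ == "__main__":
    for f in (300.0, 1000.0, 2500.0):
        print(f, "Hz ->", round(hz_to_mel(f), 1), "mels")
```

Under this formula 1000 Hz maps to 1000 mels, and equal steps in Hertz correspond to progressively smaller steps in mels as frequency rises.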

The mels conversion provided the basis for all analyses reported. The means and standard deviations of F1 and F2 were determined for each speaker in both the ID and AD speech conditions.

Vowel space area. Vowel space triangles were constructed in an x–y plane, where the average F1 and F2 values of /i/, /ɑ/, and /u/ vowels were the respective x and y coordinates of the corners. The area of the resultant triangles in both the ID and AD conditions was calculated using the following equation (Liu et al., 2003):
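The area equation does not appear in the text above. A minimal sketch follows, assuming the standard coordinate formula for the area of a triangle with corners at the mean (F1, F2) of each point vowel, which is the form usually cited from Liu et al. (2003). The function name is illustrative, and the example uses the HI-CI group means for AD speech from Table 4 purely as sample input.

```python
from typing import Tuple

Point = Tuple[float, float]  # (mean F1, mean F2) in mels


def vowel_space_area(i: Point, a: Point, u: Point) -> float:
    """Triangle area from the mean (F1, F2) of /i/, /ɑ/, and /u/.

    Area = |F1i*(F2a - F2u) + F1a*(F2u - F2i) + F1u*(F2i - F2a)| / 2
    """
    (f1i, f2i), (f1a, f2a), (f1u, f2u) = i, a, u
    return abs(f1i * (f2a - f2u) + f1a * (f2u - f2i) + f1u * (f2i - f2a)) / 2.0


# Group-level means (mels) for HI-CI, AD speech, taken from Table 4.
example_area = vowel_space_area((475, 1866), (835, 1238), (526, 1531))
```

The result is in mels squared. A value computed from group means in this way need not equal the corresponding entry in Table 3, because the tabled areas are averages of per-speaker triangles over the subset of mothers who had all three vowels.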

Vowel space dispersion. Previous research on AD speech has identified vowel space dispersion as a good index of speech clarity (Bradlow et al., 1996). The vowel space dispersion is calculated by measuring the distance of each token from a central point in the talker's vowel space. This measure provides an indication of the overall expansion or compaction of the set of vowel tokens from each participant and detects fine-grained individual differences in acoustic–phonetic characteristics (Bradlow et al., 1996). By capturing an aspect of vowel production characteristics that is slightly different than that captured using the traditional Heron method (Kuhl et al., 1997; Neel, 2008), this metric helps to provide an assessment of vowel clarity. Vowel space dispersion was calculated using the centroid of each speaker's vowel space triangle and averaging the distances of the individual tokens from the centroid (see Appendix for further detail; Bradlow et al., 1996).
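The dispersion measure described above can be sketched as the mean Euclidean distance of a speaker's tokens from the centroid of her vowel triangle. This sketch assumes that the centroid is the mean of the three triangle corners and that all values are in mels; the function names and the toy input are hypothetical, and the Appendix may specify further details.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (F1, F2) in mels


def triangle_centroid(corners: Sequence[Point]) -> Point:
    """Centroid of the vowel triangle: the mean of its three corner points."""
    xs = [c[0] for c in corners]
    ys = [c[1] for c in corners]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def vowel_space_dispersion(tokens: Sequence[Point], corners: Sequence[Point]) -> float:
    """Mean Euclidean distance of individual tokens from the triangle centroid."""
    cx, cy = triangle_centroid(corners)
    return sum(math.hypot(f1 - cx, f2 - cy) for f1, f2 in tokens) / len(tokens)


# Toy input: centroid is (1, 1); the two tokens lie 0 and 5 units away.
toy_corners = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
toy_tokens = [(1.0, 1.0), (4.0, 5.0)]
toy_dispersion = vowel_space_dispersion(toy_tokens, toy_corners)
```

Unlike the triangle area, which depends only on the three category means, this measure uses every token, so it also reflects within-category spread.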

Reliability

The formants of any vowel token whose F1 or F2 was 2 SD or more away from a given participant's mean F1 or F2, respectively, were checked by hand to ensure accuracy. In addition, trained analysts remeasured a random selection of 5% of the tokens used in each speech sample for an analysis of interrater reliability. The percentage difference (Δi) between the first rater's measurement (r1) and the second rater's measurement (r2) was calculated using the equation (Kuhl et al., 1997)

The average interrater percentage difference was 8.0% (SD = 8.4). This is in line with reliability reported in previous studies and indicates high interrater reliability (e.g., Kuhl et al., 1997).

Results and Discussion

Vowel Space Area and Vowel Space Dispersion

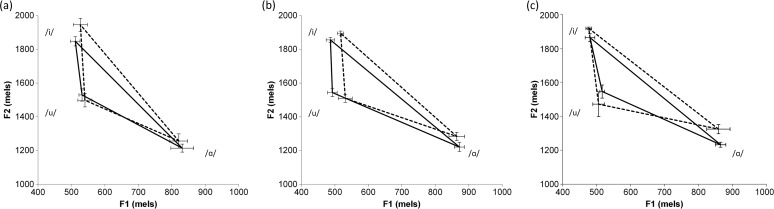

We first examined whether an overall difference in vowel space area or vowel space dispersion existed. The means and standard deviations of the vowel space area and dispersion values for ID and AD speech in all three groups are reported in Table 3, and Figure 1 shows vowel space triangles for each group. A 2 (speech style: AD, ID) × 3 (hearing status: HI-CI, NH-CAM, NH-HEM) mixed-measures analysis of variance (ANOVA) was conducted separately for vowel space area and vowel space dispersion, with speech style as a within-subject factor and hearing status as a between-subjects factor.

Table 3.

Average (SD) vowel space area in mels squared and vowel space dispersion in mels for infant-directed (ID) and adult-directed (AD) speech to participants with hearing impairment fitted with cochlear implants (HI-CI, Study 1) or hearing aids (HI-HA, Study 2), their chronological-age matches with normal hearing (NH-CAM), and their hearing-experience matches with normal hearing (NH-HEM).

| Variable | Hearing status | AD | ID |
|---|---|---|---|
| Study 1 | | | |
| Area | HI-CI | 54878 (32496) | 82922 (41161) |
| | NH-CAM | 63672 (31090) | 79326 (35213) |
| | NH-HEM | 52730 (23493) | 79213 (36981) |
| | All | 57094 (28769) | 80487 (36707) |
| Dispersion | HI-CI | 330 (31) | 336 (35) |
| | NH-CAM | 322 (33) | 333 (34) |
| | NH-HEM | 309 (32) | 342 (32) |
| | All | 320 (32) | 337 (33) |
| Study 2 | | | |
| Area | HI-HA | 45159 (19952) | 62913 (21529) |
| | NH-CAM | 58609 (14510) | 61842 (14207) |
| | NH-HEM | 48914 (13235) | 74872 (43906) |
| | All | 50894 (16264) | 66542 (28277) |
| Dispersion | HI-HA | 307 (23) | 349 (43) |
| | NH-CAM | 329 (19) | 315 (18) |
| | NH-HEM | 310 (22) | 347 (31) |
| | All | 315 (23) | 337 (34) |

Figure 1.

Infant-directed (dashed line) and adult-directed (solid line) vowel space triangles that are based on average first formant (F1) and second formant (F2) values for speech to (a) participants with hearing impairment fitted with cochlear implants, (b) chronological-age matches with normal hearing, and (c) hearing-experience matches with normal hearing. Error bars show mean ± 1 SEM.

The vowel space area results demonstrated a significant effect of speech style, with ID (M = 80487 mels², SD = 36707 mels²) greater than AD (M = 57094 mels², SD = 28769 mels²) speech, F(1, 30) = 12.299, p = .001, ηp² = .291, but no significant main effect of hearing status or interaction between hearing status and speech style was found. The vowel space dispersion results demonstrated a significant effect of speech style, with ID (M = 337 mels, SD = 33 mels) greater than AD (M = 320 mels, SD = 32 mels) speech, F(1, 30) = 5.967, p = .021, ηp² = .166, but no significant main effect of hearing status or interaction between hearing status and speech style was found.
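The partial eta squared values reported above can be cross-checked from each F statistic and its degrees of freedom, because partial eta squared equals (F × df_effect) / (F × df_effect + df_error). The following sketch applies that identity to the two speech style effects; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Speech style effects reported above: F(1, 30) = 12.299 (area), 5.967 (dispersion).
area_effect = round(partial_eta_squared(12.299, 1, 30), 3)
dispersion_effect = round(partial_eta_squared(5.967, 1, 30), 3)
print(area_effect, dispersion_effect)
```

Both values reproduce the reported effect sizes (.291 and .166), which is a quick way to confirm that a reported F, its degrees of freedom, and the effect size are mutually consistent.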

Formant Frequencies

The means and standard deviations for F1 and F2 frequencies of each point vowel in each group are shown in Table 4. A 2 (speech style: AD, ID) × 3 (hearing status: HI-CI, NH-CAM, NH-HEM) mixed-measures ANOVA was conducted separately for each point vowel (/i/, /ɑ/, and /u/), with speech style as a within-subject factor and hearing status as a between-subjects factor.

Table 4.

Average (SD) first formant (F1) and second formant (F2) frequencies in mels for the point vowels /i/, /ɑ/, and /u/ in infant-directed (ID) and adult-directed (AD) speech to participants with hearing impairment fitted with cochlear implants (HI-CI, Study 1) or hearing aids (HI-HA, Study 2), their chronological-age matches with normal hearing (NH-CAM), and their hearing-experience matches with normal hearing (NH-HEM).

| Hearing status | Speech style | /i/ F1 | /i/ F2 | /ɑ/ F1 | /ɑ/ F2 | /u/ F1 | /u/ F2 |
|---|---|---|---|---|---|---|---|
| Study 1 | | | | | | | |
| HI-CI | AD | 475 (28) | 1866 (60) | 835 (73) | 1238 (126) | 526 (40) | 1531 (104) |
| | ID | 481 (36) | 1924 (51) | 842 (60) | 1300 (77) | 520 (50) | 1409 (146) |
| NH-CAM | AD | 495 (21) | 1852 (43) | 808 (122) | 1145 (195) | 499 (30) | 1475 (116) |
| | ID | 495 (25) | 1895 (46) | 825 (62) | 1211 (141) | 525 (30) | 1391 (150) |
| NH-HEM | AD | 502 (34) | 1805 (76) | 869 (53) | 1232 (45) | 508 (25) | 1510 (92) |
| | ID | 490 (33) | 1895 (36) | 839 (80) | 1291 (94) | 534 (54) | 1440 (163) |
| Study 2 | | | | | | | |
| HI-HA | AD | 515 (33) | 1853 (59) | 829 (64) | 1209 (80) | 522 (24) | 1523 (94) |
| | ID | 524 (46) | 1933 (77) | 814 (54) | 1272 (97) | 527 (45) | 1469 (93) |
| NH-CAM | AD | 485 (30) | 1842 (35) | 869 (28) | 1233 (61) | 498 (35) | 1526 (53) |
| | ID | 500 (32) | 1892 (29) | 877 (51) | 1295 (56) | 524 (50) | 1456 (106) |
| NH-HEM | AD | 484 (38) | 1853 (63) | 868 (46) | 1227 (36) | 505 (23) | 1488 (161) |
| | ID | 485 (24) | 1914 (52) | 863 (81) | 1309 (66) | 516 (40) | 1398 (197) |

The results for /i/ demonstrated a significant effect of speech style, with F2 higher in ID compared with AD speech, F(1, 42) = 60.087, p < .001, ηp2 = .589, indicating a more advanced or fronted tongue position in ID (M = 1905 mels, SD = 46 mels) relative to AD (M = 1841 mels, SD = 66 mels) speech. The results also demonstrated a significant effect of hearing status for F2, F(2, 42) = 3.681, p = .034, ηp2 = .149, but no interaction between hearing status and speech style was found. Posthoc Tukey tests revealed that the F2 frequency was higher in the HI-CI group (M = 1895 mels, SD = 597 mels) compared with the NH-HEM group (M = 1850 mels, SD = 584 mels), p = .026. These results suggest a more advanced or fronted tongue position across speech styles for the HI-CI group compared with the NH-HEM group. Because this difference is present for both ID and AD speech, it is not likely due to an effect of the child's hearing status and may reflect other variables, such as interindividual differences. No significant main effects or interaction were found for F1.

The results for /ɑ/ demonstrated a significant effect of speech style, with F2 higher in ID compared with AD speech, F(1, 54) = 11.165, p = .002, ηp2 = .171, indicating a more advanced or fronted tongue position in ID (M = 1267 mels, SD = 113 mels) relative to AD (M = 1205 mels, SD = 141 mels) speech. The results also demonstrated a significant effect of hearing status for F2, F(2, 54) = 4.744, p = .013, ηp2 = .149, but no interaction between hearing status and speech style was found for F2. Posthoc Tukey tests revealed that the F2 frequency was higher in the HI-CI group (M = 1269 mels, SD = 107 mels) than in the NH-CAM group (M = 1178 mels, SD = 171 mels), p = .020. These results suggest a more advanced or fronted tongue position across speech styles for the HI-CI group than for the NH-CAM group. Because this difference in F2 is present for both ID and AD speech, it is not likely due to an effect of the child's hearing status and may reflect other variables, such as interindividual differences. No significant main effects or interaction were found for F1.

The results for /u/ demonstrated a significant effect of speech style, with F2 lower in ID compared with AD speech, F(1, 39) = 16.728, p < .001, ηp2 = .300, suggesting a more retracted or backed tongue position in ID (M = 1413 mels, SD = 151 mels) relative to AD (M = 1506 mels, SD = 105 mels) speech. No main effect of hearing status or interaction between hearing status and speech style were found for F2. No significant main effects or interaction were found for F1.

Summary

Overall, Study 1 demonstrated a more expanded vowel space area and greater vowel space dispersion in ID compared with AD speech, which was reflected in the distribution of the individual vowels /i/, /ɑ/, and /u/ as a systematic shifting in F2 frequency between ID and AD speech across all three groups (HI-CI, NH-CAM, NH-HEM). These findings indicate that the /ɑ/ and /i/ vowels were produced with a more fronted or advanced tongue position and that the /u/ vowel was produced with a more backed or retracted tongue position in ID compared with AD speech. A relatively expanded vowel space area and greater dispersion are consistent with previous findings of greater vowel contrastiveness and clarity in ID than AD speech (e.g., Kuhl et al., 1997).

Study 2

Whereas Study 1 examined vowels in speech to children with profound hearing loss who were fitted with cochlear implants, Study 2 focused on vowels in speech to children with little to moderate hearing loss who had some residual hearing. Due to different amounts of hearing loss and the presence of residual hearing for children with hearing aids, it is possible that the results from Study 1 would not be generalizable to this population. In addition, children are typically fitted with hearing aids at an earlier age than a cochlear implant, which may affect characteristics of speech style to younger children with or without hearing impairment. Study 2 therefore investigated the vowel space characteristics associated with the point vowels /i/, /ɑ/, and /u/ between ID and AD speech directed to children with hearing impairment fitted with hearing aids and children with normal hearing matched on chronological age or amount of hearing experience.

Method

Participants

All mothers were native speakers of American English. Eleven dyads of mothers with normal hearing and children with hearing impairment fitted with hearing aids (HI-HA; five girls, six boys) were recruited from the same population as Study 1 at the DeVault Otologic Research Laboratory at Indiana University School of Medicine. The HI-HA group of participants was invited for two visits at approximately 3 and 6 months after hearing aid fitting. Table 1 shows the means, standard deviations, and ranges for the ages of the children with hearing impairment. Table 2 provides available information on communication method, deafness etiology, and the type of hearing aid device for each child in the HI-HA group.

Eleven dyads of mothers with normal hearing and their chronological age–matched children with normal hearing (NH-CAM; three females, eight males) were recruited from the local community (see Table 1 for details). The NH-CAM children had a chronological age that was matched to that of the HI-HA children at the time of each of the two visits. Eleven dyads of mothers with normal hearing and their hearing experience–matched children with normal hearing (NH-HEM; six females, five males) were recruited from the local community (see Table 1 for details). They were invited for two sessions that coincided with the HI-HA children's amount of hearing experience at the time of each visit. Thus, NH-HEM children were approximately 3 and 6 months of age at the two visits. Eight NH-HEM participants were also included as NH-HEMs in Study 1. Research approval and participant reimbursement were identical to those in Study 1.

Procedure

Recordings

The recording procedure was identical to that in Study 1. ID sessions averaged 4.9 min in length (SD = 0.7; range = 2.8–6.9) and AD sessions averaged 5.1 min in length (SD = 2.7; range = 1.3–13.4). In total, there were 130 recordings (ID condition: HI-HA group = 22 recordings, NH-CAM group = 22 recordings, NH-HEM group = 22 recordings; AD condition: HI-HA group = 22 recordings, NH-CAM group = 21 recordings, NH-HEM group = 21 recordings).

Token identification. The token identification procedure and inclusion criteria were identical to those in Study 1. Thirty participants (10 per group, HI-HA, NH-CAM, and NH-HEM) were included for /ɑ/ formant measures, 27 participants (nine per group) for /i/, and 27 participants (nine per group) for /u/. Eighteen participants (six per group) were included in measures of vowel space area and dispersion.

On average, 10 (SD = 5.8) tokens of each vowel (/i/, /ɑ/, and /u/) were analyzed in the ID speech condition and 18 (SD = 3.6) tokens of each vowel for each speaker were analyzed in the AD speech condition. A total of 1,631 tokens in the ID speech condition and 2,319 tokens in the AD speech condition were analyzed.

Acoustic analysis. Analysis procedures for measuring vowel space area, vowel space dispersion, and F1 and F2 frequencies were identical to those in Study 1. A total of 13% of selected tokens were excluded for various reasons (7% AD condition, 22% ID condition); of these, 59% were excluded for high F0 (26% AD condition, 74% ID condition).

Reliability. Interrater reliability analysis procedures were identical to those in Study 1. The average interrater percentage difference was 6.8% (SD = 6.2), indicating high reliability consistent with that of prior studies (e.g., Kuhl et al., 1997).

Results and Discussion

Vowel Space Area and Vowel Space Dispersion

We first examined whether an overall difference in vowel space area or vowel space dispersion existed. The means and standard deviations of the vowel space area and dispersion values for ID and AD speech in all three groups of participants are reported in Table 3, and Figure 2 shows vowel space triangles for each group. A 2 (speech style: AD, ID) × 3 (hearing status: HI-HA, NH-CAM, NH-HEM) mixed-measures ANOVA was conducted separately for vowel space area and vowel space dispersion, with speech style as a within-subject factor and hearing status as a between-subjects factor.

Figure 2.

Infant-directed (dashed line) and adult-directed (solid line) vowel space triangles that are based on average first formant (F1) and second formant (F2) values for speech to (a) participants with hearing impairment fitted with hearing aids, (b) chronological-age matches with normal hearing, and (c) hearing-experience matches with normal hearing. Error bars show mean ± 1 SEM.
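
To illustrate how a vowel space triangle translates into an area value, the sketch below applies the shoelace formula to the HI-HA group means from Table 4. It is a minimal illustration only: the function and variable names are assumptions, and an area computed from group-mean formants will not equal the mean of the per-speaker areas reported in Table 3.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (F1, F2) in mels


def triangle_area(corners: Dict[str, Point]) -> float:
    """Area of the /i/-/a/-/u/ triangle via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = corners["i"], corners["a"], corners["u"]
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


# Group-mean (F1, F2) values for the HI-HA group, copied from Table 4.
hi_ha_ad = {"i": (515, 1853), "a": (829, 1209), "u": (522, 1523)}
hi_ha_id = {"i": (524, 1933), "a": (814, 1272), "u": (527, 1469)}

area_ad = triangle_area(hi_ha_ad)
area_id = triangle_area(hi_ha_id)
print(area_ad, area_id)  # 49556.0 66288.5
```

In this illustration the ID triangle is larger than the AD triangle, in the same direction as the speech-style pattern examined in the analyses that follow.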

For vowel space area, the effect of speech style narrowly missed significance, F(1, 15) = 4.075, p = .062, ηp2 = .214, and no significant main effect of hearing status or interaction between hearing status and speech style was found. The vowel space dispersion results demonstrated a significant effect of speech style, F(1, 15) = 6.911, p = .019, ηp2 = .315, with ID (M = 337 mels, SD = 34 mels) greater than AD (M = 315 mels, SD = 23 mels) speech. Although no main effect of hearing status was found, there was a significant interaction between hearing status and speech style, F(2, 15) = 4.884, p = .023, ηp2 = .394. This interaction appears to be driven by the fact that the HI-HA group, but not the other two groups, showed significantly greater ID than AD dispersion in one-way ANOVAs examining the simple effects of speech style (HI-HA: F(1, 5) = 8.717, p = .032, ηp2 = .635; NH-CAM: F(1, 5) = 1.270, p = .203, ηp2 = .635; NH-HEM: F(1, 5) = 5.790, p = .061, ηp2 = .537).

Formant Frequencies

The means and standard deviations for F1 and F2 frequencies of each point vowel in each group are shown in Table 4. A 2 (speech style: AD, ID) × 3 (hearing status: HI-HA, NH-CAM, NH-HEM) mixed-measures ANOVA was conducted separately for each point vowel (/i/, /ɑ/, and /u/), with speech style as a within-subject factor and hearing status as a between-subjects factor.

The results for /i/ demonstrated a significant effect of speech style, with F2 higher in ID compared with AD speech, F(1, 24) = 35.216, p < .001, ηp2 = .595, indicating a more advanced or fronted tongue position in ID (M = 1913 mels, SD = 57 mels) relative to AD (M = 1849 mels, SD = 52 mels) speech. No significant main effect of hearing status or interaction between hearing status and speech style was found for F2. For F1, a significant effect of hearing status was found, F(2, 24) = 3.934, p = .033, ηp2 = .247, and a posthoc Tukey test demonstrated that F1 was higher in the HI-HA group (M = 520 mels, SD = 39 mels) compared with the NH-HEM group (M = 484 mels, SD = 31 mels), p = .033. No significant effect of speech style or interaction between hearing status and speech style was found for F1.

The results for /ɑ/ demonstrated a significant effect of speech style, with F2 higher in ID compared with AD speech, F(1, 27) = 24.002, p < .001, ηp2 = .471, indicating a more advanced or fronted tongue position in ID (M = 1292 mels, SD = 74 mels) relative to AD (M = 1223 mels, SD = 60 mels) speech. No significant main effect of hearing status or interaction between hearing status and speech style were found for F2. No significant main effects or interaction were found for F1.

The results for /u/ demonstrated a significant effect of speech style, with F2 lower in ID compared with AD speech, F(1, 24) = 10.898, p = .003, ηp2 = .312, suggesting a more retracted or backed tongue position in ID (M = 1441 mels, SD = 138 mels) relative to AD (M = 1512 mels, SD = 109 mels) speech. No significant main effect of hearing status or interaction between hearing status and speech style were found for F2. No significant main effects or interaction were found for F1.

Summary

Overall, Study 2 demonstrated greater vowel space dispersion in ID compared with AD speech, which was reflected in the distribution of the individual vowels /i/, /ɑ/, and /u/ as a systematic shifting in F2 frequency in all three groups (HI-HA, NH-CAM, NH-HEM). These findings indicate that the /ɑ/ and /i/ vowels were produced with a more fronted or advanced tongue position and that the /u/ vowel was produced with a more backed or retracted tongue position in ID compared with AD speech. These results also suggest that hearing status affected the production of acoustic characteristics of the vowel /i/. The lack of a significant effect of speech style (ID vs. AD, p = .062) on vowel space area may reflect a Type II error associated with relatively small sample size and/or substantial interindividual variability.

General Discussion

Previous studies have demonstrated a relationship between the degree of mothers' speech clarity measured by vowel space area expansion and enhanced speech-language skills in children with normal hearing (Kuhl et al., 1997; Liu et al., 2003). Given that phonetic characteristics of mothers' speech input are affected by a child's hearing status (Bergeson et al., 2006; Kondaurova & Bergeson, 2011; Kondaurova et al., 2013; C. Lam & Kitamura, 2010, 2012), we investigated the effects of hearing status on production of vowel formant frequencies to understand the nature of the spoken language input typically experienced by children with hearing impairment. Here, we examined the modification of acoustic characteristics of three point vowels (/i/, /ɑ/, and /u/) in spontaneous speech directed to children with hearing impairment and to children and adults with normal hearing. Across two studies, mothers of children with hearing impairment fitted with either a cochlear implant (Study 1) or a hearing aid (Study 2) were matched to mothers of children with normal hearing who had the same chronological age or the same amount of hearing experience as the children with hearing impairment.

The present studies demonstrated overall a more expanded vowel space area and dispersion in ID speech to children with and without hearing impairment compared with AD speech. The identified expansion of vowel space area and dispersion in ID speech to children with hearing impairment is a novel finding, as this is the first study to demonstrate that mothers produce more distinctive point vowels in speech to children with hearing impairment compared with AD speech. The present study also demonstrated generally larger vowel space dispersion in ID compared with AD speech. Vowel space dispersion reflects the overall expansion, or compaction, of the tokens from each talker and is positively correlated with both vowel space area and intelligibility (Bradlow et al., 1996). Our results using the vowel dispersion metric in spontaneous speech are consistent with the idea that mothers are producing vowels in a clearer manner when talking to children both with and without hearing impairment. Given prior links established with speech-language skills (Liu et al., 2003), developing an evidence base for how specific characteristics of speech-language input might contribute to—or prevent—language delays in pediatric populations with hearing impairment is the next step. Such investigations are especially needed given that (a) speech-language delays in these populations are common and (b) there are highly variable speech-language outcomes, particularly for children with hearing impairment using cochlear implants (Houston et al., 2003; Miyamoto et al., 1997; Niparko et al., 2010; Pisoni et al., 2008; Svirsky et al., 2000). This variability in outcomes may be explained by differences in the quality of maternal speech input to children with hearing impairment, a factor that is potentially amenable to intervention by trained speech-language pathologists.

Many researchers have investigated the benefits of speech produced with a clear speaking style. Smiljanic and Sladen (2013) found that children between the ages of 5 and 13 years both with and without hearing impairments who listened to speech in noise showed improved understanding when listening to clear speech (characterized by a slower speech rate, longer target vowels, and expanded vowel space) in comparison with a control speech context. Ferguson (2012) found that the intelligibility-enhancing properties of vowels in clear speech were beneficial for young adults with normal hearing as well as older adults with hearing impairments. J. Lam, Tjaden, and Wilding (2012) asked speakers to read sentences with different instructions for eliciting clear speech; they found that the greatest magnitude of change in vowel spectral measures resulted from the instruction to “overenunciate,” followed by the instruction to “talk to someone with a hearing loss” and then to “speak clearly.” Hazan and Baker (2011) investigated spontaneous speech modification and found that talkers asked to speak as if talking to someone with a hearing loss read sentences with more extreme changes in acoustic–phonetic characteristics compared with intelligibility-challenging conditions (i.e., vocoded speech and speech with babble noise added) but that speakers modulated their speech according to their interlocutors' needs even when not directly experiencing the challenging listening condition.

Recent research by C. Lam and Kitamura (2010, 2012) attempted to investigate how vowels may be modified to a population with hearing impairment. Although informative, neither of these studies can be confidently generalized to a broader population of children with hearing impairment. For example, C. Lam and Kitamura (2012) used a subject population with normal hearing combined with an experimental manipulation designed to simulate deafness and found a difference in the size of the mothers' vowel space area in speech directed to children with normal hearing depending on whether the child could hear his or her mother. Moreover, in a case study involving a single pair of twins, C. Lam and Kitamura (2010) showed that the vowel space area in speech directed to a child with hearing impairment was reduced compared with that in speech directed to his twin with normal hearing. The present research is the first to investigate speech to a relatively large sample of children with actual (rather than simulated) hearing impairment, including children with both hearing aids and cochlear implants, thereby significantly extending the generalizability of research findings in these populations.

The generally greater vowel space area and dispersion in ID compared with AD speech found in the current study can largely be accounted for by the shift in F2 frequencies across point vowels observed for ID compared with AD speech. The increases in F2 for /i/ and /ɑ/ indicate a more fronted tongue position in ID compared with AD speech, whereas the decrease in F2 for /u/ indicates a more backed tongue position in ID speech, consistent with prior research (Bernstein Ratner, 1984; Kondaurova et al., 2012; Kuhl et al., 1997). The point vowels in each study occurred in varied segmental contexts across ID and AD spontaneous speech; thus, these differences between ID and AD speech are unlikely to be accounted for by coarticulatory influences. Overall, the results provide some support for the hypothesis that the point vowels in ID speech are produced in a more phonologically contrastive manner with more distinctive articulatory positions compared with those in AD speech (Kuhl et al., 1997), which extends the tests of this hyperarticulation hypothesis to speech directed to children with hearing impairment. Because enhanced contrastiveness among vowels has been claimed to bootstrap the learning of sound categories by children (Kuhl et al., 1997; Liu et al., 2003), the findings of the current study suggest that mothers may be facilitating language acquisition for children with hearing impairment by producing an expanded acoustic vowel space area and/or increased vowel space dispersion in ID compared with AD speech.

The next question the current study investigated was whether the child's hearing status, amount of hearing experience, or chronological age affected the degree of expansion of vowel space area or dispersion in ID speech. The pattern of results was similar across both studies in that the vowel space area and dispersion were greater in ID compared with AD speech across groups differing in hearing status. Overall, there were few findings that vowel space characteristics differed as a function of hearing status, amount of hearing experience, or chronological age.

Previous research comparing prosodic characteristics (e.g., pitch and timing) of ID speech to children with hearing impairment using cochlear implants demonstrated that mothers adjusted these characteristics to the hearing experience rather than the chronological age of their children (Bergeson et al., 2006; Kondaurova & Bergeson, 2011; Kondaurova et al., 2013). By contrast, in the current study, overall there were few findings that mothers tailored vowel space characteristics to their child's hearing status or produced input that reflected the degree of hearing experience of a child. The present results extend prior studies examining prosodic characteristics in speech to children with hearing impairment and normal hearing (Bergeson et al., 2006; Kondaurova & Bergeson, 2011; Kondaurova et al., 2013) to the production of segmental characteristics of speech. Vowel space modification has been linked to enhanced speech sound discrimination and word recognition (Liu et al., 2003; Song et al., 2010). These findings, coupled with our results showing few differences in mothers' vowel input to their children as a function of hearing status, suggest potential benefits of therapeutic clinical interventions aimed at shaping the vowel space characteristics of mothers' speech to their children with hearing impairment. However, such a recommendation awaits direct evidence of a causal link between particular vowel space modifications and enhancements to speech and/or language processing or production because prior evidence of relationships between an expanded vowel space and speech discrimination measures has been correlational (e.g., Liu et al., 2003). Moreover, an increasing body of evidence suggests that the nature of phonetic changes that occur in ID speech is complex, with no preponderance of evidence that the changes would benefit learning of phonetic categories (Cristia & Seidl, 2014; Englund, 2005; McMurray, Kovack-Lesh, Goodwin, & McEchron, 2013; Sundberg & Lacerda, 1999).

The current study demonstrated that the distribution of both F1 and F2 frequencies in the acoustic vowel space of a mother depended on the hearing status of her child. Both groups with hearing impairment showed an increase in either F1 or F2 for /i/ and/or /ɑ/ relative to the groups with normal hearing, suggesting that mothers produced these vowels with a more fronted tongue position in their speech. However, these results did not translate to differences in vowel space area or dispersion. Instead, our study found that in speech to children with cochlear implants there was an increase in F2 frequencies for /i/ and /ɑ/ vowels relative to speech in groups matched on chronological age (/ɑ/) and hearing experience (/i/). In contrast, speech to children with hearing aids was characterized by an increase only in F1 frequency values for the /i/ vowel relative to the group matched on hearing experience. The group formant differences shown in this study may be due in part to interindividual differences of some mothers in the levels of our independent variables or to the use of spontaneous speech. Although spontaneous speech has high ecological validity because it constitutes the natural input mediating language acquisition, it is also uncontrolled in a great many variables, including differences in consonantal environment, position of target words in an utterance, length of utterances, and differences in speech rate, all of which can affect acoustic characteristics of vowels (Bernstein Ratner, 1986; Cristia & Seidl, 2014; Englund & Behne, 2005, 2006; Hillenbrand, Clark, & Nearey, 2001; Kondaurova et al., 2012; Kuhl et al., 1997; Stevens & House, 1963). It has been suggested that prosodic differences (e.g., slowed rate, prosodic position) between ID and AD speech may drive some differences in phonetic attributes, including vowel formants (McMurray et al., 2013), and the present study did not assess the impact of such prosodic differences. 
Therefore, it remains desirable for future research to tease apart these variables and their potential interaction with vowel characteristics of speech to children who differ in degree of hearing impairment and type of assistive device in order to understand how these factors affect language acquisition in populations with hearing impairment.

In summary, this study demonstrated that, overall, mothers modified their acoustic vowel space for the point vowels /i/, /ɑ/, and /u/ in spontaneous speech to children with hearing impairment who received cochlear implants or hearing aids in comparison with AD speech. Overall there were few findings reflecting differences in the nature of acoustic vowel space modifications in ID speech directed to children with hearing impairment relative to matched controls. Taken together, these results suggest that mothers of children with hearing loss who received cochlear implants or hearing aids produce a more contrastive vowel space for the point vowels /i/, /ɑ/, and /u/ in a manner consistent with the hyperarticulation hypothesis of ID speech (Burnham et al., 2002; Kuhl et al., 1997; Liu et al., 2009; Uther et al., 2007). Given that vowel space modification has been linked to enhanced speech sound discrimination and word recognition (Liu et al., 2003; Song et al., 2010), the present findings suggest potential benefits of therapeutic clinical interventions aimed at shaping the vowel space characteristics of mothers' speech to their children with hearing impairment.

Acknowledgments

This research was supported by National Institute on Deafness and Other Communication Disorders Research Grant R01 DC 008581 to T. R. Bergeson. We thank members of the Rhythm, Attention, and Perception Laboratory at Bowling Green State University, Ohio, and the Speech-Perception Production Lab at Michigan State University for helping in the analysis of the speech tokens. We also thank Shannon Aronjo, Erin Crask, Kabreea Dunn, Carrie Hansel, Heidi Neuburger, Brittnie Ostler, Crystal Spann, Julie Wescliff, Heather Winegard, and Neil Wright for their help in preparing materials and recording and analyzing mothers' speech.

Appendix

Vowel Space Dispersion Calculations

First, the centroid (C) of each speaker-condition vowel space triangle was calculated using the formula:

$$C = (F1_C, F2_C) = \left(\frac{F1_{\text{/i/}} + F1_{\text{/ɑ/}} + F1_{\text{/u/}}}{3},\ \frac{F2_{\text{/i/}} + F2_{\text{/ɑ/}} + F2_{\text{/u/}}}{3}\right)$$

where /i/, /ɑ/, and /u/ were the corners of each vowel space triangle and F1 and F2 were the x and y coordinates of each of the corners.

Next, the Euclidean distance (|d|) of each token from the centroid was calculated using the formula:

$$|d| = \sqrt{(F1_t - F1_C)^2 + (F2_t - F2_C)^2}$$

where F1C and F2C were the x and y coordinates, respectively, of the centroid and F1t and F2t were the first and second formant values, respectively, for the token in question.

Last, the vowel space dispersion (D) was calculated as the ratio of the sum of the Euclidean distances (|d|) of all tokens from the centroid of the triangle to the number of tokens (n), that is, the mean token-to-centroid distance, using the formula:

$$D = \frac{\sum |d|}{n}$$
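
The three steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' analysis script: the function names are hypothetical, and the corner and token values are made-up numbers chosen only so that the arithmetic is easy to follow.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (F1, F2) in mels


def centroid(corners: Dict[str, Point]) -> Point:
    """Mean F1 and mean F2 of the three triangle corners."""
    f1 = sum(p[0] for p in corners.values()) / len(corners)
    f2 = sum(p[1] for p in corners.values()) / len(corners)
    return (f1, f2)


def dispersion(tokens: List[Point], center: Point) -> float:
    """Mean Euclidean distance of the tokens from the centroid."""
    distances = [math.hypot(f1 - center[0], f2 - center[1]) for f1, f2 in tokens]
    return sum(distances) / len(distances)


# Hypothetical corner means and tokens for one speaker in one condition.
corners = {"i": (300.0, 2100.0), "a": (900.0, 1200.0), "u": (300.0, 900.0)}
tokens = [(500.0, 1500.0), (800.0, 1800.0)]

c = centroid(corners)        # (500.0, 1400.0)
d = dispersion(tokens, c)    # distances 100 and 500, so the mean is 300.0
print(c, d)
```

Because the measure averages token-to-centroid distances, it reflects how spread out a talker's individual vowel tokens are rather than only the positions of the three corner means.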

Footnotes

One mother contributed two speech samples in this study. One sample was from her interaction with her child who had a cochlear implant, and the second was from a separate recorded interaction with her child with normal hearing who was used as an NH-HEM to a different HI-CI participant.

Two mothers from the HI-CI group had AD recordings at only one session, whereas the rest of the participants had AD recordings at both sessions. All the participants had ID recordings at both sessions.

References

- Bergeson T. R., Miller R. J., & McCune K. (2006). Mothers' speech to hearing-impaired infants and children with cochlear implants. Infancy, 10(3), 221–240. doi:10.1207/s15327078in1003_2 [Google Scholar]

- Bernstein Ratner N. (1984). Patterns of vowel modification in mother-child speech. Journal of Child Language, 11, 557–578. doi:10.1017/S030500090000595X [PubMed] [Google Scholar]

- Bernstein Ratner N. (1986). Durational cues which mark clause boundaries in mother-child speech. Journal of Phonetics, 14, 303–309. [Google Scholar]

- Boersma P., & Weenink D. (2012). Praat: Doing phonetics by computer (Version 4.0.26) [Computer software and manual]. Retrieved from http://www.praat.org

- Bradlow A. R., Torretta G. M., & Pisoni D. B. (1996). Intelligibility of normal speech I: Global and fine-grained acoustic-phonetic talker characteristics. Speech Communication, 20, 255–272. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burnham D., Kitamura C., & Vollmer-Conna U. (2002, May 24). What's new, pussycat? On talking to babies and animals. Science, 296, 1435. [DOI] [PubMed] [Google Scholar]

- Cristia A., & Seidl A. (2014). The hyperarticulation hypothesis of infant-directed speech. Journal of Child Language, 41, 913–934. doi:10.1017/S0305000912000669 [DOI] [PubMed] [Google Scholar]

- Englund K. (2005). Voice onset time in infant directed speech over the first six months. First Language, 25, 220–234. doi:10.1177/0142723705050286 [Google Scholar]

- Englund K., & Behne D. M. (2005). Infant directed speech in natural interaction—Norwegian vowel quantity and quality. Journal of Psycholinguistic Research, 34, 259–280. [DOI] [PubMed] [Google Scholar]

- Englund K., & Behne D. M. (2006). Changes in infant directed speech in the first six months. Infant and Child Development, 15, 139–160. doi:10.1002/icd.445 [Google Scholar]

- Fant G. (1973). Speech sounds and features. Cambridge, MA: MIT Press. [Google Scholar]

- Ferguson S. H. (2012). Talker differences in clear and conversational speech: Vowel intelligibility for older adults with hearing loss. Journal of Speech, Language, and Hearing Research, 55, 779–790. doi:10.1044/1092-4388(2011/10-0342) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ferguson S. H., & Kewley-Port D. (2002). Vowel intelligibility in clear and conversational speech for normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America, 112, 259–271. [DOI] [PubMed] [Google Scholar]

- Fernald A. (1989). Intonation and communicative intent in mothers' speech to infants: Is the melody the message? Child Development, 60, 1497–1510. [PubMed] [Google Scholar]

- Fernald A. (2000). Speech to infants as hyperspeech: Knowledge-driven processes in early word recognition. Phonetica, 57, 242–254. [DOI] [PubMed] [Google Scholar]

- Hart B., & Risley T. R. (1995). Meaningful differences in the everyday experience of young American children. Baltimore, MD: Brookes. [Google Scholar]

- Hazan V., & Baker R. (2011). Acoustic-phonetic characteristics of speech produced with communicative intent to counter adverse listening conditions. The Journal of the Acoustical Society of America, 130, 2139–2152. doi:10.1121/1.3623753

- Hillenbrand J. M., Clark M. J., & Nearey T. M. (2001). Effects of consonantal environment on vowel formant patterns. The Journal of the Acoustical Society of America, 109, 748–763.

- Houston D. M., Ying E. A., Pisoni D. B., & Kirk K. I. (2003). Development of pre-word-learning skills in infants with cochlear implants. The Volta Review, 103, 303–326.

- Hurtado N., Marchman V. A., & Fernald A. (2008). Does input influence uptake? Links between maternal talk, processing speed and vocabulary size in Spanish-learning children. Developmental Science, 11, F31–F39. doi:10.1111/j.1467-7687.2008.00768.x

- Johnson K. (2004). Acoustic and auditory phonetics. Phonetica, 61, 56–58.

- Joint Committee on Infant Hearing. (2007). Year 2007 position statement: Principles and guidelines for early hearing detection and intervention. Retrieved from http://www.asha.org/policy

- Kaplan P. S., Bachorowski J.-A., Smoski M. J., & Hudenko W. J. (2002). Infants of depressed mothers, although competent learners, fail to learn in response to their own mothers' infant-directed speech. Psychological Science, 13, 268–271.

- Kaplan P. S., Bachorowski J.-A., & Zarlengo-Strouse P. (1999). Child-directed speech produced by mothers with symptoms of depression fails to promote associative learning in 4-month-old infants. Child Development, 70, 560–570.

- Kent R. D., & Read C. (1992). The acoustic analysis of speech. San Diego, CA: Singular.

- Koester L. (1995). Face-to-face interactions between hearing mothers and their deaf or hearing infants. Infant Behavior & Development, 18, 145–153.

- Kondaurova M. V., & Bergeson T. R. (2011). The effects of age and infant hearing status on maternal use of prosodic cues for clause boundaries in speech. Journal of Speech, Language, and Hearing Research, 54, 740–754.

- Kondaurova M. V., Bergeson T. R., & Dilley L. C. (2012). Effects of deafness on acoustic characteristics of American English tense/lax vowels in maternal speech to infants. The Journal of the Acoustical Society of America, 132, 1039–1049.

- Kondaurova M. V., Bergeson T. R., & Xu H. (2013). Age-related changes in prosodic features of maternal speech to prelingually deaf infants with cochlear implants. Infancy, 18(5), 1–24.

- Kuhl P. K., Andruski J. E., Chistovich I. A., Chistovich L. A., Kozhevnikova E. V., Ryskina V. L., … Lacerda F. (1997, August 1). Cross-language analysis of phonetic units in language addressed to infants. Science, 277, 684–686.

- Labov W., Ash S., & Boberg C. (2006). The atlas of North American English: Phonetics, phonology, sound change: A multimedia reference tool. Berlin, Germany: Walter de Gruyter.

- Lam C., & Kitamura C. (2010). Maternal interactions with a hearing and hearing-impaired twin: Similarities and differences in speech input, interaction quality, and word production. Journal of Speech, Language, and Hearing Research, 53, 543–555.

- Lam C., & Kitamura C. (2012). Mommy, speak clearly: Induced hearing loss shapes vowel hyperarticulation. Developmental Science, 15, 212–221.

- Lam J., Tjaden K., & Wilding G. (2012). Acoustics of clear speech: Effect of instruction. Journal of Speech, Language, and Hearing Research, 55, 1807–1821. doi:10.1044/1092-4388(2012/11-0154)

- Liu H.-M., Kuhl P. K., & Tsao F.-M. (2003). An association between mothers' speech clarity and infants' speech discrimination skills. Developmental Science, 6, F1–F10. doi:10.1111/1467-7687.00275

- Liu H.-M., Tsao F.-M., & Kuhl P. K. (2009). Age-related changes in acoustic modifications of Mandarin maternal speech to preverbal infants and five-year-old children: A longitudinal study. Journal of Child Language, 36, 909–922.

- Math Works. (2009). MATLAB [Computer software]. Natick, MA: Author.

- McKinley A. M., & Warren S. F. (2000). The effectiveness of cochlear implants for children with prelingual deafness. Journal of Early Intervention, 23, 252–263.

- McMurray B., Kovack-Lesh K. A., Goodwin D., & McEchron W. (2013). Infant directed speech and the development of speech perception: Enhancing development or an unintended consequence? Cognition, 129, 362–378.

- Miyamoto R. T., Svirsky M. A., & Robbins A. M. (1997). Enhancement of expressive language in prelingually deaf children with cochlear implants. Acta Otolaryngologica, 117, 154–157.

- Neel A. T. (2008). Vowel space characteristics and vowel identification accuracy. Journal of Speech, Language, and Hearing Research, 51, 574–585.

- Niparko J. K., Tobey E. A., Thal D. J., Eisenberg L. S., Wang N.-Y., Quittner A. L., & Fink N. E. (2010). Spoken language development in children following cochlear implantation. Journal of the American Medical Association, 303, 1498–1506.

- Papousek M., Papousek H., & Haekel M. (1987). Didactic adjustments in fathers' and mothers' speech to their 3-month-old infants. Journal of Psycholinguistic Research, 16, 491–516.

- Picheny M. A., Durlach N. I., & Braida L. D. (1986). Speaking clearly for the hard of hearing II: Acoustic characteristics of clear and conversational speech. Journal of Speech, Language, and Hearing Research, 29, 434–446.

- Pisoni D. B., Conway C. M., Kronenberger W. C., Horn D. L., Karpicke J., & Henning S. C. (2008). Efficacy and effectiveness of cochlear implants in deaf children. In Marschark M., & Hauser P. C. (Eds.), Deaf cognition: Foundations and outcomes (pp. 52–100). Oxford, United Kingdom: Oxford University Press.

- Pitt M., Dilley L. C., Johnson K., Kiesling S., Raymond W., Hume E., & Fosler-Lussier E. (2007). Buckeye Corpus of Conversational Speech (Final release) [Computer software]. Retrieved from http://www.buckeyecorpus.osu.edu

- Pressman L. J., Pipp-Siegel S., Yoshinaga-Itano C., Kubicek L., & Emde R. N. (1998). A comparison of the links between emotional availability and language gain in young children with and without hearing loss. The Volta Review, 100, 251–277.

- Schlesinger H. S., & Meadow K. P. (1972). Sound and sign: Child deafness and mental health. Berkeley, CA: University of California Press.

- Smiljanic R., & Sladen D. (2013). Acoustic and semantic enhancements for children with cochlear implants. Journal of Speech, Language, and Hearing Research, 56, 1085–1096. doi:10.1044/1092-4388(2012/12-0097)

- Soderstrom M. (2007). Beyond babytalk: Re-evaluating the nature and content of speech input to preverbal infants. Developmental Review, 27, 501–532.

- Song J. Y., Demuth K., & Morgan J. L. (2010). Effects of the acoustic properties of infant-directed speech on infant word recognition. The Journal of the Acoustical Society of America, 128, 389–400.

- Stern D. N., Spieker S. S., Barnett R. K., & MacKain K. (1983). The prosody of maternal speech: Infant age and context related changes. Journal of Child Language, 10, 1–15.

- Stevens K. N. (2000). Acoustic phonetics. Cambridge, MA: MIT Press.

- Stevens K. N., & House A. S. (1963). Perturbation of vowel articulations by consonantal context: An acoustical study. Journal of Speech, Language, and Hearing Research, 6, 111–128.

- Sundberg U., & Lacerda F. (1999). Voice onset time in speech to infants and adults. Phonetica, 56, 186–199. doi:10.1159/000028450

- Svirsky M. A., Robbins A. M., Kirk K. I., Pisoni D. B., & Miyamoto R. T. (2000). Language development in profoundly deaf children with cochlear implants. Psychological Science, 11, 153–158.

- Uther M., Knoll M. A., & Burnham D. (2007). Do you speak E-NG-L-I-SH? A comparison of foreigner- and infant-directed speech. Speech Communication, 49, 2–7.

- Vallabha G. K., & Tuller B. (2002). Systematic errors in the formant analysis of steady-state vowels. Speech Communication, 38, 141–160.

- Wedell-Monning J., & Lumley J. M. (1980). Child deafness and mother-child interaction. Child Development, 51, 766–774.