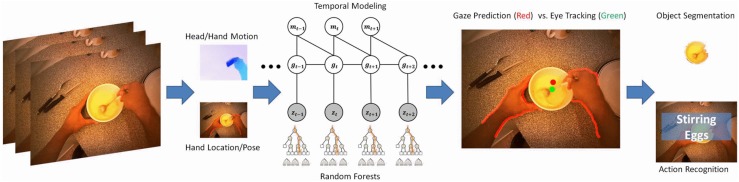

Figure 3.

Gaze prediction without reference to saliency or the activity model [32]. Egocentric features, namely head/hand motion and hand location/pose, are leveraged to predict gaze. The model accounts for eye-hand and eye-head coordination, combined with the temporal dynamics of gaze. Only egocentric videos are used, and performance is evaluated against ground truth acquired with an eye tracker (reprinted from [32]).