Abstract

Objective

Physicians typically respond to roughly half of the clinical decision support prompts they receive. This study was designed to test the hypothesis that selectively highlighting prompts in yellow would improve physicians’ responsiveness.

Study Design

We conducted a randomized controlled trial using the Child Health Improvement through Computer Automation clinical decision support system in four urban primary care pediatric clinics. Half of a set of electronic prompts of interest was highlighted in yellow when presented to physicians in two clinics. The other half of the prompts was highlighted when presented to physicians in the other two clinics. Analyses compared physician responsiveness to the two randomized sets of prompts: highlighted versus not highlighted. Additionally, several prompts deemed “high-priority” were highlighted during the entire study period in all clinics. Physician response rates to the high-priority highlighted prompts were compared to response rates for those prompts from the year before the study period, when they were not highlighted.

Results

Physicians did not respond to highlighted prompts at higher rates than to prompts that were not highlighted (62% and 61%, respectively; OR=1.056, p=0.259, ns). Similarly, physicians were no more likely to respond to high-priority prompts when they were highlighted than in the year before, when the prompts were not highlighted (59% and 59%, respectively; χ2=0.067, p=0.796, ns).

Conclusions

Highlighting reminder prompts did not increase physicians’ responsiveness. We provide possible explanations for why highlighting did not improve responsiveness and suggest alternative strategies for increasing physician responsiveness to prompts that merit evaluation.

Keywords: Clinical Decision Support, Alert Fatigue, Reminders, Prompts, Pediatric

INTRODUCTION

As clinical decision support systems (CDSS) in health care have advanced, research has increasingly focused on where, across a variety of health care settings, and how these systems are used. Some argue that CDSS are the optimal means of ensuring that evidence-based care guidelines are immediately available to clinicians at the point of care.1,2 Physicians in a variety of settings have expressed interest in, and a need for, decision support that helps them care for their patients better.2–5

The use of decision support prompts and reminders has been studied across a variety of health care settings and health care issues.2,6,7 Prompts can improve health care provider compliance with guidelines1,2,8–10 and, ultimately, improve health care quality and outcomes for patients.6,7,10,11 They have been found to improve delivery of anticipatory guidance in pediatric offices,9,12 reduce inappropriate antibiotic prescribing,11 and increase post-surgery antibiotic administration to reduce post-operative infections.1

However, other researchers have found that, although sentiments toward clinical decision support are typically positive,1,3 the rates at which clinicians adopt and rely on clinical decision support prompts and reminders vary and can be quite low.1,3,13 The earliest CDSS research, conducted by McDonald and colleagues in the 1970s, indicated that only half of the prompts in a clinical decision support system were responded to or acted on.14 More recently, we found that this response rate had remained steady approximately three decades later.15 The low likelihood of physician response is a substantial impediment to the effective use of clinical decision support systems.

Several challenges to the adoption and use of clinical decision support prompts have been identified, ranging from cost to electronic infrastructure to the setting itself.16 Another proposed reason is “alert fatigue.”17,18 Alert fatigue occurs when clinicians encounter a large number of alerts or the same alerts many times. As a result, they become desensitized to the information: they find it uninformative and either no longer notice it or choose to ignore it.17–20 What remains largely unknown, however, is how to successfully increase physician response rates to CDSS prompts.

This study was designed to test the hypothesis that highlighting prompts in a CDSS can increase rates of physician response.

MATERIALS AND METHODS

The Child Health Improvement through Computer Automation (CHICA) System

The CHICA system is a CDSS which was implemented in 2004 and has been used by a variety of pediatric health care providers continuously since its implementation.8,9,21 Currently, the CHICA system operates in four outpatient pediatric clinics in Indianapolis. CHICA has captured data from over 255,000 encounters with more than 37,500 unique patients.

Data are captured by CHICA through two means.9 The first source of data is the pre-screener form (PSF), a paper form with 20 yes/no questions that families complete on arrival at the clinic while awaiting their appointment. The items on an individual patient’s PSF are generated electronically by an algorithm that uses the child’s age and other demographic data, data captured at prior encounters, and data contained elsewhere in the child’s electronic medical record. Once completed, the form is scanned to capture the parents’ answers as coded data. Example questions include, “Does [child’s name] always wear a helmet when riding her bike or tricycle?” and “Do you feel safe in your home?”

The other CHICA data source is the physician worksheet (PWS). This paper form is completed by the physician during the encounter with the patient. It includes up to six prompts generated both from families’ responses on the PSF and from age-specific general care guidelines. Each PWS prompt alerts the physician to possible interventions and has up to six check boxes through which the physician can document assessments or actions taken in response to the reminder. Because the number of prompts appropriate for most encounters exceeds six, CHICA uses a prioritization scheme based on expected value to select the six highest-priority prompts to print on the PWS.22
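The final selection step can be illustrated with a short Python sketch. It assumes that each candidate prompt already carries a numeric expected-value score; the `CandidatePrompt` type and the `select_pws_prompts` function are hypothetical, and the sketch does not reproduce how CHICA actually computes expected value (reference 22). It shows only the step of keeping the six highest-scoring prompts for the worksheet.

```python
"""Sketch: keep the six highest-priority prompts for a Physician Worksheet.

Assumption: every candidate prompt already has a numeric expected-value
score. How CHICA computes that score is not reproduced here.
"""
import heapq
from dataclasses import dataclass

PWS_SLOTS = 6  # the PWS has room for at most six prompts


@dataclass(frozen=True)
class CandidatePrompt:
    name: str               # hypothetical prompt identifier
    expected_value: float   # hypothetical precomputed priority score


def select_pws_prompts(candidates, slots=PWS_SLOTS):
    """Return up to `slots` prompts with the highest expected value, best first."""
    return heapq.nlargest(slots, candidates, key=lambda p: p.expected_value)
```

`heapq.nlargest` avoids sorting the whole candidate list; for the handful of prompts relevant to a single encounter, `sorted(..., reverse=True)[:6]` would behave the same way.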

Prior research with the CHICA system has examined human and system errors23; successes of clinical interventions, such as a parental smoking cessation system24; clinical guideline evaluation8,21; chronic condition management25; developmental milestones and mental health outcomes26,27; and prioritization strategies of preventive care reminders.28

Design and Sample

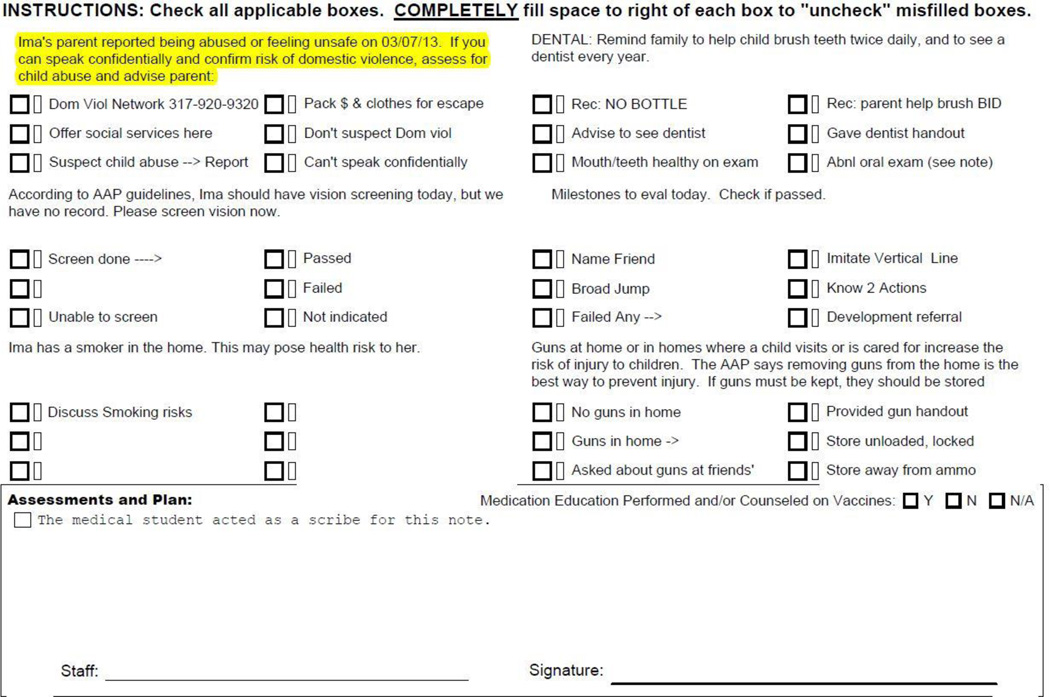

Hypothesizing that highlighting would increase physician responsiveness, we programmed CHICA to print certain prompts with yellow highlighting over the alert (Figure 1). To select prompts for study inclusion, we ordered the CHICA system’s PWS prompts by how frequently they were printed for physicians and how frequently physicians responded to them. The prompts were then matched in pairs with similar priority, frequency of printing, and response rates. The prompts we identified for randomization between clinics were also ones that we were comfortable randomizing to be either color highlighted or not. Additionally, the investigators identified several “high-priority” prompts as so critical that they were always highlighted during the intervention. Specifically, we judged it unethical to randomize these high-priority prompts to be highlighted or not highlighted, given our hypothesis that highlighting would increase physician response to prompts and our concern that highlighting could decrease responses to (less salient) prompts that were not highlighted. The trial ran from May 16, 2012, to August 14, 2012.

Figure 1.

Example of yellow highlighted prompt seen by physician on Physician Worksheet (PWS).

Seven PWS prompts of interest were included for randomization among clinics. The Supplemental Table lists the prompts, how each was triggered to appear on the PWS, and the age range of the child targeted by each prompt. Clinics were grouped into pairs matched on size (number of providers). Within each pair, one clinic was assigned by coin flip to receive one set of highlighted prompts, and the other clinic received the other set. This design is perfectly balanced because each clinic group served as the control for the other. Physicians did not see any prompts that were highlighted only some of the time; if a prompt was highlighted in a clinic, it was highlighted for the entire study duration at that clinic (and never highlighted at the other two control clinic sites). Figure 1 shows an example of a highlighted prompt on the PWS.
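The crossover assignment can be made explicit with a small Python sketch. The clinic and prompt-set names are hypothetical placeholders, and the sketch assumes the randomized prompts have already been divided into two sets (A and B); it illustrates the balance of the design and is not CHICA’s implementation.

```python
"""Sketch of the crossover highlighting assignment.

Assumptions (hypothetical names, not CHICA code): clinics are already grouped
into size-matched pairs, the randomized prompts are already split into sets
"A" and "B", and high-priority prompts are highlighted in every clinic.
"""
import random


def assign_prompt_sets(clinic_pairs, rng=None):
    """Flip a coin within each clinic pair to decide which clinic gets set A.

    Returns a dict mapping clinic -> "A" or "B"; the other clinic in the pair
    receives the opposite set.
    """
    rng = rng or random.Random()
    assignment = {}
    for first, second in clinic_pairs:
        if rng.random() < 0.5:  # coin flip
            assignment[first], assignment[second] = "A", "B"
        else:
            assignment[first], assignment[second] = "B", "A"
    return assignment


def is_highlighted(prompt, clinic, assignment, prompt_sets, high_priority):
    """True if the prompt is high-priority or belongs to the clinic's set.

    The assignment is fixed for the whole study, so a prompt is never
    highlighted only some of the time within a clinic.
    """
    if prompt in high_priority:
        return True
    return prompt in prompt_sets[assignment[clinic]]
```

Because each set is highlighted in exactly one clinic of each size-matched pair, every randomized prompt is observed both highlighted and not highlighted, which is what lets each clinic group act as the control for the other.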

Additionally, four “high-priority” PWS prompts were highlighted every time they appeared throughout the intervention period. These included one prompt pertaining to concerns of possible abuse of the patient, one about concerns of possible domestic violence in the patient’s household, and two dealing with adolescent depression and suicide. For analysis purposes, we compared physicians’ responses to these prompts when highlighted with their responses to the same prompts in the year before the study period.

Because the intervention was visual (color highlighting), study personnel were not blinded to the study design. However, data were extracted automatically by the CHICA system to avoid bias in interpretation.

Statistical Analyses

For the randomized controlled trial, we used chi-square (χ2) analysis and binary logistic regression. The regression was adjusted for patient sex, age, insurance status, and race, as well as the position of the prompt on the PWS, because our previous work has shown that these factors influence physicians’ response rates.29 We used a Bonferroni correction to set the threshold for statistical significance across the eight separate comparisons conducted for these analyses (0.05/8 = 0.00625).

To compare response rates to the high-priority prompts before and during the intervention period, we used chi-square (χ2) analysis to determine if responsiveness to a given prompt changed over time.
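The 2×2 chi-square comparison and the Bonferroni cutoff can be written out directly as a check on the arithmetic. This Python sketch assumes the uncorrected Pearson statistic with 1 degree of freedom (no Yates continuity correction); under that assumption it reproduces the χ2 values reported in Table 1 from the counts shown there. The function name is hypothetical, and the adjusted logistic regression is not shown.

```python
"""Pearson chi-square (1 df, no continuity correction) for a 2x2 table of
responded / not responded by highlighted / not highlighted.

A sketch only; it does not reproduce the adjusted logistic regression.
"""
import math

BONFERRONI_ALPHA = 0.05 / 8  # eight comparisons -> 0.00625


def chi_square_2x2(resp_a, n_a, resp_b, n_b):
    """Return (chi2, p) comparing response proportions resp_a/n_a and resp_b/n_b."""
    a, b = resp_a, n_a - resp_a  # group A: responded, not responded
    c, d = resp_b, n_b - resp_b  # group B: responded, not responded
    n = n_a + n_b
    denom = n_a * n_b * (a + c) * (b + d)
    if denom == 0:
        # e.g. a prompt never presented in one condition: the test is undefined
        raise ValueError("chi-square undefined when a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    # With 1 degree of freedom chi2 = Z**2, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p
```

For example, the overall randomized counts (672 of 1,076 highlighted versus 712 of 1,161 not highlighted) give χ2 ≈ 0.30 and p ≈ 0.58, while the burn injury prompt for children aged 6 months to 6 years (97 of 222 versus 313 of 473) gives χ2 ≈ 31.56, with p well below the Bonferroni cutoff.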

The dependent variable in all analyses was whether the physician responded to the prompt by checking any box signifying that they saw the prompt and did or did not take action.
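The outcome definition can be sketched in Python as follows. The record layout (`PrintedPrompt` and its fields) is hypothetical and is not CHICA’s data model; the sketch simply encodes the rule that any checked box, including one documenting that no action was taken, counts as a response.

```python
"""Sketch of the binary outcome: a printed prompt counts as "responded to"
if the physician checked any of its boxes, whatever the box says.

The record layout is hypothetical, not CHICA's data model.
"""
from dataclasses import dataclass, field


@dataclass
class PrintedPrompt:
    prompt_name: str
    highlighted: bool
    checked_boxes: list = field(default_factory=list)  # labels of checked boxes

    @property
    def responded(self) -> bool:
        # Any checked box counts, including one stating no action was taken.
        return len(self.checked_boxes) > 0


def response_counts(printed, highlighted):
    """Return (times responded, times presented) for one highlighting condition."""
    subset = [p for p in printed if p.highlighted == highlighted]
    return sum(p.responded for p in subset), len(subset)
```

Counts produced this way are the inputs to both the chi-square comparisons and the response rates reported in Table 1.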

The Indiana University Institutional Review Board approved this study.

RESULTS

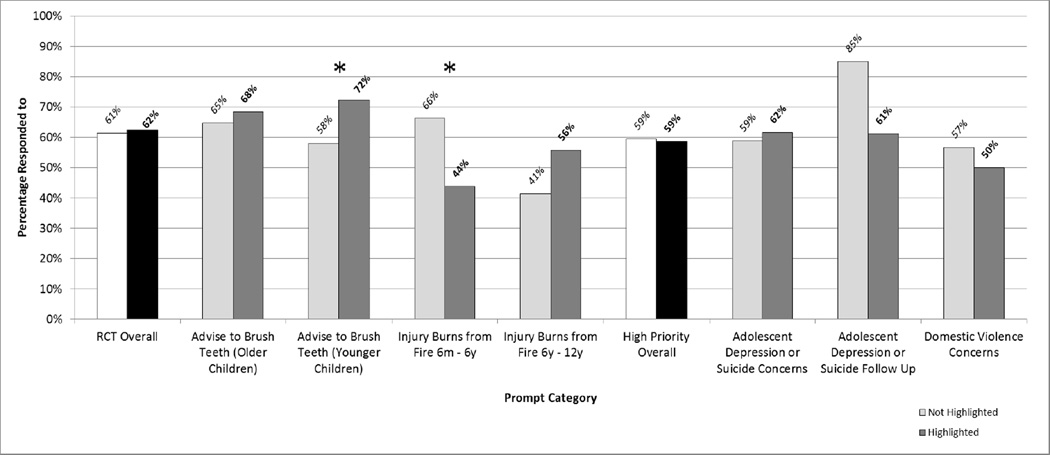

Overall, randomized prompts were printed 2,237 times during the study period. Physicians did not respond to highlighted prompts at significantly different rates than to prompts that were not highlighted (OR = 1.056, CI = 0.956–1.167, p = 0.259, ns; χ2 = 0.3, p = 0.58, ns). In six of the individual prompt comparisons, differences in response rates between the highlighted and non-highlighted conditions were not significant (p > 0.00625). The two significant differences ran in opposite directions. Physicians responded to the burn injury prompt for children aged 6 months to 6 years 44% of the time when it was highlighted versus 66% of the time when it was not; that is, response decreased when the prompt was highlighted (χ2 = 31.5609, p < 0.001). Conversely, physicians responded to the prompt concerning teeth brushing for younger children 72% of the time when it was highlighted versus 58% of the time when it was not (χ2 = 16.4218, p < 0.001). Table 1 presents how frequently each prompt was presented to physicians and how frequently physicians responded to it, by highlighting condition. Figure 2 depicts physicians’ response rates to the randomized prompts by highlighting condition (prompts presented to physicians fewer than 15 times during the study period are not depicted in Figure 2). A post-hoc power calculation30 based on the overall numbers of highlighted and non-highlighted prompts indicates that this study had 80% power to detect an overall absolute difference in response rate of about 5%, for both the randomized and the high-priority prompts included in this study.

Table 1.

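Counts of how frequently the randomized and high-priority prompts were presented to physicians and how frequently physicians responded to them, as a function of whether they were highlighted. For the high-priority prompts, “Not Highlighted” counts come from the year before the study period.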

| Prompt | Highlighted: Total # Times Presented | Highlighted: # Times Responded To by Physician | Highlighted: Response Rate (%) | Not Highlighted: Total # Times Presented | Not Highlighted: # Times Responded To by Physician | Not Highlighted: Response Rate (%) | χ2 | p-value |
|---|---|---|---|---|---|---|---|---|
| Overall (All Randomized Prompts) | 1,076 | 672 | 62 | 1,161 | 712 | 61 | 0.3 | 0.58 |
| Advise to Brush Teeth (Older Children) | 164 | 112 | 68 | 283 | 183 | 65 | 0.61 | 0.44 |
| Advise to Brush Teeth (Younger Children) | 487 | 352 | 72 | 289 | 168 | 58 | 16.42 | <0.001* |
| Alcohol High Risk | 3 | 0 | 0 | 3 | 1 | 33 | 1.2 | 0.27 |
| Alcohol Low Risk | 13 | 6 | 46 | 6 | 3 | 50 | 0.02 | 0.88 |
| Drugs High Risk | 4 | 3 | 75 | 2 | 0 | 0 | 3 | 0.08 |
| Drugs Low Risk | 0 | 0 | n/a | 1 | 1 | 100 | n/a | n/a |
| Injury Burns from Fire 6m - 6y | 222 | 97 | 44 | 473 | 313 | 66 | 31.56 | <0.001* |
| Injury Burns from Fire 6y - 12y | 183 | 102 | 56 | 104 | 43 | 41 | 5.49 | 0.02 |
| Overall (All High-Priority Prompts) | 292 | 171 | 59 | 661 | 393 | 59 | 0.07 | 0.8 |
| Household Abuse Concerns | 9 | 8 | 89 | 42 | 29 | 69 | 1.47 | 0.23 |
| Adolescent Depression or Suicide Concerns | 169 | 104 | 62 | 352 | 207 | 59 | 0.35 | 0.55 |
| Adolescent Depression or Suicide Follow Up | 18 | 11 | 61 | 20 | 17 | 85 | 2.79 | 0.1 |
| Domestic Violence Concerns | 96 | 48 | 50 | 247 | 140 | 57 | 1.25 | 0.26 |

*Indicates statistical significance after Bonferroni correction (0.05/8 = 0.00625).

Figure 2.

Proportions of physicians’ responses to prompts as a function of whether they were color highlighted. (Data from prompts presented to physicians fewer than 15 times in total during the study period have been omitted from this graph.)

*Indicates statistical significance after Bonferroni correction (0.05/8 = 0.00625).

Similar to the RCT, analyses of the high-priority prompts also revealed that, overall, they were not more likely to be answered when highlighted than in the year prior when they were not (χ2 = 0.067, p = 0.796, ns). None of the four prompts selected for study inclusion produced response differences that attained statistical significance (p > .05). Table 1 and Figure 2 also present these high-priority prompt data.

DISCUSSION

Overall, highlighting prompts did not increase physicians’ responsiveness to them. This lack of effect held both for our RCT between clinics and for our before-after analysis of high-priority reminder prompts. The study had 80% power to detect differences in responsiveness of approximately 5%. These findings suggest that highlighting is not an effective strategy to increase the rates with which physicians attend to reminder prompts.

We offer several possible explanations for why highlighting did not affect physicians’ responses to reminder prompts. First, highlighting may simply not be a strong enough indicator to make a prompt more perceptually salient; in the context of alert fatigue, it might not be a strong enough cue to overcome that fatigue. Other explanations for physicians’ lack of responsiveness to prompts include that physicians disagree with the content of the reminder, need to address more pressing issues with the patient, or think the data on which the reminder is based are incorrect. Signal detection theory speaks to this phenomenon.31,32 It concerns the capacity to discriminate between environmental input that does (a stimulus or signal) or does not (noise) provide useful information or require a response.31 The lack of efficacy of highlighting in this study could therefore be conceptualized as a failure to make the prompts salient enough to reach a perceptual threshold at which physicians recognize them as a signal among the “noise” present in a clinical encounter. When a signal is present (in this study, a prompt) but no response occurs, signal detection theorists call the outcome a “miss.” Highlighting prompts in this study did not convert these misses into “hits.”

Another possible explanation, tied to principles of operant conditioning33 and human motivation,34 is that when no outcomes, intrinsic or extrinsic, are tied to one’s actions or failures to act, individuals may be unmotivated to act. In the case of reminder prompts, physicians may notice the prompts (i.e., the prompts are perceptually salient). However, if physicians lack both intrinsic motivation and extrinsic motivation, such as avoiding consequences or attaining incentives, it may be difficult to change their behavior so that they respond to prompts.

There are some limitations to the study that warrant consideration. The clinic sites are concentrated in an urban pediatric outpatient setting, so caution is warranted in generalizing to other settings; however, we have no reason to suspect that color highlighting prompts would be more effective in other settings. This study was also conducted using paper-based prompts; it is possible that highlighting on a computer screen might make a difference, although we have no evidence that this would be the case. Further, we did not ask physicians why they did or did not respond to prompts (highlighted or not). Identifying physicians’ reasons for responding or not responding would be a useful next step. Factors affecting responsiveness could include physicians’ perceived level of knowledge or training about a given issue, the perceived “actionability” of the prompt, and/or their belief in the effectiveness of what they might say to a family about the issue. Additionally, we did not control for provider demographic information in our analyses. Because our design was perfectly balanced, with each clinic pair serving as the other pair’s control, demographic differences should be irrelevant unless the interaction between each prompt and each physician happened to be exactly equal in magnitude and opposite in direction to the hypothesized effect of color highlighting. Lastly, although this study was sufficiently powered overall, one could argue that it was potentially underpowered with respect to specific prompts. Power to detect a change for each specific prompt is lower both because each prompt was printed fewer times and because of the Bonferroni correction for multiple comparisons.

Although highlighting the prompts did not increase physicians’ responsiveness to them in this study, our findings suggest ideas for future studies aimed at improving responsiveness. We recommend tying some sort of outcome to failure to respond to prompts. For example, a “hard stop” in an electronic CDSS could prevent the physician from advancing in the electronic system without clicking a checkbox to signify that she at least saw the prompt.35 Alternatively, if the physician attempts to advance in the electronic system without clicking to acknowledge the prompt, the system could present a pop-up box asking the physician to confirm the decision to ignore the prompt. In a paper-based system like CHICA, physicians could be given regular feedback on how many and what types of prompts they ignored. There could also be asynchronous feedback structures in which physicians receive daily summaries of patients for whom they ignored reminder prompts, giving them an opportunity to revisit those decisions after they have finished seeing patients for the day, perhaps during a less hectic time.
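The asynchronous feedback idea could be prototyped with a short Python sketch. Everything here is hypothetical: `PromptRecord`, its fields, and `daily_ignored_summary` stand in for whatever encounter log a CDSS keeps. The sketch groups prompts that were printed but not responded to on a given day by physician, so that each physician could receive an end-of-day list.

```python
"""Sketch of an end-of-day feedback report of ignored prompts.

Assumption: `PromptRecord` is a hypothetical stand-in for a CDSS encounter
log; field names are illustrative only.
"""
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PromptRecord:
    physician: str
    patient_id: str
    prompt_name: str
    visit_date: date
    responded: bool


def daily_ignored_summary(records, day):
    """Map physician -> sorted list of (patient_id, prompt_name) ignored on `day`."""
    summary = defaultdict(list)
    for r in records:
        if r.visit_date == day and not r.responded:
            summary[r.physician].append((r.patient_id, r.prompt_name))
    # Physicians with no ignored prompts that day do not appear in the report.
    return {doc: sorted(items) for doc, items in summary.items()}
```

Sorting by patient identifier is an arbitrary choice here; a real report might instead order items by prompt priority or visit time.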

CONCLUSION

We hypothesized that color highlighting reminder prompts would improve physician responsiveness to them, but this hypothesis was not supported. We encourage investigators to evaluate other strategies to increase physicians’ response rates to reminder prompts.

Supplementary Material

What’s New.

This study tested the hypothesis that selectively color highlighting prompts in yellow would improve physicians’ responsiveness. Color highlighting reminder prompts does not appear to be an effective strategy to increase physicians’ responsiveness to clinical prompts.

Acknowledgments

Funding Acknowledgements: No external funding was secured for this study. The Child Health Improvement through Computer Automation (CHICA) clinical decision support system, from which data for this study were extracted, receives support from the following HHS grants: R01DK092717, R01HS017939, R01HS018453, R01HS020640.

We thank Htaw Htoo, Tammy Dugan, and Ashley Street for their assistance with data extraction and management for this study. We thank Elaine Cuevas for her project and IRB documentation management. We thank Katie Schwartz for her assistance with manuscript editing. We also thank other associates of the CHICA system and members of Child Health Improvement Research and Development Laboratory (CHIRDL).

Abbreviations

- CDSS: Clinical Decision Support Systems
- CHICA: Child Health Improvement through Computer Automation

Footnotes

Financial Disclosures: The authors have no financial relationships relevant to this article to disclose.

Conflicts of Interest: The authors have no conflicts of interest to disclose.

Contributors’ Statement Page

Kristin S. Hendrix: Dr. Hendrix assisted with conceptualization and design and with analysis and interpretation of results, drafted and revised the manuscript, and approved the final manuscript as submitted.

Stephen M. Downs: Dr. Downs assisted with conceptualization and design, reviewed and revised the manuscript, and approved the final manuscript as submitted. Dr. Downs also conceived of, designed, and oversees the Child Health Improvement through Computer Automation (CHICA) clinical decision support system.

Aaron E. Carroll: Dr. Carroll assisted with conceptualization and design, reviewed and revised the manuscript, and approved the final manuscript as submitted. Dr. Carroll also helped to design and oversees the Child Health Improvement through Computer Automation (CHICA) clinical decision support system.

References

- 1. Schwann NM, Bretz KA, Eid S, et al. Point-of-care electronic prompts: an effective means of increasing compliance, demonstrating quality, and improving outcome. Anesthesia and analgesia. 2011 Oct;113(4):869–876. doi: 10.1213/ANE.0b013e318227b511.

- 2. Tierney WM, Overhage JM, Murray MD, et al. Can computer-generated evidence-based care suggestions enhance evidence-based management of asthma and chronic obstructive pulmonary disease? A randomized, controlled trial. Health services research. 2005 Apr;40(2):477–497. doi: 10.1111/j.1475-6773.2005.00368.x.

- 3. Lindenauer PK, Ling D, Pekow PS, et al. Physician characteristics, attitudes, and use of computerized order entry. Journal of hospital medicine : an official publication of the Society of Hospital Medicine. 2006 Jul;1(4):221–230. doi: 10.1002/jhm.106.

- 4. Overhage JM, Tierney WM, McDonald CJ. Computer reminders to implement preventive care guidelines for hospitalized patients. Archives of internal medicine. 1996 Jul 22;156(14):1551–1556.

- 5. Rosenbloom ST, Talbert D, Aronsky D. Clinicians' perceptions of clinical decision support integrated into computerized provider order entry. International journal of medical informatics. 2004 Jun 15;73(5):433–441. doi: 10.1016/j.ijmedinf.2004.04.001.

- 6. Pearson SA, Moxey A, Robertson J, et al. Do computerised clinical decision support systems for prescribing change practice? A systematic review of the literature (1990-2007). BMC health services research. 2009;9:154. doi: 10.1186/1472-6963-9-154.

- 7. Wolfstadt JI, Gurwitz JH, Field TS, et al. The effect of computerized physician order entry with clinical decision support on the rates of adverse drug events: a systematic review. Journal of general internal medicine. 2008 Apr;23(4):451–458. doi: 10.1007/s11606-008-0504-5.

- 8. Anand V, Carroll AE, Downs SM. Automated primary care screening in pediatric waiting rooms. Pediatrics. 2012 May;129(5):e1275–e1281. doi: 10.1542/peds.2011-2875.

- 9. Anand V, Biondich PG, Liu G, Rosenman M, Downs SM. Child Health Improvement through Computer Automation: the CHICA system. Studies in health technology and informatics. 2004;107(Pt 1):187–191.

- 10. Tierney WM, Overhage JM, Takesue BY, et al. Computerizing guidelines to improve care and patient outcomes: the example of heart failure. Journal of the American Medical Informatics Association : JAMIA. 1995 Sep-Oct;2(5):316–322. doi: 10.1136/jamia.1995.96073834.

- 11. McGregor JC, Weekes E, Forrest GN, et al. Impact of a computerized clinical decision support system on reducing inappropriate antimicrobial use: a randomized controlled trial. Journal of the American Medical Informatics Association : JAMIA. 2006 Jul-Aug;13(4):378–384. doi: 10.1197/jamia.M2049.

- 12. Adams WG, Mann AM, Bauchner H. Use of an electronic medical record improves the quality of urban pediatric primary care. Pediatrics. 2003 Mar;111(3):626–632. doi: 10.1542/peds.111.3.626.

- 13. Ip IK, Schneider LI, Hanson R, et al. Adoption and meaningful use of computerized physician order entry with an integrated clinical decision support system for radiology: ten-year analysis in an urban teaching hospital. Journal of the American College of Radiology : JACR. 2012 Feb;9(2):129–136. doi: 10.1016/j.jacr.2011.10.010.

- 14. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. The New England journal of medicine. 1976 Dec 9;295(24):1351–1355. doi: 10.1056/NEJM197612092952405.

- 15. Downs SM, Anand V, Dugan TM, Carroll AE. You can lead a horse to water: physicians' responses to clinical reminders. AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium. 2010;2010:167–171.

- 16. McDonald CJ. The barriers to electronic medical record systems and how to overcome them. Journal of the American Medical Informatics Association : JAMIA. 1997 May-Jun;4(3):213–221. doi: 10.1136/jamia.1997.0040213.

- 17. Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems; AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium; 2007. pp. 26–30.

- 18. Kesselheim AS, Cresswell K, Phansalkar S, Bates DW, Sheikh A. Clinical decision support systems could be modified to reduce 'alert fatigue' while still minimizing the risk of litigation. Health Aff (Millwood) 2011 Dec;30(12):2310–2317. doi: 10.1377/hlthaff.2010.1111.

- 19. Cash JJ. Alert fatigue. American journal of health-system pharmacy : AJHP : official journal of the American Society of Health-System Pharmacists. 2009 Dec 1;66(23):2098–2101. doi: 10.2146/ajhp090181.

- 20. Lee EK, Mejia AF, Senior T, Jose J. Improving Patient Safety through Medical Alert Management: An Automated Decision Tool to Reduce Alert Fatigue. AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium. 2010;2010:417–421.

- 21. Carroll AE, Biondich PG, Anand V, et al. Targeted screening for pediatric conditions with the CHICA system. Journal of the American Medical Informatics Association : JAMIA. 2011 Jul-Aug;18(4):485–490. doi: 10.1136/amiajnl-2011-000088.

- 22. Downs SM, Uner H. Expected value prioritization of prompts and reminders; Proceedings / AMIA … Annual Symposium. AMIA Symposium; 2002. pp. 215–219.

- 23. Downs SM, Carroll AE, Anand V, Biondich PG. Human and system errors, using adaptive turnaround documents to capture data in a busy practice; AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium; 2005. pp. 211–215.

- 24. Downs SM, Zhu V, Anand V, Biondich PG, Carroll AE. The CHICA smoking cessation system; AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium; 2008. pp. 166–170.

- 25. Carroll AE, Anand V, Dugan TM, Sheley ME, Xu SZ, Downs SM. Increased Physician Diagnosis of Asthma with the Child Health Improvement through Computer Automation Decision Support System. Pediatric Allergy, Immunology, and Pulmonology. 2012 Sep;25(3):168–171.

- 26. Bauer NS, Gilbert AL, Carroll AE, Downs SM. Associations of early exposure to intimate partner violence and parental depression with subsequent mental health outcomes. JAMA pediatrics. 2013 Apr;167(4):341–347. doi: 10.1001/jamapediatrics.2013.780.

- 27. Bennett WE, Jr, Hendrix KS, Thompson-Fleming RT, Downs SM, Carroll AE. Early cow's milk introduction is associated with failed personal-social milestones after 1 year of age. European journal of pediatrics. 2014 Jan 24; doi: 10.1007/s00431-014-2265-y.

- 28. Biondich PG, Downs SM, Anand V, Carroll AE. Automating the recognition and prioritization of needed preventive services: early results from the CHICA system; AMIA … Annual Symposium proceedings / AMIA Symposium. AMIA Symposium; 2005. pp. 51–55.

- 29. Carroll AE, Anand V, Downs SM. Understanding why clinicians answer or ignore clinical decision support prompts. Applied Clinical Informatics. 2012;3(3):309–317. doi: 10.4338/ACI-2012-04-RA-0013.

- 30. Browner W, Newman T, Hulley S. Estimating Sample Size and Power: Applications and Examples. In: Hulley S, Cummings S, Browner W, Grady D, Newman T, editors. Designing Clinical Research. Third ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2007.

- 31. Birdsall TG. The theory of signal detectability. In: Quastler H, editor. Information theory in psychology: problems and methods. New York, NY US: Free Press; 1956. pp. 391–402.

- 32. Smithburger PL, Buckley MS, Bejian S, Burenheide K, Kane-Gill SL. A critical evaluation of clinical decision support for the detection of drug-drug interactions. Expert opinion on drug safety. 2011 Nov;10(6):871–882. doi: 10.1517/14740338.2011.583916.

- 33. Skinner BF. The behavior of organisms: an experimental analysis. Oxford England: Appleton-Century; 1938.

- 34. Sansone C, Harackiewicz JM. Intrinsic and extrinsic motivation: The search for optimal motivation and performance. San Diego, CA US: Academic Press; 2000.

- 35. Gross PA, Bates DW. A pragmatic approach to implementing best practices for clinical decision support systems in computerized provider order entry systems. Journal of the American Medical Informatics Association : JAMIA. 2007 Jan-Feb;14(1):25–28. doi: 10.1197/jamia.M2173.
