Abstract

Resurgence following removal of alternative reinforcement has been studied in non-human animals, children with developmental disabilities, and typically functioning adults. Adult human laboratory studies have included responses without a controlled history of reinforcement, included only two response options, or involved extensive training. Arbitrary responses allow for control over history of reinforcement. Including an inactive response never associated with reinforcement allows the conclusion that resurgence exceeds extinction-induced variability. Although procedures with extensive training produce reliable resurgence, a brief procedure with the same experimental control would allow more efficient examination of resurgence in adult humans. We tested the acceptability of a brief, single-session, three-alternative forced-choice procedure as a model of resurgence in undergraduates. Selecting a shape was the target response (reinforced in Phase I), selecting another shape was the alternative response (reinforced in Phase II), and selecting a third shape was never reinforced. Despite manipulating number of trials and probability of reinforcement, resurgence of the target response did not consistently exceed increases in the inactive response. Our findings reiterate the importance of an inactive control response and call for reexamination of resurgence studies using only two response options. We discuss potential approaches to generate an acceptable, brief human laboratory resurgence procedure.

Keywords: operant conditioning, forced-choice, human, resurgence, relapse, mouse click

1. Introduction

Although behavioral treatments using alternative reinforcement can be effective when the interventions are in place, problem behavior can relapse when alternative reinforcement is removed or reduced post-treatment (e.g., Dobson et al., 2008; Higgins et al., 2007; Volkert, Lerman, Call, & Trosclair-Lasserre, 2009). In operant conditioning, relapse following the removal of alternative reinforcement is called resurgence (Epstein & Skinner, 1980). Often, the resurgence phenomenon is studied in laboratory animal models using pigeons (e.g., Leitenberg, Rawson, & Mulick, 1975, Experiment 3; Lieving & Lattal, 2003; Podlesnik & Shahan, 2009, Experiment 2; Sweeney & Shahan, 2013a) or rats (e.g., Leitenberg, Rawson, & Bath, 1970; Leitenberg et al., 1975, Experiments 1, 2, & 4; Winterbauer & Bouton, 2010; Winterbauer, Lucke, & Bouton, 2013; Sweeney & Shahan, 2013b; Sweeney & Shahan, 2015). A laboratory model of resurgence generally consists of three experimental phases. Phase I involves the training of an operant target response. For example, the target response for a rat may be to press a particular lever in an operant chamber to receive a food pellet delivery. In Phase II, which simulates alternative reinforcement treatment, extinction is in place for the target response and alternative reinforcement is introduced. For a rat, presses to the target lever would not result in the delivery of a food pellet, but a new response such as pulling a chain provides an alternative source of reinforcement. Phase III of the laboratory model is a probe for the effect of alternative reinforcement removal on the target response. During Phase III, alternative reinforcement is removed and extinction of the target response remains in place such that no response will produce reinforcement. It is during Phase III that resurgence of the suppressed target response can occur.

The generality of laboratory animal research findings has been supported by research examining resurgence in children with intellectual or developmental disabilities (e.g., Volkert et al., 2009; Wacker et al., 2011; Wacker et al., 2013), and in typically functioning adult participants (e.g., Dixon & Hayes, 1998; Doughty, Cash, Finch, Holloway, & Wallington, 2010; Doughty, Kastner, & Bismark, 2011; Bruzek, Thompson, & Peters, 2009; Mechner, Hyten, Field, & Madden, 1997; Wilson & Hayes, 1996). For example, research with laboratory animals has shown increased time in extinction plus alternative reinforcement may decrease subsequent resurgence (Leitenberg et al., 1975, Experiment 4; Sweeney & Shahan, 2013a; but see Winterbauer et al., 2013). Similarly, researchers examining the resurgence of problem behavior following alternative reinforcement treatment in children with intellectual disabilities found an overall decrease in resurgence as a function of increased exposure to extinction plus alternative reinforcement treatment (Wacker et al., 2011). Another commonality is that increased length of baseline reinforcement is related to more robust resurgence in both animal research (Winterbauer et al., 2013) and in laboratory research with typically functioning adult humans (Bruzek et al., 2009; Doughty et al., 2011). The general agreement of animal and human resurgence findings is promising for future translational research.

On the other hand, current laboratory models of resurgence within typically functioning adult populations may limit the ability to isolate and manipulate predictors of persistence and resurgence. For example, Bruzek et al. (2009) conducted a study examining the resurgence of infant caregiving responses. Caregiving responses to a baby doll were negatively reinforced through the cessation of a pre-recorded infant cry. This protocol is face valid, and resurgence in these scenarios may have important practical implications. However, the use of non-arbitrary responses makes it difficult to control the history of reinforcement for the target response. This is apparent in that one participant showed resurgence of the target caregiving response, but also showed a comparable increase in a response that was never explicitly reinforced in the study. The authors point out that this response may have had a history of reinforcement outside of the laboratory. Using such naturalistic responses may make it difficult to experimentally control history of reinforcement.

Some laboratory studies with adult humans have used arbitrary responses to control the history of reinforcement, but have had only two measurable behaviors (i.e., Dixon & Hayes, 1998; McHugh, Procter, Herzog, Schock, & Reed, 2012). The presence of only two measurable behaviors makes it difficult to establish that resurgence occurring in these preparations is a result of the history of reinforcement for the target behavior above and beyond extinction-induced variability (e.g., Antonitis, 1951; Morgan & Lee, 1996; Neuringer, Kornell, & Olufs, 2001). In animal models of resurgence, resurgence is distinguished from a simple increase in response variability by the inclusion of an inactive response. For example, in order to be considered resurgence, target response rates when alternative reinforcement is removed ought to exceed rates on a lever that has never been associated with food (e.g., Podlesnik, Jimenez-Gomez, & Shahan, 2006; Sweeney & Shahan, 2013a; Sweeney & Shahan, 2013b; Sweeney & Shahan, 2015). Therefore, an ideal resurgence task must include at least one response that has no history of reinforcement to control for random increases in responding not associated with reinforcement history.

Other research examining resurgence in adult humans has controlled for history of reinforcement and controlled for extinction-induced variability, but has required lengthy or repeated sessions (e.g., Doughty, Cash, Finch, Holloway, & Wallington, 2010; Doughty, Kastner, & Bismark, 2011; Mechner, Hyten, Field, & Madden, 1997; Wilson & Hayes, 1996). This is a function of examining resurgence of conditional discriminations, derived stimulus relations, or revealed operant behavior, which require more extensive accuracy training on the prerequisite discriminations than the relatively simpler discriminations described above (Dixon & Hayes, 1998; McHugh et al., 2012). Determining subject characteristics that predict response to alternative reinforcement treatments may necessitate larger sample sizes. The time-consuming nature of studies with extensive training does not necessarily preclude larger sample sizes, but a brief procedure that similarly controls for history of reinforcement and extinction-induced variability may be more time- and cost-effective.

A novel resurgence procedure should also attempt to constrain variability that has made between-subject comparisons difficult in prior human operant resurgence research. One way to do this would be to have a trial-based procedure that forces a choice between three arbitrary stimuli. Past studies examining resurgence of conditional discriminations or derived stimulus relations used discrete-trial matching to sample procedures and observed resurgence (Wilson & Hayes, 1996; Doughty et al., 2010; Doughty et al., 2011), but the matching to sample aspect of the trials requiring lengthy training may not be necessary. Rather than free-operant response rate across session or matching to sample performance, proportion preference for a given stimulus during a particular trial block could be measured. This procedure is simple and would not require accuracy training beyond familiarization with the contingencies. This would increase the likelihood of adoption by other laboratories and clinical settings for replication and extension. Thus, we examined resurgence during a behavioral task in which each trial required the choice of one of three arbitrary stimuli in order to maximize points.

2. Method

2.1 Participants

Participants were 36 adult college students recruited through the Introduction to Psychology participant pool and announcements in psychology courses. We advertised that the volunteer who earned the most points at the end of the study would receive a $75 gift card to the Utah State University bookstore. Each participant completed all parts of the study in a single visit to the laboratory.

2.2 Procedure

2.2.1 Instructions

Following informed consent, participants sat in the chair in front of the experimental computer. General instructions (based in part on instructions found in Kangas et al., 2009 and Doughty et al., 2011) were read aloud to the participant. The full instructions script is in Appendix A. After reading the task instructions and answering any questions, the researcher left the participant alone until the task was completed.

2.2.2 Demographic Information

The computer program asked the participants to provide the following basic demographic information: age, gender, race/ethnicity, and years of education following high school. Gender and race/ethnicity had an option that read, “I prefer not to answer.”

2.2.3 Behavioral Task

The behavioral task (programmed using E-prime software; Psychology Software Tools, Pittsburgh, PA) consisted of three phases. In Phase I, selecting the target stimulus earned the participant 10 points with a probability that varied according to which version, or condition, of the task they experienced. Choosing the alternative stimulus or the inactive stimulus never resulted in points. The stimuli serving as the target and alternative were selected in a pseudorandom order. The length of Phase I also varied according to condition. In Phase II, selection of the alternative stimulus produced points with a given probability according to condition, whereas selection of the target stimulus or control stimulus never produced points. In the third phase, no points could be produced no matter which stimulus was selected.
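
The phase contingencies described above can be sketched in a few lines of Python. This is only an illustration, not the E-prime program used in the study: the function name, the role labels, and the `rng` parameter are hypothetical, and the sketch assumes that a reinforced selection in Phase II also earned 10 points, which the text states explicitly only for Phase I.

```python
import random

# Hypothetical role labels for the three stimuli (not taken from the study software).
TARGET, ALTERNATIVE, INACTIVE = "target", "alternative", "inactive"


def points_for_choice(phase, choice, p_phase1, p_phase2, rng=random):
    """Return the points earned on a single trial.

    Phase 1: only the target can pay off, with probability p_phase1.
    Phase 2: only the alternative can pay off, with probability p_phase2.
    Phase 3: nothing pays off (extinction for every stimulus).
    Assumption: a reinforced selection is worth 10 points in both phases.
    """
    if phase == 1 and choice == TARGET:
        return 10 if rng.random() < p_phase1 else 0
    if phase == 2 and choice == ALTERNATIVE:
        return 10 if rng.random() < p_phase2 else 0
    return 0
```

For example, `points_for_choice(3, TARGET, 0.8, 0.8)` always returns 0, because no selection is reinforced in Phase III.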

These phases are analogous to the typical three-phase resurgence procedures: Phase I in which the target response is trained, Phase II in which the alternative response is trained and the target response is no longer productive, and Phase III in which no response is productive. The key difference is that rather than response rate in terms of responses per minute to the target or the alternative serving as the primary measure of responding, it is relative preference for the target, alternative, or control stimulus during any given block of 12 trials. Choices distributed equally between stimuli (around four per stimulus) illustrate no preference, whereas unequal choices illustrate a relative preference for stimuli chosen more often within that trial block. The control stimulus served as an indication of an effect of reward history over extinction-induced variability.
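
As a rough illustration of the preference measure, the following Python sketch counts how often each stimulus was chosen within consecutive 12-trial blocks. The function name and the string labels are hypothetical and are not part of the study's software.

```python
from collections import Counter

# Hypothetical role labels; the study does not specify a data format.
STIMULI = ("target", "alternative", "inactive")


def block_preferences(choices, block_size=12):
    """Split a sequence of choices into blocks and count selections per stimulus.

    With three stimuli and 12 trials per block, roughly four selections of
    each stimulus would indicate no preference.
    """
    blocks = []
    for start in range(0, len(choices), block_size):
        counts = Counter(choices[start:start + block_size])
        blocks.append({stimulus: counts.get(stimulus, 0) for stimulus in STIMULI})
    return blocks
```

A block such as `{"target": 10, "alternative": 0, "inactive": 2}` would indicate a strong relative preference for the target within that block.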

2.2.3.1 Stimuli

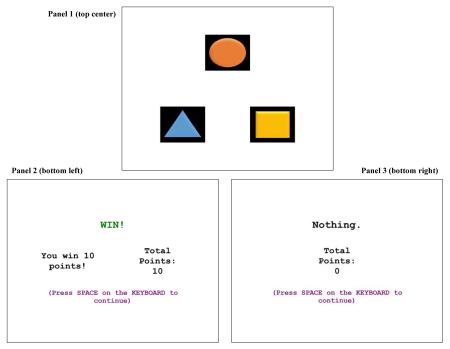

Target, alternative, and control stimuli were a yellow square, an orange circle, and a blue triangle. All colored shapes were in the foreground of a black square to ensure equal total surface area of the stimulus. Representative screen images of the program are in Appendix B.

2.2.3.2 Choice Screen

In each trial, participants viewed a stimulus “choice” screen with a white background and the three shapes in a triangular configuration. Each choice screen allowed the participants to choose between three options (circle, square, or triangle) and the participant indicated the selection by clicking anywhere on the shape, including the black background. There was no minimum or maximum time limit on the choice screen (i.e., no limited hold). The only way to advance was by clicking on a shape with the mouse. The arrangement of the shapes was randomized across the trials. The mouse changed position randomly at the start of each choice trial, but it was always equidistant between two shapes or at the center of the screen. This was to increase participants’ engagement with the screen by compelling them to look at the screen before selecting the shape, and at the same time ensured the mouse position was never biased toward only one shape. All three shapes were present throughout all phases of the experiment.

2.2.3.3 Feedback Screen

Immediately following the participants’ selection, the three shapes and mouse pointer disappeared and the “feedback” screen displayed the message “Win!” in green letters if the selection earned points or “Nothing.” in black if the selection did not earn points. A running total of points earned always displayed on the bottom of the feedback screen. There was no minimum or maximum time limit on the feedback screen. The only way to advance was by pressing SPACE, which immediately brought up the next choice screen.

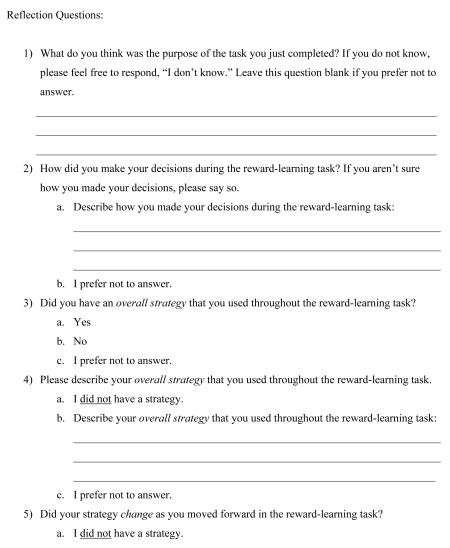

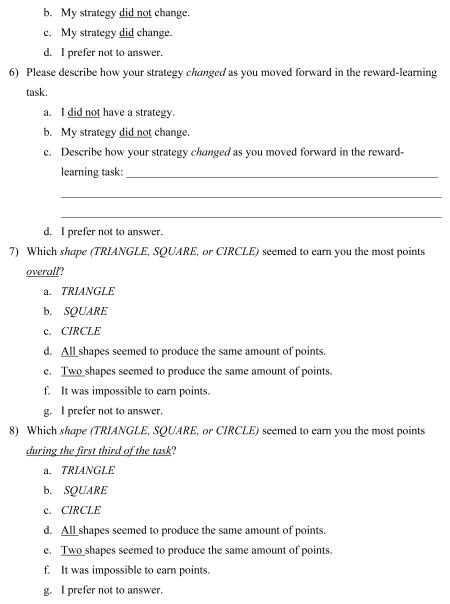

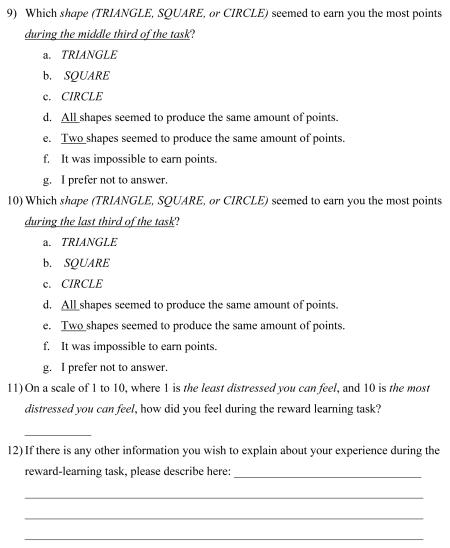

2.2.4 Reflection questions

Following the behavioral task, the participants were asked a series of questions (via Qualtrics®) that were designed to help us understand how participants’ subjective experience mapped on to their performance during the behavioral task. The questions are available in Appendix C.

2.3 Acceptability

Acceptability of the program was assessed after every five participants. An acceptable procedure would be one for which four of five participants showed resurgence and the data were generally consistent at the individual subject level. This incidence of resurgence would be generally consistent with the degree of resurgence observed in existing animal and human literature outlined in the Introduction. Resurgence was defined as an increase in the selection of the target stimulus during the first block of Phase III (when no points are available for any selection) relative to the last block of Phase II, and also that the selection of the target stimulus during the first block of Phase III is greater than for the inactive control stimulus (which never produced points). The inactive control stimulus serves as our indication of an effect of reward history beyond extinction-induced variability. Thus, if there is an increase in preference for the target stimulus during Phase III relative to the end of Phase II, and also a preference for the target stimulus over the inactive control stimulus, then we can say that resurgence has occurred. The extent of this increase in preference and the difference between preference for the target and control stimulus indicate the magnitude of the resurgence effect. If resurgence did not occur in four of five participants, then we modified the procedure. The order and nature of the modifications (conditions) are discussed below.
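
The resurgence definition and the four-of-five acceptability rule can be expressed as a short Python sketch. The function names and the tuple layout are hypothetical conveniences for illustration; the counts are the number of selections in a 12-trial block.

```python
def shows_resurgence(target_last_p2, target_first_p3, inactive_first_p3):
    """Apply the two-part definition of resurgence for one participant.

    (1) Target selections increase from the last block of Phase II to the
        first block of Phase III, and
    (2) target selections exceed inactive selections in the first block of
        Phase III.
    """
    increased = target_first_p3 > target_last_p2
    exceeds_inactive = target_first_p3 > inactive_first_p3
    return increased and exceeds_inactive


def procedure_acceptable(participants, required=4):
    """Return True if at least `required` participants show resurgence.

    `participants` is a list of (target_last_p2, target_first_p3,
    inactive_first_p3) tuples, one per participant in a condition.
    """
    return sum(shows_resurgence(*p) for p in participants) >= required
```

With made-up counts, `procedure_acceptable([(0, 4, 1), (0, 3, 3), (1, 5, 2), (0, 2, 4), (0, 6, 0)])` returns `False`: the second participant ties with the inactive stimulus and the fourth prefers it, so only three of five meet the definition.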

2.4 Conditions

When resurgence was not observed in four of five participants, we changed the procedure. There were seven variations of the task outlined above that are described in Table 1. Five participants experienced each condition. The first strategy of change was to increase the length of Phase I (acquisition of the target) relative to Phase II (acquisition of the alternative; Conditions 1, 2, & 3), as longer Phase I (Bruzek et al., 2009; Doughty et al., 2011; Winterbauer et al., 2013) and shorter Phase II (Sweeney & Shahan, 2013a; Leitenberg et al., 1975, Experiment 4) may increase the magnitude of resurgence. The second strategy was to modify the probability of reward. We began with a high probability of .8, and because acquisition of preference for the target stimulus was rapid, we decreased the probability of reward in order to make extinction less discriminable from reinforcement for the target, which can increase persistence in certain circumstances (Nevin et al., 2001). We first decreased the probability to .5 in both Phases I and II (Condition 4). Then, we decreased the probability of reward to .1 during Phase I, but kept the probability of reward at .5 in Phase II (Condition 5). We kept the probability of reward higher during Phase II because the data suggested that any lower probability would likely have required an increase in the length of Phase II, which would counter our efforts to see a resurgence effect. When we decreased to .1 during Phase I, acquisition of preference for the target stimulus never emerged, so we increased the probability of reward in Phase I to .2 (Condition 6). When acquisition of preference for the target stimulus remained inconsistent at a probability of .2, we increased the probability of reward during Phase I to .3 (Condition 7). In Condition 7, acquisition of preference for the target stimulus emerged, but no resurgence was observed. 
Because the manipulation of phase length made no difference and the range of reward probabilities was exhausted, we stopped data collection using this procedure.

Table 1.

Experimental Conditions: Variations of the Three-Phase Procedure

| Condition | Number of Trials: Phase I | Number of Trials: Phase II | Number of Trials: Phase III | Probability of Reward: Phase I | Probability of Reward: Phase II |
|---|---|---|---|---|---|
| 1 | 60 | 60 | 60 | .8 | .8 |
| 2 | 120 | 60 | 60 | .8 | .8 |
| 3 | 180 | 60 | 60 | .8 | .8 |
| 4 | 180 | 60 | 60 | .5 | .5 |
| 5 | 180 | 60 | 60 | .1 | .5 |
| 6 | 180 | 60 | 60 | .2 | .5 |
| 7 | 180 | 60 | 60 | .3 | .5 |

Note. Above are the seven conditions of the experiment. Aside from the number of trials and the probability of reward, the methodology remained the same across all conditions. Five participants experienced each condition.

3. Results

3.1 Participants

Participants had a mean age of 21.0 years (SD = 2.4) and a mean of 2.2 years of education following high school (SD = 1.3). Of the 36 total participants, 19 identified as female and 17 as male. One participant identified as American Indian or Alaska Native, 34 identified as White, and one participant preferred not to answer this question. There were no systematic differences in performance as a function of participant gender, age, or education. Participant 18 experienced a program malfunction and his data were excluded from the rest of the analysis.

3.2 Behavioral Task Performance

Mean duration of the behavioral task was 13 min (SD = 3 min). Figures 1 and 2 display mean number of times the subjects chose each stimulus during a 12-trial block (i.e., preference) across Phase I (acquisition of the target), Phase II (acquisition of the alternative), and Phase III (resurgence test). Table 2 displays general performance for critical phases and point totals. Except for Condition 5, where the probability of reward during Phase I was .1, and Condition 6, where the probability of reward during Phase I was .2, acquisition of preference for the target stimulus and subsequent acquisition of the alternative stimulus during Phase II were consistent with the reinforcement contingencies.

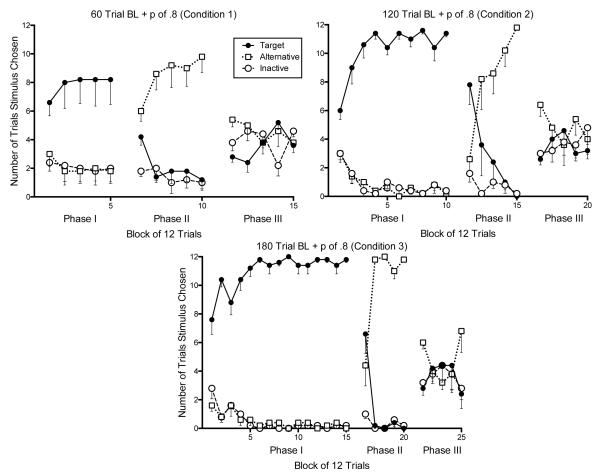

Figure 1.

Mean preference for the target, alternative, and inactive stimulus for Condition 1 (top left), Condition 2 (top right), and Condition 3 (bottom) across 12-trial blocks. Standard error is shown below the data points only.

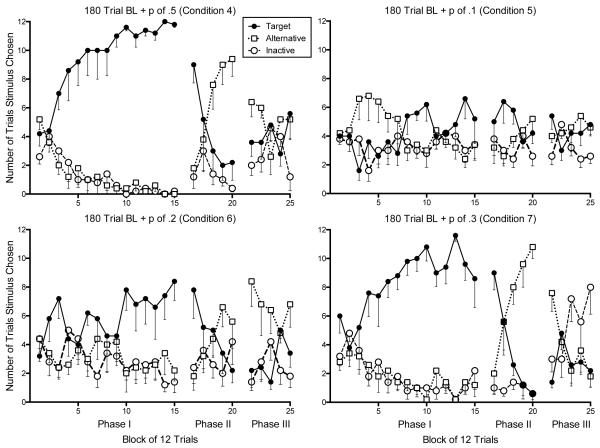

Figure 2.

Mean preference for the target, alternative, and inactive stimulus for Condition 4 (top left), Condition 5 (top right), Condition 6 (bottom left), and Condition 7 (bottom right) across 12-trial blocks. Standard error is shown below the data points only.

Table 2.

Mean Performance for Critical Trial Blocks, Mean Point Total, and Mean Subjective Distress for Each Condition

| Condition | Last Block Phase I: Target | Last Block Phase I: Alt | Last Block Phase I: Inactive | Last Block Phase II: Target | Last Block Phase II: Alt | Last Block Phase II: Inactive | First Block Phase III: Target | First Block Phase III: Alt | First Block Phase III: Inactive | Total Points | Subjective Distress |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 8.2 | 1.8 | 2.0 | 1.2 | 9.8 | 1.0 | 2.8 | 5.4 | 3.8 | 640.0 | 4.1 |
| | (3.9) | (1.6) | (2.3) | (1.6) | (2.5) | (1.0) | (0.8) | (1.1) | (1.3) | (201.4) | (2.9) |
| 2 | 11.4 | 0.2 | 0.4 | 0.0 | 11.8 | 0.2 | 2.6 | 6.4 | 3.0 | 1148.0 | 2.9 |
| | (0.5) | (0.4) | (0.5) | (0.0) | (0.4) | (0.4) | (0.9) | (1.8) | (1.2) | (46.0) | (0.8) |
| 3 | 11.8 | 0.0 | 0.2 | 0.0 | 11.8 | 0.2 | 2.8 | 6.0 | 3.2 | 1726.0 | 3.1 |
| | (0.4) | (0.0) | (0.4) | (0.0) | (0.4) | (0.4) | (1.1) | (1.0) | (0.8) | (66.6) | (0.9) |
| 4 | 11.8 | 0.0 | 0.2 | 2.2 | 9.4 | 0.4 | 3.6 | 6.4 | 2.0 | 858.0 | 2.9 |
| | (0.4) | (0.0) | (0.4) | (2.8) | (2.7) | (0.5) | (2.2) | (2.3) | (1.6) | (94.2) | (0.9) |
| 5 | 5.2 | 3.4 | 3.4 | 4.2 | 5.2 | 2.6 | 5.4 | 4.0 | 2.6 | 154.0 | 3.4 |
| | (3.8) | (2.2) | (2.4) | (1.6) | (1.8) | (1.5) | (2.1) | (1.0) | (1.3) | (24.1) | (2.1) |
| 6 | 8.4 | 2.2 | 1.4 | 2.2 | 5.6 | 4.2 | 2.2 | 8.4 | 1.4 | 274.0 | 4.9 |
| | (3.0) | (1.6) | (1.7) | (1.9) | (4.2) | (3.6) | (2.7) | (3.9) | (1.3) | (46.7) | (1.7) |
| 7 | 8.6 | 1.2 | 2.2 | 0.6 | 10.8 | 0.6 | 1.4 | 7.6 | 3.0 | 546.0 | 6.8 |
| | (4.5) | (1.8) | (2.9) | (0.9) | (1.8) | (1.3) | (0.9) | (2.9) | (2.0) | (106.7) | (0.9) |
| Total | 9.3 | 1.3 | 1.4 | 1.5 | 9.2 | 1.3 | 3.0 | 6.3 | 2.7 | 763.7 | 4.0 |
| | (3.5) | (1.8) | (2.0) | (2.0) | (3.3) | (2.1) | (1.9) | (2.4) | (1.5) | (516.3) | (2.0) |

Note. Performance for each critical block of 12 trials is the mean number of times the target, alternative, or inactive stimulus was chosen. Total Points is the mean points obtained in the entire session. Subjective Distress (rated from 1 to 10, where 1 is “the least distressed you can feel” and 10 is “the most distressed you can feel”) is the mean response to question 11 of the reflection task, available in Appendix C. Standard deviations are below the means in parentheses. n = 5 for each condition.

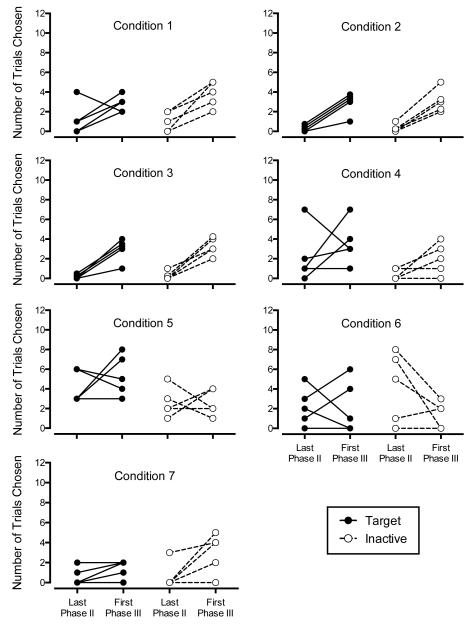

Figures 1 and 2 show that in Conditions 1-4 and 7, there was a visual increase in mean preference for the target stimulus during each 12-trial block of Phase III relative to the last 12-trial block of Phase II. In Conditions 5 and 6, there was no clear mean increase in target preference during Phase III. In no condition was the visual increase in mean preference for the target stimulus distinguishable from preference for the inactive stimulus. We also examined increases in preference for the target and inactive stimulus on the individual subject level. Figure 3 shows the number of trials on which the target stimulus was chosen in the last 12-trial block of Phase II relative to the first 12-trial block of Phase III. The individual patterns of responses in Figure 3 clearly show a tendency for participants to increase their preferences for the target response during the first trial block of Phase III relative to the last trial block of Phase II in Conditions 1-3. Data in Conditions 4 and 7 are more equivocal. Participants in Conditions 5 and 6 did not show a reliable increase. Figure 3 also displays preference for the inactive stimulus on the last 12-trial block of Phase II relative to the first 12-trial block of Phase III. In no condition was the pattern of individual subject preferences for the target and inactive stimuli clearly different. Whether examined on the individual subject level, by condition, or overall, the increase in preference for the target upon removal of alternative reinforcement was indistinguishable from the increase in preference for the inactive control stimulus.

Figure 3.

Individual subject target and inactive preference from the last trial block (12 trials) of Phase II and the first trial block of Phase III for all conditions. Each participant is represented by two data points (closed circles) and a connecting solid line for the target stimulus and two data points (open circles) and a connecting dotted line for the inactive stimulus. Each panel displays five participants. Identical data series (e.g., two or more participants with the same values for the last trial block of Phase II and the first trial block of Phase III) are offset in increments of .25 so that each participant is visible on the graph. Offset data series were only necessary in Condition 2 (three target series offset; two inactive series offset) and Condition 3 (two target series offset; one inactive series offset).

In addition to the mean and individual subject examination of performance when alternative reinforcement was removed, each participant was coded as indicating an increase in preference for the target stimulus, the inactive stimulus, or no preference between target and inactive. An increase in preference for the target or inactive stimulus was indicated if (1) the participant selected the target or inactive stimulus more during the first block of Phase III than it was selected during the last block of Phase II, and (2) the participant chose the target or inactive stimulus more than the other stimulus during the first block of Phase III.
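
The two coding criteria can be written as a small Python function. This is a sketch under stated assumptions: the function name and category labels are hypothetical, and a tie between target and inactive selections in the first block of Phase III is treated as showing no preference.

```python
def code_participant(target_p2, target_p3, inactive_p2, inactive_p3):
    """Code one participant as 'target', 'inactive', or 'neither'.

    Arguments are selection counts in the last block of Phase II (p2) and
    the first block of Phase III (p3). A stimulus is coded as preferred
    only if (1) its count increased from p2 to p3 and (2) its p3 count
    exceeds the p3 count of the other stimulus.
    """
    if target_p3 > target_p2 and target_p3 > inactive_p3:
        return "target"
    if inactive_p3 > inactive_p2 and inactive_p3 > target_p3:
        return "inactive"
    return "neither"
```

For instance, a participant who moved from 0 to 3 target selections and from 0 to 3 inactive selections would be coded `"neither"`, because criterion (2) is not met for either stimulus.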

Using these criteria, nine participants showed an increase and preference for the target, 12 showed an increase and preference for the inactive, and 14 did not increase and/or show a preference for either the target or the inactive. This distribution is within the range of what would be expected if the task engendered indifference: many participants showed no increase or preference, and roughly as many increased and preferred the target as increased and preferred the inactive. Participants who showed a preference for either the target or the inactive did not differ systematically in terms of Phase I or Phase II acquisition or point totals.

3.3 Reflection Task

When asked what they thought the purpose of the experiment was, most participants provided a response (31) and four responded, “I don’t know.” The most common responses were that the task was to examine the tendency to choose a response after being rewarded for choosing that response (n = 10) and to see how well people can identify patterns (n = 11). Participant 12 thought the purpose might be to “see if people would search for extra information in the room or on the computer to help make decisions”. This participant reported looking through the office and reading some study notes. His data were not distinguishable in any systematic way from other participants and so were included in the analyses for Condition 3.

When participants were asked how they made their decisions, all participants provided a response. The most common theme in these responses was that participants reported finding a response that worked and then switching when it no longer worked (e.g., “I just chose whichever shape had given me points. When that one stopped giving me points I tried different shapes,” n = 22). Of those 22, participants described four approaches after the second shape “stopped working,” or what was likely Phase III following alternative reinforcement removal. One reported “I would go back to the one that I was rewared [sic] on,” two reported trying the inactive shape, eight indicated that they responded randomly, and one reported consistently choosing one shape hoping that would make it work. When describing the purpose of the task or how they made their decisions, it was common for participants to report attempting to find a pattern but failing (n = 14). This was especially common in Conditions 5 and 6 where acquisition was generally poor (nine out of ten participants). Answers to questions about describing strategy and how strategy changed were redundant with participant descriptions of how they made their decisions.

Because loss of alternative reinforcement could be considered a stressor, we examined subjective units of distress (on a scale of 1 to 10 where 1 is “the least distressed you can feel,” and 10 is “the most distressed you can feel”) as a function of whether the participants were coded (described in Section 3.2) as showing an increase and preference during the first block of Phase III for the target (M = 3.72, SD = 1.37), the inactive (M = 4.02, SD = 2.30), or no increase/indifferent (M = 4.23, SD = 2.19) and found no clear differences. We also examined responses from participants about the purpose of the task and how they made their decisions as a function of showing an increase and preference for a stimulus during the first block of Phase III. It was relatively more common for those participants who showed an increase and preference for the target to report trying to find a pattern and failing (n = 5; 56% of target) relative to those showing an increase and preference for the inactive (n = 5; 42% of inactive), or those who showed no increase/preference (n = 4; 29% of no increase/indifferent). Further, it was relatively less common for those who showed an increase and preference for the target to report finding a response that worked and switching when it no longer worked (n = 3; 33% of target) relative to those showing an increase and preference for the inactive (n = 8; 67% of inactive), or no increase/preference (n = 11; 79%).

4. Discussion

Although consistent increases in preference for the target stimulus were observed, they could not be distinguished from increases in preference for the inactive control stimulus that was never associated with reinforcement. Thus, the inclusion of the inactive response proved an important control condition that may call for reexamination of two past resurgence studies with human participants that used only two response options. Dixon and Hayes (1998) instructed participants that there were two possible responses, repeating a pattern of movements of a circle, or moving the circle in different patterns. Similarly, in McHugh et al. (2012, p. 407), participants were told, “You must press either quickly or slowly in order to earn points.” The dichotomy of responses in Dixon and Hayes and McHugh et al. without a control response does not allow for interpretation of resurgence as something above extinction-induced variability. If participants were operating under the rule, “This response is not working, it must be some other response that is working,” then the reason we did not observe resurgence comparable to Dixon and Hayes and McHugh et al. is that we included a response that was not associated with a history of reinforcement. Because of this, the increase in the target topography seen by Dixon and Hayes and McHugh et al. cannot be separated from the collective increase in preference for both the target stimulus and the inactive stimulus seen in this study.

Resurgence could be characterized as a specific form of extinction-induced variability, where behaviors with a history of reinforcement reappear as well as novel responses. In that case, it could be that rather than introducing an essential control by including an inactive stimulus, we are adding unnecessary complexity. However, if resurgence is a function of a history of reinforcement associated with the target response, then it is important to distinguish between response recovery and response novelty. In animal research, an increase in the target response does not occur when the target response has no history of reinforcement (Winterbauer & Bouton, 2010). In addition, animal studies often include an inactive response that has never been associated with reward, and the resurgence of the target response is readily distinguishable from the small increases of the inactive response during Phase III (Podlesnik et al., 2006; Shahan, Craig, & Sweeney, 2015; Sweeney & Shahan, 2013a; Sweeney & Shahan, 2013b; Sweeney & Shahan, 2015). The variables that have governed resurgence of a previously reinforced response in animal research may not similarly affect increases in novel responding. Therefore, if we hope to generalize knowledge based on animal studies of resurgence, a response that has never been associated with reinforcement is a necessary control in human research.

There are three additional differences in this procedure relative to past research that may account for the lack of resurgence observed: the brevity of the baseline training phase, the use of a discrete-trial choice procedure rather than a VI schedule, and the presence of the alternative response during baseline training. In animal studies, the alternative response is not always available during the baseline training phase. For example, the alternative response might be a nose poke that is not illuminated during baseline but is lit once alternative reinforcement is introduced (Sweeney & Shahan, 2013b), or a chain that is introduced in the roof of the chamber during Phases II and III (Podlesnik et al., 2006; Sweeney & Shahan, 2015). In these studies, the inactive response was present from the outset, and so the availability of the inactive response throughout the phases in our study is not a departure from past research. Even though the presence of the alternative stimulus during baseline in this study represents a departure from some animal research studies, increased experience with non-reinforcement of the alternative response would, if anything, negatively affect alternative response acquisition rather than impair target response relapse. In this study, however, alternative response acquisition proceeded as anticipated, except in Conditions 5 and 6, where acquisition was poor for both the target and the alternative. Further, from a generalization perspective, having only two stimuli during Phase I and three stimuli during Phases II and III would make Phase III less similar to baseline reinforcement of the target response and may prevent resurgence rather than encourage it. Finally, human studies of resurgence that have used matching to sample procedures have included the alternative response from the outset, and it did not prevent resurgence (Doughty et al., 2010; Doughty et al., 2011; Wilson & Hayes, 1996). Therefore, the presence of the alternative stimulus during baseline is a minor procedural difference and is not likely responsible for the lack of resurgence in the present study.

Another difference between our procedure and existing animal studies of resurgence is the use of a discrete-trial choice procedure rather than a procedure in which the trial is not signaled, such as a VI schedule. The use of a choice procedure here is not unique among human laboratory procedures designed to produce resurgence. Wilson and Hayes (1996), Doughty et al. (2010), and Doughty et al. (2011) used discrete-trial matching to sample procedures and observed resurgence. The key difference may be that, in an effort to create a brief, very simple resurgence experiment, the baseline training phase in our procedure was not as extended in time as in other examples. It could be that, in creating a more time- and cost-effective human laboratory model of resurgence, we left out a key variable: the length of the baseline history of reinforcement for the target response. Experimental research that tests the effect of length of baseline training for the target response on subsequent resurgence is very limited (Bruzek et al., 2009; Doughty et al., 2010; Winterbauer et al., 2013), but it does suggest that lengthier baseline training is associated with greater resurgence, even when acquisition of the target response reaches comparable levels at the end of baseline. Despite the limited knowledge of the parametric effects of length of baseline training on subsequent resurgence, it may be that by having lengthy baseline training sessions or even multiple visits to the laboratory, past research was able to see resurgence, whereas we were not.

This leaves at least two options for future work: dramatically increase the number of trials in Phase I of a trial-based procedure beyond what was implemented here, or move to VI schedules to more closely parallel the animal laboratory. The mean duration of the behavioral task reported here was very brief (M = 13 min, SD = 3 min), so there is some room for expansion. On the other hand, tripling the length of Phase I here had no effect on subsequent preference during Phase III, which is not promising for simply lengthening Phase I. Also, repeated sessions or a very lengthy session would be contrary to the goals of a brief procedure that can be implemented easily with many participants to tease out subject characteristics that may be predictive of resurgence. Perhaps the best course of action would be to implement a similar procedure of comparable duration with arbitrary stimuli using VI schedules of reinforcement for responses to the target and alternative stimuli. If the brief baseline training period with a VI schedule does not produce consistent resurgence, then the length of baseline training can be increased. Even if the resulting experimental preparation results in experimental sessions as lengthy as those using more complex matching to sample discriminations, it would have the added advantage of simplicity of response topography and closer resemblance to animal studies, which would make replication of basic animal research in human subjects more straightforward. The presence of the inactive control stimulus remains critical to establish that manipulations of reinforcement ought to affect resurgence.
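As a rough illustration of the VI option, the sketch below shows one way a VI schedule could be arranged in software: a reinforcer becomes available after a variable interval, and the first response after that interval earns it. The exponential interval distribution, the function name, the fixed seed, and the example response stream are all assumptions made for illustration; none of them describe a procedure that was implemented in this study.

```python
import random


def vi_schedule(response_times, mean_interval, seed=0):
    """Return the response times that would earn reinforcement on a VI schedule.

    response_times: times (in seconds) at which responses occur (hypothetical).
    mean_interval: mean of the variable interval, in seconds.
    Intervals are drawn from an exponential distribution, which is an
    assumption of this sketch rather than a requirement of VI schedules.
    """
    rng = random.Random(seed)
    reinforced = []
    # Time at which the next reinforcer becomes available.
    next_available = rng.expovariate(1.0 / mean_interval)
    for t in sorted(response_times):
        if t >= next_available:
            # First response after the interval elapses is reinforced,
            # and a new interval is timed from that response.
            reinforced.append(t)
            next_available = t + rng.expovariate(1.0 / mean_interval)
    return reinforced


# Hypothetical response stream: one response every 0.5 s for 2 min.
responses = [i * 0.5 for i in range(1, 241)]
earned = vi_schedule(responses, mean_interval=10.0)
```

Separate schedules of this kind could, in principle, be arranged for the target stimulus in Phase I and for the alternative stimulus in Phase II, with no schedule ever arranged for the inactive stimulus.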

Highlights

Resurgence is relapse that occurs when alternative reinforcement is removed.

We tested a forced-choice procedure as a model of resurgence in college students.

We included target, alternative, and inactive control response options.

Resurgence of the target did not exceed increases in the inactive response.

The data caution against inferring resurgence without an inactive control response.

Acknowledgments

We thank Gregory J. Madden, Amy L. Odum, Timothy A. Slocum, and Michael P. Twohig for helpful comments on a previous version of this manuscript. This study served as part of the doctoral dissertation of the first author in the Experimental and Applied Psychological Science Program at Utah State University.

Role of the Funding Source

This research was supported in part by NIH grant R01HD064576. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Appendix A

Instructions

Welcome to our study of reward learning! Your task today will be completed on a computer using the keyboard and mouse to respond. First, you will be asked some basic demographic information. Next, you will proceed to the reward-learning task. The computer will present you with many trials. On each trial, you will be presented with the choice between three options indicated by a TRIANGLE, a SQUARE, and a CIRCLE. You will indicate your choice by clicking on your choice with the mouse. Sometimes, your choice will earn you points. How you respond is completely up to you. Psychology 1010 research credit is not dependent on how well you play. However, the participant who scores the most total points will receive a $75 gift card to the Utah State University Bookstore, so try to earn as many points as you can! Following the reward-learning task, you will be asked to answer a few questions that will help us understand your experience during the task. Watches and cellular phones are not allowed in the experimental room. They can be safely stored during the session with me. Please leave the room only when the task is completed, in the event of an emergency, or if you wish to withdraw from the study. Do you have any questions?

Appendix B

Screen images

Panel 1 (top center) displays a representative image of the display for the program on the “Choice Screen” including the three stimuli (triangle, circle, and square). Panel 2 (bottom left) shows the “Feedback Screen” when the selection earned points, and Panel 3 (bottom right) shows the “Feedback Screen” when the selection did not earn points. The details of the program are outlined in section 2.2.3.

Appendix C

References

- Antonitis JJ. Response variability in the white rat during conditioning, extinction, and reconditioning. Journal of Experimental Psychology. 1951;42(4):273–281. doi: 10.1037/h0060407.

- Bruzek JL, Thompson RH, Peters LC. Resurgence of infant caregiving responses. Journal of the Experimental Analysis of Behavior. 2009;92(3):327–343. doi: 10.1901/jeab.2009-92-327.

- Dixon MR, Hayes LJ. Effects of differing instructional histories on the resurgence of rule-following. The Psychological Record. 1998;48(2):275–292.

- Dobson KS, Hollon SD, Dimidjian S, Schmaling KB, Kohlenberg RJ, Gallop R, Jacobson NS. Randomized trial of behavioral activation, cognitive therapy, and antidepressant medication in the prevention of relapse and recurrence in major depression. Journal of Consulting and Clinical Psychology. 2008;76(3):468. doi: 10.1037/0022-006X.76.3.468.

- Doughty AH, Cash JD, Finch EA, Holloway C, Wallington LK. Effects of training history on resurgence in humans. Behavioural Processes. 2010;83:340–343. doi: 10.1016/j.beproc.2009.12.001.

- Doughty AH, Kastner RM, Bismark BD. Resurgence of derived stimulus relations: Replication and extensions. Behavioural Processes. 2011;86:152–155. doi: 10.1016/j.beproc.2010.08.006.

- Epstein R, Skinner BF. Resurgence of responding after the cessation of response-independent reinforcement. Proceedings of the National Academy of Sciences, U.S.A. 1980;77:6251–6253. doi: 10.1073/pnas.77.10.6251.

- Higgins ST, Heil SH, Dantona R, Donham R, Matthews M, Badger GJ. Effects of varying the monetary value of voucher-based incentives on abstinence achieved during and following treatment among cocaine-dependent outpatients. Addiction. 2007;102(2):271–281. doi: 10.1111/j.1360-0443.2006.01664.x.

- Kangas BD, Berry MS, Cassidy RN, Dallery J, Vaidya M, Hackenberg TD. Concurrent performance in a three-alternative choice situation: Response allocation in a Rock/Paper/Scissors game. Behavioural Processes. 2009;82:164–172. doi: 10.1016/j.beproc.2009.06.004.

- Leitenberg H, Rawson RA, Bath K. Reinforcement of competing behavior during extinction. Science. 1970;169(3942):301–303. doi: 10.1126/science.169.3942.301.

- Leitenberg H, Rawson RA, Mulick JA. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Lieving GA, Lattal KA. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80(2):217–233. doi: 10.1901/jeab.2003.80-217.

- McHugh L, Procter J, Herzog M, Schock AK, Reed P. The effect of mindfulness on extinction and behavioral resurgence. Learning & Behavior. 2012;40(4):405–415. doi: 10.3758/s13420-011-0062-2.

- Mechner F, Hyten C, Field DP, Madden GJ. Using revealed operants to study the structure and properties of human operant behavior. The Psychological Record. 1997;47:45–68.

- Morgan DL, Lee K. Extinction-induced response variability in humans. The Psychological Record. 1996;46(1):145–159.

- Neuringer A, Kornell N, Olufs M. Stability and variability in extinction. Journal of Experimental Psychology: Animal Behavior Processes. 2001;27(1):79–94.

- Nevin JA, McLean AP, Grace RC. Resistance to extinction: Contingency termination and generalization decrement. Animal Learning & Behavior. 2001;29(2):176–191.

- Podlesnik CA, Shahan TA. Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior. 2009;37(4):357–364. doi: 10.3758/LB.37.4.357.

- Podlesnik CA, Jimenez-Gomez C, Shahan TA. Resurgence of alcohol seeking produced by discontinuing non-drug reinforcement as an animal model of drug relapse. Behavioural Pharmacology. 2006;17(4):369–374. doi: 10.1097/01.fbp.0000224385.09486.ba.

- Shahan TA, Craig AR, Sweeney MM. Resurgence of sucrose and cocaine seeking in free-feeding rats. Behavioural Brain Research. 2015;279:47–51. doi: 10.1016/j.bbr.2014.10.048.

- Sweeney MM, Shahan TA. Behavioral momentum and resurgence: Effects of time in extinction and repeated resurgence tests. Learning & Behavior. 2013a;41(4):414–424. doi: 10.3758/s13420-013-0116-8.

- Sweeney MM, Shahan TA. Effects of high, low, and thinning rates of alternative reinforcement on response elimination and resurgence. Journal of the Experimental Analysis of Behavior. 2013b;100(1):102–116. doi: 10.1002/jeab.26.

- Sweeney MM, Shahan TA. Renewal, resurgence, and alternative reinforcement context. Behavioural Processes. 2015;116:43–49. doi: 10.1016/j.beproc.2015.04.015.

- Volkert VM, Lerman DC, Call NA, Trosclair-Lasserre N. An evaluation of resurgence during treatment with functional communication training. Journal of Applied Behavior Analysis. 2009;42(1):145–160. doi: 10.1901/jaba.2009.42-145.

- Wacker DP, Harding JW, Berg WK, Lee JF, Schieltz KM, Padilla YC, Nevin JA, Shahan TA. An evaluation of persistence of treatment effects during long-term treatment of destructive behavior. Journal of the Experimental Analysis of Behavior. 2011;96(2):261–282. doi: 10.1901/jeab.2011.96-261.

- Wacker DP, Harding JW, Morgan TA, Berg WK, Schieltz KM, Lee JF, Padilla YC. An evaluation of resurgence during functional communication training. Psychological Record. 2013;63(1):3–20. doi: 10.11133/j.tpr.2013.63.1.001.

- Wilson KG, Hayes SC. Resurgence of derived stimulus relations. Journal of the Experimental Analysis of Behavior. 1996;66:267–281. doi: 10.1901/jeab.1996.66-267.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence of an extinguished instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2010;36(3):343–353. doi: 10.1037/a0017365.

- Winterbauer NE, Lucke S, Bouton ME. Some factors modulating the strength of resurgence after extinction of an instrumental behavior. Learning and Motivation. 2013;44(1):60–71. doi: 10.1016/j.lmot.2012.03.003.