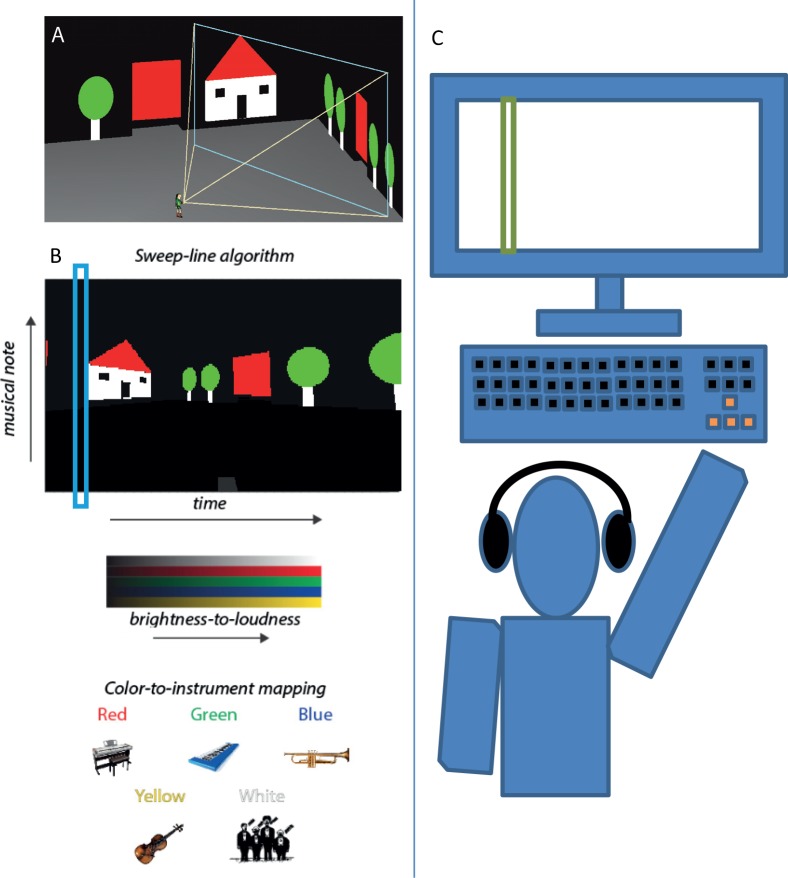

Fig 1. The EyeMusic SSD and experimental setup.

(A) A third-person view of the user's avatar and field of view, whose contents are sonified by the EyeMusic algorithm shown below it. (B) The principles of the EyeMusic visual-to-audio transformation: after the image is down-sampled to 24 × 40 pixels, each pixel's y-axis position is converted to a musical note (the higher the position, the higher the note), its x-axis position to time (the closer to the left, the earlier in the soundscape), its brightness to volume, and its color to a musical instrument. (C) Illustration of the experimental setup.
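The mapping in panel (B) can be illustrated with a minimal sketch. This is not the EyeMusic implementation. It assumes the 24 × 40 grid means 24 rows by 40 columns and that row 0 is the top of the image. The sweep duration, the instrument names, and the identifiers `sonify` and `NoteEvent` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

ROWS, COLS = 24, 40      # down-sampled size from the caption (assumed rows x columns)
SWEEP_SECONDS = 2.0      # hypothetical duration of one left-to-right sweep

# Hypothetical color-to-instrument table; the caption does not give the actual pairing.
INSTRUMENTS = {"red": "instrument_a", "green": "instrument_b", "blue": "instrument_c"}


@dataclass(frozen=True)
class NoteEvent:
    onset: float      # seconds from the start of the soundscape (from x position)
    pitch_step: int   # 0 = lowest note, ROWS - 1 = highest (from y position)
    volume: float     # 0.0 to 1.0 (from brightness)
    instrument: str   # from color


def sonify(pixels):
    """Convert a grid to note events.

    pixels[row][col] is None (silent) or a (color, brightness) tuple.
    Row 0 is assumed to be the top of the image.
    """
    events = []
    for col in range(COLS):                      # leftmost columns sound first
        onset = col * SWEEP_SECONDS / COLS
        for row in range(ROWS):
            pixel = pixels[row][col]
            if pixel is None:
                continue
            color, brightness = pixel
            pitch_step = ROWS - 1 - row          # higher in the image -> higher note
            events.append(NoteEvent(onset, pitch_step, brightness, INSTRUMENTS[color]))
    return events


image = [[None] * COLS for _ in range(ROWS)]
image[0][0] = ("red", 1.0)       # top-left pixel: earliest onset, highest note
image[23][20] = ("blue", 0.5)    # bottom row, middle column: later onset, lowest note
events = sonify(image)
```

In this sketch, a pixel's column fixes when it is heard and its row fixes which note is played, so a shape in the image becomes a short melody that unfolds from left to right.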